elysium

Automatically apply AI-generated code changes in Emacs

Stars: 247

Elysium is an Emacs package that allows users to automatically apply AI-generated changes while coding. By calling `elysium-query`, users can request a set of changes that will be merged into the code buffer. The tool supports making queries on a specific region without leaving the code buffer. It uses the `gptel` backend and currently recommends using the Claude 3.5 Sonnet model for generating code. Users can customize the window size and style of the Elysium buffer. The tool also provides functions to keep or discard AI-suggested changes and navigate conflicting hunks with `smerge-mode`.

README:

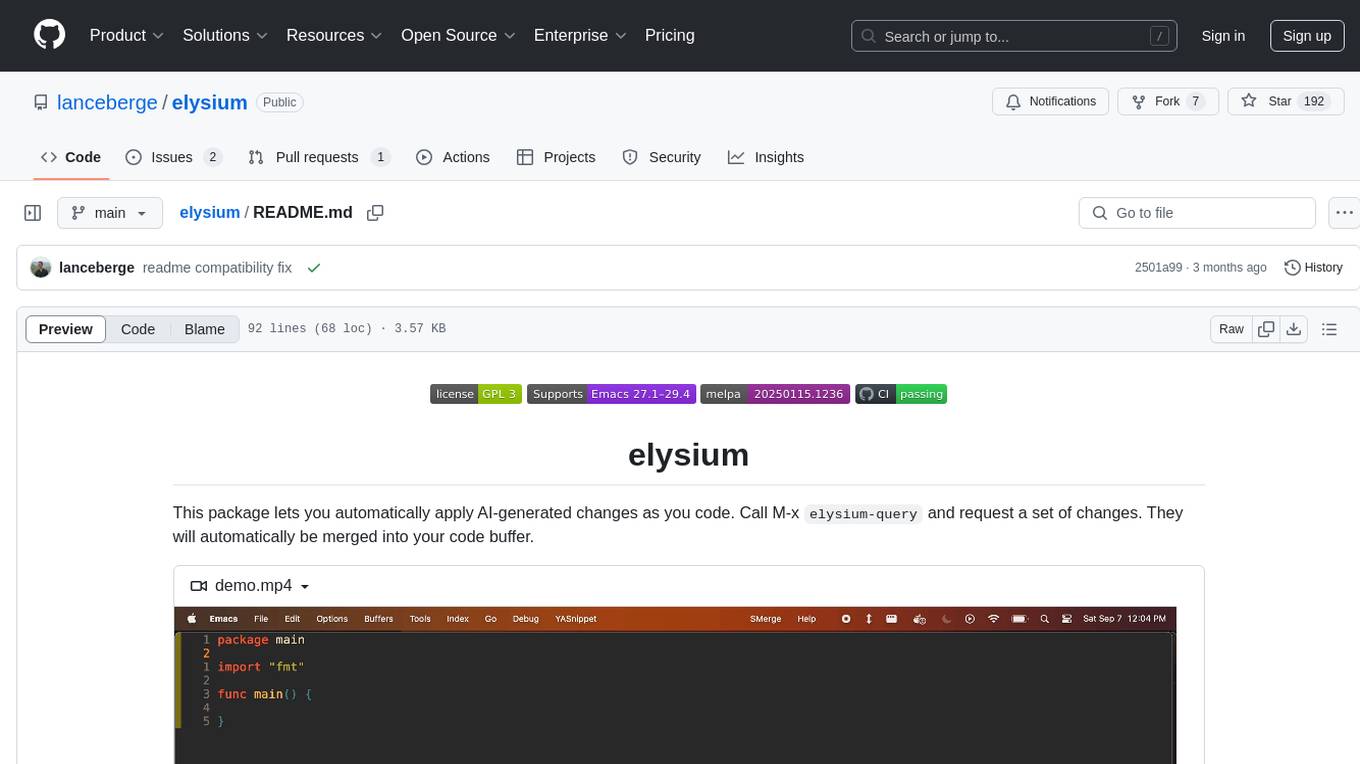

This package lets you automatically apply AI-generated changes as you code. Call `M-x elysium-query` and request a set of changes. They will automatically be merged into your code buffer.

https://github.com/user-attachments/assets/275e292e-c480-48d1-9a13-27664c0bbf12

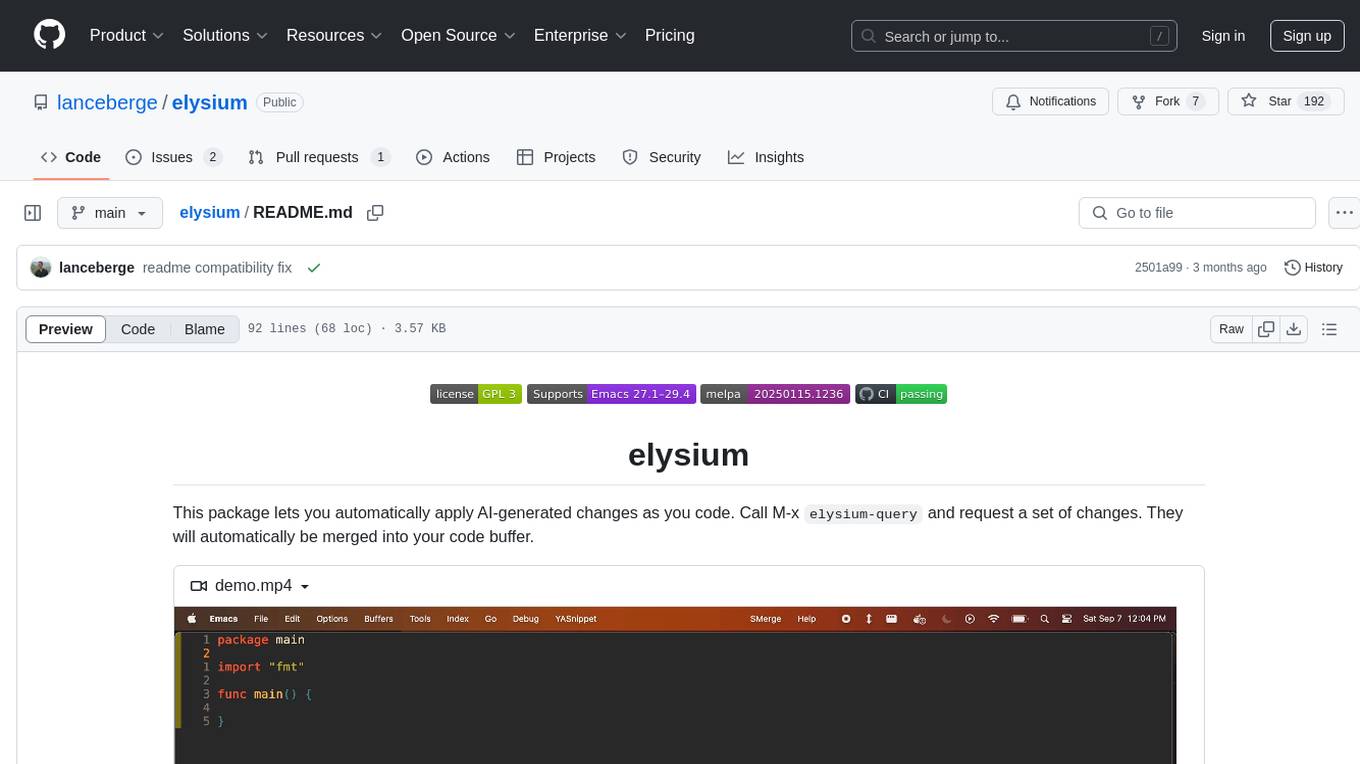

You can make queries on a region without leaving the code buffer.

https://github.com/user-attachments/assets/73bd4c38-dc03-47b7-b943-a4b9b3203f06

Elysium is now on Melpa!

    (add-to-list 'package-archives
                 '("melpa-stable" . "https://stable.melpa.org/packages/") t)

    (use-package elysium)

    (use-package elysium
      :custom
      ;; Below are the default values
      (elysium-window-size 0.33) ; The elysium buffer will be 1/3 your screen
      (elysium-window-style 'vertical)) ; Can be customized to horizontal

    (use-package gptel
      :custom
      (gptel-model 'claude-3-5-sonnet-20240620)
      :config
      (defun read-file-contents (file-path)
        "Read the contents of FILE-PATH and return it as a string."
        (with-temp-buffer
          (insert-file-contents file-path)
          (buffer-string)))
      (defun gptel-api-key ()
        (read-file-contents "~/secrets/claude_key"))
      (setq
       gptel-backend (gptel-make-anthropic "Claude"
                       :stream t
                       :key #'gptel-api-key)))

Use smerge-mode to then merge in the changes

    (use-package smerge-mode
      :ensure nil
      :hook
      (prog-mode . smerge-mode))

| Function | Description |
|---|---|
| elysium-query | send a query to the gptel backend |
| elysium-keep-all-suggested-changes | keep all of the AI-suggested changes |
| elysium-discard-all-suggested-changes | discard all of the AI-suggested changes |
| elysium-clear-buffer | clear the elysium buffer |
| elysium-add-context | add the contents of a region to the elysium buffer |
| smerge-next | go to the next conflicting hunk |
| smerge-prev | go to the previous conflicting hunk |
| smerge-keep-other | keep this set of changes |
| smerge-keep-mine | discard this set of changes |
| elysium-toggle-window | toggle the chat window |
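If you want the elysium commands listed above on keys, a minimal sketch might look like the following. The `C-c e` prefix and the individual keys are arbitrary assumptions made for illustration, not bindings that elysium defines; only the command names come from the list above.

```elisp
;; Hypothetical key choices under a "C-c e" prefix; adjust to taste.
;; `:bind' also sets up autoloads, so the package loads on first use.
(use-package elysium
  :bind (("C-c e q" . elysium-query)
         ("C-c e w" . elysium-toggle-window)
         ("C-c e a" . elysium-add-context)
         ("C-c e c" . elysium-clear-buffer)
         ("C-c e k" . elysium-keep-all-suggested-changes)
         ("C-c e d" . elysium-discard-all-suggested-changes)))
```

Keys of the form `C-c` followed by a letter are reserved for users by Emacs convention, so they should not clash with bindings from major modes.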

elysium uses gptel as a backend. It supports any of the models supported by gptel, but currently (as of 9/24) Claude 3.5 Sonnet seems to be the best for generating code.
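Because the backend is just gptel, switching models should only require changing the gptel configuration. As a hedged sketch, this is roughly how a local Ollama backend could be set up with gptel's `gptel-make-ollama`. The host, port, and model name are assumptions (a local Ollama server with that model already pulled), and older gptel releases may expect model names as strings rather than symbols.

```elisp
(use-package gptel
  :config
  ;; Assumption: Ollama is running locally and `mistral:latest' is available.
  (setq gptel-model 'mistral:latest
        gptel-backend (gptel-make-ollama "Ollama"
                        :host "localhost:11434"
                        :stream t
                        :models '(mistral:latest))))
```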

If there is a region active, then elysium will send only that region to the LLM. Otherwise, the entire code buffer will be sent. If you're using Claude, then I recommend only ever sending a region to avoid getting rate-limited.
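The region-or-buffer rule can be illustrated with a few lines of Emacs Lisp. This is not elysium's actual code; `my/elysium-demo-query-text` is a hypothetical helper that only uses built-in functions to show which text such a rule would select.

```elisp
(defun my/elysium-demo-query-text ()
  "Return the active region's text, or the whole buffer if no region is active.
Hypothetical helper for illustration only; not part of elysium."
  (if (use-region-p)
      ;; A region is active: take only the selected text.
      (buffer-substring-no-properties (region-beginning) (region-end))
    ;; No active region: take the entire (accessible) buffer.
    (buffer-substring-no-properties (point-min) (point-max))))
```

Evaluating `(length (my/elysium-demo-query-text))` with `M-:` gives a rough idea of how many characters a query would carry with and without a selection.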

Planned features:

- Implement prompt caching with Anthropic to let us send more queries before getting rate-limited

Alternative AI tools for elysium

Similar Open Source Tools

org-ai

org-ai is a minor mode for Emacs org-mode that provides access to generative AI models, including OpenAI API (ChatGPT, DALL-E, other text models) and Stable Diffusion. Users can use ChatGPT to generate text, have speech input and output interactions with AI, generate images and image variations using Stable Diffusion or DALL-E, and use various commands outside org-mode for prompting using selected text or multiple files. The tool supports syntax highlighting in AI blocks, auto-fill paragraphs on insertion, and offers block options for ChatGPT, DALL-E, and other text models. Users can also generate image variations, use global commands, and benefit from Noweb support for named source blocks.

chatgpt-arcana.el

ChatGPT-Arcana is an Emacs package that allows users to interact with ChatGPT directly from Emacs, enabling tasks such as chatting with GPT, operating on code or text, generating eshell commands from natural language, fixing errors, writing commit messages, and creating agents for web search and code evaluation. The package requires an API key from OpenAI's GPT-3 model and offers various interactive functions for enhancing productivity within Emacs.

ai-code-interface.el

AI Code Interface is an Emacs package designed for AI-assisted software development, providing a uniform interface for various AI backends. It offers context-aware AI coding actions and seamless integration with AI-driven agile development workflows. The package supports multiple AI coding CLIs such as Claude Code, Gemini CLI, OpenAI Codex, GitHub Copilot CLI, Opencode, Grok CLI, Cursor CLI, Kiro CLI, CodeBuddy Code CLI, and Aider CLI. It aims to streamline the use of different AI tools within Emacs while maintaining a consistent user experience.

text-embeddings-inference

Text Embeddings Inference (TEI) is a toolkit for deploying and serving open source text embeddings and sequence classification models. TEI enables high-performance extraction for popular models like FlagEmbedding, Ember, GTE, and E5. It implements features such as no model graph compilation step, Metal support for local execution on Macs, small docker images with fast boot times, token-based dynamic batching, optimized transformers code for inference using Flash Attention, Candle, and cuBLASLt, Safetensors weight loading, and production-ready features like distributed tracing with Open Telemetry and Prometheus metrics.

minuet-ai.el

Minuet AI is a tool that brings the grace and harmony of a minuet to your coding process. It offers AI-powered code completion with specialized prompts and enhancements for chat-based LLMs, as well as Fill-in-the-middle (FIM) completion for compatible models. The tool supports multiple AI providers such as OpenAI, Claude, Gemini, Codestral, Ollama, and OpenAI-compatible providers. It provides customizable configuration options and streaming support for completion delivery even with slower LLMs.

skyvern

Skyvern automates browser-based workflows using LLMs and computer vision. It provides a simple API endpoint to fully automate manual workflows, replacing brittle or unreliable automation solutions. Traditional approaches to browser automation required writing custom scripts for websites, often relying on DOM parsing and XPath-based interactions, which would break whenever the website layout changed. Instead of relying only on code-defined XPath interactions, Skyvern adds computer vision and LLMs to the mix to parse items in the viewport in real time, create a plan for interaction, and interact with them. This approach gives us a few advantages:

1. Skyvern can operate on websites it’s never seen before, as it’s able to map visual elements to actions necessary to complete a workflow, without any customized code.
2. Skyvern is resistant to website layout changes, as there are no pre-determined XPaths or other selectors our system is looking for while trying to navigate.
3. Skyvern leverages LLMs to reason through interactions to ensure we can cover complex situations. Examples include:
   1. If you wanted to get an auto insurance quote from Geico, the answer to a common question “Were you eligible to drive at 18?” could be inferred from the driver receiving their license at age 16.
   2. If you were doing competitor analysis, it’s understanding that an Arnold Palmer 22 oz can at 7/11 is almost definitely the same product as a 23 oz can at Gopuff (even though the sizes are slightly different, which could be a rounding error!).

evedel

Evedel is an Emacs package designed to streamline the interaction with LLMs during programming. It aims to reduce manual code writing by creating detailed instruction annotations in the source files for LLM models. The tool leverages overlays to track instructions, categorize references with tags, and provide a seamless workflow for managing and processing directives. Evedel offers features like saving instruction overlays, complex query expressions for directives, and easy navigation through instruction overlays across all buffers. It is versatile and can be used in various types of buffers beyond just programming buffers.

mcpdoc

The MCP LLMS-TXT Documentation Server is an open-source server that provides developers full control over tools used by applications like Cursor, Windsurf, and Claude Code/Desktop. It allows users to create a user-defined list of `llms.txt` files and use a `fetch_docs` tool to read URLs within these files, enabling auditing of tool calls and context returned. The server supports various applications and provides a way to connect to them, configure rules, and test tool calls for tasks related to documentation retrieval and processing.

nano-graphrag

nano-GraphRAG is a simple, easy-to-hack implementation of GraphRAG that provides a smaller, faster, and cleaner version of the official implementation. It is about 800 lines of code, small yet scalable, asynchronous, and fully typed. The tool supports incremental insert, async methods, and various parameters for customization. Users can replace storage components and LLM functions as needed. It also allows for embedding function replacement and comes with pre-defined prompts for entity extraction and community reports. However, some features like covariates and global search implementation differ from the original GraphRAG. Future versions aim to address issues related to data source ID, community description truncation, and add new components.

llm-in-sandbox

LLM-in-Sandbox is a project that aims to unlock general agentic intelligence by placing a large language model (LLM) inside a code sandbox with basic computer capabilities. This approach allows the LLM to outperform standalone models across various domains such as chemistry, physics, math, biomedicine, long-context understanding, and instruction-following without additional training. The project leverages reinforcement learning (RL) to further enhance performance, with benefits including consistent improvements in non-code domains, using the file system as long-term memory for up to 8× token savings, Docker isolation for security, and compatibility with various LLM providers like OpenAI, Anthropic, vLLM, and SGLang.

moatless-tools

Moatless Tools is a hobby project focused on experimenting with using Large Language Models (LLMs) to edit code in large existing codebases. The project aims to build tools that insert the right context into prompts and handle responses effectively. It utilizes an agentic loop functioning as a finite state machine to transition between states like Search, Identify, PlanToCode, ClarifyChange, and EditCode for code editing tasks.

counselors

Counselors is a tool created by Aaron Francis to fan out prompts to multiple AI coding agents in parallel. It dispatches prompts to AI tools like Claude, Codex, Gemini, Amp, or custom tools simultaneously, collects their responses, and writes everything to a structured output directory. The tool does not call provider APIs directly, extract or reuse auth tokens, or perform any 'tricky' actions. It orchestrates around the CLIs installed locally, providing an easy way to interact with multiple AI agents. Users can install the CLI via npm, Homebrew, or a standalone binary, and then configure and run prompts to gather insights from various AI agents.

green-bit-llm

Green-Bit-LLM is a Python toolkit designed for fine-tuning, inferencing, and evaluating GreenBitAI's low-bit Language Models (LLMs). It utilizes the Bitorch Engine for efficient operations on low-bit LLMs, enabling high-performance inference on various GPUs and supporting full-parameter fine-tuning using quantized LLMs. The toolkit also provides evaluation tools to validate model performance on benchmark datasets. Green-Bit-LLM is compatible with AutoGPTQ series of 4-bit quantization and compression models.

agenticSeek

AgenticSeek is a voice-enabled AI assistant powered by DeepSeek R1 agents, offering a fully local alternative to cloud-based AI services. It allows users to interact with their filesystem, code in multiple languages, and perform various tasks autonomously. The tool is equipped with memory to remember user preferences and past conversations, and it can divide tasks among multiple agents for efficient execution. AgenticSeek prioritizes privacy by running entirely on the user's hardware without sending data to the cloud.

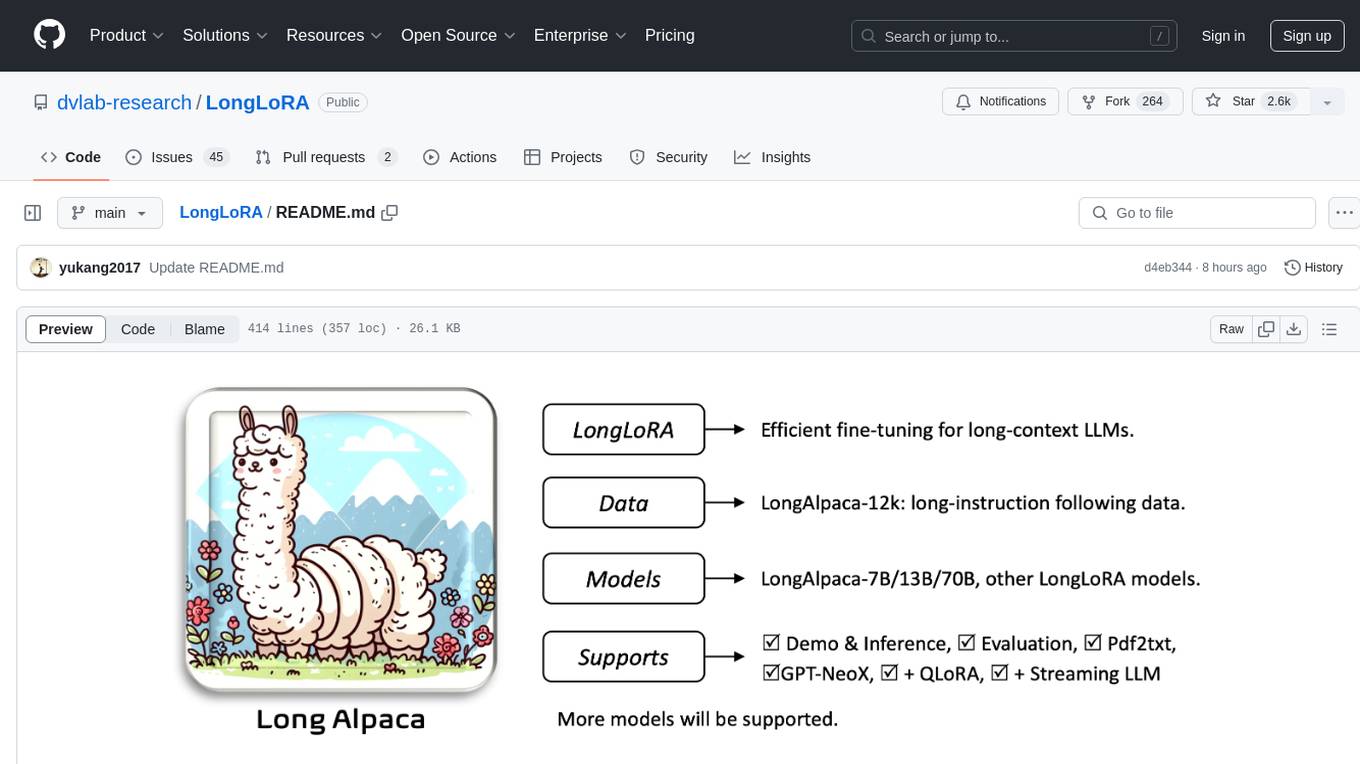

LongLoRA

LongLoRA is a tool for efficient fine-tuning of long-context large language models. It includes LongAlpaca data with long QA data collected and short QA sampled, models from 7B to 70B with context length from 8k to 100k, and support for GPTNeoX models. The tool supports supervised fine-tuning, context extension, and improved LoRA fine-tuning. It provides pre-trained weights, fine-tuning instructions, evaluation methods, local and online demos, streaming inference, and data generation via Pdf2text. LongLoRA is licensed under Apache License 2.0, while data and weights are under CC-BY-NC 4.0 License for research use only.

For similar tasks

code-cli

Autohand Code CLI is an autonomous coding agent in CLI form that uses the ReAct pattern to understand, plan, and execute code changes. It is designed for seamless coding experience without context switching or copy-pasting. The tool is fast, intuitive, and extensible with modular skills. It can be used to automate coding tasks, enforce code quality, and speed up development. Autohand can be integrated into team workflows and CI/CD pipelines to enhance productivity and efficiency.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PIIs
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.