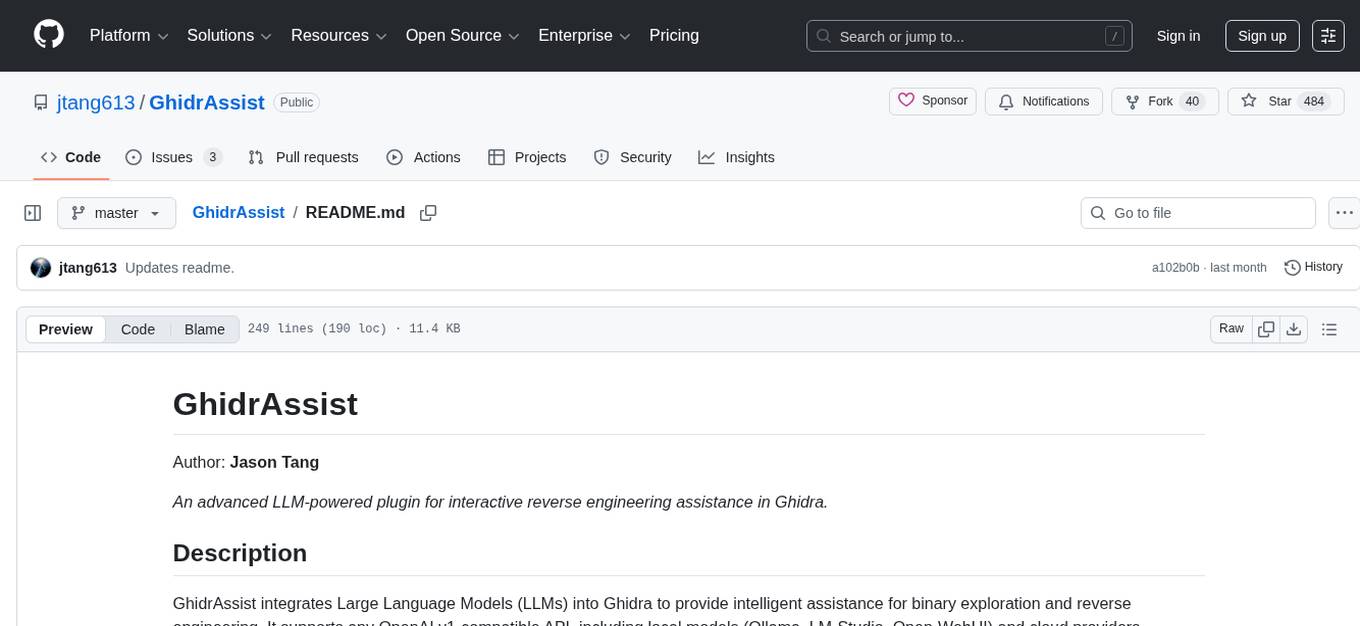

GhidrAssist

An LLM extension for Ghidra to enable AI assistance in RE.

Stars: 479

GhidrAssist is an advanced LLM-powered plugin for interactive reverse engineering assistance in Ghidra. It integrates Large Language Models (LLMs) to provide intelligent assistance for binary exploration and reverse engineering. The tool supports various OpenAI v1-compatible APIs, including local models and cloud providers. Key features include code explanation, interactive chat, custom queries, Graph-RAG knowledge system with semantic knowledge graph, community detection, security feature extraction, semantic graph tab, extended thinking/reasoning control, ReAct agentic mode, MCP integration, function calling, actions tab, RAG (Retrieval Augmented Generation), and RLHF dataset generation. The plugin uses a modular, service-oriented architecture with core services, Graph-RAG backend, data layer, and UI components.

README:

Author: Jason Tang

An advanced LLM-powered plugin for interactive reverse engineering assistance in Ghidra.

GhidrAssist integrates Large Language Models (LLMs) into Ghidra to provide intelligent assistance for binary exploration and reverse engineering. It supports any OpenAI v1-compatible API, including local models (Ollama, LM-Studio, Open-WebUI) and cloud providers (OpenAI, Anthropic, Azure).

Core Functionality:

- Code Explanation - Explain functions and instructions in both disassembly and decompiled pseudo-C

  - Security analysis panel showing risk level, activity profile, and API usage

  - Editable summaries with user-edit protection from auto-overwrite

- Interactive Chat - Multi-turn conversational queries with persistent chat history

- Custom Queries - Direct LLM queries with optional context from current function/location

Graph-RAG Knowledge System:

- Semantic Knowledge Graph - Hierarchical representation of binary analysis

  - 5-level semantic hierarchy: Statement → Block → Function → Module → Binary

  - Pre-computed LLM summaries enable fast, LLM-free queries

  - SQLite persistence with JGraphT graph algorithms

  - Full-text search (FTS5) on summaries and security annotations

- Community Detection - Automatic module discovery via Leiden algorithm

  - Groups related functions into logical modules

  - Hierarchical community structure with summaries

  - Visual graph exploration with configurable depth

- Security Feature Extraction - Comprehensive security analysis

  - Network APIs: POSIX sockets, WinSock, DNS, SSL/TLS, WinHTTP, WinINet

  - File I/O APIs: POSIX, Windows, C library functions

  - Crypto APIs: OpenSSL, Windows crypto, platform-specific

  - String patterns: IP addresses, URLs, domains, file paths, registry keys

  - Risk level classification (LOW/MEDIUM/HIGH) and activity profiling

- Semantic Graph Tab - Visual knowledge graph interface

  - Graph view with N-hop depth exploration

  - List view of all indexed functions

  - Semantic search across summaries

  - One-click re-indexing and security analysis
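To make the security feature extraction idea more concrete, here is a minimal Python sketch of API matching, string-pattern extraction, and risk classification. It is not the plugin's implementation: the API name sets, the regular expressions, and the scoring rule behind LOW/MEDIUM/HIGH are all assumptions chosen for illustration.

```python
import re

# Illustrative patterns only; a real extractor would validate matches more strictly.
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")
URL_RE = re.compile(r"\bhttps?://[^\s\"'<>]+", re.IGNORECASE)
REGISTRY_RE = re.compile(r"\b(?:HKLM|HKCU|HKEY_[A-Z_]+)\\[^\s\"']+")

# Hypothetical API sets: a tiny subset of what a real extractor would track.
NETWORK_APIS = {"socket", "connect", "send", "recv", "WSAStartup", "InternetOpenA"}
CRYPTO_APIS = {"EVP_EncryptInit_ex", "CryptEncrypt", "BCryptEncrypt"}
FILE_APIS = {"fopen", "CreateFileA", "WriteFile", "unlink"}


def extract_features(called_apis, strings):
    """Collect security-relevant APIs and string patterns for one function."""
    called = set(called_apis)
    features = {
        "network": sorted(NETWORK_APIS & called),
        "crypto": sorted(CRYPTO_APIS & called),
        "file_io": sorted(FILE_APIS & called),
        "ips": [],
        "urls": [],
        "registry_keys": [],
    }
    for s in strings:
        features["urls"].extend(URL_RE.findall(s))
        features["ips"].extend(IPV4_RE.findall(s))
        features["registry_keys"].extend(REGISTRY_RE.findall(s))
    return features


def classify_risk(features):
    """Assumed scoring rule: one point per active category, plus one for network indicators."""
    score = sum(1 for key in ("network", "crypto", "file_io") if features[key])
    if features["ips"] or features["urls"]:
        score += 1
    if score >= 3:
        return "HIGH"
    if score >= 1:
        return "MEDIUM"
    return "LOW"


features = extract_features(
    ["connect", "CryptEncrypt", "fopen"],
    ["http://10.0.0.5/update", r"HKLM\Software\Run"],
)
risk = classify_risk(features)
```

A function that touches the network, crypto, and file I/O while embedding a URL lands in the highest bucket under this assumed rule; a function with no matches stays LOW.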

Advanced Capabilities:

- Extended Thinking/Reasoning Control - Adjust LLM reasoning depth for quality vs. speed trade-offs

  - Support for OpenAI o1/o3/o4, Claude with extended thinking, and local reasoning models

  - Configurable effort levels: Low (fast), Medium (balanced), High (thorough)

  - Per-program persistence - different binaries can use different reasoning levels

  - Provider-agnostic implementation (Anthropic, OpenAI, Azure, LiteLLM, LMStudio, Ollama)

- ReAct Agentic Mode - Autonomous investigation using structured reasoning (Think-Act-Observe)

  - LLM proposes investigation steps based on your query

  - Systematic tool execution with progress tracking via todo lists

  - Iteration history preservation showing all investigation steps

  - Final synthesis with comprehensive answer and key findings

  - Accurate metrics (iterations, tool calls, duration)

- MCP Integration - Model Context Protocol client for tool-based analysis

  - Works with GhidrAssistMCP for Ghidra-specific tools

  - Conversational tool calling with automatic function execution

  - Support for SSE (Server-Sent Events) transport

- Function Calling - LLM can autonomously navigate binaries and modify analysis

  - Rename functions and variables

  - Navigate to addresses and cross-references

  - Execute Ghidra commands

- Actions Tab - Propose and apply bulk analysis improvements

  - Security vulnerability detection

  - Code quality analysis

  - Automated refactoring suggestions

- RAG (Retrieval Augmented Generation) - Enhance queries with contextual documents

  - Add custom documentation, exploit notes, architecture references

  - Lucene-based full-text search

  - Context injection into queries

- RLHF Dataset Generation - Collect feedback for model fine-tuning

The plugin uses a modular, service-oriented architecture:

Core Services:

- Query Modes: Regular queries, MCP-enhanced queries, or full agentic investigation

- ReAct Orchestrator: Manages autonomous investigation loops with todo tracking and findings accumulation

- Conversational Tool Handler: Manages multi-turn tool calling sessions

- MCPToolManager: Interfaces with external MCP servers for specialized tools

Graph-RAG Backend:

- BinaryKnowledgeGraph: Hybrid SQLite + JGraphT storage for semantic knowledge

- GraphRAGEngine: LLM-free query engine using pre-computed summaries

- SemanticExtractor: LLM-powered function summarization with batch processing

- SecurityFeatureExtractor: Static analysis for network, file I/O, and crypto APIs

- CommunityDetector: Leiden algorithm implementation for module discovery

Data Layer:

- AnalysisDB: SQLite database for chat history, RLHF feedback, and knowledge graphs

- SchemaMigrationRunner: Versioned database migrations for transparent upgrades

- RAGEngine: Lucene-powered document search for custom context injection
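Versioned migrations of the kind SchemaMigrationRunner is described as performing can be sketched with SQLite's built-in `PRAGMA user_version` counter. The sketch below is in Python rather than the plugin's own language, and the table definitions are hypothetical; the README does not show the real schema or how the plugin tracks versions.

```python
import sqlite3

# Hypothetical migrations; the plugin's actual schema is not documented here.
MIGRATIONS = [
    (1, "CREATE TABLE chat_history (id INTEGER PRIMARY KEY, role TEXT, content TEXT)"),
    (2, "CREATE TABLE rlhf_feedback (id INTEGER PRIMARY KEY, prompt TEXT, "
        "response TEXT, rating INTEGER)"),
]


def migrate(conn):
    """Apply every migration newer than the stored schema version, in order."""
    current = conn.execute("PRAGMA user_version").fetchone()[0]
    for version, ddl in MIGRATIONS:
        if version > current:
            with conn:  # commit on success, roll back on error
                conn.execute(ddl)
                # PRAGMA does not accept bound parameters; version is a trusted int.
                conn.execute(f"PRAGMA user_version = {int(version)}")
            current = version
    return current


conn = sqlite3.connect(":memory:")
schema_version = migrate(conn)
```

Because the version is stored in the database file itself, running `migrate` again on an up-to-date database is a no-op, which is what makes upgrades transparent to the user.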

UI Components:

- Tab-based interface: Explain, Query, Actions, Semantic Graph, RAG Management, MCP Servers

- Service orchestration via TabController

Future Roadmap:

- Model fine-tuning using collected RLHF dataset

- Additional MCP tool integrations

- Enhanced agentic capabilities, multi-agent collaboration

- Embedding-based similarity search

https://github.com/user-attachments/assets/bd79474a-c82f-4083-b432-96625fef1387

- If necessary, copy the binary release ZIP archive to the Ghidra_Install/Extensions/Ghidra directory.

- Launch Ghidra -> File -> Install Extension -> Enable GhidrAssist.

- Load a binary and launch the CodeBrowser.

- CodeBrowser -> File -> Configure -> Miscellaneous -> Enable GhidrAssist.

- CodeBrowser -> Tools -> GhidrAssist Settings.

- Ensure the RLHF and RAG database paths are appropriate for your environment.

- Point the API host to your preferred API provider and set the API key.

- (Optional) In the Analysis Options tab, set the Reasoning Effort level (None/Low/Medium/High) for models that support extended thinking.

- Open GhidrAssist with the GhidrAssist option in the Window menu and start exploring.
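For orientation, the API host and API key from the settings step map onto an OpenAI v1-style chat completion request roughly as in the Python sketch below. It only builds the request and does not send it. The helper name, the example host, the model name, and the key are placeholders, and the sketch assumes the configured host already ends in `/v1`.

```python
import json
import urllib.request


def build_chat_request(api_host, api_key, model, prompt):
    """Build (but do not send) a chat completion request for an OpenAI v1-compatible server."""
    url = api_host.rstrip("/") + "/chat/completions"
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Placeholder values: a local server on the default Ollama port and a dummy key.
request = build_chat_request(
    "http://localhost:11434/v1/", "sk-test", "example-model", "Explain this function."
)
```

Passing the request to `urllib.request.urlopen` would send it; checking that URL by hand is a quick way to confirm the host and key before configuring the plugin.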

GhidrAssist works with any OpenAI v1-compatible API. Setup details are provider-specific - here are some helpful resources:

Local LLM Providers:

- LM Studio - Easy local model hosting with GUI

- Ollama - Command-line local model management

- Open-WebUI - Web interface for local models

Cloud Providers:

- OpenAI API

- Anthropic Claude

- Azure OpenAI

LiteLLM Proxy (Multi-Provider Gateway):

- LiteLLM - Unified API for 100+ LLM providers

- Supports AWS Bedrock, Google Vertex AI, Azure, and many others

- Select "LiteLLM" as provider type in GhidrAssist settings

- Automatic model family detection for proper message formatting

For Agentic Mode (requires strong reasoning and tool use):

- Cloud: GPT-5.1, Claude Sonnet 4.5

- Local: GPT-OSS, Llama 3.3 70B, DeepSeek-R1 70B, Qwen2.5 72B

Models with Extended Thinking/Reasoning Support:

- OpenAI: o1-preview, o1-mini, o3-mini, o4-mini, gpt-5 (use the `reasoning_effort` parameter)

- Anthropic: Claude Sonnet 4.5, Claude Opus 4.5, Claude Haiku 4.5, Claude Opus 4.1/4, Claude Sonnet 4 (use the `thinking.budget_tokens` parameter)

- Local: openai/gpt-oss-20b via Ollama/LMStudio (supports effort levels)

Reasoning Effort Guidelines:

- Low: Quick analysis, minimal thinking tokens (~5-10s, lower cost)

- Medium: Balanced reasoning depth (~15-30s, moderate cost)

- High: Deep security analysis (~30-60s, 2x cost, recommended for vulnerability hunting)

Note: Agentic mode requires models with strong function calling and multi-step reasoning capabilities. Smaller models may struggle with complex investigations. Extended thinking is optional but can significantly improve analysis quality for complex reverse engineering tasks.
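As a rough illustration of how one effort setting could be translated into the provider-specific parameters named above, consider the Python sketch below. The token budgets are assumed values, not the plugin's, and the function name is hypothetical.

```python
# Assumed thinking budgets per effort level; the plugin's real values are not documented here.
ANTHROPIC_BUDGETS = {"low": 2048, "medium": 8192, "high": 16384}


def reasoning_params(provider, effort):
    """Return the extra request fields for a given provider and effort level."""
    level = (effort or "none").lower()
    if level == "none":
        return {}  # reasoning disabled: send no extra fields
    if level not in ANTHROPIC_BUDGETS:
        raise ValueError(f"unknown effort level: {effort!r}")
    if provider == "anthropic":
        # Anthropic expresses reasoning depth as a thinking token budget.
        return {"thinking": {"type": "enabled", "budget_tokens": ANTHROPIC_BUDGETS[level]}}
    # OpenAI-style APIs take the effort level directly.
    return {"reasoning_effort": level}


openai_fields = reasoning_params("openai", "High")
anthropic_fields = reasoning_params("anthropic", "low")
```

The returned dictionary would be merged into the request body, so the rest of the query path can stay provider-agnostic.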

GhidrAssistMCP provides MCP tools that allow the LLM to interact directly with Ghidra's analysis capabilities.

- Start the MCP Server

- Configure GhidrAssist:

  - Open Tools → GhidrAssist Settings → MCP Servers tab

  - Add server: `http://127.0.0.1:8081` as `GhidrAssistMCP` with transport type `SSE`

- Enable MCP in queries:

  - In the Custom Query tab, check "Use MCP"

  - Optionally enable "Agentic" for autonomous investigation mode
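The SSE transport selected above delivers server messages as a text stream of `event:` and `data:` fields separated by blank lines. The following Python sketch parses such a stream; it covers only those two fields and comment lines, and the sample payload is invented for illustration rather than captured from GhidrAssistMCP.

```python
def parse_sse(stream_text):
    """Parse Server-Sent Events text into a list of {'event', 'data'} dicts."""
    events = []
    event_type, data_lines = "message", []
    for line in stream_text.splitlines():
        if line == "":
            # A blank line dispatches the event collected so far.
            if data_lines:
                events.append({"event": event_type, "data": "\n".join(data_lines)})
            event_type, data_lines = "message", []
        elif line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        else:
            field, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]  # a single leading space is not part of the value
            if field == "event":
                event_type = value
            elif field == "data":
                data_lines.append(value)
    return events


# Invented sample stream: one named event, a keep-alive comment, one multi-line event.
sample = (
    "event: endpoint\n"
    "data: /messages?session=abc\n"
    "\n"
    ": keepalive\n"
    'data: {"a":\n'
    "data: 1}\n"
    "\n"
)
events = parse_sse(sample)
```

Multiple `data:` lines within one event are joined with newlines, and an event with no explicit `event:` field defaults to the type `message`.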

Regular MCP Queries:

- Enable "Use MCP" checkbox

- Ask questions like "What does the current function do?"

- LLM can call tools to get decompilation, cross-references, etc.

Agentic Mode (Recommended):

- Enable both "Use MCP" and "Agentic" checkboxes

- Ask complex questions like "Find vulnerabilities in this function" or "Analyze the call graph"

- The ReAct agent will:

  - Propose investigation steps as a todo list

  - Systematically execute tools to gather information

  - Track progress and accumulate findings

  - Synthesize a comprehensive answer with evidence
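The Think-Act-Observe cycle behind these steps can be sketched in a few lines of Python. This is a generic ReAct loop, not the plugin's ReAct Orchestrator: the `think` callback stands in for the LLM, the tool table is hypothetical, and todo tracking is omitted.

```python
def react_loop(query, think, tools, max_iterations=5):
    """Run Think-Act-Observe until the policy finishes or the iteration budget runs out."""
    history = []
    for _ in range(max_iterations):
        step = think(query, history)  # Think: choose the next action
        if step["action"] == "finish":
            return {"answer": step["input"], "history": history}
        tool = tools.get(step["action"])
        if tool is None:
            observation = f"unknown tool: {step['action']}"
        else:
            observation = tool(step["input"])  # Act: run the chosen tool
        history.append({**step, "observation": observation})  # Observe: record the result
    return {"answer": None, "history": history}


def scripted_think(query, history):
    """Scripted stand-in for the LLM: decompile once, then answer."""
    if not history:
        return {"thought": "Need the code first", "action": "decompile",
                "input": "FUN_00401000"}
    return {"thought": "Enough evidence", "action": "finish",
            "input": "Calls strcpy on: " + history[-1]["observation"]}


# Hypothetical tool table; in practice the tools would come from an MCP server.
TOOLS = {"decompile": lambda name: f"{name}: strcpy(buf, argv[1]);"}

result = react_loop("Find vulnerabilities in this function", scripted_think, TOOLS)
```

The iteration cap matters in practice: without it, a model that never emits a finishing action would loop indefinitely.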

Example Queries:

- "What security vulnerabilities exist in this function?"

- "Trace the data flow from user input to this call"

- "Find all functions that modify global variable X"

- "Analyze the error handling in the current function"

The Semantic Graph tab provides a knowledge graph interface for exploring binary analysis results without requiring LLM calls for every query.

- Index the Binary:

  - Open the Semantic Graph tab

  - Click "ReIndex Binary" to extract structural relationships

  - Click "Semantic Analysis" to generate LLM summaries (requires API)

  - Progress is shown in the status bar

- Explore the Graph:

  - List View: Browse all indexed functions with summaries and security flags

  - Graph View: Visualize call relationships with configurable N-hop depth

  - Search View: Full-text search across summaries and security annotations

- Security Analysis:

  - Click "Security Analysis" to scan for security-relevant features

  - Results include: network APIs, file I/O, crypto usage, string patterns

  - Risk levels (LOW/MEDIUM/HIGH) are assigned based on detected features
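N-hop depth exploration amounts to a bounded breadth-first search over the call graph. The Python sketch below assumes the graph is a plain dictionary from caller to callees and follows outgoing calls only; the plugin itself is described as using JGraphT, so this shows the idea rather than its code.

```python
from collections import deque


def n_hop_neighborhood(call_graph, start, max_hops):
    """Return {function: hop distance} for everything within max_hops calls of start."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if depth[node] == max_hops:
            continue  # do not expand beyond the requested depth
        for callee in call_graph.get(node, ()):
            if callee not in depth:
                depth[callee] = depth[node] + 1
                queue.append(callee)
    return depth


# Hypothetical call graph: caller -> list of callees.
CALL_GRAPH = {
    "main": ["parse", "send"],
    "parse": ["memcpy"],
    "send": ["connect"],
    "connect": ["socket"],
}
within_two = n_hop_neighborhood(CALL_GRAPH, "main", 2)
```

With a depth of 2, `socket` is excluded because it sits three calls away from `main`; raising the depth widens the visible neighborhood.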

When viewing a function in the Explain tab:

- If the function is indexed, the pre-computed summary is shown instantly

- Security panel displays: risk level, activity profile, APIs used

- Click "Edit" to modify summaries (protected from auto-overwrite)

- Use "Refresh" to re-generate the summary with the LLM

- Fast Queries: Pre-computed summaries eliminate LLM latency for repeat queries

- Offline Analysis: Browse indexed data without API connectivity

- Security Focus: Automatic detection of security-relevant code patterns

- Module Discovery: Community detection groups related functions automatically
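The combination of pre-computed summaries and user-edit protection can be illustrated with a small SQLite-backed store in Python. The table layout and function names are assumptions; the README only says that edited summaries are protected from auto-overwrite.

```python
import sqlite3


def open_store():
    """Create an in-memory store; the schema here is hypothetical."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE summaries ("
        "address TEXT PRIMARY KEY, "
        "summary TEXT NOT NULL, "
        "user_edited INTEGER NOT NULL DEFAULT 0)"
    )
    return conn


def save_generated(conn, address, summary):
    """Store an LLM-generated summary unless the user has edited this entry."""
    with conn:
        # Update only rows that the user has not edited.
        conn.execute(
            "UPDATE summaries SET summary = ? WHERE address = ? AND user_edited = 0",
            (summary, address),
        )
        # Insert when no row exists yet; existing rows are left alone.
        conn.execute(
            "INSERT OR IGNORE INTO summaries (address, summary) VALUES (?, ?)",
            (address, summary),
        )


def save_user_edit(conn, address, summary):
    """Store a user edit and mark the entry as protected."""
    with conn:
        conn.execute(
            "INSERT OR REPLACE INTO summaries (address, summary, user_edited) VALUES (?, ?, 1)",
            (address, summary),
        )


def get_summary(conn, address):
    """Look up a summary without calling any LLM."""
    row = conn.execute(
        "SELECT summary FROM summaries WHERE address = ?", (address,)
    ).fetchone()
    return row[0] if row else None


store = open_store()
save_generated(store, "00401000", "Parses the config file.")
save_user_edit(store, "00401000", "Parses config and decrypts the C2 address.")
save_generated(store, "00401000", "Regenerated summary.")
final_summary = get_summary(store, "00401000")
```

After the user edit, a later re-index leaves the entry untouched, while lookups remain plain database reads that work without API connectivity.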

https://github.com/jtang613/GhidrAssist

This plugin requires the following minimum version of Ghidra:

- 11.0

This plugin is released under an MIT license.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for GhidrAssist

Similar Open Source Tools

GhidrAssist

GhidrAssist is an advanced LLM-powered plugin for interactive reverse engineering assistance in Ghidra. It integrates Large Language Models (LLMs) to provide intelligent assistance for binary exploration and reverse engineering. The tool supports various OpenAI v1-compatible APIs, including local models and cloud providers. Key features include code explanation, interactive chat, custom queries, Graph-RAG knowledge system with semantic knowledge graph, community detection, security feature extraction, semantic graph tab, extended thinking/reasoning control, ReAct agentic mode, MCP integration, function calling, actions tab, RAG (Retrieval Augmented Generation), and RLHF dataset generation. The plugin uses a modular, service-oriented architecture with core services, Graph-RAG backend, data layer, and UI components.

WeKnora

WeKnora is a document understanding and semantic retrieval framework based on large language models (LLM), designed specifically for scenarios with complex structures and heterogeneous content. The framework adopts a modular architecture, integrating multimodal preprocessing, semantic vector indexing, intelligent recall, and large model generation reasoning to build an efficient and controllable document question-answering process. The core retrieval process is based on the RAG (Retrieval-Augmented Generation) mechanism, combining context-relevant segments with language models to achieve higher-quality semantic answers. It supports various document formats, intelligent inference, flexible extension, efficient retrieval, ease of use, and security and control. Suitable for enterprise knowledge management, scientific literature analysis, product technical support, legal compliance review, and medical knowledge assistance.

ApeRAG

ApeRAG is a production-ready platform for Retrieval-Augmented Generation (RAG) that combines Graph RAG, vector search, and full-text search with advanced AI agents. It is ideal for building Knowledge Graphs, Context Engineering, and deploying intelligent AI agents for autonomous search and reasoning across knowledge bases. The platform offers features like advanced index types, intelligent AI agents with MCP support, enhanced Graph RAG with entity normalization, multimodal processing, hybrid retrieval engine, MinerU integration for document parsing, production-grade deployment with Kubernetes, enterprise management features, MCP integration, and developer-friendly tools for customization and contribution.

JamAIBase

JamAI Base is an open-source platform integrating SQLite and LanceDB databases with managed memory and RAG capabilities. It offers built-in LLM, vector embeddings, and reranker orchestration accessible through a spreadsheet-like UI and REST API. Users can transform static tables into dynamic entities, facilitate real-time interactions, manage structured data, and simplify chatbot development. The tool focuses on ease of use, scalability, flexibility, declarative paradigm, and innovative RAG techniques, making complex data operations accessible to users with varying technical expertise.

chunkhound

ChunkHound is a modern tool for transforming your codebase into a searchable knowledge base for AI assistants. It utilizes semantic search via the cAST algorithm and regex search, integrating with AI assistants through the Model Context Protocol (MCP). With features like cAST Algorithm, Multi-Hop Semantic Search, Regex search, and support for 22 languages, ChunkHound offers a local-first approach to code analysis and discovery. It provides intelligent code discovery, universal language support, and real-time indexing capabilities, making it a powerful tool for developers looking to enhance their coding experience.

Mira

Mira is an agentic AI library designed for automating company research by gathering information from various sources like company websites, LinkedIn profiles, and Google Search. It utilizes a multi-agent architecture to collect and merge data points into a structured profile with confidence scores and clear source attribution. The core library is framework-agnostic and can be integrated into applications, pipelines, or custom workflows. Mira offers features such as real-time progress events, confidence scoring, company criteria matching, and built-in services for data gathering. The tool is suitable for users looking to streamline company research processes and enhance data collection efficiency.

Vodalus-Expert-LLM-Forge

Vodalus Expert LLM Forge is a tool designed for crafting datasets and efficiently fine-tuning models using free open-source tools. It includes components for data generation, LLM interaction, RAG engine integration, model training, fine-tuning, and quantization. The tool is suitable for users at all levels and is accompanied by comprehensive documentation. Users can generate synthetic data, interact with LLMs, train models, and optimize performance for local execution. The tool provides detailed guides and instructions for setup, usage, and customization.

chunkhound

ChunkHound is a tool that transforms your codebase into a searchable knowledge base for AI assistants using semantic and regex search. It integrates with AI assistants via the Model Context Protocol (MCP) and offers features such as cAST algorithm for semantic code chunking, multi-hop semantic search, natural language queries, regex search without API keys, support for 22 languages, and local-first architecture. It provides intelligent code discovery by following semantic relationships and discovering related implementations. ChunkHound is built on the cAST algorithm from Carnegie Mellon University, ensuring structure-aware chunking that preserves code meaning. It supports universal language parsing and offers efficient updates for large codebases.

abi

ABI (Agentic Brain Infrastructure) is a Python-based AI Operating System designed to serve as the core infrastructure for building an Agentic AI Ontology Engine. It empowers organizations to integrate, manage, and scale AI-driven operations with multiple AI models, focusing on ontology, agent-driven workflows, and analytics. ABI emphasizes modularity and customization, providing a customizable framework aligned with international standards and regulatory frameworks. It offers features such as configurable AI agents, ontology management, integrations with external data sources, data processing pipelines, workflow automation, analytics, and data handling capabilities.

heurist-agent-framework

Heurist Agent Framework is a flexible multi-interface AI agent framework that allows processing text and voice messages, generating images and videos, interacting across multiple platforms, fetching and storing information in a knowledge base, accessing external APIs and tools, and composing complex workflows using Mesh Agents. It supports various platforms like Telegram, Discord, Twitter, Farcaster, REST API, and MCP. The framework is built on a modular architecture and provides core components, tools, workflows, and tool integration with MCP support.

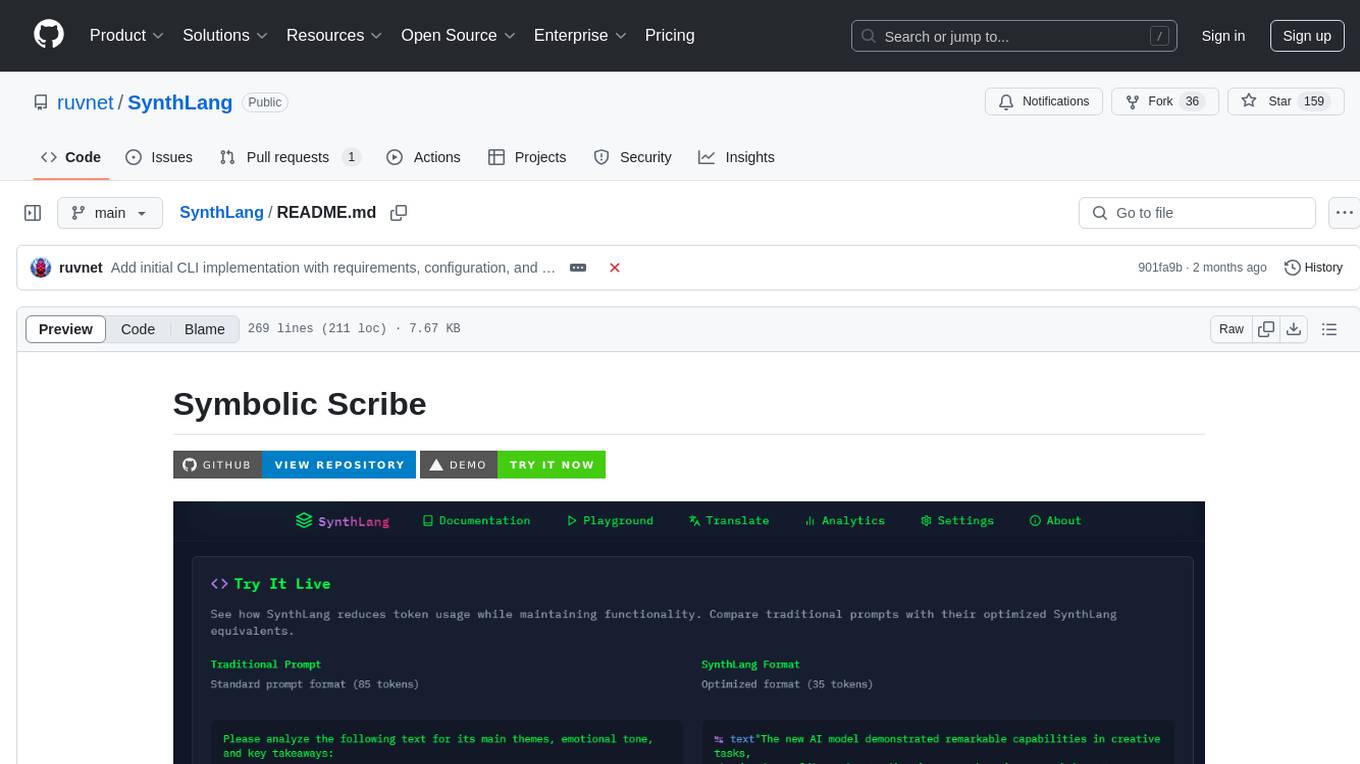

SynthLang

SynthLang is a tool designed to optimize AI prompts by reducing costs and improving processing speed. It brings academic rigor to prompt engineering, creating precise and powerful AI interactions. The tool includes core components like a Translator Engine, Performance Optimization, Testing Framework, and Technical Architecture. It offers mathematical precision, academic rigor, enhanced security, a modern interface, and instant testing. Users can integrate mathematical frameworks, model complex relationships, and apply structured prompts to various domains. Security features include API key management and data privacy. The tool also provides a CLI for prompt engineering and optimization capabilities.

OAD

OAD is a powerful open-source tool for analyzing and visualizing data. It provides a user-friendly interface for exploring datasets, generating insights, and creating interactive visualizations. With OAD, users can easily import data from various sources, clean and preprocess data, perform statistical analysis, and create customizable visualizations to communicate findings effectively. Whether you are a data scientist, analyst, or researcher, OAD can help you streamline your data analysis workflow and uncover valuable insights from your data.

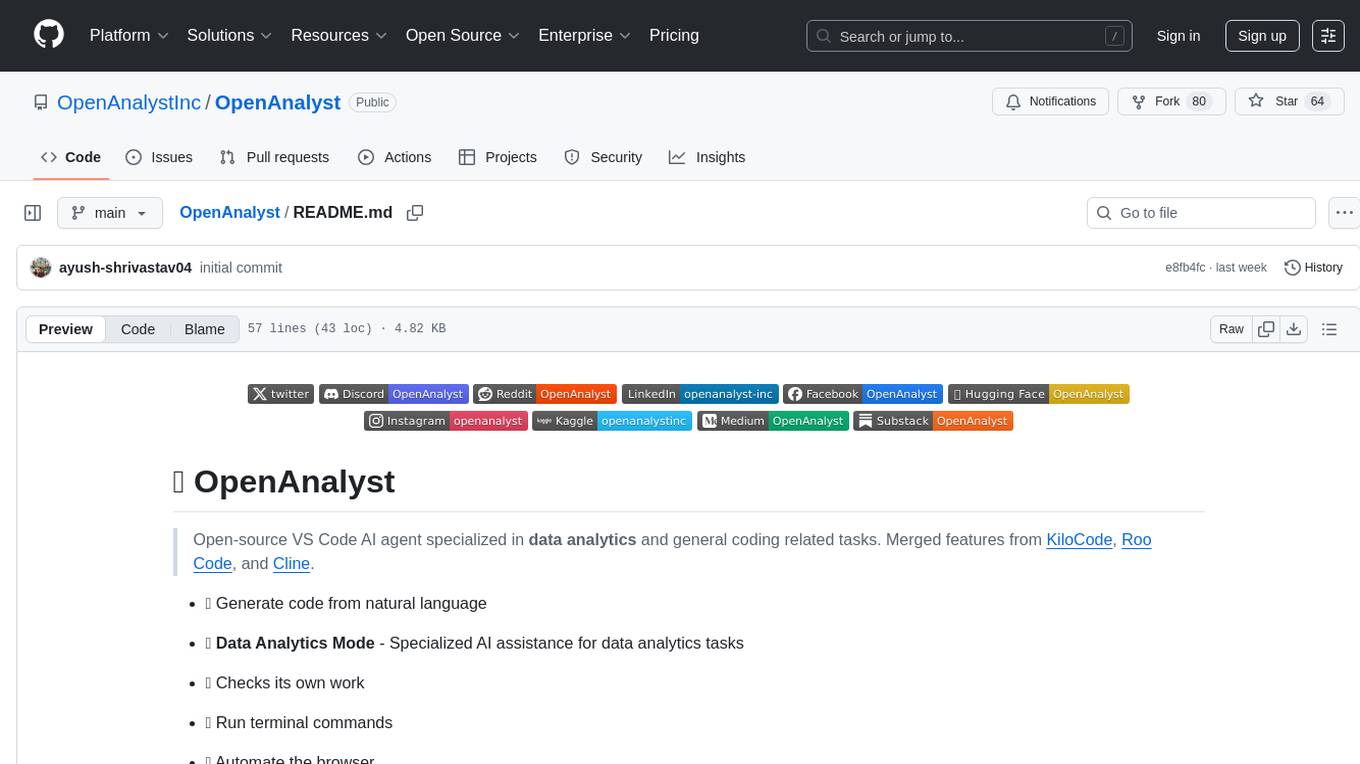

OpenAnalyst

OpenAnalyst is an open-source VS Code AI agent specialized in data analytics and general coding tasks. It merges features from KiloCode, Roo Code, and Cline, offering code generation from natural language, data analytics mode, self-checking, terminal command running, browser automation, latest AI models, and API keys option. It supports multi-mode operation for roles like Data Analyst, Code, Ask, and Debug. OpenAnalyst is a fork of KiloCode, combining the best features from Cline, Roo Code, and KiloCode, with enhancements like MCP Server Marketplace, automated refactoring, and support for latest AI models.

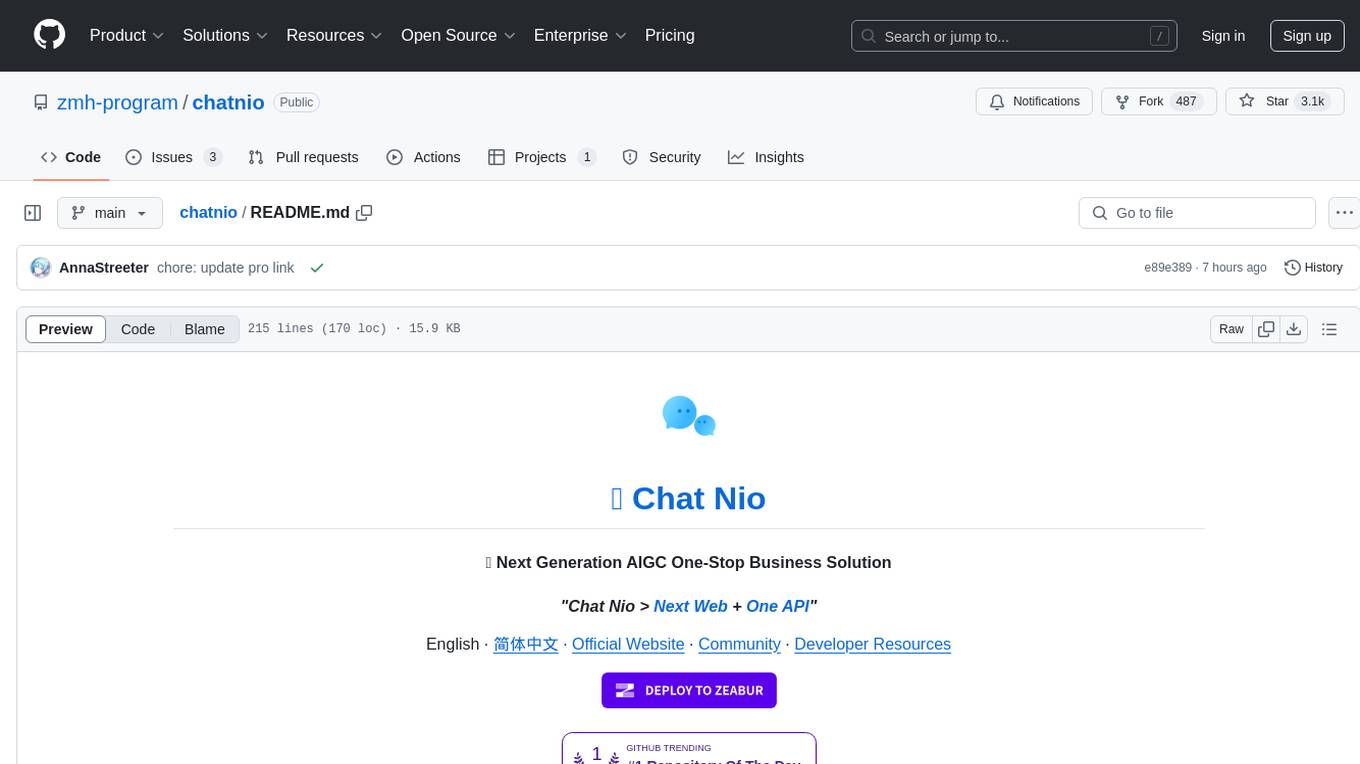

chatnio

Chat Nio is a next-generation AIGC one-stop business solution that combines the advantages of frontend-oriented lightweight deployment projects with powerful API distribution systems. It offers rich model support, beautiful UI design, complete Markdown support, multi-theme support, internationalization support, text-to-image support, powerful conversation sync, model market & preset system, rich file parsing, full model internet search, Progressive Web App (PWA) support, comprehensive backend management, multiple billing methods, innovative model caching, and additional features. The project aims to address limitations in conversation synchronization, billing, file parsing, conversation URL sharing, channel management, and API call support found in existing AIGC commercial sites, while also providing a user-friendly interface design and C-end features.

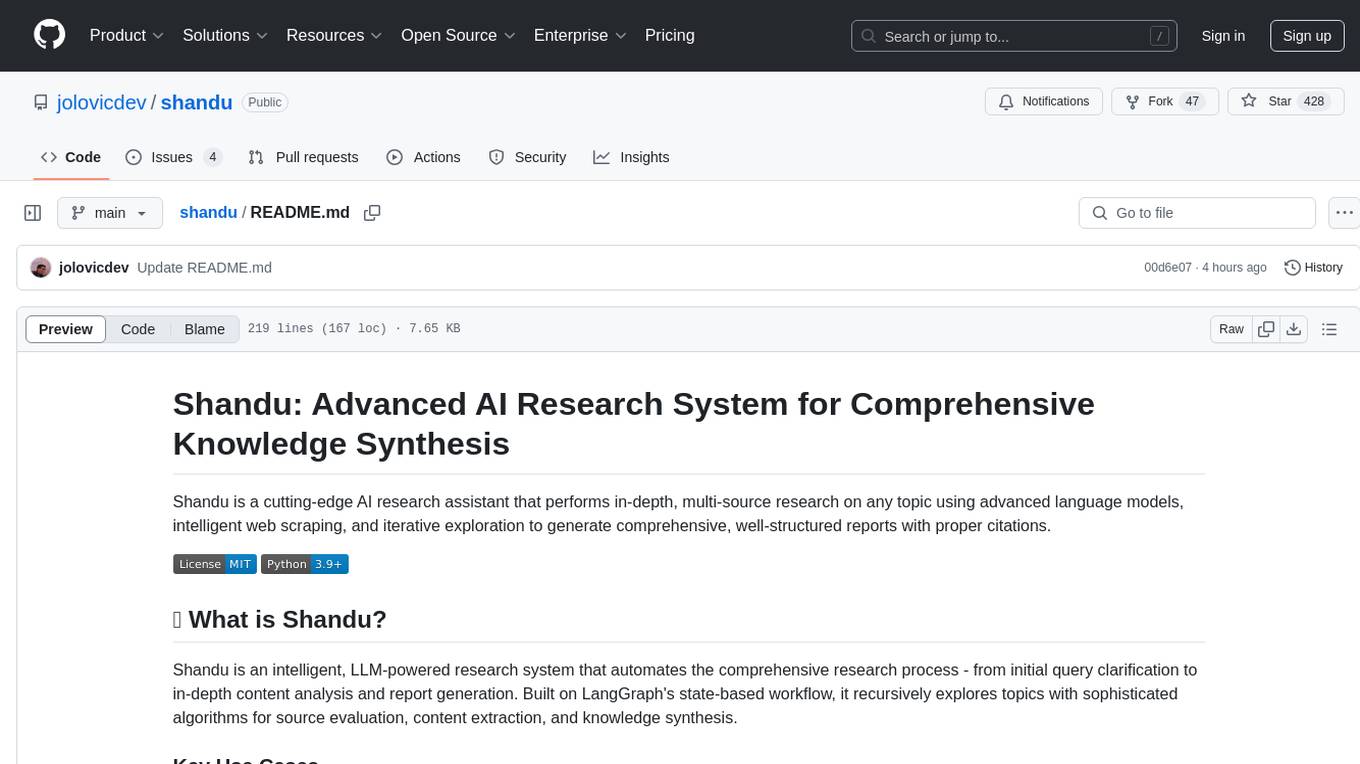

shandu

Shandu is an advanced AI research system that automates comprehensive research processes using language models, web scraping, and iterative exploration to generate well-structured reports with citations. It features intelligent state-based workflow, deep exploration, multi-source information synthesis, enhanced web scraping, smart source evaluation, content analysis pipeline, comprehensive report generation, parallel processing, adaptive search strategy, and full citation management.

persistent-ai-memory

Persistent AI Memory System is a comprehensive tool that offers persistent, searchable storage for AI assistants. It includes features like conversation tracking, MCP tool call logging, and intelligent scheduling. The system supports multiple databases, provides enhanced memory management, and offers various tools for memory operations, schedule management, and system health checks. It also integrates with various platforms like LM Studio, VS Code, Koboldcpp, Ollama, and more. The system is designed to be modular, platform-agnostic, and scalable, allowing users to handle large conversation histories efficiently.

For similar tasks

GhidrAssist

GhidrAssist is an advanced LLM-powered plugin for interactive reverse engineering assistance in Ghidra. It integrates Large Language Models (LLMs) to provide intelligent assistance for binary exploration and reverse engineering. The tool supports various OpenAI v1-compatible APIs, including local models and cloud providers. Key features include code explanation, interactive chat, custom queries, Graph-RAG knowledge system with semantic knowledge graph, community detection, security feature extraction, semantic graph tab, extended thinking/reasoning control, ReAct agentic mode, MCP integration, function calling, actions tab, RAG (Retrieval Augmented Generation), and RLHF dataset generation. The plugin uses a modular, service-oriented architecture with core services, Graph-RAG backend, data layer, and UI components.

watchtower

AIShield Watchtower is a tool designed to fortify the security of AI/ML models and Jupyter notebooks by automating model and notebook discoveries, conducting vulnerability scans, and categorizing risks into 'low,' 'medium,' 'high,' and 'critical' levels. It supports scanning of public GitHub repositories, Hugging Face repositories, AWS S3 buckets, and local systems. The tool generates comprehensive reports, offers a user-friendly interface, and aligns with industry standards like OWASP, MITRE, and CWE. It aims to address the security blind spots surrounding Jupyter notebooks and AI models, providing organizations with a tailored approach to enhancing their security efforts.

LLM-PLSE-paper

LLM-PLSE-paper is a repository focused on the applications of Large Language Models (LLMs) in Programming Language and Software Engineering (PL/SE) domains. It covers a wide range of topics including bug detection, specification inference and verification, code generation, fuzzing and testing, code model and reasoning, code understanding, IDE technologies, prompting for reasoning tasks, and agent/tool usage and planning. The repository provides a comprehensive collection of research papers, benchmarks, empirical studies, and frameworks related to the capabilities of LLMs in various PL/SE tasks.

invariant

Invariant Analyzer is an open-source scanner designed for LLM-based AI agents to find bugs, vulnerabilities, and security threats. It scans agent execution traces to identify issues like looping behavior, data leaks, prompt injections, and unsafe code execution. The tool offers a library of built-in checkers, an expressive policy language, data flow analysis, real-time monitoring, and extensible architecture for custom checkers. It helps developers debug AI agents, scan for security violations, and prevent security issues and data breaches during runtime. The analyzer leverages deep contextual understanding and a purpose-built rule matching engine for security policy enforcement.

OpenRedTeaming

OpenRedTeaming is a repository focused on red teaming for generative models, specifically large language models (LLMs). The repository provides a comprehensive survey on potential attacks on GenAI and robust safeguards. It covers attack strategies, evaluation metrics, benchmarks, and defensive approaches. The repository also implements over 30 auto red teaming methods. It includes surveys, taxonomies, attack strategies, and risks related to LLMs. The goal is to understand vulnerabilities and develop defenses against adversarial attacks on large language models.

Awesome-LLM4Cybersecurity

The repository 'Awesome-LLM4Cybersecurity' provides a comprehensive overview of the applications of Large Language Models (LLMs) in cybersecurity. It includes a systematic literature review covering topics such as constructing cybersecurity-oriented domain LLMs, potential applications of LLMs in cybersecurity, and research directions in the field. The repository analyzes various benchmarks, datasets, and applications of LLMs in cybersecurity tasks like threat intelligence, fuzzing, vulnerabilities detection, insecure code generation, program repair, anomaly detection, and LLM-assisted attacks.

quark-engine

Quark Engine is an AI-powered tool designed for analyzing Android APK files. It focuses on enhancing the detection process for auto-suggestion, enabling users to create detection workflows without coding. The tool offers an intuitive drag-and-drop interface for workflow adjustments and updates. Quark Agent, the core component, generates Quark Script code based on natural language input and feedback. The project is committed to providing a user-friendly experience for designing detection workflows through textual and visual methods. Various features are still under development and will be rolled out gradually.

vulnerability-analysis

The NVIDIA AI Blueprint for Vulnerability Analysis for Container Security showcases accelerated analysis on common vulnerabilities and exposures (CVE) at an enterprise scale, reducing mitigation time from days to seconds. It enables security analysts to determine software package vulnerabilities using large language models (LLMs) and retrieval-augmented generation (RAG). The blueprint is designed for security analysts, IT engineers, and AI practitioners in cybersecurity. It requires NVAIE developer license and API keys for vulnerability databases, search engines, and LLM model services. Hardware requirements include L40 GPU for pipeline operation and optional LLM NIM and Embedding NIM. The workflow involves LLM pipeline for CVE impact analysis, utilizing LLM planner, agent, and summarization nodes. The blueprint uses NVIDIA NIM microservices and Morpheus Cybersecurity AI SDK for vulnerability analysis.

For similar jobs

last_layer

last_layer is a security library designed to protect LLM applications from prompt injection attacks, jailbreaks, and exploits. It acts as a robust filtering layer to scrutinize prompts before they are processed by LLMs, ensuring that only safe and appropriate content is allowed through. The tool offers ultra-fast scanning with low latency, privacy-focused operation without tracking or network calls, compatibility with serverless platforms, advanced threat detection mechanisms, and regular updates to adapt to evolving security challenges. It significantly reduces the risk of prompt-based attacks and exploits but cannot guarantee complete protection against all possible threats.

aircrack-ng

Aircrack-ng is a comprehensive suite of tools designed to evaluate the security of WiFi networks. It covers various aspects of WiFi security, including monitoring, attacking (replay attacks, deauthentication, fake access points), testing WiFi cards and driver capabilities, and cracking WEP and WPA PSK. The tools are command line-based, allowing for extensive scripting and have been utilized by many GUIs. Aircrack-ng primarily works on Linux but also supports Windows, macOS, FreeBSD, OpenBSD, NetBSD, Solaris, and eComStation 2.

reverse-engineering-assistant

ReVA (Reverse Engineering Assistant) is a project aimed at building a disassembler agnostic AI assistant for reverse engineering tasks. It utilizes a tool-driven approach, providing small tools to the user to empower them in completing complex tasks. The assistant is designed to accept various inputs, guide the user in correcting mistakes, and provide additional context to encourage exploration. Users can ask questions, perform tasks like decompilation, class diagram generation, variable renaming, and more. ReVA supports different language models for online and local inference, with easy configuration options. The workflow involves opening the RE tool and program, then starting a chat session to interact with the assistant. Installation includes setting up the Python component, running the chat tool, and configuring the Ghidra extension for seamless integration. ReVA aims to enhance the reverse engineering process by breaking down actions into small parts, including the user's thoughts in the output, and providing support for monitoring and adjusting prompts.

AutoAudit

AutoAudit is an open-source large language model specifically designed for the field of network security. It aims to provide powerful natural language processing capabilities for security auditing and network defense, including analyzing malicious code, detecting network attacks, and predicting security vulnerabilities. By coupling AutoAudit with ClamAV, a security scanning platform has been created for practical security audit applications. The tool is intended to assist security professionals with accurate and fast analysis and predictions to combat evolving network threats.

aif

Arno's Iptables Firewall (AIF) is a single- & multi-homed firewall script with DSL/ADSL support. It is a free software distributed under the GNU GPL License. The script provides a comprehensive set of configuration files and plugins for setting up and managing firewall rules, including support for NAT, load balancing, and multirouting. It offers detailed instructions for installation and configuration, emphasizing security best practices and caution when modifying settings. The script is designed to protect against hostile attacks by blocking all incoming traffic by default and allowing users to configure specific rules for open ports and network interfaces.

watchtower

AIShield Watchtower is a tool designed to fortify the security of AI/ML models and Jupyter notebooks by automating model and notebook discoveries, conducting vulnerability scans, and categorizing risks into 'low,' 'medium,' 'high,' and 'critical' levels. It supports scanning of public GitHub repositories, Hugging Face repositories, AWS S3 buckets, and local systems. The tool generates comprehensive reports, offers a user-friendly interface, and aligns with industry standards like OWASP, MITRE, and CWE. It aims to address the security blind spots surrounding Jupyter notebooks and AI models, providing organizations with a tailored approach to enhancing their security efforts.

Academic_LLM_Sec_Papers

Academic_LLM_Sec_Papers is a curated collection of academic papers related to LLM Security Application. The repository includes papers sorted by conference name and published year, covering topics such as large language models for blockchain security, software engineering, machine learning, and more. Developers and researchers are welcome to contribute additional published papers to the list. The repository also provides information on listed conferences and journals related to security, networking, software engineering, and cryptography. The papers cover a wide range of topics including privacy risks, ethical concerns, vulnerabilities, threat modeling, code analysis, fuzzing, and more.

DeGPT

DeGPT is a tool designed to optimize decompiler output using Large Language Models (LLM). It requires manual installation of specific packages and setting up API key for OpenAI. The tool provides functionality to perform optimization on decompiler output by running specific scripts.