abi

AI Operating System - Build your own AI using ontologies as the unifying field connecting data, models, workflows, and systems.

Stars: 61

ABI (Agentic Brain Infrastructure) is a Python-based AI Operating System designed to serve as the core infrastructure for building an Agentic AI Ontology Engine. It empowers organizations to integrate, manage, and scale AI-driven operations with multiple AI models, focusing on ontology, agent-driven workflows, and analytics. ABI emphasizes modularity, providing a customizable framework aligned with international standards and regulatory frameworks. It offers features such as configurable AI agents, ontology management, integrations with external data sources, data processing pipelines, workflow automation, analytics, and data handling capabilities.

README:

A multi-agent AI System that uses ontologies to unify data, AI models, and workflows. ⭐ Star and follow to stay updated!

ABI (Agentic Brain Infrastructure) is an AI Operating System that uses intent-driven routing to match user requests with pre-configured responses and actions. When you make a request, ABI identifies your intent and triggers the appropriate response - whether that's a direct answer, tool usage, or routing to a specific AI agent.

The system combines multiple AI models (ChatGPT, Claude, Gemini, Grok, Llama, Mistral) with a semantic knowledge graph to map intents to actions, enabling intelligent routing based on what you're trying to accomplish.
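As a rough illustration of intent-driven routing, the sketch below matches a request against a few registered intents and returns the response type to trigger. It is not ABI's implementation: the `Intent` class, the `route` function, the threshold, and the sample intents are all hypothetical, and plain word-count cosine similarity stands in for real embeddings.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Intent:
    example: str  # sample phrasing of the intent
    kind: str     # "answer", "tool", or "agent"
    target: str   # the answer text, tool name, or agent name


def _vector(text: str) -> Counter:
    # Toy stand-in for an embedding: lowercase word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def route(request: str, intents: List[Intent], threshold: float = 0.3) -> Optional[Intent]:
    """Return the best-matching intent, or None if nothing is similar enough."""
    query = _vector(request)
    best = max(intents, key=lambda i: _cosine(query, _vector(i.example)), default=None)
    if best is None or _cosine(query, _vector(best.example)) < threshold:
        return None
    return best


# Hypothetical intents covering the three response types described above.
INTENTS = [
    Intent("what is your name", "answer", "I am ABI."),
    Intent("search the web for news", "tool", "web_search"),
    Intent("write some python code", "agent", "software_engineer"),
]

match = route("please write python code for me", INTENTS)
print(match.kind, match.target)
```

A request that resembles no registered intent returns `None`, which a real system could treat as a cue to fall back to a general-purpose model.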

graph TD

%% === USER INTERACTION LAYER ===

USER["👤 User"] <-->|"uses"| APPS["📱 Apps<br/>Chat | API | Dashboard"]

APPS <-->|"talks to"| AGENTS

%% === AGENTS LAYER ===

subgraph AGENTS["Multi-Agents System"]

ABI["🧠 ABI<br/>AI SuperAssistant"]

CUSTOM_AGENTS["🎯 Agents<br/>Application & Domain Experts"]

ABI -->|"coordinates"| CUSTOM_AGENTS

end

%% === STORAGE LAYER ===

subgraph STORAGE["Storage"]

MEMORY[("🐘 Memory<br/>Persisting context")]

TRIPLESTORE[("🧠 Semantic Knowledge Graph<br/>Information, Relations & Reasoning")]

VECTORDB[("🔍 Vector DB<br/>Embeddings")]

FILES[("💾 Object Storage<br/>Files")]

end

AGENTS <-->|"query"| TRIPLESTORE

AGENTS <-->|"search"| VECTORDB

AGENTS <-->|"retrieve"| FILES

AGENTS <-->|"access"| MEMORY

FILES -->|"index in"| VECTORDB

MEMORY -->|"index in"| VECTORDB

%% === EXECUTION LAYER ===

subgraph C["Components"]

MODELS["🤖 AI Models<br/>Open & Closed Source"]

ANALYTICS["📊 Analytics<br/>Dashboards & Reports"]

WORKFLOWS["🔄 Workflows<br/>Processes"]

ONTOLOGIES["📚 Ontologies<br/>BFO Structure"]

PIPELINES["⚙️ Pipelines<br/>Data → Semantic"]

INTEGRATIONS["🔌 Integrations<br/>APIs, Files"]

end

AGENTS <-->|"use"| ONTOLOGIES

AGENTS -->|"execute"| INTEGRATIONS["🔌 Integrations<br/>APIs, Exports"]

AGENTS -->|"execute"| PIPELINES["⚙️ Pipelines<br/>Data → Semantic"]

AGENTS -->|"access"| ANALYTICS["📊 Analytics<br/>Dashboards & Reports"]

AGENTS -->|"execute"| WORKFLOWS["🔄 Workflows<br/>Processes"]

AGENTS -->|"use"| MODELS["🤖 AI Models<br/>Open & Closed Source"]

%% === DATA PIPELINE ===

ONTOLOGIES-->|"structure"| PIPELINES

PIPELINES-->|"use"| WORKFLOWS

PIPELINES-->|"use"| INTEGRATIONS

PIPELINES -->|"create triples"| TRIPLESTORE

WORKFLOWS-->|"use"| INTEGRATIONS

%% === KINETIC ACTIONS ===

TRIPLESTORE -.->|"trigger"| PIPELINES

TRIPLESTORE -.->|"trigger"| WORKFLOWS

TRIPLESTORE -.->|"trigger"| INTEGRATIONS

%% === FILE GENERATION ===

WORKFLOWS -->|"create"| FILES

WORKFLOWS -->|"generate"| ANALYTICS

INTEGRATIONS -->|"create"| FILES

%% === STYLING ===

classDef user fill:#2c3e50,stroke:#fff,stroke-width:2px,color:#fff

classDef abi fill:#e74c3c,stroke:#fff,stroke-width:3px,color:#fff

classDef apps fill:#9b59b6,stroke:#fff,stroke-width:2px,color:#fff

classDef agents fill:#3498db,stroke:#fff,stroke-width:2px,color:#fff

classDef workflows fill:#1abc9c,stroke:#fff,stroke-width:2px,color:#fff

classDef integrations fill:#f39c12,stroke:#fff,stroke-width:2px,color:#fff

classDef ontologies fill:#27ae60,stroke:#fff,stroke-width:2px,color:#fff

classDef pipelines fill:#e67e22,stroke:#fff,stroke-width:2px,color:#fff

classDef analytics fill:#8e44ad,stroke:#fff,stroke-width:2px,color:#fff

classDef models fill:#e91e63,stroke:#fff,stroke-width:2px,color:#fff

classDef infrastructure fill:#95a5a6,stroke:#fff,stroke-width:2px,color:#fff

class USER user

class ABI abi

class APPS apps

class CUSTOM_AGENTS agents

class WORKFLOWS workflows

class INTEGRATIONS integrations

class ONTOLOGIES ontologies

class PIPELINES pipelines

class ANALYTICS analytics

class MODELS models

class TRIPLESTORE,VECTORDB,MEMORY,FILES infrastructure

ABI is an AI Operating System that orchestrates intelligent agents, data systems, and workflows through semantic understanding and automated reasoning.

1. User Interaction Layer

- Multiple Interfaces: Chat, REST API, Web Dashboard, MCP Protocol (Claude Desktop integration)

- Universal Access: Single entry point to all AI capabilities and domain expertise

2. Agent Orchestration Layer using LangGraph agents

- ABI SuperAssistant: Central coordinator that analyzes requests and routes to optimal resources

- AI Model Agents: Access to ChatGPT, Claude, Gemini, Grok, Llama, Mistral, and local models

- Domain Expert Agents: 20+ specialized agents (Software Engineer, Data Analyst, Content Creator, etc.)

- Application Expert Agents: 20+ specialized agents (GitHub, Google, LinkedIn, PowerPoint, etc.)

3. Adaptive Storage Layer based on Hexagonal architecture

- Semantic Knowledge Graph: RDF triples in BFO-compliant ontologies for reasoning and relationships (default: Oxigraph)

- Vector Database: Intent embeddings for semantic similarity matching and context understanding (default: Qdrant)

- Memory System: For conversation history and persistent context (default: PostgreSQL)

- File Storage: Generated reports, documents, and workflow outputs (default: AWS S3)
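The hexagonal (ports and adapters) idea behind the storage layer can be sketched as follows. This is a minimal illustration, not ABI's actual code: `ObjectStoragePort`, `InMemoryObjectStorage`, and `save_report` are hypothetical names. Core logic depends only on a port, so the default backend can be swapped without touching that logic.

```python
from typing import Dict, Protocol


class ObjectStoragePort(Protocol):
    """Port: the only storage interface the core logic depends on."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryObjectStorage:
    """Adapter for tests and local runs; an S3-backed adapter would expose the same methods."""

    def __init__(self) -> None:
        self._blobs: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._blobs[key] = data

    def get(self, key: str) -> bytes:
        return self._blobs[key]


def save_report(storage: ObjectStoragePort, name: str, text: str) -> str:
    """Core logic: knows nothing about which backend is plugged in."""
    key = f"reports/{name}.txt"
    storage.put(key, text.encode("utf-8"))
    return key


storage = InMemoryObjectStorage()
key = save_report(storage, "weekly", "All systems nominal.")
print(key, storage.get(key))
```

The same pattern would apply to the other three stores: one port per concern, with the listed defaults as interchangeable adapters.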

4. Execution Components Layer

- Ontologies: BFO-structured knowledge that defines how data relates and flows

- Pipelines: Automated data processing that transforms raw information into semantic knowledge

- Workflows: Business process automation triggered by user requests or system events

- Integrations: Connections to external APIs, databases, and applications

- Analytics: Real-time dashboards and reporting capabilities

- Request Analysis: ABI receives your request through any interface

- Semantic Understanding: Vector search and SPARQL queries identify intent and context

- Agent Routing: Knowledge graph determines the best agent/model combination

- Resource Coordination: Agents access ontologies, trigger workflows, and use integrations as needed

- Knowledge Creation: Results are stored back into the knowledge graph for future reasoning

- Kinetic Actions: System automatically triggers related processes and workflows based on new knowledge
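The last two steps above (storing results as knowledge, then triggering related processes) can be sketched with a tiny in-memory graph. This is an assumption-laden illustration: `TinyGraph`, its `on` and `add` methods, and the invoice data are hypothetical, and a real deployment would use a triple store rather than a Python set.

```python
from typing import Callable, List, Optional, Set, Tuple

Triple = Tuple[str, str, str]
Pattern = Tuple[Optional[str], Optional[str], Optional[str]]  # None matches anything


class TinyGraph:
    """In-memory stand-in for a triple store with insert-time triggers."""

    def __init__(self) -> None:
        self.triples: Set[Triple] = set()
        self._triggers: List[Tuple[Pattern, Callable[[Triple], None]]] = []

    def on(self, pattern: Pattern, action: Callable[[Triple], None]) -> None:
        """Register an action to run when a newly added triple matches the pattern."""
        self._triggers.append((pattern, action))

    def add(self, triple: Triple) -> None:
        if triple in self.triples:
            return  # already known: no new knowledge, so no triggers fire
        self.triples.add(triple)
        for pattern, action in self._triggers:
            if all(p is None or p == t for p, t in zip(pattern, triple)):
                action(triple)


graph = TinyGraph()
started: List[str] = []

# Hypothetical workflow: react whenever any subject gets the status "overdue".
graph.on((None, "hasStatus", "overdue"), lambda t: started.append(f"remind:{t[0]}"))

graph.add(("invoice-42", "hasStatus", "overdue"))
graph.add(("invoice-42", "hasStatus", "overdue"))  # duplicate, ignored
graph.add(("invoice-43", "hasStatus", "paid"))     # stored, but matches no trigger
print(started)
```

Firing triggers only for triples that are actually new keeps repeated writes of the same fact from restarting the same workflow.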

Ontology-Based AI to Preserve the Freedom to Reason: The convergence of semantic alignment and kinetic action through ontology-driven systems represents one of the most powerful technologies ever created. When such transformative capabilities exist behind closed doors or within a single organization's control, it threatens the fundamental freedom to reason and act independently. We believe this power should be distributed, not concentrated - because the ability to understand, reason about, and act upon complex information is a cornerstone of human autonomy and democratic society.

Core Capabilities for the Innovation Community:

- Ontology-Driven Intelligence: Semantic understanding that connects data, meaning, and action

- Knowledge Graph Operations: Real-time reasoning over complex, interconnected information

- Automated Decision Systems: AI that understands context and triggers appropriate responses

- Semantic Data Integration: Connect disparate systems through shared understanding, not just APIs

The Open Source Advantage:

- Research & Education: Academic institutions and researchers can explore ontological AI without barriers

- Innovation Acceleration: Developers can build upon proven semantic architectures

- Community Collaboration: Collective advancement of ontology-based AI methodologies

- Accessible Entry Point: Learn and experiment with enterprise-grade semantic technologies

Moreover, this project is built with international standards and regulatory frameworks as guiding principles, including ISO/IEC 42001:2023 (AI Management Systems) and ISO/IEC 21838-2:2021 (Basic Formal Ontology), with forward compatibility with emerging regulations like the EU AI Act. ABI provides a customizable framework suitable for individuals and organizations aiming to create intelligent, automated systems aligned to their needs.

For innovators who want to own their AI:

- 👤 Individuals: Run locally, choose your models, own your data

- ⚡ Pro: Automate workflows, optimize AI costs

- 👥 Teams: Share knowledge, build custom agents

- 🏢 Enterprise: Deploy organization-wide, integrate legacy systems, maintain full control

ABI Local & Open Source + Naas.ai Cloud = Complete AI Operating System

- 🏠 Local: Open source, privacy-first, full control

- ☁️ Cloud: Managed infrastructure, marketplace, enterprise features

- 🔗 Hybrid: Start local, scale cloud, seamless migration

For cloud users, we offer Naas AI Credits that aggregate multiple AI models on our platform - giving you access to ChatGPT, Claude, Gemini, and more through a single, cost-optimized interface. Available for anyone with a naas.ai workspace account.

- Supervisor: ABI, a Supervisor Agent with intelligent routing across all AI models.

- Cloud: ChatGPT, Claude, Gemini, Grok, Llama, Mistral, Perplexity

- Local: Privacy-focused Qwen, DeepSeek, Gemma (via Ollama)

- Domain Expert Agents: 20+ specialized agents (Software Engineer, Content Creator, Data Engineer, Accountant, Project Manager, etc.)

- Applications Module: GitHub, LinkedIn, Google Search, PostgreSQL, ArXiv, Naas, Git, PowerPoint, and more

- Modular Architecture: Enable/disable any module via config.yaml

- Semantic Knowledge Graph: BFO-compliant ontologies with Oxigraph backend

- SPARQL Queries: 30+ optimized queries for intelligent agent routing

- Vector Search: Intent matching via embeddings and similarity search

- Object Storage: File storage and retrieval with MinIO compatibility

- Memory: Persistent context and conversation history storage

- Integrations: Seamless connectivity with external APIs and data export capabilities

- Workflows: End-to-end automation of complex business processes with intelligent task orchestration

- Pipelines: Data processing and semantic transformation

- Event-Driven: Real-time reactivity with automatic triggers based on knowledge graph updates

- Cache System: Intelligent caching layer to optimize API usage and manage rate limits efficiently

- Terminal: Interactive chat with any agent

- REST API: HTTP endpoints for all agents and workflows

- MCP Protocol: Integration with Claude Desktop and VS Code

- Web UI: Knowledge graph explorer and SPARQL editor
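To illustrate the modular architecture item in the list above, here is a minimal sketch of enabling and disabling modules from configuration. The schema is an assumption: the `modules`, `name`, and `enabled` keys, the registry, and `load_enabled_modules` are hypothetical and do not describe ABI's actual config.yaml format. A plain dictionary stands in for the parsed YAML file.

```python
from typing import Callable, Dict, List

# Stand-in for the parsed contents of config.yaml. The "modules", "name",
# and "enabled" keys are assumptions, not ABI's documented schema.
CONFIG = {
    "modules": [
        {"name": "github", "enabled": True},
        {"name": "linkedin", "enabled": False},
        {"name": "arxiv", "enabled": True},
    ]
}

# Hypothetical registry mapping module names to loader functions.
REGISTRY: Dict[str, Callable[[], str]] = {
    "github": lambda: "github ready",
    "linkedin": lambda: "linkedin ready",
    "arxiv": lambda: "arxiv ready",
}


def load_enabled_modules(config: dict, registry: Dict[str, Callable[[], str]]) -> List[str]:
    """Run the loader of every module marked enabled; skip the rest."""
    loaded = []
    for entry in config.get("modules", []):
        if not entry.get("enabled", False):
            continue
        loader = registry.get(entry["name"])
        if loader is None:
            raise KeyError(f"unknown module: {entry['name']}")
        loader()
        loaded.append(entry["name"])
    return loaded


loaded = load_enabled_modules(CONFIG, REGISTRY)
print(loaded)
```

Treating a missing `enabled` key as disabled means a module only loads when the configuration asks for it explicitly.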

git clone https://github.com/jupyter-naas/abi.git

cd abi

make

What happens:

- Setup wizard walks you through configuration (API keys, preferences)

- Local services start automatically (knowledge graph, database)

- ABI chat opens - your AI SuperAssistant that routes to the best model for each task

Chat commands:

- @claude analyze this data - Route to Claude for analysis

- @qwen write some code - Use local Qwen for privacy

- make chat agent=ChatGPTAgent - Run ChatGPT directly

- /? - Show all available agents and commands

- /exit - End session
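The command forms above suggest three kinds of chat input: slash commands, @-mentions that route to a named agent, and plain messages. The sketch below classifies a line accordingly. It is illustrative only and not ABI's actual parser; `ChatInput` and `parse_chat_input` are hypothetical names.

```python
import re
from typing import NamedTuple, Optional


class ChatInput(NamedTuple):
    kind: str             # "command", "mention", or "message"
    agent: Optional[str]  # agent name for mentions, else None
    text: str             # command name or message body


_MENTION = re.compile(r"^@(\w+)\s+(.*)$", re.DOTALL)


def parse_chat_input(line: str) -> ChatInput:
    """Classify one line of chat input (illustrative, not ABI's actual parser)."""
    line = line.strip()
    if line.startswith("/"):
        return ChatInput("command", None, line[1:])
    match = _MENTION.match(line)
    if match:
        return ChatInput("mention", match.group(1).lower(), match.group(2))
    return ChatInput("message", None, line)


print(parse_chat_input("@claude analyze this data"))
print(parse_chat_input("/exit"))
```

Plain messages carry no agent, which leaves the routing decision to the supervisor.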

Other interfaces:

make api # REST API (http://localhost:9879)

make oxigraph-explorer # Knowledge graph browser

ABI is in active R&D and deploying projects with collaboration between:

- NaasAI - Applied Research Lab focused on creating a universal data & AI platform that can connect the needs of individuals and organizations

- OpenTeams - Open SaaS infrastructure platform led by Python ecosystem pioneers, providing enterprise-grade open source AI/ML solutions and packaging expertise

- University at Buffalo - Research university providing academic foundation and institutional support

- National Center for Ontological Research (NCOR) - Leading research center for ontological foundations and formal knowledge representation

- Forvis Mazars - Global audit and consulting firm providing governance and risk management expertise

This collaborative effort aims to better manage and control the way we use AI in society, ensuring responsible development and deployment of agentic AI systems through rigorous research, international standards compliance, and professional oversight.

ABI development is supported through:

- Applied Research Grants - Funding for ontological AI research and development

- Academic Partnership - University at Buffalo research collaboration and institutional support

- Industry Partnerships - Strategic partnerships including Quansight, Forvis Mazars, VSquared AI, and other enterprise collaborators

- Open Source Community - Community contributions, collaborative development, and infrastructure support from OpenTeams

For funding opportunities, research partnerships, or enterprise support, contact us at [email protected]

We welcome contributions! Please read the contributing guidelines for more information.

ABI Framework is open-source and available for use under the MIT license. Because this project evolves rapidly, professionals and enterprises are encouraged to contact our support for custom services at [email protected]


Alternative AI tools for abi

Similar Open Source Tools

abi

ABI (Agentic Brain Infrastructure) is a Python-based AI Operating System designed to serve as the core infrastructure for building an Agentic AI Ontology Engine. It empowers organizations to integrate, manage, and scale AI-driven operations with multiple AI models, focusing on ontology, agent-driven workflows, and analytics. ABI emphasizes modularity and customization, providing a customizable framework aligned with international standards and regulatory frameworks. It offers features such as configurable AI agents, ontology management, integrations with external data sources, data processing pipelines, workflow automation, analytics, and data handling capabilities.

Zettelgarden

Zettelgarden is a human-centric, open-source personal knowledge management system that helps users develop and maintain their understanding of the world. It focuses on creating and connecting atomic notes, thoughtful AI integration, and scalability from personal notes to company knowledge bases. The project is actively evolving, with features subject to change based on community feedback and development priorities.

heurist-agent-framework

Heurist Agent Framework is a flexible multi-interface AI agent framework that allows processing text and voice messages, generating images and videos, interacting across multiple platforms, fetching and storing information in a knowledge base, accessing external APIs and tools, and composing complex workflows using Mesh Agents. It supports various platforms like Telegram, Discord, Twitter, Farcaster, REST API, and MCP. The framework is built on a modular architecture and provides core components, tools, workflows, and tool integration with MCP support.

ComfyUI-Copilot

ComfyUI-Copilot is an intelligent assistant built on the Comfy-UI framework that simplifies and enhances the AI algorithm debugging and deployment process through natural language interactions. It offers intuitive node recommendations, workflow building aids, and model querying services to streamline development processes. With features like interactive Q&A bot, natural language node suggestions, smart workflow assistance, and model querying, ComfyUI-Copilot aims to lower the barriers to entry for beginners, boost development efficiency with AI-driven suggestions, and provide real-time assistance for developers.

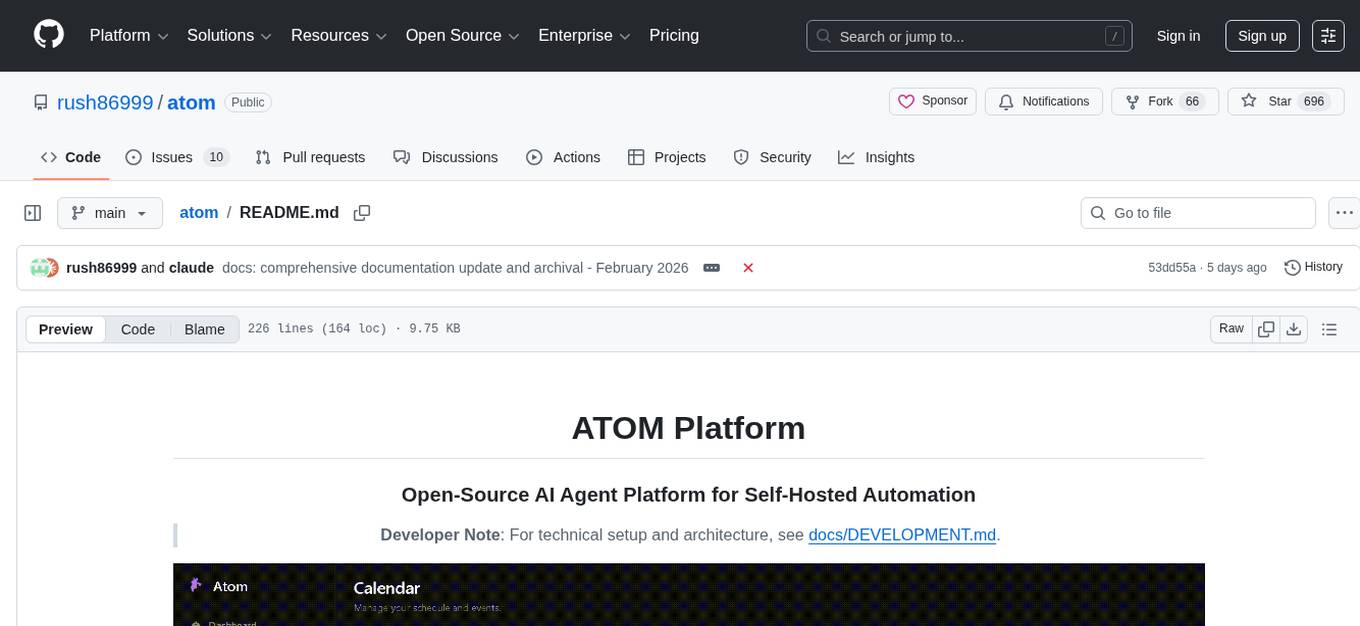

atom

Atom is an open-source, self-hosted AI agent platform that allows users to automate workflows by interacting with AI agents. Users can speak or type requests, and Atom's specialty agents can plan, verify, and execute complex workflows across various tech stacks. Unlike SaaS alternatives, Atom runs entirely on the user's infrastructure, ensuring data privacy. The platform offers features such as voice interface, specialty agents for sales, marketing, and engineering, browser and device automation, universal memory and context, agent governance system, deep integrations, dynamic skills, and more. Atom is designed for business automation, multi-agent workflows, and enterprise governance.

OpenAnalyst

OpenAnalyst is an open-source VS Code AI agent specialized in data analytics and general coding tasks. It merges features from KiloCode, Roo Code, and Cline, offering code generation from natural language, data analytics mode, self-checking, terminal command running, browser automation, latest AI models, and API keys option. It supports multi-mode operation for roles like Data Analyst, Code, Ask, and Debug. OpenAnalyst is a fork of KiloCode, combining the best features from Cline, Roo Code, and KiloCode, with enhancements like MCP Server Marketplace, automated refactoring, and support for latest AI models.

ai

Jetify's AI SDK for Go is a unified interface for interacting with multiple AI providers including OpenAI, Anthropic, and more. It addresses the challenges of fragmented ecosystems, vendor lock-in, poor Go developer experience, and complex multi-modal handling by providing a unified interface, Go-first design, production-ready features, multi-modal support, and extensible architecture. The SDK supports language models, embeddings, image generation, multi-provider support, multi-modal inputs, tool calling, and structured outputs.

aigne-hub

AIGNE Hub is a unified AI gateway that manages connections to multiple LLM and AIGC providers, eliminating the complexity of handling API keys, usage tracking, and billing across different AI services. It provides self-hosting capabilities, multi-provider management, unified security, usage analytics, flexible billing, and seamless integration with the AIGNE framework. The tool supports various AI providers and deployment scenarios, catering to both enterprise self-hosting and service provider modes. Users can easily deploy and configure AI providers, enable billing, and utilize core capabilities such as chat completions, image generation, embeddings, and RESTful APIs. AIGNE Hub ensures secure access, encrypted API key management, user permissions, and audit logging. Built with modern technologies like AIGNE Framework, Node.js, TypeScript, React, SQLite, and Blocklet for cloud-native deployment.

eureka-framework

The Eureka Framework is an open-source toolkit that leverages advanced Artificial Intelligence and Decentralized Science principles to revolutionize scientific discovery. It enables researchers, developers, and decentralized organizations to explore scientific papers, conduct AI-driven experiments, monetize research contributions, provide token-gated access to AI agents, and customize AI agents for specific research domains. The framework also offers features like a RESTful API, robust scheduler for task automation, and webhooks for real-time notifications, empowering users to automate research tasks, enhance productivity, and foster a committed research community.

tensorzero

TensorZero is an open-source platform that helps LLM applications graduate from API wrappers into defensible AI products. It enables a data & learning flywheel for LLMs by unifying inference, observability, optimization, and experimentation. The platform includes a high-performance model gateway, structured schema-based inference, observability, experimentation, and data warehouse for analytics. TensorZero Recipes optimize prompts and models, and the platform supports experimentation features and GitOps orchestration for deployment.

flow-like

Flow-Like is an enterprise-grade workflow operating system built upon Rust for uncompromising performance, efficiency, and code safety. It offers a modular frontend for apps, a rich set of events, a node catalog, a powerful no-code workflow IDE, and tools to manage teams, templates, and projects within organizations. With typed workflows, users can create complex, large-scale workflows with clear data origins, transformations, and contracts. Flow-Like is designed to automate any process through seamless integration of LLM, ML-based, and deterministic decision-making instances.

persistent-ai-memory

Persistent AI Memory System is a comprehensive tool that offers persistent, searchable storage for AI assistants. It includes features like conversation tracking, MCP tool call logging, and intelligent scheduling. The system supports multiple databases, provides enhanced memory management, and offers various tools for memory operations, schedule management, and system health checks. It also integrates with various platforms like LM Studio, VS Code, Koboldcpp, Ollama, and more. The system is designed to be modular, platform-agnostic, and scalable, allowing users to handle large conversation histories efficiently.

dspy.rb

DSPy.rb is a Ruby framework for building reliable LLM applications using composable, type-safe modules. It enables developers to define typed signatures and compose them into pipelines, offering a more structured approach compared to traditional prompting. The framework embraces Ruby conventions and adds innovations like CodeAct agents and enhanced production instrumentation, resulting in scalable LLM applications that are robust and efficient. DSPy.rb is actively developed, with a focus on stability and real-world feedback through the 0.x series before reaching a stable v1.0 API.

astron-agent

Astron Agent is an enterprise-grade, commercial-friendly Agentic Workflow development platform that integrates AI workflow orchestration, model management, AI and MCP tool integration, RPA automation, and team collaboration features. It supports high-availability deployment, enabling organizations to rapidly build scalable, production-ready intelligent agent applications and establish their AI foundation for the future. The platform is stable, reliable, and business-friendly, with key features such as enterprise-grade high availability, intelligent RPA integration, ready-to-use tool ecosystem, and flexible large model support.

databend

Databend is an open-source cloud data warehouse built in Rust, offering fast query execution and data ingestion for complex analysis of large datasets. It integrates with major cloud platforms, provides high performance with AI-powered analytics, supports multiple data formats, ensures data integrity with ACID transactions, offers flexible indexing options, and features community-driven development. Users can try Databend through a serverless cloud or Docker installation, and perform tasks such as data import/export, querying semi-structured data, managing users/databases/tables, and utilizing AI functions.

For similar tasks

askui

AskUI is a reliable, automated end-to-end automation tool that only depends on what is shown on your screen instead of the technology or platform you are running on.

abi

ABI (Agentic Brain Infrastructure) is a Python-based AI Operating System designed to serve as the core infrastructure for building an Agentic AI Ontology Engine. It empowers organizations to integrate, manage, and scale AI-driven operations with multiple AI models, focusing on ontology, agent-driven workflows, and analytics. ABI emphasizes modularity and customization, providing a customizable framework aligned with international standards and regulatory frameworks. It offers features such as configurable AI agents, ontology management, integrations with external data sources, data processing pipelines, workflow automation, analytics, and data handling capabilities.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.