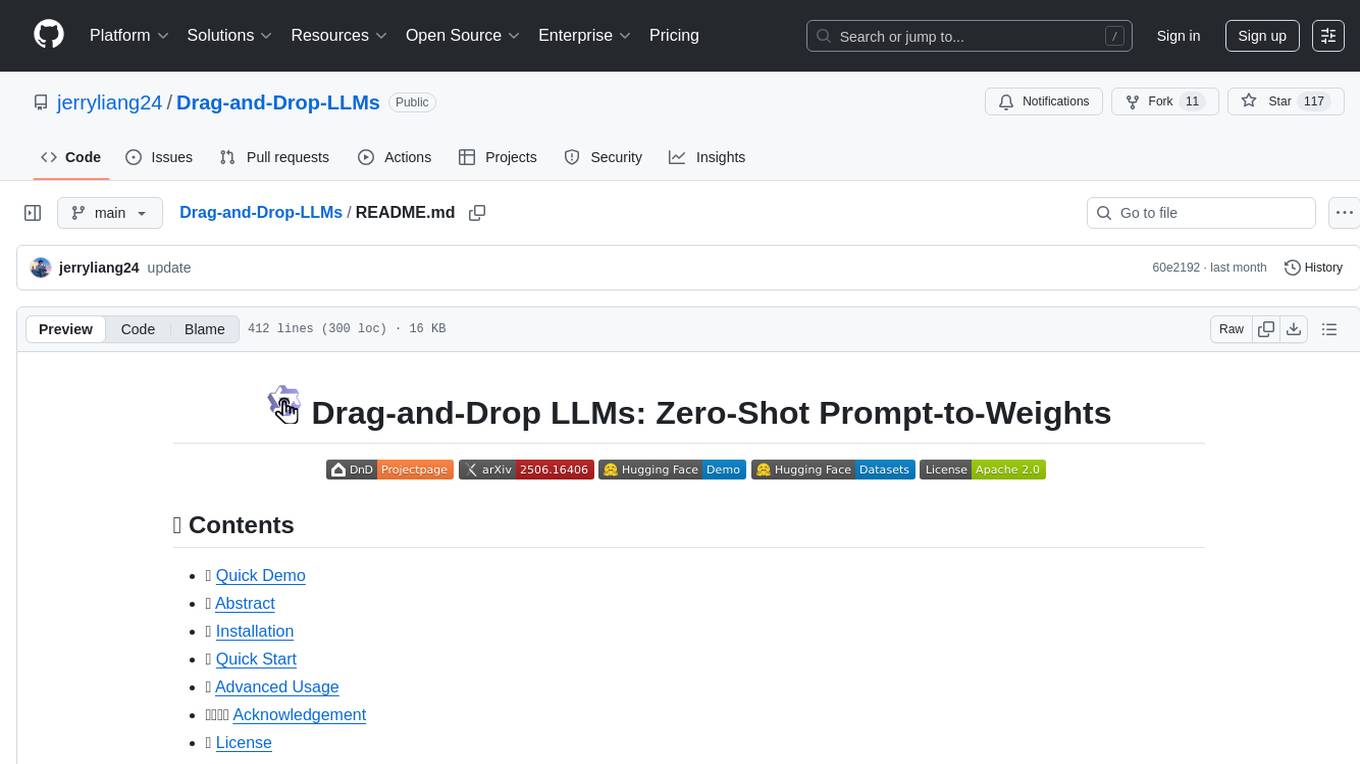

Drag-and-Drop-LLMs


Stars: 116

Drag-and-Drop LLMs (DnD) is a prompt-conditioned parameter generator that eliminates per-task training by mapping task prompts directly to LoRA weight updates. It uses a lightweight text encoder to distill prompt batches into condition embeddings, transformed by a cascaded hyper convolutional decoder into LoRA matrices. DnD offers up to 12,000× lower overhead than full fine-tuning, gains up to 30% in performance over strong LoRAs on various tasks, and shows robust cross-domain generalization. It provides a rapid way to specialize large language models without gradient-based adaptation.

README:

- 🎥 Quick Demo

- 📖 Abstract

- 🚀 Installation

- 🗂️ Quick Start

- 🤖 Advanced Usage

- 👩👩👧👦 Acknowledgement

- 📄 License

- 🎓 Citation

https://github.com/user-attachments/assets/ec1ea0d1-3e1c-47b7-8c30-3623866d9369

Explore generating your LLMs for various tasks using our demo!

Modern Parameter-Efficient Fine-Tuning (PEFT) methods such as low-rank adaptation (LoRA) reduce the cost of customizing large language models (LLMs), yet still require a separate optimization run for every downstream dataset. We introduce Drag-and-Drop LLMs (DnD), a prompt-conditioned parameter generator that eliminates per-task training by mapping a handful of unlabeled task prompts directly to LoRA weight updates. A lightweight text encoder distills each prompt batch into condition embeddings, which are then transformed by a cascaded hyper convolutional decoder into the full set of LoRA matrices. Once trained in a diverse collection of prompt-checkpoint pairs, DnD produces task-specific parameters in seconds, yielding i) up to 12,000× lower overhead than full fine-tuning, ii) average gains up to 30% in performance over the strongest training LoRAs on unseen common-sense reasoning, math, coding, and multimodal benchmarks, and iii) robust cross-domain generalization despite never seeing the target data or labels. Our results demonstrate that prompt-conditioned parameter generation is a viable alternative to gradient-based adaptation for rapidly specializing LLMs.

Before you get started, set up a conda environment.

- Create your conda environment.

conda create -n dnd python=3.12

conda activate dnd

pip install torch==2.5.1 torchvision==0.20.1 torchaudio==2.5.1 --index-url https://download.pytorch.org/whl/cu124

- Install dependencies for DnD.

git clone https://github.com/jerryliang24/Drag-and-Drop-LLMs.git

cd Drag-and-Drop-LLMs

bash install.sh

This section covers the entire process from preparing the checkpoint dataset to training and testing the DnD model.

- Download the foundation models and Sentence-BERT into the ./models folder.

huggingface-cli download <model_name> --local-dir models/<model_name>

# The models you may need for DnD: Qwen/Qwen2.5-0.5/1.5/7B-Instruct, Qwen/Qwen2.5-VL-3B-Instruct, sentence-transformers/all-MiniLM-L12-v2, google-t5/t5-base

- Preparing the checkpoints is laborious, so we recommend using our released LoRA adapters for training.

python download_data.py --lora_type <lora_type>

# Please refer to this script to specify the kind of LoRAs you want to download.

If you want to enjoy the process of checkpoint collection, see the Adapt your own dataset section for detailed instructions.

- Specify whether to use wandb, and set your wandb API key, in ./workspace/main/config.json.

{"use_wandb": <bool>,

"wandb_api_key": ""}

- Use our example script to train a DnD model on the common-sense reasoning dataset ARC-c.

bash scripts/common_sense_reasoning/ARC-c/training_and_generation.sh

You can refer to the ./scripts folder for a variety of experiments that generate LLMs for common-sense reasoning, coding, math, and multimodal tasks.

bash scripts/ablations/condition_forms/training.sh

bash scripts/ablations/condition_forms/generation.sh

This experiment explores how different forms of conditions (Prompts, Prompts+Answers, Answers) influence DnD's performance.

bash scripts/ablations/extractor_types/<type>_extractor.sh

This experiment explores how different condition extractors (Word2Vector, Encoder-Decoder, Decoder-Only) influence DnD's performance. Note that you first need to download the corresponding model into the ./models folder.

The code for the other experiments is also open-sourced in the ./scripts/ablations folder; please feel free to explore!

In this section, we will introduce how to train DnD on customized data-checkpoint pairs and further predict novel parameters.

- Register the dataset

First place your dataset file, in .json format, in the ./prepare/data folder, and register it in ./prepare/data/dataset_info.json:

<dataset_name>:

{

"file_name": "<dataset_name>.json",

"columns": {"prompt":"prompt",

"response":"response",

"system":"system"}

},

Note that your JSON file should be formatted like this:

[{ "prompt": "",

"response": "",

"system": ""},

...,

...

...,

{ "prompt": "",

"response": "",

"system": ""}]

Please refer to LLaMA-Factory for more details.

- Collect checkpoints for this dataset. You need to train LLMs on the previously registered datasets to collect relevant checkpoints, forming data-checkpoint pairs for DnD's training.
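Before registering a dataset, it can help to check that every record carries the three fields shown above. The following is a minimal sketch, not part of the DnD codebase: the helper names `validate_records` and `registry_entry` are hypothetical, and treating all three keys as required strings is an assumption based on the example format.

```python
import json

# Keys taken from the example record format above; requiring all three
# is an assumption made for this sketch.
REQUIRED_KEYS = ("prompt", "response", "system")


def validate_records(records):
    """Return a list of error strings; an empty list means the records look usable."""
    if not isinstance(records, list):
        return ["top-level JSON value must be a list of records"]
    errors = []
    for index, record in enumerate(records):
        if not isinstance(record, dict):
            errors.append(f"record {index}: expected an object")
            continue
        for key in REQUIRED_KEYS:
            if key not in record:
                errors.append(f"record {index}: missing key '{key}'")
            elif not isinstance(record[key], str):
                errors.append(f"record {index}: '{key}' must be a string")
    return errors


def registry_entry(dataset_name):
    """Build a dataset_info.json entry in the shape shown above (hypothetical helper)."""
    return {
        dataset_name: {
            "file_name": f"{dataset_name}.json",
            "columns": {key: key for key in REQUIRED_KEYS},
        }
    }


sample = [
    {"prompt": "Which gas do plants absorb?", "response": "Carbon dioxide", "system": ""},
    {"prompt": "2 + 2 = ?", "response": "4"},  # deliberately missing "system"
]
print(validate_records(sample))
print(json.dumps(registry_entry("my_dataset"), indent=2))
```

In practice you would load your own file with `json.load` and merge the generated entry into ./prepare/data/dataset_info.json by hand or with a small script.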

We give an example of how to modify the training script:

### model

-model_name_or_path: ../models/Qwen2.5-0.5B-Instruct

+model_name_or_path: ../models/<your desired base model>

################# line9-21 of training_your_dataset.yaml #################

-lora_rank: 8

+lora_rank: <expected rank>

lora_target: all

### dataset

-dataset: ARC-c

+dataset: <dataset_name> # should be consistent with your json file name

template: qwen

cutoff_len: 2048

-max_samples: 5000

+max_samples: <expected sample>

overwrite_cache: true

preprocessing_num_workers: 16

### output

-output_dir: saves/common_sense_reasoning/ARC-c

+output_dir: saves/<task_name>/<dataset_name>

################# line9-21 of training_your_dataset.yaml #################

################# line28-33 of training_your_dataset.yaml #################

-per_device_train_batch_size: 1

-gradient_accumulation_steps: 8

-learning_rate: 1.0e-4

-num_train_epochs: 1.0

-lr_scheduler_type: cosine

-warmup_ratio: 0.01

#you can modify the training settings

+per_device_train_batch_size:

+gradient_accumulation_steps:

+learning_rate:

+num_train_epochs:

+lr_scheduler_type:

+warmup_ratio:

################# line28-33 of training_your_dataset.yaml #################

- After training, you need to do the following to get checkpoint collections.

- You need to observe the loss curve, and decide the starting point of fine-tuning for checkpoint collection.

- The trainer_state.json in the checkpoint folder (usually named checkpoint-xxx) needs to be modified, setting "save_steps"=1.

- You can follow the scripts in ./prepare/training_scripts folder that end with "finetune" to design your fine-tuning process.

- After running the scripts and obtaining multiple checkpoints, you can simply run ./workspace/datasets/process_datasets/post_process_ckpts.py to clean your checkpoint folder, deleting config files and renaming checkpoints to ease data loading.
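The `save_steps` change described in the list above can be scripted rather than edited by hand. This is a minimal sketch using only the standard library; the function name `set_save_steps` is hypothetical, and it assumes `trainer_state.json` is a flat JSON object with a top-level `"save_steps"` key, as the step above implies.

```python
import json
from pathlib import Path


def set_save_steps(checkpoint_dir, save_steps=1):
    """Rewrite trainer_state.json in checkpoint_dir so "save_steps" equals save_steps.

    Returns the previous value (or None if the key was absent) so the change
    can be reported or reverted.
    """
    state_path = Path(checkpoint_dir) / "trainer_state.json"
    state = json.loads(state_path.read_text(encoding="utf-8"))
    previous = state.get("save_steps")
    state["save_steps"] = save_steps
    # All other fields are written back unchanged.
    state_path.write_text(json.dumps(state, indent=2), encoding="utf-8")
    return previous
```

Call it with the path of the checkpoint folder you chose as the fine-tuning starting point (the `checkpoint-xxx` folder mentioned above) before launching the "finetune" scripts.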

- Calculate importance scores for the collected checkpoints.

DnD uses a weighted MSE for training, assigning different importance to different layers' weights. The importance is calculated from the channel-wise variance, and we provide scripts for this in ./workspace/datasets, named criterion_weight_for_<model_type>.py. You need to select a script and adjust it accordingly.

######################## on line 26-28 in ...<dataset_name>.py ########################

-DATASET_ROOT = "./data/common_sense_reasoning"

-CONFIG_ROOT = f"./workspace/datasets/common_sense_reasoning"

+DATASET_ROOT = "./data/<task_name>"

+CONFIG_ROOT = f"./workspace/datasets/<task_name>"

######################## on line 26-28 in ...<dataset_name>.py ########################

###################### on line 24 in ...<dataset_name>.py #######################

-dataset_tag = "ARC-c"

+dataset_tag = <your dataset_tag>

###################### on line 24 in ...<dataset_name>.py #######################

###################### on line 37 in ...<dataset_name>.py #######################

-datasets = ["ARC-e","OBQA","BoolQ","WinoGrande","PIQA","HellaSwag"]

+datasets = ["<dataset_name_1>","<dataset_name_2>",...,"<dataset_name_n>"]

# All datasets you collect for the target task

###################### on line 37 in ...<dataset_name>.py #######################
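As a rough illustration of the weighted MSE idea described above, the sketch below derives one weight per channel from its variance across checkpoints and uses those weights in the loss. It is not the repository's implementation: the function names are hypothetical, and making the weight proportional to variance (normalized to mean 1) is an assumption chosen for illustration; the provided criterion_weight scripts may scale or combine variances differently.

```python
from statistics import pvariance


def channel_variance_weights(checkpoints):
    """Compute one weight per channel from its variance across checkpoints.

    checkpoints: list of flattened weight vectors of equal length, one per checkpoint.
    Assumption for this sketch: weight is proportional to variance, normalized to mean 1.
    """
    channels = list(zip(*checkpoints))  # regroup values by channel
    variances = [pvariance(channel) for channel in channels]
    mean_variance = sum(variances) / len(variances)
    if mean_variance == 0:
        # All checkpoints identical: fall back to a plain (unweighted) MSE.
        return [1.0] * len(variances)
    return [variance / mean_variance for variance in variances]


def weighted_mse(prediction, target, weights):
    """Mean of per-channel squared errors, each scaled by its importance weight."""
    total = sum(w * (p - t) ** 2 for p, t, w in zip(prediction, target, weights))
    return total / len(target)


# Two toy checkpoints with two channels: the first never changes, the second does.
checkpoints = [[0.0, 1.0], [0.0, 3.0]]
weights = channel_variance_weights(checkpoints)
loss = weighted_mse([1.0, 1.0], [0.0, 0.0], weights)
print(weights, loss)
```

Under this assumption, errors on channels that vary a lot between checkpoints count more than errors on channels that barely move.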

- Create your training script. (You choose <dataset_name> yourself. We strongly recommend keeping this name consistent across data registration, checkpoint collection, and DnD training, since it saves a lot of trouble.)

We use ./workspace/main/tasks/common_sense_reasoning/train_qwen0.5lora_ARC-c.py to give an example. You need to create your training script like ./workspace/main/tasks/<task_name>/train_<model_type>_<dataset_name>.py:

```diff

######################## on line 26-28 in ...<dataset_name>.py ########################

-DATASET_ROOT = "./data/common_sense_reasoning"

-CONFIG_ROOT = f"./workspace/datasets/common_sense_reasoning"

+DATASET_ROOT = "./data/<task_name>"

+CONFIG_ROOT = f"./workspace/datasets/<task_name>"

######################## on line 26-28 in ...<dataset_name>.py ########################

###################### on line 24 in ...<dataset_name>.py #######################

-dataset_tag = "ARC-c"

+dataset_tag = <your dataset_tag>

###################### on line 24 in ...<dataset_name>.py #######################

###################### on line 37 in ...<dataset_name>.py #######################

-datasets = ["ARC-e","OBQA","BoolQ","WinoGrande","PIQA","HellaSwag"]

+datasets = ["<dataset_name_1>","<dataset_name_2>",...,"<dataset_name_n>"]

# All datasets you collect for the target task

###################### on line 37 in ...<dataset_name>.py #######################

###################### on line 47 in ...<dataset_name>.py #######################

-max_text_length = xxx

+max_text_length = <The max prompt length in your dataset>

###################### on line 47 in ...<dataset_name>.py #######################

###################### on line 42-90 in ...<dataset_name>.py #######################

config: dict[str, [float, int, str, dict]] = {

# global setting

"seed": SEED,

"model_tag": os.path.basename(__file__)[:-3].split("_")[1],

"need_test": False,

"use_wandb": True,

# data setting

- "token_size": (10, 130),

+ "token_size": <suitable token size>

- "real_length": 50,

+ "real_length": <number of checkpoints you like to use>

"train_checkpoint_folders": [f"{DATASET_ROOT}/{dataset}" for dataset in datasets],

"test_checkpoint_folder": "",

"dataset_tag": dataset_tag,

"generated_file": f"{CONFIG_ROOT}/{dataset_tag}/",

# train setting

"max_num_gpus": 8,

- "batch_size": 64,

+ "batch_size": <suitable batch_size>

- "num_workers": 8,

+ "num_workers": <suitable num_workers>

"prefetch_factor": 1,

"warmup_steps": 1,

- "total_steps": 4000,

- "learning_rate": 3e-5,

+ "total_steps": <your preferred training setting>

+ "learning_rate":

"weight_decay": 0.1,

"max_grad_norm": 1.0,

"save_every": 100,

"print_every": 20,

- "num_texts": 128,

+ "num_texts": <suitable length of prompt batch>

"save_folder": "./checkpoints",

"noise_enhance": 0.0001,

"criterion_weight": calculate_mean_criterion_weight([f"{CONFIG_ROOT}/{dataset}/criterion_weight.pt" for dataset in datasets]),

"extractor_type":"BERT",

"text_tokenizer":AutoTokenizer.from_pretrained(extractor),

"extra_condition_module":

AutoModel.from_pretrained(extractor,

torch_dtype="auto").to(accelerator.device),

"max_text_length":max_text_length,

- "model_config": {

- "features": [

- (128, max_text_length, 384), (128, 200, 300),

- (128, 100, 256), (256, 50, 200),

- (512, 50, 200),

- (1024, 25, 200), (1024, 10, 200), (2048, 10, 200),

- (4296, 10, 130),

- ],

- "condition_dim": (128, max_text_length, 384),

- "kernel_size": 9,

- },

+ <your desired model size (the features actually denotes the shape transition of input embeddings, pretty convenient isn't it?)>

}

###################### on line 42-90 in ...<dataset_name>.py #######################

```

- Train DnD model.

cd ./workspace/main

bash launch_multi.sh tasks/<task_name>/train_<model_type>_<dataset_name>.py <number_of_gpus>

Note that the generation scripts are adjusted in a similar way.
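The `features` list in the training config above describes how the shape of the condition embeddings changes from stage to stage. The sketch below, which is not part of the DnD codebase, prints those transitions and runs two sanity checks observed in the example config: the first entry equals `condition_dim`, and the last entry's trailing dimensions equal `token_size`. Treating these as requirements is an assumption, the helper names are hypothetical, and `max_text_length = 64` is a placeholder value.

```python
from math import prod

max_text_length = 64  # placeholder; use the max prompt length in your dataset

# Values copied from the example config above.
features = [
    (128, max_text_length, 384), (128, 200, 300),
    (128, 100, 256), (256, 50, 200),
    (512, 50, 200),
    (1024, 25, 200), (1024, 10, 200), (2048, 10, 200),
    (4296, 10, 130),
]
condition_dim = (128, max_text_length, 384)
token_size = (10, 130)


def describe_transitions(features):
    """Return (source_shape, target_shape, element_ratio) for each consecutive pair."""
    steps = []
    for source, target in zip(features, features[1:]):
        steps.append((source, target, prod(target) / prod(source)))
    return steps


def check_config(features, condition_dim, token_size):
    """Return a list of inconsistencies; the checks mirror the example config only."""
    problems = []
    if tuple(features[0]) != tuple(condition_dim):
        problems.append("first feature shape differs from condition_dim")
    if tuple(features[-1][1:]) != tuple(token_size):
        problems.append("last feature shape does not end with token_size")
    return problems


for source, target, ratio in describe_transitions(features):
    print(f"{source} -> {target}  (x{ratio:.2f} elements)")
print(check_config(features, condition_dim, token_size))
```

Running a check like this after editing `model_config` is a cheap way to catch a mismatched shape before launching a multi-GPU job.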

We sincerely thank Yuxiang Li, Jiaxin Wu, Zhiheng Chen, Lei Feng, Jingle Fu, Bohan Zhuang, Ziheng Qin, Zangwei Zheng, Zihan Qiu, Zexi Li, Gongfan Fang, Xinyin Ma, and Qinglin Lu for valuable discussions and feedback during this work.

This project is licensed under the Apache 2.0 License - see the LICENSE file for details.

@misc{liang2025draganddropllmszeroshotprompttoweights,

title={Drag-and-Drop LLMs: Zero-Shot Prompt-to-Weights},

author={Zhiyuan Liang and Dongwen Tang and Yuhao Zhou and Xuanlei Zhao and Mingjia Shi and Wangbo Zhao and Zekai Li and Peihao Wang and Konstantin Schürholt and Damian Borth and Michael M. Bronstein and Yang You and Zhangyang Wang and Kai Wang},

year={2025},

eprint={2506.16406},

archivePrefix={arXiv},

primaryClass={cs.LG},

url={https://arxiv.org/abs/2506.16406},

}

🌟 Star us on GitHub if you find DnD helpful! 🌟

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Drag-and-Drop-LLMs

Similar Open Source Tools

Drag-and-Drop-LLMs

Drag-and-Drop LLMs (DnD) is a prompt-conditioned parameter generator that eliminates per-task training by mapping task prompts directly to LoRA weight updates. It uses a lightweight text encoder to distill prompt batches into condition embeddings, transformed by a cascaded hyper convolutional decoder into LoRA matrices. DnD offers up to 12,000× lower overhead than full fine-tuning, gains up to 30% in performance over strong LoRAs on various tasks, and shows robust cross-domain generalization. It provides a rapid way to specialize large language models without gradient-based adaptation.

ceLLama

ceLLama is a streamlined automation pipeline for cell type annotations using large-language models (LLMs). It operates locally to ensure privacy, provides comprehensive analysis by considering negative genes, offers efficient processing speed, and generates customized reports. Ideal for quick and preliminary cell type checks.

gaianet-node

GaiaNet-node is a tool that allows users to run their own GaiaNet node, enabling them to interact with an AI agent. The tool provides functionalities to install the default node software stack, initialize the node with model files and vector database files, start the node, stop the node, and update configurations. Users can use pre-set configurations or pass a custom URL for initialization. The tool is designed to facilitate communication with the AI agent and access node information via a browser. GaiaNet-node requires sudo privilege for installation but can also be installed without sudo privileges with specific commands.

ai-enablement-stack

The AI Enablement Stack is a curated collection of venture-backed companies, tools, and technologies that enable developers to build, deploy, and manage AI applications. It provides a structured view of the AI development ecosystem across five key layers: Agent Consumer Layer, Observability and Governance Layer, Engineering Layer, Intelligence Layer, and Infrastructure Layer. Each layer focuses on specific aspects of AI development, from end-user interaction to model training and deployment. The stack aims to help developers find the right tools for building AI applications faster and more efficiently, assist engineering leaders in making informed decisions about AI infrastructure and tooling, and help organizations understand the AI development landscape to plan technology adoption.

weixin-dyh-ai

WeiXin-Dyh-AI is a backend management system that supports integrating WeChat subscription accounts with AI services. It currently supports integration with Ali AI, Moonshot, and Tencent Hyunyuan. Users can configure different AI models to simulate and interact with AI in multiple modes: text-based knowledge Q&A, text-to-image drawing, image description, text-to-voice conversion, enabling human-AI conversations on WeChat. The system allows hierarchical AI prompt settings at system, subscription account, and WeChat user levels. Users can configure AI model types, providers, and specific instances. The system also supports rules for allocating models and keys at different levels. It addresses limitations of WeChat's messaging system and offers features like text-based commands and voice support for interactions with AI.

llm-dev

The 'llm-dev' repository contains source code and resources for the book 'Practical Projects of Large Models: Multi-Domain Intelligent Application Development'. It covers topics such as language model basics, application architecture, working modes, environment setup, model installation, fine-tuning, quantization, multi-modal model applications, chat applications, programming large model applications, VS Code plugin development, enhanced generation applications, translation applications, intelligent agent applications, speech model applications, digital human applications, model training applications, and AI town applications.

aipan-netdisk-search

Aipan-Netdisk-Search is a free and open-source web project for searching netdisk resources. It utilizes third-party APIs with IP access restrictions, suggesting self-deployment. The project can be easily deployed on Vercel and provides instructions for manual deployment. Users can clone the project, install dependencies, run it in the browser, and access it at localhost:3001. The project also includes documentation for deploying on personal servers using NUXT.JS. Additionally, there are options for donations and communication via WeChat.

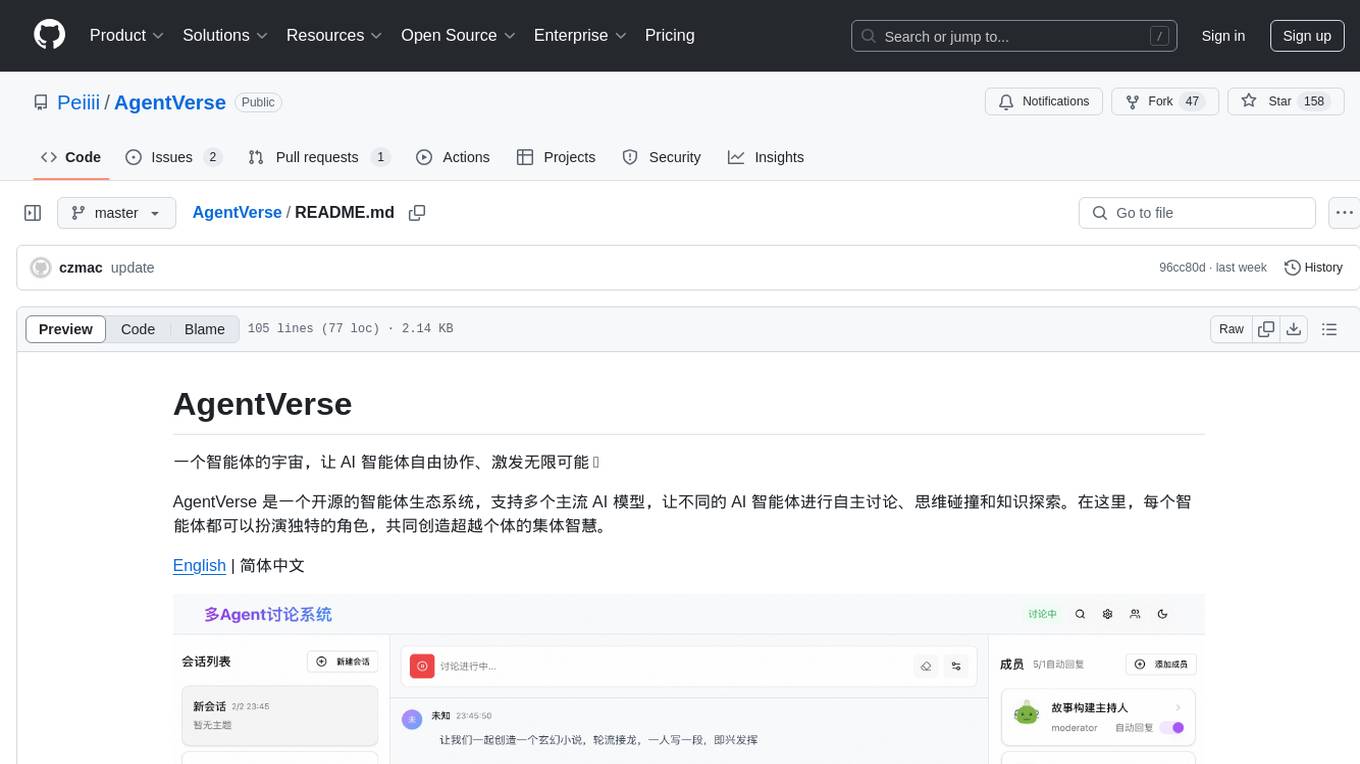

AgentVerse

AgentVerse is an open-source ecosystem for intelligent agents, supporting multiple mainstream AI models to facilitate autonomous discussions, thought collisions, and knowledge exploration. Each intelligent agent can play a unique role here, collectively creating wisdom beyond individuals.

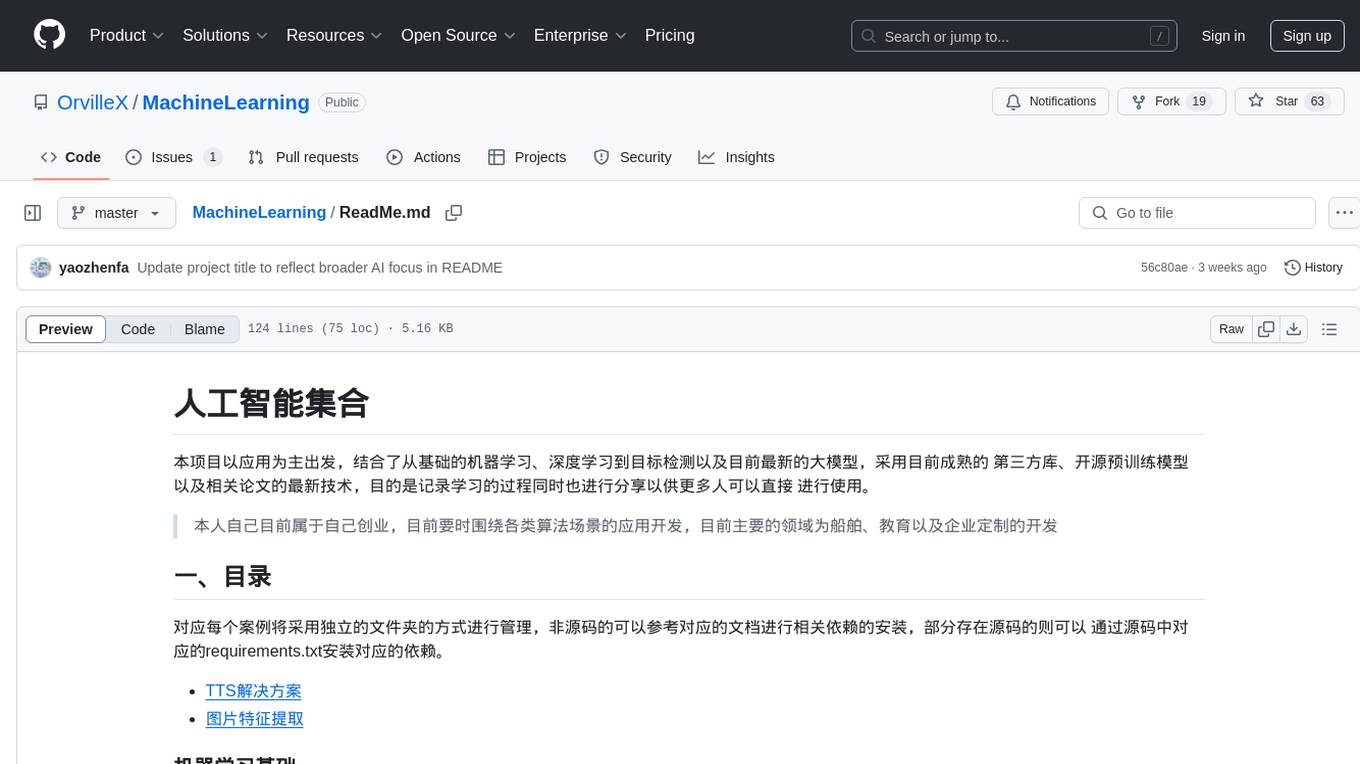

MachineLearning

MachineLearning is a repository focused on practical applications in various algorithm scenarios such as ship, education, and enterprise development. It covers a wide range of topics from basic machine learning and deep learning to object detection and the latest large models. The project utilizes mature third-party libraries, open-source pre-trained models, and the latest technologies from related papers to document the learning process and facilitate direct usage by a wider audience.

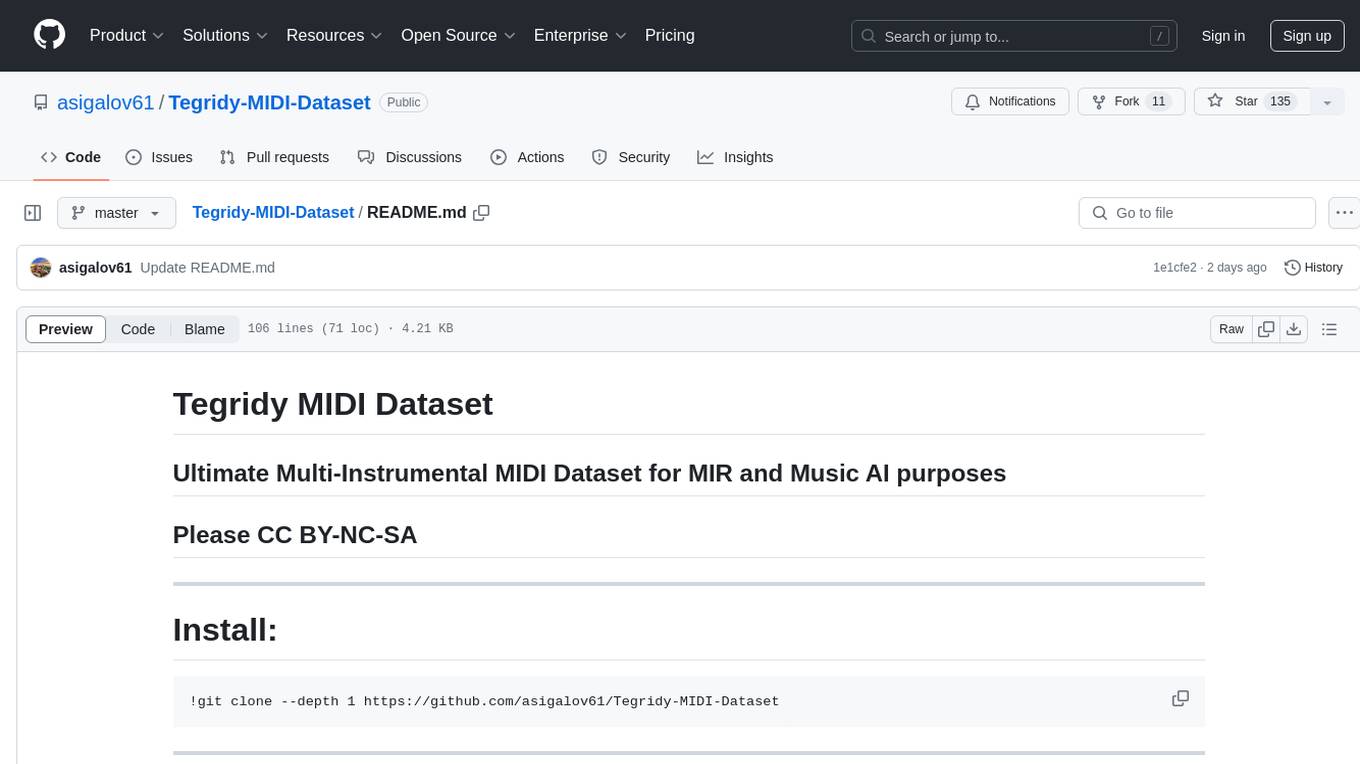

Tegridy-MIDI-Dataset

Tegridy MIDI Dataset is an ultimate multi-instrumental MIDI dataset designed for Music Information Retrieval (MIR) and Music AI purposes. It provides a comprehensive collection of MIDI datasets and essential software tools for MIDI editing, rendering, transcription, search, classification, comparison, and various other MIDI applications.

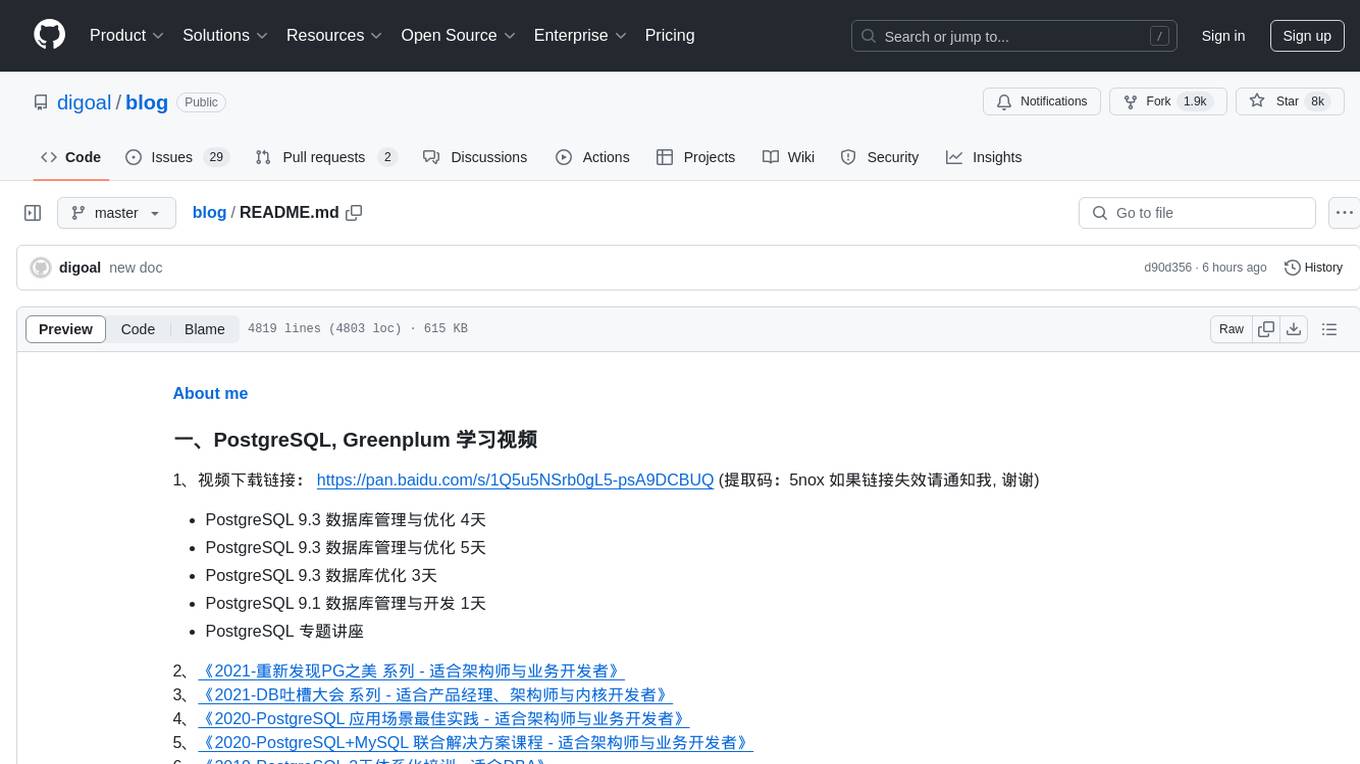

blog

This repository contains a simple blog application built using Python and Flask framework. It allows users to create, read, update, and delete blog posts. The application uses SQLite database for storing blog data and provides a basic user interface for interacting with the blog. The code is well-organized and easy to understand, making it suitable for beginners looking to learn web development with Python and Flask.

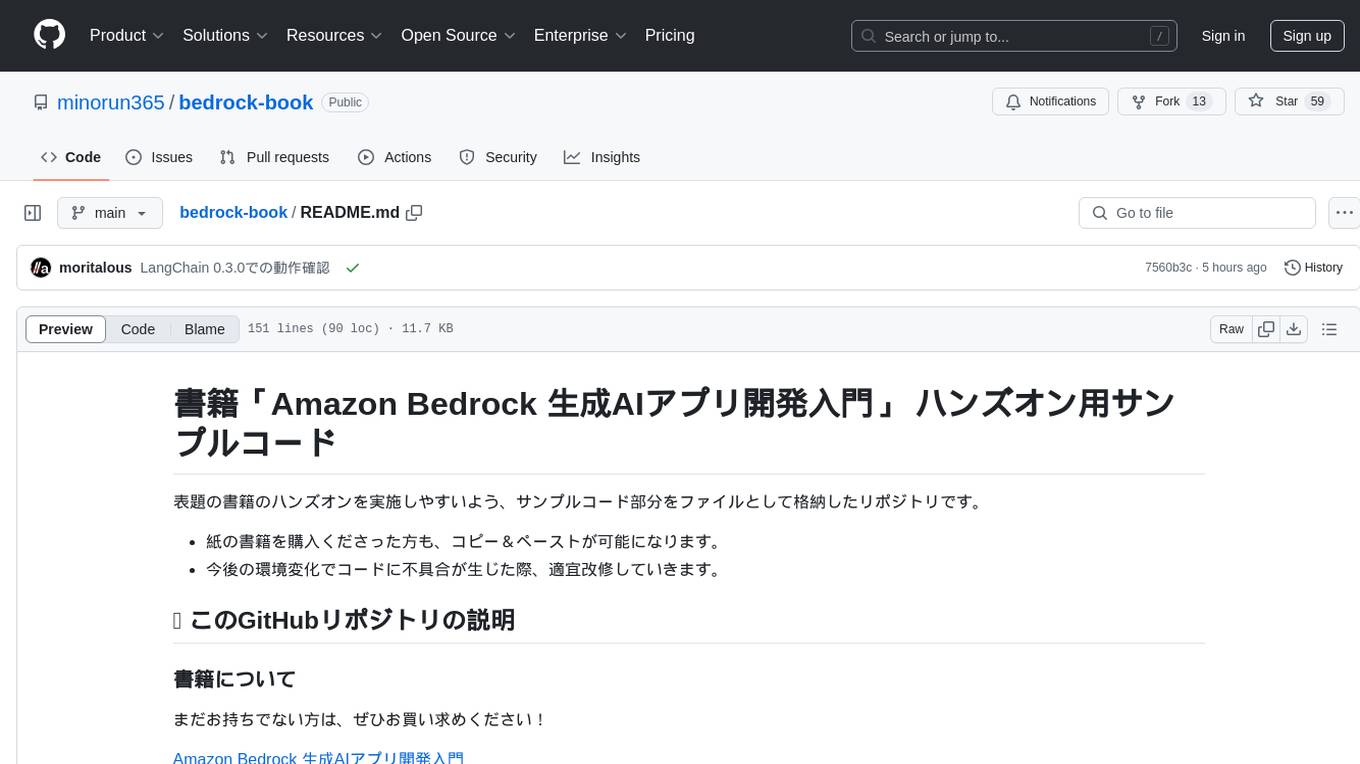

bedrock-book

This repository contains sample code for hands-on exercises related to the book 'Amazon Bedrock 生成AIアプリ開発入門'. It allows readers to easily access and copy the code. The repository also includes directories for each chapter's hands-on code, settings, and a 'requirements.txt' file listing necessary Python libraries. Updates and error fixes will be provided as needed. Users can report issues in the repository's 'Issues' section, and errata will be published on the SB Creative official website.

rime_wanxiang_pro

Rime Wanxiang Pro is an enhanced version of Wanxiang, supporting the 9, 14, and 18-key layouts. It features a pinyin library with optimized word and language models, supporting accurate sentence output with tones. The tool also allows for mixed Chinese and English input, offering various usage scenarios. Users can customize their input method by selecting different decoding and auxiliary code rules, enabling flexible combinations of pinyin and auxiliary codes. The tool simplifies the complex configuration of Rime and provides a unified word library for multiple input methods, enhancing input efficiency and user experience.

ESP32_AI_LLM

ESP32_AI_LLM is a project that uses ESP32 to connect to Xunfei Xinghuo, Dou Bao, and Tongyi Qianwen large models to achieve voice chat functions, supporting online voice wake-up, continuous conversation, music playback, and real-time display of conversation content on an external screen. The project requires specific hardware components and provides functionalities such as voice wake-up, voice conversation, convenient network configuration, music playback, volume adjustment, LED control, model switching, and screen display. Users can deploy the project by setting up Xunfei services, cloning the repository, configuring necessary parameters, installing drivers, compiling, and burning the code.

ezdata

Ezdata is a data processing and task scheduling system developed based on Python backend and Vue3 frontend. It supports managing multiple data sources, abstracting various data sources into a unified data model, integrating chatgpt for data question and answer functionality, enabling low-code data integration and visualization processing, scheduling single and dag tasks, and integrating a low-code data visualization dashboard system.

prose-polish

prose-polish is a tool for AI interaction through drag-and-drop cards, focusing on editing copy and manuscripts. It can recognize Markdown-formatted documents, automatically breaking them into paragraph cards. Users can create prefabricated prompt cards and quickly connect them to the manuscript for editing. The modified manuscript is still presented in card form, allowing users to drag it out as a new paragraph. To use it smoothly, users just need to remember one rule: 'Plug the plug into the socket!'

For similar tasks

Drag-and-Drop-LLMs

Drag-and-Drop LLMs (DnD) is a prompt-conditioned parameter generator that eliminates per-task training by mapping task prompts directly to LoRA weight updates. It uses a lightweight text encoder to distill prompt batches into condition embeddings, transformed by a cascaded hyper convolutional decoder into LoRA matrices. DnD offers up to 12,000× lower overhead than full fine-tuning, gains up to 30% in performance over strong LoRAs on various tasks, and shows robust cross-domain generalization. It provides a rapid way to specialize large language models without gradient-based adaptation.

LMOps

LMOps is a research initiative focusing on fundamental research and technology for building AI products with foundation models, particularly enabling AI capabilities with Large Language Models (LLMs) and Generative AI models. The project explores various aspects such as prompt optimization, longer context handling, LLM alignment, acceleration of LLMs, LLM customization, and understanding in-context learning. It also includes tools like Promptist for automatic prompt optimization, Structured Prompting for efficient long-sequence prompts consumption, and X-Prompt for extensible prompts beyond natural language. Additionally, LLMA accelerators are developed to speed up LLM inference by referencing and copying text spans from documents. The project aims to advance technologies that facilitate prompting language models and enhance the performance of LLMs in various scenarios.

lighteval

LightEval is a lightweight LLM evaluation suite that Hugging Face has been using internally with the recently released LLM data processing library datatrove and LLM training library nanotron. We're releasing it with the community in the spirit of building in the open. Note that it is still very much early so don't expect 100% stability ^^' In case of problems or question, feel free to open an issue!

Firefly

Firefly is an open-source large model training project that supports pre-training, fine-tuning, and DPO of mainstream large models. It includes models like Llama3, Gemma, Qwen1.5, MiniCPM, Llama, InternLM, Baichuan, ChatGLM, Yi, Deepseek, Qwen, Orion, Ziya, Xverse, Mistral, Mixtral-8x7B, Zephyr, Vicuna, Bloom, etc. The project supports full-parameter training, LoRA, QLoRA efficient training, and various tasks such as pre-training, SFT, and DPO. Suitable for users with limited training resources, QLoRA is recommended for fine-tuning instructions. The project has achieved good results on the Open LLM Leaderboard with QLoRA training process validation. The latest version has significant updates and adaptations for different chat model templates.

Awesome-Text2SQL

Awesome Text2SQL is a curated repository containing tutorials and resources for Large Language Models, Text2SQL, Text2DSL, Text2API, Text2Vis, and more. It provides guidelines on converting natural language questions into structured SQL queries, with a focus on NL2SQL. The repository includes information on various models, datasets, evaluation metrics, fine-tuning methods, libraries, and practice projects related to Text2SQL. It serves as a comprehensive resource for individuals interested in working with Text2SQL and related technologies.

create-million-parameter-llm-from-scratch

The 'create-million-parameter-llm-from-scratch' repository provides a detailed guide on creating a Large Language Model (LLM) with 2.3 million parameters from scratch. The blog replicates the LLaMA approach, incorporating concepts like RMSNorm for pre-normalization, SwiGLU activation function, and Rotary Embeddings. The model is trained on a basic dataset to demonstrate the ease of creating a million-parameter LLM without the need for a high-end GPU.

StableToolBench

StableToolBench is a new benchmark developed to address the instability of Tool Learning benchmarks. It aims to balance stability and reality by introducing features such as a Virtual API System with caching and API simulators, a new set of solvable queries determined by LLMs, and a Stable Evaluation System using GPT-4. The Virtual API Server can be set up either by building from source or using a prebuilt Docker image. Users can test the server using provided scripts and evaluate models with Solvable Pass Rate and Solvable Win Rate metrics. The tool also includes model experiments results comparing different models' performance.

BetaML.jl

The Beta Machine Learning Toolkit is a package containing various algorithms and utilities for implementing machine learning workflows in multiple languages, including Julia, Python, and R. It offers a range of supervised and unsupervised models, data transformers, and assessment tools. The models are implemented entirely in Julia and are not wrappers for third-party models. Users can easily contribute new models or request implementations. The focus is on user-friendliness rather than computational efficiency, making it suitable for educational and research purposes.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.