iterate

The most hackable AI agent

Stars: 126

The 'iterate' repository is a collection of applications and tools designed for efficient development and deployment processes. It includes a primary application built with React and Cloudflare Workers, a local daemon for managing streams and agents, and the iterate.com website. The repository also contains detailed documentation and patterns to support development. Development commands are provided for running applications, testing, type checking, linting, and code formatting. Additionally, Cloudflare Tunnels can be used to expose local development servers via public URLs. Users can also build daytona snapshots for configuration purposes.

README:

-

Depot CLI for fast Docker builds with shared caching:

brew install depot/tap/depot depot login

pnpm install

pnpm docker:up

pnpm os db:migrate

docker buildx create --name iterate --driver docker-container --use

pnpm sandbox build

pnpm os dev-

apps/os/- Primary application (React + Cloudflare Workers) -

apps/daemon/- Local daemon for durable streams and agent orchestration -

apps/iterate-com- iterate.com website -

docs/- Detailed documentation and patterns

pnpm dev # Run all apps in parallel

pnpm os dev # Run apps/os only

pnpm daemon dev # Run apps/daemon only

pnpm test # Run all tests

pnpm typecheck # Type check all packages

pnpm lint # Lint and fix

pnpm format # Format codeExpose local dev servers via public URLs (useful for webhooks, OAuth callbacks):

DEV_TUNNEL=1 pnpm dev # → {app}-dev-{ITERATE_USER}.dev.iterate.com

DEV_TUNNEL=bob pnpm dev # → bob.dev.iterate.com (custom, no stage/app suffix)

DEV_TUNNEL=0 pnpm dev # disabled (also: false, or unset)Build a daytona snapshot and write DAYTONA_DEFAULT_SNAPSHOT to your daytona config (needs brew install daytonaio/cli/daytona)

pnpm sandbox daytona:pushFor Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for iterate

Similar Open Source Tools

iterate

The 'iterate' repository is a collection of applications and tools designed for efficient development and deployment processes. It includes a primary application built with React and Cloudflare Workers, a local daemon for managing streams and agents, and the iterate.com website. The repository also contains detailed documentation and patterns to support development. Development commands are provided for running applications, testing, type checking, linting, and code formatting. Additionally, Cloudflare Tunnels can be used to expose local development servers via public URLs. Users can also build daytona snapshots for configuration purposes.

genassist

GenAssist is an AI-powered platform for managing and leveraging various AI workflows, focusing on conversation management, analytics, and agent-based interactions. It provides user management, AI agents configuration, knowledge base management, analytics, conversation management, and audit logging features. The platform is built with React, TypeScript, Vite, Tailwind CSS, FastAPI, SQLAlchemy ORM, and PostgreSQL database. GenAssist offers integration options for React, JavaScript Widget, and iOS, along with UI test automation and backend testing capabilities.

batteries-included

Batteries Included is an all-in-one platform for building and running modern applications, simplifying cloud infrastructure complexity. It offers production-ready capabilities through an intuitive interface, focusing on automation, security, and enterprise-grade features. The platform includes databases like PostgreSQL and Redis, AI/ML capabilities with Jupyter notebooks, web services deployment, security features like SSL/TLS management, and monitoring tools like Grafana dashboards. Batteries Included is designed to streamline infrastructure setup and management, allowing users to concentrate on application development without dealing with complex configurations.

amazon-q-developer-cli

The `amazon-q-developer-cli` monorepo houses core code for the Amazon Q Developer desktop app and CLI. It includes projects like autocomplete, dashboard, figterm, q CLI, fig_desktop, fig_input_method, VSCode plugin, and JetBrains plugin. The repo also contains build scripts, internal rust crates, internal npm packages, protocol buffer message specification, and integration tests. The architecture involves different components communicating via IPC.

webapp-starter

webapp-starter is a modern full-stack application template built with Turborepo, featuring a Hono + Bun API backend and Next.js frontend. It provides an easy way to build a SaaS product. The backend utilizes technologies like Bun, Drizzle ORM, and Supabase, while the frontend is built with Next.js, Tailwind CSS, Shadcn/ui, and Clerk. Deployment can be done using Vercel and Render. The project structure includes separate directories for API backend and Next.js frontend, along with shared packages for the main database. Setup involves installing dependencies, configuring environment variables, and setting up services like Bun, Supabase, and Clerk. Development can be done using 'turbo dev' command, and deployment instructions are provided for Vercel and Render. Contributions are welcome through pull requests.

backend.ai-webui

Backend.AI Web UI is a user-friendly web and app interface designed to make AI accessible for end-users, DevOps, and SysAdmins. It provides features for session management, inference service management, pipeline management, storage management, node management, statistics, configurations, license checking, plugins, help & manuals, kernel management, user management, keypair management, manager settings, proxy mode support, service information, and integration with the Backend.AI Web Server. The tool supports various devices, offers a built-in websocket proxy feature, and allows for versatile usage across different platforms. Users can easily manage resources, run environment-supported apps, access a web-based terminal, use Visual Studio Code editor, manage experiments, set up autoscaling, manage pipelines, handle storage, monitor nodes, view statistics, configure settings, and more.

skynet

Skynet is an API server for AI services that wraps several apps and models. It consists of specialized modules that can be enabled or disabled as needed. Users can utilize Skynet for tasks such as summaries and action items with vllm or Ollama, live transcriptions with Faster Whisper via websockets, and RAG Assistant. The tool requires Poetry and Redis for operation. Skynet provides a quickstart guide for both Summaries/Assistant and Live Transcriptions, along with instructions for testing docker changes and running demos. Detailed documentation on configuration, running, building, and monitoring Skynet is available in the docs. Developers can contribute to Skynet by installing the pre-commit hook for linting. Skynet is distributed under the Apache 2.0 License.

OmniSteward

OmniSteward is an AI-powered steward system based on large language models that can interact with users through voice or text to help control smart home devices and computer programs. It supports multi-turn dialogue, tool calling for complex tasks, multiple LLM models, voice recognition, smart home control, computer program management, online information retrieval, command line operations, and file management. The system is highly extensible, allowing users to customize and share their own tools.

sandbox

Sandbox is an open-source cloud-based code editing environment with custom AI code autocompletion and real-time collaboration. It consists of a frontend built with Next.js, TailwindCSS, Shadcn UI, Clerk, Monaco, and Liveblocks, and a backend with Express, Socket.io, Cloudflare Workers, D1 database, R2 storage, Workers AI, and Drizzle ORM. The backend includes microservices for database, storage, and AI functionalities. Users can run the project locally by setting up environment variables and deploying the containers. Contributions are welcome following the commit convention and structure provided in the repository.

vibe-kanban

Vibe Kanban is a tool designed to streamline the process of planning, reviewing, and orchestrating tasks for human engineers working with AI coding agents. It allows users to easily switch between different coding agents, orchestrate their execution, review work, start dev servers, and track task statuses. The tool centralizes the configuration of coding agent MCP configs, providing a comprehensive solution for managing coding tasks efficiently.

flink-agents

Apache Flink Agents is an Agentic AI framework based on Apache Flink. It provides a platform for building and deploying AI agents using Flink's capabilities. The framework supports both Java and Python development, allowing users to leverage the power of Flink for AI applications. With a focus on agent-based AI systems, Flink Agents offers a flexible and scalable solution for developing intelligent agents that can interact with their environment and make decisions autonomously. The framework includes tools for building, training, and deploying AI agents, making it suitable for a wide range of AI applications.

BotSharp-UI

BotSharp UI is a web app for managing agents and conversations. It allows users to build new AI assistants quickly using a Node-based Agent building experience. The project is written in SvelteKit v2 and utilizes BotSharp as the LLM services.

mcpm.sh

MCPM is an open source CLI tool for managing MCP servers, providing a simplified global configuration approach to install servers once, organize them with profiles, and integrate them into any MCP client. Features include server discovery, direct execution, sharing capabilities, and client integration tools. It eliminates the complexity of v1's target-based system in favor of a clean global workspace model. The tool is designed to be AI agent friendly with comprehensive automation support and a rich CLI interface.

chat

Full-featured AI Chatbot Nuxt application with authentication, chat history, multiple pages, collapsible sidebar, keyboard shortcuts, light & dark mode, command palette and more. Built using Nuxt UI components and integrated with AI SDK v5 for a complete chat experience. Features include streaming AI messages, multiple model support via various AI providers, authentication via nuxt-auth-utils, chat history persistence using PostgreSQL database and Drizzle ORM, easy deploy to Vercel with zero configuration. The application is configured to use Vercel AI Gateway providing a unified API to access hundreds of AI models through a single endpoint with features like high reliability, spend monitoring, load balancing, and automatic retries and fallbacks between providers.

aiogram_bot_template

Aiogram bot template is a boilerplate for creating Telegram bots using Aiogram framework. It provides a solid foundation for building robust and scalable bots with a focus on code organization, database integration, and localization.

For similar tasks

iterate

The 'iterate' repository is a collection of applications and tools designed for efficient development and deployment processes. It includes a primary application built with React and Cloudflare Workers, a local daemon for managing streams and agents, and the iterate.com website. The repository also contains detailed documentation and patterns to support development. Development commands are provided for running applications, testing, type checking, linting, and code formatting. Additionally, Cloudflare Tunnels can be used to expose local development servers via public URLs. Users can also build daytona snapshots for configuration purposes.

avante.nvim

avante.nvim is a Neovim plugin that emulates the behavior of the Cursor AI IDE, providing AI-driven code suggestions and enabling users to apply recommendations to their source files effortlessly. It offers AI-powered code assistance and one-click application of suggested changes, streamlining the editing process and saving time. The plugin is still in early development, with functionalities like setting API keys, querying AI about code, reviewing suggestions, and applying changes. Key bindings are available for various actions, and the roadmap includes enhancing AI interactions, stability improvements, and introducing new features for coding tasks.

air

air is an R formatter and language server written in Rust. It is currently in alpha stage, so users should expect breaking changes in both the API and formatting results. The tool draws inspiration from various sources like roslyn, swift, rust-analyzer, prettier, biome, and ruff. It provides formatters and language servers, influenced by design decisions from these tools. Users can install air using standalone installers for macOS, Linux, and Windows, which automatically add air to the PATH. Developers can also install the dev version of the air CLI and VS Code extension for further customization and development.

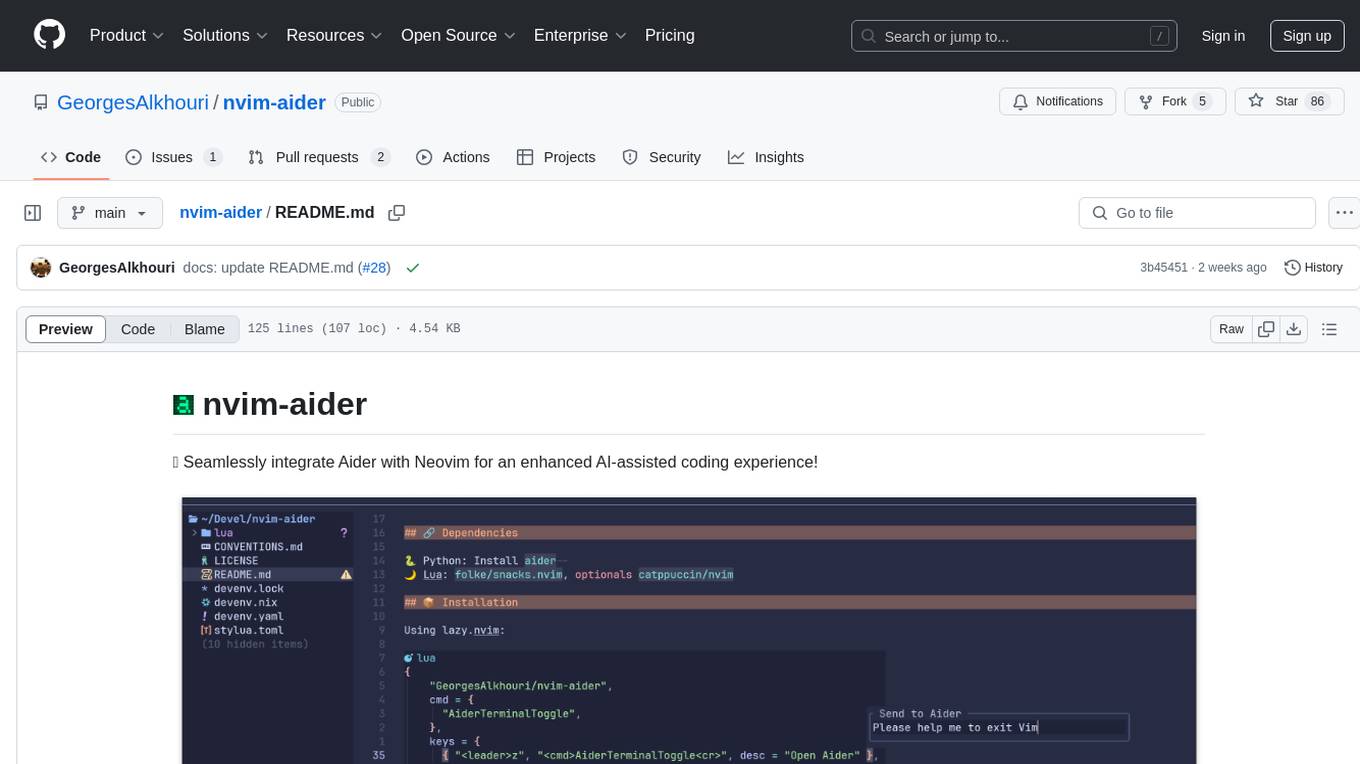

nvim-aider

Nvim-aider is a plugin for Neovim that provides additional functionality and key mappings to enhance the user's editing experience. It offers features such as code navigation, quick access to commonly used commands, and improved text manipulation tools. With Nvim-aider, users can streamline their workflow and increase productivity while working with Neovim.

langchain-google

LangChain Google is a repository containing three packages with Google integrations: langchain-google-genai for Google Generative AI models, langchain-google-vertexai for Google Cloud Generative AI on Vertex AI, and langchain-google-community for other Google product integrations. The repository is organized as a monorepo with a structure including libs for different packages, and files like pyproject.toml and Makefile for building, linting, and testing. It provides guidelines for contributing, local development dependencies installation, formatting, linting, working with optional dependencies, and testing with unit and integration tests. The focus is on maintaining unit test coverage and avoiding excessive integration tests, with annotations for GCP infrastructure-dependent tests.

ultracite

Ultracite is an AI-ready formatter built in Rust for lightning-fast performance, providing robust linting and formatting experience for Next.js, React, and TypeScript projects. It enforces strict type checking, ensures code style consistency, and integrates seamlessly with AI models like GitHub Copilot. With zero configuration needed, Ultracite automatically formats code, fixes lint issues, and improves accessibility on save, allowing developers to focus on coding and shipping without interruptions.

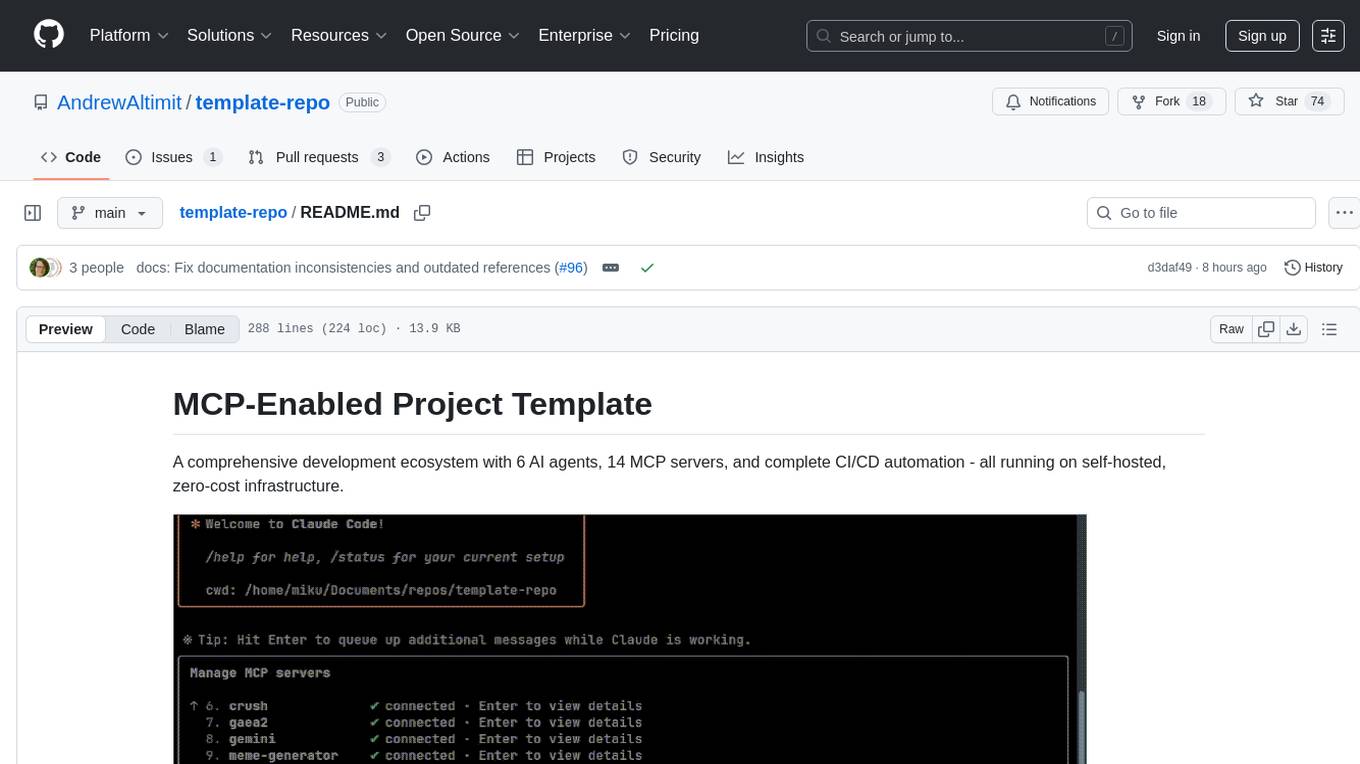

template-repo

The template-repo is a comprehensive development ecosystem with 6 AI agents, 14 MCP servers, and complete CI/CD automation running on self-hosted, zero-cost infrastructure. It follows a container-first approach, with all tools and operations running in Docker containers, zero external dependencies, self-hosted infrastructure, single maintainer design, and modular MCP architecture. The repo provides AI agents for development and automation, features 14 MCP servers for various tasks, and includes security measures, safety training, and sleeper detection system. It offers features like video editing, terrain generation, 3D content creation, AI consultation, image generation, and more, with a focus on maximum portability and consistency.

langstream

LangStream is a tool for natural language processing tasks, providing a CLI for easy installation and usage. Users can try sample applications like Chat Completions and create their own applications using the developer documentation. It supports running on Kubernetes for production-ready deployment, with support for various Kubernetes distributions and external components like Apache Kafka or Apache Pulsar cluster. Users can deploy LangStream locally using minikube and manage the cluster with mini-langstream. Development requirements include Docker, Java 17, Git, Python 3.11+, and PIP, with the option to test local code changes using mini-langstream.

For similar jobs

AirGo

AirGo is a front and rear end separation, multi user, multi protocol proxy service management system, simple and easy to use. It supports vless, vmess, shadowsocks, and hysteria2.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API. * **Highly performant** : web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O * **Ease of use** : user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing * **Dynamic batching** : aggregate requests from different users for batched inference and distribute results back * **Pipelined stages** : spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads * **Cloud friendly** : designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems * **Do one thing well** : focus on the online serving part, users can pay attention to the model optimization and business logic

llm-code-interpreter

The 'llm-code-interpreter' repository is a deprecated plugin that provides a code interpreter on steroids for ChatGPT by E2B. It gives ChatGPT access to a sandboxed cloud environment with capabilities like running any code, accessing Linux OS, installing programs, using filesystem, running processes, and accessing the internet. The plugin exposes commands to run shell commands, read files, and write files, enabling various possibilities such as running different languages, installing programs, starting servers, deploying websites, and more. It is powered by the E2B API and is designed for agents to freely experiment within a sandboxed environment.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

fluid

Fluid is an open source Kubernetes-native Distributed Dataset Orchestrator and Accelerator for data-intensive applications, such as big data and AI applications. It implements dataset abstraction, scalable cache runtime, automated data operations, elasticity and scheduling, and is runtime platform agnostic. Key concepts include Dataset and Runtime. Prerequisites include Kubernetes version > 1.16, Golang 1.18+, and Helm 3. The tool offers features like accelerating remote file accessing, machine learning, accelerating PVC, preloading dataset, and on-the-fly dataset cache scaling. Contributions are welcomed, and the project is under the Apache 2.0 license with a vendor-neutral approach.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.