ultracite

The AI-ready formatter that helps you write and generate code faster.

Stars: 1939

Ultracite is an AI-ready formatter built in Rust for lightning-fast performance, providing robust linting and formatting experience for Next.js, React, and TypeScript projects. It enforces strict type checking, ensures code style consistency, and integrates seamlessly with AI models like GitHub Copilot. With zero configuration needed, Ultracite automatically formats code, fixes lint issues, and improves accessibility on save, allowing developers to focus on coding and shipping without interruptions.

README:

The AI-ready formatter that helps you write and generate code faster.

Ultracite is a zero-config Biome preset that provides a robust linting and formatting experience for your team and your AI integrations. Built in Rust for lightning-fast performance, it automatically formats your code and fixes lint issues on save without interrupting your workflow.

Install and initialize Ultracite in your project:

npx ultracite initThat's it! Ultracite will automatically format your code and fix lint issues every time you save. No configuration required.

Built in Rust for instant code analysis and processing. On-save checks feel seamless without interrupting your workflow.

Preconfigured rules optimized for Next.js, React, and TypeScript projects with sensible defaults. Customize when needed, but it works perfectly out of the box.

Enforces strict type checking and best practices by default, catching type errors and preventing unsafe code patterns before they become problems.

Unified toolchain configuration across all packages and apps, eliminating thousands of lines of duplicate config files while maintaining consistency.

Ensures consistent code style and quality across all team members and AI models, eliminating debates over formatting and reducing code review friction. Supports GitHub Copilot, Cursor, Windsurf, Zed, Claude Code, and OpenAI Codex.

Automatically reformats code and fixes lint issues on save, with clear error reporting for issues that need manual attention.

Once set up, Ultracite runs mostly in the background:

- Automatic formatting on every save

- Lint fixes applied automatically when possible

- Type safety enforced with strict rules

- Import organization and cleanup

- Accessibility improvements built-in

Because Biome is extremely fast, even on large projects, running Ultracite's checks is instantaneous and can comfortably run on every save without lag.

# Format your code

npx ultracite fix

# Check for lint issues

npx ultracite checkFinally — a lightning-fast formatter that ensures you, your team, and your AI agents are writing code in harmony. Stop spending time on code style debates and formatting fixes. Let Ultracite handle the mundane so you can focus on building and shipping.

Used by over 500 developers

Tests are written in Vitest. You can run them with:

pnpm testCheck the coverage of the CLI with:

pnpm test:coverageRead the docs for detailed setup instructions, configuration options, and examples.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ultracite

Similar Open Source Tools

ultracite

Ultracite is an AI-ready formatter built in Rust for lightning-fast performance, providing robust linting and formatting experience for Next.js, React, and TypeScript projects. It enforces strict type checking, ensures code style consistency, and integrates seamlessly with AI models like GitHub Copilot. With zero configuration needed, Ultracite automatically formats code, fixes lint issues, and improves accessibility on save, allowing developers to focus on coding and shipping without interruptions.

code2prompt

code2prompt is a command-line tool that converts your codebase into a single LLM prompt with a source tree, prompt templating, and token counting. It automates generating LLM prompts from codebases of any size, customizing prompt generation with Handlebars templates, respecting .gitignore, filtering and excluding files using glob patterns, displaying token count, including Git diff output, copying prompt to clipboard, saving prompt to an output file, excluding files and folders, adding line numbers to source code blocks, and more. It helps streamline the process of creating LLM prompts for code analysis, generation, and other tasks.

Auditor

TheAuditor is an offline-first, AI-centric SAST & code intelligence platform designed to find security vulnerabilities, track data flow, analyze architecture, detect refactoring issues, run industry-standard tools, and produce AI-ready reports. It is specifically tailored for AI-assisted development workflows, providing verifiable ground truth for developers and AI assistants. The tool orchestrates verifiable data, focuses on AI consumption, and is extensible to support Python and Node.js ecosystems. The comprehensive analysis pipeline includes stages for foundation, concurrent analysis, and final aggregation, offering features like refactoring detection, dependency graph visualization, and optional insights analysis. The tool interacts with antivirus software to identify vulnerabilities, triggers performance impacts, and provides transparent information on common issues and troubleshooting. TheAuditor aims to address the lack of ground truth in AI development workflows and make AI development trustworthy by providing accurate security analysis and code verification.

cline-based-code-generator

HAI Code Generator is a cutting-edge tool designed to simplify and automate task execution while enhancing code generation workflows. Leveraging Specif AI, it streamlines processes like task execution, file identification, and code documentation through intelligent automation and AI-driven capabilities. Built on Cline's powerful foundation for AI-assisted development, HAI Code Generator boosts productivity and precision by automating task execution and integrating file management capabilities. It combines intelligent file indexing, context generation, and LLM-driven automation to minimize manual effort and ensure task accuracy. Perfect for developers and teams aiming to enhance their workflows.

MyDeviceAI

MyDeviceAI is a personal AI assistant app for iPhone that brings the power of artificial intelligence directly to the device. It focuses on privacy, performance, and personalization by running AI models locally and integrating with privacy-focused web services. The app offers seamless user experience, web search integration, advanced reasoning capabilities, personalization features, chat history access, and broad device support. It requires macOS, Xcode, CocoaPods, Node.js, and a React Native development environment for installation. The technical stack includes React Native framework, AI models like Qwen 3 and BGE Small, SearXNG integration, Redux for state management, AsyncStorage for storage, Lucide for UI components, and tools like ESLint and Prettier for code quality.

AgentPilot

Agent Pilot is an open source desktop app for creating, managing, and chatting with AI agents. It features multi-agent, branching chats with various providers through LiteLLM. Users can combine models from different providers, configure interactions, and run code using the built-in Open Interpreter. The tool allows users to create agents, manage chats, work with multi-agent workflows, branching workflows, context blocks, tools, and plugins. It also supports a code interpreter, scheduler, voice integration, and integration with various AI providers. Contributions to the project are welcome, and users can report known issues for improvement.

Instrukt

Instrukt is a terminal-based AI integrated environment that allows users to create and instruct modular AI agents, generate document indexes for question-answering, and attach tools to any agent. It provides a platform for users to interact with AI agents in natural language and run them inside secure containers for performing tasks. The tool supports custom AI agents, chat with code and documents, tools customization, prompt console for quick interaction, LangChain ecosystem integration, secure containers for agent execution, and developer console for debugging and introspection. Instrukt aims to make AI accessible to everyone by providing tools that empower users without relying on external APIs and services.

draive

draive is an open-source Python library designed to simplify and accelerate the development of LLM-based applications. It offers abstract building blocks for connecting functionalities with large language models, flexible integration with various AI solutions, and a user-friendly framework for building scalable data processing pipelines. The library follows a function-oriented design, allowing users to represent complex programs as simple functions. It also provides tools for measuring and debugging functionalities, ensuring type safety and efficient asynchronous operations for modern Python apps.

eole

EOLE is an open language modeling toolkit based on PyTorch. It aims to provide a research-friendly approach with a comprehensive yet compact and modular codebase for experimenting with various types of language models. The toolkit includes features such as versatile training and inference, dynamic data transforms, comprehensive large language model support, advanced quantization, efficient finetuning, flexible inference, and tensor parallelism. EOLE is a work in progress with ongoing enhancements in configuration management, command line entry points, reproducible recipes, core API simplification, and plans for further simplification, refactoring, inference server development, additional recipes, documentation enhancement, test coverage improvement, logging enhancements, and broader model support.

LLMstudio

LLMstudio by TensorOps is a platform that offers prompt engineering tools for accessing models from providers like OpenAI, VertexAI, and Bedrock. It provides features such as Python Client Gateway, Prompt Editing UI, History Management, and Context Limit Adaptability. Users can track past runs, log costs and latency, and export history to CSV. The tool also supports automatic switching to larger-context models when needed. Coming soon features include side-by-side comparison of LLMs, automated testing, API key administration, project organization, and resilience against rate limits. LLMstudio aims to streamline prompt engineering, provide execution history tracking, and enable effortless data export, offering an evolving environment for teams to experiment with advanced language models.

your-source-to-prompt.html

Your Source to Prompt is a single HTML file tool that allows users to easily select code files and combine them into a single text output. It runs entirely in the browser, ensuring local and secure operation without any external dependencies. The tool offers features like preset management, efficient file selection, context size awareness, hierarchical structure preview, minification, and user-friendly UI with dark mode. It aims to simplify the process of preparing code for Large Language Models (LLMs) by providing a well-structured prompt context.

extensionOS

Extension | OS is an open-source browser extension that brings AI directly to users' web browsers, allowing them to access powerful models like LLMs seamlessly. Users can create prompts, fix grammar, and access intelligent assistance without switching tabs. The extension aims to revolutionize online information interaction by integrating AI into everyday browsing experiences. It offers features like Prompt Factory for tailored prompts, seamless LLM model access, secure API key storage, and a Mixture of Agents feature. The extension was developed to empower users to unleash their creativity with custom prompts and enhance their browsing experience with intelligent assistance.

ConvoForm

ConvoForm.com transforms traditional forms into interactive conversational experiences, powered by AI for an enhanced user journey. It offers AI-Powered Form Generation, Real-time Form Editing and Preview, and Customizable Submission Pages. The tech stack includes Next.js for frontend, tRPC for backend, GPT-3.5-Turbo for AI integration, and Socket.io for real-time updates. Local setup requires Node.js, pnpm, Git, PostgreSQL database, Clerk for Authentication, OpenAI key, Redis Database, and Sentry for monitoring. The project is open for contributions and is licensed under the MIT License.

ProxyAI

ProxyAI is an open-source AI copilot for JetBrains, offering advanced code assistance features powered by top-tier language models. Users can customize their coding experience, receive AI-suggested code changes, autocomplete suggestions, and context-aware naming suggestions. The tool also allows users to chat with images, reference project files and folders, web docs, git history, and search the web. ProxyAI prioritizes user privacy by not collecting sensitive information and only gathering anonymous usage data with consent.

LlamaPen

LlamaPen is a no-install needed GUI tool for Ollama, featuring a web-based interface accessible on both desktop and mobile. It allows easy setup and configuration, renders markdown, text, and LaTeX math, provides keyboard shortcuts for quick navigation, includes a built-in model and download manager, supports offline and PWA, and is 100% free and open-source. Users can chat with complete privacy as all chats are stored locally in the browser, ensuring near-instant chat load times. The tool also offers an optional cloud service, LlamaPen API, for running up-to-date models if unable to run locally, with a subscription option for increased rate limits and access to more expensive models.

frontman

Frontman is a framework-integrated AI coding agent that allows users to edit their frontend directly in the browser. It enables users to point at any element in their running app, describe the change they want, and Frontman edits the source code for them without the need for copy-pasting or context switching. The tool supports popular frameworks like Next.js, Astro, and Vite, providing features such as point-and-click editing, real-time streaming of AI-generated edits, and framework-aware context. Frontman also offers an open protocol layer for decoupled and extensible client, server, and framework adapters.

For similar tasks

ultracite

Ultracite is an AI-ready formatter built in Rust for lightning-fast performance, providing robust linting and formatting experience for Next.js, React, and TypeScript projects. It enforces strict type checking, ensures code style consistency, and integrates seamlessly with AI models like GitHub Copilot. With zero configuration needed, Ultracite automatically formats code, fixes lint issues, and improves accessibility on save, allowing developers to focus on coding and shipping without interruptions.

avante.nvim

avante.nvim is a Neovim plugin that emulates the behavior of the Cursor AI IDE, providing AI-driven code suggestions and enabling users to apply recommendations to their source files effortlessly. It offers AI-powered code assistance and one-click application of suggested changes, streamlining the editing process and saving time. The plugin is still in early development, with functionalities like setting API keys, querying AI about code, reviewing suggestions, and applying changes. Key bindings are available for various actions, and the roadmap includes enhancing AI interactions, stability improvements, and introducing new features for coding tasks.

air

air is an R formatter and language server written in Rust. It is currently in alpha stage, so users should expect breaking changes in both the API and formatting results. The tool draws inspiration from various sources like roslyn, swift, rust-analyzer, prettier, biome, and ruff. It provides formatters and language servers, influenced by design decisions from these tools. Users can install air using standalone installers for macOS, Linux, and Windows, which automatically add air to the PATH. Developers can also install the dev version of the air CLI and VS Code extension for further customization and development.

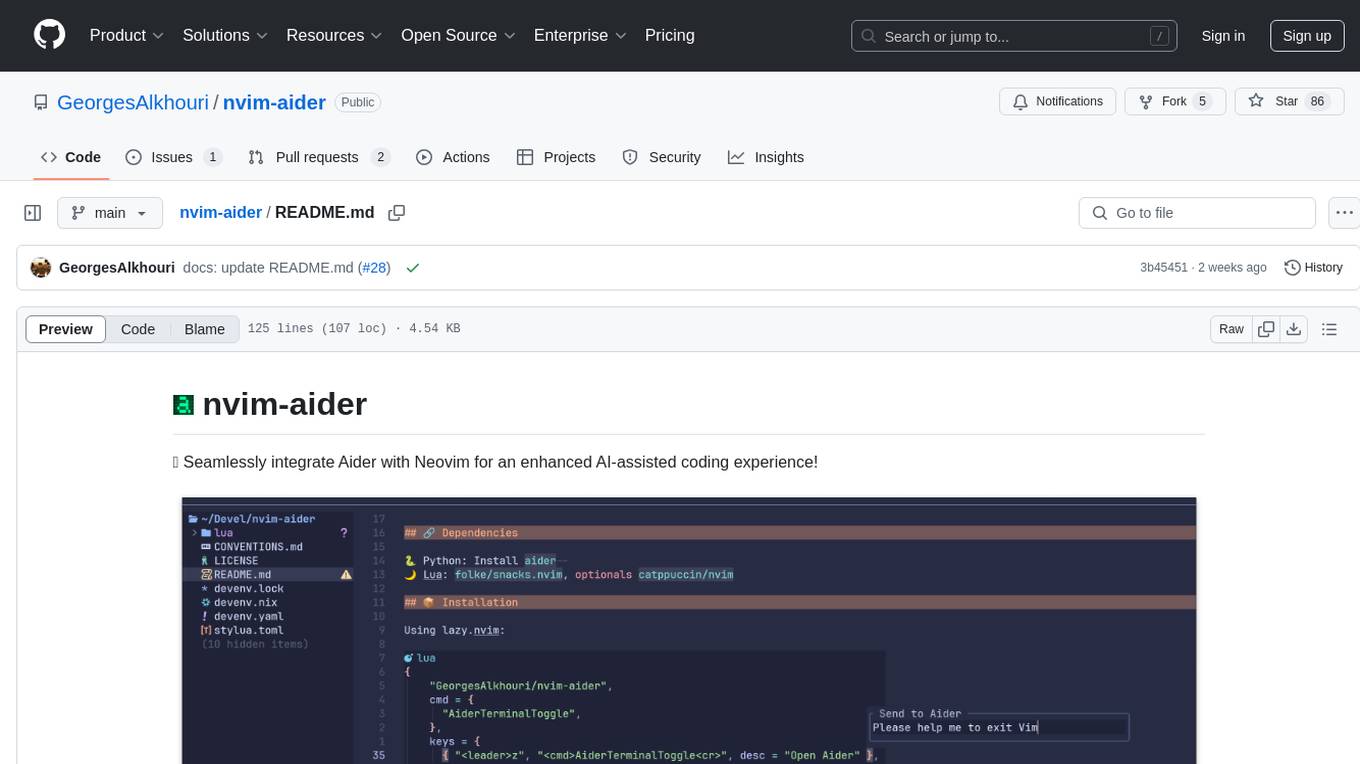

nvim-aider

Nvim-aider is a plugin for Neovim that provides additional functionality and key mappings to enhance the user's editing experience. It offers features such as code navigation, quick access to commonly used commands, and improved text manipulation tools. With Nvim-aider, users can streamline their workflow and increase productivity while working with Neovim.

langchain-google

LangChain Google is a repository containing three packages with Google integrations: langchain-google-genai for Google Generative AI models, langchain-google-vertexai for Google Cloud Generative AI on Vertex AI, and langchain-google-community for other Google product integrations. The repository is organized as a monorepo with a structure including libs for different packages, and files like pyproject.toml and Makefile for building, linting, and testing. It provides guidelines for contributing, local development dependencies installation, formatting, linting, working with optional dependencies, and testing with unit and integration tests. The focus is on maintaining unit test coverage and avoiding excessive integration tests, with annotations for GCP infrastructure-dependent tests.

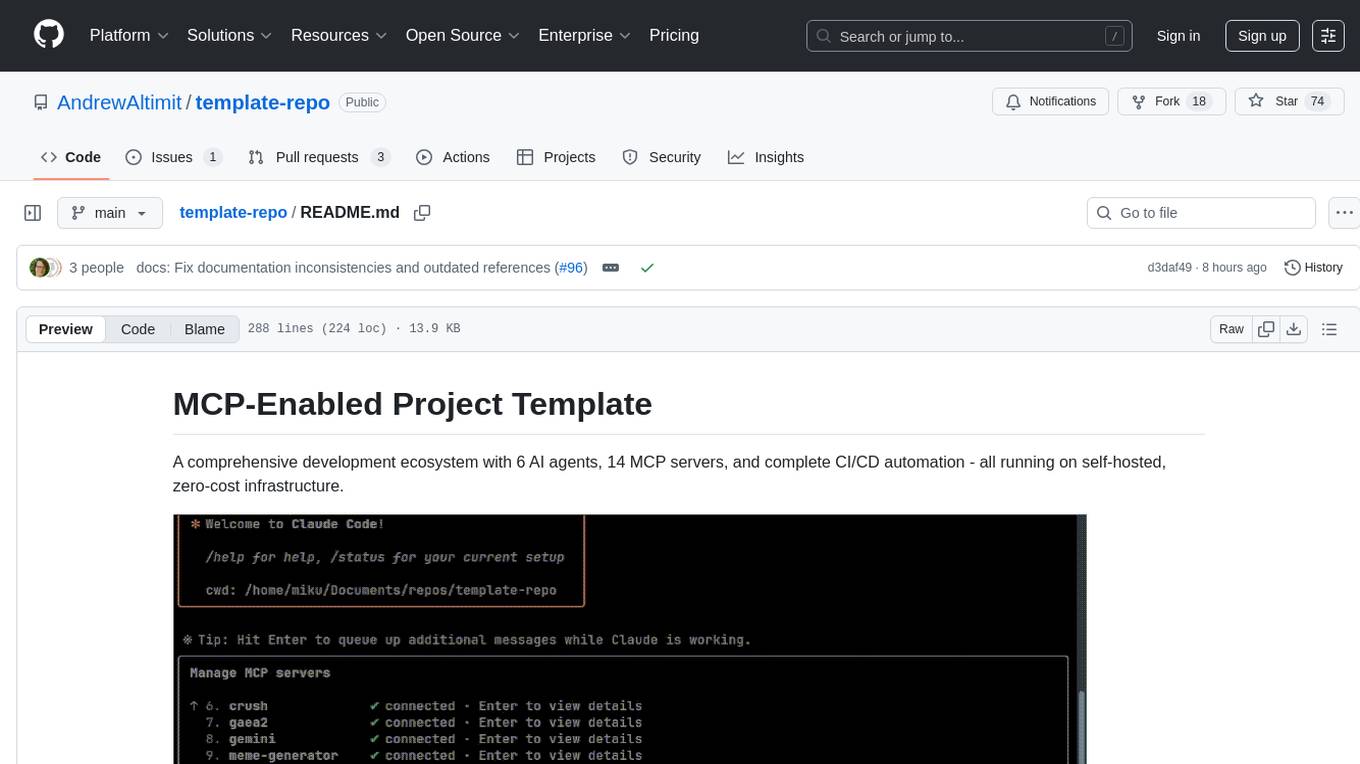

template-repo

The template-repo is a comprehensive development ecosystem with 6 AI agents, 14 MCP servers, and complete CI/CD automation running on self-hosted, zero-cost infrastructure. It follows a container-first approach, with all tools and operations running in Docker containers, zero external dependencies, self-hosted infrastructure, single maintainer design, and modular MCP architecture. The repo provides AI agents for development and automation, features 14 MCP servers for various tasks, and includes security measures, safety training, and sleeper detection system. It offers features like video editing, terrain generation, 3D content creation, AI consultation, image generation, and more, with a focus on maximum portability and consistency.

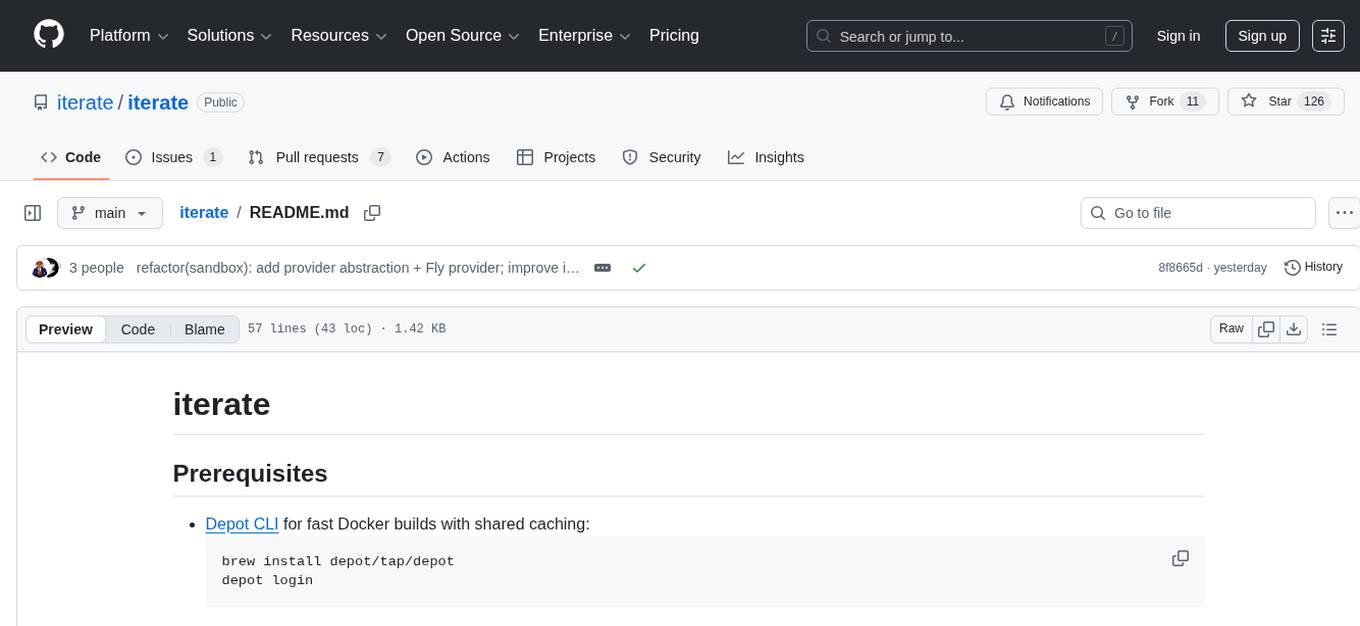

iterate

The 'iterate' repository is a collection of applications and tools designed for efficient development and deployment processes. It includes a primary application built with React and Cloudflare Workers, a local daemon for managing streams and agents, and the iterate.com website. The repository also contains detailed documentation and patterns to support development. Development commands are provided for running applications, testing, type checking, linting, and code formatting. Additionally, Cloudflare Tunnels can be used to expose local development servers via public URLs. Users can also build daytona snapshots for configuration purposes.

wingman-ai

Wingman AI allows you to use your voice to talk to various AI providers and LLMs, process your conversations, and ultimately trigger actions such as pressing buttons or reading answers. Our _Wingmen_ are like characters and your interface to this world, and you can easily control their behavior and characteristics, even if you're not a developer. AI is complex and it scares people. It's also **not just ChatGPT**. We want to make it as easy as possible for you to get started. That's what _Wingman AI_ is all about. It's a **framework** that allows you to build your own Wingmen and use them in your games and programs. The idea is simple, but the possibilities are endless. For example, you could: * **Role play** with an AI while playing for more immersion. Have air traffic control (ATC) in _Star Citizen_ or _Flight Simulator_. Talk to Shadowheart in Baldur's Gate 3 and have her respond in her own (cloned) voice. * Get live data such as trade information, build guides, or wiki content and have it read to you in-game by a _character_ and voice you control. * Execute keystrokes in games/applications and create complex macros. Trigger them in natural conversations with **no need for exact phrases.** The AI understands the context of your dialog and is quite _smart_ in recognizing your intent. Say _"It's raining! I can't see a thing!"_ and have it trigger a command you simply named _WipeVisors_. * Automate tasks on your computer * improve accessibility * ... and much more

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.