template-repo

Template repo using AI agents and custom MCP tooling

Stars: 74

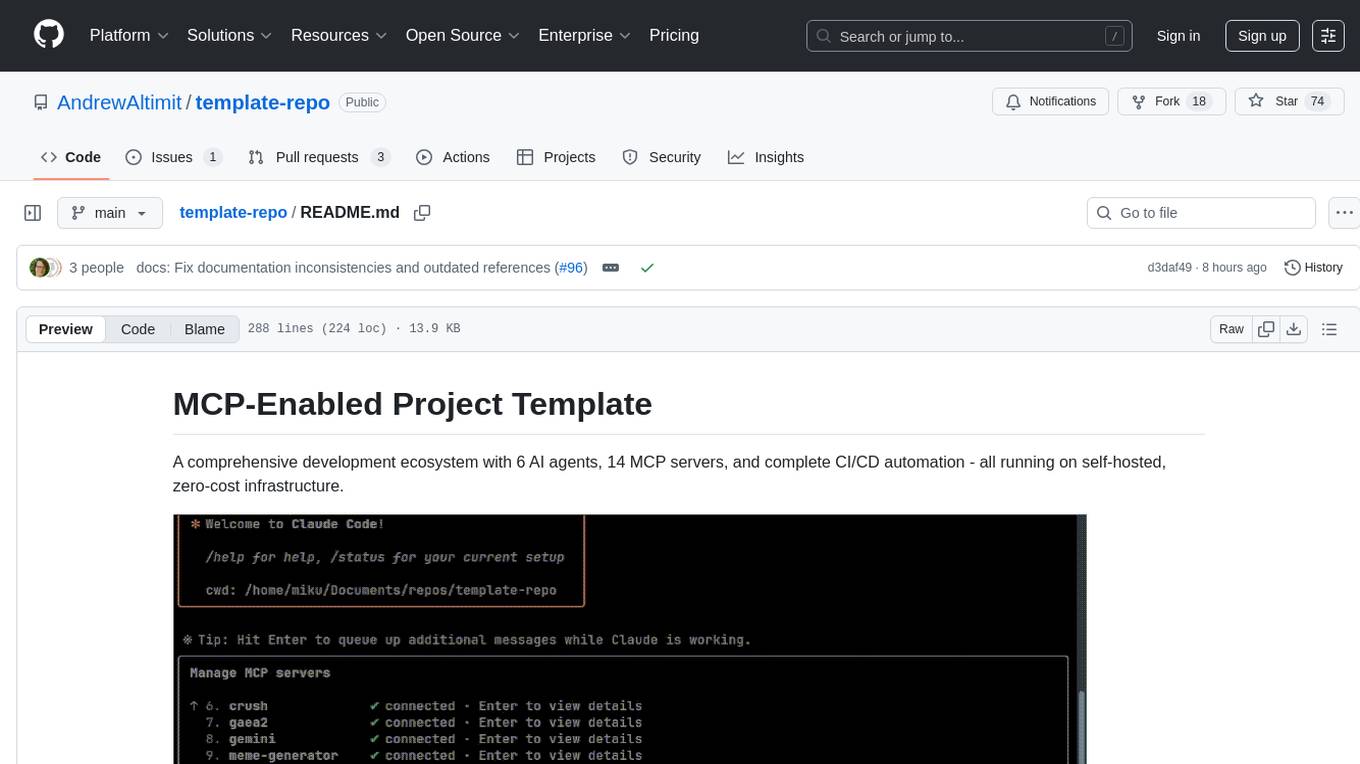

The template-repo is a development ecosystem with 6 AI agents, 14 MCP servers, and complete CI/CD automation running on self-hosted, zero-cost infrastructure. It follows a container-first approach: all tools and operations run in Docker containers, with zero external dependencies, a single-maintainer design, and a modular MCP architecture. The repo provides AI agents for development and automation and includes security measures, safety training, and a sleeper detection system. Its MCP servers cover video editing, terrain generation, 3D content creation, AI consultation, image generation, and more, with a focus on portability and consistency.

README:

A comprehensive development ecosystem with 6 AI agents, 14 MCP servers, and complete CI/CD automation - all running on self-hosted, zero-cost infrastructure.

This project follows a container-first approach:

- All tools and CI/CD operations run in Docker containers for maximum portability

- Zero external dependencies - runs on any Linux system with Docker

- Self-hosted infrastructure - no cloud costs, full control over runners

- Single maintainer design - optimized for individual developer productivity

- Modular MCP architecture - Separate specialized servers for different functionalities

Six AI agents working in harmony for development and automation. See AI Agents Documentation for complete details:

- Claude Code - Primary development assistant

- Codex - AI-powered code generation and completion (Setup Guide)

- OpenCode - Comprehensive code generation (Integration Guide)

- Crush - Fast code generation (Quick Reference)

- Gemini CLI - Interactive development & automated PR reviews

- GitHub Copilot - Code review suggestions

Security: Keyword triggers, user allow list, secure token management. See AI Agents Security

Safety Training: Essential AI safety concepts for human-AI collaboration. See Human Training Guide

Sleeper Detection: Advanced AI agent backdoor and sleeper detection system. See Sleeper Detection Package
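The keyword-trigger and allow-list checks mentioned under Security can be pictured with a minimal sketch. The `[Action][Agent]` trigger format, the function names, and the user names below are assumptions made for illustration only; the project's actual rules are described in its AI Agents Security documentation.

```python
import re

# Hypothetical trigger format "[Action][Agent]"; the real syntax is defined
# by the project's AI Agents Security documentation, not by this sketch.
TRIGGER_PATTERN = re.compile(r"\[(\w+)\]\[(\w+)\]")


def parse_trigger(comment: str):
    """Return (action, agent) from the first trigger in a comment, or None."""
    match = TRIGGER_PATTERN.search(comment)
    if match is None:
        return None
    return match.group(1).lower(), match.group(2).lower()


def is_authorized(comment: str, author: str, allow_list: set) -> bool:
    """Accept a request only if it carries a trigger AND the author is allow-listed."""
    return parse_trigger(comment) is not None and author in allow_list


if __name__ == "__main__":
    allowed = {"alice"}  # hypothetical allow list
    print(is_authorized("[Fix][Gemini] flaky test", "alice", allowed))
    print(is_authorized("[Fix][Gemini] flaky test", "mallory", allowed))
```

Requiring both conditions means a trigger typed by an unlisted user is ignored, and an allow-listed user cannot start an agent by accident in an ordinary comment.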

- 14 MCP Servers - Modular tools for code quality, content creation, AI assistance, 3D graphics, video editing, speech synthesis, virtual characters, and more

- 6 AI Agents - Comprehensive development automation

- Sleeper Detection System - Advanced AI backdoor detection using TransformerLens residual stream analysis

- Company Integration - Custom agent builds for corporate AI APIs (Documentation)

- Video Editor - AI-powered video editing with transcription, speaker diarization, and intelligent scene detection

- Gaea2 Terrain Generation - Professional terrain generation

- Blender 3D Creation - Full 3D content creation, rendering, and simulation

- ComfyUI & AI Toolkit - Image generation and LoRA training

- Container-First Architecture - Maximum portability and consistency

- Self-Hosted CI/CD - Zero-cost GitHub Actions infrastructure

- Automated PR Workflows - AI-powered reviews and fixes

New to the template? Check out our Template Quickstart Guide for step-by-step customization instructions!

- Choose from 4 pre-configured paths (Minimal, AI-Powered, Content Creation, Full Stack)

- Learn what to enable/disable for your specific use case

- Get up and running in 15 minutes

Prerequisites

- Linux system (Ubuntu/Debian recommended)

- Docker (v20.10+) and Docker Compose (v2.0+)

- No other dependencies required!
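The version requirements above can be checked programmatically. The following is a minimal sketch, not part of the template: `parse_version` and `meets_minimum` are hypothetical helper names, and the sample strings only imitate the general shape of `docker --version` output.

```python
import re


def parse_version(text: str) -> tuple:
    """Extract the first dotted version number (e.g. '24.0.7') as a tuple of ints."""
    match = re.search(r"(\d+(?:\.\d+)+)", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def meets_minimum(version_output: str, minimum: str) -> bool:
    """Compare a tool's reported version against a required minimum."""
    return parse_version(version_output) >= parse_version(minimum)


if __name__ == "__main__":
    # Sample strings for illustration; in practice pass the captured CLI output.
    print(meets_minimum("Docker version 24.0.7, build afdd53b", "20.10"))
    print(meets_minimum("Docker Compose version v2.21.0", "2.0"))
```

Comparing integer tuples rather than strings avoids the classic mistake of treating "20.9" as newer than "20.10".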

Clone and setup

git clone https://github.com/AndrewAltimit/template-repo

cd template-repo

# Install AI agents package (for CLI tools)

pip3 install -e ./packages/github_ai_agents

# Set up API keys (if using AI features)

export OPENROUTER_API_KEY="your-key-here" # For OpenCode/Crush

export GEMINI_API_KEY="your-key-here" # For Gemini (don't use an API key for the free tier; use web auth)

# For Codex: run 'codex auth' after installing @openai/codex

Use MCP servers with Claude Code and other agents

- MCP servers are configured in .mcp.json

- No manual startup required! Agents can start the services themselves.
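To see which servers a `.mcp.json` file declares, a short script can list each entry and the command an agent would launch. This is a sketch under assumptions: it presumes the common layout with a top-level `mcpServers` object whose entries carry `command` and `args`, and the server name and arguments in `SAMPLE_CONFIG` are placeholders, not entries from this repository.

```python
import json

# Placeholder config for illustration; the real .mcp.json in the repo will differ.
SAMPLE_CONFIG = """
{
  "mcpServers": {
    "example-server": {
      "command": "docker-compose",
      "args": ["run", "--rm", "example-service"]
    }
  }
}
"""


def list_mcp_servers(config_text: str) -> dict:
    """Map each server name to the argv list that would start it."""
    config = json.loads(config_text)
    servers = config.get("mcpServers", {})  # assumed top-level key
    return {
        name: [entry.get("command", ""), *entry.get("args", [])]
        for name, entry in servers.items()
    }


if __name__ == "__main__":
    for name, argv in list_mcp_servers(SAMPLE_CONFIG).items():
        print(name, "->", " ".join(argv))
```

To inspect a real file, read it with `pathlib.Path(".mcp.json").read_text()` and pass the result to `list_mcp_servers`.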

For standalone usage

# Start HTTP servers for testing/development

docker-compose up -d

# Test all servers

python automation/testing/test_all_servers.py --quick

# Use AI agents directly

./tools/cli/agents/run_codex.sh # Interactive Codex session

./tools/cli/agents/run_opencode.sh -q "Create a REST API"

./tools/cli/agents/run_crush.sh -q "Binary search function"

./tools/cli/agents/run_gemini.sh # Interactive Gemini CLI session

For detailed setup instructions, see CLAUDE.md

For enterprise environments that require custom certificates (e.g., corporate proxy certificates, self-signed certificates), we provide a standardized installation mechanism:

Certificate Installation Script: automation/corporate-proxy/shared/scripts/install-corporate-certs.sh

- Placeholder script that organizations can customize with their certificate installation process

- Automatically executed during Docker image builds for all corporate proxy containers

Customization Guide: See automation/corporate-proxy/shared/scripts/README.md for:

- Step-by-step instructions on customizing the certificate installation

- Examples for different certificate formats and installation methods

- Best practices for certificate management in containerized environments

Affected Services:

- All corporate proxy integrations (Gemini, Codex, OpenCode, Crush)

- Python CI/CD containers

- Any custom Docker images built from this template

This pattern ensures consistent certificate handling across all services while maintaining security and flexibility for different corporate environments.

.
├── .github/workflows/  # GitHub Actions workflows
├── docker/  # Docker configurations
├── packages/  # Installable packages
│   ├── github_ai_agents/  # AI agent implementations
│   └── sleeper_detection/  # AI backdoor detection system
├── tools/  # MCP servers and utilities
│   ├── mcp/  # Modular MCP servers
│   │   ├── code_quality/  # Formatting & linting
│   │   ├── content_creation/  # Manim & LaTeX
│   │   ├── gemini/  # AI consultation
│   │   ├── gaea2/  # Terrain generation
│   │   ├── blender/  # 3D content creation
│   │   ├── codex/  # AI-powered code generation
│   │   ├── opencode/  # Code generation
│   │   ├── crush/  # Code generation
│   │   ├── video_editor/  # AI-powered video editing
│   │   ├── meme_generator/  # Meme creation
│   │   ├── elevenlabs_speech/  # Speech synthesis
│   │   ├── virtual_character/  # AI agent embodiment
│   │   ├── ai_toolkit/  # LoRA training interface
│   │   ├── comfyui/  # Image generation interface
│   │   └── core/  # Shared components
│   └── cli/  # Command-line tools
│       ├── agents/  # Agent runner scripts
│       │   ├── run_claude.sh  # Claude Code Runner
│       │   ├── run_codex.sh  # Codex runner
│       │   ├── run_opencode.sh  # OpenCode runner
│       │   ├── run_crush.sh  # Crush runner
│       │   └── run_gemini.sh  # Gemini CLI runner
│       ├── containers/  # Container runner scripts
│       │   ├── run_codex_container.sh  # Codex in container
│       │   ├── run_opencode_container.sh  # OpenCode in container
│       │   ├── run_crush_container.sh  # Crush in container
│       │   └── run_gemini_container.sh  # Gemini in container
│       └── utilities/  # Other CLI utilities
├── automation/  # CI/CD and automation scripts
│   ├── analysis/  # Code and project analysis tools
│   ├── ci-cd/  # CI/CD pipeline scripts
│   ├── corporate-proxy/  # Corporate proxy integrations
│   │   ├── gemini/  # Gemini CLI proxy wrapper
│   │   ├── codex/  # Codex proxy wrapper
│   │   ├── opencode/  # OpenCode proxy wrapper
│   │   ├── crush/  # Crush proxy wrapper
│   │   └── shared/  # Shared proxy components
│   ├── launchers/  # Service launcher scripts
│   ├── monitoring/  # Service and PR monitoring
│   ├── review/  # Code review automation
│   ├── scripts/  # Utility scripts
│   ├── security/  # Security and validation
│   ├── sleeper-detection/  # Sleeper detection automation
│   ├── setup/  # Setup and installation scripts
│   └── testing/  # Testing utilities
├── tests/  # Test files
├── docs/  # Documentation
├── config/  # Configuration files

- Code Quality - Formatting, linting, auto-formatting

- Content Creation - Manim animations, LaTeX, TikZ diagrams

- Gaea2 - Terrain generation (Documentation)

- Blender - 3D content creation, rendering, physics simulation (Documentation)

- Gemini - AI consultation (containerized and host modes available)

- Codex - AI-powered code generation and completion

- OpenCode - Comprehensive code generation

- Crush - Fast code snippets

- Meme Generator - Create memes with templates

- ElevenLabs Speech - Advanced TTS with v3 model, 50+ audio tags, 74 languages (Documentation)

- Video Editor - AI-powered video editing with transcription and scene detection (Documentation)

- Virtual Character - AI agent embodiment in virtual worlds (VRChat, Blender, Unity) (Documentation)

- AI Toolkit - LoRA training interface (remote: 192.168.0.152:8012)

- ComfyUI - Image generation interface (remote: 192.168.0.152:8013)

- STDIO Mode (local MCPs): Configured in .mcp.json, auto-started by Claude

- HTTP Mode (remote MCPs): Run the MCP using docker-compose on the remote node.

See MCP Architecture Documentation and STDIO vs HTTP Modes for details.
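The difference between the two modes shows up in the shape of each server entry: a local STDIO server is described by a command to spawn, while a remote HTTP server is described by an address. The sketch below assumes entries use a `command` key for STDIO and a `url` key for HTTP; the `http://` scheme, the entry names, and the STDIO arguments are illustrative assumptions, with only the two remote host:port pairs taken from the server list above.

```python
def transport_mode(entry: dict) -> str:
    """Classify a server entry: a 'url' implies HTTP, a 'command' implies STDIO."""
    if "url" in entry:
        return "http"
    if "command" in entry:
        return "stdio"
    raise ValueError("entry has neither 'url' nor 'command'")


def group_by_mode(servers: dict) -> dict:
    """Group server names by transport so local and remote MCPs are easy to tell apart."""
    groups = {"stdio": [], "http": []}
    for name, entry in servers.items():
        groups[transport_mode(entry)].append(name)
    return groups


# Illustrative entries; names, arguments, and URL scheme are assumptions.
EXAMPLE_SERVERS = {
    "code-quality": {"command": "docker-compose", "args": ["run", "--rm", "example-service"]},
    "ai-toolkit": {"type": "http", "url": "http://192.168.0.152:8012"},
    "comfyui": {"type": "http", "url": "http://192.168.0.152:8013"},
}

if __name__ == "__main__":
    print(group_by_mode(EXAMPLE_SERVERS))
```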

For complete tool listings, see MCP Tools Reference

See .env.example for all available options.

- .mcp.json - MCP server configuration for Claude Code

- docker-compose.yml - Container services configuration

- CLAUDE.md - Project-specific Claude Code instructions (root directory)

- CRUSH.md - Crush AI assistant instructions (root directory)

- AGENTS.md - Universal AI agent configuration and guidelines (root directory)

- docs/ai-agents/project-context.md - Context for AI reviewers

All Python operations run in Docker containers:

# Run CI operations

./automation/ci-cd/run-ci.sh format # Check formatting

./automation/ci-cd/run-ci.sh lint-basic # Basic linting

./automation/ci-cd/run-ci.sh test # Run tests

./automation/ci-cd/run-ci.sh full # Full CI pipeline

# Run specific tests

docker-compose run --rm python-ci pytest tests/test_mcp_tools.py -v

- Pull Request Validation - Automatic Gemini AI review

- Continuous Integration - Full CI pipeline

- Code Quality - Multi-stage linting (containerized)

- Automated Testing - Unit and integration tests

- Security Scanning - Bandit and safety checks

All workflows run on self-hosted runners for zero-cost operation.
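The individual `run-ci.sh` stages shown earlier (format, lint-basic, test) can be chained from a small driver that stops at the first failure. This is a sketch rather than part of the template: `run_stage` and `run_stages` are hypothetical helpers, and the runner is injectable so the ordering logic can be exercised without Docker.

```python
import subprocess

CI_SCRIPT = "./automation/ci-cd/run-ci.sh"  # wrapper script named in this README
STAGES = ["format", "lint-basic", "test"]


def run_stage(stage: str) -> int:
    """Run one CI stage through the wrapper script and return its exit code."""
    return subprocess.run([CI_SCRIPT, stage], check=False).returncode


def run_stages(stages, runner=run_stage):
    """Run stages in order, stopping at the first failure.

    Returns (passed_stages, failed_stage); failed_stage is None if all passed.
    """
    passed = []
    for stage in stages:
        if runner(stage) != 0:
            return passed, stage
        passed.append(stage)
    return passed, None


if __name__ == "__main__":
    passed, failed = run_stages(STAGES)
    print("passed:", passed, "failed:", failed)
```

Failing fast keeps feedback quick on a single self-hosted runner, since a formatting error is reported before the slower test stage starts.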

- AGENTS.md - Universal AI agent configuration and guidelines

- CLAUDE.md - Claude-specific instructions and commands

- CRUSH.md - Crush AI assistant instructions

- MCP Architecture - Modular server design

- AI Agents Documentation - Six AI agents overview

- Template Quickstart Guide - Customize the template for your needs

- Self-Hosted Runner Setup

- GitHub Environments Setup

- Containerized CI

This project is released under the Unlicense (public domain dedication).

For jurisdictions that do not recognize public domain: As a fallback, this project is also available under the MIT License.

Alternative AI tools for template-repo

Similar Open Source Tools

Call

Call is an open-source AI-native alternative to Google Meet and Zoom, offering video calling, team collaboration, contact management, meeting scheduling, AI-powered features, security, and privacy. It is cross-platform, web-based, mobile responsive, and supports offline capabilities. The tech stack includes Next.js, TypeScript, Tailwind CSS, Mediasoup-SFU, React Query, Zustand, Hono, PostgreSQL, Drizzle ORM, Better Auth, Turborepo, Docker, Vercel, and Rate Limiting.

bifrost

Bifrost is a high-performance AI gateway that unifies access to multiple providers through a single OpenAI-compatible API. It offers features like automatic failover, load balancing, semantic caching, and enterprise-grade functionalities. Users can deploy Bifrost in seconds with zero configuration, benefiting from its core infrastructure, advanced features, enterprise and security capabilities, and developer experience. The repository structure is modular, allowing for maximum flexibility. Bifrost is designed for quick setup, easy configuration, and seamless integration with various AI models and tools.

mcp-memory-service

The MCP Memory Service is a universal memory service designed for AI assistants, providing semantic memory search and persistent storage. It works with various AI applications and offers fast local search using SQLite-vec and global distribution through Cloudflare. The service supports intelligent memory management, universal compatibility with AI tools, flexible storage options, and is production-ready with cross-platform support and secure connections. Users can store and recall memories, search by tags, check system health, and configure the service for Claude Desktop integration and environment variables.

AgriTech

AgriTech is an AI-powered smart agriculture platform designed to assist farmers with crop recommendations, yield prediction, plant disease detection, and community-driven collaboration—enabling sustainable and data-driven farming practices. It offers AI-driven decision support for modern agriculture, early-stage plant disease detection, crop yield forecasting using machine learning models, and a collaborative ecosystem for farmers and stakeholders. The platform includes features like crop recommendation, yield prediction, disease detection, an AI chatbot for platform guidance and agriculture support, a farmer community, and shopkeeper listings. AgriTech's AI chatbot provides comprehensive support for farmers with features like platform guidance, agriculture support, decision making, image analysis, and 24/7 support. The tech stack includes frontend technologies like HTML5, CSS3, JavaScript, backend technologies like Python (Flask) and optional Node.js, machine learning libraries like TensorFlow, Scikit-learn, OpenCV, and database & DevOps tools like MySQL, MongoDB, Firebase, Docker, and GitHub Actions.

pluely

Pluely is a versatile and user-friendly tool for managing tasks and projects. It provides a simple interface for creating, organizing, and tracking tasks, making it easy to stay on top of your work. With features like task prioritization, due date reminders, and collaboration options, Pluely helps individuals and teams streamline their workflow and boost productivity. Whether you're a student juggling assignments, a professional managing multiple projects, or a team coordinating tasks, Pluely is the perfect solution to keep you organized and efficient.

cc-sdd

The cc-sdd repository provides a tool for AI-Driven Development Life Cycle with Spec-Driven Development workflows for Claude Code and Gemini CLI. It includes powerful slash commands, Project Memory for AI learning, structured AI-DLC workflow, Spec-Driven Development methodology, and Kiro IDE compatibility. Ideal for feature development, code reviews, technical planning, and maintaining development standards. The tool supports multiple coding agents, offers an AI-DLC workflow with quality gates, and allows for advanced options like language and OS selection, preview changes, safe updates, and custom specs directory. It integrates AI-Driven Development Life Cycle, Project Memory, Spec-Driven Development, supports cross-platform usage, multi-language support, and safe updates with backup options.

opcode

opcode is a powerful desktop application built with Tauri 2 that serves as a command center for interacting with Claude Code. It offers a visual GUI for managing Claude Code sessions, creating custom agents, tracking usage, and more. Users can navigate projects, create specialized AI agents, monitor usage analytics, manage MCP servers, create session checkpoints, edit CLAUDE.md files, and more. The tool bridges the gap between command-line tools and visual experiences, making AI-assisted development more intuitive and productive.

aegra

Aegra is a self-hosted AI agent backend platform that provides LangGraph power without vendor lock-in. Built with FastAPI + PostgreSQL, it offers complete control over agent orchestration for teams looking to escape vendor lock-in, meet data sovereignty requirements, enable custom deployments, and optimize costs. Aegra is Agent Protocol compliant and perfect for teams seeking a free, self-hosted alternative to LangGraph Platform with zero lock-in, full control, and compatibility with existing LangGraph Client SDK.

ito

Ito is an intelligent voice assistant that provides seamless voice dictation to any application on your computer. It works in any app, offers global keyboard shortcuts, real-time transcription, and instant text insertion. It is smart and adaptive with features like custom dictionary, context awareness, multi-language support, and intelligent punctuation. Users can customize trigger keys, audio preferences, and privacy controls. It also offers data management features like a notes system, interaction history, cloud sync, and export capabilities. Ito is built as a modern Electron application with a multi-process architecture and utilizes technologies like React, TypeScript, Rust, gRPC, and AWS CDK.

Callytics

Callytics is an advanced call analytics solution that leverages speech recognition and large language models (LLMs) technologies to analyze phone conversations from customer service and call centers. By processing both the audio and text of each call, it provides insights such as sentiment analysis, topic detection, conflict detection, profanity word detection, and summary. These cutting-edge techniques help businesses optimize customer interactions, identify areas for improvement, and enhance overall service quality. When an audio file is placed in the .data/input directory, the entire pipeline automatically starts running, and the resulting data is inserted into the database. This is only a v1.1.0 version; many new features will be added, models will be fine-tuned or trained from scratch, and various optimization efforts will be applied.

structured-prompt-builder

A lightweight, browser-first tool for designing well-structured AI prompts with a clean UI, live previews, a local Prompt Library, and optional Gemini-powered prompt optimization. It supports structured fields like Role, Task, Audience, Style, Tone, Constraints, Steps, Inputs, and Few-shot examples. Users can copy/download prompts in Markdown, JSON, and YAML formats, and utilize model parameters like Temperature, Top-p, Max tokens, Presence & Frequency penalties. The tool also features a Local Prompt Library for saving, loading, duplicating, and deleting prompts, as well as a Gemini Optimizer for cleaning grammar/clarity without altering the schema. It offers dark/light friendly styles and a focused reading mode for long prompts.

botserver

General Bots is a self-hosted AI automation platform and LLM conversational platform focused on convention over configuration and code-less approaches. It serves as the core API server handling LLM orchestration, business logic, database operations, and multi-channel communication. The platform offers features like multi-vendor LLM API, MCP + LLM Tools Generation, Semantic Caching, Web Automation Engine, Enterprise Data Connectors, and Git-like Version Control. It enforces a ZERO TOLERANCE POLICY for code quality and security, with strict guidelines for error handling, performance optimization, and code patterns. The project structure includes modules for core functionalities like Rhai BASIC interpreter, security, shared types, tasks, auto task system, file operations, learning system, and LLM assistance.

Lynkr

Lynkr is a self-hosted proxy server that unlocks various AI coding tools like Claude Code CLI, Cursor IDE, and Codex CLI. It supports multiple LLM providers such as Databricks, AWS Bedrock, OpenRouter, Ollama, llama.cpp, Azure OpenAI, Azure Anthropic, OpenAI, and LM Studio. Lynkr offers cost reduction, local/private execution, remote or local connectivity, zero code changes, and enterprise-ready features. It is perfect for developers needing provider flexibility, cost control, self-hosted AI with observability, local model execution, and cost reduction strategies.

AutoAgents

AutoAgents is a cutting-edge multi-agent framework built in Rust that enables the creation of intelligent, autonomous agents powered by Large Language Models (LLMs) and Ractor. Designed for performance, safety, and scalability, AutoAgents provides a robust foundation for building complex AI systems that can reason, act, and collaborate. With AutoAgents you can create Cloud Native Agents, Edge Native Agents, and Hybrid Models as well. It is extensible enough that other ML models can be used to create complex pipelines using the actor framework.

Automodel

Automodel is a Python library for automating the process of building and evaluating machine learning models. It provides a set of tools and utilities to streamline the model development workflow, from data preprocessing to model selection and evaluation. With Automodel, users can easily experiment with different algorithms, hyperparameters, and feature engineering techniques to find the best model for their dataset. The library is designed to be user-friendly and customizable, allowing users to define their own pipelines and workflows. Automodel is suitable for data scientists, machine learning engineers, and anyone looking to quickly build and test machine learning models without the need for manual intervention.

For similar tasks

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

basehub

JavaScript / TypeScript SDK for BaseHub, the first AI-native content hub. **Features:**

- ✨ Infers types from your BaseHub repository... _meaning IDE autocompletion works great._

- 🏎️ No dependency on graphql... _meaning your bundle is more lightweight._

- 🌐 Works everywhere `fetch` is supported... _meaning you can use it anywhere._

novel

Novel is an open-source Notion-style WYSIWYG editor with AI-powered autocompletions. It allows users to easily create and edit content with the help of AI suggestions. The tool is built on a modern tech stack and supports cross-framework development. Users can deploy their own version of Novel to Vercel with one click and contribute to the project by reporting bugs or making feature enhancements through pull requests.

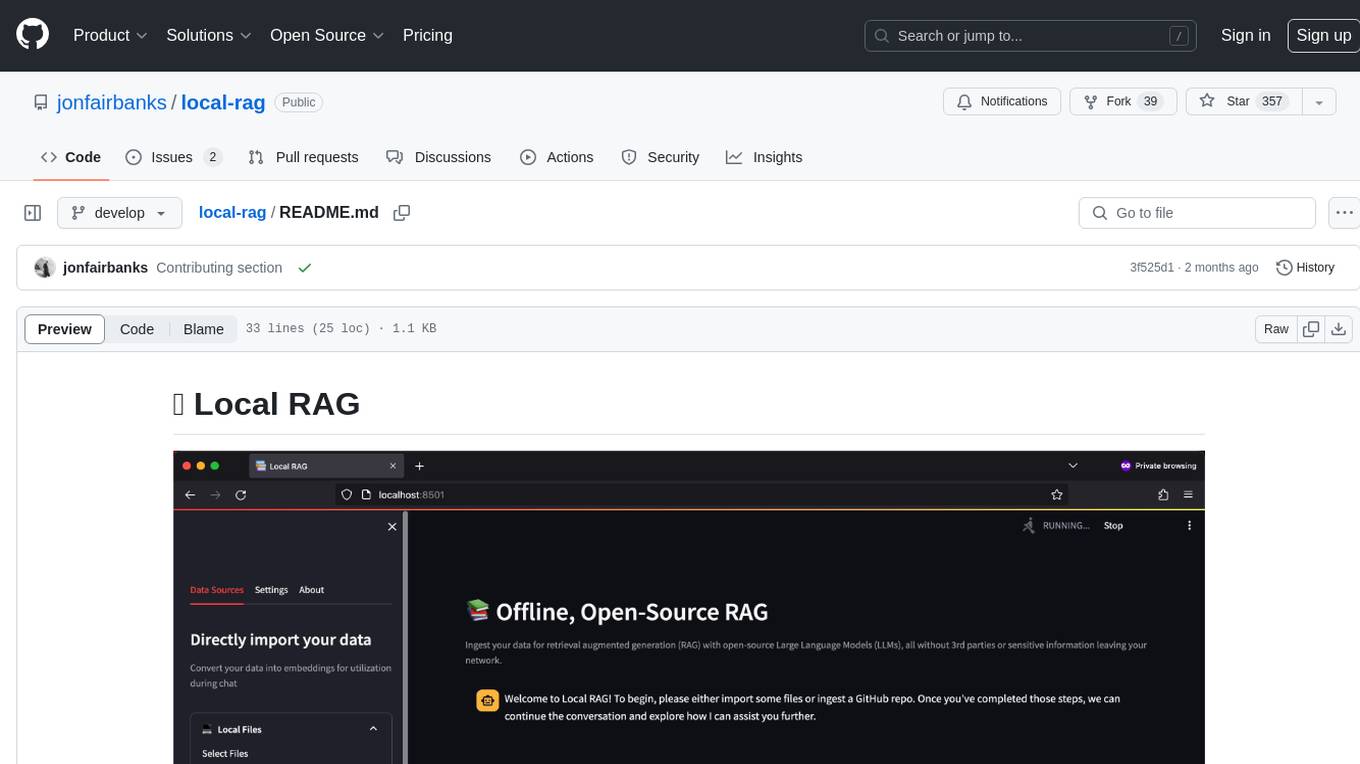

local-rag

Local RAG is an offline, open-source tool that allows users to ingest files for retrieval augmented generation (RAG) using large language models (LLMs) without relying on third parties or exposing sensitive data. It supports offline embeddings and LLMs, multiple sources including local files, GitHub repos, and websites, streaming responses, conversational memory, and chat export. Users can set up and deploy the app, learn how to use Local RAG, explore the RAG pipeline, check planned features, known bugs and issues, access additional resources, and contribute to the project.

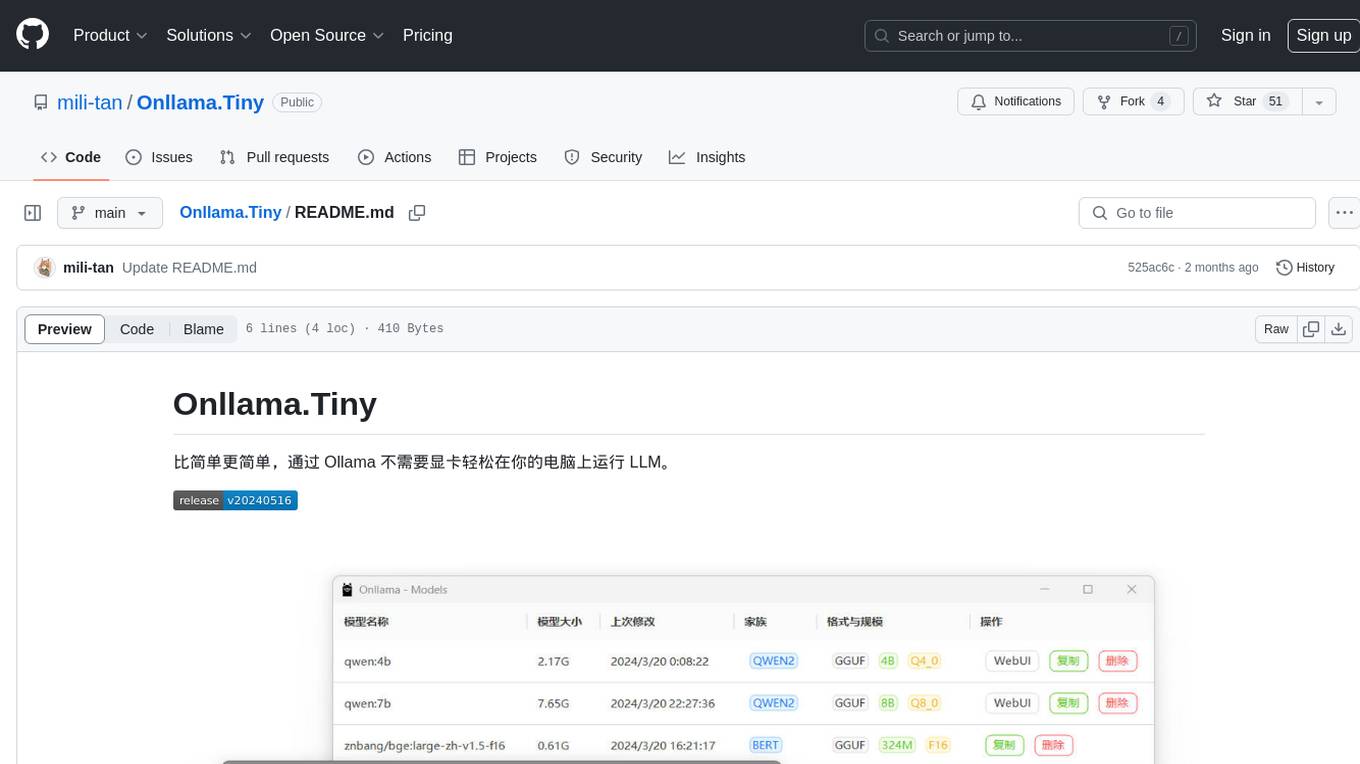

Onllama.Tiny

Onllama.Tiny is a lightweight tool that allows you to easily run LLM on your computer without the need for a dedicated graphics card. It simplifies the process of running LLM, making it more accessible for users. The tool provides a user-friendly interface and streamlines the setup and configuration required to run LLM on your machine. With Onllama.Tiny, users can quickly set up and start using LLM for various applications and projects.

ComfyUI-BRIA_AI-RMBG

ComfyUI-BRIA_AI-RMBG is an unofficial implementation of the BRIA Background Removal v1.4 model for ComfyUI. The tool supports batch processing, including video background removal, and introduces a new mask output feature. Users can install the tool using ComfyUI Manager or manually by cloning the repository. The tool includes nodes for automatically loading the Removal v1.4 model and removing backgrounds. Updates include support for batch processing and the addition of a mask output feature.

enterprise-h2ogpte

Enterprise h2oGPTe - GenAI RAG is a repository containing code examples, notebooks, and benchmarks for the enterprise version of h2oGPTe, a powerful AI tool for generating text based on the RAG (Retrieval-Augmented Generation) architecture. The repository provides resources for leveraging h2oGPTe in enterprise settings, including implementation guides, performance evaluations, and best practices. Users can explore various applications of h2oGPTe in natural language processing tasks, such as text generation, content creation, and conversational AI.

semantic-kernel-docs

The Microsoft Semantic Kernel Documentation GitHub repository contains technical product documentation for Semantic Kernel. It serves as the home of technical content for Microsoft products and services. Contributors can learn how to make contributions by following the Docs contributor guide. The project follows the Microsoft Open Source Code of Conduct.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.