cc-sdd

Kiro-compatible Spec-Driven Development for Claude Code, Gemini CLI and Cursor. High-quality slash commands that enforce a structured requirements → design → tasks workflow and steering, transforming how you build with AI.

Stars: 944

cc-sdd installs AI-Driven Development Life Cycle (AI-DLC) and Spec-Driven Development (SDD) workflows for Claude Code and Gemini CLI. It provides slash commands, Project Memory (steering) that captures your codebase, patterns, and preferences, a structured workflow with quality gates, and Kiro IDE compatibility. It suits feature development, code reviews, technical planning, and maintaining development standards. Installer options cover language and OS selection, previewing changes, safe updates with backups, and a custom specs directory, with support for macOS, Linux, and Windows.

README:

📦 Beta Release - Ready to use, actively improving. Report issues →

One command installs AI-DLC (AI-Driven Development Life Cycle) with SDD (Spec-Driven Development) workflows for Claude Code and Gemini CLI.

# Basic installation (default: Claude Code)

npx cc-sdd@latest

# With language: --lang en (English) or --lang ja (Japanese) or --lang zh-TW (Traditional Chinese)

# With OS: --os mac | --os windows | --os linux (if auto-detection fails)

npx cc-sdd@latest --lang ja --os mac

# With different agents: gemini-cli

npx cc-sdd@latest --gemini-cli

# Ready to go! Now Claude Code and Gemini CLI can leverage `/kiro:spec-init <what to build>` and the full SDD workflow

After running cc-sdd, you'll have:

- 8 powerful slash commands (/kiro:steering, /kiro:spec-requirements, etc.)

- Project Memory (steering) - AI learns your codebase, patterns, and preferences

- Structured AI-DLC workflow with quality gates and approvals

- Spec-Driven Development methodology built-in

- Kiro IDE compatibility for seamless spec management

Perfect for: Feature development, code reviews, technical planning, and maintaining development standards across your team.

Includes your project context, Project Memory (steering), and development patterns for Claude Code: requirements → design → tasks → implementation. Kiro IDE compatible: reuse Kiro-style SDD specs and workflows seamlessly.

[Claude Code/Gemini CLI] A one-liner that sets up AI-DLC (AI-Driven Development Life Cycle) and Spec-Driven Development workflows. It places a set of slash commands and a configuration file (CLAUDE.md for Claude Code, GEMINI.md for Gemini CLI) in the project root, and includes your project context, development patterns (requirements → design → tasks → implementation), and Project Memory (steering).

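📝 Related Articles

Kiroの仕様書駆動開発プロセスをClaude Codeで徹底的に再現した ("Thoroughly recreating Kiro's spec-driven development process in Claude Code") - Zenn article (Japanese)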

📖 Project Overview (Spec-Driven Development workflow) • 日本語: README_ja.md • English: README_en.md • 繁體中文: README_zh-TW.md

Transform your agentic development workflow with Spec-Driven Development

- ✅ Claude Code - Fully supported with all 8 custom slash commands and CLAUDE.md

- ✅ Gemini CLI - Fully supported with all 8 custom commands and GEMINI.md

- 📅 More agents - Additional AI coding assistants planned

Currently optimized for Claude Code. Use --agent claude-code (default) for full functionality.

Step 0: Setup Project Memory (Recommended)

# Teach Claude Code about your project

/kiro:steering

SDD Development Flow:

# 1. Start a new feature spec

/kiro:spec-init User authentication with OAuth and 2FA

# 2. Generate detailed requirements

/kiro:spec-requirements user-auth

# 3. Create technical design (after requirements review)

/kiro:spec-design user-auth -y

# 4. Break down into tasks (after design review)

/kiro:spec-tasks user-auth -y

# 5. Implement with TDD (after task review)

/kiro:spec-impl user-auth 1.1,1.2,1.3

Quality Gates: Each phase requires human approval before proceeding (use -y to auto-approve).
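The gating rule above can be modeled in a few lines of shell. This is only a sketch of the ordering rule, not how cc-sdd implements its gates: the helper names `previous_phase` and `can_start` are hypothetical, and the `auto` argument stands in for passing -y.

```shell
#!/bin/sh
# Hypothetical model of the phase gates; not cc-sdd's actual code.
# A phase may start only once the phase before it has been approved,
# unless auto-approval (the equivalent of -y) is requested.

# previous_phase PHASE -> prints the phase that must be approved first
previous_phase() {
  case "$1" in
    requirements)   echo "" ;;
    design)         echo "requirements" ;;
    tasks)          echo "design" ;;
    implementation) echo "tasks" ;;
    *) echo "unknown phase: $1" >&2; return 1 ;;
  esac
}

# can_start PHASE APPROVED_PHASES AUTO -> prints "yes" or "no"
# APPROVED_PHASES is a space-separated list; AUTO is "yes" or "no".
can_start() {
  phase="$1"; approved="$2"; auto="$3"
  prev=$(previous_phase "$phase") || return 1
  # The first phase has no gate; auto-approval skips the check.
  if [ -z "$prev" ] || [ "$auto" = "yes" ]; then
    echo "yes"
    return 0
  fi
  case " $approved " in
    *" $prev "*) echo "yes" ;;
    *)           echo "no" ;;
  esac
}

can_start design "" no               # prints: no  (requirements not approved)
can_start design "requirements" no   # prints: yes (approved by a human)
can_start tasks "requirements" yes   # prints: yes (auto-approved)
```

Keeping the approved phases as a plain space-separated list keeps the sketch POSIX-compatible; a real tool would more likely persist approval state alongside each spec.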

# Choose language and OS

npx cc-sdd@latest --lang ja --os mac

# Preview changes before applying

npx cc-sdd@latest --dry-run

# Safe update with backup

npx cc-sdd@latest --backup --overwrite force

# Custom specs directory

npx cc-sdd@latest --kiro-dir docs/specs

✅ AI-DLC Integration - Complete AI-Driven Development Life Cycle

✅ Project Memory - Steering that learns your codebase and patterns

✅ Spec-Driven Development - Structured requirements → design → tasks → implementation

✅ Cross-Platform - macOS, Linux, and Windows support with auto-detection (Linux reuses mac templates)

✅ Multi-Language - Japanese, English, Traditional Chinese

✅ Safe Updates - Interactive prompts with backup options
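A preview-then-apply routine built from the installer flags shown earlier might look like the sketch below. It assumes that --lang and --kiro-dir can be combined with --dry-run or with --backup --overwrite force, which the README only shows separately. `build_cmd` is a hypothetical helper that prints the command rather than running it, so the output can be reviewed first.

```shell
#!/bin/sh
# Sketch: compose a cc-sdd install command from flags that appear in this README.
# build_cmd is a hypothetical helper, not part of cc-sdd. It only prints the
# command; combining these flags in one call is an assumption.

# build_cmd LANG SPECS_DIR MODE -> prints the npx command line
build_cmd() {
  lang="$1"       # en | ja | zh-TW
  specs_dir="$2"  # for example docs/specs
  mode="$3"       # preview | apply
  cmd="npx cc-sdd@latest --lang $lang --kiro-dir $specs_dir"
  case "$mode" in
    preview) cmd="$cmd --dry-run" ;;
    apply)   cmd="$cmd --backup --overwrite force" ;;
    *) echo "unknown mode: $mode" >&2; return 1 ;;
  esac
  echo "$cmd"
}

build_cmd ja docs/specs preview   # preview the changes first
build_cmd ja docs/specs apply     # then apply, keeping a backup
```

Printing the command instead of executing it keeps the preview step explicit; copy the printed line into the terminal once it looks right.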

This repository contains the cc-sdd NPM package located in tools/cc-sdd/.

For detailed documentation, installation instructions, and usage examples, see:

- Tool Documentation - Complete cc-sdd tool guide

- Japanese Documentation - 日本語版ツール説明

claude-code-spec/

├── tools/cc-sdd/ # Main cc-sdd NPM package

│ ├── src/ # TypeScript source code

│ ├── templates/ # Agent templates (Claude Code, Gemini CLI)

│ ├── package.json # Package configuration

│ └── README.md # Tool documentation

├── docs/ # Documentation

├── .claude/ # Example Claude Code commands

├── .gemini/ # Example Gemini CLI commands

├── README.md # This file (English)

├── README_ja.md # Japanese project README

└── README_zh-TW.md # Traditional Chinese project README

MIT License

Similar Open Source Tools

opcode

opcode is a powerful desktop application built with Tauri 2 that serves as a command center for interacting with Claude Code. It offers a visual GUI for managing Claude Code sessions, creating custom agents, tracking usage, and more. Users can navigate projects, create specialized AI agents, monitor usage analytics, manage MCP servers, create session checkpoints, edit CLAUDE.md files, and more. The tool bridges the gap between command-line tools and visual experiences, making AI-assisted development more intuitive and productive.

claude-code-plugins-plus-skills

Claude Code Skills & Plugins Hub is a comprehensive marketplace for agent skills and plugins, offering 1537 production-ready agent skills and 270 total plugins. It provides a learning lab with guides, diagrams, and examples for building production agent workflows. The package manager CLI allows users to discover, install, and manage plugins from their terminal, with features like searching, listing, installing, updating, and validating plugins. The marketplace is not on GitHub Marketplace and does not support built-in monetization. It is community-driven, actively maintained, and focuses on quality over quantity, aiming to be the definitive resource for Claude Code plugins.

claude-007-agents

Claude Code Agents is an open-source AI agent system designed to enhance development workflows by providing specialized AI agents for orchestration, resilience engineering, and organizational memory. The agents offer specialized expertise across technologies, backed by organizational memory and an agent orchestration system. The system includes features such as engineering excellence by design, advanced orchestration system, Task Master integration, live MCP integrations, professional-grade workflows, and organizational intelligence. It is suitable for solo developers, small teams, enterprise teams, and open-source projects. The system requires a one-time bootstrap setup for each project to analyze the tech stack, select optimal agents, create configuration files, set up Task Master integration, and validate system readiness.

AgriTech

AgriTech is an AI-powered smart agriculture platform designed to assist farmers with crop recommendations, yield prediction, plant disease detection, and community-driven collaboration—enabling sustainable and data-driven farming practices. It offers AI-driven decision support for modern agriculture, early-stage plant disease detection, crop yield forecasting using machine learning models, and a collaborative ecosystem for farmers and stakeholders. The platform includes features like crop recommendation, yield prediction, disease detection, an AI chatbot for platform guidance and agriculture support, a farmer community, and shopkeeper listings. AgriTech's AI chatbot provides comprehensive support for farmers with features like platform guidance, agriculture support, decision making, image analysis, and 24/7 support. The tech stack includes frontend technologies like HTML5, CSS3, JavaScript, backend technologies like Python (Flask) and optional Node.js, machine learning libraries like TensorFlow, Scikit-learn, OpenCV, and database & DevOps tools like MySQL, MongoDB, Firebase, Docker, and GitHub Actions.

sandboxed.sh

sandboxed.sh is a self-hosted cloud orchestrator for AI coding agents that provides isolated Linux workspaces with Claude Code, OpenCode & Amp runtimes. It allows users to hand off entire development cycles, run multi-day operations unattended, and keep sensitive data local by analyzing data against scientific literature. The tool features dual runtime support, mission control for remote agent management, isolated workspaces, a git-backed library, MCP registry, and multi-platform support with a web dashboard and iOS app.

finite-monkey-engine

FiniteMonkey is an advanced vulnerability mining engine powered purely by GPT, requiring no prior knowledge base or fine-tuning. Its effectiveness significantly surpasses most current related research approaches. The tool is task-driven, prompt-driven, and focuses on prompt design, leveraging 'deception' and hallucination as key mechanics. It has helped identify vulnerabilities worth over $60,000 in bounties. The tool requires PostgreSQL database, OpenAI API access, and Python environment for setup. It supports various languages like Solidity, Rust, Python, Move, Cairo, Tact, Func, Java, and Fake Solidity for scanning. FiniteMonkey is best suited for logic vulnerability mining in real projects, not recommended for academic vulnerability testing. GPT-4-turbo is recommended for optimal results with an average scan time of 2-3 hours for medium projects. The tool provides detailed scanning results guide and implementation tips for users.

llxprt-code

LLxprt Code is an AI-powered coding assistant that works with any LLM provider, offering a command-line interface for querying and editing codebases, generating applications, and automating development workflows. It supports various subscriptions, provider flexibility, top open models, local model support, and a privacy-first approach. Users can interact with LLxprt Code in both interactive and non-interactive modes, leveraging features like subscription OAuth, multi-account failover, load balancer profiles, and extensive provider support. The tool also allows for the creation of advanced subagents for specialized tasks and integrates with the Zed editor for in-editor chat and code selection.

evi-run

evi-run is a powerful, production-ready multi-agent AI system built on Python using the OpenAI Agents SDK. It offers instant deployment, ultimate flexibility, built-in analytics, Telegram integration, and scalable architecture. The system features memory management, knowledge integration, task scheduling, multi-agent orchestration, custom agent creation, deep research, web intelligence, document processing, image generation, DEX analytics, and Solana token swap. It supports flexible usage modes like private, free, and pay mode, with upcoming features including NSFW mode, task scheduler, and automatic limit orders. The technology stack includes Python 3.11, OpenAI Agents SDK, Telegram Bot API, PostgreSQL, Redis, and Docker & Docker Compose for deployment.

astrsk

astrsk is a tool that pushes the boundaries of AI storytelling by offering advanced AI agents, customizable response formatting, and flexible prompt editing for immersive roleplaying experiences. It provides complete AI agent control, a visual flow editor for conversation flows, and ensures 100% local-first data storage. The tool is true cross-platform with support for various AI providers and modern technologies like React, TypeScript, and Tailwind CSS. Coming soon features include cross-device sync, enhanced session customization, and community features.

ito

Ito is an intelligent voice assistant that provides seamless voice dictation to any application on your computer. It works in any app, offers global keyboard shortcuts, real-time transcription, and instant text insertion. It is smart and adaptive with features like custom dictionary, context awareness, multi-language support, and intelligent punctuation. Users can customize trigger keys, audio preferences, and privacy controls. It also offers data management features like a notes system, interaction history, cloud sync, and export capabilities. Ito is built as a modern Electron application with a multi-process architecture and utilizes technologies like React, TypeScript, Rust, gRPC, and AWS CDK.

flashinfer

FlashInfer is a library for Large Language Models that provides high-performance implementations of LLM GPU kernels such as FlashAttention, PageAttention and LoRA. FlashInfer focuses on LLM serving and inference, and delivers state-of-the-art performance across diverse scenarios.

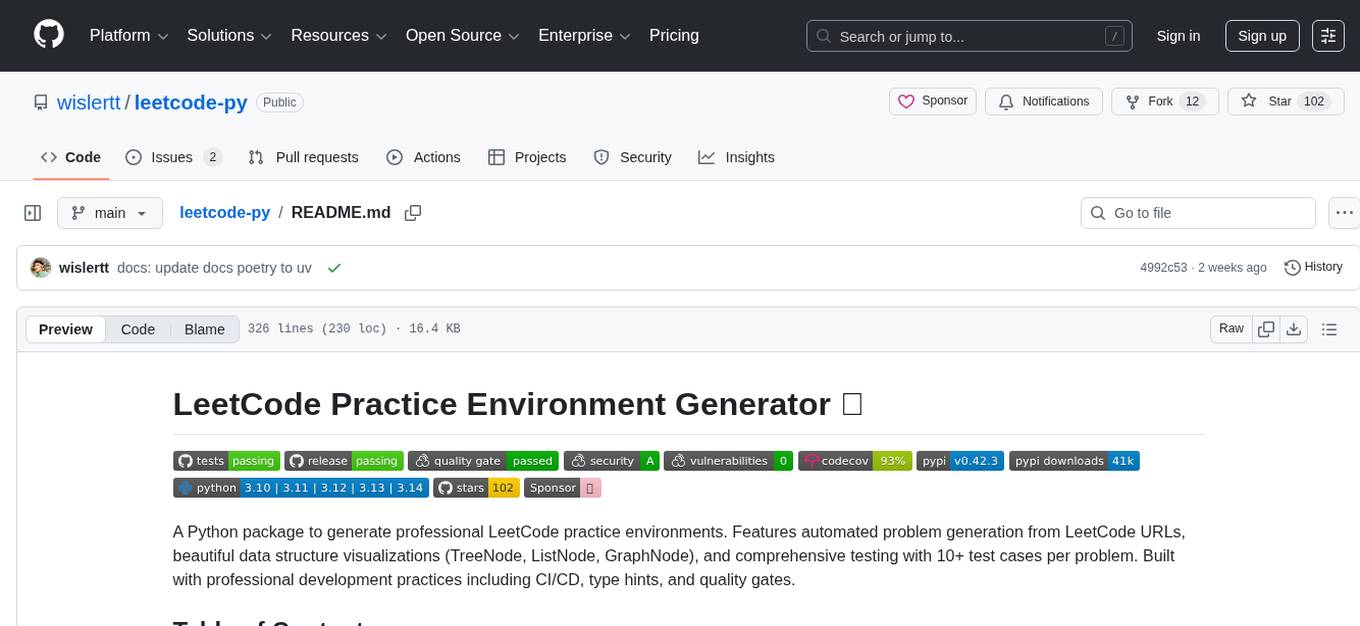

leetcode-py

A Python package to generate professional LeetCode practice environments. Features automated problem generation from LeetCode URLs, beautiful data structure visualizations (TreeNode, ListNode, GraphNode), and comprehensive testing with 10+ test cases per problem. Built with professional development practices including CI/CD, type hints, and quality gates. The tool provides a modern Python development environment with production-grade features such as linting, test coverage, logging, and CI/CD pipeline. It also offers enhanced data structure visualization for debugging complex structures, flexible notebook support, and a powerful CLI for generating problems anywhere.

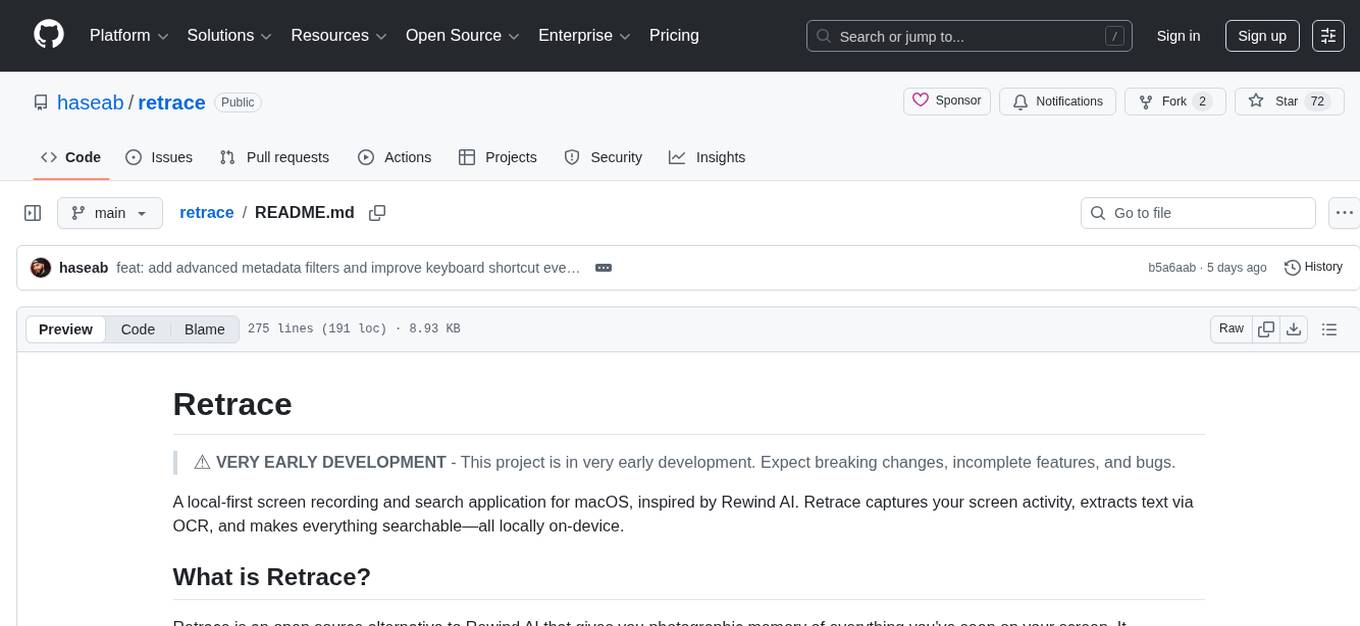

retrace

Retrace is a local-first screen recording and search application for macOS, inspired by Rewind AI. It captures screen activity, extracts text via OCR, and makes everything searchable locally on-device. The project is in very early development, offering features like continuous screen capture, OCR text extraction, full-text search, timeline viewer, dashboard analytics, Rewind AI import, settings panel, global hotkeys, HEVC video encoding, search highlighting, privacy controls, and more. Built with a modular architecture, Retrace uses Swift 5.9+, SwiftUI, Vision framework, SQLite with FTS5, HEVC video encoding, CryptoKit for encryption, and more. Future releases will include features like audio transcription and semantic search. Retrace requires macOS 13.0+ (Apple Silicon required) and Xcode 15.0+ for building from source, with permissions for screen recording and accessibility. Contributions are welcome, and the project is licensed under the MIT License.

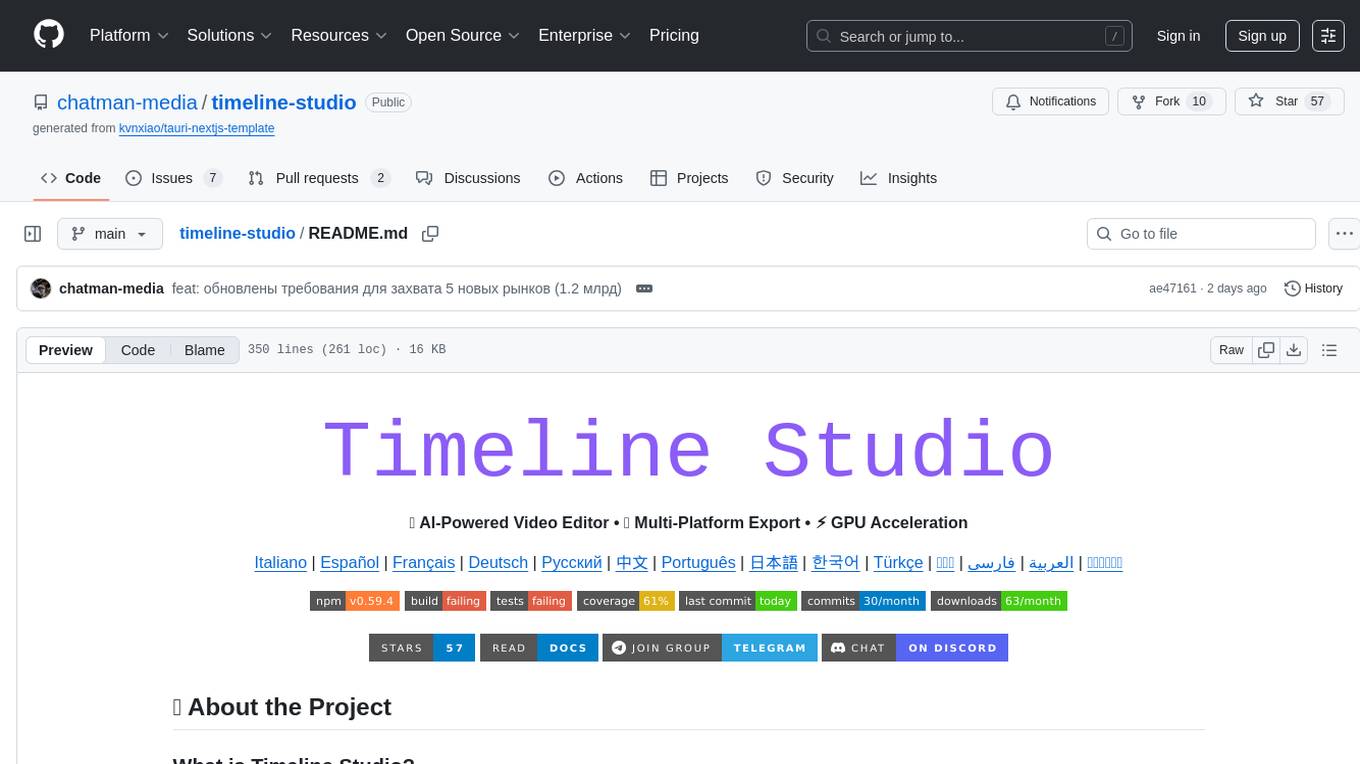

timeline-studio

Timeline Studio is a next-generation professional video editor with AI integration that automates content creation for social media. It combines the power of desktop applications with the convenience of web interfaces. With 257 AI tools, GPU acceleration, plugin system, multi-language interface, and local processing, Timeline Studio offers complete video production automation. Users can create videos for various social media platforms like TikTok, YouTube, Vimeo, Telegram, and Instagram with optimized versions. The tool saves time, understands trends, provides professional quality, and allows for easy feature extension through plugins. Timeline Studio is open source, transparent, and offers significant time savings and quality improvements for video editing tasks.

For similar tasks

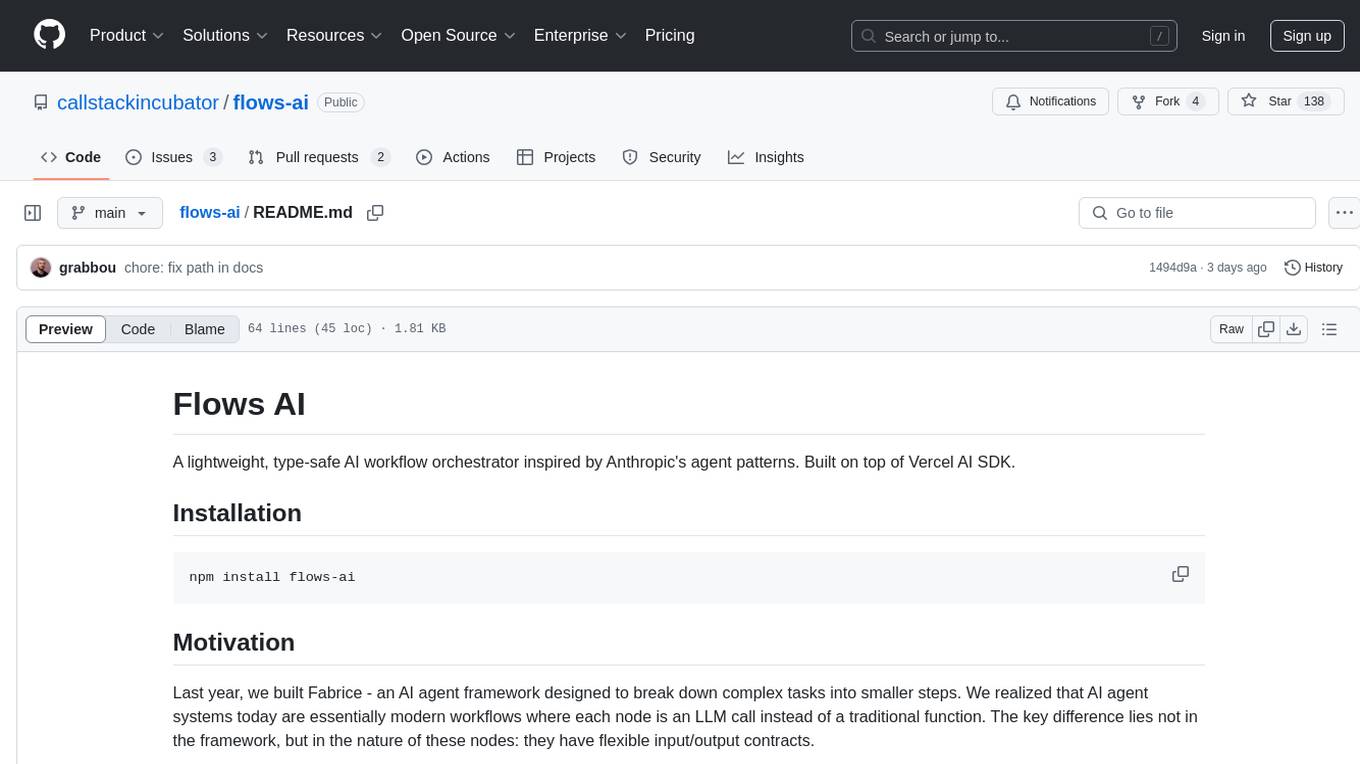

flows-ai

Flows AI is a lightweight, type-safe AI workflow orchestrator inspired by Anthropic's agent patterns and built on top of Vercel AI SDK. It provides a simple and deterministic way to build AI workflows by connecting different input/outputs together, either explicitly defining workflows or dynamically breaking down complex tasks using an orchestrator agent. The library is designed without classes or state, focusing on flexible input/output contracts for nodes.

glisten-ai

Glisten-ai Tutorial Course is the final code for a YouTube tutorial course demonstrating the creation of a dark Next.js, Prismic, Tailwind, TypeScript, and GSAP website. The repository contains the code used in the tutorial, providing a practical example for building websites using these technologies.

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

code2prompt

code2prompt is a command-line tool that converts your codebase into a single LLM prompt with a source tree, prompt templating, and token counting. It automates generating LLM prompts from codebases of any size, customizing prompt generation with Handlebars templates, respecting .gitignore, filtering and excluding files using glob patterns, displaying token count, including Git diff output, copying prompt to clipboard, saving prompt to an output file, excluding files and folders, adding line numbers to source code blocks, and more. It helps streamline the process of creating LLM prompts for code analysis, generation, and other tasks.

ClashRoyaleBuildABot

Clash Royale Build-A-Bot is a project that allows users to build their own bot to play Clash Royale. It provides an advanced state generator that accurately returns detailed information using cutting-edge technologies. The project includes tutorials for setting up the environment, building a basic bot, and understanding state generation. It also offers updates such as replacing YOLOv5 with YOLOv8 unit model and enhancing performance features like placement and elixir management. The future roadmap includes plans to label more images of diverse cards, add a tracking layer for unit predictions, publish tutorials on Q-learning and imitation learning, release the YOLOv5 training notebook, implement chest opening and card upgrading features, and create a leaderboard for the best bots developed with this repository.

RA.Aid

RA.Aid is an AI software development agent powered by `aider` and advanced reasoning models like `o1`. It combines `aider`'s code editing capabilities with LangChain's agent-based task execution framework to provide an intelligent assistant for research, planning, and implementation of multi-step development tasks. It handles complex programming tasks by breaking them down into manageable steps, running shell commands automatically, and leveraging expert reasoning models like OpenAI's o1. RA.Aid is designed for everyday software development, offering features such as multi-step task planning, automated command execution, and the ability to handle complex programming tasks beyond single-shot code edits.

claudine

Claudine is an AI agent designed to reason and act autonomously, leveraging the Anthropic API, Unix command line tools, HTTP, local hard drive data, and internet data. It can administer computers, analyze files, implement features in source code, create new tools, and gather contextual information from the internet. Users can easily add specialized tools. Claudine serves as a blueprint for implementing complex autonomous systems, with potential for customization based on organization-specific needs. The tool is based on the anthropic-kotlin-sdk and aims to evolve into a versatile command line tool similar to 'git', enabling branching sessions for different tasks.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.