sdialog

Synthetic Dialog Generation and Analysis with LLMs

Stars: 125

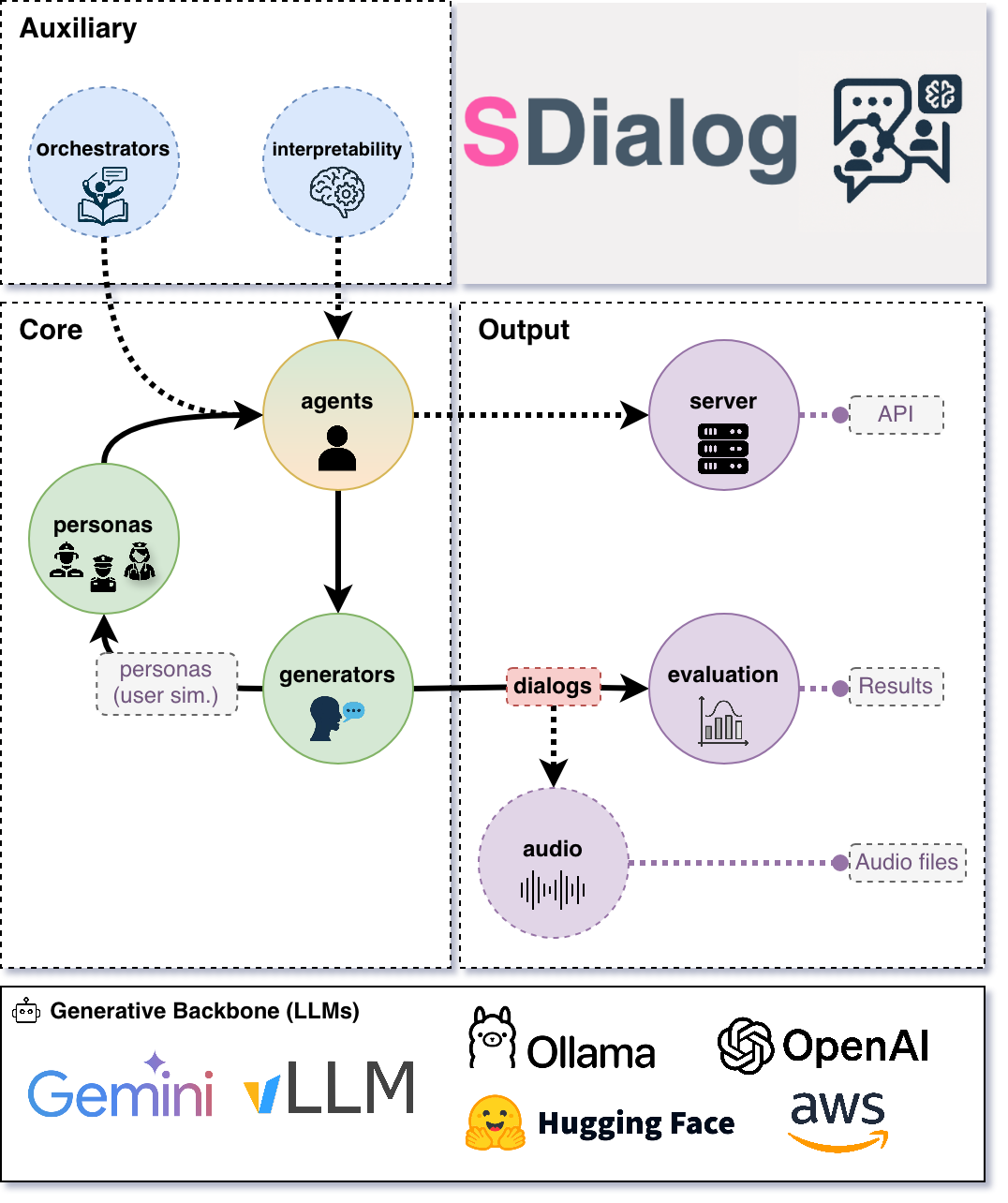

SDialog is an MIT-licensed open-source toolkit for building, simulating, and evaluating LLM-based conversational agents end-to-end. It aims to bridge agent construction, user simulation, dialog generation, and evaluation in a single reproducible workflow, enabling the generation of reliable, controllable dialog systems or data at scale. The toolkit standardizes a Dialog schema, offers persona-driven multi-agent simulation with LLMs, provides composable orchestration for precise control over behavior and flow, includes built-in evaluation metrics, and offers mechanistic interpretability. It allows for easy creation of user-defined components and interoperability across various AI platforms.

README:

Quick links: Website • GitHub • Docs • API • ArXiv paper • Demo (video) • Tutorials • Datasets (HF) • Issues

SDialog is an MIT-licensed open-source toolkit for building, simulating, and evaluating LLM-based conversational agents end-to-end. It aims to bridge agent construction → user simulation → dialog generation → evaluation in a single reproducible workflow, so you can generate reliable, controllable dialog systems or data at scale.

It standardizes a Dialog schema and offers persona‑driven multi‑agent simulation with LLMs, composable orchestration, built‑in metrics, and mechanistic interpretability.

- Standard dialog schema with JSON import/export (aiming to standardize dialog dataset formats with your help 🙏)

- Persona‑driven multi‑agent simulation with contexts, tools, and thoughts

- Composable orchestration for precise control over behavior and flow

- Built‑in evaluation (metrics + LLM‑as‑judge) for comparison and iteration

- Native mechanistic interpretability (inspect and steer activations)

- Easy creation of user-defined components by inheriting from base classes (personas, metrics, orchestrators, etc.)

- Interoperability across OpenAI, Hugging Face, Ollama, AWS Bedrock, Google GenAI, Anthropic, and more.

If you are building conversational systems, benchmarking dialog models, producing synthetic training corpora, simulating diverse users to test or probe conversational systems, or analyzing internal model behavior, SDialog provides an end‑to‑end workflow.

pip install sdialogAlternatively, a ready-to-use Apptainer image (.sif) with SDialog and all dependencies is available on Hugging Face and can be downloaded here.

apptainer exec --nv sdialog.sif python3 -c "import sdialog; print(sdialog.__version__)"[!NOTE] This Apptainer image also has the Ollama server preinstalled.

Here's a short, hands‑on example: a support agent helps a customer disputing a double charge. We add a small refund rule and two simple tools, generate three dialogs for evaluation, then serve the agent on port 1333 for Open WebUI or any OpenAI‑compatible client.

import sdialog

from sdialog import Context

from sdialog.agents import Agent

from sdialog.personas import SupportAgent, Customer

from sdialog.orchestrators import SimpleReflexOrchestrator

# First, let's set our preferred default backend:model and parameters

sdialog.config.llm("openai:gpt-4.1", temperature=1, api_key="YOUR_KEY") # or export OPENAI_API_KEY=YOUR_KEY

# sdialog.config.llm("ollama:qwen3:14b") # etc.

# Let's define our personas (use built-ins like in this example, or create your own!)

support_persona = SupportAgent(name="Ava", politeness="high", communication_style="friendly")

customer_persona = Customer(name="Riley", issue="double charge", desired_outcome="refund")

# (Optional) Let's define two mock tools (just plain Python functions) for our support agent

def verify_account(user_id):

"""Verify user account by user id."""

return {"user_id": user_id, "verified": True}

def refund(amount):

"""Process a refund for the given amount."""

return {"status": "refunded", "amount": amount}

# (Optional) Let's also include a small rule-based orchestrator for our support agent

react_refund = SimpleReflexOrchestrator(

condition=lambda utt: "refund" in utt.lower(),

instruction="Follow refund policy; verify account, apologize, refund.",

)

# Now, let's create the agents!

support_agent = Agent(

persona=support_persona,

think=True, # Let's also enable thinking mode

tools=[verify_account, refund],

name="Support"

)

simulated_customer = Agent(

persona=customer_persona,

first_utterance="Hi!",

name="Customer"

)

# Since we have one orchestrator, let's attach it to our target agent

support_agent = support_agent | react_refund

# Let's generate 3 dialogs between them! (we can evaluate them later)

# (Optional) Let's also define a concrete conversational context for the agents in these dialogs

web_chat = Context(location="chat", environment="web", circumstances="billing")

for ix in range(3):

dialog = simulated_customer.dialog_with(support_agent, context=web_chat) # Generate the dialog

dialog.to_file(f"dialog_{ix}.json") # Save it

dialog.print(all=True) # And pretty print it with all its events (thoughts, orchestration, etc.)

# Finally, let's serve our support agent to interact with real users (OpenAI-compatible API)

# Point Open WebUI or any OpenAI-compatible client to: http://localhost:1333

support_agent.serve(port=1333)[!TIP]

- Choose your LLMs and backends freely.

- Personas and context can be automatically generated (e.g. generate different customer profiles!).

[!NOTE]

- See "agents with tools and thoughts" tutorial for a more complete example.

- See Serving Agents via REST API for more details on server options.

Probe OpenAI‑compatible deployed systems with controllable simulated users and capture dialogs for evaluation.

You can also use SDialog as a controllable test harness for any OpenAI‑compatible system such as vLLM-based ones by role‑playing realistic or adversarial users against your deployed system:

- Black‑box functional checks (Does the system follow instructions? Handle edge cases?)

- Persona / use‑case coverage (Different goals, emotions, domains)

- Regression testing (Run the same persona batch each release; diff dialogs)

- Safety / robustness probing (Angry, confused, or noisy users)

- Automated evaluation (Pipe generated dialogs directly into evaluators - See Evaluation section below)

Core idea: wrap your system as an Agent using openai: as the prefix of your model name string, talk to it with simulated user Agents, and capture Dialogs you can save, diff, and score.

Below is a minimal example where our simulated customer interacts once with your hypothetical remote endpoint:

# Our remote system (your conversational backend exposing an OpenAI-compatible API)

system = Agent(

model="openai:your/model", # Model name exposed by your server

openai_api_base="http://your-endpoint.com:8000/v1", # Base URL of the service

openai_api_key="EMPTY", # Or a real key if required

name="System"

)

# Let's make our simulated customer talk with the system

dialog = simulated_customer.dialog_with(system)

dialog.to_file("dialog_0.json")Import, export, and transform dialogs from JSON, text, CSV, or Hugging Face datasets.

Dialogs are rich objects with helper methods (filter, slice, transform, etc.) that can be easily exported and loaded using different methods:

from sdialog import Dialog

# Load from JSON (generated by SDialog using `to_file()`)

dialog = Dialog.from_file("dialog_0.json")

# Load from HuggingFace Hub datasets

dialogs = Dialog.from_huggingface("sdialog/Primock-57")

# Create from plain text files or strings - perfect for converting existing datasets!

dialog_from_txt = Dialog.from_str("""

Alice: Hello there! How are you today?

Bob: I'm doing great, thanks for asking.

Alice: That's wonderful to hear!

""")

# Or, equivalently if the content is in a txt file

dialog_from_txt = Dialog.from_file("conversation.txt")

# Load from CSV files with custom column names

dialog_from_csv = Dialog.from_file("conversation.csv",

csv_speaker_col="speaker",

csv_text_col="value",)

# All Dialog objects have rich manipulation methods

dialog.filter("Alice").rename_speaker("Alice", "Customer").upper().to_file("processed.json")

avg_words_turn = sum(len(turn) for turn in dialog) / len(dialog)See Dialog section in the documentation for more information.

Score dialogs with built‑in metrics and LLM judges, and compare datasets with aggregators and plots.

Dialogs can be evaluated using the different components available inside the sdialog.evaluation module.

Use built‑in metrics—conversational features, readability, embedding-based, LLM-as-judge, flow-based, functional correctness (30+ metrics across six categories)—or easily create new ones, then aggregate and compare datasets (sets of dialogs) via Comparator.

from sdialog import Dialog

from sdialog.evaluation import LLMJudgeYesNo, ToolSequenceValidator

from sdialog.evaluation import FrequencyEvaluator, Comparator

# Two quick checks: did the agent ask for verification, and did it call tools in order?

judge_verify = LLMJudgeYesNo(

"Did the support agent try to verify the customer?",

reason=True,

)

tool_seq = ToolSequenceValidator(["verify_account", "refund"])

comparator = Comparator([

FrequencyEvaluator(judge_verify, name="Asked for verification"),

FrequencyEvaluator(tool_seq, name="Correct tool order"),

])

results = comparator({

"model-A": Dialog.from_folder("output/model-A"),

"model-B": Dialog.from_folder("output/model-B"),

})

comparator.plot()[!TIP] See evaluation tutorial.

Capture per‑token activations and steer models via Inspectors for analysis and interventions.

Attach Inspectors to capture per‑token activations and optionally steer (add/ablate directions) to analyze or intervene in model behavior.

import sdialog

from sdialog.interpretability import Inspector

from sdialog.agents import Agent

sdialog.config.llm("huggingface:meta-llama/Llama-3.2-3B-Instruct")

agent = Agent(name="Bob")

inspector = Inspector(target="model.layers.15")

agent = agent | inspector

agent("How are you?")

agent("Cool!")

# Let's get the last response's first token activation vector!

act = inspector[-1][0].act # [response index][token index]Steering intervention (subtracting a direction):

import torch

anger_direction = torch.load("anger_direction.pt") # A direction vector (e.g., PCA / difference-in-mean vector)

agent_steered = agent | inspector - anger_direction # Ablate the anger direction from the target activations

agent_steered("You are an extremely upset assistant") # Agent "can't get angry anymore" :)[!TIP] See the tutorial on using SDialog to remove the refusal capability from LLaMA 3.2.

Convert text dialogs to audio conversations with speech synthesis, voice assignment, and acoustic simulation.

SDialog can transform text dialogs into audio conversations with a simple one-line command. The audio module supports:

- Text-to-Speech (TTS): Kokoro and HuggingFace models (with planned support for better TTS like IndexTTS and API-based TTS like OpenAI)

- Voice databases: Automatic or manual voice assignment based on persona attributes (age, gender, language)

- Acoustic simulation: Room acoustics simulation for realistic spatial audio

- Microphone simulation: Professional microphones simulation from brands like Shure, Sennheiser, and Sony

- Multiple formats: Export to WAV, MP3, or FLAC with custom sampling rates

- Multi-stage pipeline: Step 1 (tts and concatenate utterances) and Step 2/3 (position based timeline generation and room acoustics)

Generate audio from any dialog easily with just a few lines of code:

Install dependencies (see the documentation for complete setup instructions):

apt-get install sox ffmpeg espeak-ng

pip install sdialog[audio]Then, simply:

from sdialog import Dialog

dialog = Dialog.from_file("my_dialog.json")

# Convert to audio with default settings (HuggingFace TTS - single speaker)

audio_dialog = dialog.to_audio(perform_room_acoustics=True)

print(audio_dialog.display())

# Or customize the audio generation

audio_dialog = dialog.to_audio(

perform_room_acoustics=True,

audio_file_format="mp3",

re_sampling_rate=16000,

)

print(audio_dialog.display())[!TIP] See the Audio Generation documentation for more details. For usage examples including acoustic simulation, room generation, and voice databases, check out the audio tutorials.

- ArXiv paper

- Demo (video)

- Tutorials

- API reference

- Documentation

- Documentation for AI coding assistants like Copilot is also available at

https://sdialog.readthedocs.io/en/latest/llm.txtfollowing the llm.txt specification. In your Copilot chat, simply use:#fetch https://sdialog.readthedocs.io/en/latest/llm.txt Your prompt goes here...(e.g. Write a python script using sdialog to have an agent for criminal investigation, define its persona, tools, orchestration...)

To accelerate open, rigorous, and reproducible conversational AI research, SDialog invites the community to collaborate and help shape the future of open dialog generation.

- 🗂️ Dataset Standardization: Help convert existing dialog datasets to SDialog format. Currently, each dataset stores dialogs in different formats, making cross-dataset analysis and model evaluation challenging. Converted datasets are made available as Hugging Face datasets in the SDialog organization for easy access and integration.

- 🔧 Component Development: Create new personas, orchestrators, evaluators, generators, or backend integrations

- 📊 Evaluation & Benchmarks: Design new metrics, evaluation frameworks, or comparative studies

- 🧠 Interpretability Research: Develop new analysis tools, steering methods, or mechanistic insights

- 📖 Documentation & Tutorials: Improve guides, add examples, or create educational content

- 🐛 Issues & Discussions: Report bugs, request features, or share research ideas and use cases

[!NOTE] Example: Check out Primock-57, a sample dataset already available in SDialog format on Hugging Face.

If you have a dialog dataset you'd like to convert to SDialog format, need help with the conversion process, or want to contribute in any other way, please open an issue or reach out to us. We're happy to help and collaborate!

See CONTRIBUTING.md. We welcome issues, feature requests, and pull requests. If you want to contribute to the project, please open an issue or submit a PR, and help us make SDialog better 👍. If you find SDialog useful, please consider starring ⭐ the GitHub repository to support the project and increase its visibility 😄.

This project follows the all-contributors specification. All-contributors list:

|

Sergio Burdisso 💻 🤔 📖 ✅ |

Labrak Yanis 💻 🤔 |

Séverin 💻 🤔 ✅ |

Ricard Marxer 💻 🤔 |

Thomas Schaaf 🤔 💻 |

David Liu 💻 |

ahassoo1 🤔 💻 |

|

Pawel Cyrta 💻 🤔 |

ABCDEFGHIJKL 💻 |

Fernando Leon Franco 💻 🤔 |

Esaú Villatoro-Tello, Ph. D. 🤔 📖 |

If you use SDialog in academic work, please consider citing our paper:

@misc{burdisso2025sdialogpythontoolkitendtoend,

title = {SDialog: A Python Toolkit for End-to-End Agent Building, User Simulation, Dialog Generation, and Evaluation},

author = {Sergio Burdisso and Séverin Baroudi and Yanis Labrak and David Grunert and Pawel Cyrta and Yiyang Chen and Srikanth Madikeri and Thomas Schaaf and Esaú Villatoro-Tello and Ahmed Hassoon and Ricard Marxer and Petr Motlicek},

year = {2025},

eprint = {2506.10622},

archivePrefix = {arXiv},

primaryClass = {cs.AI},

url = {https://arxiv.org/abs/2506.10622},

}(A system demonstration version of the paper has been submitted to EACL 2026 and is under review; we will update this BibTeX if accepted)

This work was mainly supported by the European Union Horizon 2020 project ELOQUENCE and received a significant development boost during the Johns Hopkins University JSALT 2025 workshop, as part of the "Play your Part" research group. We thank all contributors and the open-source community for their valuable feedback and contributions.

MIT License

Copyright (c) 2025 Idiap Research Institute

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for sdialog

Similar Open Source Tools

sdialog

SDialog is an MIT-licensed open-source toolkit for building, simulating, and evaluating LLM-based conversational agents end-to-end. It aims to bridge agent construction, user simulation, dialog generation, and evaluation in a single reproducible workflow, enabling the generation of reliable, controllable dialog systems or data at scale. The toolkit standardizes a Dialog schema, offers persona-driven multi-agent simulation with LLMs, provides composable orchestration for precise control over behavior and flow, includes built-in evaluation metrics, and offers mechanistic interpretability. It allows for easy creation of user-defined components and interoperability across various AI platforms.

zenml

ZenML is an extensible, open-source MLOps framework for creating portable, production-ready machine learning pipelines. By decoupling infrastructure from code, ZenML enables developers across your organization to collaborate more effectively as they develop to production.

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_ , however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

LightAgent

LightAgent is a lightweight, open-source Agentic AI development framework with memory, tools, and a tree of thought. It supports multi-agent collaboration, autonomous learning, tool integration, complex task handling, and multi-model support. It also features a streaming API, tool generator, agent self-learning, adaptive tool mechanism, and more. LightAgent is designed for intelligent customer service, data analysis, automated tools, and educational assistance.

mlflow

MLflow is a platform to streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow offers a set of lightweight APIs that can be used with any existing machine learning application or library (TensorFlow, PyTorch, XGBoost, etc), wherever you currently run ML code (e.g. in notebooks, standalone applications or the cloud). MLflow's current components are:

* `MLflow Tracking

unify

The Unify Python Package provides access to the Unify REST API, allowing users to query Large Language Models (LLMs) from any Python 3.7.1+ application. It includes Synchronous and Asynchronous clients with Streaming responses support. Users can easily use any endpoint with a single key, route to the best endpoint for optimal throughput, cost, or latency, and customize prompts to interact with the models. The package also supports dynamic routing to automatically direct requests to the top-performing provider. Additionally, users can enable streaming responses and interact with the models asynchronously for handling multiple user requests simultaneously.

agents

The LiveKit Agent Framework is designed for building real-time, programmable participants that run on servers. Easily tap into LiveKit WebRTC sessions and process or generate audio, video, and data streams. The framework includes plugins for common workflows, such as voice activity detection and speech-to-text. Agents integrates seamlessly with LiveKit server, offloading job queuing and scheduling responsibilities to it. This eliminates the need for additional queuing infrastructure. Agent code developed on your local machine can scale to support thousands of concurrent sessions when deployed to a server in production.

swiftide

Swiftide is a fast, streaming indexing and query library tailored for Retrieval Augmented Generation (RAG) in AI applications. It is built in Rust, utilizing parallel, asynchronous streams for blazingly fast performance. With Swiftide, users can easily build AI applications from idea to production in just a few lines of code. The tool addresses frustrations around performance, stability, and ease of use encountered while working with Python-based tooling. It offers features like fast streaming indexing pipeline, experimental query pipeline, integrations with various platforms, loaders, transformers, chunkers, embedders, and more. Swiftide aims to provide a platform for data indexing and querying to advance the development of automated Large Language Model (LLM) applications.

bee-agent-framework

The Bee Agent Framework is an open-source tool for building, deploying, and serving powerful agentic workflows at scale. It provides AI agents, tools for creating workflows in Javascript/Python, a code interpreter, memory optimization strategies, serialization for pausing/resuming workflows, traceability features, production-level control, and upcoming features like model-agnostic support and a chat UI. The framework offers various modules for agents, llms, memory, tools, caching, errors, adapters, logging, serialization, and more, with a roadmap including MLFlow integration, JSON support, structured outputs, chat client, base agent improvements, guardrails, and evaluation.

AgentFly

AgentFly is an extensible framework for building LLM agents with reinforcement learning. It supports multi-turn training by adapting traditional RL methods with token-level masking. It features a decorator-based interface for defining tools and reward functions, enabling seamless extension and ease of use. To support high-throughput training, it implemented asynchronous execution of tool calls and reward computations, and designed a centralized resource management system for scalable environment coordination. A suite of prebuilt tools and environments are provided.

arbigent

Arbigent (Arbiter-Agent) is an AI agent testing framework designed to make AI agent testing practical for modern applications. It addresses challenges faced by traditional UI testing frameworks and AI agents by breaking down complex tasks into smaller, dependent scenarios. The framework is customizable for various AI providers, operating systems, and form factors, empowering users with extensive customization capabilities. Arbigent offers an intuitive UI for scenario creation and a powerful code interface for seamless test execution. It supports multiple form factors, optimizes UI for AI interaction, and is cost-effective by utilizing models like GPT-4o mini. With a flexible code interface and open-source nature, Arbigent aims to revolutionize AI agent testing in modern applications.

MInference

MInference is a tool designed to accelerate pre-filling for long-context Language Models (LLMs) by leveraging dynamic sparse attention. It achieves up to a 10x speedup for pre-filling on an A100 while maintaining accuracy. The tool supports various decoding LLMs, including LLaMA-style models and Phi models, and provides custom kernels for attention computation. MInference is useful for researchers and developers working with large-scale language models who aim to improve efficiency without compromising accuracy.

multi-agent-orchestrator

Multi-Agent Orchestrator is a flexible and powerful framework for managing multiple AI agents and handling complex conversations. It intelligently routes queries to the most suitable agent based on context and content, supports dual language implementation in Python and TypeScript, offers flexible agent responses, context management across agents, extensible architecture for customization, universal deployment options, and pre-built agents and classifiers. It is suitable for various applications, from simple chatbots to sophisticated AI systems, accommodating diverse requirements and scaling efficiently.

agent-squad

Agent Squad is a flexible, lightweight open-source framework for orchestrating multiple AI agents to handle complex conversations. It intelligently routes queries, maintains context across interactions, and offers pre-built components for quick deployment. The system allows easy integration of custom agents and conversation messages storage solutions, making it suitable for various applications from simple chatbots to sophisticated AI systems, scaling efficiently.

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

DevoxxGenieIDEAPlugin

Devoxx Genie is a Java-based IntelliJ IDEA plugin that integrates with local and cloud-based LLM providers to aid in reviewing, testing, and explaining project code. It supports features like code highlighting, chat conversations, and adding files/code snippets to context. Users can modify REST endpoints and LLM parameters in settings, including support for cloud-based LLMs. The plugin requires IntelliJ version 2023.3.4 and JDK 17. Building and publishing the plugin is done using Gradle tasks. Users can select an LLM provider, choose code, and use commands like review, explain, or generate unit tests for code analysis.

For similar tasks

Awesome-LLM-Interpretability

Awesome-LLM-Interpretability is a curated list of materials related to LLM (Large Language Models) interpretability, covering tutorials, code libraries, surveys, videos, papers, and blogs. It includes resources on transformer mechanistic interpretability, visualization, interventions, probing, fine-tuning, feature representation, learning dynamics, knowledge editing, hallucination detection, and redundancy analysis. The repository aims to provide a comprehensive overview of tools, techniques, and methods for understanding and interpreting the inner workings of large language models.

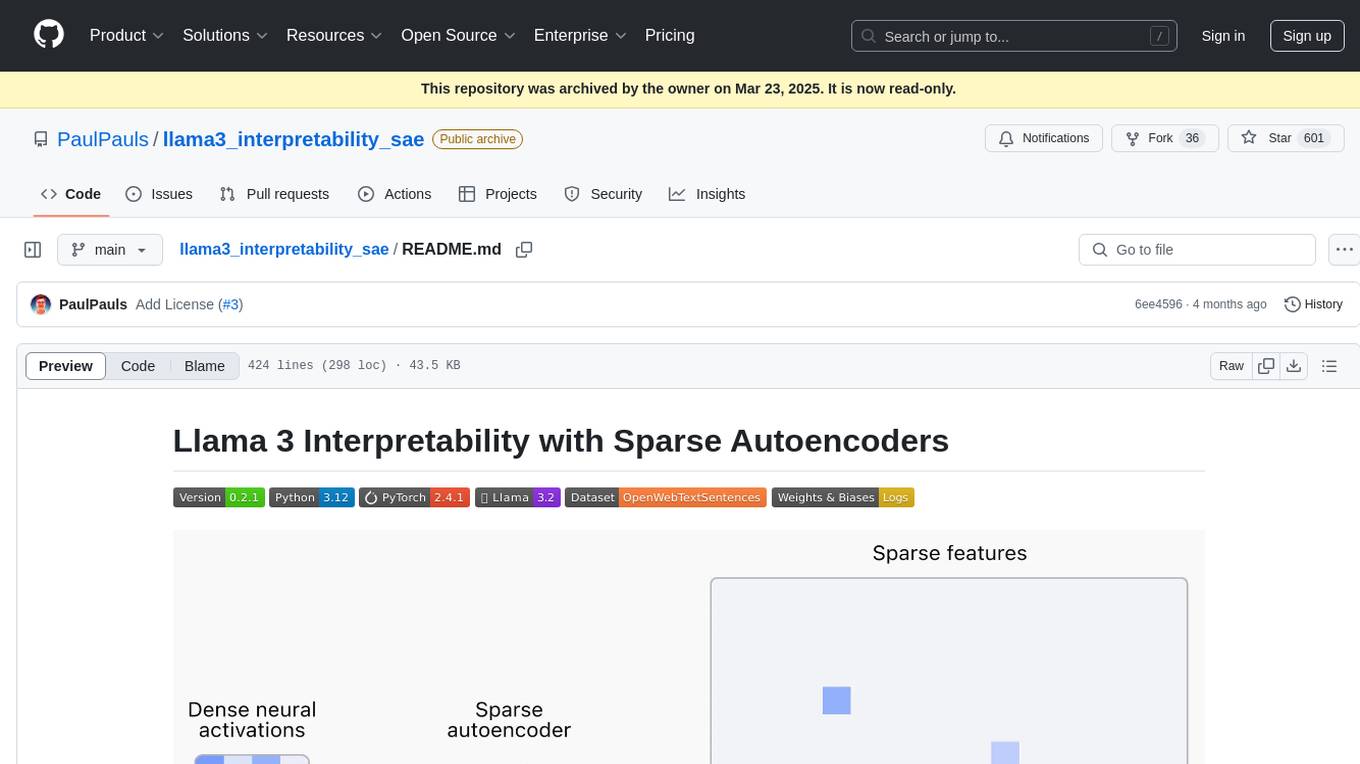

llama3_interpretability_sae

This project focuses on implementing Sparse Autoencoders (SAEs) for mechanistic interpretability in Large Language Models (LLMs) like Llama 3.2-3B. The SAEs aim to untangle superimposed representations in LLMs into separate, interpretable features for each neuron activation. The project provides an end-to-end pipeline for capturing training data, training the SAEs, analyzing learned features, and verifying results experimentally. It includes comprehensive logging, visualization, and checkpointing of SAE training, interpretability analysis tools, and a pure PyTorch implementation of Llama 3.1/3.2 chat and text completion. The project is designed for scalability, efficiency, and maintainability.

sdialog

SDialog is an MIT-licensed open-source toolkit for building, simulating, and evaluating LLM-based conversational agents end-to-end. It aims to bridge agent construction, user simulation, dialog generation, and evaluation in a single reproducible workflow, enabling the generation of reliable, controllable dialog systems or data at scale. The toolkit standardizes a Dialog schema, offers persona-driven multi-agent simulation with LLMs, provides composable orchestration for precise control over behavior and flow, includes built-in evaluation metrics, and offers mechanistic interpretability. It allows for easy creation of user-defined components and interoperability across various AI platforms.

For similar jobs

LitServe

LitServe is a high-throughput serving engine designed for deploying AI models at scale. It generates an API endpoint for models, handles batching, streaming, and autoscaling across CPU/GPUs. LitServe is built for enterprise scale with a focus on minimal, hackable code-base without bloat. It supports various model types like LLMs, vision, time-series, and works with frameworks like PyTorch, JAX, Tensorflow, and more. The tool allows users to focus on model performance rather than serving boilerplate, providing full control and flexibility.

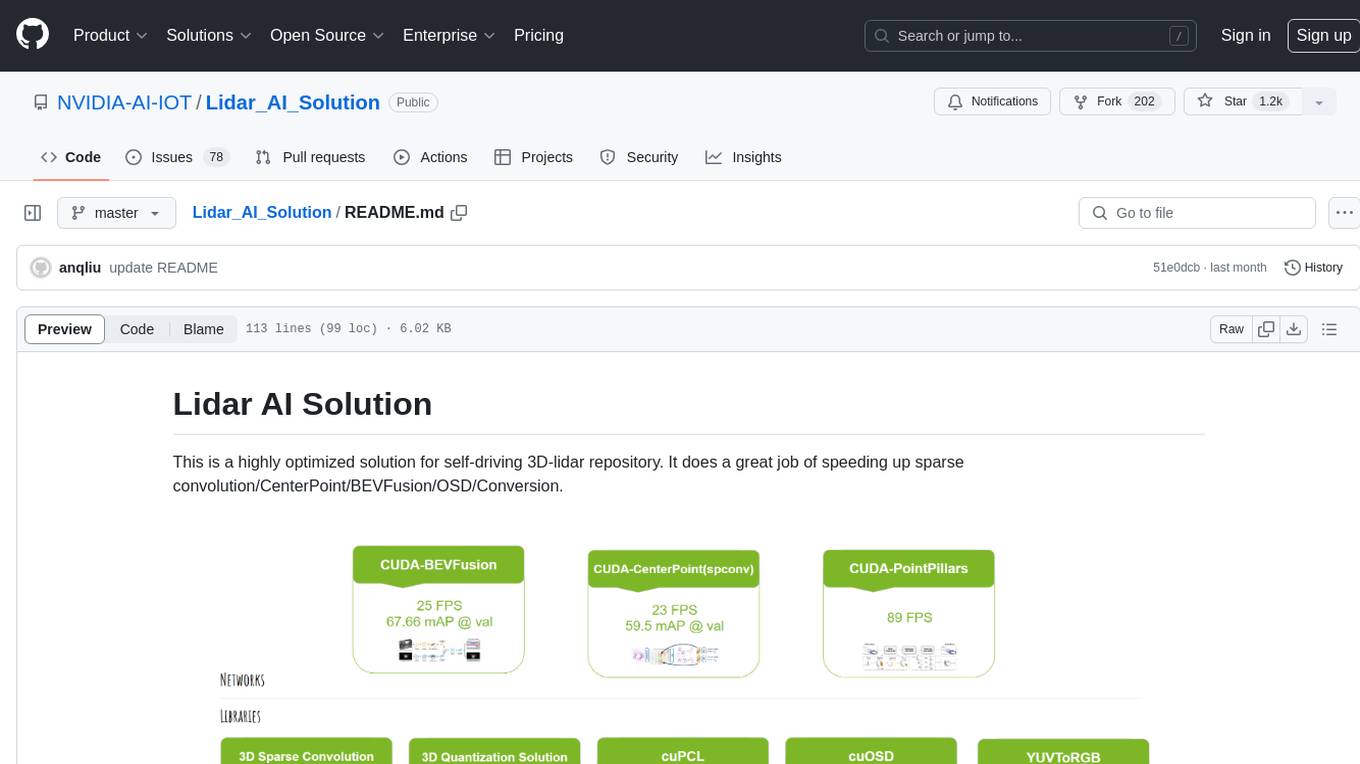

Lidar_AI_Solution

Lidar AI Solution is a highly optimized repository for self-driving 3D lidar, providing solutions for sparse convolution, BEVFusion, CenterPoint, OSD, and Conversion. It includes CUDA and TensorRT implementations for various tasks such as 3D sparse convolution, BEVFusion, CenterPoint, PointPillars, V2XFusion, cuOSD, cuPCL, and YUV to RGB conversion. The repository offers easy-to-use solutions, high accuracy, low memory usage, and quantization options for different tasks related to self-driving technology.

generative-ai-sagemaker-cdk-demo

This repository showcases how to deploy generative AI models from Amazon SageMaker JumpStart using the AWS CDK. Generative AI is a type of AI that can create new content and ideas, such as conversations, stories, images, videos, and music. The repository provides a detailed guide on deploying image and text generative AI models, utilizing pre-trained models from SageMaker JumpStart. The web application is built on Streamlit and hosted on Amazon ECS with Fargate. It interacts with the SageMaker model endpoints through Lambda functions and Amazon API Gateway. The repository also includes instructions on setting up the AWS CDK application, deploying the stacks, using the models, and viewing the deployed resources on the AWS Management Console.

cake

cake is a pure Rust implementation of the llama3 LLM distributed inference based on Candle. The project aims to enable running large models on consumer hardware clusters of iOS, macOS, Linux, and Windows devices by sharding transformer blocks. It allows running inferences on models that wouldn't fit in a single device's GPU memory by batching contiguous transformer blocks on the same worker to minimize latency. The tool provides a way to optimize memory and disk space by splitting the model into smaller bundles for workers, ensuring they only have the necessary data. cake supports various OS, architectures, and accelerations, with different statuses for each configuration.

Awesome-Robotics-3D

Awesome-Robotics-3D is a curated list of 3D Vision papers related to Robotics domain, focusing on large models like LLMs/VLMs. It includes papers on Policy Learning, Pretraining, VLM and LLM, Representations, and Simulations, Datasets, and Benchmarks. The repository is maintained by Zubair Irshad and welcomes contributions and suggestions for adding papers. It serves as a valuable resource for researchers and practitioners in the field of Robotics and Computer Vision.

tensorzero

TensorZero is an open-source platform that helps LLM applications graduate from API wrappers into defensible AI products. It enables a data & learning flywheel for LLMs by unifying inference, observability, optimization, and experimentation. The platform includes a high-performance model gateway, structured schema-based inference, observability, experimentation, and data warehouse for analytics. TensorZero Recipes optimize prompts and models, and the platform supports experimentation features and GitOps orchestration for deployment.

vector-inference

This repository provides an easy-to-use solution for running inference servers on Slurm-managed computing clusters using vLLM. All scripts in this repository run natively on the Vector Institute cluster environment. Users can deploy models as Slurm jobs, check server status and performance metrics, and shut down models. The repository also supports launching custom models with specific configurations. Additionally, users can send inference requests and set up an SSH tunnel to run inference from a local device.

rhesis

Rhesis is a comprehensive test management platform designed for Gen AI teams, offering tools to create, manage, and execute test cases for generative AI applications. It ensures the robustness, reliability, and compliance of AI systems through features like test set management, automated test generation, edge case discovery, compliance validation, integration capabilities, and performance tracking. The platform is open source, emphasizing community-driven development, transparency, extensible architecture, and democratizing AI safety. It includes components such as backend services, frontend applications, SDK for developers, worker services, chatbot applications, and Polyphemus for uncensored LLM service. Rhesis enables users to address challenges unique to testing generative AI applications, such as non-deterministic outputs, hallucinations, edge cases, ethical concerns, and compliance requirements.