datapizza-ai

Build reliable Gen AI solutions without overhead 🍕

Stars: 2126

Datapizza AI is a Python-based GenAI framework designed for speed, providing an API-first design with multi-provider support, tool integration, memory management, and observability features. It offers less abstraction, more control, and is vendor-agnostic, allowing easy model swapping and clear interfaces. The framework enables building sophisticated AI systems, document processing, RAG pipelines, and more, with a focus on observability and performance monitoring. Datapizza AI is trusted by engineers for its predictability, fast debugging, and reliable code in production.

README:

Written in Python. Designed for speed. A no-fluff GenAI framework that gets your agents from dev to prod, fast.

A framework that keeps your agents predictable, your debugging fast, and your code trusted in production. Built by Engineers, trusted by Engineers.

```bash
pip install datapizza-ai
```

```python
from datapizza.clients.openai import OpenAIClient

client = OpenAIClient(api_key="YOUR_API_KEY")
result = client.invoke("Hi, how are u?")
print(result.text)
```
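The snippet above hardcodes the key for brevity. A minimal sketch of reading it from the environment instead is shown here; the helper name `load_api_key` and the variable name `OPENAI_API_KEY` are assumptions for illustration and are not part of datapizza-ai.

```python
import os


def load_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in `env_var`, or raise a clear error if it is unset."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable before creating a client")
    return key


# Usage with the client from the quick start (requires datapizza-ai to be installed):
#   from datapizza.clients.openai import OpenAIClient
#   client = OpenAIClient(api_key=load_api_key())
```

Failing early with a named variable tends to be easier to debug than an authentication error raised later by the provider.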

```bash
# Core framework
pip install datapizza-ai

# With specific providers (optional)
pip install datapizza-ai-clients-openai
pip install datapizza-ai-clients-google
pip install datapizza-ai-clients-anthropic
```

```python
from datapizza.agents import Agent
from datapizza.clients.openai import OpenAIClient
from datapizza.tools import tool


@tool
def get_weather(city: str) -> str:
    return f"The weather in {city} is sunny"


client = OpenAIClient(api_key="YOUR_API_KEY")
agent = Agent(name="assistant", client=client, tools=[get_weather])

response = agent.run("What is the weather in Rome?")
# output: The weather in Rome is sunny
```

A key requirement for principled development of LLM applications over your data (RAG systems, agents) is being able to observe and debug.

Datapizza-ai provides built-in observability with OpenTelemetry tracing to help you monitor performance and understand execution flow.
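To make the idea of a trace concrete, here is a library-free sketch of a span: a named, timed region of code whose duration is recorded when the block exits. The `Span` record and the `span` helper are hypothetical names chosen for illustration; this is neither datapizza-ai's tracer nor the OpenTelemetry API.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Iterator, List


@dataclass
class Span:
    name: str
    duration_s: float


@contextmanager
def span(name: str, sink: List[Span]) -> Iterator[None]:
    """Time the enclosed block and append a Span to `sink`, even if the block raises."""
    start = time.perf_counter()
    try:
        yield
    finally:
        sink.append(Span(name, time.perf_counter() - start))


spans: List[Span] = []
with span("my_ai_operation", spans):
    with span("llm_call", spans):
        time.sleep(0.01)  # stand-in for a model request

# Inner spans finish first, so they are recorded before their parent.
for s in spans:
    print(f"{s.name}: {s.duration_s:.3f}s")
```

A real tracer additionally records parent/child links and attributes such as token counts, which is what makes a per-model summary possible.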

```bash
pip install datapizza-ai-tools-duckduckgo
```

```python
from datapizza.agents import Agent
from datapizza.clients.openai import OpenAIClient
from datapizza.tools.duckduckgo import DuckDuckGoSearchTool
from datapizza.tracing import ContextTracing

client = OpenAIClient(api_key="OPENAI_API_KEY")
agent = Agent(name="assistant", client=client, tools=[DuckDuckGoSearchTool()])

with ContextTracing().trace("my_ai_operation"):
    response = agent.run("Tell me some news about Bitcoin")

# Output shows:
# ╭─ Trace Summary of my_ai_operation ──────────────────────────────────╮
# │ Total Spans: 3                                                      │
# │ Duration: 2.45s                                                     │
# │ ┏━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━┓ │
# │ ┃ Model       ┃ Prompt Tokens ┃ Completion Tokens ┃ Cached Tokens ┃ │
# │ ┡━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━┩ │
# │ │ gpt-4o-mini │ 31            │ 27                │ 0             │ │
# │ └─────────────┴───────────────┴───────────────────┴───────────────┘ │
# ╰─────────────────────────────────────────────────────────────────────╯
```

Build sophisticated AI systems where multiple specialized agents collaborate to solve complex tasks. This example shows how to create a trip planning system with dedicated agents for weather information, web search, and planning coordination.

```bash
# Install DuckDuckGo tool
pip install datapizza-ai-tools-duckduckgo
```

```python
from datapizza.agents.agent import Agent
from datapizza.clients.openai import OpenAIClient
from datapizza.tools import tool
from datapizza.tools.duckduckgo import DuckDuckGoSearchTool

client = OpenAIClient(api_key="YOUR_API_KEY", model="gpt-4.1")


@tool
def get_weather(city: str) -> str:
    return f""" it's sunny all the week in {city}"""


weather_agent = Agent(
    name="weather_expert",
    client=client,
    system_prompt="You are a weather expert. Provide detailed weather information and forecasts.",
    tools=[get_weather],
)

web_search_agent = Agent(
    name="web_search_expert",
    client=client,
    system_prompt="You are a web search expert. You can search the web for information.",
    tools=[DuckDuckGoSearchTool()],
)

planner_agent = Agent(
    name="planner",
    client=client,
    system_prompt="You are a trip planner. You should provide a plan for the user. Make sure to provide a detailed plan with the best places to visit and the best time to visit them.",
)

# Let the planner delegate to the two specialist agents.
planner_agent.can_call([weather_agent, web_search_agent])

response = planner_agent.run(
    "I need to plan a hiking trip in Seattle next week. I want to see some waterfalls and a forest."
)
print(response.text)
```

Process and index documents for retrieval-augmented generation (RAG). This pipeline automatically parses PDFs, splits them into chunks, generates embeddings, and stores them in a vector database for efficient similarity search.
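The split, embed, store, and search stages can be illustrated without any external service. The sketch below is a toy under stated assumptions: `split_text` is a naive word-packing splitter, `embed` is a bag-of-words counter standing in for a real embedding model, and the "vector store" is a plain list. None of these names come from datapizza-ai.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def split_text(text: str, max_char: int) -> List[str]:
    """Pack whole words into chunks of up to `max_char` characters.

    A single word longer than `max_char` becomes its own (oversized) chunk.
    """
    chunks: List[str] = []
    current = ""
    for word in text.split():
        candidate = f"{current} {word}".strip()
        if len(candidate) > max_char and current:
            chunks.append(current)
            current = word
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


def embed(text: str) -> Dict[str, float]:
    """Toy embedding: lowercase word counts. A real system would call an embedding model."""
    return {word: float(n) for word, n in Counter(re.findall(r"[a-z0-9]+", text.lower())).items()}


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(value * b.get(key, 0.0) for key, value in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(store: List[Tuple[str, Dict[str, float]]], query: str, k: int) -> List[Tuple[float, str]]:
    """Return the `k` stored chunks most similar to `query`, best first."""
    query_vec = embed(query)
    scored = [(cosine(query_vec, vec), chunk) for chunk, vec in store]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]


document = "Qdrant stores vectors. Docling parses PDF files. Embeddings map text to vectors."
store = [(chunk, embed(chunk)) for chunk in split_text(document, max_char=30)]
top = search(store, "who parses pdf files", k=1)
print(top[0][1])
```

Splitting on a character budget, as here, can cut across sentence boundaries; structure-aware splitters exist to avoid that.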

```bash
pip install datapizza-ai-parsers-docling
```

```python
from datapizza.core.vectorstore import VectorConfig
from datapizza.embedders import ChunkEmbedder
from datapizza.embedders.openai import OpenAIEmbedder
from datapizza.modules.parsers.docling import DoclingParser
from datapizza.modules.splitters import NodeSplitter
from datapizza.pipeline import IngestionPipeline
from datapizza.vectorstores.qdrant import QdrantVectorstore

vectorstore = QdrantVectorstore(location=":memory:")
embedder = ChunkEmbedder(
    client=OpenAIEmbedder(api_key="YOUR_API_KEY", model_name="text-embedding-3-small")
)
vectorstore.create_collection(
    "my_documents",
    vector_config=[VectorConfig(name="embedding", dimensions=1536)],
)

pipeline = IngestionPipeline(
    modules=[
        DoclingParser(),
        NodeSplitter(max_char=1024),
        embedder,
    ],
    vector_store=vectorstore,
    collection_name="my_documents",
)

pipeline.run("sample.pdf")

results = vectorstore.search(query_vector=[0.0] * 1536, collection_name="my_documents", k=5)
print(results)
```

Create a complete RAG pipeline that enhances AI responses with relevant document context. This example demonstrates query rewriting, embedding generation, document retrieval, and response generation in a connected workflow.
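The "connected workflow" idea can be sketched in a few lines: named steps, edges that route one step's output into a keyword argument of another, and execution in dependency order. The `MiniDag` class below is a hypothetical toy built on the standard library's `graphlib` (Python 3.9+). It borrows the `add_module` / `connect(..., target_key=...)` shape used in this README, but it is not datapizza-ai's `DagPipeline` implementation.

```python
from graphlib import TopologicalSorter
from typing import Any, Callable, Dict, List, Set, Tuple


class MiniDag:
    """Toy DAG runner: each step is a callable that takes keyword arguments."""

    def __init__(self) -> None:
        self.steps: Dict[str, Callable[..., Any]] = {}
        self.edges: List[Tuple[str, str, str]] = []  # (source, target, target_key)

    def add_module(self, name: str, fn: Callable[..., Any]) -> None:
        self.steps[name] = fn

    def connect(self, source: str, target: str, target_key: str) -> None:
        self.edges.append((source, target, target_key))

    def run(self, inputs: Dict[str, Dict[str, Any]]) -> Dict[str, Any]:
        # Map each step to the set of steps it depends on.
        deps: Dict[str, Set[str]] = {name: set() for name in self.steps}
        for source, target, _ in self.edges:
            deps[target].add(source)

        results: Dict[str, Any] = {}
        # static_order() yields every step after all of its dependencies.
        for name in TopologicalSorter(deps).static_order():
            kwargs = dict(inputs.get(name, {}))
            for source, target, key in self.edges:
                if target == name:
                    kwargs[key] = results[source]
            results[name] = self.steps[name](**kwargs)
        return results


dag = MiniDag()
dag.add_module("rewriter", lambda user_prompt: user_prompt.strip().lower())
dag.add_module("retriever", lambda query, k: [f"chunk about {query}"] * k)
dag.add_module("prompt", lambda user_prompt, chunks: f"{user_prompt}\n" + "\n".join(chunks))
dag.connect("rewriter", "retriever", target_key="query")
dag.connect("retriever", "prompt", target_key="chunks")

result = dag.run({
    "rewriter": {"user_prompt": "  What is RAG?  "},
    "retriever": {"k": 2},
    "prompt": {"user_prompt": "What is RAG?"},
})
print(result["prompt"])
```

Per-step inputs passed to `run` are merged with the values arriving over edges, which is why a step can receive both a caller-supplied argument and an upstream result.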

```python
from datapizza.clients.openai import OpenAIClient
from datapizza.embedders.openai import OpenAIEmbedder
from datapizza.modules.prompt import ChatPromptTemplate
from datapizza.modules.rewriters import ToolRewriter
from datapizza.pipeline import DagPipeline
from datapizza.vectorstores.qdrant import QdrantVectorstore

openai_client = OpenAIClient(
    model="gpt-4o-mini",
    api_key="YOUR_API_KEY",
)

dag_pipeline = DagPipeline()

# Register the pipeline steps.
dag_pipeline.add_module(
    "rewriter",
    ToolRewriter(client=openai_client, system_prompt="Rewrite user queries to improve retrieval accuracy."),
)
dag_pipeline.add_module(
    "embedder",
    OpenAIEmbedder(api_key="YOUR_API_KEY", model_name="text-embedding-3-small"),
)
dag_pipeline.add_module(
    "retriever",
    QdrantVectorstore(host="localhost", port=6333).as_retriever(collection_name="my_documents", k=5),
)
dag_pipeline.add_module(
    "prompt",
    ChatPromptTemplate(
        user_prompt_template="User question: {{user_prompt}}\n:",
        retrieval_prompt_template="Retrieved content:\n{% for chunk in chunks %}{{ chunk.text }}\n{% endfor %}",
    ),
)
dag_pipeline.add_module("generator", openai_client)

# Route each step's output into the named input of the next step.
dag_pipeline.connect("rewriter", "embedder", target_key="text")
dag_pipeline.connect("embedder", "retriever", target_key="query_vector")
dag_pipeline.connect("retriever", "prompt", target_key="chunks")
dag_pipeline.connect("prompt", "generator", target_key="memory")

query = "tell me something about this document"

result = dag_pipeline.run({
    "rewriter": {"user_prompt": query},
    "prompt": {"user_prompt": query},
    "retriever": {"collection_name": "my_documents", "k": 3},
    "generator": {"input": query},
})

print(f"Generated response: {result['generator']}")
```

Supported model providers:

- OpenAI
- Google Gemini
- Anthropic
- Mistral
- Azure OpenAI

| Category | Components |

|---|---|

| 📄 Document Parsers | Azure AI Document Intelligence, Docling |

| 🔍 Vector Stores | Qdrant |

| 🎯 Rerankers | Cohere, Together AI |

| 🌐 Tools | DuckDuckGo Search, Custom Tools |

| 💾 Caching | Redis integration for performance optimization |

| 📊 Embedders | OpenAI, Google, Cohere, FastEmbed |

- 📖 Complete Documentation - Comprehensive guides and API reference

- 🎯 RAG Tutorial - Build production RAG systems

- 🤖 Agent Examples - Real-world agent implementations

We love contributions! Whether it's:

- 🐛 Bug Reports - Help us improve

- 💡 Feature Requests - Share your ideas

- 📝 Documentation - Make it better for everyone

- 🔧 Code Contributions - Build the future together

Check out our Contributing Guide to get started.

This project is licensed under the MIT License - see the LICENSE file for details.

Built by Datapizza, the AI native company

A framework made to be easy to learn, easy to maintain and ready for production 🍕

Alternative AI tools for datapizza-ai

Similar Open Source Tools

DeepTutor

DeepTutor is an AI-powered personalized learning assistant that offers a suite of modules for massive document knowledge Q&A, interactive learning visualization, knowledge reinforcement with practice exercise generation, deep research, and idea generation. The tool supports multi-agent collaboration, dynamic topic queues, and structured outputs for various tasks. It provides a unified system entry for activity tracking, knowledge base management, and system status monitoring. DeepTutor is designed to streamline learning and research processes by leveraging AI technologies and interactive features.

lihil

Lihil is a performant, productive, and professional web framework designed to make Python the mainstream programming language for web development. It is 100% test covered and strictly typed, offering fast performance, ergonomic API, and built-in solutions for common problems. Lihil is suitable for enterprise web development, delivering robust and scalable solutions with best practices in microservice architecture and related patterns. It features dependency injection, OpenAPI docs generation, error response generation, data validation, message system, testability, and strong support for AI features. Lihil is ASGI compatible and uses starlette as its ASGI toolkit, ensuring compatibility with starlette classes and middlewares. The framework follows semantic versioning and has a roadmap for future enhancements and features.

mcp-ts-template

The MCP TypeScript Server Template is a production-grade framework for building powerful and scalable Model Context Protocol servers with TypeScript. It features built-in observability, declarative tooling, robust error handling, and a modular, DI-driven architecture. The template is designed to be AI-agent-friendly, providing detailed rules and guidance for developers to adhere to best practices. It enforces architectural principles like 'Logic Throws, Handler Catches' pattern, full-stack observability, declarative components, and dependency injection for decoupling. The project structure includes directories for configuration, container setup, server resources, services, storage, utilities, tests, and more. Configuration is done via environment variables, and key scripts are available for development, testing, and publishing to the MCP Registry.

VT.ai

VT.ai is a multimodal AI platform that offers dynamic conversation routing with SemanticRouter, multi-modal interactions (text/image/audio), an assistant framework with code interpretation, real-time response streaming, cross-provider model switching, and local model support with Ollama integration. It supports various AI providers such as OpenAI, Anthropic, Google Gemini, Groq, Cohere, and OpenRouter, providing a wide range of core capabilities for AI orchestration.

cordum

Cordum is a control plane for AI agents designed to close the Trust Gap by providing safety, observability, and control features. It allows teams to deploy autonomous agents with built-in governance mechanisms, including safety policies, workflow orchestration, job routing, observability, and human-in-the-loop approvals. The tool aims to address the challenges of deploying AI agents in production by offering visibility, safety rails, audit trails, and approval mechanisms for sensitive operations.

aichildedu

AICHILDEDU is a microservice-based AI education platform for children that integrates LLMs, image generation, and speech synthesis to provide personalized storybook creation, intelligent conversational learning, and multimedia content generation. It offers features like personalized story generation, educational quiz creation, multimedia integration, age-appropriate content, multi-language support, user management, parental controls, and asynchronous processing. The platform follows a microservice architecture with components like API Gateway, User Service, Content Service, Learning Service, and AI Services. Technologies used include Python, FastAPI, PostgreSQL, MongoDB, Redis, LangChain, OpenAI GPT models, TensorFlow, PyTorch, Transformers, MinIO, Elasticsearch, Docker, Docker Compose, and JWT-based authentication.

mcp-memory-service

The MCP Memory Service is a universal memory service designed for AI assistants, providing semantic memory search and persistent storage. It works with various AI applications and offers fast local search using SQLite-vec and global distribution through Cloudflare. The service supports intelligent memory management, universal compatibility with AI tools, flexible storage options, and is production-ready with cross-platform support and secure connections. Users can store and recall memories, search by tags, check system health, and configure the service for Claude Desktop integration and environment variables.

incidentfox

IncidentFox is an open-source AI SRE tool designed to assist in incident response by automatically investigating incidents, finding root causes, and suggesting fixes. It integrates with observability stack, infrastructure, and collaboration tools, forming hypotheses, collecting data, and reasoning through to find root causes. The tool is built for production on-call scenarios, handling log sampling, alert correlation, anomaly detection, and dependency mapping. IncidentFox is highly customizable, Slack-first, and works on various platforms like web UI, GitHub, PagerDuty, and API. It aims to reduce incident resolution time, alert noise, and improve knowledge retention for engineering teams.

GitVizz

GitVizz is an AI-powered repository analysis tool that helps developers understand and navigate codebases quickly. It transforms complex code structures into interactive documentation, dependency graphs, and intelligent conversations. With features like interactive dependency graphs, AI-powered code conversations, advanced code visualization, and automatic documentation generation, GitVizz offers instant understanding and insights for any repository. The tool is built with modern technologies like Next.js, FastAPI, and OpenAI, making it scalable and efficient for analyzing large codebases. GitVizz also provides a standalone Python library for core code analysis and dependency graph generation, offering multi-language parsing, AST analysis, dependency graphs, visualizations, and extensibility for custom applications.

Lynkr

Lynkr is a self-hosted proxy server that unlocks various AI coding tools like Claude Code CLI, Cursor IDE, and Codex Cli. It supports multiple LLM providers such as Databricks, AWS Bedrock, OpenRouter, Ollama, llama.cpp, Azure OpenAI, Azure Anthropic, OpenAI, and LM Studio. Lynkr offers cost reduction, local/private execution, remote or local connectivity, zero code changes, and enterprise-ready features. It is perfect for developers needing provider flexibility, cost control, self-hosted AI with observability, local model execution, and cost reduction strategies.

httpjail

httpjail is a cross-platform tool designed for monitoring and restricting HTTP/HTTPS requests from processes using network isolation and transparent proxy interception. It provides process-level network isolation, HTTP/HTTPS interception with TLS certificate injection, script-based and JavaScript evaluation for custom request logic, request logging, default deny behavior, and zero-configuration setup. The tool operates on Linux and macOS, creating an isolated network environment for target processes and intercepting all HTTP/HTTPS traffic through a transparent proxy enforcing user-defined rules.

lobe-editor

LobeHub Editor is a modern, extensible rich text editor built on Meta's Lexical framework. Its dual-architecture design pairs a kernel-based API with React components, and it is optimized for AI applications and chat interfaces. Features include a rich plugin ecosystem, slash commands, multiple export formats, a customizable UI, file and media support, native TypeScript, and a modern build system.

pluely

Pluely is a versatile and user-friendly tool for managing tasks and projects. It provides a simple interface for creating, organizing, and tracking tasks, making it easy to stay on top of your work. With features like task prioritization, due date reminders, and collaboration options, Pluely helps individuals and teams streamline their workflow and boost productivity. Whether you're a student juggling assignments, a professional managing multiple projects, or a team coordinating tasks, Pluely is the perfect solution to keep you organized and efficient.

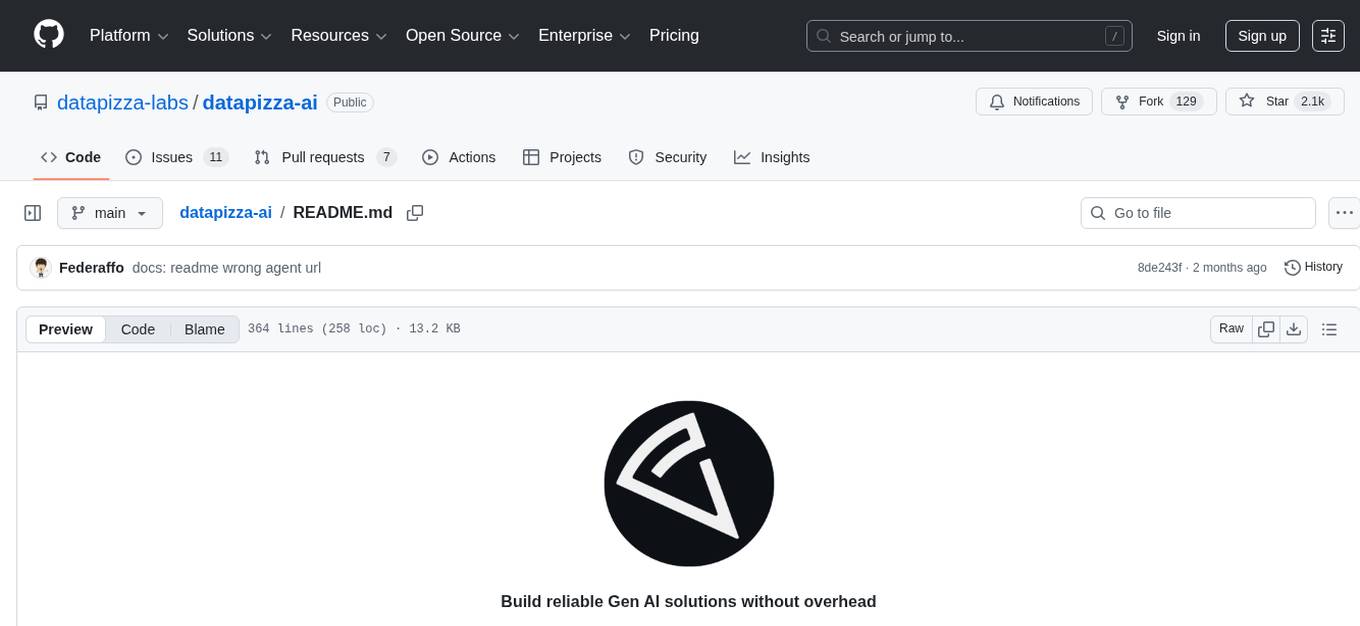

template-repo

The template-repo is a comprehensive development ecosystem with 6 AI agents, 14 MCP servers, and complete CI/CD automation running on self-hosted, zero-cost infrastructure. It follows a container-first approach, with all tools and operations running in Docker containers, zero external dependencies, self-hosted infrastructure, single maintainer design, and modular MCP architecture. The repo provides AI agents for development and automation, features 14 MCP servers for various tasks, and includes security measures, safety training, and sleeper detection system. It offers features like video editing, terrain generation, 3D content creation, AI consultation, image generation, and more, with a focus on maximum portability and consistency.

xln

XLN (Cross-Local Network) is a platform that enables instant off-chain settlement with on-chain finality. It combines Byzantine consensus, Bloomberg Terminal functionalities, and VR capabilities to run economic simulations in the browser without the need for a backend. The architecture includes layers for jurisdictions, entities, and accounts, with features like Solidity contracts, BFT consensus, and bilateral channels. The tool offers a panel system similar to Bloomberg Terminal for workspace organization and visualization, along with support for offline blockchain simulations in the browser and VR/Quest compatibility.

For similar tasks

AI-Notes

AI-Notes is a repository dedicated to practical applications of artificial intelligence and deep learning. It covers concepts such as data mining, machine learning, natural language processing, and AI. The repository contains Jupyter Notebook examples for hands-on learning and experimentation. It explores the development stages of AI, from narrow artificial intelligence to general artificial intelligence and superintelligence. The content delves into machine learning algorithms, deep learning techniques, and the impact of AI on various industries like autonomous driving and healthcare. The repository aims to provide a comprehensive understanding of AI technologies and their real-world applications.

AI-LLM-ML-CS-Quant-Readings

AI-LLM-ML-CS-Quant-Readings is a repository dedicated to taking notes on Artificial Intelligence, Large Language Models, Machine Learning, Computer Science, and Quantitative Finance. It contains a wide range of resources, including theory, applications, conferences, essentials, foundations, system design, computer systems, finance, and job interview questions. The repository covers topics such as AI systems, multi-agent systems, deep learning theory and applications, system design interviews, C++ design patterns, high-frequency finance, algorithmic trading, stochastic volatility modeling, and quantitative investing. It is a comprehensive collection of materials for individuals interested in these fields.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.

holoinsight

HoloInsight is a cloud-native observability platform that provides low-cost and high-performance monitoring services for cloud-native applications. It offers deep insights through real-time log analysis and AI integration. The platform is designed to help users gain a comprehensive understanding of their applications' performance and behavior in the cloud environment. HoloInsight is easy to deploy using Docker and Kubernetes, making it a versatile tool for monitoring and optimizing cloud-native applications. With a focus on scalability and efficiency, HoloInsight is suitable for organizations looking to enhance their observability and monitoring capabilities in the cloud.

awesome-AIOps

awesome-AIOps is a curated list of academic research and industrial materials related to Artificial Intelligence for IT Operations (AIOps). It includes resources such as competitions, white papers, blogs, tutorials, benchmarks, tools, companies, academic materials, talks, workshops, papers, and courses covering various aspects of AIOps like anomaly detection, root cause analysis, incident management, microservices, dependency tracing, and more.

OpenLLM

OpenLLM is a platform that helps developers run any open-source Large Language Models (LLMs) as OpenAI-compatible API endpoints, locally and in the cloud. It supports a wide range of LLMs, provides state-of-the-art serving and inference performance, and simplifies cloud deployment via BentoML. Users can fine-tune, serve, deploy, and monitor any LLMs with ease using OpenLLM. The platform also supports various quantization techniques, serving fine-tuning layers, and multiple runtime implementations. OpenLLM seamlessly integrates with other tools like OpenAI Compatible Endpoints, LlamaIndex, LangChain, and Transformers Agents. It offers deployment options through Docker containers, BentoCloud, and provides a community for collaboration and contributions.

laravel-slower

Laravel Slower is a powerful package designed for Laravel developers to optimize the performance of their applications by identifying slow database queries and providing AI-driven suggestions for optimal indexing strategies and performance improvements. It offers actionable insights for debugging and monitoring database interactions, enhancing efficiency and scalability.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PIIs
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.