incidentfox

AI-powered SRE platform for automated incident investigation

Stars: 270

IncidentFox is an open-source AI SRE tool that assists in incident response by automatically investigating incidents, finding root causes, and suggesting fixes. It integrates with your observability stack, infrastructure, and collaboration tools, then forms hypotheses, collects data, and reasons its way to a root cause. The tool is built for production on-call scenarios and handles log sampling, alert correlation, anomaly detection, and dependency mapping. IncidentFox is highly customizable and Slack-first, and it also works through a web UI, GitHub, PagerDuty, and an API. It aims to reduce incident resolution time, cut alert noise, and improve knowledge retention for engineering teams.

README:

Your AI Copilot for Incident Response

Investigate incidents, find root causes, and suggest fixes — automatically

Try Free in Slack · 5-Min Docker Setup · Deploy for Your Team

IncidentFox is an open-source AI SRE that integrates with your observability stack, infrastructure, and collaboration tools. It automatically forms hypotheses, collects data from your systems, and reasons through to find root causes — all while you focus on the fix.

Built for production on-call — handles log sampling, alert correlation, anomaly detection, and dependency mapping so you don't have to.

- What is IncidentFox?

- How We're Different

- Get Started

- Why IncidentFox

- Architecture Overview

- Under the Hood

- Enterprise Ready

- Documentation

- Contributing

- License

An AI SRE that helps find the root cause of production on-call issues and proposes mitigations. It automatically forms hypotheses, collects information from your infrastructure, observability tools, and code, and reasons through to an answer.

Slack-first, but it also works through the web UI, GitHub, PagerDuty, and the API.

Highly customizable — set up in minutes, and it self-improves by automatically learning and persisting your team's context.

AI SRE is not a new idea. The problem? Most AI SREs don't actually work — they lack the context to debug your specific systems.

Other tools ask you to manually configure integrations, write runbooks, and hope the AI figures it out. IncidentFox does the opposite.

On setup, we analyze your codebase, Slack history, and past incidents to understand how your org actually works. Internal CI/CD system with weird quirks? Custom deployment tooling? We learn it automatically and build integrations that work out of the box.

No weeks of integration work. No building your own MCP servers. We connect to the tools that actually matter for root cause — so you can skip straight to debugging.

We're opinionated: you shouldn't leave Slack during an incident.

- Upload a Grafana screenshot → we analyze it

- Attach a log file → we parse and correlate

- All tool outputs, evidence, and reasoning → visible as Slack attachments

- No new tabs. No context switching. Debug where you already work.

Our agents run in sandboxed environments with filesystem access — enabling code generation, script execution, and deep analysis. Security guardrails keep them focused on the task.

The result: Higher accuracy, faster resolution, less time wasted on integration work.

IncidentFox is open source (Apache 2.0). You can try it instantly in Slack, or deploy it yourself for full control. Pick the option that fits your needs:

| Option | Best For | Setup Time | Cost | Privacy | Guide |
|---|---|---|---|---|---|
| Try Free | See it in action | Instant | Free | Our playground environment | |
| Local Docker | Evaluate with your infra | 5 minutes | Free | Everything local | Setup Guide → |
| Managed (premium features) | Production, we handle ops | 30 minutes | Contact us (7-day free trial) | SaaS or on-prem, SOC 2 | |
| Self-Host (Open Core) | Production, full control | 30 minutes | Free | Everything local | Deployment Guide → |

New to IncidentFox? We recommend trying it in our Slack first — no setup required, see how it works instantly.

For Engineering Leaders: What this means for your team.

| Outcome | Impact |
|---|---|
| Faster Incident Resolution | Hours → minutes. Auto-correlates alerts, analyzes logs, traces dependencies. |
| 85-95% Less Alert Noise | Smart correlation finds root cause. Engineers focus on real problems. |
| Knowledge Retention | Learns your systems and runbooks. Knowledge stays when people leave. |
| Works on Day One | 300+ integrations. No months of setup: connect and go. |
| No Vendor Lock-In | Open source, bring your own LLM keys, deploy anywhere. |
| Gets Smarter Over Time | Learns from every investigation. Your expertise compounds. |

The bottom line: Less time firefighting, more time building.

IncidentFox connects to your existing tools and infrastructure. No manual setup required — configure once and it works everywhere.

| Category | Integrations |
|---|---|
| Logs & Metrics | Coralogix · Grafana · Elasticsearch · Datadog · Prometheus · Jaeger |
| Incidents | incident.io |
| Cloud & Infra | Kubernetes |
| Dev Tools | GitHub · Confluence |

| Category | Integrations |
|---|---|
| Logs & Metrics | CloudWatch · Splunk · OpenSearch · New Relic · Honeycomb · Dynatrace · Chronosphere · VictoriaMetrics · Kloudfuse · Sentry · Snowflake |
| Incidents | PagerDuty · Opsgenie · ServiceNow |
| Cloud & Infra | AWS · GCP · Azure · Temporal |
| Dev Tools | Jira · Linear · Notion · Glean |

Need an integration? Contact us or contribute via MCP protocol — add new integrations in minutes.

Architecture diagram (components and data flow):

- Slack / GitHub / PagerDuty / API → webhooks → Orchestrator (routes webhooks, team lookup, token auth, audit logging)

- Web UI (dashboard, team management) → Config Service

- Orchestrator → Agent (Claude/OpenAI, 300+ tools, multi-agent) and Config Service (multi-tenant cfg, RBAC, routing, team hierarchy)

- Agent <-> Config Service

- Config Service → PostgreSQL (config, audit, investigations)

- Agent → Knowledge Base (RAPTOR) and External APIs (K8s, AWS, Datadog, Grafana, etc.)

Web Console — Easiest way to view and customize agents

The engineering that makes IncidentFox actually work in production:

| Capability | What It Does | Why It Matters |
|---|---|---|
| RAPTOR Knowledge Base | Hierarchical tree structure (ICLR 2024): clusters → summarizes → abstracts | Standard RAG fails on 100-page runbooks. RAPTOR maintains context across long documents. |
| Smart Log Sampling | Statistics first → sample errors → drill down on anomalies | Other tools load 100K lines and hit context limits. We sample intelligently to stay useful. |
| Alert Correlation Engine | 3-layer analysis: temporal + topology + semantic | Groups alerts AND finds root cause. Reduces noise by 85-95%. |
| Prophet Anomaly Detection | Meta's Prophet algorithm with seasonality-aware forecasting | Detects anomalies that account for daily/weekly patterns, not just static thresholds. |
| Dependency Discovery | Automatic service topology mapping with blast radius analysis | Know what's affected before you start investigating. No manual service maps needed. |
| 300+ Built-in Tools | Kubernetes, AWS, Azure, GCP, Grafana, Datadog, Prometheus, GitHub, and more | No "bring your own tools" setup. Works out of the box with your stack. |
| MCP Protocol Support | Connect to any MCP server for unlimited integrations | Add new tools in minutes via config, not code. |
| Multi-Agent Orchestration | Planner routes to specialist agents (K8s, AWS, Metrics, Code, etc.) | Complex investigations get handled by the right expert, not a generic agent. |
| Model Flexibility | Supports OpenAI and Claude SDKs. Use the model that fits your needs. | No vendor lock-in. Switch models or use different models for different tasks. |
| Continuous Self-Improvement | Learns from investigations, persists patterns, builds team context | Gets smarter over time. Your past incidents inform future investigations. |
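
The "statistics first → sample errors" flow in the Smart Log Sampling row can be illustrated with a minimal Python sketch. This is not IncidentFox's implementation: the function name `sample_logs`, the level regex, and the `max_errors` parameter are assumptions made for illustration, and the anomaly drill-down step is left out.

```python
import random
import re
from collections import Counter

# Hypothetical log-level pattern; real log formats vary widely.
LEVEL_RE = re.compile(r"\b(DEBUG|INFO|WARN|ERROR|FATAL)\b")


def sample_logs(lines, max_errors=5, seed=0):
    """Summarize a log by level, then keep a bounded sample of error lines."""
    counts = Counter()
    errors = []
    total = 0
    for line in lines:
        total += 1
        match = LEVEL_RE.search(line)
        level = match.group(1) if match else "UNKNOWN"
        counts[level] += 1  # statistics first
        if level in ("ERROR", "FATAL"):
            errors.append(line)

    # Sample errors: cap how many raw lines are passed on to the model.
    rng = random.Random(seed)
    if len(errors) > max_errors:
        sampled = rng.sample(errors, max_errors)
    else:
        sampled = list(errors)

    return {"total": total, "by_level": dict(counts), "error_sample": sampled}
```

The point of the design is that the summary stays small no matter how large the log is: counts describe the whole input, and only a capped number of raw lines is forwarded for deeper analysis.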

RAPTOR knowledge base storing 50K+ docs as your proprietary knowledge

Security, compliance, and deep customization for production deployments.

Every team is different — different tech stacks, observability tools, incident patterns, and services. Enterprise unlocks deep specialization:

| Feature | Description |
|---|---|
| Auto-Learn Your Org | We analyze your codebase, Slack history, and past incidents to identify which internal tools matter most for debugging. Then we auto-build integrations. |
| Team-Specific Agents | Each team gets agents tuned to their stack. Your payments team and your infra team have different needs, and their agents reflect that. |
| Custom Prompts & Tools | Auto-learned defaults, with full control to tune. Engineers can adjust prompts, add tools, and configure agents per team. |
| Context Compounds | Every investigation makes IncidentFox smarter about your systems. Tribal knowledge gets captured, not lost. |

| Feature | Description |
|---|---|
| SOC 2 Compliant | Audited security controls, data handling, and access management |
| Sandboxed Execution | Isolated Kubernetes sandboxes for agent execution, with no shared state between runs |
| Secrets Proxy | Credentials never touch the agent. Envoy proxy injects secrets at request time. |
| Approval Workflows | Critical changes (prompts, tools, configs) require review before deployment |
| SSO/OIDC | Google, Azure AD, Okta, with per-organization configuration |
| Hierarchical Config | Org → Business Unit → Team inheritance with override capabilities |
| Audit Logging | Full trail of all agent actions, config changes, and investigations |
| On-Premise | Deploy entirely in your environment; air-gapped support available |
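
The Hierarchical Config row describes Org → Business Unit → Team inheritance with overrides. One common way to get that behavior is a layered deep merge, sketched below in Python. The function names and the config keys (`llm`, `tools`) are hypothetical and are not taken from the IncidentFox config schema.

```python
from copy import deepcopy


def merge(base, override):
    """Return a new dict where values in `override` win over `base`.

    Nested dicts are merged key by key; any other value (including lists)
    replaces the inherited one outright.
    """
    result = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge(result[key], value)
        else:
            result[key] = deepcopy(value)
    return result


def effective_config(org, business_unit, team):
    """Resolve a team's config: org defaults, then BU, then team overrides."""
    return merge(merge(org, business_unit), team)


# Hypothetical example values, for illustration only.
org = {"llm": {"model": "model-a", "temperature": 0.2}, "tools": ["kubernetes"]}
business_unit = {"llm": {"temperature": 0.0}}
team = {"tools": ["kubernetes", "datadog"]}

resolved = effective_config(org, business_unit, team)
```

In this sketch the team inherits `llm.model` from the org, picks up the business unit's `temperature`, and replaces the `tools` list entirely. Whether lists should be replaced or concatenated is a design choice that a real system would need to settle.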

| Getting Started | Reference | Development |
|---|---|---|
| Quick Start | Features | Dev Guide |
| Deployment Guide | Integrations | Agent Architecture |
| Slack Setup (detailed) | Architecture | Tools Catalog |

We welcome contributions! See issues labeled good first issue to get started.

For bugs or feature requests, open an issue on GitHub.

Claude Code Plugin — Standalone SRE tools for individual developers using Claude Code CLI. Not connected to the IncidentFox platform above.

Built with ❤️ by the IncidentFox team

Similar Open Source Tools

multi-agent-shogun

multi-agent-shogun is a system that runs multiple AI coding CLI instances simultaneously, orchestrating them like a feudal Japanese army. It supports Claude Code, OpenAI Codex, GitHub Copilot, and Kimi Code. The system allows you to command your AI army with zero coordination cost, enabling parallel execution, non-blocking workflow, cross-session memory, event-driven communication, and full transparency. It also features skills discovery, phone notifications, pane border task display, shout mode, and multi-CLI support.

DeepTutor

DeepTutor is an AI-powered personalized learning assistant that offers a suite of modules for massive document knowledge Q&A, interactive learning visualization, knowledge reinforcement with practice exercise generation, deep research, and idea generation. The tool supports multi-agent collaboration, dynamic topic queues, and structured outputs for various tasks. It provides a unified system entry for activity tracking, knowledge base management, and system status monitoring. DeepTutor is designed to streamline learning and research processes by leveraging AI technologies and interactive features.

mimiclaw

MimiClaw is a pocket AI assistant that runs on a $5 chip, specifically designed for the ESP32-S3 board. It operates without Linux or Node.js, using pure C language. Users can interact with MimiClaw through Telegram, enabling it to handle various tasks and learn from local memory. The tool is energy-efficient, running on USB power 24/7. With MimiClaw, users can have a personal AI assistant on a chip the size of a thumb, making it convenient and accessible for everyday use.

VT.ai

VT.ai is a multimodal AI platform that offers dynamic conversation routing with SemanticRouter, multi-modal interactions (text/image/audio), an assistant framework with code interpretation, real-time response streaming, cross-provider model switching, and local model support with Ollama integration. It supports various AI providers such as OpenAI, Anthropic, Google Gemini, Groq, Cohere, and OpenRouter, providing a wide range of core capabilities for AI orchestration.

lihil

Lihil is a performant, productive, and professional web framework designed to make Python the mainstream programming language for web development. It is 100% test covered and strictly typed, offering fast performance, ergonomic API, and built-in solutions for common problems. Lihil is suitable for enterprise web development, delivering robust and scalable solutions with best practices in microservice architecture and related patterns. It features dependency injection, OpenAPI docs generation, error response generation, data validation, message system, testability, and strong support for AI features. Lihil is ASGI compatible and uses starlette as its ASGI toolkit, ensuring compatibility with starlette classes and middlewares. The framework follows semantic versioning and has a roadmap for future enhancements and features.

starknet-agentic

Open-source stack for giving AI agents wallets, identity, reputation, and execution rails on Starknet. `starknet-agentic` is a monorepo with Cairo smart contracts for agent wallets, identity, reputation, and validation, TypeScript packages for MCP tools, A2A integration, and payment signing, reusable skills for common Starknet agent capabilities, and examples and docs for integration. It provides contract primitives + runtime tooling in one place for integrating agents. The repo includes various layers such as Agent Frameworks / Apps, Integration + Runtime Layer, Packages / Tooling Layer, Cairo Contract Layer, and Starknet L2. It aims for portability of agent integrations without giving up Starknet strengths, with a cross-chain interop strategy and skills marketplace. The repository layout consists of directories for contracts, packages, skills, examples, docs, and website.

claudex

Claudex is an open-source, self-hosted Claude Code UI that runs entirely on your machine. It provides multiple sandboxes, allows users to use their own plans, offers a full IDE experience with VS Code in the browser, and is extensible with skills, agents, slash commands, and MCP servers. Users can run AI agents in isolated environments, view and interact with a browser via VNC, switch between multiple AI providers, automate tasks with Celery workers, and enjoy various chat features and preview capabilities. Claudex also supports marketplace plugins, secrets management, integrations like Gmail, and custom instructions. The tool is configured through providers and supports various providers like Anthropic, OpenAI, OpenRouter, and Custom. It has a tech stack consisting of React, FastAPI, Python, PostgreSQL, Celery, Redis, and more.

aichildedu

AICHILDEDU is a microservice-based AI education platform for children that integrates LLMs, image generation, and speech synthesis to provide personalized storybook creation, intelligent conversational learning, and multimedia content generation. It offers features like personalized story generation, educational quiz creation, multimedia integration, age-appropriate content, multi-language support, user management, parental controls, and asynchronous processing. The platform follows a microservice architecture with components like API Gateway, User Service, Content Service, Learning Service, and AI Services. Technologies used include Python, FastAPI, PostgreSQL, MongoDB, Redis, LangChain, OpenAI GPT models, TensorFlow, PyTorch, Transformers, MinIO, Elasticsearch, Docker, Docker Compose, and JWT-based authentication.

gigachad-grc

A comprehensive, modular, containerized Governance, Risk, and Compliance (GRC) platform built with modern technologies. Manage your entire security program from compliance tracking to risk management, third-party assessments, and external audits. The platform includes specialized modules for Compliance, Data Management, Risk Management, Third-Party Risk Management, Trust, Audit, Tools, AI & Automation, and Administration. It offers features like controls management, frameworks assessment, policies lifecycle management, vendor risk management, security questionnaires, knowledge base, audit management, awareness training, phishing simulations, AI-powered risk scoring, and MCP server integration. The tech stack includes Node.js, TypeScript, React, PostgreSQL, Keycloak, Traefik, Redis, and RustFS for storage.

mesh

MCP Mesh is an open-source control plane for MCP traffic that provides a unified layer for authentication, routing, and observability. It replaces multiple integrations with a single production endpoint, simplifying configuration management. Built for multi-tenant organizations, it offers workspace/project scoping for policies, credentials, and logs. With core capabilities like MeshContext, AccessControl, and OpenTelemetry, it ensures fine-grained RBAC, full tracing, and metrics for tools and workflows. Users can define tools with input/output validation, access control checks, audit logging, and OpenTelemetry traces. The project structure includes apps for full-stack MCP Mesh, encryption, observability, and more, with deployment options ranging from Docker to Kubernetes. The tech stack includes Bun/Node runtime, TypeScript, Hono API, React, Kysely ORM, and Better Auth for OAuth and API keys.

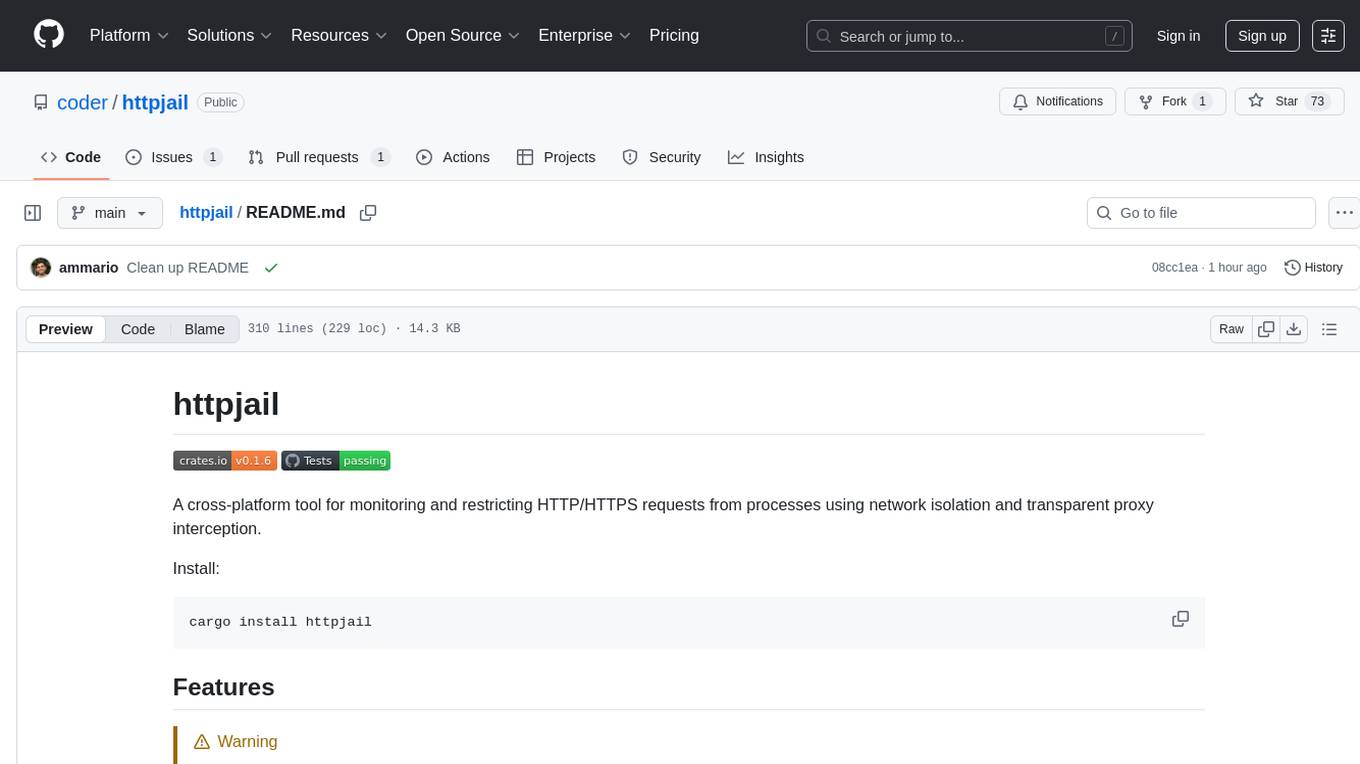

httpjail

httpjail is a cross-platform tool designed for monitoring and restricting HTTP/HTTPS requests from processes using network isolation and transparent proxy interception. It provides process-level network isolation, HTTP/HTTPS interception with TLS certificate injection, script-based and JavaScript evaluation for custom request logic, request logging, default deny behavior, and zero-configuration setup. The tool operates on Linux and macOS, creating an isolated network environment for target processes and intercepting all HTTP/HTTPS traffic through a transparent proxy enforcing user-defined rules.

Shannon

Shannon is a battle-tested infrastructure for AI agents that solves problems at scale, such as runaway costs, non-deterministic failures, and security concerns. It offers features like intelligent caching, deterministic replay of workflows, time-travel debugging, WASI sandboxing, and hot-swapping between LLM providers. Shannon allows users to ship faster with zero configuration multi-agent setup, multiple AI patterns, time-travel debugging, and hot configuration changes. It is production-ready with features like WASI sandbox, token budget control, policy engine (OPA), and multi-tenancy. Shannon helps scale without breaking by reducing costs, being provider agnostic, observable by default, and designed for horizontal scaling with Temporal workflow orchestration.

gpt-all-star

GPT-All-Star is an AI-powered code generation tool for building web applications from scratch through collaboration among a team of autonomous AI agents. The primary focus of this research project is to explore the potential of autonomous AI agents in software development. Users can organize their team, choose leaders for each step, create action plans, and work together to complete tasks. The tool supports various endpoints like OpenAI, Azure, and Anthropic, and provides functionalities for project management, code generation, and team collaboration.

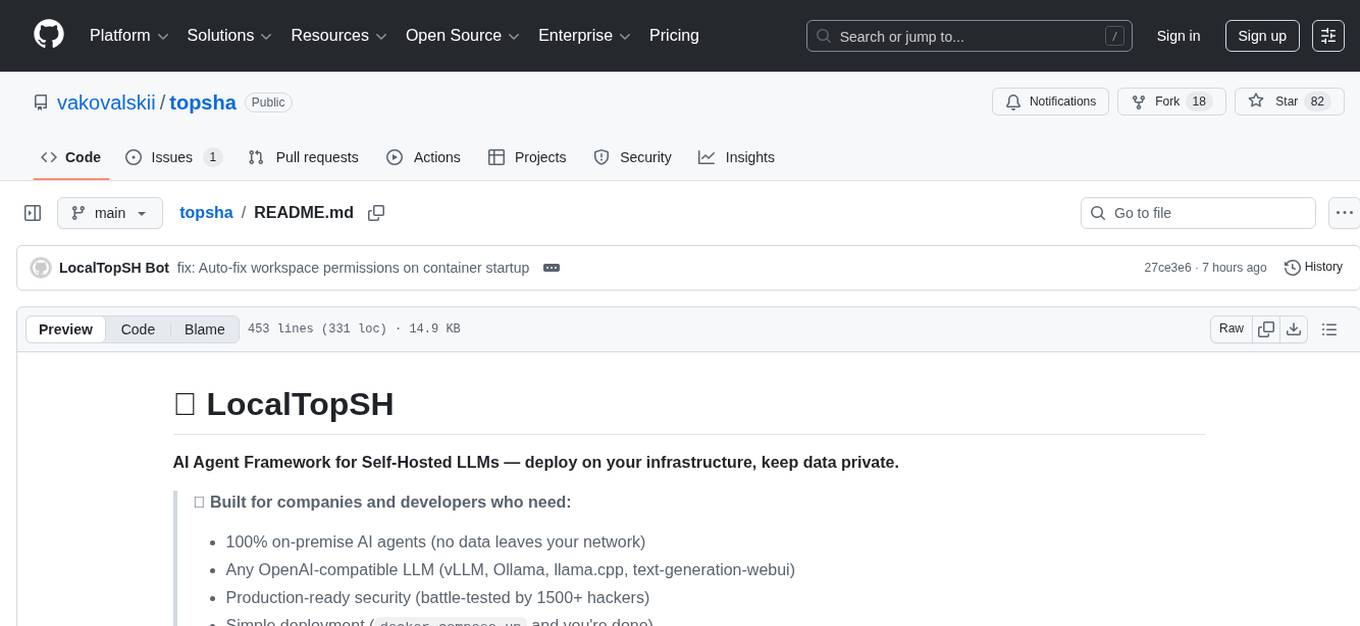

topsha

LocalTopSH is an AI Agent Framework designed for companies and developers who require 100% on-premise AI agents with data privacy. It supports various OpenAI-compatible LLM backends and offers production-ready security features. The framework allows simple deployment using Docker compose and ensures that data stays within the user's network, providing full control and compliance. With cost-effective scaling options and compatibility in regions with restrictions, LocalTopSH is a versatile solution for deploying AI agents on self-hosted infrastructure.

mcp-prompts

mcp-prompts is a Python library that provides a collection of prompts for generating creative writing ideas. It includes a variety of prompts such as story starters, character development, plot twists, and more. The library is designed to inspire writers and help them overcome writer's block by offering unique and engaging prompts to spark creativity. With mcp-prompts, users can access a wide range of writing prompts to kickstart their imagination and enhance their storytelling skills.

For similar tasks

awesome-AIOps

awesome-AIOps is a curated list of academic research and industrial materials related to Artificial Intelligence for IT Operations (AIOps). It includes resources such as competitions, white papers, blogs, tutorials, benchmarks, tools, companies, academic materials, talks, workshops, papers, and courses covering various aspects of AIOps like anomaly detection, root cause analysis, incident management, microservices, dependency tracing, and more.

awesome-LLM-AIOps

The 'awesome-LLM-AIOps' repository is a curated list of academic research and industrial materials related to Large Language Models (LLM) and Artificial Intelligence for IT Operations (AIOps). It covers various topics such as incident management, log analysis, root cause analysis, incident mitigation, and incident postmortem analysis. The repository provides a comprehensive collection of papers, projects, and tools related to the application of LLM and AI in IT operations, offering valuable insights and resources for researchers and practitioners in the field.

robusta

Robusta is a tool designed to enhance Prometheus notifications for Kubernetes environments. It offers features such as smart grouping to reduce notification spam, AI investigation for alert analysis, alert enrichment with additional data like pod logs, self-healing capabilities for defining auto-remediation rules, advanced routing options, problem detection without PromQL, change-tracking for Kubernetes resources, auto-resolve functionality, and integration with various external systems like Slack, Teams, and Jira. Users can utilize Robusta with or without Prometheus, and it can be installed alongside existing Prometheus setups or as part of an all-in-one Kubernetes observability stack.

qdrant

Qdrant is a vector similarity search engine and vector database. It is written in Rust, which makes it fast and reliable even under high load. Qdrant can be used for a variety of applications, including:

- Semantic search

- Image search

- Product recommendations

- Chatbots

- Anomaly detection

Qdrant offers a variety of features, including:

- Payload storage and filtering

- Hybrid search with sparse vectors

- Vector quantization and on-disk storage

- Distributed deployment

- Highlighted features such as query planning, payload indexes, SIMD hardware acceleration, async I/O, and write-ahead logging

Qdrant is available as a fully managed cloud service or as open-source software that can be deployed on-premises.

SynapseML

SynapseML (previously known as MMLSpark) is an open-source library that simplifies the creation of massively scalable machine learning (ML) pipelines. It provides simple, composable, and distributed APIs for various machine learning tasks such as text analytics, vision, anomaly detection, and more. Built on Apache Spark, SynapseML allows seamless integration of models into existing workflows. It supports training and evaluation on single-node, multi-node, and resizable clusters, enabling scalability without resource wastage. Compatible with Python, R, Scala, Java, and .NET, SynapseML abstracts over different data sources for easy experimentation. It requires Scala 2.12, Spark 3.4+, and Python 3.8+.

mlx-vlm

MLX-VLM is a package designed for running Vision LLMs on Mac systems using MLX. It provides a convenient way to install and utilize the package for processing large language models related to vision tasks. The tool simplifies the process of running LLMs on Mac computers, offering a seamless experience for users interested in leveraging MLX for vision-related projects.

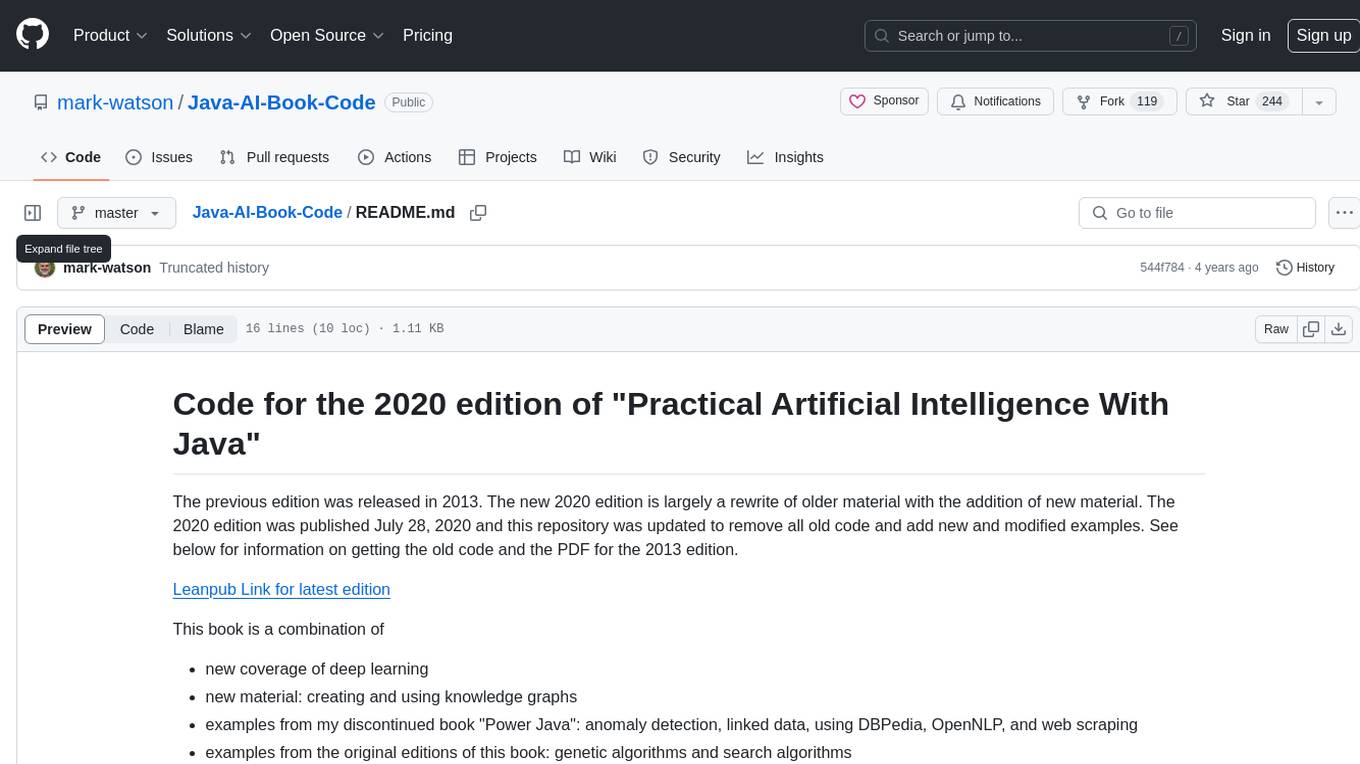

Java-AI-Book-Code

The Java-AI-Book-Code repository contains code examples for the 2020 edition of 'Practical Artificial Intelligence With Java'. It is a comprehensive update of the previous 2013 edition, featuring new content on deep learning, knowledge graphs, anomaly detection, linked data, genetic algorithms, search algorithms, and more. The repository serves as a valuable resource for Java developers interested in AI applications and provides practical implementations of various AI techniques and algorithms.

For similar jobs

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers:

- Infrastructure orchestration that supports GPUs and TPUs for training and serving workloads at scale

- Flexible integration with distributed computing and data processing frameworks

- Support for multiple teams on the same infrastructure to maximize utilization of resources

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

nvidia_gpu_exporter

Nvidia GPU exporter for Prometheus, using the `nvidia-smi` binary to gather metrics.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

openinference

OpenInference is a set of conventions and plugins that complement OpenTelemetry to enable tracing of AI applications. It provides a way to capture and analyze the performance and behavior of AI models, including their interactions with other components of the application. OpenInference is designed to be language-agnostic and can be used with any OpenTelemetry-compatible backend. It includes a set of instrumentations for popular machine learning SDKs and frameworks, making it easy to add tracing to your AI applications.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.