topsha

Local Topsha 🐧: AI agent for simple PC tasks, focused on local LLMs (GPT-OSS, Qwen, GLM)

Stars: 81

LocalTopSH is an AI agent framework for companies and developers who need 100% on-premise AI agents that keep data private. It works with any OpenAI-compatible LLM backend, ships production-ready security features, and deploys with Docker Compose, so data stays inside the user's network and the operator keeps full control for compliance. Because inference runs on your own hardware, you pay for GPUs rather than per token, and the framework also works in regions where cloud AI APIs are restricted, which makes LocalTopSH a versatile option for running AI agents on self-hosted infrastructure.

README:

AI Agent Framework for Self-Hosted LLMs — deploy on your infrastructure, keep data private.

🎯 Built for companies and developers who need:

- 100% on-premise AI agents (no data leaves your network)

- Any OpenAI-compatible LLM (vLLM, Ollama, llama.cpp, text-generation-webui)

- Production-ready security (battle-tested by 1500+ hackers)

- Simple deployment (`docker compose up` and you're done)

Unlike cloud-dependent solutions, LocalTopSH runs entirely on your infrastructure:

| Problem | Cloud Solutions | LocalTopSH |
|---|---|---|
| Data Privacy | Data sent to external APIs | ✅ Everything stays on-premise |
| Compliance | Hard to audit | ✅ Full control, easy audit |
| API Access | Need OpenAI/Anthropic account | ✅ Any OpenAI-compatible endpoint |
| Sanctions/Restrictions | Blocked in some regions | ✅ Works anywhere |
| Cost at Scale | $0.01-0.03 per 1K tokens | ✅ Only electricity costs |

| Backend | Example Models | Setup |
|---|---|---|
| vLLM | gpt-oss-120b, Qwen-72B, Llama-3-70B | `vllm serve model --api-key dummy` |
| Ollama | Llama 3, Mistral, Qwen, 100+ models | `ollama serve` |
| llama.cpp | Any GGUF model | `llama-server -m model.gguf` |
| text-generation-webui | Any HuggingFace model | Enable OpenAI API extension |
| LocalAI | Multiple backends | Docker compose included |
| LM Studio | Desktop-friendly | Built-in server mode |
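Before pointing LocalTopSH at one of these backends, it can help to confirm that the server really answers OpenAI-style requests. The sketch below assumes the backend exposes the standard `GET /models` route under its `/v1` base URL, which OpenAI-compatible servers such as vLLM and Ollama implement; `check_backend` is a hypothetical helper, not part of LocalTopSH.

```bash
#!/usr/bin/env bash
# Hypothetical helper: probe an OpenAI-compatible backend via GET {base_url}/models.
# Prints the model list JSON on success; returns non-zero if the server is
# unreachable or answers with an HTTP error.
check_backend() {
  local base_url="$1"
  local api_key="${2:-dummy}"   # many local servers accept any key
  # -f: fail on HTTP errors, -sS: silent but still report errors,
  # --max-time: give up instead of hanging on an unreachable host
  curl -fsS --max-time 5 \
    -H "Authorization: Bearer ${api_key}" \
    "${base_url%/}/models"
}
```

For example, `check_backend http://localhost:8000/v1` checks the vLLM server started with `--port 8000`, and `check_backend http://localhost:11434/v1` checks a default Ollama install. The base URL that passes this check is the value that belongs in `secrets/base_url.txt`.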

| Solution | Daily Cost | Monthly Cost |
|---|---|---|
| OpenAI GPT-4 | ~$30 | ~$900 |
| Anthropic Claude | ~$15 | ~$450 |
| Self-hosted (LocalTopSH) | Electricity only | ~$50-100 (GPU power) |
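The figures above are easier to sanity-check with the underlying arithmetic. This is a sketch under stated assumptions: a 30-day month, API pricing quoted per 1K tokens, and electricity billed per kWh. The token volume, GPU wattage, and power price are your own inputs, not numbers published by LocalTopSH.

```bash
#!/usr/bin/env bash
# API cost: tokens per day x price per 1K tokens, scaled to a 30-day month.
api_cost() {
  local tokens_per_day="$1" usd_per_1k="$2"
  awk -v t="$tokens_per_day" -v p="$usd_per_1k" \
    'BEGIN { d = t / 1000 * p; printf "daily=%.2f monthly=%.2f\n", d, d * 30 }'
}

# Self-hosted power cost: average draw in watts, running 24 h/day for 30 days.
power_cost() {
  local watts="$1" usd_per_kwh="$2"
  awk -v w="$watts" -v p="$usd_per_kwh" \
    'BEGIN { kwh = w * 24 * 30 / 1000; printf "kwh=%.1f monthly=%.2f\n", kwh, kwh * p }'
}
```

`api_cost 1000000 0.03` prints `daily=30.00 monthly=900.00`. So the GPT-4 row corresponds to about 1M tokens/day at the top of the quoted $0.01-0.03 range, or about 3M tokens/day at the bottom. `power_cost 700 0.15`, using a hypothetical 700 W average draw at $0.15/kWh, prints `kwh=504.0 monthly=75.60`, which falls inside the ~$50-100 band quoted for self-hosting.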

- ✅ Russia, Belarus, Iran — sanctions don't apply to self-hosted

- ✅ China — no Great Firewall issues

- ✅ Air-gapped networks — zero internet required

- ✅ On-premise data centers — full compliance

```bash
# Option A: vLLM (recommended for production)
vllm serve gpt-oss-120b --api-key dummy --port 8000

# Option B: Ollama (easy setup)
ollama serve  # Default port 11434

# Option C: llama.cpp (minimal resources)
llama-server -m your-model.gguf --port 8000
```

```bash
git clone https://github.com/yourrepo/LocalTopSH
cd LocalTopSH

# Create secrets
mkdir -p secrets
echo "your-telegram-token" > secrets/telegram_token.txt
echo "http://your-llm-server:8000/v1" > secrets/base_url.txt
echo "dummy" > secrets/api_key.txt  # or real key if required
echo "gpt-oss-120b" > secrets/model_name.txt
echo "your-zai-key" > secrets/zai_api_key.txt

# Set permissions for Docker
chmod 644 secrets/*.txt
```

```bash
docker compose up -d

# Check status
docker compose ps

# View logs
docker compose logs -f
```

- Telegram Bot: message your bot
- Admin Panel: http://localhost:3000 (login: admin / password from secrets/admin_password.txt)
- API: http://localhost:4000/api
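After `docker compose up -d`, the admin panel (port 3000) and the core API (port 4000) can take a moment to start listening. The sketch below waits for a port to accept TCP connections. It assumes bash, because it relies on bash's `/dev/tcp/HOST/PORT` redirection, which plain `sh` does not provide. `wait_for_port` is a hypothetical helper, and an open port only means the service is listening, not that it is fully healthy.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll HOST:PORT once per second until it accepts a TCP
# connection, or give up after TRIES attempts (default 30).
wait_for_port() {
  local host="$1" port="$2" tries="${3:-30}" i
  for (( i = 1; i <= tries; i++ )); do
    # Opening fd 3 on /dev/tcp/HOST/PORT performs a TCP connect inside a
    # subshell; it succeeds only if something is listening on that port.
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    if (( i < tries )); then
      sleep 1
    fi
  done
  echo "timed out waiting for ${host}:${port}" >&2
  return 1
}
```

For example, `wait_for_port 127.0.0.1 3000 && wait_for_port 127.0.0.1 4000` returns once both the admin panel and the API accept connections.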

```bash
# Change default admin password (REQUIRED for production!)
echo "your-secure-password" > secrets/admin_password.txt

# Optionally change admin username via environment variable:
# edit docker-compose.yml and set ADMIN_USER=your_username

# Rebuild admin container
docker compose up -d --build admin
```

⚠️ Default credentials: admin / changeme123. Change them before exposing the admin panel to a network!
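Instead of typing a password by hand, you can generate a random one. This minimal sketch uses `openssl` if it is installed and otherwise falls back to `/dev/urandom` plus `base64`. `gen_admin_password` is a hypothetical helper, and its default output path is the `secrets/admin_password.txt` file used above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a random 32-character password
# (24 random bytes, base64-encoded) to the admin password secret file.
gen_admin_password() {
  local out="${1:-secrets/admin_password.txt}" pw
  if command -v openssl >/dev/null 2>&1; then
    pw="$(openssl rand -base64 24)"
  else
    pw="$(head -c 24 /dev/urandom | base64)"
  fi
  printf '%s\n' "$pw" > "$out"
}
```

A typical sequence is `gen_admin_password && docker compose up -d --build admin`, followed by reading the new password from the file to log in. 24 bytes encode to exactly 32 base64 characters with no `=` padding.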

```text
┌────────────────────────────────────────────────────────────────────────────┐
│                            YOUR INFRASTRUCTURE                             │
├────────────────────────────────────────────────────────────────────────────┤
│                                                                            │
│ ┌───────────────┐     ┌─────────────────┐     ┌──────────────────────────┐ │
│ │   Telegram    │     │   LocalTopSH    │     │     Your LLM Backend     │ │
│ │  (optional)   │────▶│   Agent Stack   │────▶│ ──────────────────────── │ │
│ └───────────────┘     │                 │     │ vLLM / Ollama / llama.cpp│ │
│                       │  ┌───────────┐  │     │ gpt-oss-120b             │ │
│ ┌───────────────┐     │  │   core    │  │     │ Qwen-72B                 │ │
│ │  Admin Panel  │────▶│  │  (agent)  │  │     │ Llama-3-70B              │ │
│ │     :3000     │     │  └─────┬─────┘  │     │ Mistral-22B              │ │
│ └───────────────┘     │        ▼        │     │ Your fine-tuned model    │ │
│                       │  ┌───────────┐  │     └──────────────────────────┘ │
│                       │  │  sandbox  │  │                                  │
│                       │  │ (per-user)│  │     No data leaves your network! │
│                       │  └───────────┘  │                                  │
│                       └─────────────────┘                                  │
│                                                                            │
└────────────────────────────────────────────────────────────────────────────┘
```

🔥 Stress-tested by 1500+ hackers in @neuraldeepchat

Attack attempts: Token extraction, RAM exhaustion, container escapes

Result: 0 secrets leaked, 0 downtime

| Layer | Protection | Details |
|---|---|---|
| Access Control | DM Policy | admin/allowlist/pairing/public modes |
| Input Validation | Blocked patterns | 247 dangerous commands blocked |
| Injection Defense | Pattern matching | 19 prompt injection patterns |
| Sandbox Isolation | Docker per-user | 512MB RAM, 50% CPU, 100 PIDs |
| Secrets Protection | Proxy architecture | Agent never sees API keys |
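The "Blocked patterns" layer boils down to matching each command against a list of regular expressions before it reaches the sandbox. The sketch below shows the idea with four hypothetical patterns: a recursive delete of `/`, a fork bomb, reads of Docker secrets under `/run/secrets`, and a download piped into a shell. It is not LocalTopSH's actual list of 247 patterns. Regex blocklists can be bypassed by obfuscation, so they complement, rather than replace, the per-user sandbox limits in the same table.

```bash
#!/usr/bin/env bash
# Hypothetical blocklist, as POSIX extended regexes (grep -E), in this order:
#   1. rm -rf / (or rm -rf /*)
#   2. fork bomb  :(){ :|:& };:
#   3. anything touching the Docker secrets mount
#   4. curl/wget output piped into sh or bash
BLOCKED_PATTERNS=(
  '(^|[;&|[:space:]])rm[[:space:]]+-[[:alpha:]]*[rR][[:alpha:]]*[[:space:]]+/[*]?([[:space:]]|$)'
  ':[(][)][[:space:]]*[{]'
  '/run/secrets'
  '(curl|wget)[^|]*[|][[:space:]]*(ba)?sh'
)

# Returns 0 (blocked) if the command matches any pattern, 1 otherwise.
is_blocked() {
  local cmd="$1" pat
  for pat in "${BLOCKED_PATTERNS[@]}"; do
    if printf '%s\n' "$cmd" | grep -Eq -- "$pat"; then
      return 0
    fi
  done
  return 1
}
```

With these patterns, `is_blocked 'rm -rf /'` succeeds (blocked), while `is_blocked 'rm -rf ./build'` fails (allowed), because the first pattern only matches when the target is the root directory itself.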

```bash
# Run security doctor (46 checks)
python scripts/doctor.py

# Run E2E tests (10 checks)
python scripts/e2e_test.py --verbose
```

| Category | Features |
|---|---|
| System | Shell execution, file operations, code execution |
| Web | Search (Z.AI), page fetching, link extraction |
| Memory | Persistent notes, task management, chat history |
| Automation | Scheduled tasks, background jobs |
| Telegram | Send files, DMs, message management |

| Feature | Description |
|---|---|
| Skills | Anthropic-compatible skill packages |
| MCP | Model Context Protocol for external tools |
| Tools API | Dynamic tool loading and management |
| Admin Panel | Web UI for configuration and monitoring |

| Container | Port | Role |
|---|---|---|
| core | 4000 | ReAct Agent, security, sandbox orchestration |
| bot | 4001 | Telegram Bot (aiogram) |
| proxy | 3200 | Secrets isolation, LLM proxy |
| tools-api | 8100 | Tool registry, MCP, skills |
| admin | 3000 | Web admin panel (React) |
| sandbox_{id} | 5000-5999 | Per-user isolated execution |
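The per-user limits from the security table (512MB RAM, 50% CPU, 100 PIDs) map onto standard `docker run` flags. This sketch assumes "50% CPU" means half of one core (`--cpus 0.5`). The `sandbox_<id>` name follows the table above. The image name `localtopsh-sandbox:latest` and the `DRY_RUN` switch are hypothetical, and LocalTopSH's core service may configure its sandboxes differently (for example, with extra network or filesystem restrictions).

```bash
#!/usr/bin/env bash
# Hypothetical launcher for one per-user sandbox with the documented limits.
# Set DRY_RUN=1 to print the command instead of running it.
run_sandbox() {
  local user_id="$1"
  local image="${SANDBOX_IMAGE:-localtopsh-sandbox:latest}"   # assumed image name
  # --memory 512m with an equal --memory-swap: 512 MB RAM and no extra swap
  # --cpus 0.5: half of one CPU core
  # --pids-limit 100: at most 100 processes, which defuses fork bombs
  local -a cmd=(docker run -d --name "sandbox_${user_id}"
    --memory 512m --memory-swap 512m --cpus 0.5 --pids-limit 100
    "$image")
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

`DRY_RUN=1 run_sandbox 42` prints the full `docker run` command for a container named `sandbox_42` without starting anything, which is a quick way to review the limits before applying them.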

| Secret | Required | Description |
|---|---|---|
| telegram_token.txt | ✅ | Bot token from @BotFather |
| base_url.txt | ✅ | LLM API URL (e.g. http://vllm:8000/v1) |
| api_key.txt | ✅ | LLM API key (use dummy if not required) |
| model_name.txt | ✅ | Model name (e.g. gpt-oss-120b) |
| zai_api_key.txt | ✅ | Z.AI search key |
| admin_password.txt | ✅ | Admin panel password (default: changeme123) |
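A missing or empty secret file is an easy way for the stack to fail at startup. This minimal pre-flight sketch checks that every file in the table exists under `secrets/` and is non-empty. `validate_secrets` and `REQUIRED_SECRETS` are hypothetical names, and the check does not verify that the values themselves are correct.

```bash
#!/usr/bin/env bash
# Secret files listed in the table above (all marked as required).
REQUIRED_SECRETS=(
  telegram_token.txt base_url.txt api_key.txt
  model_name.txt zai_api_key.txt admin_password.txt
)

# Hypothetical pre-flight check: report every missing or empty secret file.
# Returns 0 if all are present and non-empty, 1 otherwise.
validate_secrets() {
  local dir="${1:-secrets}" f missing=0
  for f in "${REQUIRED_SECRETS[@]}"; do
    # -s is true only if the file exists and is larger than zero bytes
    if [[ ! -s "${dir}/${f}" ]]; then
      echo "missing or empty: ${dir}/${f}" >&2
      missing=$((missing + 1))
    fi
  done
  (( missing == 0 ))
}
```

Running `validate_secrets && docker compose up -d` starts the stack only when all six files are in place.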

vLLM:

```bash
echo "http://vllm-server:8000/v1" > secrets/base_url.txt
echo "dummy" > secrets/api_key.txt
echo "gpt-oss-120b" > secrets/model_name.txt
```

Ollama:

```bash
echo "http://ollama:11434/v1" > secrets/base_url.txt
echo "ollama" > secrets/api_key.txt
echo "llama3:70b" > secrets/model_name.txt
```

Any other OpenAI-compatible server:

```bash
echo "http://your-server:8000/v1" > secrets/base_url.txt
echo "your-api-key" > secrets/api_key.txt
echo "your-model-name" > secrets/model_name.txt
```

Web panel at :3000 for managing the system (protected by Basic Auth):

Default credentials:

- Username: admin
- Password: from secrets/admin_password.txt (default: changeme123)

```bash
# Change password
echo "your-secure-password" > secrets/admin_password.txt
docker compose up -d --build admin

# Change username (optional): in docker-compose.yml, set the environment variable
# ADMIN_USER=your_username
```

| Page | Features |
|---|---|
| Dashboard | Stats, active users, sandboxes |
| Services | Start/stop containers |
| Config | Agent settings, rate limits |
| Security | Blocked patterns management |
| Tools | Enable/disable tools |
| MCP | Manage MCP servers |
| Skills | Install/manage skills |
| Users | Sessions, chat history |
| Logs | Real-time service logs |

Admin panel is bound to 127.0.0.1:3000 for security. For remote access:

```bash
# On your local machine
ssh -L 3000:localhost:3000 user@your-server

# Then open http://localhost:3000 in a browser
```

| Feature | LocalTopSH | OpenClaw | LangChain |
|---|---|---|---|
| Self-hosted LLM | ✅ Native | ✅ Yes | |
| Security hardening | ✅ 247 patterns | Basic | ❌ None |
| Sandbox isolation | ✅ Docker per-user | ✅ Docker | ❌ None |
| Admin panel | ✅ React UI | ✅ React UI | ❌ None |
| Telegram integration | ✅ Native | ✅ Multi-channel | ❌ None |
| Setup complexity | Simple | Complex | Code-only |
| OAuth/subscription abuse | ❌ No | ✅ Yes | ❌ No |
| 100% on-premise | ✅ Yes | ✅ Yes | |

- Internal AI assistant with full data privacy

- Code review bot that never leaks proprietary code

- Document analysis without sending files to cloud

- Experiment with open models (Llama, Mistral, Qwen)

- Fine-tuned model deployment with agent capabilities

- Reproducible AI workflows in isolated environments

- Russia/Belarus/Iran — no API access restrictions

- China — no Great Firewall issues

- Air-gapped networks — military, government, finance

- High-volume workloads — pay for GPU, not per-token

- Predictable costs — no surprise API bills

- Scale without limits — your hardware, your rules

We believe in building real infrastructure, not hacks.

| Approach | LocalTopSH ✅ | Subscription Abuse ❌ |
|---|---|---|
| LLM Access | Your own models/keys | Stolen browser sessions |
| Cost Model | Pay for hardware | Violate ToS, risk bans |
| Reliability | 100% uptime (your infra) | Breaks when UI changes |
| Security | Full control | Cookies stored who-knows-where |
| Ethics | Transparent & legal | Gray area at best |

License: MIT

- Architecture: ARCHITECTURE.md — detailed system design

- Security: SECURITY.md — security model and patterns

- Telegram: @neuraldeepchat


Alternative AI tools for topsha

Similar Open Source Tools


ClawRouter

ClawRouter is a tool designed to route every request to the cheapest model that can handle it, offering a wallet-based system with 30+ models available without the need for API keys. It provides 100% local routing with 14-dimension weighted scoring, zero external calls for routing decisions, and supports various models from providers like OpenAI, Anthropic, Google, DeepSeek, xAI, and Moonshot. Users can pay per request with USDC on Base, benefiting from an open-source, MIT-licensed, fully inspectable routing logic. The tool is optimized for agent swarm and multi-step workflows, offering cost-efficient solutions for parallel web research, multi-agent orchestration, and long-running automation tasks.

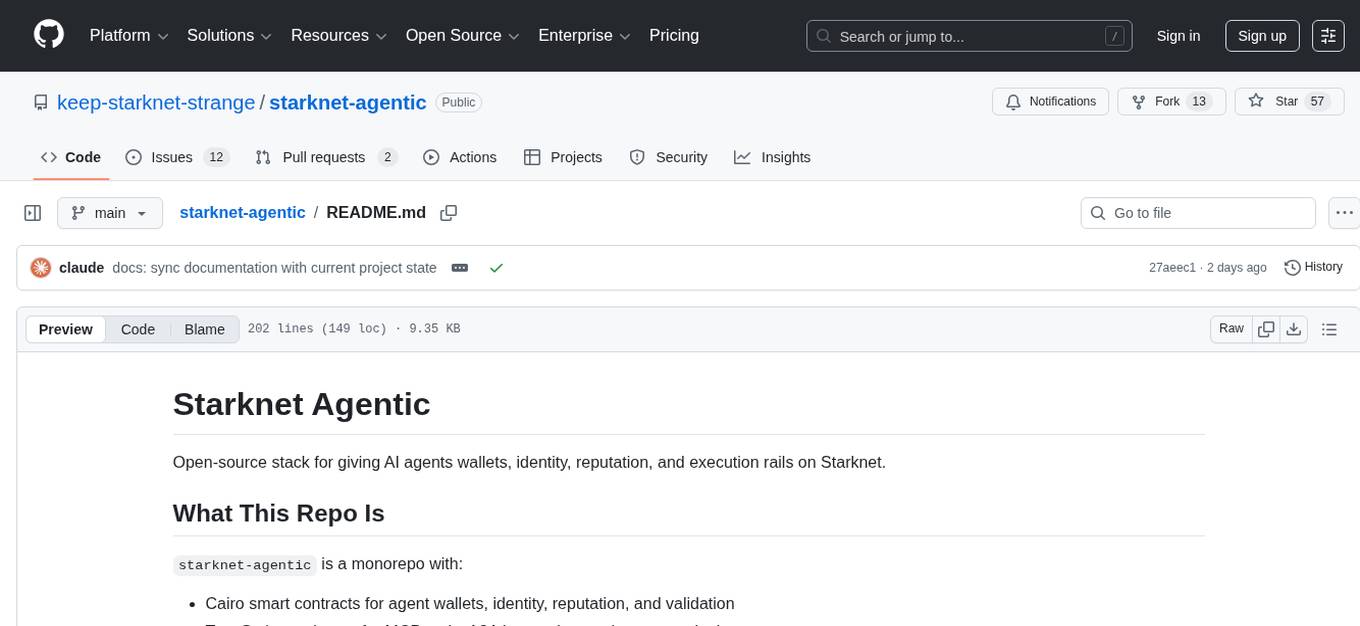

starknet-agentic

Open-source stack for giving AI agents wallets, identity, reputation, and execution rails on Starknet. `starknet-agentic` is a monorepo with Cairo smart contracts for agent wallets, identity, reputation, and validation, TypeScript packages for MCP tools, A2A integration, and payment signing, reusable skills for common Starknet agent capabilities, and examples and docs for integration. It provides contract primitives + runtime tooling in one place for integrating agents. The repo includes various layers such as Agent Frameworks / Apps, Integration + Runtime Layer, Packages / Tooling Layer, Cairo Contract Layer, and Starknet L2. It aims for portability of agent integrations without giving up Starknet strengths, with a cross-chain interop strategy and skills marketplace. The repository layout consists of directories for contracts, packages, skills, examples, docs, and website.

openakita

OpenAkita is a self-evolving AI Agent framework that autonomously learns new skills, performs daily self-checks and repairs, accumulates experience from task execution, and persists until the task is done. It auto-generates skills, installs dependencies, learns from mistakes, and remembers preferences. The framework is standards-based, multi-platform, and provides a Setup Center GUI for intuitive installation and configuration. It features self-learning and evolution mechanisms, a Ralph Wiggum Mode for persistent execution, multi-LLM endpoints, multi-platform IM support, desktop automation, multi-agent architecture, scheduled tasks, identity and memory management, a tool system, and a guided wizard for setup.

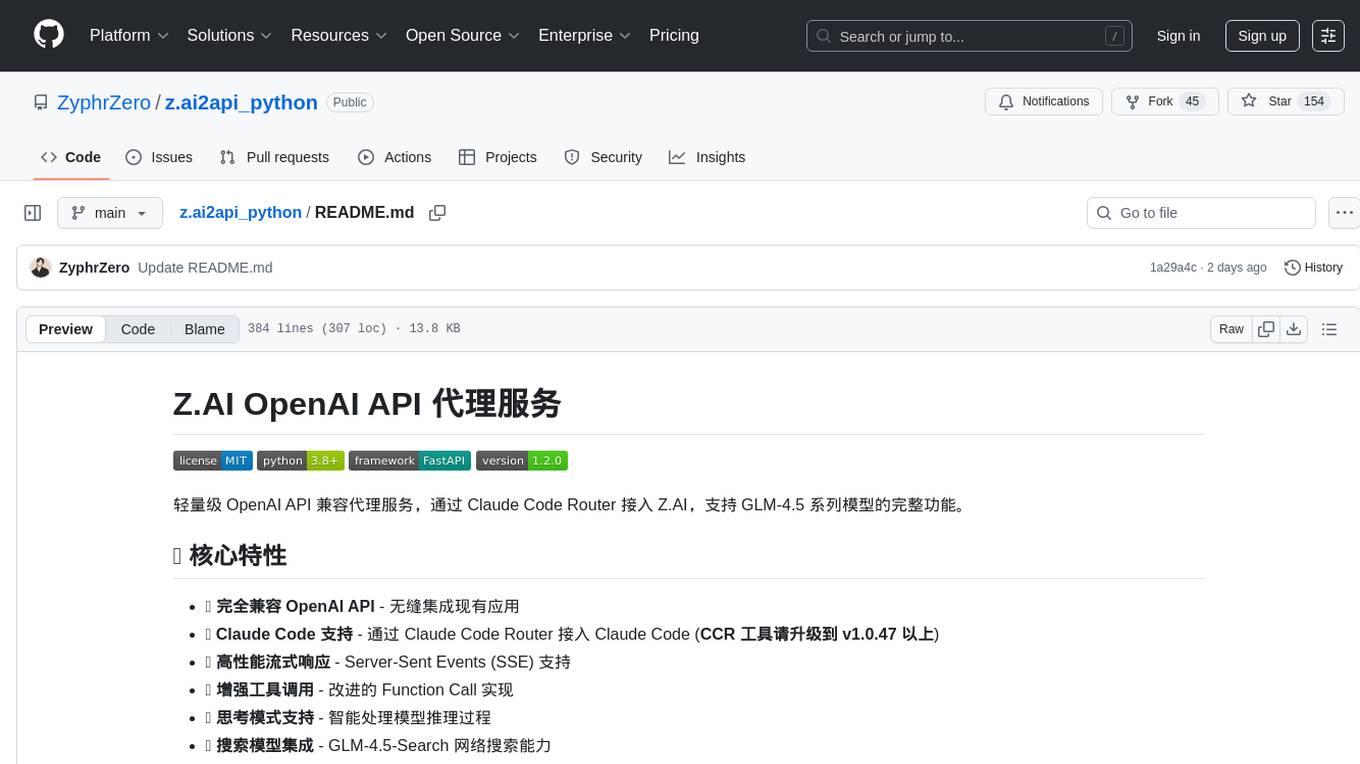

z.ai2api_python

Z.AI2API Python is a lightweight OpenAI API proxy service that integrates seamlessly with existing applications. It supports the full functionality of GLM-4.5 series models and features high-performance streaming responses, enhanced tool invocation, support for thinking mode, integration with search models, Docker deployment, session isolation for privacy protection, flexible configuration via environment variables, and intelligent upstream model routing.

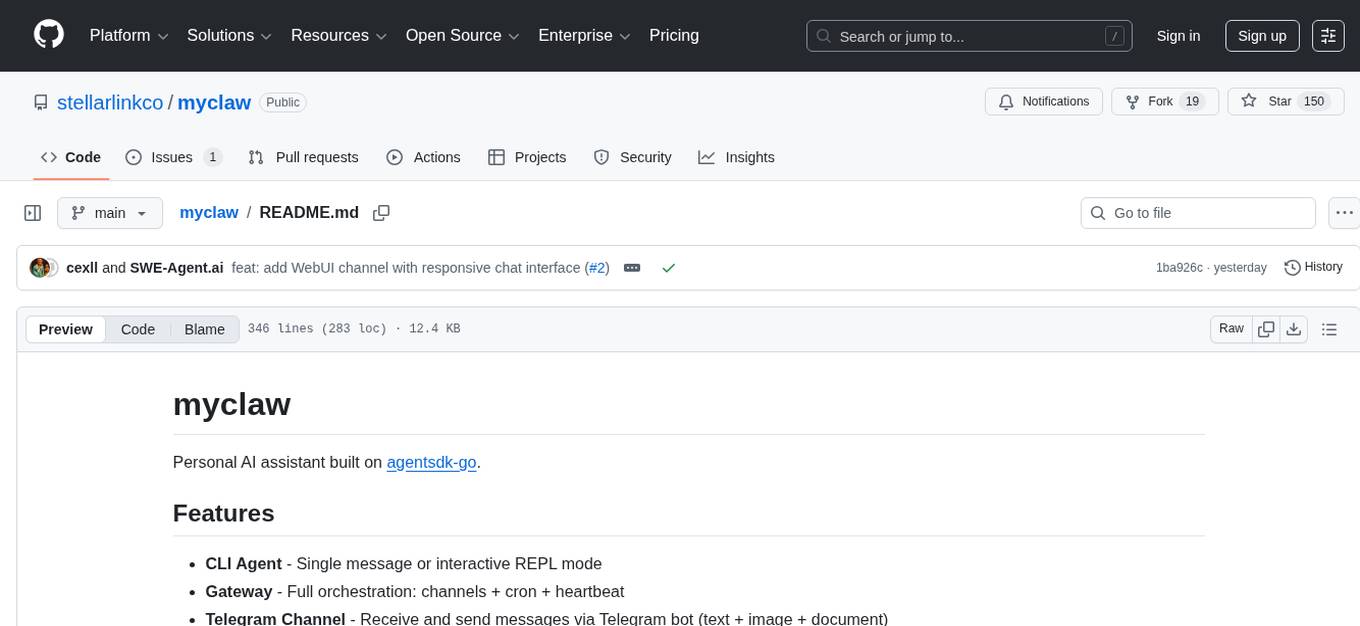

myclaw

myclaw is a personal AI assistant built on agentsdk-go that offers a CLI agent for single message or interactive REPL mode, full orchestration with channels, cron, and heartbeat, support for various messaging channels like Telegram, Feishu, WeCom, WhatsApp, and a web UI, multi-provider support for Anthropic and OpenAI models, image recognition and document processing, scheduled tasks with JSON persistence, long-term and daily memory storage, custom skill loading, and more. It provides a comprehensive solution for interacting with AI models and managing tasks efficiently.

vibium

Vibium is a browser automation infrastructure designed for AI agents, providing a single binary that manages browser lifecycle, WebDriver BiDi protocol, and an MCP server. It offers zero configuration, AI-native capabilities, and is lightweight with no runtime dependencies. It is suitable for AI agents, test automation, and any tasks requiring browser interaction.

ai-toolbox

AI Toolbox is a cross-platform desktop application designed to efficiently manage various AI programming assistant configurations. It supports Windows, macOS, and Linux. The tool provides visual management of OpenCode, Oh-My-OpenCode, Slim plugin configurations, Claude Code API supplier configurations, Codex CLI configurations, MCP server management, Skills management, WSL synchronization, AI supplier management, system tray for quick configuration switching, data backup, theme switching, multilingual support, and automatic update checks.

boxlite

BoxLite is an embedded, lightweight micro-VM runtime designed for AI agents running OCI containers with hardware-level isolation. It is built for high concurrency with no daemon required, offering features like lightweight VMs, high concurrency, hardware isolation, embeddability, and OCI compatibility. Users can spin up 'Boxes' to run containers for AI agent sandboxes and multi-tenant code execution scenarios where Docker alone is insufficient and full VM infrastructure is too heavy. BoxLite supports Python, Node.js, and Rust with quick start guides for each, along with features like CPU/memory limits, storage options, networking capabilities, security layers, and image registry configuration. The tool provides SDKs for Python and Node.js, with Go support coming soon. It offers detailed documentation, examples, and architecture insights for users to understand how BoxLite works under the hood.

AgentX

AgentX is a next-generation open-source AI agent development framework and runtime platform. It provides an event-driven runtime with a simple framework and minimal UI. The platform is ready-to-use and offers features like multi-user support, session persistence, real-time streaming, and Docker readiness. Users can build AI Agent applications with event-driven architecture using TypeScript for server-side (Node.js) and client-side (Browser/React) development. AgentX also includes comprehensive documentation, core concepts, guides, API references, and various packages for different functionalities. The architecture follows an event-driven design with layered components for server-side and client-side interactions.

AI-CloudOps

AI+CloudOps is a cloud-native operations management platform designed for enterprises. It aims to integrate artificial intelligence technology with cloud-native practices to significantly improve the efficiency and level of operations work. The platform offers features such as AIOps for monitoring data analysis and alerts, multi-dimensional permission management, visual CMDB for resource management, efficient ticketing system, deep integration with Prometheus for real-time monitoring, and unified Kubernetes management for cluster optimization.

gin-vue-admin

Gin-vue-admin is a full-stack development platform based on Vue and Gin, integrating features like JWT authentication, dynamic routing, dynamic menus, Casbin authorization, form generator, code generator, etc. It provides various example files to help users focus more on business development. The project offers detailed documentation, video tutorials for setup and deployment, and a community for support and contributions. Users need a certain level of knowledge in Golang and Vue to work with this project. It is recommended to follow the Apache2.0 license if using the project for commercial purposes.

AiToEarn

AiToEarn is a one-click publishing tool for multiple self-media platforms such as Douyin, Xiaohongshu, Video Number, and Kuaishou. It lets users publish videos with ease, follow popular content across the web, and view rankings of trending articles on Xiaohongshu. The tool also provides daily and weekly rankings of popular content on Xiaohongshu, Douyin, Video Number, and Kuaishou. In-progress features include expanding publishing parameters to support short-video e-commerce, adding an AI tool ranking list, enabling automatic AI comments, and AI comment search.

private-llm-qa-bot

This is a production-grade knowledge Q&A chatbot implementation based on AWS services and the LangChain framework, with optimizations at various stages. It supports flexible configuration and plugging of vector models and large language models. The front and back ends are separated, making it easy to integrate with IM tools (such as Feishu).

Lim-Code

LimCode is a powerful VS Code AI programming assistant that supports multiple AI models, intelligent tool invocation, and modular architecture. It features support for various AI channels, a smart tool system for code manipulation, MCP protocol support for external tool extension, intelligent context management, session management, and more. Users can install LimCode from the plugin store or via VSIX, or build it from the source code. The tool offers a rich set of features for AI programming and code manipulation within the VS Code environment.

For similar tasks

ai2apps

AI2Apps is a visual IDE for building LLM-based AI agent applications, enabling developers to efficiently create AI agents through drag-and-drop, with features like design-to-development for rapid prototyping, direct packaging of agents into apps, powerful debugging capabilities, enhanced user interaction, efficient team collaboration, flexible deployment, multilingual support, simplified product maintenance, and extensibility through plugins.

ApeRAG

ApeRAG is a production-ready platform for Retrieval-Augmented Generation (RAG) that combines Graph RAG, vector search, and full-text search with advanced AI agents. It is ideal for building Knowledge Graphs, Context Engineering, and deploying intelligent AI agents for autonomous search and reasoning across knowledge bases. The platform offers features like advanced index types, intelligent AI agents with MCP support, enhanced Graph RAG with entity normalization, multimodal processing, hybrid retrieval engine, MinerU integration for document parsing, production-grade deployment with Kubernetes, enterprise management features, MCP integration, and developer-friendly tools for customization and contribution.


non-ai-licenses

This repository provides templates for software and digital work licenses that restrict usage in AI training datasets or AI technologies. It includes various license styles such as Apache, BSD, MIT, UPL, ISC, CC0, and MPL-2.0.

fraim

Fraim is an AI-powered toolkit designed for security engineers to enhance their workflows by leveraging AI capabilities. It offers solutions to find, detect, fix, and flag vulnerabilities throughout the development lifecycle. The toolkit includes features like Risk Flagger for identifying risks in code changes, Code Security Analysis for context-aware vulnerability detection, and Infrastructure as Code Analysis for spotting misconfigurations in cloud environments. Fraim can be run as a CLI tool or integrated into GitHub Actions, making it a versatile solution for security teams and organizations looking to enhance their security practices with AI technology.

MCPSpy

MCPSpy is a command-line tool leveraging eBPF technology to monitor Model Context Protocol (MCP) communication at the kernel level. It provides real-time visibility into JSON-RPC 2.0 messages exchanged between MCP clients and servers, supporting Stdio and HTTP transports. MCPSpy offers security analysis, debugging, performance monitoring, compliance assurance, and learning opportunities for understanding MCP communications. The tool consists of eBPF programs, an eBPF loader, an HTTP session manager, an MCP protocol parser, and output handlers for console display and JSONL output.

llm-price-compass

LLM price compass is an open-source tool for comparing inference costs on different GPUs across various cloud providers. It collects benchmark data to help users select the right GPU, cloud, and provider for their models. The project aims to provide insights into fixed per-token costs from different providers, aiding in decision-making for model deployment.

sre

SmythOS is an operating system designed for building, deploying, and managing intelligent AI agents at scale. It provides a unified SDK and resource abstraction layer for various AI services, making it easy to scale and flexible. With an agent-first design, developer-friendly SDK, modular architecture, and enterprise security features, SmythOS offers a robust foundation for AI workloads. The system is built with a philosophy inspired by traditional operating system kernels, ensuring autonomy, control, and security for AI agents. SmythOS aims to make shipping production-ready AI agents accessible and open for everyone in the coming Internet of Agents era.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our overview of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual anchors (VTubers), in an NVIDIA GPU version. It supports knowledge-base chat through fastgpt, with a complete LLM solution built from [fastgpt] + [one-api] + [Xinference]. Features include replying to bilibili live-stream danmaku (bullet comments) and greeting viewers who join the stream; speech synthesis with Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS; expression control through VTube Studio; painting with stable-diffusion-webui, output to an OBS live room; NSFW image filtering with public-NSFW-y-distinguish; web and image search through DuckDuckGo (requires a VPN/proxy) and Baidu image search (no VPN/proxy needed); an AI reply chat box and a playlist (HTML plug-ins); AI singing with Auto-Convert-Music; dancing, expression video playback, head-patting and gift-smashing actions; automatic dancing when singing starts and swaying during chat and singing; multi-scene switching, background music switching, and automatic day/night scene switching; and open-ended singing and painting, with the AI judging the content automatically.