ClawRouter

Smart LLM router — save 78% on inference costs. 30+ models, one wallet, x402 micropayments.

Stars: 2023

ClawRouter is a tool designed to route every request to the cheapest model that can handle it, offering a wallet-based system with 30+ models available without the need for API keys. It provides 100% local routing with 15-dimension weighted scoring, zero external calls for routing decisions, and supports various models from providers like OpenAI, Anthropic, Google, DeepSeek, xAI, and Moonshot. Users can pay per request with USDC on Base, benefiting from an open-source, MIT-licensed, fully inspectable routing logic. The tool is optimized for agent swarm and multi-step workflows, offering cost-efficient solutions for parallel web research, multi-agent orchestration, and long-running automation tasks.

README:

Route every request to the cheapest model that can handle it. One wallet, 30+ models, zero API keys.

Docs · Models · Configuration · Features · Troubleshooting · Telegram · X

"What is 2+2?" → DeepSeek $0.27/M saved 99%

"Summarize this article" → GPT-4o-mini $0.60/M saved 99%

"Build a React component" → Claude Sonnet $15.00/M best balance

"Prove this theorem" → DeepSeek-R $0.42/M reasoning

"Run 50 parallel searches"→ Kimi K2.5 $2.40/M agentic swarm

- 100% local routing — 15-dimension weighted scoring runs on your machine in <1ms

- Zero external calls — no API calls for routing decisions, ever

- 30+ models — OpenAI, Anthropic, Google, DeepSeek, xAI, Moonshot through one wallet

- x402 micropayments — pay per request with USDC on Base, no API keys

- Open source — MIT licensed, fully inspectable routing logic

# 1. Install with smart routing enabled by default

curl -fsSL https://raw.githubusercontent.com/BlockRunAI/ClawRouter/main/scripts/reinstall.sh | bash

# 2. Fund your wallet with USDC on Base (address printed on install)

# $5 is enough for thousands of requests

# 3. Restart OpenClaw gateway

openclaw gateway restart

Done! Smart routing (blockrun/auto) is now your default model.

- Use /model blockrun/auto in any conversation to switch on the fly

- Free tier? Use /model free, which routes to gpt-oss-120b at $0

- Model aliases: /model sonnet, /model grok, /model deepseek, /model kimi

- Want a specific model? Use blockrun/openai/gpt-4o or blockrun/anthropic/claude-sonnet-4

Already have a funded wallet?

export BLOCKRUN_WALLET_KEY=0x...

The flow:

- Wallet auto-generated on Base (L2), saved securely at ~/.openclaw/blockrun/wallet.key

- Fund with $1 USDC, enough for hundreds of requests

- Request any model: "help me call Grok to check @hosseeb's opinion on AI agents"

- ClawRouter routes it: spawns a Grok sub-agent via xai/grok-3, pays per-request

No API keys. No accounts. Just fund and go.

100% local, <1ms, zero API calls.

Request → Weighted Scorer (15 dimensions)

│

├── High confidence → Pick model from tier → Done

│

└── Low confidence → Default to MEDIUM tier → Done

No external classifier calls. Ambiguous queries default to the MEDIUM tier (DeepSeek/GPT-4o-mini) — fast, cheap, and good enough for most tasks.

Deep dive: 15-dimension scoring weights | Architecture

| Tier | Primary Model | Cost/M | Savings vs Opus |

|---|---|---|---|

| SIMPLE | gemini-2.5-flash | $0.60 | 99.2% |

| MEDIUM | grok-code-fast-1 | $1.50 | 98.0% |

| COMPLEX | gemini-2.5-pro | $10.00 | 86.7% |

| REASONING | grok-4-fast-reasoning | $0.50 | 99.3% |

Special rule: 2+ reasoning markers → REASONING at 0.97 confidence.
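The decision flow above (weighted score, confidence check, MEDIUM fallback, and the reasoning-marker rule) can be sketched roughly as follows. This is a minimal illustration, not ClawRouter's implementation: the three dimensions, their weights, the tier boundaries, the marker list, and the 0.7 confidence threshold are all assumptions made up for the example. Only the "2+ markers gives REASONING at 0.97" rule and the MEDIUM fallback come from the text.

```typescript
type Tier = "SIMPLE" | "MEDIUM" | "COMPLEX" | "REASONING";

interface Decision {
  tier: Tier;
  confidence: number;
}

// ASSUMPTION: three toy dimensions standing in for the real 15.
// Each scorer maps a prompt to a value in [0, 1]; weights sum to 1.
const DIMENSIONS: { weight: number; score: (p: string) => number }[] = [
  { weight: 0.5, score: (p) => Math.min(p.length / 2000, 1) },
  { weight: 0.3, score: (p) => (/\b(function|class|import)\b/.test(p) ? 1 : 0) },
  { weight: 0.2, score: (p) => (/\b(design|architecture|refactor)\b/i.test(p) ? 1 : 0) },
];

// ASSUMPTION: hypothetical reasoning markers.
const REASONING_MARKERS = [/\bprove\b/i, /\btheorem\b/i, /\bstep by step\b/i, /\bderive\b/i];

// ASSUMPTION: tier boundaries on the weighted score and the confidence cutoff.
const SIMPLE_MAX = 0.3;
const MEDIUM_MAX = 0.6;
const MIN_CONFIDENCE = 0.7;

function classify(prompt: string): Decision {
  // Special rule from the README: 2+ reasoning markers -> REASONING at 0.97.
  const markers = REASONING_MARKERS.filter((re) => re.test(prompt)).length;
  if (markers >= 2) return { tier: "REASONING", confidence: 0.97 };

  const score = DIMENSIONS.reduce((sum, d) => sum + d.weight * d.score(prompt), 0);

  // Confidence grows with the distance from the nearest tier boundary
  // (a sigmoid, so a score sitting exactly on a boundary yields 0.5).
  const distance = Math.min(Math.abs(score - SIMPLE_MAX), Math.abs(score - MEDIUM_MAX));
  const confidence = 1 / (1 + Math.exp(-20 * distance));

  // Low confidence: default to MEDIUM instead of calling an external classifier.
  if (confidence < MIN_CONFIDENCE) return { tier: "MEDIUM", confidence };

  const tier: Tier = score < SIMPLE_MAX ? "SIMPLE" : score < MEDIUM_MAX ? "MEDIUM" : "COMPLEX";
  return { tier, confidence };
}

console.log(classify("What is 2+2?").tier);
console.log(classify("Prove this theorem step by step"));
```

Everything runs synchronously on local data, which is what makes a sub-millisecond, zero-network routing decision plausible.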

ClawRouter v0.5+ includes intelligent features that work automatically:

- Agentic auto-detect: routes multi-step tasks to Kimi K2.5

- Tool detection: auto-switches when tools array present

- Context-aware: filters models that can't handle your context size

- Model aliases: /model free, /model sonnet, /model grok

- Session persistence: pins model for multi-turn conversations

- Free tier fallback: keeps working when wallet is empty

Full details: docs/features.md
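As an illustration of the context-aware feature, the sketch below drops models whose context window is too small and then picks the cheapest survivor. The function name and data shape are hypothetical. The prices and context sizes are copied from this README's model table, with "K" read as thousands of tokens.

```typescript
interface ModelInfo {
  id: string;
  outputPerM: number;    // USD per million output tokens
  contextTokens: number; // maximum context window
}

// A small subset of the README's pricing table.
const MODELS: ModelInfo[] = [
  { id: "deepseek-chat", outputPerM: 0.28, contextTokens: 128_000 },
  { id: "gemini-2.5-flash", outputPerM: 0.6, contextTokens: 1_000_000 },
  { id: "kimi-k2.5", outputPerM: 2.4, contextTokens: 262_000 },
  { id: "claude-sonnet-4", outputPerM: 15.0, contextTokens: 200_000 },
];

// Hypothetical helper: filter by context size, then choose the lowest output price.
function cheapestThatFits(
  estimatedTokens: number,
  models: ModelInfo[] = MODELS,
): ModelInfo | undefined {
  return models
    .filter((m) => m.contextTokens >= estimatedTokens)
    .sort((a, b) => a.outputPerM - b.outputPerM)[0];
}

console.log(cheapestThatFits(50_000)?.id);
console.log(cheapestThatFits(300_000)?.id);
```

A request that fits no model returns `undefined`, leaving the caller to decide whether to truncate the context or fail.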

| Tier | % of Traffic | Cost/M |

|---|---|---|

| SIMPLE | ~45% | $0.27 |

| MEDIUM | ~35% | $0.60 |

| COMPLEX | ~15% | $15.00 |

| REASONING | ~5% | $10.00 |

| Blended average | | $3.17/M |

Compared to $75/M for Claude Opus = 96% savings on a typical workload.
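The blended figure is a traffic-weighted average of the per-tier costs. The short calculation below reproduces it from the rounded shares in the table. With those rounded inputs the result is about $3.08/M, slightly below the quoted $3.17/M, presumably because the published traffic shares are approximate. Either figure rounds to roughly 96% savings against $75/M.

```typescript
// Traffic shares and costs copied from the table above (shares are approximate).
const tiers = [
  { tier: "SIMPLE", share: 0.45, costPerM: 0.27 },
  { tier: "MEDIUM", share: 0.35, costPerM: 0.6 },
  { tier: "COMPLEX", share: 0.15, costPerM: 15.0 },
  { tier: "REASONING", share: 0.05, costPerM: 10.0 },
];

const OPUS_COST_PER_M = 75; // baseline quoted in the README

// Weighted average: sum of share * cost over all tiers.
const blended = tiers.reduce((sum, t) => sum + t.share * t.costPerM, 0);
const savings = 1 - blended / OPUS_COST_PER_M;

console.log(`blended: $${blended.toFixed(2)}/M`);          // blended: $3.08/M
console.log(`savings: ${(savings * 100).toFixed(1)}%`);    // savings: 95.9%
```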

30+ models across 6 providers, one wallet:

| Model | Input $/M | Output $/M | Context | Reasoning |

|---|---|---|---|---|

| OpenAI | ||||

| gpt-5.2 | $1.75 | $14.00 | 400K | * |

| gpt-4o | $2.50 | $10.00 | 128K | |

| gpt-4o-mini | $0.15 | $0.60 | 128K | |

| gpt-oss-120b | $0 | $0 | 128K | |

| o3 | $2.00 | $8.00 | 200K | * |

| o3-mini | $1.10 | $4.40 | 128K | * |

| Anthropic | ||||

| claude-opus-4.5 | $5.00 | $25.00 | 200K | * |

| claude-sonnet-4 | $3.00 | $15.00 | 200K | * |

| claude-haiku-4.5 | $1.00 | $5.00 | 200K | |

| Google | ||||

| gemini-2.5-pro | $1.25 | $10.00 | 1M | * |

| gemini-2.5-flash | $0.15 | $0.60 | 1M | |

| DeepSeek | ||||

| deepseek-chat | $0.14 | $0.28 | 128K | |

| deepseek-reasoner | $0.55 | $2.19 | 128K | * |

| xAI | ||||

| grok-3 | $3.00 | $15.00 | 131K | * |

| grok-3-mini | $0.30 | $0.50 | 131K | |

| grok-4-fast-reasoning | $0.20 | $0.50 | 131K | * |

| grok-4-fast | $0.20 | $0.50 | 131K | |

| grok-code-fast-1 | $0.20 | $1.50 | 131K | |

| Moonshot | ||||

| kimi-k2.5 | $0.50 | $2.40 | 262K | * |

Free tier: gpt-oss-120b costs nothing and serves as automatic fallback when the wallet is empty.

Full list: src/models.ts

Kimi K2.5 from Moonshot AI is optimized for agent swarm and multi-step workflows:

- Agent Swarm — Coordinates up to 100 parallel agents, 4.5x faster execution

- Extended Tool Chains — Stable across 200-300 sequential tool calls without drift

- Vision-to-Code — Generates production React from UI mockups and videos

- Cost Efficient — 76% cheaper than Claude Opus on agentic benchmarks

Best for: parallel web research, multi-agent orchestration, long-running automation tasks.

No account. No API key. Payment IS authentication via x402.

Request → 402 (price: $0.003) → wallet signs USDC → retry → response

USDC stays in your wallet until spent — non-custodial. Price is visible in the 402 header before signing.
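The request, 402, sign, retry loop above could look roughly like the sketch below. It is a simplified illustration under loud assumptions: the header names `x-price-usd` and `x-payment`, the `sign` callback, and the price cap are placeholders invented for this example and are not taken from the x402 specification or from ClawRouter's code. The HTTP client is injected so the flow can be exercised without a network.

```typescript
// Minimal shapes so the sketch does not depend on a specific HTTP client.
interface HttpResponse {
  status: number;
  headers: { get(name: string): string | null };
  text(): Promise<string>;
}
type HttpPost = (
  url: string,
  body: string,
  headers?: Record<string, string>,
) => Promise<HttpResponse>;

// ASSUMPTION: placeholder header names, not the real x402 wire format.
const PRICE_HEADER = "x-price-usd";
const PAYMENT_HEADER = "x-payment";

async function requestWithPayment(
  post: HttpPost,
  url: string,
  body: string,
  sign: (priceUsd: number) => Promise<string>, // hypothetical wallet signer
  maxPriceUsd: number,
): Promise<string> {
  // 1. Send the request without payment.
  const first = await post(url, body);
  if (first.status !== 402) return first.text();

  // 2. Read the quoted price before signing anything.
  const raw = first.headers.get(PRICE_HEADER);
  const price = raw === null ? NaN : Number(raw);
  if (!Number.isFinite(price) || price > maxPriceUsd) {
    throw new Error(`refusing to pay: price ${raw} is missing or above cap ${maxPriceUsd}`);
  }

  // 3. Sign locally (funds never leave the wallet before this point) and retry.
  const payment = await sign(price);
  const second = await post(url, body, { [PAYMENT_HEADER]: payment });
  if (second.status !== 200) throw new Error(`payment rejected: HTTP ${second.status}`);
  return second.text();
}
```

Checking the quoted price against a local cap before signing is the step that the visible-price property makes possible.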

Fund your wallet:

- Coinbase: Buy USDC, send to Base

- Bridge: Move USDC from any chain to Base

- CEX: Withdraw USDC to Base network

ClawRouter auto-generates and saves a wallet at ~/.openclaw/blockrun/wallet.key.

# Check wallet status

/wallet

# Use your own wallet

export BLOCKRUN_WALLET_KEY=0x...

Full reference: Wallet configuration | Backup & recovery

┌─────────────────────────────────────────────────────────────┐

│ Your Application │

└─────────────────────────────────────────────────────────────┘

│

▼

┌─────────────────────────────────────────────────────────────┐

│ ClawRouter (localhost) │

│ ┌─────────────────┐ ┌─────────────────┐ ┌─────────────┐ │

│ │ Weighted Scorer │→ │ Model Selector │→ │ x402 Signer │ │

│ │ (15 dimensions)│ │ (cheapest tier) │ │ (USDC) │ │

│ └─────────────────┘ └─────────────────┘ └─────────────┘ │

└─────────────────────────────────────────────────────────────┘

│

▼

┌─────────────────────────────────────────────────────────────┐

│ BlockRun API │

│ → OpenAI | Anthropic | Google | DeepSeek | xAI | Moonshot│

└─────────────────────────────────────────────────────────────┘

Routing is client-side — open source and inspectable.

Deep dive: docs/architecture.md — request flow, payment system, optimizations

For basic usage, no configuration needed. For advanced options:

| Setting | Default | Description |

|---|---|---|

| CLAWROUTER_DISABLED | false | Disable smart routing |

| BLOCKRUN_PROXY_PORT | 8402 | Proxy port |

| BLOCKRUN_WALLET_KEY | auto | Wallet private key |

Full reference: docs/configuration.md
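For readers wiring these settings into their own tooling, here is one way the three environment variables and their documented defaults might be read. The `loadConfig` function, the `RouterConfig` shape, and the rule that only the string "true" disables routing are assumptions for illustration. ClawRouter's actual parsing may differ, so treat docs/configuration.md as authoritative.

```typescript
interface RouterConfig {
  disabled: boolean;
  proxyPort: number;
  walletKey: string | undefined; // undefined stands for the "auto" default
}

// Hypothetical loader: applies the defaults from the table above.
function loadConfig(env: Record<string, string | undefined>): RouterConfig {
  const proxyPort = Number(env.BLOCKRUN_PROXY_PORT ?? "8402");
  if (!Number.isInteger(proxyPort) || proxyPort < 1 || proxyPort > 65535) {
    throw new Error(`invalid BLOCKRUN_PROXY_PORT: ${env.BLOCKRUN_PROXY_PORT}`);
  }
  return {
    // ASSUMPTION: only the literal string "true" disables smart routing.
    disabled: env.CLAWROUTER_DISABLED === "true",
    proxyPort,
    walletKey: env.BLOCKRUN_WALLET_KEY,
  };
}

console.log(loadConfig({}));
console.log(loadConfig({ CLAWROUTER_DISABLED: "true", BLOCKRUN_PROXY_PORT: "9000" }));
```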

Use ClawRouter directly in your code:

import { startProxy, route } from "@blockrun/clawrouter";

// Start proxy server

const proxy = await startProxy({ walletKey: "0x..." });

// Or use router directly (no proxy)

const decision = route("Prove sqrt(2) is irrational", ...);

Full examples: docs/configuration.md#programmatic-usage

- SSE heartbeat: Sends headers + heartbeat immediately, preventing upstream timeouts

- Response dedup: SHA-256 hash → 30s cache, prevents double-charge on retries

- Payment pre-auth: Caches 402 params, pre-signs USDC, skips 402 round trip (~200ms saved)
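The response dedup item above (SHA-256 hash, 30s cache) can be sketched as a small in-memory cache keyed by a hash of the request body. This is a minimal illustration assuming the key is the hash of the raw body string. The class and method names are hypothetical, and the real implementation may hash different fields or store full responses differently.

```typescript
import { createHash } from "node:crypto";

const TTL_MS = 30_000; // 30s window, as stated in the README

class DedupCache {
  private entries = new Map<string, { response: string; expiresAt: number }>();

  // Key = hex SHA-256 of the request body (ASSUMPTION: body only).
  static key(body: string): string {
    return createHash("sha256").update(body).digest("hex");
  }

  // Returns the cached response for an identical request, if still fresh.
  get(body: string, now: number = Date.now()): string | undefined {
    const k = DedupCache.key(body);
    const hit = this.entries.get(k);
    if (!hit) return undefined;
    if (hit.expiresAt <= now) {
      this.entries.delete(k); // expired: drop it and treat as a miss
      return undefined;
    }
    return hit.response;
  }

  set(body: string, response: string, now: number = Date.now()): void {
    this.entries.set(DedupCache.key(body), { response, expiresAt: now + TTL_MS });
  }
}

const cache = new DedupCache();
cache.set('{"prompt":"hi"}', "hello");
console.log(cache.get('{"prompt":"hi"}'));  // hello
console.log(cache.get('{"prompt":"bye"}')); // undefined
```

A retry that arrives within the window is served from the cache, so no second payment is signed for the same request.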

Track your savings with /stats in any OpenClaw conversation.

Full details: docs/features.md#cost-tracking-with-stats

OpenRouter and LiteLLM are built for developers. ClawRouter is built for agents.

| | OpenRouter / LiteLLM | ClawRouter |

|---|---|---|

| Setup | Human creates account | Agent generates wallet |

| Auth | API key (shared secret) | Wallet signature (cryptographic) |

| Payment | Prepaid balance (custodial) | Per-request (non-custodial) |

| Routing | Proprietary / closed | Open source, client-side |

Agents shouldn't need a human to paste API keys. They should generate a wallet, receive funds, and pay per request — programmatically.

Quick checklist:

# Check version (should be 0.5.7+)

cat ~/.openclaw/extensions/clawrouter/package.json | grep version

# Check proxy running

curl http://localhost:8402/health

Full guide: docs/troubleshooting.md

git clone https://github.com/BlockRunAI/ClawRouter.git

cd ClawRouter

npm install

npm run build

npm run typecheck

# End-to-end tests (requires funded wallet)

BLOCKRUN_WALLET_KEY=0x... npx tsx test-e2e.ts

- [x] Smart routing: 15-dimension weighted scoring, 4-tier model selection

- [x] x402 payments: per-request USDC micropayments, non-custodial

- [x] Response dedup: prevents double-charge on retries

- [x] Payment pre-auth: skips 402 round trip

- [x] SSE heartbeat: prevents upstream timeouts

- [x] Agentic auto-detect: auto-switch to agentic models for multi-step tasks

- [x] Tool detection: auto-switch to agentic mode when tools array present

- [x] Context-aware routing: filter out models that can't handle context size

- [x] Session persistence: pin model for multi-turn conversations

- [x] Cost tracking: /stats command with savings dashboard

- [x] Model aliases: /model free, /model sonnet, /model grok, etc.

- [x] Free tier: gpt-oss-120b for $0 when wallet is empty

- [ ] Cascade routing: try cheap model first, escalate on low quality

- [ ] Spend controls: daily/monthly budgets

- [ ] Remote analytics: cost tracking at blockrun.ai

MIT

BlockRun — Pay-per-request AI infrastructure

If ClawRouter saves you money, consider starring the repo.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ClawRouter

Similar Open Source Tools

For similar tasks

ClawRouter

ClawRouter is a tool designed to route every request to the cheapest model that can handle it, offering a wallet-based system with 30+ models available without the need for API keys. It provides 100% local routing with 14-dimension weighted scoring, zero external calls for routing decisions, and supports various models from providers like OpenAI, Anthropic, Google, DeepSeek, xAI, and Moonshot. Users can pay per request with USDC on Base, benefiting from an open-source, MIT-licensed, fully inspectable routing logic. The tool is optimized for agent swarm and multi-step workflows, offering cost-efficient solutions for parallel web research, multi-agent orchestration, and long-running automation tasks.

agentkit

AgentKit is a framework developed by Coinbase Developer Platform for enabling AI agents to take actions onchain. It is designed to be framework-agnostic and wallet-agnostic, allowing users to integrate it with any AI framework and any wallet. The tool is actively being developed and encourages community contributions. AgentKit provides support for various protocols, frameworks, wallets, and networks, making it versatile for blockchain transactions and API integrations using natural language inputs.

semantic-router

Semantic Router is a superfast decision-making layer for your LLMs and agents. Rather than waiting for slow LLM generations to make tool-use decisions, we use the magic of semantic vector space to make those decisions — _routing_ our requests using _semantic_ meaning.

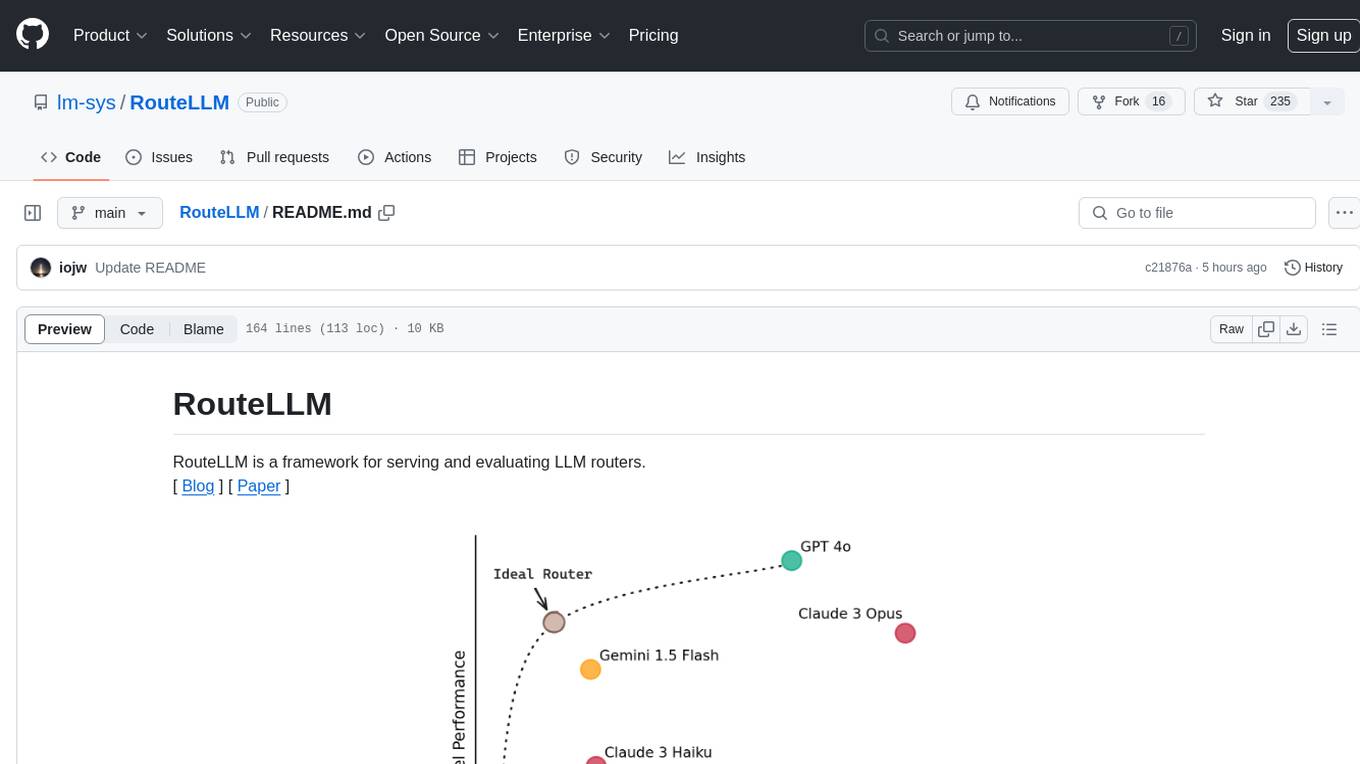

RouteLLM

RouteLLM is a framework for serving and evaluating LLM routers. It allows users to launch an OpenAI-compatible API that routes requests to the best model based on cost thresholds. Trained routers are provided to reduce costs while maintaining performance. Users can easily extend the framework, compare router performance, and calibrate cost thresholds. RouteLLM supports multiple routing strategies and benchmarks, offering a lightweight server and evaluation framework. It enables users to evaluate routers on benchmarks, calibrate thresholds, and modify model pairs. Contributions for adding new routers and benchmarks are welcome.

DeepAI

DeepAI is a proxy server that enhances the interaction experience of large language models (LLMs) by integrating the 'thinking chain' process. It acts as an intermediary layer, receiving standard OpenAI API compatible requests, using independent 'thinking services' to generate reasoning processes, and then forwarding the enhanced requests to the LLM backend of your choice. This ensures that responses are not only generated by the LLM but also based on pre-inference analysis, resulting in more insightful and coherent answers. DeepAI supports seamless integration with applications designed for the OpenAI API, providing endpoints for '/v1/chat/completions' and '/v1/models', making it easy to integrate into existing applications. It offers features such as reasoning chain enhancement, flexible backend support, API key routing, weighted random selection, proxy support, comprehensive logging, and graceful shutdown.

Toolify

Toolify is a middleware proxy that empowers Large Language Models (LLMs) and OpenAI API interfaces by enabling function calling capabilities. It acts as an intermediary between applications and LLM APIs, injecting prompts and parsing tool calls from the model's response. Key features include universal function calling, multiple function calls support, flexible initiation, compatibility with

tingly-box

Tingly Box is a tool that helps in deciding which model to call, compressing context, and routing requests efficiently. It offers secure, reliable, and customizable functional extensions. With features like unified API, smart routing, context compression, auto API translation, blazing fast performance, flexible authentication, visual control panel, and client-side usage stats, Tingly Box provides a comprehensive solution for managing AI models and tokens. It supports integration with various IDEs, CLI tools, SDKs, and AI applications, making it versatile and easy to use. The tool also allows seamless integration with OAuth providers like Claude Code, enabling users to utilize existing quotas in OpenAI-compatible tools. Tingly Box aims to simplify AI model management and usage by providing a single endpoint for multiple providers with minimal configuration, promoting seamless integration with SDKs and CLI tools.

parallax

Parallax is a fully decentralized inference engine developed by Gradient. It allows users to build their own AI cluster for model inference across distributed nodes with varying configurations and physical locations. Core features include hosting local LLM on personal devices, cross-platform support, pipeline parallel model sharding, paged KV cache management, continuous batching for Mac, dynamic request scheduling, and routing for high performance. The backend architecture includes P2P communication powered by Lattica, GPU backend powered by SGLang and vLLM, and MAC backend powered by MLX LM.

For similar jobs

NanoLLM

NanoLLM is a tool designed for optimized local inference for Large Language Models (LLMs) using HuggingFace-like APIs. It supports quantization, vision/language models, multimodal agents, speech, vector DB, and RAG. The tool aims to provide efficient and effective processing for LLMs on local devices, enhancing performance and usability for various AI applications.

mslearn-ai-fundamentals

This repository contains materials for the Microsoft Learn AI Fundamentals module. It covers the basics of artificial intelligence, machine learning, and data science. The content includes hands-on labs, interactive learning modules, and assessments to help learners understand key concepts and techniques in AI. Whether you are new to AI or looking to expand your knowledge, this module provides a comprehensive introduction to the fundamentals of AI.

awesome-ai-tools

Awesome AI Tools is a curated list of popular tools and resources for artificial intelligence enthusiasts. It includes a wide range of tools such as machine learning libraries, deep learning frameworks, data visualization tools, and natural language processing resources. Whether you are a beginner or an experienced AI practitioner, this repository aims to provide you with a comprehensive collection of tools to enhance your AI projects and research. Explore the list to discover new tools, stay updated with the latest advancements in AI technology, and find the right resources to support your AI endeavors.

go2coding.github.io

The go2coding.github.io repository is a collection of resources for AI enthusiasts, providing information on AI products, open-source projects, AI learning websites, and AI learning frameworks. It aims to help users stay updated on industry trends, learn from community projects, access learning resources, and understand and choose AI frameworks. The repository also includes instructions for local and external deployment of the project as a static website, with details on domain registration, hosting services, uploading static web pages, configuring domain resolution, and a visual guide to the AI tool navigation website. Additionally, it offers a platform for AI knowledge exchange through a QQ group and promotes AI tools through a WeChat public account.

AI-Notes

AI-Notes is a repository dedicated to practical applications of artificial intelligence and deep learning. It covers concepts such as data mining, machine learning, natural language processing, and AI. The repository contains Jupyter Notebook examples for hands-on learning and experimentation. It explores the development stages of AI, from narrow artificial intelligence to general artificial intelligence and superintelligence. The content delves into machine learning algorithms, deep learning techniques, and the impact of AI on various industries like autonomous driving and healthcare. The repository aims to provide a comprehensive understanding of AI technologies and their real-world applications.

promptpanel

Prompt Panel is a tool designed to accelerate the adoption of AI agents by providing a platform where users can run large language models across any inference provider, create custom agent plugins, and use their own data safely. The tool allows users to break free from walled-gardens and have full control over their models, conversations, and logic. With Prompt Panel, users can pair their data with any language model, online or offline, and customize the system to meet their unique business needs without any restrictions.

ai-demos

The 'ai-demos' repository is a collection of example code from presentations focusing on building with AI and LLMs. It serves as a resource for developers looking to explore practical applications of artificial intelligence in their projects. The code snippets showcase various techniques and approaches to leverage AI technologies effectively. The repository aims to inspire and educate developers on integrating AI solutions into their applications.

ai_summer

AI Summer is a repository focused on providing workshops and resources for developing foundational skills in generative AI models and transformer models. The repository offers practical applications for inferencing and training, with a specific emphasis on understanding and utilizing advanced AI chat models like BingGPT. Participants are encouraged to engage in interactive programming environments, decide on projects to work on, and actively participate in discussions and breakout rooms. The workshops cover topics such as generative AI models, retrieval-augmented generation, building AI solutions, and fine-tuning models. The goal is to equip individuals with the necessary skills to work with AI technologies effectively and securely, both locally and in the cloud.