aioimaplib

Python asyncio IMAP4rev1 client library

Stars: 141

aioimaplib is a Python library inspired by imaplib and imaplib2, aiming to port imaplib with asyncio for asynchronous benefits. It provides functionalities to interact with IMAP servers using asyncio, including checking mailbox, waiting for new messages, handling IDLE command, threading, IMAP command concurrency, logging configuration, and authentication with OAuth2. The library is tested with various IMAP servers like dovecot, Gmail, Outlook, Yahoo, etc. Developers are encouraged to contribute by improving, bug fixing, testing with other IMAP servers, and providing feedback. The library supports most IMAP4rev1 commands from RFC3501 and plans to implement additional commands like 'STARTTLS', 'AUTHENTICATE', 'COMPRESS', 'SETACL', 'DELETEACL', 'GETACL', 'MYRIGHTS', 'LISTRIGHTS', 'GETQUOTA', 'GETQUOTAROOT', 'SETQUOTA', 'SORT', 'THREAD', 'ID', 'NAMESPACE', 'CATENATE', and tests with other servers.

README:

.. _imaplib2: https://sourceforge.net/projects/imaplib2/
.. _imaplib: https://docs.python.org/3/library/imaplib.html
.. _asyncio: https://docs.python.org/3/library/asyncio.html

.. image:: https://github.com/bamthomas/aioimaplib/workflows/tests/badge.svg
   :alt: Build status
   :target: https://github.com/bamthomas/aioimaplib/actions/

.. image:: https://coveralls.io/repos/github/bamthomas/aioimaplib/badge.svg
   :target: https://coveralls.io/github/bamthomas/aioimaplib

This library is inspired by imaplib_ and imaplib2_ from Piers Lauder, Nicolas Sebrecht and Sebastian Spaeth. Some utility functions are taken from imaplib/imaplib2, thanks to them.

Thank you to all contributors for your time and interest 🙏 👉

The aim is to port imaplib to asyncio_, to benefit from its sleep-or-treat model.

It is tested against a matrix of Python 3.9, 3.10 and 3.11, but the library itself may run with other Python versions.

.. code-block:: python

    import asyncio

    from aioimaplib import aioimaplib


    async def check_mailbox(host, user, password):
        imap_client = aioimaplib.IMAP4_SSL(host=host)
        # Wait for the server greeting before sending any command.
        await imap_client.wait_hello_from_server()

        await imap_client.login(user, password)

        res, data = await imap_client.select()
        print('there are %s messages in INBOX' % data[0])

        # Logging out is what closes the connection properly.
        await imap_client.logout()


    if __name__ == '__main__':
        loop = asyncio.get_event_loop()
        loop.run_until_complete(check_mailbox('my.imap.server', 'user', 'pass'))

Beware that IMAP4.close() is an IMAP command that closes the selected mailbox, moving the session from the SELECTED state to the AUTH state. It does not close the TCP connection. The proper way to close the TCP connection is to log out.
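The difference between closing a mailbox and closing the connection can be pictured with a small state model. This is only an illustrative sketch: the ``State`` enum, the ``TRANSITIONS`` table and both helper functions are hypothetical names, loosely following the session states of RFC 3501, and they are not aioimaplib's internal implementation.

```python
from enum import Enum


class State(Enum):
    NONAUTH = "NONAUTH"
    AUTH = "AUTH"
    SELECTED = "SELECTED"
    LOGOUT = "LOGOUT"


# Hypothetical transition table for illustration; not aioimaplib's internals.
TRANSITIONS = {
    ("login", State.NONAUTH): State.AUTH,
    ("select", State.AUTH): State.SELECTED,
    ("select", State.SELECTED): State.SELECTED,
    ("close", State.SELECTED): State.AUTH,
    ("logout", State.NONAUTH): State.LOGOUT,
    ("logout", State.AUTH): State.LOGOUT,
    ("logout", State.SELECTED): State.LOGOUT,
}


def next_state(state, command):
    """Return the state reached by running `command` in `state`."""
    try:
        return TRANSITIONS[(command, state)]
    except KeyError:
        raise ValueError(f"{command!r} is not valid in state {state.name}")


def connection_open(state):
    """In this model only LOGOUT ends the session and the TCP connection."""
    return state is not State.LOGOUT
```

In this model, running ``close`` from SELECTED lands in AUTH with the connection still open; only ``logout`` reaches the terminal state.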

.. _RFC2177: https://tools.ietf.org/html/rfc2177

RFC2177_ (IDLE) is implemented, so the client can wait for new mail without consuming CPU. Server responses are pushed to an async queue and can be read in real time. To leave IDLE mode, the client must send a "DONE" command to the server.

.. code-block:: python

    import asyncio

    from aioimaplib import aioimaplib
    from aioimaplib.aioimaplib import STOP_WAIT_SERVER_PUSH


    async def wait_for_new_message(host, user, password):
        imap_client = aioimaplib.IMAP4_SSL(host=host)
        await imap_client.wait_hello_from_server()

        await imap_client.login(user, password)
        await imap_client.select()

        idle = await imap_client.idle_start(timeout=10)
        while imap_client.has_pending_idle():
            msg = await imap_client.wait_server_push()
            print(msg)
            if msg == STOP_WAIT_SERVER_PUSH:
                # Send DONE to leave IDLE mode, then wait for the IDLE command to finish.
                imap_client.idle_done()
                await asyncio.wait_for(idle, 1)

        await imap_client.logout()


    if __name__ == '__main__':
        loop = asyncio.get_event_loop()
        loop.run_until_complete(wait_for_new_message('my.imap.server', 'user', 'pass'))

Or in a more event-based style (the IDLE command is closed after each message from the server):

.. code-block:: python

    async def idle_loop(host, user, password):
        imap_client = aioimaplib.IMAP4_SSL(host=host, timeout=30)
        await imap_client.wait_hello_from_server()

        await imap_client.login(user, password)
        await imap_client.select()

        while True:
            print((await imap_client.uid('fetch', '1:*', 'FLAGS')))

            idle = await imap_client.idle_start(timeout=60)
            print((await imap_client.wait_server_push()))

            imap_client.idle_done()
            await asyncio.wait_for(idle, 30)
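The queue-based delivery of server pushes can be tried without a server. The following is a minimal stand-alone sketch using only ``asyncio.Queue``: ``fake_server``, ``read_pushes`` and the ``STOP`` sentinel are hypothetical names standing in for the server, the reading loop and the stop marker, and none of them is part of aioimaplib.

```python
import asyncio

# Hypothetical sentinel standing in for a "stop waiting" marker.
STOP = object()


async def fake_server(queue):
    """Push two untagged responses, then the sentinel."""
    for line in (b"1 EXISTS", b"1 RECENT"):
        await queue.put(line)
    await queue.put(STOP)


async def read_pushes(queue):
    """Collect pushed responses until the sentinel arrives."""
    received = []
    while True:
        msg = await queue.get()
        if msg is STOP:
            return received
        received.append(msg)


async def main():
    queue = asyncio.Queue()
    producer = asyncio.create_task(fake_server(queue))
    received = await read_pushes(queue)
    await producer
    return received
```

The reader suspends on ``queue.get()`` while nothing arrives, which is why waiting costs no CPU; ``asyncio.run(main())`` returns the two pushed lines in order.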

.. _asyncio.Event: https://docs.python.org/3.4/library/asyncio-sync.html#event
.. _asyncio.Condition: https://docs.python.org/3.4/library/asyncio-sync.html#condition
.. _supervisor: http://supervisord.org/

The IMAP4ClientProtocol class is not thread safe: it uses asyncio.Event_ and asyncio.Condition_, which are not thread safe, and state changes for pending commands are not locked.

It is possible to use threads but each IMAP4ClientProtocol instance should run in the same thread:

.. image:: images/thread_imap_protocol.png

Each colored rectangle is a piece of code from an IMAP4ClientProtocol instance, executed by the thread's asyncio loop until it reaches a yield and waits on I/O.

For example, it is possible to launch 4 single-threaded mail-fetcher processes on a 4-core server with supervisor_, and use a distribution function such as len(email) % (process_num) to share a list of mail accounts between the 4 processes.
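A distribution function of this kind can be sketched in a few lines. The helper names ``assigned_process`` and ``accounts_for`` are hypothetical; only the ``len(email) % process_count`` rule comes from the text above.

```python
def assigned_process(email, process_count):
    """Index of the process responsible for this account."""
    return len(email) % process_count


def accounts_for(process_index, emails, process_count):
    """Accounts that the process with this index should fetch."""
    return [
        email
        for email in emails
        if assigned_process(email, process_count) == process_index
    ]
```

Each process would call ``accounts_for`` with its own index, so every account is handled by exactly one process. How even the split is depends on the distribution of address lengths, so a stable hash of the address may be a better choice for some account lists.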

The IMAP protocol allows some commands to run in parallel. Four rules are implemented to keep responses consistent:

- if a sync command is running, the following requests (sync or async) must wait

- if an async command is running, the same async commands (or commands with the same untagged response type) must wait

- async commands can be executed in parallel

- a sync command must wait for pending async commands to finish
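The four rules above can be read as a single predicate that decides whether a new command may start given the commands still pending. This is a sketch of the rules as stated, not the library's scheduler: the ``Command`` dataclass, its fields and ``can_start`` are hypothetical, and which real IMAP commands count as sync or async is left as an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Command:
    name: str
    is_async: bool
    # Hypothetical field: the untagged response type the command produces.
    untagged_response: str = ""


def can_start(new, pending):
    """Return True if `new` may be sent while `pending` commands are running."""
    for running in pending:
        # Rule 1: a running sync command blocks every following request.
        if not running.is_async:
            return False
        # Rule 4: a sync command waits for pending async commands to finish.
        if not new.is_async:
            return False
        # Rule 2: the same async command must wait...
        if running.name == new.name:
            return False
        # ...and so must a command with the same untagged response type.
        if new.untagged_response and running.untagged_response == new.untagged_response:
            return False
    # Rule 3: otherwise async commands can run in parallel.
    return True
```

For instance, with two async commands that produce different untagged responses, the second may start while the first is pending, whereas a sync command has to wait until the pending list is empty.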

.. _howto: https://docs.python.org/3.4/howto/logging.html#configuring-logging-for-a-library

As said in the logging howto_, the logger is defined with:

.. code-block:: python

    logger = logging.getLogger(__name__)

Here ``__name__`` is 'aioimaplib.aioimaplib'. You can set the logger parameters either through the Python API:

.. code-block:: python

    import logging

    aioimaplib_logger = logging.getLogger('aioimaplib.aioimaplib')
    sh = logging.StreamHandler()
    sh.setLevel(logging.DEBUG)
    sh.setFormatter(logging.Formatter("%(asctime)s %(levelname)s [%(module)s:%(lineno)d] %(message)s"))
    aioimaplib_logger.addHandler(sh)

Or by loading a config file (for example with logging.config.dictConfig(yaml.safe_load(file))) that contains this YAML fragment:

.. code-block:: yaml

    loggers:
    ...
      aioimaplib.aioimaplib:
        level: DEBUG
        handlers: [syslog]
        propagate: no
    ...
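For readers who prefer to avoid a YAML dependency, the same logger settings can be passed to ``logging.config.dictConfig`` as a plain dictionary. This is a sketch under assumptions: the ``console`` handler and the ``plain`` formatter are hypothetical names, and a stream handler stands in for the ``syslog`` handler, whose definition is elided in the fragment above.

```python
import logging
import logging.config

LOGGING = {
    "version": 1,
    "disable_existing_loggers": False,
    "formatters": {
        "plain": {
            "format": "%(asctime)s %(levelname)s [%(module)s:%(lineno)d] %(message)s",
        },
    },
    "handlers": {
        # Assumption: a stream handler replaces the elided `syslog` handler.
        "console": {
            "class": "logging.StreamHandler",
            "level": "DEBUG",
            "formatter": "plain",
        },
    },
    "loggers": {
        "aioimaplib.aioimaplib": {
            "level": "DEBUG",
            "handlers": ["console"],
            "propagate": False,
        },
    },
}

logging.config.dictConfig(LOGGING)
logger = logging.getLogger("aioimaplib.aioimaplib")
```

Setting ``propagate`` to ``False`` keeps the library's debug output from also reaching the root logger's handlers.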

Since 01/01/23, Microsoft Outlook can only be accessed with OAuth2. You need to register your client before it can be used with OAuth. Find more `here <https://learn.microsoft.com/en-us/exchange/client-developer/legacy-protocols/how-to-authenticate-an-imap-pop-smtp-application-by-using-oauth>`_.

This might also work with Google Mail, but it has not been tested there.
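On the wire, OAuth2 for IMAP uses the SASL XOAUTH2 mechanism, whose initial response is a base64-encoded string built from the user name and an access token. The sketch below shows only that encoding step, as documented by Microsoft and Google. ``xoauth2_string`` is a hypothetical helper, not an aioimaplib function, and obtaining the access token is assumed to happen elsewhere.

```python
import base64


def xoauth2_string(user, access_token):
    """Build the base64 SASL XOAUTH2 initial client response."""
    # Fields are separated by Ctrl-A (0x01) and the string ends with two of them.
    raw = f"user={user}\x01auth=Bearer {access_token}\x01\x01"
    return base64.b64encode(raw.encode("utf-8")).decode("ascii")
```

The resulting string is what a client sends as the argument of ``AUTHENTICATE XOAUTH2``.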

The library has been tested with:

- dovecot 2.2.13 on debian Jessie

- gmail with imap and SSL

- outlook with SSL

- yahoo with SSL

- free.fr with SSL

- orange.fr with SSL

- mailden.net with SSL

.. _poetry: https://python-poetry.org/

Developers are welcome! If you want to improve it, fix bugs, test it with other IMAP servers or give feedback, thank you.

We use poetry_ for building the library. Just run:

.. code-block:: bash

    poetry install
    poetry run pytest
    # or you can create a poetry shell
    poetry install
    poetry shell
    pytest

To add an imaplib or imaplib2 command you can:

- add the function to the testing imapserver with a new imaplib or imaplib2 server test, i.e. test_imapserver_imaplib.py or test_imapserver_imaplib2.py respectively;

- then add the function to aioimaplib, writing almost the same test as above but the async way, in test_aioimaplib.py.

- PREAUTH

.. _rfc3501: https://tools.ietf.org/html/rfc3501
.. _rfc4978: https://tools.ietf.org/html/rfc4978
.. _rfc4314: https://tools.ietf.org/html/rfc4314
.. _rfc2087: https://tools.ietf.org/html/rfc2087
.. _rfc5256: https://tools.ietf.org/html/rfc5256
.. _rfc2971: https://tools.ietf.org/html/rfc2971
.. _rfc2342: https://tools.ietf.org/html/rfc2342
.. _rfc4469: https://tools.ietf.org/html/rfc4469

Still to do:

- 23/25 IMAP4rev1 commands are implemented from the main rfc3501_. 'STARTTLS' and 'AUTHENTICATE' (except with XOAUTH2) are still missing.

- 'COMPRESS' from rfc4978_

- 'SETACL' 'DELETEACL' 'GETACL' 'MYRIGHTS' 'LISTRIGHTS' from ACL rfc4314_

- 'GETQUOTA' 'GETQUOTAROOT' 'SETQUOTA' from quota rfc2087_

- 'SORT' and 'THREAD' from the rfc5256_

- 'ID' from the rfc2971_

- 'NAMESPACE' from rfc2342_

- 'CATENATE' from rfc4469_

- tests with other servers

Sometimes you break things and you don't understand what's going on (I always do). For this library I have two related tools:

.. role:: bash(code)
   :language: bash

- ngrep on the imap test port: :bash:`sudo ngrep -d lo port 12345`

- activate debug logs by changing INFO to DEBUG at the top of the mock server and of aioimaplib

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aioimaplib

Similar Open Source Tools

aioimaplib

aioimaplib is a Python library inspired by imaplib and imaplib2, aiming to port imaplib with asyncio for asynchronous benefits. It provides functionalities to interact with IMAP servers using asyncio, including checking mailbox, waiting for new messages, handling IDLE command, threading, IMAP command concurrency, logging configuration, and authentication with OAuth2. The library is tested with various IMAP servers like dovecot, Gmail, Outlook, Yahoo, etc. Developers are encouraged to contribute by improving, bug fixing, testing with other IMAP servers, and providing feedback. The library supports most IMAP4rev1 commands from RFC3501 and plans to implement additional commands like 'STARTTLS', 'AUTHENTICATE', 'COMPRESS', 'SETACL', 'DELETEACL', 'GETACL', 'MYRIGHTS', 'LISTRIGHTS', 'GETQUOTA', 'GETQUOTAROOT', 'SETQUOTA', 'SORT', 'THREAD', 'ID', 'NAMESPACE', 'CATENATE', and tests with other servers.

llm-baselines

LLM-baselines is a modular codebase to experiment with transformers, inspired from NanoGPT. It provides a quick and easy way to train and evaluate transformer models on a variety of datasets. The codebase is well-documented and easy to use, making it a great resource for researchers and practitioners alike.

axar

AXAR AI is a lightweight framework designed for building production-ready agentic applications using TypeScript. It aims to simplify the process of creating robust, production-grade LLM-powered apps by focusing on familiar coding practices without unnecessary abstractions or steep learning curves. The framework provides structured, typed inputs and outputs, familiar and intuitive patterns like dependency injection and decorators, explicit control over agent behavior, real-time logging and monitoring tools, minimalistic design with little overhead, model agnostic compatibility with various AI models, and streamed outputs for fast and accurate results. AXAR AI is ideal for developers working on real-world AI applications who want a tool that gets out of the way and allows them to focus on shipping reliable software.

KaibanJS

KaibanJS is a JavaScript-native framework for building multi-agent AI systems. It enables users to create specialized AI agents with distinct roles and goals, manage tasks, and coordinate teams efficiently. The framework supports role-based agent design, tool integration, multiple LLMs support, robust state management, observability and monitoring features, and a real-time agentic Kanban board for visualizing AI workflows. KaibanJS aims to empower JavaScript developers with a user-friendly AI framework tailored for the JavaScript ecosystem, bridging the gap in the AI race for non-Python developers.

Bard-API

The Bard API is a Python package that returns responses from Google Bard through the value of a cookie. It is an unofficial API that operates through reverse-engineering, utilizing cookie values to interact with Google Bard for users struggling with frequent authentication problems or unable to authenticate via Google Authentication. The Bard API is not a free service, but rather a tool provided to assist developers with testing certain functionalities due to the delayed development and release of Google Bard's API. It has been designed with a lightweight structure that can easily adapt to the emergence of an official API. Therefore, using it for any other purposes is strongly discouraged. If you have access to a reliable official PaLM-2 API or Google Generative AI API, replace the provided response with the corresponding official code. Check out https://github.com/dsdanielpark/Bard-API/issues/262.

CEO

CEO is an intuitive and modular AI agent framework designed for task automation. It provides a flexible environment for building agents with specific abilities and personalities, allowing users to assign tasks and interact with the agents to automate various processes. The framework supports multi-agent collaboration scenarios and offers functionalities like instantiating agents, granting abilities, assigning queries, and executing tasks. Users can customize agent personalities and define specific abilities using decorators, making it easy to create complex automation workflows.

aiosqlite

aiosqlite is a Python library that provides a friendly, async interface to SQLite databases. It replicates the standard sqlite3 module but with async versions of all the standard connection and cursor methods, along with context managers for automatically closing connections and cursors. It allows interaction with SQLite databases on the main AsyncIO event loop without blocking execution of other coroutines while waiting for queries or data fetches. The library also replicates most of the advanced features of sqlite3, such as row factories and total changes tracking.

wllama

Wllama is a WebAssembly binding for llama.cpp, a high-performance and lightweight language model library. It enables you to run inference directly on the browser without the need for a backend or GPU. Wllama provides both high-level and low-level APIs, allowing you to perform various tasks such as completions, embeddings, tokenization, and more. It also supports model splitting, enabling you to load large models in parallel for faster download. With its Typescript support and pre-built npm package, Wllama is easy to integrate into your React Typescript projects.

aiokafka

aiokafka is an asyncio client for Kafka that provides high-level, asynchronous message producer and consumer functionalities. It allows users to interact with Kafka for sending and consuming messages in an efficient and scalable manner. The tool supports features like cluster layout retrieval, topic/partition leadership information, group coordination, and message consumption load balancing. Users can easily integrate aiokafka into their Python projects to work with Kafka seamlessly.

azure-functions-openai-extension

Azure Functions OpenAI Extension is a project that adds support for OpenAI LLM (GPT-3.5-turbo, GPT-4) bindings in Azure Functions. It provides NuGet packages for various functionalities like text completions, chat completions, assistants, embeddings generators, and semantic search. The project requires .NET 6 SDK or greater, Azure Functions Core Tools v4.x, and specific settings in Azure Function or local settings for development. It offers features like text completions, chat completion, assistants with custom skills, embeddings generators for text relatedness, and semantic search using vector databases. The project also includes examples in C# and Python for different functionalities.

embodied-agents

Embodied Agents is a toolkit for integrating large multi-modal models into existing robot stacks with just a few lines of code. It provides consistency, reliability, scalability, and is configurable to any observation and action space. The toolkit is designed to reduce complexities involved in setting up inference endpoints, converting between different model formats, and collecting/storing datasets. It aims to facilitate data collection and sharing among roboticists by providing Python-first abstractions that are modular, extensible, and applicable to a wide range of tasks. The toolkit supports asynchronous and remote thread-safe agent execution for maximal responsiveness and scalability, and is compatible with various APIs like HuggingFace Spaces, Datasets, Gymnasium Spaces, Ollama, and OpenAI. It also offers automatic dataset recording and optional uploads to the HuggingFace hub.

chromem-go

chromem-go is an embeddable vector database for Go with a Chroma-like interface and zero third-party dependencies. It enables retrieval augmented generation (RAG) and similar embeddings-based features in Go apps without the need for a separate database. The focus is on simplicity and performance for common use cases, allowing querying of documents with minimal memory allocations. The project is in beta and may introduce breaking changes before v1.0.0.

neuron-ai

Neuron AI is a PHP framework that provides an Agent class for creating fully functional agents to perform tasks like analyzing text for SEO optimization. The framework manages advanced mechanisms such as memory, tools, and function calls. Users can extend the Agent class to create custom agents and interact with them to get responses based on the underlying LLM. Neuron AI aims to simplify the development of AI-powered applications by offering a structured framework with documentation and guidelines for contributions under the MIT license.

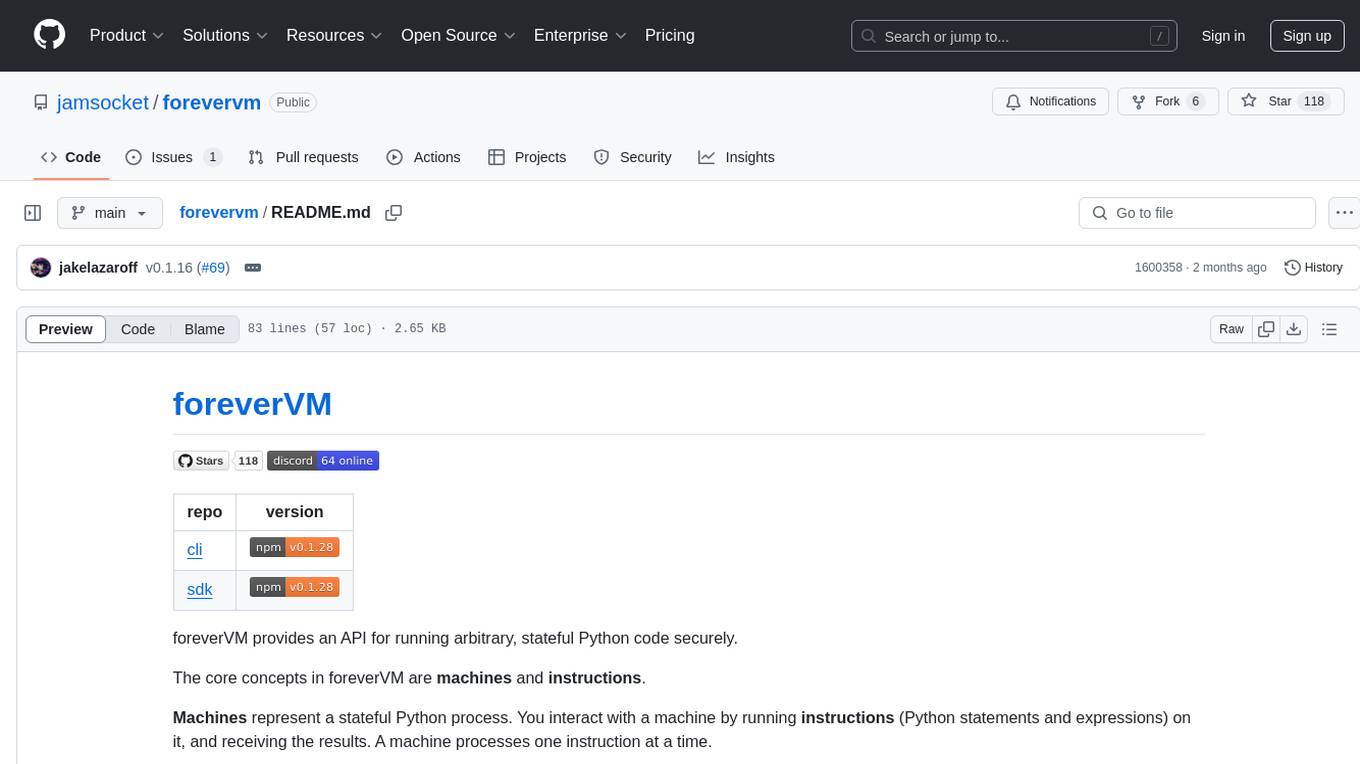

forevervm

foreverVM is a tool that provides an API for running arbitrary, stateful Python code securely. It revolves around the concepts of machines and instructions, where machines represent stateful Python processes and instructions are Python statements and expressions that can be executed on these machines. Users can interact with machines, run instructions, and receive results. The tool ensures that machines are managed efficiently by automatically swapping them from memory to disk when idle and back when needed, allowing for running REPLs 'forever'. Users can easily get started with foreverVM using the CLI and an API token, and can leverage the SDK for more advanced functionalities.

instructor-js

Instructor is a Typescript library for structured extraction in Typescript, powered by llms, designed for simplicity, transparency, and control. It stands out for its simplicity, transparency, and user-centric design. Whether you're a seasoned developer or just starting out, you'll find Instructor's approach intuitive and steerable.

Trace

Trace is a new AutoDiff-like tool for training AI systems end-to-end with general feedback. It generalizes the back-propagation algorithm by capturing and propagating an AI system's execution trace. Implemented as a PyTorch-like Python library, users can write Python code directly and use Trace primitives to optimize certain parts, similar to training neural networks.

For similar tasks

aioimaplib

aioimaplib is a Python library inspired by imaplib and imaplib2, aiming to port imaplib with asyncio for asynchronous benefits. It provides functionalities to interact with IMAP servers using asyncio, including checking mailbox, waiting for new messages, handling IDLE command, threading, IMAP command concurrency, logging configuration, and authentication with OAuth2. The library is tested with various IMAP servers like dovecot, Gmail, Outlook, Yahoo, etc. Developers are encouraged to contribute by improving, bug fixing, testing with other IMAP servers, and providing feedback. The library supports most IMAP4rev1 commands from RFC3501 and plans to implement additional commands like 'STARTTLS', 'AUTHENTICATE', 'COMPRESS', 'SETACL', 'DELETEACL', 'GETACL', 'MYRIGHTS', 'LISTRIGHTS', 'GETQUOTA', 'GETQUOTAROOT', 'SETQUOTA', 'SORT', 'THREAD', 'ID', 'NAMESPACE', 'CATENATE', and tests with other servers.

For similar jobs

resonance

Resonance is a framework designed to facilitate interoperability and messaging between services in your infrastructure and beyond. It provides AI capabilities and takes full advantage of asynchronous PHP, built on top of Swoole. With Resonance, you can: * Chat with Open-Source LLMs: Create prompt controllers to directly answer user's prompts. LLM takes care of determining user's intention, so you can focus on taking appropriate action. * Asynchronous Where it Matters: Respond asynchronously to incoming RPC or WebSocket messages (or both combined) with little overhead. You can set up all the asynchronous features using attributes. No elaborate configuration is needed. * Simple Things Remain Simple: Writing HTTP controllers is similar to how it's done in the synchronous code. Controllers have new exciting features that take advantage of the asynchronous environment. * Consistency is Key: You can keep the same approach to writing software no matter the size of your project. There are no growing central configuration files or service dependencies registries. Every relation between code modules is local to those modules. * Promises in PHP: Resonance provides a partial implementation of Promise/A+ spec to handle various asynchronous tasks. * GraphQL Out of the Box: You can build elaborate GraphQL schemas by using just the PHP attributes. Resonance takes care of reusing SQL queries and optimizing the resources' usage. All fields can be resolved asynchronously.

aiogram_bot_template

Aiogram bot template is a boilerplate for creating Telegram bots using Aiogram framework. It provides a solid foundation for building robust and scalable bots with a focus on code organization, database integration, and localization.

pluto

Pluto is a development tool dedicated to helping developers **build cloud and AI applications more conveniently** , resolving issues such as the challenging deployment of AI applications and open-source models. Developers are able to write applications in familiar programming languages like **Python and TypeScript** , **directly defining and utilizing the cloud resources necessary for the application within their code base** , such as AWS SageMaker, DynamoDB, and more. Pluto automatically deduces the infrastructure resource needs of the app through **static program analysis** and proceeds to create these resources on the specified cloud platform, **simplifying the resources creation and application deployment process**.

pinecone-ts-client

The official Node.js client for Pinecone, written in TypeScript. This client library provides a high-level interface for interacting with the Pinecone vector database service. With this client, you can create and manage indexes, upsert and query vector data, and perform other operations related to vector search and retrieval. The client is designed to be easy to use and provides a consistent and idiomatic experience for Node.js developers. It supports all the features and functionality of the Pinecone API, making it a comprehensive solution for building vector-powered applications in Node.js.

aiohttp-pydantic

Aiohttp pydantic is an aiohttp view to easily parse and validate requests. You define using function annotations what your methods for handling HTTP verbs expect, and Aiohttp pydantic parses the HTTP request for you, validates the data, and injects the parameters you want. It provides features like query string, request body, URL path, and HTTP headers validation, as well as Open API Specification generation.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

aioconsole

aioconsole is a Python package that provides asynchronous console and interfaces for asyncio. It offers asynchronous equivalents to input, print, exec, and code.interact, an interactive loop running the asynchronous Python console, customization and running of command line interfaces using argparse, stream support to serve interfaces instead of using standard streams, and the apython script to access asyncio code at runtime without modifying the sources. The package requires Python version 3.8 or higher and can be installed from PyPI or GitHub. It allows users to run Python files or modules with a modified asyncio policy, replacing the default event loop with an interactive loop. aioconsole is useful for scenarios where users need to interact with asyncio code in a console environment.

aiosqlite

aiosqlite is a Python library that provides a friendly, async interface to SQLite databases. It replicates the standard sqlite3 module but with async versions of all the standard connection and cursor methods, along with context managers for automatically closing connections and cursors. It allows interaction with SQLite databases on the main AsyncIO event loop without blocking execution of other coroutines while waiting for queries or data fetches. The library also replicates most of the advanced features of sqlite3, such as row factories and total changes tracking.