prelude

A very simple tool to build LLM prompts from your code repositories.

Stars: 130

Prelude is a simple tool for creating context prompts for LLMs with long context windows. It helps improve code distributed over multiple files by generating prompts with file tree and concatenated file contents. The prompt is copied to clipboard and can be saved to a file. It excludes files listed in .preludeignore and .gitignore files. The tool requires the `tree` command to be installed on the system for functionality.

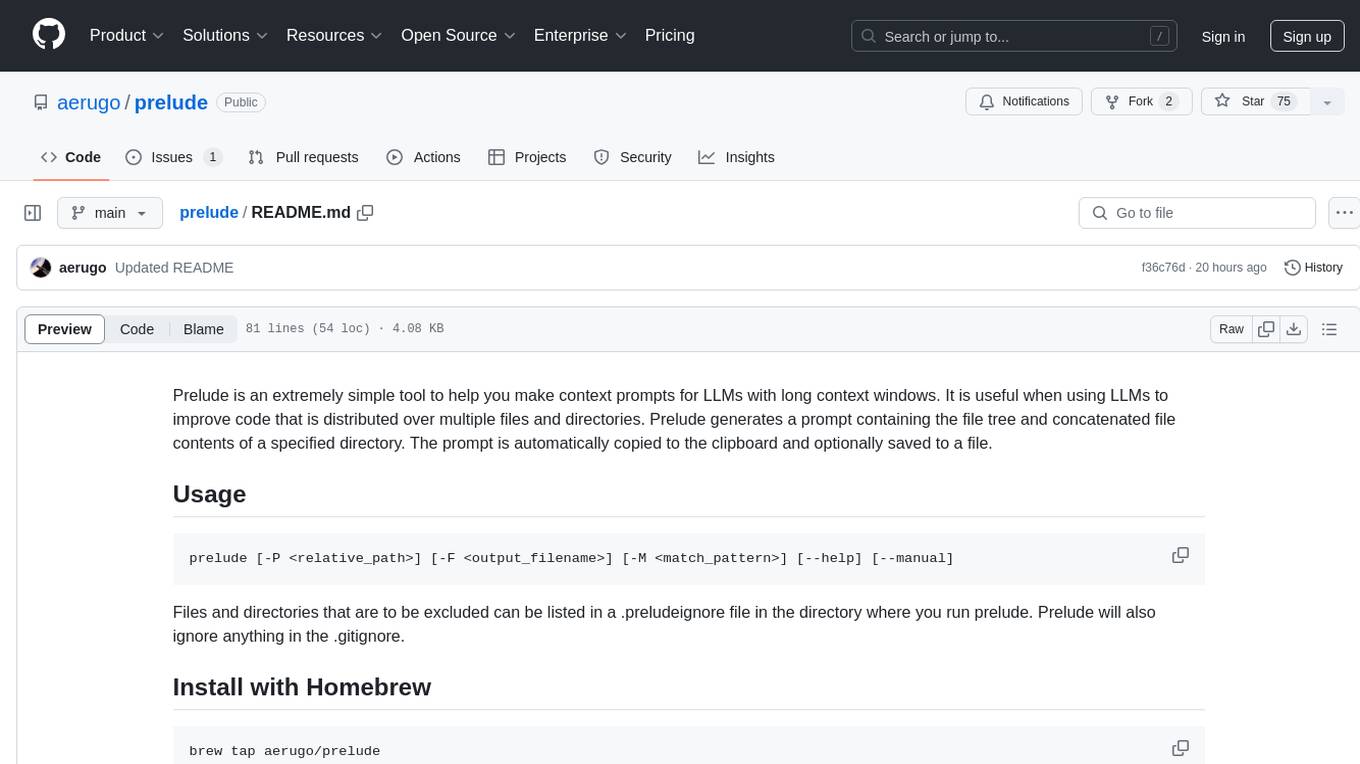

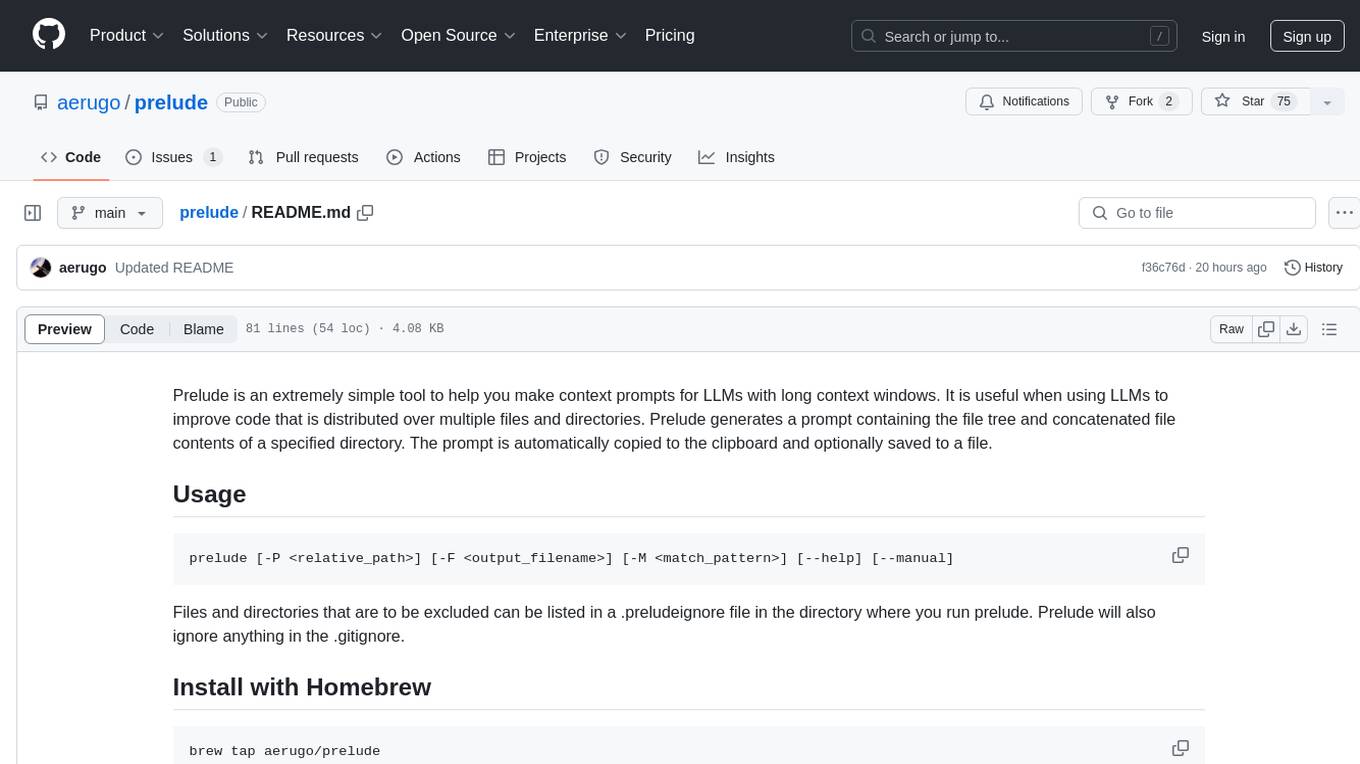

README:

Prelude is an extremely simple tool to help you make context prompts for LLMs with long context windows. It is useful when using LLMs to improve code that is distributed over multiple files and directories. Prelude generates a prompt containing the file tree and concatenated file contents of a specified directory. The prompt is automatically copied to the clipboard and optionally saved to a file. On systems without a clipboard, the prompt is printed to stdout and optionally saved to a file.

prelude [-P <relative_path>] [-F <output_filename>] [-M <match_pattern>] [-g] [--help] [--manual]Files and directories that are to be excluded can be listed in a .preludeignore file in the directory where you run prelude. Prelude will also ignore anything in the .gitignore.

$ prelude -M "*.md|*test*" -F prompt.txt

Got prompt with file tree and concatenated file contents.

Files included in the prompt are:

~/prelude

~/prelude/README.md

~/prelude/test_prelude.bats

1 directory, 2 files

The prompt has been copied to the clipboard.

The prompt has been saved to prompt.txt.I want you to help me fix some issues with my code. I have attached the code and file structure.

File Tree:

~/README.md

~/test_prelude.bats

1 directory, 2 files

Concatenated Files:

--- File: /Users/hugi/GitRepos/prelude/README.md ---

... <content of README.md> ...

--- File: /Users/hugi/GitRepos/prelude/test_prelude.bats ---

... <content of test_prelude.bats> ...

brew tap aerugo/prelude

brew install preludeThis script generates a prompt containing the file tree and concatenated file contents of a specified directory. The prompt can be copied to the clipboard and optionally saved to a file.

-

-P <relative_path>: Specify a relative path to include only files below that path. If not specified, the script will include all files in the current directory and its subdirectories. -

-F <output_filename>: Specify a filename to save the generated prompt. If not specified, the prompt will only be copied to the clipboard. -

-M <match_pattern>: Specify pattern(s) to match filenames and only include those files. Patterns are case-insensitive by default. Valid wildcard operators are '*' (any zero or more characters), '?' (any single character), '[...]' (any single character listed between brackets (optional - (dash) for character range may be used: ex: [A-Z]), and '[^...]' (any single character not listed in brackets) and '|' separates alternate patterns. -

-g: Only include files tracked by git. -

-c: Respect case sensitivity in pattern matching. -

--help: Display help information. -

--manual: Display the manual.

-

./prelude: Generate a prompt for all files in the current directory and copy it to the clipboard. -

./prelude -P src: Generate a prompt for all files below the specified path and copy it to the clipboard. -

./prelude -F prompt.txt: Generate a prompt for all files in the current directory and save it to a file. -

./prelude -P src -F prompt.txt: Generate a prompt for all files below the specified path and save it to a file. -

./prelude -M "*.txt|*.py": Generate a prompt for all .txt and .py files. -

./prelude -M "test*": Generate a prompt for all files starting with 'test'. -

./prelude -g: Generate a prompt for all git-tracked files in the current directory. -

./prelude -g -P src: Generate a prompt for all git-tracked files below the specified path. -

./prelude -g -M "*.js": Generate a prompt for all git-tracked .js files.

- The script checks for the presence of clipboard commands (pbcopy, xclip, xsel, clip, wl-copy) and uses the first one found to copy the prompt to the clipboard. If none are found and no output file is specified, the prompt is printed to stdout.

- The script reads

.gitignoreand.preludeignorefiles to exclude specified patterns from the file tree.

-

tree: Ensure that thetreecommand is installed and available in the system. -

git: Required when using the-goption to include only git-tracked files.

- If the specified path does not exist or is not a directory, an error message is displayed, and the script exits.

- If no clipboard command is found and no output file is specified, the prompt is printed to stdout.

- If no clipboard command is found and an output file is specified, the prompt is saved to the file without copying to the clipboard.

- The script copies the generated prompt to the clipboard and optionally saves it to a specified file.

- A message is printed to indicate the completion, listing the files included in the prompt.

Prelude comes with a comprehensive test suite using the Bats (Bash Automated Testing System) framework. The tests cover various scenarios including:

- Running the script without arguments

- Using different flags (-P, -F, -M, -g)

- Respecting

.gitignoreand.preludeignorefiles - Handling invalid inputs and edge cases

To run the tests, make sure you have Bats installed and then run:

bats test_prelude.batsThis will execute all the tests and provide a summary of the results, ensuring the reliability and correctness of the Prelude tool.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for prelude

Similar Open Source Tools

prelude

Prelude is a simple tool for creating context prompts for LLMs with long context windows. It helps improve code distributed over multiple files by generating prompts with file tree and concatenated file contents. The prompt is copied to clipboard and can be saved to a file. It excludes files listed in .preludeignore and .gitignore files. The tool requires the `tree` command to be installed on the system for functionality.

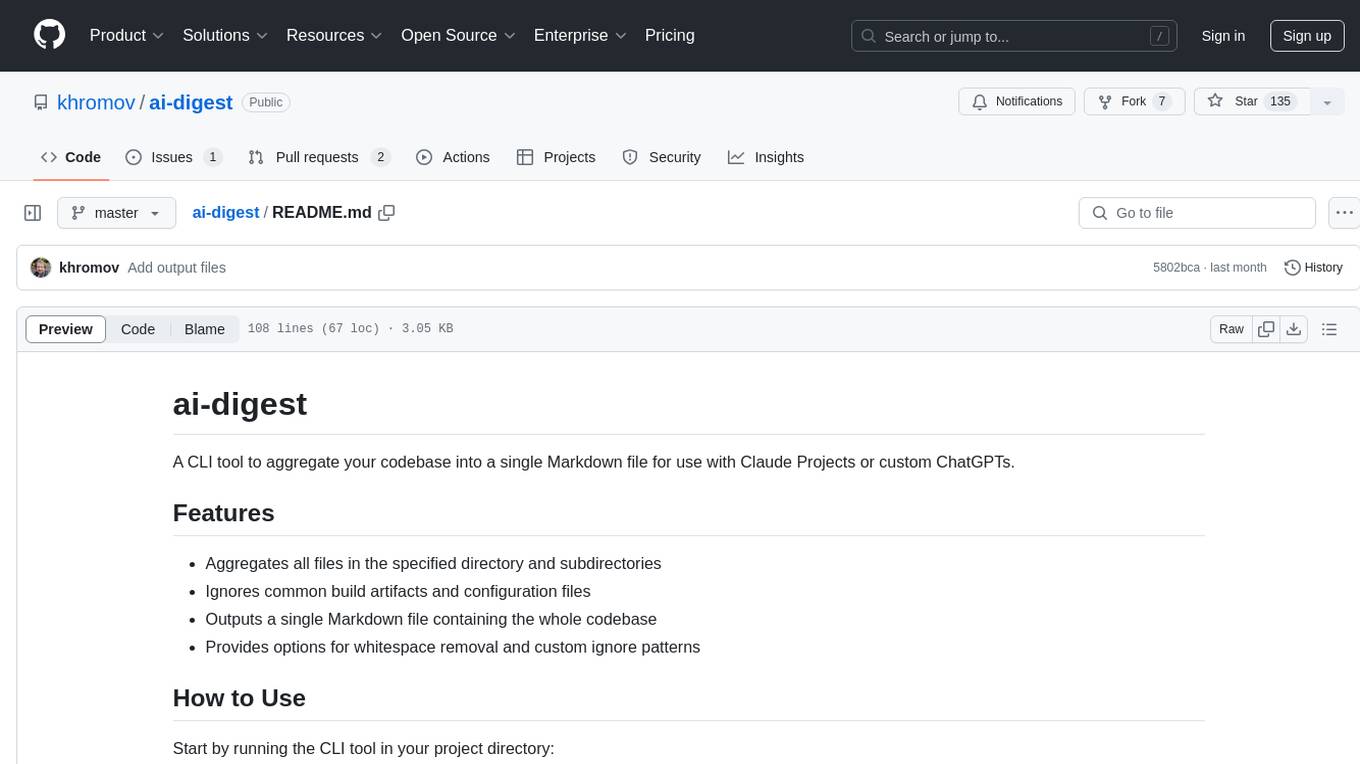

ai-digest

ai-digest is a CLI tool designed to aggregate your codebase into a single Markdown file for use with Claude Projects or custom ChatGPTs. It aggregates all files in the specified directory and subdirectories, ignores common build artifacts and configuration files, and provides options for whitespace removal and custom ignore patterns. The tool is useful for preparing codebases for AI analysis and assistance.

vulnerability-analysis

The NVIDIA AI Blueprint for Vulnerability Analysis for Container Security showcases accelerated analysis on common vulnerabilities and exposures (CVE) at an enterprise scale, reducing mitigation time from days to seconds. It enables security analysts to determine software package vulnerabilities using large language models (LLMs) and retrieval-augmented generation (RAG). The blueprint is designed for security analysts, IT engineers, and AI practitioners in cybersecurity. It requires NVAIE developer license and API keys for vulnerability databases, search engines, and LLM model services. Hardware requirements include L40 GPU for pipeline operation and optional LLM NIM and Embedding NIM. The workflow involves LLM pipeline for CVE impact analysis, utilizing LLM planner, agent, and summarization nodes. The blueprint uses NVIDIA NIM microservices and Morpheus Cybersecurity AI SDK for vulnerability analysis.

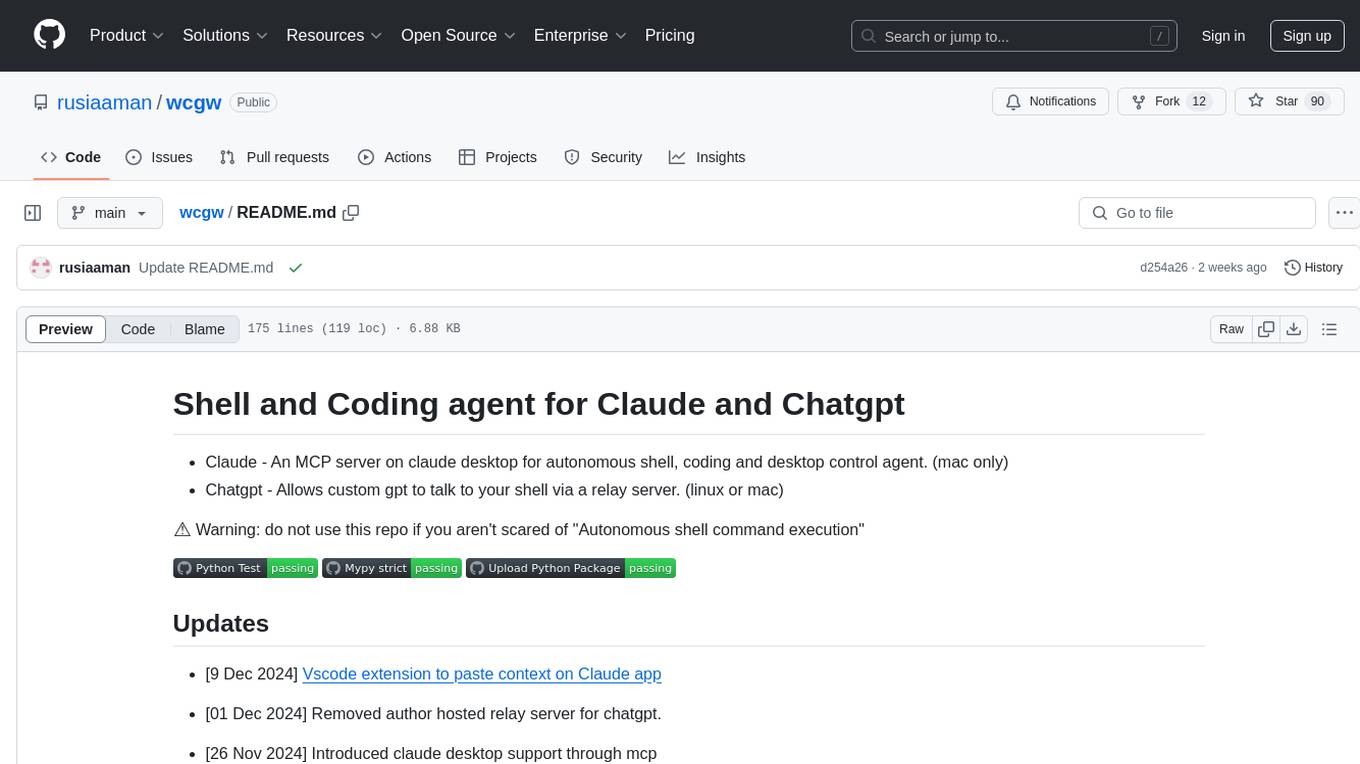

wcgw

wcgw is a shell and coding agent designed for Claude and Chatgpt. It provides full shell access with no restrictions, desktop control on Claude for screen capture and control, interactive command handling, large file editing, and REPL support. Users can use wcgw to create, execute, and iterate on tasks, such as solving problems with Python, finding code instances, setting up projects, creating web apps, editing large files, and running server commands. Additionally, wcgw supports computer use on Docker containers for desktop control. The tool can be extended with a VS Code extension for pasting context on Claude app and integrates with Chatgpt for custom GPT interactions.

debug-gym

debug-gym is a text-based interactive debugging framework designed for debugging Python programs. It provides an environment where agents can interact with code repositories, use various tools like pdb and grep to investigate and fix bugs, and propose code patches. The framework supports different LLM backends such as OpenAI, Azure OpenAI, and Anthropic. Users can customize tools, manage environment states, and run agents to debug code effectively. debug-gym is modular, extensible, and suitable for interactive debugging tasks in a text-based environment.

vectorflow

VectorFlow is an open source, high throughput, fault tolerant vector embedding pipeline. It provides a simple API endpoint for ingesting large volumes of raw data, processing, and storing or returning the vectors quickly and reliably. The tool supports text-based files like TXT, PDF, HTML, and DOCX, and can be run locally with Kubernetes in production. VectorFlow offers functionalities like embedding documents, running chunking schemas, custom chunking, and integrating with vector databases like Pinecone, Qdrant, and Weaviate. It enforces a standardized schema for uploading data to a vector store and supports features like raw embeddings webhook, chunk validation webhook, S3 endpoint, and telemetry. The tool can be used with the Python client and provides detailed instructions for running and testing the functionalities.

vscode-pddl

The vscode-pddl extension provides comprehensive support for Planning Domain Description Language (PDDL) in Visual Studio Code. It enables users to model planning domains, validate them, industrialize planning solutions, and run planners. The extension offers features like syntax highlighting, auto-completion, plan visualization, plan validation, plan happenings evaluation, search debugging, and integration with Planning.Domains. Users can create PDDL files, run planners, visualize plans, and debug search algorithms efficiently within VS Code.

latex2ai

LaTeX2AI is a plugin for Adobe Illustrator that allows users to use editable text labels typeset in LaTeX inside an Illustrator document. It provides a seamless integration of LaTeX functionality within the Illustrator environment, enabling users to create and edit LaTeX labels, manage item scaling behavior, set global options, and save documents as PDF with included LaTeX labels. The tool simplifies the process of including LaTeX-generated content in Illustrator designs, ensuring accurate scaling and alignment with other elements in the document.

SirChatalot

A Telegram bot that proves you don't need a body to have a personality. It can use various text and image generation APIs to generate responses to user messages. For text generation, the bot can use: * OpenAI's ChatGPT API (or other compatible API). Vision capabilities can be used with GPT-4 models. Function calling can be used with Function calling. * Anthropic's Claude API. Vision capabilities can be used with Claude 3 models. Function calling can be used with tool use. * YandexGPT API Bot can also generate images with: * OpenAI's DALL-E * Stability AI * Yandex ART This bot can also be used to generate responses to voice messages. Bot will convert the voice message to text and will then generate a response. Speech recognition can be done using the OpenAI's Whisper model. To use this feature, you need to install the ffmpeg library. This bot is also support working with files, see Files section for more details. If function calling is enabled, bot can generate images and search the web (limited).

ain

Ain is a terminal HTTP API client designed for scripting input and processing output via pipes. It allows flexible organization of APIs using files and folders, supports shell-scripts and executables for common tasks, handles url-encoding, and enables sharing the resulting curl, wget, or httpie command-line. Users can put things that change in environment variables or .env-files, and pipe the API output for further processing. Ain targets users who work with many APIs using a simple file format and uses curl, wget, or httpie to make the actual calls.

ComfyUI-mnemic-nodes

ComfyUI-mnemic-nodes is a repository hosting a collection of nodes developed for ComfyUI, providing useful components to enhance project functionality. The nodes include features like returning file paths, saving text files, downloading images from URLs, tokenizing text, cleaning strings, querying Groq language models, generating negative prompts, and more. Some nodes are experimental and marked with a 'Caution' label. Installation instructions and setup details are provided for each node, along with examples and presets for different tasks.

aiac

AIAC is a library and command line tool to generate Infrastructure as Code (IaC) templates, configurations, utilities, queries, and more via LLM providers such as OpenAI, Amazon Bedrock, and Ollama. Users can define multiple 'backends' targeting different LLM providers and environments using a simple configuration file. The tool allows users to ask a model to generate templates for different scenarios and composes an appropriate request to the selected provider, storing the resulting code to a file and/or printing it to standard output.

vectara-answer

Vectara Answer is a sample app for Vectara-powered Summarized Semantic Search (or question-answering) with advanced configuration options. For examples of what you can build with Vectara Answer, check out Ask News, LegalAid, or any of the other demo applications.

nextjs-openai-doc-search

This starter project is designed to process `.mdx` files in the `pages` directory to use as custom context within OpenAI Text Completion prompts. It involves building a custom ChatGPT style doc search powered by Next.js, OpenAI, and Supabase. The project includes steps for pre-processing knowledge base, storing embeddings in Postgres, performing vector similarity search, and injecting content into OpenAI GPT-3 text completion prompt.

CJA_Comprehensive_Jailbreak_Assessment

This public repository contains the paper 'Comprehensive Assessment of Jailbreak Attacks Against LLMs'. It provides a labeling method to label results using Python and offers the opportunity to submit evaluation results to the leaderboard. Full codes will be released after the paper is accepted.

illume

Illume is a scriptable command line program designed for interfacing with an OpenAI-compatible LLM API. It acts as a unix filter, sending standard input to the LLM and streaming its response to standard output. Users can interact with the LLM through text editors like Vim or Emacs, enabling seamless communication with the AI model for various tasks.

For similar tasks

prelude

Prelude is a simple tool for creating context prompts for LLMs with long context windows. It helps improve code distributed over multiple files by generating prompts with file tree and concatenated file contents. The prompt is copied to clipboard and can be saved to a file. It excludes files listed in .preludeignore and .gitignore files. The tool requires the `tree` command to be installed on the system for functionality.

FileKitty

FileKitty is a simple file selection and concatenation tool that allows users to select files from a directory, concatenate them into a single file, save the concatenated file, and copy files to the clipboard. It is useful for concatenating files for use in a single file format and pasting file contents into an LLM to provide context to a prompt. The tool is built using Poetry to manage dependencies and build the app.

ComfyUI-IF_AI_tools

ComfyUI-IF_AI_tools is a set of custom nodes for ComfyUI that allows you to generate prompts using a local Large Language Model (LLM) via Ollama. This tool enables you to enhance your image generation workflow by leveraging the power of language models.

Awesome-AI-GPTs

Awesome AI GPTs is an open repository that collects resources and fun ways to use OpenAI GPTs. It includes databases, search tools, open-source projects, articles, attack and defense strategies, installation of custom plugins, knowledge bases, and community interactions related to GPTs. Users can find curated lists, leaked prompts, and various GPT applications in this repository. The project aims to empower users with AI capabilities and foster collaboration in the AI community.

kor

Kor is a prototype tool designed to help users extract structured data from text using Language Models (LLMs). It generates prompts, sends them to specified LLMs, and parses the output. The tool works with the parsing approach and is integrated with the LangChain framework. Kor is compatible with pydantic v2 and v1, and schema is typed checked using pydantic. It is primarily used for extracting information from text based on provided reference examples and schema documentation. Kor is designed to work with all good-enough LLMs regardless of their support for function/tool calling or JSON modes.

Awesome-LLM-Survey

This repository, Awesome-LLM-Survey, serves as a comprehensive collection of surveys related to Large Language Models (LLM). It covers various aspects of LLM, including instruction tuning, human alignment, LLM agents, hallucination, multi-modal capabilities, and more. Researchers are encouraged to contribute by updating information on their papers to benefit the LLM survey community.

awesome-gpt-prompt-engineering

Awesome GPT Prompt Engineering is a curated list of resources, tools, and shiny things for GPT prompt engineering. It includes roadmaps, guides, techniques, prompt collections, papers, books, communities, prompt generators, Auto-GPT related tools, prompt injection information, ChatGPT plug-ins, prompt engineering job offers, and AI links directories. The repository aims to provide a comprehensive guide for prompt engineering enthusiasts, covering various aspects of working with GPT models and improving communication with AI tools.

ComfyUI_VLM_nodes

ComfyUI_VLM_nodes is a repository containing various nodes for utilizing Vision Language Models (VLMs) and Language Models (LLMs). The repository provides nodes for tasks such as structured output generation, image to music conversion, LLM prompt generation, automatic prompt generation, and more. Users can integrate different models like InternLM-XComposer2-VL, UForm-Gen2, Kosmos-2, moondream1, moondream2, JoyTag, and Chat Musician. The nodes support features like extracting keywords, generating prompts, suggesting prompts, and obtaining structured outputs. The repository includes examples and instructions for using the nodes effectively.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.