runanywhere-sdks

Production-ready toolkit to run AI locally

Stars: 6616

RunAnywhere is an on-device AI tool for mobile apps that allows users to run LLMs, speech-to-text, text-to-speech, and voice assistant features locally, ensuring privacy, offline functionality, and fast performance. The tool provides a range of AI capabilities without relying on cloud services, reducing latency and ensuring that no data leaves the device. RunAnywhere offers SDKs for Swift (iOS/macOS), Kotlin (Android), React Native, and Flutter, making it easy for developers to integrate AI features into their mobile applications. The tool supports various models for LLM, speech-to-text, and text-to-speech, with detailed documentation and installation instructions available for each platform.

README:

On-device AI for mobile apps.

Run LLMs, speech-to-text, and text-to-speech locally—private, offline, fast.

Llama 3.2 3B on iPhone 16 Pro Max

Tool calling + LLM reasoning — 100% on-device

View the code · Full tool calling support coming soon

RunAnywhere lets you add AI features to your mobile app that run entirely on-device:

- LLM Chat — Llama, Mistral, Qwen, SmolLM, and more

- Speech-to-Text — Whisper-powered transcription

- Text-to-Speech — Neural voice synthesis

- Voice Assistant — Full STT → LLM → TTS pipeline

No cloud. No network latency. No data leaves the device.
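The Voice Assistant item in the list above describes a three-stage chain (STT → LLM → TTS). A minimal TypeScript sketch of that data flow, with stub stages standing in for real models, might look like the following. The names `VoiceStages`, `runVoiceTurn`, and `stubStages` are hypothetical and are not part of the RunAnywhere API; the SDK's own voice pipeline may be structured differently.

```typescript
// Hypothetical stage signatures for illustration; NOT the RunAnywhere SDK API.
type Transcribe = (audio: Float32Array) => Promise<string>;
type Generate = (prompt: string) => Promise<string>;
type Synthesize = (text: string) => Promise<Float32Array>;

interface VoiceStages {
  stt: Transcribe; // speech-to-text
  llm: Generate;   // text generation
  tts: Synthesize; // text-to-speech
}

interface VoiceTurn {
  transcript: string;
  reply: string;
  audio: Float32Array;
}

// Runs one conversational turn: audio in, audio out.
// Each stage consumes the previous stage's output.
async function runVoiceTurn(stages: VoiceStages, input: Float32Array): Promise<VoiceTurn> {
  const transcript = await stages.stt(input);
  const reply = await stages.llm(transcript);
  const audio = await stages.tts(reply);
  return { transcript, reply, audio };
}

// Stub stages so the sketch runs without any model files.
const stubStages: VoiceStages = {
  stt: async (audio) => `heard ${audio.length} samples`,
  llm: async (prompt) => `echo: ${prompt}`,
  tts: async (text) => new Float32Array(text.length),
};
```

Because the stages are plain functions, each one can be swapped or tested in isolation, which is one reasonable way to structure such a chain.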

| Platform | Status | Installation | Documentation |
|---|---|---|---|
| Swift (iOS/macOS) | Stable | Swift Package Manager | docs.runanywhere.ai/swift |
| Kotlin (Android) | Stable | Gradle | docs.runanywhere.ai/kotlin |
| React Native | Beta | npm | docs.runanywhere.ai/react-native |
| Flutter | Beta | pub.dev | docs.runanywhere.ai/flutter |

import RunAnywhere

import LlamaCPPRuntime

// 1. Initialize

LlamaCPP.register()

try RunAnywhere.initialize()

// 2. Load a model

try await RunAnywhere.downloadModel("smollm2-360m")

try await RunAnywhere.loadModel("smollm2-360m")

// 3. Generate

let response = try await RunAnywhere.chat("What is the capital of France?")

print(response) // "Paris is the capital of France."

Install via Swift Package Manager:

https://github.com/RunanywhereAI/runanywhere-sdks

Full documentation → · Source code

import com.runanywhere.sdk.public.RunAnywhere

import com.runanywhere.sdk.public.extensions.*

// 1. Initialize

LlamaCPP.register()

RunAnywhere.initialize(environment = SDKEnvironment.DEVELOPMENT)

// 2. Load a model

RunAnywhere.downloadModel("smollm2-360m").collect { println("${it.progress * 100}%") }

RunAnywhere.loadLLMModel("smollm2-360m")

// 3. Generate

val response = RunAnywhere.chat("What is the capital of France?")

println(response) // "Paris is the capital of France."

Install via Gradle:

dependencies {
    implementation("com.runanywhere.sdk:runanywhere-kotlin:0.1.4")
    implementation("com.runanywhere.sdk:runanywhere-core-llamacpp:0.1.4")
}

Full documentation → · Source code

import { RunAnywhere, SDKEnvironment } from '@runanywhere/core';

import { LlamaCPP } from '@runanywhere/llamacpp';

// 1. Initialize

await RunAnywhere.initialize({ environment: SDKEnvironment.Development });

LlamaCPP.register();

// 2. Load a model

await RunAnywhere.downloadModel('smollm2-360m');

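// Note: modelPath is not defined in this snippet; it presumably refers to the local path of the downloaded model.
await RunAnywhere.loadModel(modelPath);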

// 3. Generate

const response = await RunAnywhere.chat('What is the capital of France?');

console.log(response); // "Paris is the capital of France."

Install via npm:

npm install @runanywhere/core @runanywhere/llamacpp

Full documentation → · Source code

import 'package:runanywhere/runanywhere.dart';

import 'package:runanywhere_llamacpp/runanywhere_llamacpp.dart';

// 1. Initialize

await RunAnywhere.initialize();

await LlamaCpp.register();

// 2. Load a model

await RunAnywhere.downloadModel('smollm2-360m');

await RunAnywhere.loadModel('smollm2-360m');

// 3. Generate

final response = await RunAnywhere.chat('What is the capital of France?');

print(response); // "Paris is the capital of France."

Install via pub.dev:

dependencies:
  runanywhere: ^0.15.11
  runanywhere_llamacpp: ^0.15.11

Full documentation → · Source code

Full-featured demo applications demonstrating SDK capabilities:

| Platform | Source Code | Download |
|---|---|---|
| iOS | examples/ios/RunAnywhereAI | App Store |
| Android | examples/android/RunAnywhereAI | Google Play |
| React Native | examples/react-native/RunAnywhereAI | Build from source |
| Flutter | examples/flutter/RunAnywhereAI | Build from source |

Standalone demo projects showcasing what you can build with RunAnywhere:

| Project | Description | Platform |
|---|---|---|
| swift-starter-app | Privacy-first AI demo — LLM Chat, STT, TTS, and Voice Pipeline | iOS (Swift/SwiftUI) |
| on-device-browser-agent | On-device AI browser automation — no cloud, no API keys | Chrome Extension |

| Feature | iOS | Android | React Native | Flutter |
|---|---|---|---|---|
| LLM Text Generation | ✅ | ✅ | ✅ | ✅ |
| Streaming | ✅ | ✅ | ✅ | ✅ |
| Speech-to-Text | ✅ | ✅ | ✅ | ✅ |
| Text-to-Speech | ✅ | ✅ | ✅ | ✅ |
| Voice Assistant Pipeline | ✅ | ✅ | ✅ | ✅ |
| Model Download + Progress | ✅ | ✅ | ✅ | ✅ |
| Structured Output (JSON) | ✅ | ✅ | 🔜 | 🔜 |
| Apple Foundation Models | ✅ | — | — | — |

| LLM Model | Size | RAM Required | Use Case |
|---|---|---|---|
| SmolLM2 360M | ~400MB | 500MB | Fast, lightweight |
| Qwen 2.5 0.5B | ~500MB | 600MB | Multilingual |
| Llama 3.2 1B | ~1GB | 1.2GB | Balanced |
| Mistral 7B Q4 | ~4GB | 5GB | High quality |
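The "RAM Required" column in the LLM table can drive a simple model choice at runtime. The sketch below is one hedged way to do that in TypeScript: `pickModel`, `LlmModel`, and the 0.8 headroom factor are illustrative assumptions, not SDK features, and GB values are converted at 1000 MB per GB for simplicity.

```typescript
interface LlmModel {
  name: string;
  ramRequiredMB: number; // "RAM Required" column, in MB
}

// Values copied from the LLM table (1.2GB and 5GB written as 1200 and 5000 MB).
const MODELS: LlmModel[] = [
  { name: "SmolLM2 360M", ramRequiredMB: 500 },
  { name: "Qwen 2.5 0.5B", ramRequiredMB: 600 },
  { name: "Llama 3.2 1B", ramRequiredMB: 1200 },
  { name: "Mistral 7B Q4", ramRequiredMB: 5000 },
];

// Returns the most demanding model that still fits in the RAM budget,
// or undefined if none fits. The headroom factor (an assumption) leaves
// part of the free RAM for the rest of the app.
function pickModel(freeRamMB: number, headroom = 0.8): LlmModel | undefined {
  const budget = freeRamMB * headroom;
  const fitting = MODELS.filter((m) => m.ramRequiredMB <= budget);
  // filter() returned a fresh array, so sorting it does not reorder MODELS.
  return fitting.sort((a, b) => b.ramRequiredMB - a.ramRequiredMB)[0];
}

console.log(pickModel(2000)?.name); // "Llama 3.2 1B"
```

How free RAM is measured is platform-specific and is left out of this sketch.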

| Speech-to-Text Model | Size | Languages |
|---|---|---|
| Whisper Tiny | ~75MB | English |
| Whisper Base | ~150MB | Multilingual |

| Text-to-Speech Voice | Size | Language |
|---|---|---|
| Piper US English | ~65MB | English (US) |
| Piper British English | ~65MB | English (UK) |
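A full voice assistant needs one LLM, one speech-to-text model, and one voice, so the download footprint is the sum of three sizes from the tables in this section. The following TypeScript sketch adds them up. The helper name `totalDownloadMB` is hypothetical, and the sizes are the approximate ("~") figures listed in the tables, so the result is an estimate only.

```typescript
// Approximate download sizes in MB, taken from the LLM, speech-to-text,
// and text-to-speech tables (1GB and 4GB written as 1000 and 4000 MB).
const SIZES_MB: Record<string, number> = {
  "SmolLM2 360M": 400,
  "Qwen 2.5 0.5B": 500,
  "Llama 3.2 1B": 1000,
  "Mistral 7B Q4": 4000,
  "Whisper Tiny": 75,
  "Whisper Base": 150,
  "Piper US English": 65,
  "Piper British English": 65,
};

// Sums the listed sizes; throws on a name that is not in the tables.
function totalDownloadMB(models: string[]): number {
  return models.reduce((sum, name) => {
    const size = SIZES_MB[name];
    if (size === undefined) throw new Error(`Unknown model: ${name}`);
    return sum + size;
  }, 0);
}

// Smallest listed combination: 400 + 75 + 65.
const smallestStack = totalDownloadMB(["SmolLM2 360M", "Whisper Tiny", "Piper US English"]);
console.log(`${smallestStack} MB`); // 540 MB
```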

runanywhere-sdks/

├── sdk/

│ ├── runanywhere-swift/ # iOS/macOS SDK

│ ├── runanywhere-kotlin/ # Android SDK

│ ├── runanywhere-react-native/ # React Native SDK

│ ├── runanywhere-flutter/ # Flutter SDK

│ └── runanywhere-commons/ # Shared C++ core

│

├── examples/

│ ├── ios/RunAnywhereAI/ # iOS sample app

│ ├── android/RunAnywhereAI/ # Android sample app

│ ├── react-native/RunAnywhereAI/ # React Native sample app

│ └── flutter/RunAnywhereAI/ # Flutter sample app

│

├── Playground/

│ ├── swift-starter-app/ # iOS AI playground app

│ └── on-device-browser-agent/ # Chrome browser automation agent

│

└── docs/ # Documentation

| Platform | Minimum | Recommended |
|---|---|---|
| iOS | 17.0+ | 17.0+ |
| macOS | 14.0+ | 14.0+ |
| Android | API 24 (7.0) | API 28+ |
| React Native | 0.74+ | 0.76+ |
| Flutter | 3.10+ | 3.24+ |

Memory: 2GB minimum, 4GB+ recommended for larger models
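The requirements table can be checked programmatically, for example in a build script. The TypeScript sketch below classifies a version as unsupported, minimum, or recommended. `supportTier` and `compareVersions` are hypothetical helpers written for this illustration, and Android is represented by its API level rather than its OS version.

```typescript
type Tier = "unsupported" | "minimum" | "recommended";

// Minimum and recommended versions from the requirements table.
// Android entries are API levels.
const REQUIREMENTS: Record<string, { min: string; rec: string }> = {
  iOS: { min: "17.0", rec: "17.0" },
  macOS: { min: "14.0", rec: "14.0" },
  Android: { min: "24", rec: "28" },
  "React Native": { min: "0.74", rec: "0.76" },
  Flutter: { min: "3.10", rec: "3.24" },
};

// Compares dotted versions component by component as numbers,
// so "3.10" is newer than "3.9" (a plain string comparison would get this wrong).
function compareVersions(a: string, b: string): number {
  const pa = a.split(".").map(Number);
  const pb = b.split(".").map(Number);
  for (let i = 0; i < Math.max(pa.length, pb.length); i++) {
    const diff = (pa[i] ?? 0) - (pb[i] ?? 0);
    if (diff !== 0) return diff;
  }
  return 0;
}

function supportTier(platform: string, version: string): Tier {
  const req = REQUIREMENTS[platform];
  if (!req) throw new Error(`Unknown platform: ${platform}`);
  if (compareVersions(version, req.min) < 0) return "unsupported";
  return compareVersions(version, req.rec) >= 0 ? "recommended" : "minimum";
}

console.log(supportTier("Flutter", "3.16")); // "minimum"
```

The numeric comparison matters for Flutter in particular, where the minimum is 3.10 and a release such as 3.9 must be rejected.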

We welcome contributions. See our Contributing Guide for details.

# Clone the repo

git clone https://github.com/RunanywhereAI/runanywhere-sdks.git

# Set up a specific SDK (example: Swift)

cd runanywhere-sdks/sdk/runanywhere-swift

./scripts/build-swift.sh --setup

# Run the sample app

cd ../../examples/ios/RunAnywhereAI

open RunAnywhereAI.xcodeproj

- Discord: Join our community

- GitHub Issues: Report bugs or request features

- Email: [email protected]

- Twitter: @RunanywhereAI

Apache 2.0 — see LICENSE for details.

Alternative AI tools for runanywhere-sdks

Similar Open Source Tools

sf-skills

sf-skills is a collection of reusable skills for Agentic Salesforce Development, enabling AI-powered code generation, validation, testing, debugging, and deployment. It includes skills for development, quality, foundation, integration, AI & automation, DevOps & tooling. The installation process is newbie-friendly and includes an installer script for various CLIs. The skills are compatible with platforms like Claude Code, OpenCode, Codex, Gemini, Amp, Droid, Cursor, and Agentforce Vibes. The repository is community-driven and aims to strengthen the Salesforce ecosystem.

Open-dLLM

Open-dLLM is the most open release of a diffusion-based large language model, providing pretraining, evaluation, inference, and checkpoints. It introduces Open-dCoder, the code-generation variant of Open-dLLM. The repo offers a complete stack for diffusion LLMs, enabling users to go from raw data to training, checkpoints, evaluation, and inference in one place. It includes pretraining pipeline with open datasets, inference scripts for easy sampling and generation, evaluation suite with various metrics, weights and checkpoints on Hugging Face, and transparent configs for full reproducibility.

agentica

Agentica is a human-centric framework for building large language model agents. It provides functionalities for planning, memory management, tool usage, and supports features like reflection, planning and execution, RAG, multi-agent, multi-role, and workflow. The tool allows users to quickly code and orchestrate agents, customize prompts, and make API calls to various services. It supports API calls to OpenAI, Azure, Deepseek, Moonshot, Claude, Ollama, and Together. Agentica aims to simplify the process of building AI agents by providing a user-friendly interface and a range of functionalities for agent development.

gpupixel

GPUPixel is a real-time, high-performance image and video filter library written in C++11 and based on OpenGL/ES. It incorporates a built-in beauty face filter that achieves commercial-grade beauty effects. The library is extremely easy to compile and integrate with a small size, supporting platforms including iOS, Android, Mac, Windows, and Linux. GPUPixel provides various filters like skin smoothing, whitening, face slimming, big eyes, lipstick, and blush. It supports input formats like YUV420P, RGBA, JPEG, PNG, and output formats like RGBA and YUV420P. The library's performance on devices like iPhone and Android is optimized, with low CPU usage and fast processing times. GPUPixel's lib size is compact, making it suitable for mobile and desktop applications.

cactus

Cactus is an energy-efficient and fast AI inference framework designed for phones, wearables, and resource-constrained arm-based devices. It provides a bottom-up approach with no dependencies, optimizing for budget and mid-range phones. The framework includes Cactus FFI for integration, Cactus Engine for high-level transformer inference, Cactus Graph for unified computation graph, and Cactus Kernels for low-level ARM-specific operations. It is suitable for implementing custom models and scientific computing on mobile devices.

MOSS-TTS

MOSS-TTS Family is an open-source speech and sound generation model family designed for high-fidelity, high-expressiveness, and complex real-world scenarios. It includes five production-ready models: MOSS-TTS, MOSS-TTSD, MOSS-VoiceGenerator, MOSS-TTS-Realtime, and MOSS-SoundEffect, each serving specific purposes in speech generation, dialogue, voice design, real-time interactions, and sound effect generation. The models offer features like long-speech generation, fine-grained control over phonemes and duration, multilingual synthesis, voice cloning, and real-time voice agents.

FaceAISDK_Android

FaceAI SDK is an on-device offline face detection, recognition, liveness detection, anti-spoofing, and 1:N/M:N face search SDK. It enables quick integration to achieve on-device face recognition, face search, and other functions. The SDK performs all functions offline on the device without the need for internet connection, ensuring privacy and security. It supports various actions for liveness detection, custom camera management, and clear imaging even in challenging lighting conditions.

new-api

New API is a next-generation large model gateway and AI asset management system that provides a wide range of features, including a new UI interface, multi-language support, online recharge function, key query for usage quota, compatibility with the original One API database, model charging by usage count, channel weighted randomization, data dashboard, token grouping and model restrictions, support for various authorization login methods, support for Rerank models, OpenAI Realtime API, Claude Messages format, reasoning effort setting, content reasoning, user-specific model rate limiting, request format conversion, cache billing support, and various model support such as gpts, Midjourney-Proxy, Suno API, custom channels, Rerank models, Claude Messages format, Dify, and more.

EVE

EVE is an official PyTorch implementation of Unveiling Encoder-Free Vision-Language Models. The project aims to explore the removal of vision encoders from Vision-Language Models (VLMs) and transfer LLMs to encoder-free VLMs efficiently. It also focuses on bridging the performance gap between encoder-free and encoder-based VLMs. EVE offers a superior capability with arbitrary image aspect ratio, data efficiency by utilizing publicly available data for pre-training, and training efficiency with a transparent and practical strategy for developing a pure decoder-only architecture across modalities.

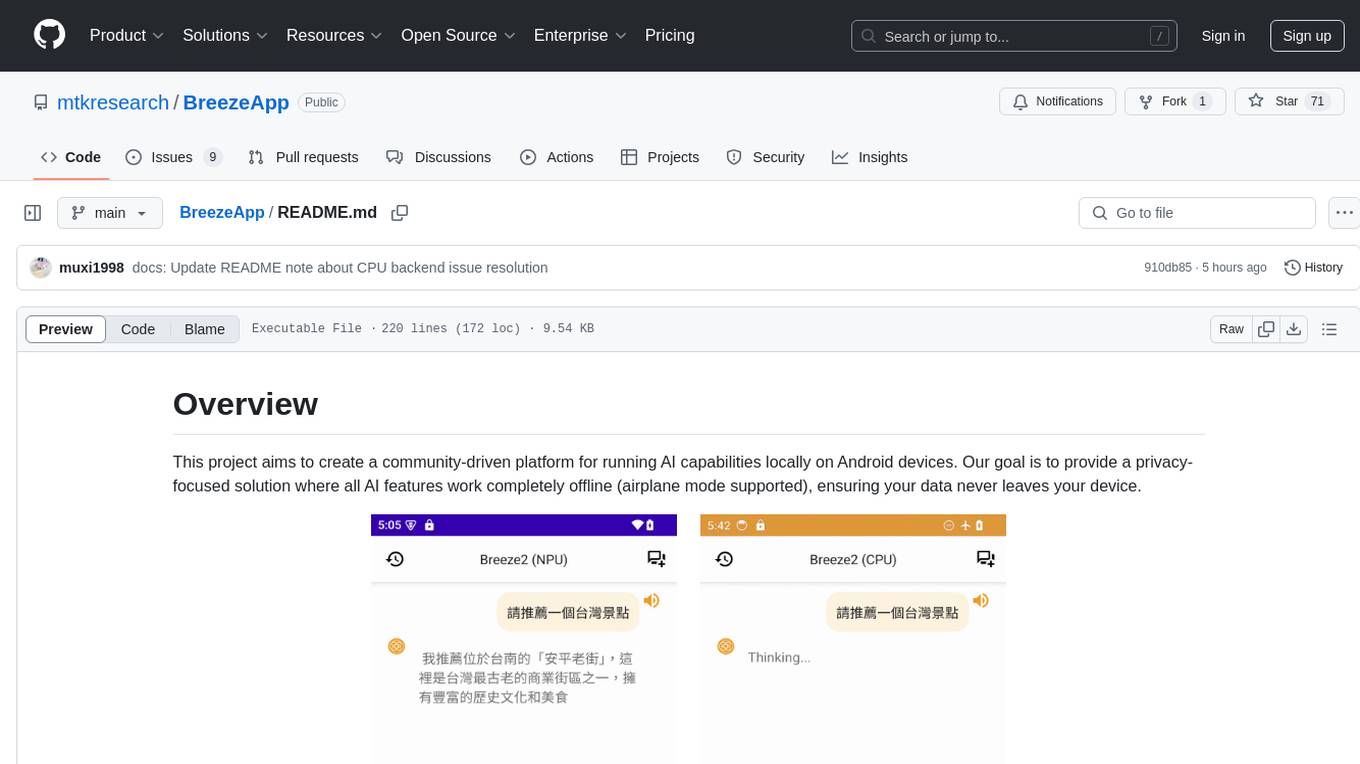

BreezeApp

BreezeApp is a community-driven platform for running AI capabilities locally on Android devices. It offers a privacy-focused solution where all AI features work offline, showcasing text-based chat interface, voice input/output support, and image understanding capabilities. The app supports multiple backends for different components and aims to make powerful AI models accessible to users. Users can contribute to the project by reporting issues, suggesting features, submitting pull requests, and sharing feedback. The architecture follows a service-based approach with service implementations for each AI capability. BreezeApp is a research project that may require specific hardware support or proprietary components, providing open-source alternatives where possible.

Native-LLM-for-Android

This repository provides a demonstration of running a native Large Language Model (LLM) on Android devices. It supports various models such as Qwen2.5-Instruct, MiniCPM-DPO/SFT, Yuan2.0, Gemma2-it, StableLM2-Chat/Zephyr, and Phi3.5-mini-instruct. The demo models are optimized for extreme execution speed after being converted from HuggingFace or ModelScope. Users can download the demo models from the provided drive link, place them in the assets folder, and follow specific instructions for decompression and model export. The repository also includes information on quantization methods and performance benchmarks for different models on various devices.

DownEdit

DownEdit is a powerful program that allows you to download videos from various social media platforms such as TikTok, Douyin, Kuaishou, and more. With DownEdit, you can easily download videos from user profiles and edit them in bulk. You have the option to flip the videos horizontally or vertically throughout the entire directory with just a single click. Stay tuned for more exciting features coming soon!

DownEdit

DownEdit is a fast and powerful program for downloading and editing videos from platforms like TikTok, Douyin, and Kuaishou. It allows users to effortlessly grab videos, make bulk edits, and utilize advanced AI features for generating videos, images, and sounds in bulk. The tool offers features like video, photo, and sound editing, downloading videos without watermarks, bulk AI generation, and AI editing for content enhancement.

AI0x0.com

AI 0x0 is a versatile AI query generation desktop floating assistant application that supports MacOS and Windows. It allows users to utilize AI capabilities in any desktop software to query and generate text, images, audio, and video data, helping them work more efficiently. The application features a dynamic desktop floating ball, floating dialogue bubbles, customizable presets, conversation bookmarking, preset packages, network acceleration, query mode, input mode, mouse navigation, deep customization of ChatGPT Next Web, support for full-format libraries, online search, voice broadcasting, voice recognition, voice assistant, application plugins, multi-model support, online text and image generation, image recognition, frosted glass interface, light and dark theme adaptation for each language model, and free access to all language models except Chat0x0 with a key.

ai-dev-kit

The AI Dev Kit is a comprehensive toolkit designed to enhance AI-driven development on Databricks. It provides trusted sources for AI coding assistants like Claude Code and Cursor to build faster and smarter on Databricks. The kit includes features such as Spark Declarative Pipelines, Databricks Jobs, AI/BI Dashboards, Unity Catalog, Genie Spaces, Knowledge Assistants, MLflow Experiments, Model Serving, Databricks Apps, and more. Users can choose from different adventures like installing the kit, using the visual builder app, teaching AI assistants Databricks patterns, executing Databricks actions, or building custom integrations with the core library. The kit also includes components like databricks-tools-core, databricks-mcp-server, databricks-skills, databricks-builder-app, and ai-dev-project.

For similar tasks

agents-js

LiveKit Agents for Node.js is a framework designed for building realtime, programmable voice agents that can see, hear, and understand. It includes support for OpenAI Realtime API, allowing for ultra-low latency WebRTC transport between GPT-4o and users' devices. The framework provides concepts like Agents, Workers, and Plugins to create complex tasks. It offers a CLI interface for running agents and a versatile web frontend called 'playground' for building and testing agents. The framework is suitable for developers looking to create conversational voice agents with advanced capabilities.

fastRAG

fastRAG is a research framework designed to build and explore efficient retrieval-augmented generative models. It incorporates state-of-the-art Large Language Models (LLMs) and Information Retrieval to empower researchers and developers with a comprehensive tool-set for advancing retrieval augmented generation. The framework is optimized for Intel hardware, customizable, and includes key features such as optimized RAG pipelines, efficient components, and RAG-efficient components like ColBERT and Fusion-in-Decoder (FiD). fastRAG supports various unique components and backends for running LLMs, making it a versatile tool for research and development in the field of retrieval-augmented generation.

AI.Labs

AI.Labs is an open-source project that integrates advanced artificial intelligence technologies to create a powerful AI platform. It focuses on integrating AI services like large language models, speech recognition, and speech synthesis for functionalities such as dialogue, voice interaction, and meeting transcription. The project also includes features like a large language model dialogue system, speech recognition for meeting transcription, speech-to-text voice synthesis, integration of translation and chat, and uses technologies like C#, .Net, SQLite database, XAF, OpenAI API, TTS, and STT.

LogChat

LogChat is an open-source and free AI chat client that supports various chat models and technologies such as ChatGPT, 讯飞星火, DeepSeek, LLM, TTS, STT, and Live2D. The tool provides a user-friendly interface designed using Qt Creator and can be used on Windows systems without any additional environment requirements. Users can interact with different AI models, perform voice synthesis and recognition, and customize Live2D character models. LogChat also offers features like language translation, AI platform integration, and menu items like screenshot editing, clock, and application launcher.

Friend

Friend is an open-source AI wearable device that records everything you say, gives you proactive feedback and advice. It has real-time AI audio processing capabilities, low-powered Bluetooth, open-source software, and a wearable design. The device is designed to be affordable and easy to use, with a total cost of less than $20. To get started, you can clone the repo, choose the version of the app you want to install, and follow the instructions for installing the firmware and assembling the device. Friend is still a prototype project and is provided "as is", without warranty of any kind. Use of the device should comply with all local laws and regulations concerning privacy and data protection.

agents

The LiveKit Agent Framework is designed for building real-time, programmable participants that run on servers. Easily tap into LiveKit WebRTC sessions and process or generate audio, video, and data streams. The framework includes plugins for common workflows, such as voice activity detection and speech-to-text. Agents integrates seamlessly with LiveKit server, offloading job queuing and scheduling responsibilities to it. This eliminates the need for additional queuing infrastructure. Agent code developed on your local machine can scale to support thousands of concurrent sessions when deployed to a server in production.

openvino-plugins-ai-audacity

OpenVINO™ AI Plugins for Audacity* are a set of AI-enabled effects, generators, and analyzers for Audacity®. These AI features run 100% locally on your PC -- no internet connection necessary! OpenVINO™ is used to run AI models on supported accelerators found on the user's system such as CPU, GPU, and NPU. * **Music Separation**: Separate a mono or stereo track into individual stems -- Drums, Bass, Vocals, & Other Instruments. * **Noise Suppression**: Removes background noise from an audio sample. * **Music Generation & Continuation**: Uses MusicGen LLM to generate snippets of music, or to generate a continuation of an existing snippet of music. * **Whisper Transcription**: Uses whisper.cpp to generate a label track containing the transcription or translation for a given selection of spoken audio or vocals.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.