Dive

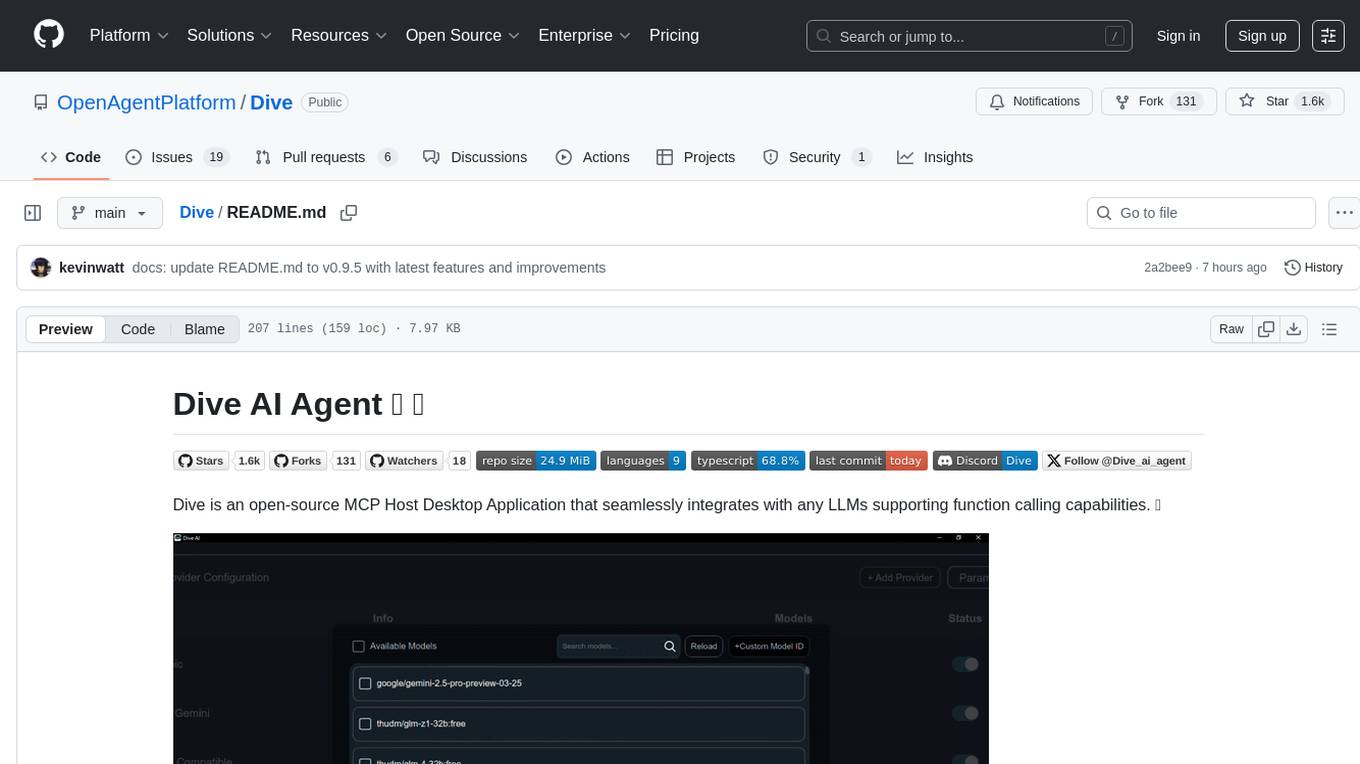

Dive is an open-source MCP Host Desktop Application that seamlessly integrates with any LLM that supports function calling. ✨

Stars: 1566

Dive is an open-source MCP Host desktop application that integrates with any LLM that supports function calling. It works with ChatGPT, Anthropic, Ollama, and OpenAI-compatible models, runs on Windows, macOS, and Linux, and connects to MCP tools either through local servers or through OAP cloud services. Other features include multi-language support, management of multiple API keys and models, per-tool enable/disable control, custom instructions, and automatic updates. Recent releases added a Tauri build alongside the existing Electron build, OAPHub.ai integration, and a restructured model configuration.

README:


- 🌐 Universal LLM Support: Compatible with ChatGPT, Anthropic, Ollama and OpenAI-compatible models

- 💻 Cross-Platform: Available for Windows, MacOS, and Linux

- 🔄 Model Context Protocol: Seamless MCP AI agent integration in both stdio and SSE modes

- ☁️ OAP Cloud Integration: One-click access to managed MCP servers via OAPHub.ai - eliminates complex local deployments

- 🏗️ Dual Architecture: Modern Tauri version alongside traditional Electron version for optimal performance

- 🌍 Multi-Language Support: Traditional Chinese, Simplified Chinese, English, Spanish, Japanese, Korean with more coming soon

- ⚙️ Advanced API Management: Multiple API keys and model switching support with `model_settings.json`

- 🛠️ Granular Tool Control: Enable/disable individual MCP tools for precise customization

- 💡 Custom Instructions: Personalized system prompts for tailored AI behavior

- 🔄 Auto-Update Mechanism: Automatically checks for and installs the latest application updates

- 🏗️ Dual Architecture Support: Dive now supports both Electron and Tauri frameworks simultaneously

- ⚡ Tauri Version: New modern architecture with optimized installer size (Windows < 30MB)

- 🌐 OAP Platform Integration: Native support for OAPHub.ai cloud services with one-click MCP server deployment

- 🔐 OAP Authentication: Comprehensive OAP login and authentication support

- 📁 Enhanced Model Configuration: Complete restructuring with `model_settings.json` for managing multiple models

- 🛠️ Granular MCP Control: Individual tool enable/disable functionality for better customization

- 🎨 UI/UX Enhancements: Streamlined settings interface with combined pages for better user experience

- 🔧 Improved Network Handling: Enhanced port resolution logic with interval polling for better connectivity

- ⚙️ Enhanced Model Settings: Improved OpenAI compatible model settings and tool integration in prompts

- 🐧 Linux Tauri Support: Full Tauri framework support now available on Linux platforms

- 📦 Smart Dependency Management: Automatic detection and updating of MCP host dependencies

- 🔄 Updated dive-mcp-host: Latest architectural improvements incorporated

- Windows: Available in both Electron and Tauri versions ✅

- macOS: Currently Electron only 🔜

- Linux: Available in both Electron and Tauri versions ✅

Migration Note: Existing local MCP/LLM configurations remain fully supported. OAP integration is additive and does not affect current workflows.

Get the latest version of Dive:

On Windows, choose between two architectures:

- Tauri Version (Recommended): Smaller installer (<30MB), modern architecture

- Electron Version: Traditional architecture, fully stable

- Python and Node.js environments will be downloaded automatically after launching

On macOS:

- Electron Version: Download the .dmg version

- You need to install Python and Node.js (with npx and uvx) environments yourself

- Follow the installation prompts to complete setup

On Linux, choose between two architectures:

- Tauri Version (Recommended): Modern architecture with smaller installer size

- Electron Version: Traditional architecture with .AppImage format

- You need to install Python and Node.js (with npx and uvx) environments yourself

- For Ubuntu/Debian users:

  - You may need to add `--no-sandbox` parameter

  - Or modify system settings to allow sandbox

  - Run `chmod +x` to make the AppImage executable
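Where Dive does not download its runtimes automatically, a quick pre-flight check can show which tools are still missing before the first launch. The sketch below is not part of Dive: `missing_tools` is a hypothetical helper, and the tool list is an assumption drawn from the requirements above (the Python executable may be named `python` rather than `python3` on some systems).

```python
import shutil

# Assumed tool list, based on the install notes above; adjust as needed.
REQUIRED_TOOLS = ["python3", "node", "npx", "uvx"]


def missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the names in `tools` that cannot be found on PATH."""
    return [name for name in tools if which(name) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("All required tools were found.")
```

The `which` parameter exists only so the lookup can be swapped out in tests. The same helper could be pointed at other prerequisites by passing a different list of names.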

Dive offers two ways to access MCP tools: OAP Cloud Services (recommended for beginners) and Local MCP Servers (for advanced users).

Local MCP Servers suit advanced users who prefer local control. The system comes with a default echo MCP Server, and you can add more powerful tools like Fetch and Youtube-dl.

OAP Cloud Services are the easiest way to get started. Access enterprise-grade MCP tools instantly:

- Sign up at OAPHub.ai

- Connect to Dive using one-click deep links or configuration files

- Enjoy managed MCP servers with zero setup - no Python, Docker, or complex dependencies required

Benefits:

- ✅ Zero configuration needed

- ✅ Cross-platform compatibility

- ✅ Enterprise-grade reliability

- ✅ Automatic updates and maintenance

Add this JSON configuration to your Dive MCP settings to enable local tools:

```json
{
  "mcpServers": {
    "fetch": {
      "command": "uvx",
      "args": [
        "mcp-server-fetch",
        "--ignore-robots-txt"
      ],
      "enabled": true
    },
    "filesystem": {
      "command": "npx",
      "args": [
        "-y",
        "@modelcontextprotocol/server-filesystem",
        "/path/to/allowed/files"
      ],
      "enabled": true
    },
    "youtubedl": {
      "command": "npx",
      "args": [
        "@kevinwatt/yt-dlp-mcp"
      ],
      "enabled": true
    }
  }
}
```

You can connect to external cloud MCP servers via Streamable HTTP transport. Here's the Dive configuration example for SearXNG service from OAPHub:

```json
{
  "mcpServers": {
    "SearXNG_MCP_Server": {
      "transport": "streamable",
      "url": "https://proxy.oaphub.ai/v1/mcp/181672830075666436",
      "headers": {
        "Authorization": "GLOBAL_CLIENT_TOKEN"
      }
    }
  }
}
```

Reference: https://oaphub.ai/mcp/181672830075666436
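Local entries launch a process while remote entries point at a URL, so it can help to see what a given configuration would start or contact. The following is a minimal sketch, not Dive's own loader: `describe_servers` is a hypothetical helper, and treating a missing `enabled` key as enabled is an assumption made because the Streamable HTTP example above omits that key.

```python
import json
import shlex


def describe_servers(config):
    """Summarise each enabled entry under "mcpServers" as a readable line."""
    lines = []
    for name, entry in config.get("mcpServers", {}).items():
        # Assumption: an entry without "enabled" counts as enabled.
        if not entry.get("enabled", True):
            continue
        if "command" in entry:
            # Local (stdio) server: show the command line that would be run.
            argv = [entry["command"], *entry.get("args", [])]
            lines.append(f"{name}: local -> {shlex.join(argv)}")
        elif "url" in entry:
            # Remote server: show the declared transport and endpoint.
            transport = entry.get("transport", "unknown")
            lines.append(f"{name}: {transport} -> {entry['url']}")
        else:
            raise ValueError(f"{name}: entry needs either 'command' or 'url'")
    return lines


EXAMPLE = json.loads("""
{
  "mcpServers": {
    "fetch": {
      "command": "uvx",
      "args": ["mcp-server-fetch", "--ignore-robots-txt"],
      "enabled": true
    },
    "SearXNG_MCP_Server": {
      "transport": "streamable",
      "url": "https://proxy.oaphub.ai/v1/mcp/181672830075666436",
      "headers": {"Authorization": "GLOBAL_CLIENT_TOKEN"}
    }
  }
}
""")

for line in describe_servers(EXAMPLE):
    print(line)
```

The summary deliberately leaves out `headers`, since in a real configuration that field would hold a token that should not end up in logs.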

You can also connect to external MCP servers (not local ones) via SSE (Server-Sent Events). Add this configuration to your Dive MCP settings:

```json
{
  "mcpServers": {
    "MCP_SERVER_NAME": {
      "enabled": true,
      "transport": "sse",
      "url": "YOUR_SSE_SERVER_URL"
    }
  }
}
```

yt-dlp-mcp requires the yt-dlp package. Install it based on your operating system:

```shell
# Windows
winget install yt-dlp

# macOS
brew install yt-dlp

# Linux
pip install yt-dlp
```

See BUILD.md for more details.

- 💬 Join our Discord

- 🐦 Follow us on Twitter/X Reddit Thread

- ⭐ Star us on GitHub

- 🐛 Report issues on our Issue Tracker


Alternative AI tools for Dive

Similar Open Source Tools


tingly-box

Tingly Box is a tool that helps in deciding which model to call, compressing context, and routing requests efficiently. It offers secure, reliable, and customizable functional extensions. With features like unified API, smart routing, context compression, auto API translation, blazing fast performance, flexible authentication, visual control panel, and client-side usage stats, Tingly Box provides a comprehensive solution for managing AI models and tokens. It supports integration with various IDEs, CLI tools, SDKs, and AI applications, making it versatile and easy to use. The tool also allows seamless integration with OAuth providers like Claude Code, enabling users to utilize existing quotas in OpenAI-compatible tools. Tingly Box aims to simplify AI model management and usage by providing a single endpoint for multiple providers with minimal configuration, promoting seamless integration with SDKs and CLI tools.

astron-rpa

AstronRPA is an enterprise-grade Robotic Process Automation (RPA) desktop application that supports low-code/no-code development. It enables users to rapidly build workflows and automate desktop software and web pages. The tool offers comprehensive automation support for various applications, highly component-based design, enterprise-grade security and collaboration features, developer-friendly experience, native agent empowerment, and multi-channel trigger integration. It follows a frontend-backend separation architecture with components for system operations, browser automation, GUI automation, AI integration, and more. The tool is deployed via Docker and designed for complex RPA scenarios.

MassGen

MassGen is a cutting-edge multi-agent system that leverages the power of collaborative AI to solve complex tasks. It assigns a task to multiple AI agents who work in parallel, observe each other's progress, and refine their approaches to converge on the best solution to deliver a comprehensive and high-quality result. The system operates through an architecture designed for seamless multi-agent collaboration, with key features including cross-model/agent synergy, parallel processing, intelligence sharing, consensus building, and live visualization. Users can install the system, configure API settings, and run MassGen for various tasks such as question answering, creative writing, research, development & coding tasks, and web automation & browser tasks. The roadmap includes plans for advanced agent collaboration, expanded model, tool & agent integration, improved performance & scalability, enhanced developer experience, and a web interface.

inference-gateway

The Inference Gateway is an open-source proxy server designed to simplify access to various language model APIs. It allows users to interact with different language models through a unified interface, stream tokens in real-time, process images alongside text, and use Docker or Kubernetes for deployment. The gateway supports Model Context Protocol integration, provides metrics and observability features, and is production-ready with minimal resource consumption. It offers middleware control and bypass mechanisms, enabling users to manage capabilities like MCP and vision support. The CLI tool provides status monitoring, interactive chat, configuration management, project initialization, and tool execution functionalities. The project aims to provide a flexible solution for AI Agents, supporting self-hosted LLMs and avoiding vendor lock-in.

BodhiApp

Bodhi App runs Open Source Large Language Models locally, exposing LLM inference capabilities as OpenAI API compatible REST APIs. It leverages llama.cpp for GGUF format models and huggingface.co ecosystem for model downloads. Users can run fine-tuned models for chat completions, create custom aliases, and convert Huggingface models to GGUF format. The CLI offers commands for environment configuration, model management, pulling files, serving API, and more.

local-cocoa

Local Cocoa is a privacy-focused tool that runs entirely on your device, turning files into memory to spark insights and power actions. It offers features like fully local privacy, multimodal memory, vector-powered retrieval, intelligent indexing, vision understanding, hardware acceleration, focused user experience, integrated notes, and auto-sync. The tool combines file ingestion, intelligent chunking, and local retrieval to build a private on-device knowledge system. The ultimate goal includes more connectors like Google Drive integration, voice mode for local speech-to-text interaction, and a plugin ecosystem for community tools and agents. Local Cocoa is built using Electron, React, TypeScript, FastAPI, llama.cpp, and Qdrant.

mistral.rs

Mistral.rs is a fast LLM inference platform written in Rust. It supports inference on a variety of devices, quantization, and easy application integration through an OpenAI API-compatible HTTP server and Python bindings.

hyper-mcp

hyper-mcp is a fast and secure MCP server that extends its capabilities through WebAssembly plugins. It makes it easy to add AI capabilities to applications by allowing users to write plugins in any language that compiles to WebAssembly, distribute them via standard OCI registries, and run them anywhere from cloud to edge. The tool is built with a security-first mindset, offering sandboxed plugins, memory-safe execution, secure plugin distribution, and fine-grained access control for host functions. Users can deploy hyper-mcp anywhere, benefit from cross-platform compatibility, and prevent tool name collisions with the tool name prefix feature.

AIClient-2-API

AIClient-2-API is a versatile and lightweight API proxy designed for developers, providing ample free API request quotas and comprehensive support for various mainstream large models like Gemini, Qwen Code, Claude, etc. It converts multiple backend APIs into standard OpenAI format interfaces through a Node.js HTTP server. The project adopts a modern modular architecture, supports strategy and adapter patterns, comes with complete test coverage and health check mechanisms, and is ready to use after 'npm install'. By easily switching model service providers in the configuration file, any OpenAI-compatible client or application can seamlessly access different large model capabilities through the same API address, eliminating the hassle of maintaining multiple sets of configurations for different services and dealing with incompatible interfaces.

openwhispr

OpenWhispr is an open source desktop dictation application that converts speech to text using OpenAI Whisper. It features both local and cloud processing options for maximum flexibility and privacy. The application supports multiple AI providers, customizable hotkeys, agent naming, and various AI processing models. It offers a modern UI built with React 19, TypeScript, and Tailwind CSS v4, and is optimized for speed using Vite and modern tooling. Users can manage settings, view history, configure API keys, and download/manage local Whisper models. The application is cross-platform, supporting macOS, Windows, and Linux, and offers features like automatic pasting, draggable interface, global hotkeys, and compound hotkeys.

figma-console-mcp

Figma Console MCP is a Model Context Protocol server that bridges design and development, giving AI assistants complete access to Figma for extraction, creation, and debugging. It connects AI assistants like Claude to Figma, enabling plugin debugging, visual debugging, design system extraction, design creation, variable management, real-time monitoring, and three installation methods. The server offers 53+ tools for NPX and Local Git setups, while Remote SSE provides read-only access with 16 tools. Users can create and modify designs with AI, contribute to projects, or explore design data. The server supports authentication via personal access tokens and OAuth, and offers tools for navigation, console debugging, visual debugging, design system extraction, design creation, design-code parity, variable management, and AI-assisted design creation.

Claw-Hunter

Claw Hunter is a discovery and risk-assessment tool for OpenClaw instances, designed to identify 'Shadow AI' and audit agent privileges. It helps ITSec teams detect security risks, credential exposure, integration inventory, configuration issues, and installation status. The tool offers system-agnostic visibility, MDM readiness, non-intrusive operations, comprehensive detection, structured output in JSON format, and zero dependencies. It provides silent execution mode for automated deployment, machine identification, security risk scoring, results upload to a central API endpoint, bearer token authentication support, and persistent logging. Claw Hunter offers proper exit codes for automation and is available for macOS, Linux, and Windows platforms.

InsForge

InsForge is a backend development platform designed for AI coding agents and AI code editors. It serves as a semantic layer that enables agents to interact with backend primitives such as databases, authentication, storage, and functions in a meaningful way. The platform allows agents to fetch backend context, configure primitives, and inspect backend state through structured schemas. InsForge facilitates backend context engineering for AI coding agents to understand, operate, and monitor backend systems effectively.

BubbleLab

Bubble Lab is an open-source agentic workflow automation builder designed for developers seeking full control, transparency, and type safety. It compiles workflows into clean, production-ready TypeScript code that can be debugged and deployed anywhere. With features like natural language prompt to workflow generation, full observability, seamless migration from other platforms, and instant export as TypeScript/API, Bubble Lab offers a flexible and code-centric approach to workflow automation.

curiso

Curiso AI is an infinite canvas platform that connects nodes and AI services to explore ideas without repetition. It empowers advanced users to unlock richer AI interactions. Features include multi OS support, infinite canvas, multiple AI provider integration, local AI inference provider integration, custom model support, model metrics, RAG support, local Transformers.js embedding models, inference parameters customization, multiple boards, vision model support, customizable interface, node-based conversations, and secure local encrypted storage. Curiso also offers a Solana token for exclusive access to premium features and enhanced AI capabilities.

For similar tasks


griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.

tts-generation-webui

TTS Generation WebUI is a comprehensive tool that provides a user-friendly interface for text-to-speech and voice cloning tasks. It integrates various AI models such as Bark, MusicGen, AudioGen, Tortoise, RVC, Vocos, Demucs, SeamlessM4T, and MAGNeT. The tool offers one-click installers, Google Colab demo, videos for guidance, and extra voices for Bark. Users can generate audio outputs, manage models, caches, and system space for AI projects. The project is open-source and emphasizes ethical and responsible use of AI technology.

Thor

Thor is a powerful AI model management tool designed for unified management and usage of various AI models. It offers features such as user, channel, and token management, data statistics preview, log viewing, system settings, external chat link integration, and Alipay account balance purchase. Thor supports multiple AI models including OpenAI, Kimi, Starfire, Claudia, Zhilu AI, Ollama, Tongyi Qianwen, AzureOpenAI, and Tencent Hybrid models. It also supports various databases like SqlServer, PostgreSql, Sqlite, and MySql, allowing users to choose the appropriate database based on their needs.

VoAPI

VoAPI is a new high-value/high-performance AI model interface management and distribution system. It is a closed-source tool for personal learning use only, not for commercial purposes. Users must comply with upstream AI model service providers and legal regulations. The system offers a visually appealing interface, independent development documentation page support, service monitoring page configuration support, and third-party login support. It also optimizes interface elements, user registration time support, data operation button positioning, and more.

VoAPI

VoAPI is a new high-value/high-performance AI model interface management and distribution system. It is a closed-source tool for personal learning use only, not for commercial purposes. Users must comply with upstream AI model service providers and legal regulations. The system offers a visually appealing interface with features such as independent development documentation page support, service monitoring page configuration support, and third-party login support. Users can manage user registration time, optimize interface elements, and support features like online recharge, model pricing display, and sensitive word filtering. VoAPI also provides support for various AI models and platforms, with the ability to configure homepage templates, model information, and manufacturer information.

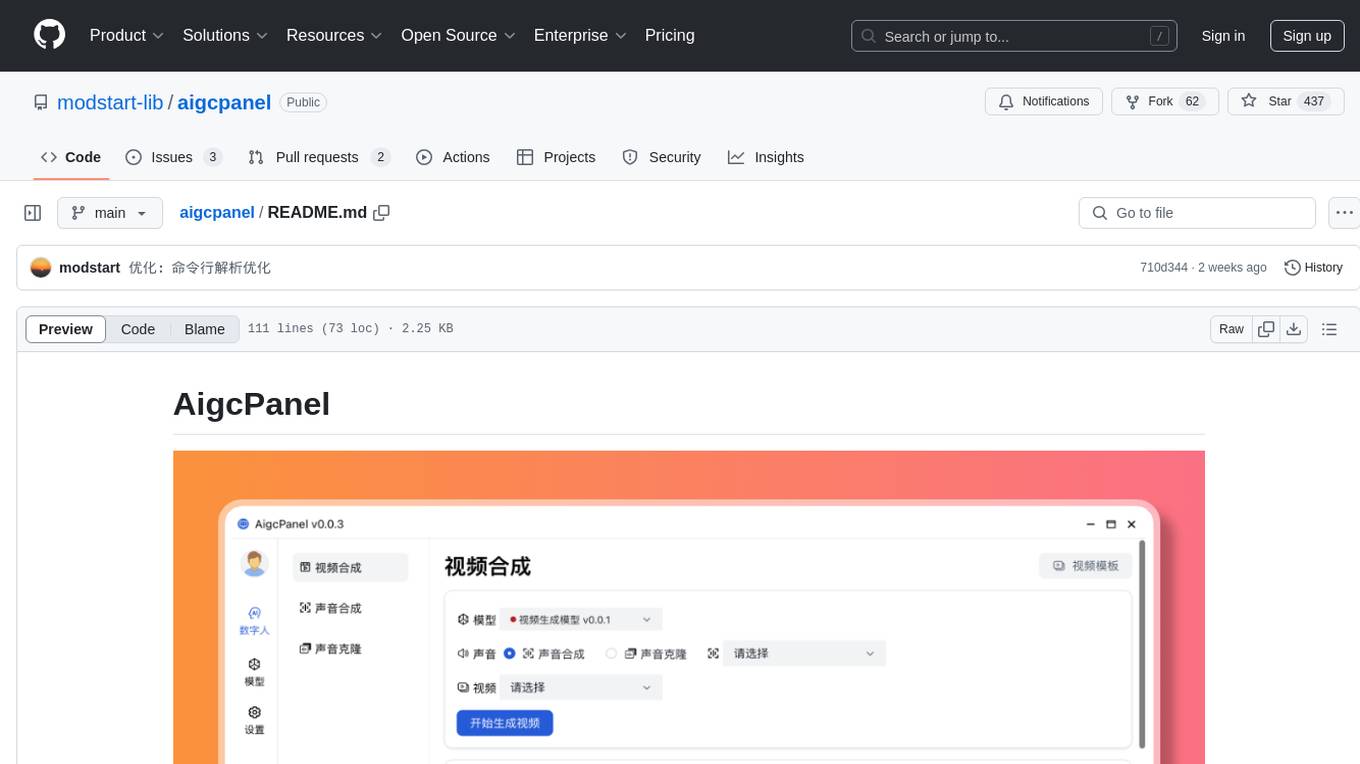

aigcpanel

AigcPanel is a simple and easy-to-use all-in-one AI digital human system that even beginners can use. It supports video synthesis, voice synthesis, voice cloning, simplifies local model management, and allows one-click import and use of AI models. It prohibits the use of this product for illegal activities and users must comply with the laws and regulations of the People's Republic of China.

solo-server

Solo Server is a lightweight server designed for managing hardware-aware inference. It provides seamless setup through a simple CLI and HTTP servers, an open model registry for pulling models from platforms like Ollama and Hugging Face, cross-platform compatibility for effortless deployment of AI models on hardware, and a configurable framework that auto-detects hardware components (CPU, GPU, RAM) and sets optimal configurations.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our overview of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual anchors (VTubers), built for NVIDIA GPUs. It supports knowledge-base chat through fastgpt, with a complete LLM stack of [fastgpt] + [one-api] + [Xinference]. It can reply to bilibili live-stream bullet comments and greet viewers entering the stream. Speech synthesis is available through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS. It also supports expression control via Vtuber Studio, image generation with stable-diffusion-webui output to the OBS live room, NSFW image detection (public-NSFW-y-distinguish), search and image search via duckduckgo (requires a proxy for access), image search via Baidu (no proxy required), an AI reply chat box [html plug-in], AI singing (Auto-Convert-Music), and a playlist [html plug-in]. Avatar actions include dancing, expression video playback, head touching, gift smashing, automatic dancing when singing starts, and an automatic swaying loop during chat and singing. Scenes support multi-scene switching, background music switching, and automatic day/night switching. Singing and painting can be left open so that the AI judges the content automatically.