presenton

Open-Source AI Presentation Generator and API (Gamma, Beautiful AI, Decktopus Alternative)

Stars: 1882

Presenton is an open-source AI presentation generator and API that allows users to create professional presentations locally on their devices. It offers complete control over the presentation workflow, including custom templates, AI template generation, flexible generation options, and export capabilities. Users can use their own API keys for various models, integrate with Ollama for local model running, and connect to OpenAI-compatible endpoints. The tool supports multiple providers for text and image generation, runs locally without cloud dependencies, and can be deployed as a Docker container with GPU support.

README:

Presenton is an open-source application for generating presentations with AI — all running locally on your device. Stay in control of your data and privacy while using models like OpenAI and Gemini, or use your own hosted models through Ollama.

✨ Now, generate presentations with your existing PPTX file! Just upload your presentation file to create template design and then use that template to generate on brand and on design presentation on any topic.

[!NOTE] Enterprise Inquiries: For enterprise use, custom deployments, or partnership opportunities, contact us at [email protected].

[!IMPORTANT] Like Presenton? A ⭐ star shows your support and encourages us to keep building!

[!TIP] For detailed setup guides, API documentation, and advanced configuration options, visit our Official Documentation

Presenton gives you complete control over your AI presentation workflow. Choose your models, customize your experience, and keep your data private.

- ✅ Custom Templates & Themes — Create unlimited presentation designs with HTML and Tailwind CSS

- ✅ AI Template Generation — Create presentation templates from existing Powerpoint documents.

- ✅ Flexible Generation — Build presentations from prompts or uploaded documents

- ✅ Export Ready — Save as PowerPoint (PPTX) and PDF with professional formatting

- ✅ Built-In MCP Server — Generate presentations over Model Context Protocol

- ✅ Bring Your Own Key — Use your own API keys for OpenAI, Google Gemini, Anthropic Claude, or any compatible provider. Only pay for what you use, no hidden fees or subscriptions.

- ✅ Ollama Integration — Run open-source models locally with full privacy

- ✅ OpenAI API Compatible — Connect to any OpenAI-compatible endpoint with your own models

- ✅ Multi-Provider Support — Mix and match text and image generation providers

- ✅ Versatile Image Generation — Choose from DALL-E 3, Gemini Flash, Pexels, or Pixabay

- ✅ Rich Media Support — Icons, charts, and custom graphics for professional presentations

- ✅ Runs Locally — All processing happens on your device, no cloud dependencies

- ✅ API Deployment — Host as your own API service for your team

- ✅ Fully Open-Source — Apache 2.0 licensed, inspect, modify, and contribute

- ✅ Docker Ready — One-command deployment with GPU support for local models

We're launching Presenton Cloud which will make it very easy to create presentations through UI, API and MCP. Join our waitlist for early beta.

docker run -it --name presenton -p 5000:80 -v "./app_data:/app_data" ghcr.io/presenton/presenton:latestdocker run -it --name presenton -p 5000:80 -v "${PWD}\app_data:/app_data" ghcr.io/presenton/presenton:latestOpen http://localhost:5000 on browser of your choice to use Presenton.

Note: You can replace 5000 with any other port number of your choice to run Presenton on a different port number.

You may want to directly provide your API KEYS as environment variables and keep them hidden. You can set these environment variables to achieve it.

- CAN_CHANGE_KEYS=[true/false]: Set this to false if you want to keep API Keys hidden and make them unmodifiable.

- LLM=[openai/google/anthropic/ollama/custom]: Select LLM of your choice.

- OPENAI_API_KEY=[Your OpenAI API Key]: Provide this if LLM is set to openai

- OPENAI_MODEL=[OpenAI Model ID]: Provide this if LLM is set to openai (default: "gpt-4.1")

- GOOGLE_API_KEY=[Your Google API Key]: Provide this if LLM is set to google

- GOOGLE_MODEL=[Google Model ID]: Provide this if LLM is set to google (default: "models/gemini-2.0-flash")

- ANTHROPIC_API_KEY=[Your Anthropic API Key]: Provide this if LLM is set to anthropic

- ANTHROPIC_MODEL=[Anthropic Model ID]: Provide this if LLM is set to anthropic (default: "claude-3-5-sonnet-20241022")

- OLLAMA_URL=[Custom Ollama URL]: Provide this if you want to custom Ollama URL and LLM is set to ollama

- OLLAMA_MODEL=[Ollama Model ID]: Provide this if LLM is set to ollama

- CUSTOM_LLM_URL=[Custom OpenAI Compatible URL]: Provide this if LLM is set to custom

- CUSTOM_LLM_API_KEY=[Custom OpenAI Compatible API KEY]: Provide this if LLM is set to custom

- CUSTOM_MODEL=[Custom Model ID]: Provide this if LLM is set to custom

- TOOL_CALLS=[Enable/Disable Tool Calls on Custom LLM]: If true, LLM will use Tool Call instead of Json Schema for Structured Output.

- DISABLE_THINKING=[Enable/Disable Thinking on Custom LLM]: If true, Thinking will be disabled.

- WEB_GROUNDING=[Enable/Disable Web Search for OpenAI, Google And Anthropic]: If true, LLM will be able to search web for better results.

You can also set the following environment variables to customize the image generation provider and API keys:

-

IMAGE_PROVIDER=[pexels/pixabay/gemini_flash/dall-e-3]: Select the image provider of your choice.

- Defaults to dall-e-3 for OpenAI models, gemini_flash for Google models if not set.

- PEXELS_API_KEY=[Your Pexels API Key]: Required if using pexels as the image provider.

- PIXABAY_API_KEY=[Your Pixabay API Key]: Required if using pixabay as the image provider.

- GOOGLE_API_KEY=[Your Google API Key]: Required if using gemini_flash as the image provider.

- OPENAI_API_KEY=[Your OpenAI API Key]: Required if using dall-e-3 as the image provider.

You can disable anonymous telemetry using the following environment variable:

- DISABLE_ANONYMOUS_TELEMETRY=[true/false]: Set this to true to disable anonymous telemetry.

Note: You can freely choose both the LLM (text generation) and the image provider. Supported image providers: pexels, pixabay, gemini_flash (Google), and dall-e-3 (OpenAI).

docker run -it --name presenton -p 5000:80 -e LLM="openai" -e OPENAI_API_KEY="******" -e IMAGE_PROVIDER="dall-e-3" -e CAN_CHANGE_KEYS="false" -v "./app_data:/app_data" ghcr.io/presenton/presenton:latestdocker run -it --name presenton -p 5000:80 -e LLM="google" -e GOOGLE_API_KEY="******" -e IMAGE_PROVIDER="gemini_flash" -e CAN_CHANGE_KEYS="false" -v "./app_data:/app_data" ghcr.io/presenton/presenton:latestdocker run -it --name presenton -p 5000:80 -e LLM="ollama" -e OLLAMA_MODEL="llama3.2:3b" -e IMAGE_PROVIDER="pexels" -e PEXELS_API_KEY="*******" -e CAN_CHANGE_KEYS="false" -v "./app_data:/app_data" ghcr.io/presenton/presenton:latestdocker run -it --name presenton -p 5000:80 -e LLM="anthropic" -e ANTHROPIC_API_KEY="******" -e IMAGE_PROVIDER="pexels" -e PEXELS_API_KEY="******" -e CAN_CHANGE_KEYS="false" -v "./app_data:/app_data" ghcr.io/presenton/presenton:latestdocker run -it -p 5000:80 -e CAN_CHANGE_KEYS="false" -e LLM="custom" -e CUSTOM_LLM_URL="http://*****" -e CUSTOM_LLM_API_KEY="*****" -e CUSTOM_MODEL="llama3.2:3b" -e IMAGE_PROVIDER="pexels" -e PEXELS_API_KEY="********" -v "./app_data:/app_data" ghcr.io/presenton/presenton:latestTo use GPU acceleration with Ollama models, you need to install and configure the NVIDIA Container Toolkit. This allows Docker containers to access your NVIDIA GPU.

Once the NVIDIA Container Toolkit is installed and configured, you can run Presenton with GPU support by adding the --gpus=all flag:

docker run -it --name presenton --gpus=all -p 5000:80 -e LLM="ollama" -e OLLAMA_MODEL="llama3.2:3b" -e IMAGE_PROVIDER="pexels" -e PEXELS_API_KEY="*******" -e CAN_CHANGE_KEYS="false" -v "./app_data:/app_data" ghcr.io/presenton/presenton:latestNote: GPU acceleration significantly improves the performance of Ollama models, especially for larger models. Make sure you have sufficient GPU memory for your chosen model.

Endpoint: /api/v1/ppt/presentation/generate

Method: POST

Content-Type: application/json

| Parameter | Type | Required | Description |

|---|---|---|---|

| prompt | string | Yes | The main topic or prompt for generating the presentation |

| n_slides | integer | No | Number of slides to generate (default: 8, min: 5, max: 15) |

| language | string | No | Language for the presentation (default: "English") |

| template | string | No | Presentation template (default: "general"). Available options: "classic", "general", "modern", "professional" + Custom templates |

| export_as | string | No | Export format ("pptx" or "pdf", default: "pptx") |

{

"presentation_id": "string",

"path": "string",

"edit_path": "string"

}curl -X POST http://localhost:5000/api/v1/ppt/presentation/generate \

-H "Content-Type: application/json" \

-d '{

"prompt": "Introduction to Machine Learning",

"n_slides": 5,

"language": "English",

"template": "general",

"export_as": "pptx"

}'{

"presentation_id": "d3000f96-096c-4768-b67b-e99aed029b57",

"path": "/static/user_data/d3000f96-096c-4768-b67b-e99aed029b57/Introduction_to_Machine_Learning.pptx",

"edit_path": "/presentation?id=d3000f96-096c-4768-b67b-e99aed029b57"

}Note: Make sure to prepend your server's root URL to the path and edit_path fields in the response to construct valid links.

For detailed info checkout API documentation.

- Generate Presentations via API in 5 minutes

- Create Presentations from CSV using AI

- Create Data Reports Using AI

- [x] Support for custom HTML templates by developers

- [x] Support for accessing custom templates over API

- [x] Implement MCP server

- [ ] Ability for users to change system prompt

- [X] Support external SQL database

Apache 2.0

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for presenton

Similar Open Source Tools

presenton

Presenton is an open-source AI presentation generator and API that allows users to create professional presentations locally on their devices. It offers complete control over the presentation workflow, including custom templates, AI template generation, flexible generation options, and export capabilities. Users can use their own API keys for various models, integrate with Ollama for local model running, and connect to OpenAI-compatible endpoints. The tool supports multiple providers for text and image generation, runs locally without cloud dependencies, and can be deployed as a Docker container with GPU support.

osaurus

Osaurus is a native, Apple Silicon-only local LLM server built on Apple's MLX for maximum performance on M‑series chips. It is a SwiftUI app + SwiftNIO server with OpenAI‑compatible and Ollama‑compatible endpoints. The tool supports native MLX text generation, model management, streaming and non‑streaming chat completions, OpenAI‑compatible function calling, real-time system resource monitoring, and path normalization for API compatibility. Osaurus is designed for macOS 15.5+ and Apple Silicon (M1 or newer) with Xcode 16.4+ required for building from source.

handit.ai

Handit.ai is an autonomous engineer tool designed to fix AI failures 24/7. It catches failures, writes fixes, tests them, and ships PRs automatically. It monitors AI applications, detects issues, generates fixes, tests them against real data, and ships them as pull requests—all automatically. Users can write JavaScript, TypeScript, Python, and more, and the tool automates what used to require manual debugging and firefighting.

lighteval

LightEval is a lightweight LLM evaluation suite that Hugging Face has been using internally with the recently released LLM data processing library datatrove and LLM training library nanotron. We're releasing it with the community in the spirit of building in the open. Note that it is still very much early so don't expect 100% stability ^^' In case of problems or question, feel free to open an issue!

tensorzero

TensorZero is an open-source platform that helps LLM applications graduate from API wrappers into defensible AI products. It enables a data & learning flywheel for LLMs by unifying inference, observability, optimization, and experimentation. The platform includes a high-performance model gateway, structured schema-based inference, observability, experimentation, and data warehouse for analytics. TensorZero Recipes optimize prompts and models, and the platform supports experimentation features and GitOps orchestration for deployment.

AutoAgents

AutoAgents is a cutting-edge multi-agent framework built in Rust that enables the creation of intelligent, autonomous agents powered by Large Language Models (LLMs) and Ractor. Designed for performance, safety, and scalability. AutoAgents provides a robust foundation for building complex AI systems that can reason, act, and collaborate. With AutoAgents you can create Cloud Native Agents, Edge Native Agents and Hybrid Models as well. It is so extensible that other ML Models can be used to create complex pipelines using Actor Framework.

evi-run

evi-run is a powerful, production-ready multi-agent AI system built on Python using the OpenAI Agents SDK. It offers instant deployment, ultimate flexibility, built-in analytics, Telegram integration, and scalable architecture. The system features memory management, knowledge integration, task scheduling, multi-agent orchestration, custom agent creation, deep research, web intelligence, document processing, image generation, DEX analytics, and Solana token swap. It supports flexible usage modes like private, free, and pay mode, with upcoming features including NSFW mode, task scheduler, and automatic limit orders. The technology stack includes Python 3.11, OpenAI Agents SDK, Telegram Bot API, PostgreSQL, Redis, and Docker & Docker Compose for deployment.

llxprt-code

LLxprt Code is an AI-powered coding assistant that works with any LLM provider, offering a command-line interface for querying and editing codebases, generating applications, and automating development workflows. It supports various subscriptions, provider flexibility, top open models, local model support, and a privacy-first approach. Users can interact with LLxprt Code in both interactive and non-interactive modes, leveraging features like subscription OAuth, multi-account failover, load balancer profiles, and extensive provider support. The tool also allows for the creation of advanced subagents for specialized tasks and integrates with the Zed editor for in-editor chat and code selection.

J.A.R.V.I.S.2.0

J.A.R.V.I.S. 2.0 is an AI-powered assistant designed for voice commands, capable of tasks like providing weather reports, summarizing news, sending emails, and more. It features voice activation, speech recognition, AI responses, and handles multiple tasks including email sending, weather reports, news reading, image generation, database functions, phone call automation, AI-based task execution, website & application automation, and knowledge-based interactions. The assistant also includes timeout handling, automatic input processing, and the ability to call multiple functions simultaneously. It requires Python 3.9 or later and specific API keys for weather, news, email, and AI access. The tool integrates Gemini AI for function execution and Ollama as a fallback mechanism. It utilizes a RAG-based knowledge system and ADB integration for phone automation. Future enhancements include deeper mobile integration, advanced AI-driven automation, improved NLP-based command execution, and multi-modal interactions.

AionUi

AionUi is a user interface library for building modern and responsive web applications. It provides a set of customizable components and styles to create visually appealing user interfaces. With AionUi, developers can easily design and implement interactive web interfaces that are both functional and aesthetically pleasing. The library is built using the latest web technologies and follows best practices for performance and accessibility. Whether you are working on a personal project or a professional application, AionUi can help you streamline the UI development process and deliver a seamless user experience.

layra

LAYRA is the world's first visual-native AI automation engine that sees documents like a human, preserves layout and graphical elements, and executes arbitrarily complex workflows with full Python control. It empowers users to build next-generation intelligent systems with no limits or compromises. Built for Enterprise-Grade deployment, LAYRA features a modern frontend, high-performance backend, decoupled service architecture, visual-native multimodal document understanding, and a powerful workflow engine.

CBbot

CBbot is an AI-powered coding assistant for macOS that helps users write code more efficiently, process documents, and automate tasks. It offers easy installation, built-in AI coding capabilities, auto configuration, and smart tools. Users can download CBbot for macOS 10.15 or higher, with Apple Silicon or Intel chip, and at least 6GB memory and 10GB disk space. The tool requires an internet connection for AI features. CBbot assists users in installing Docker Desktop, binding keys, troubleshooting, and using various skills for document processing and automation tasks. It also provides community support, billing based on usage, and network tips for using overseas AI models.

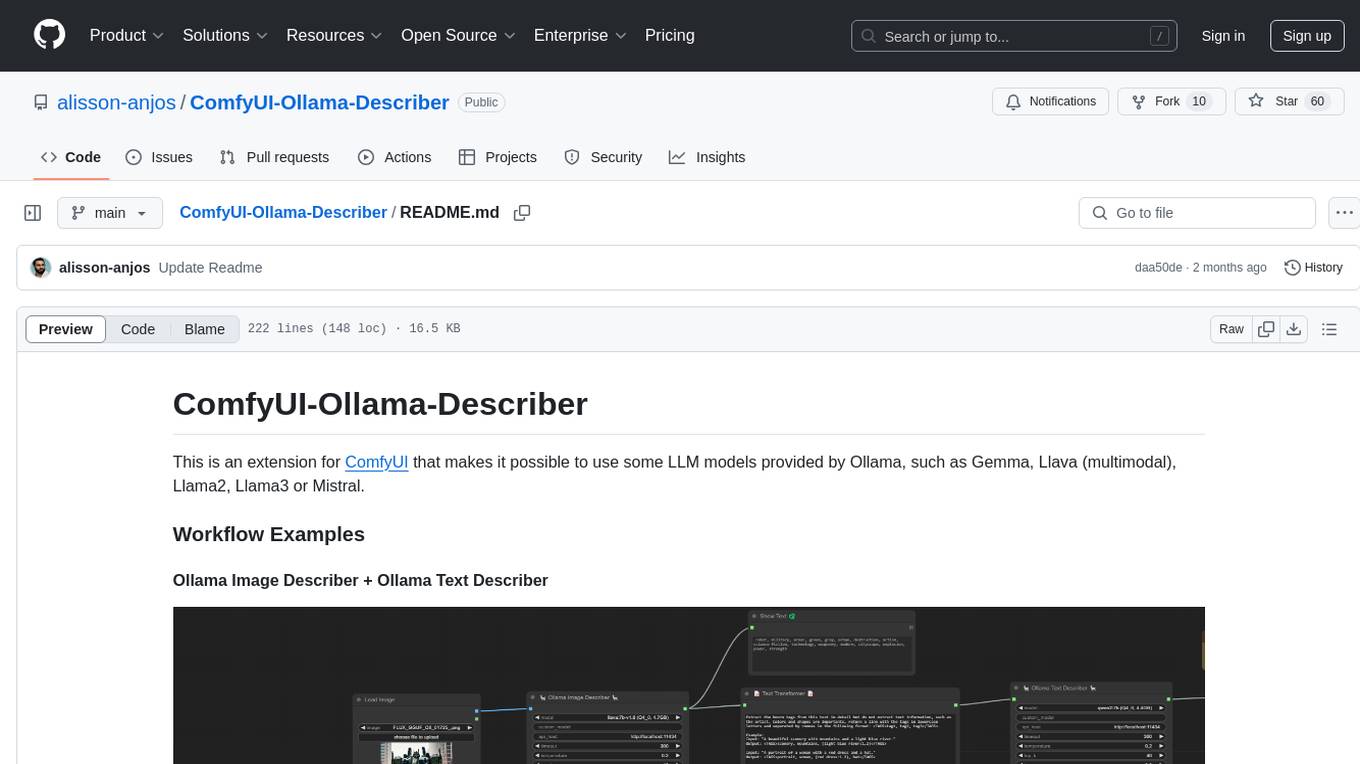

ComfyUI-Ollama-Describer

ComfyUI-Ollama-Describer is an extension for ComfyUI that enables the use of LLM models provided by Ollama, such as Gemma, Llava (multimodal), Llama2, Llama3, or Mistral. It requires the Ollama library for interacting with large-scale language models, supporting GPUs using CUDA and AMD GPUs on Windows, Linux, and Mac. The extension allows users to run Ollama through Docker and utilize NVIDIA GPUs for faster processing. It provides nodes for image description, text description, image captioning, and text transformation, with various customizable parameters for model selection, API communication, response generation, and model memory management.

RSTGameTranslation

RSTGameTranslation is a tool designed for translating game text into multiple languages efficiently. It provides a user-friendly interface for game developers to easily manage and localize their game content. With RSTGameTranslation, developers can streamline the translation process, ensuring consistency and accuracy across different language versions of their games. The tool supports various file formats commonly used in game development, making it versatile and adaptable to different project requirements. Whether you are working on a small indie game or a large-scale production, RSTGameTranslation can help you reach a global audience by making localization a seamless and hassle-free experience.

shimmy

Shimmy is a 5.1MB single-binary local inference server providing OpenAI-compatible endpoints for GGUF models. It offers fast, reliable AI inference with sub-second responses, zero configuration, and automatic port management. Perfect for developers seeking privacy, cost-effectiveness, speed, and easy integration with popular tools like VSCode and Cursor. Shimmy is designed to be invisible infrastructure that simplifies local AI development and deployment.

claude-code-plugins-plus-skills

Claude Code Skills & Plugins Hub is a comprehensive marketplace for agent skills and plugins, offering 1537 production-ready agent skills and 270 total plugins. It provides a learning lab with guides, diagrams, and examples for building production agent workflows. The package manager CLI allows users to discover, install, and manage plugins from their terminal, with features like searching, listing, installing, updating, and validating plugins. The marketplace is not on GitHub Marketplace and does not support built-in monetization. It is community-driven, actively maintained, and focuses on quality over quantity, aiming to be the definitive resource for Claude Code plugins.

For similar tasks

ai-to-pptx

Ai-to-pptx is a tool that uses AI technology to automatically generate PPTX, and supports online editing and exporting of PPTX. Main functions: - 1 Use large language models such as ChatGPT to generate outlines - 2 The generated content allows users to modify again - 3 Different templates can be selected when generating PPTX - 4 Support online editing of PPTX text content, style, pictures, etc. - 5 Supports exporting PPTX, PDF, PNG and other formats - 6 Support users to set their own LOGO and related background pictures to create their own exclusive PPTX style - 7 Support users to design their own templates and upload them to the sharing platform for others to use

cannoli

Cannoli allows you to build and run no-code LLM scripts using the Obsidian Canvas editor. Cannolis are scripts that leverage the OpenAI API to read/write to your vault, and take actions using HTTP requests. They can be used to automate tasks, create custom llm-chatbots, and more.

awesome-chatgpt

Awesome ChatGPT is an artificial intelligence chatbot developed by OpenAI. It offers a wide range of applications, web apps, browser extensions, CLI tools, bots, integrations, and packages for various platforms. Users can interact with ChatGPT through different interfaces and use it for tasks like generating text, creating presentations, summarizing content, and more. The ecosystem around ChatGPT includes tools for developers, writers, researchers, and individuals looking to leverage AI technology for different purposes.

Powerpointer-For-Local-LLMs

PowerPointer For Local LLMs is a PowerPoint generator that uses python-pptx and local llm's via the Oobabooga Text Generation WebUI api to create beautiful and informative presentations. It runs locally on your computer, eliminating privacy concerns. The tool allows users to select from 7 designs, make placeholders for images, and easily customize presentations within PowerPoint. Users provide information for the PowerPoint, which is then used to generate text using optimized prompts and the text generation webui api. The generated text is converted into a PowerPoint presentation using the python-pptx library.

aippt

Aippt is a commercial-grade AI tool for generating, parsing, and rendering PowerPoint presentations. It offers functionalities such as AI-powered PPT generation, PPT to JSON conversion, and JSON to PPT rendering. Users can experience online editing, upload PPT files for rendering, and download edited PPT files. The tool also supports commercial partnerships for custom industry solutions, native chart and animation support, user-defined templates, and competitive pricing. Aippt is available for commercial use with options for agency support and private deployment. The official website offers open APIs and an open platform for API/UI integration.

aippt_PresentationGen

A SpringBoot web application that generates PPT files using a llm. The tool preprocesses single-page templates and dynamically combines them to generate PPTX files with text replacement functionality. It utilizes technologies such as SpringBoot, MyBatis, MySQL, Redis, WebFlux, Apache POI, Aspose Slides, OSS, and Vue2. Users can deploy the tool by configuring various parameters in the application.yml file and setting up necessary resources like MySQL, OSS, and API keys. The tool also supports integration with open-source image libraries like Unsplash for adding images to the presentations.

PPTAgent

PPTAgent is an innovative system that automatically generates presentations from documents. It employs a two-step process for quality assurance and introduces PPTEval for comprehensive evaluation. With dynamic content generation, smart reference learning, and quality assessment, PPTAgent aims to streamline presentation creation. The tool follows an analysis phase to learn from reference presentations and a generation phase to develop structured outlines and cohesive slides. PPTEval evaluates presentations based on content accuracy, visual appeal, and logical coherence.

Sentient

Sentient is a personal, private, and interactive AI companion developed by Existence. The project aims to build a completely private AI companion that is deeply personalized and context-aware of the user. It utilizes automation and privacy to create a true companion for humans. The tool is designed to remember information about the user and use it to respond to queries and perform various actions. Sentient features a local and private environment, MBTI personality test, integrations with LinkedIn, Reddit, and more, self-managed graph memory, web search capabilities, multi-chat functionality, and auto-updates for the app. The project is built using technologies like ElectronJS, Next.js, TailwindCSS, FastAPI, Neo4j, and various APIs.

For similar jobs

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

skyvern

Skyvern automates browser-based workflows using LLMs and computer vision. It provides a simple API endpoint to fully automate manual workflows, replacing brittle or unreliable automation solutions. Traditional approaches to browser automations required writing custom scripts for websites, often relying on DOM parsing and XPath-based interactions which would break whenever the website layouts changed. Instead of only relying on code-defined XPath interactions, Skyvern adds computer vision and LLMs to the mix to parse items in the viewport in real-time, create a plan for interaction and interact with them. This approach gives us a few advantages: 1. Skyvern can operate on websites it’s never seen before, as it’s able to map visual elements to actions necessary to complete a workflow, without any customized code 2. Skyvern is resistant to website layout changes, as there are no pre-determined XPaths or other selectors our system is looking for while trying to navigate 3. Skyvern leverages LLMs to reason through interactions to ensure we can cover complex situations. Examples include: 1. If you wanted to get an auto insurance quote from Geico, the answer to a common question “Were you eligible to drive at 18?” could be inferred from the driver receiving their license at age 16 2. If you were doing competitor analysis, it’s understanding that an Arnold Palmer 22 oz can at 7/11 is almost definitely the same product as a 23 oz can at Gopuff (even though the sizes are slightly different, which could be a rounding error!) Want to see examples of Skyvern in action? Jump to #real-world-examples-of- skyvern

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

vanna

Vanna is an open-source Python framework for SQL generation and related functionality. It uses Retrieval-Augmented Generation (RAG) to train a model on your data, which can then be used to ask questions and get back SQL queries. Vanna is designed to be portable across different LLMs and vector databases, and it supports any SQL database. It is also secure and private, as your database contents are never sent to the LLM or the vector database.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

Avalonia-Assistant

Avalonia-Assistant is an open-source desktop intelligent assistant that aims to provide a user-friendly interactive experience based on the Avalonia UI framework and the integration of Semantic Kernel with OpenAI or other large LLM models. By utilizing Avalonia-Assistant, you can perform various desktop operations through text or voice commands, enhancing your productivity and daily office experience.

marvin

Marvin is a lightweight AI toolkit for building natural language interfaces that are reliable, scalable, and easy to trust. Each of Marvin's tools is simple and self-documenting, using AI to solve common but complex challenges like entity extraction, classification, and generating synthetic data. Each tool is independent and incrementally adoptable, so you can use them on their own or in combination with any other library. Marvin is also multi-modal, supporting both image and audio generation as well using images as inputs for extraction and classification. Marvin is for developers who care more about _using_ AI than _building_ AI, and we are focused on creating an exceptional developer experience. Marvin users should feel empowered to bring tightly-scoped "AI magic" into any traditional software project with just a few extra lines of code. Marvin aims to merge the best practices for building dependable, observable software with the best practices for building with generative AI into a single, easy-to-use library. It's a serious tool, but we hope you have fun with it. Marvin is open-source, free to use, and made with 💙 by the team at Prefect.

activepieces

Activepieces is an open source replacement for Zapier, designed to be extensible through a type-safe pieces framework written in Typescript. It features a user-friendly Workflow Builder with support for Branches, Loops, and Drag and Drop. Activepieces integrates with Google Sheets, OpenAI, Discord, and RSS, along with 80+ other integrations. The list of supported integrations continues to grow rapidly, thanks to valuable contributions from the community. Activepieces is an open ecosystem; all piece source code is available in the repository, and they are versioned and published directly to npmjs.com upon contributions. If you cannot find a specific piece on the pieces roadmap, please submit a request by visiting the following link: Request Piece Alternatively, if you are a developer, you can quickly build your own piece using our TypeScript framework. For guidance, please refer to the following guide: Contributor's Guide