SUPIR

SUPIR aims at developing Practical Algorithms for Photo-Realistic Image Restoration In the Wild. Our new online demo is also released at suppixel.ai.

Stars: 3987

SUPIR is an AI-based image processing and upscaling tool that leverages cutting-edge technology to enhance image quality and resolution. The tool provides users with the ability to upscale images with high generalization and quality, as well as specific settings for light degradation scenarios. It offers a range of models and checkpoints for different use cases, along with detailed instructions for installation and usage. SUPIR also includes features for color fixing, linear CFG adjustments, and various prompts for image enhancement. The tool is designed for non-commercial use only and comes with a contact email for inquiries and permission requests for commercial use.

README:

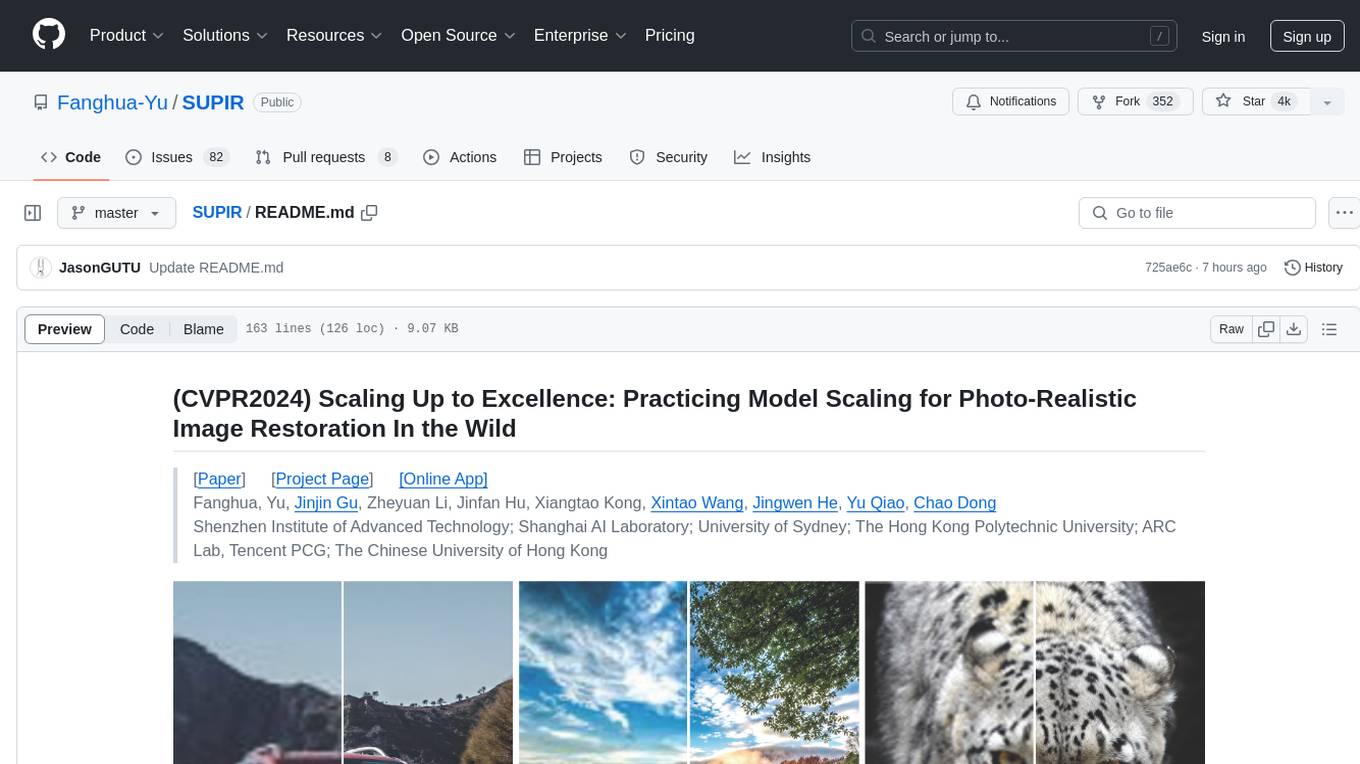

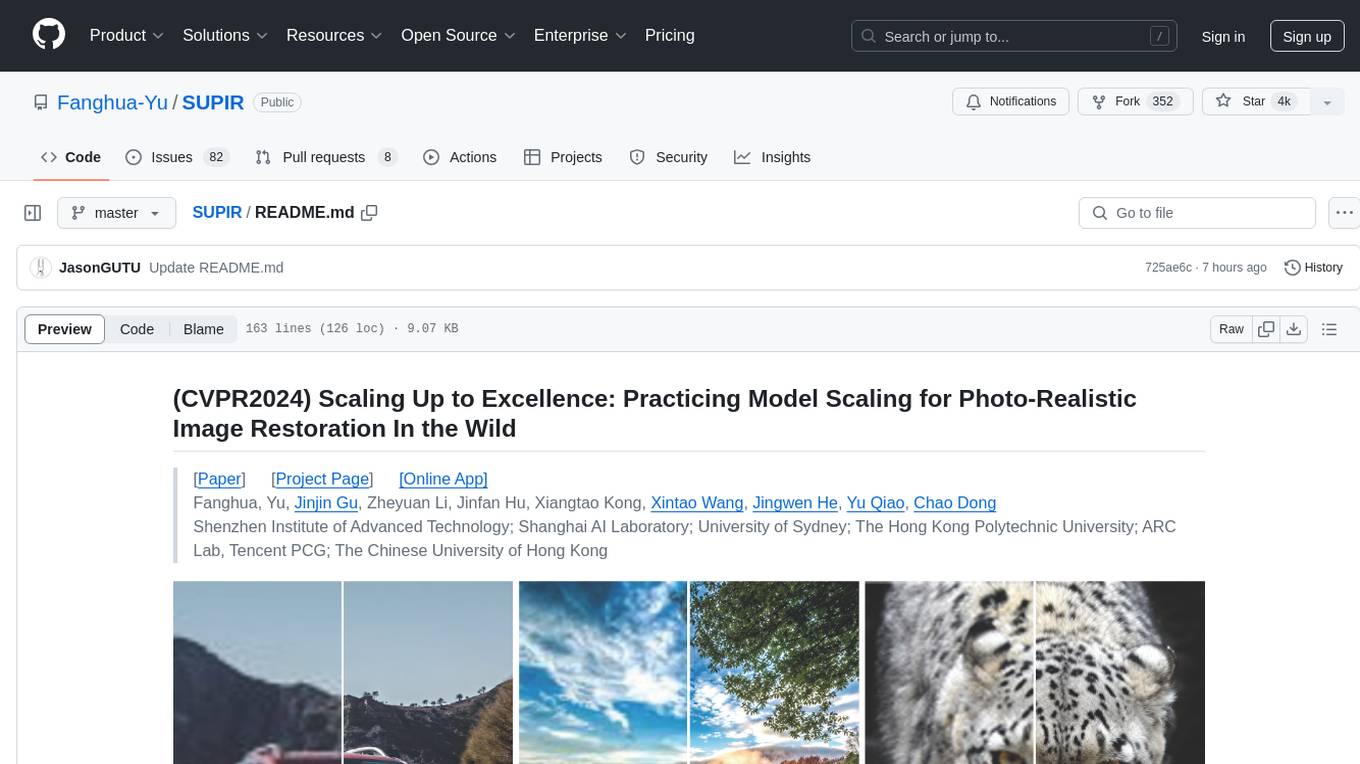

(CVPR2024) Scaling Up to Excellence: Practicing Model Scaling for Photo-Realistic Image Restoration In the Wild

[Paper] [Project Page] [Online App]

Fanghua, Yu, Jinjin Gu, Zheyuan Li, Jinfan Hu, Xiangtao Kong, Xintao Wang, Jingwen He, Yu Qiao, Chao Dong

Shenzhen Institute of Advanced Technology; Shanghai AI Laboratory; University of Sydney; The Hong Kong Polytechnic University; ARC Lab, Tencent PCG; The Chinese University of Hong Kong

🚀 We're thrilled to announce the official launch of SupPixel AI! Experience the next level of image processing and upscaling with our cutting-edge AI technology based on SUPIR. Explore now for free at suppixel.ai.

-

Clone repo

git clone https://github.com/Fanghua-Yu/SUPIR.git cd SUPIR -

Install dependent packages

conda create -n SUPIR python=3.8 -y conda activate SUPIR pip install --upgrade pip pip install -r requirements.txt

-

Download Checkpoints

For users who can connect to huggingface, please setting LLAVA_CLIP_PATH, SDXL_CLIP1_PATH, SDXL_CLIP2_CKPT_PTH in CKPT_PTH.py as None. These CLIPs will be downloaded automatically.

- SDXL CLIP Encoder-1

- SDXL CLIP Encoder-2

- SDXL base 1.0_0.9vae

- LLaVA CLIP

- LLaVA v1.5 13B

- (optional) Juggernaut-XL_v9_RunDiffusionPhoto_v2

- Replacement of

SDXL base 1.0_0.9vaefor Photo Realistic

- Replacement of

- (optional) Juggernaut_RunDiffusionPhoto2_Lightning_4Steps

- Distilling model used in

SUPIR_v0_Juggernautv9_lightning.yaml

- Distilling model used in

-

SUPIR-v0Q: Baidu Netdisk, Google DriveDefault training settings with paper. High generalization and high image quality in most cases.

-

SUPIR-v0F: Baidu Netdisk, Google DriveTraining with light degradation settings. Stage1 encoder of

SUPIR-v0Fremains more details when facing light degradations.

- Edit Custom Path for Checkpoints

* [CKPT_PTH.py] --> LLAVA_CLIP_PATH, LLAVA_MODEL_PATH, SDXL_CLIP1_PATH, SDXL_CLIP2_CACHE_DIR * [options/SUPIR_v0.yaml] --> SDXL_CKPT, SUPIR_CKPT_Q, SUPIR_CKPT_F

RealPhoto60: Baidu Netdisk, Google Drive

Usage:

-- python test.py [options]

-- python gradio_demo.py [interactive options]

--img_dir Input folder.

--save_dir Output folder.

--upscale Upsampling ratio of given inputs. Default: 1

--SUPIR_sign Model selection. Default: 'Q'; Options: ['F', 'Q']

--seed Random seed. Default: 1234

--min_size Minimum resolution of output images. Default: 1024

--edm_steps Numb of steps for EDM Sampling Scheduler. Default: 50

--s_stage1 Control Strength of Stage1. Default: -1 (negative means invalid)

--s_churn Original hy-param of EDM. Default: 5

--s_noise Original hy-param of EDM. Default: 1.003

--s_cfg Classifier-free guidance scale for prompts. Default: 7.5

--s_stage2 Control Strength of Stage2. Default: 1.0

--num_samples Number of samples for each input. Default: 1

--a_prompt Additive positive prompt for all inputs.

Default: 'Cinematic, High Contrast, highly detailed, taken using a Canon EOS R camera,

hyper detailed photo - realistic maximum detail, 32k, Color Grading, ultra HD, extreme

meticulous detailing, skin pore detailing, hyper sharpness, perfect without deformations.'

--n_prompt Fixed negative prompt for all inputs.

Default: 'painting, oil painting, illustration, drawing, art, sketch, oil painting,

cartoon, CG Style, 3D render, unreal engine, blurring, dirty, messy, worst quality,

low quality, frames, watermark, signature, jpeg artifacts, deformed, lowres, over-smooth'

--color_fix_type Color Fixing Type. Default: 'Wavelet'; Options: ['None', 'AdaIn', 'Wavelet']

--linear_CFG Linearly (with sigma) increase CFG from 'spt_linear_CFG' to s_cfg. Default: False

--linear_s_stage2 Linearly (with sigma) increase s_stage2 from 'spt_linear_s_stage2' to s_stage2. Default: False

--spt_linear_CFG Start point of linearly increasing CFG. Default: 1.0

--spt_linear_s_stage2 Start point of linearly increasing s_stage2. Default: 0.0

--ae_dtype Inference data type of AutoEncoder. Default: 'bf16'; Options: ['fp32', 'bf16']

--diff_dtype Inference data type of Diffusion. Default: 'fp16'; Options: ['fp32', 'fp16', 'bf16']# Seek for best quality for most cases

CUDA_VISIBLE_DEVICES=0,1 python test.py --img_dir '/opt/data/private/LV_Dataset/DiffGLV-Test-All/RealPhoto60/LQ' --save_dir ./results-Q --SUPIR_sign Q --upscale 2

# for light degradation and high fidelity

CUDA_VISIBLE_DEVICES=0,1 python test.py --img_dir '/opt/data/private/LV_Dataset/DiffGLV-Test-All/RealPhoto60/LQ' --save_dir ./results-F --SUPIR_sign F --upscale 2 --s_cfg 4.0 --linear_CFGCUDA_VISIBLE_DEVICES=0,1 python gradio_demo.py --ip 0.0.0.0 --port 6688 --use_image_slider --log_history

# Juggernaut_RunDiffusionPhoto2_Lightning_4Steps and DPM++ M2 SDE Karras for fast sampling

CUDA_VISIBLE_DEVICES=0,1 python gradio_demo.py --ip 0.0.0.0 --port 6688 --use_image_slider --log_history --opt options/SUPIR_v0_Juggernautv9_lightning.yaml

# less VRAM & slower (12G for Diffusion, 16G for LLaVA)

CUDA_VISIBLE_DEVICES=0,1 python gradio_demo.py --ip 0.0.0.0 --port 6688 --use_image_slider --log_history --loading_half_params --use_tile_vae --load_8bit_llavaWe've just launched SupPixel AI, an easy-to-use tool designed to help with high-quality image processing and upscaling. It builds on SUPIR. Whether you’re into photography, digital art, or just love playing around with image enhancement, we’d love for you to check it out. Everyone gets free credit to use~

@misc{yu2024scaling,

title={Scaling Up to Excellence: Practicing Model Scaling for Photo-Realistic Image Restoration In the Wild},

author={Fanghua Yu and Jinjin Gu and Zheyuan Li and Jinfan Hu and Xiangtao Kong and Xintao Wang and Jingwen He and Yu Qiao and Chao Dong},

year={2024},

eprint={2401.13627},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

If you have any question, please email [email protected] or [email protected].

The SUPIR ("Software") is made available for use, reproduction, and distribution strictly for non-commercial purposes. For the purposes of this declaration, "non-commercial" is defined as not primarily intended for or directed towards commercial advantage or monetary compensation.

By using, reproducing, or distributing the Software, you agree to abide by this restriction and not to use the Software for any commercial purposes without obtaining prior written permission from Dr. Jinjin Gu.

This declaration does not in any way limit the rights under any open source license that may apply to the Software; it solely adds a condition that the Software shall not be used for commercial purposes.

IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

For inquiries or to obtain permission for commercial use, please contact Dr. Jinjin Gu ([email protected]).

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for SUPIR

Similar Open Source Tools

SUPIR

SUPIR is an AI-based image processing and upscaling tool that leverages cutting-edge technology to enhance image quality and resolution. The tool provides users with the ability to upscale images with high generalization and quality, as well as specific settings for light degradation scenarios. It offers a range of models and checkpoints for different use cases, along with detailed instructions for installation and usage. SUPIR also includes features for color fixing, linear CFG adjustments, and various prompts for image enhancement. The tool is designed for non-commercial use only and comes with a contact email for inquiries and permission requests for commercial use.

CogAgent

CogAgent is an advanced intelligent agent model designed for automating operations on graphical interfaces across various computing devices. It supports platforms like Windows, macOS, and Android, enabling users to issue commands, capture device screenshots, and perform automated operations. The model requires a minimum of 29GB of GPU memory for inference at BF16 precision and offers capabilities for executing tasks like sending Christmas greetings and sending emails. Users can interact with the model by providing task descriptions, platform specifications, and desired output formats.

Trace

Trace is a new AutoDiff-like tool for training AI systems end-to-end with general feedback. It generalizes the back-propagation algorithm by capturing and propagating an AI system's execution trace. Implemented as a PyTorch-like Python library, users can write Python code directly and use Trace primitives to optimize certain parts, similar to training neural networks.

embodied-agents

Embodied Agents is a toolkit for integrating large multi-modal models into existing robot stacks with just a few lines of code. It provides consistency, reliability, scalability, and is configurable to any observation and action space. The toolkit is designed to reduce complexities involved in setting up inference endpoints, converting between different model formats, and collecting/storing datasets. It aims to facilitate data collection and sharing among roboticists by providing Python-first abstractions that are modular, extensible, and applicable to a wide range of tasks. The toolkit supports asynchronous and remote thread-safe agent execution for maximal responsiveness and scalability, and is compatible with various APIs like HuggingFace Spaces, Datasets, Gymnasium Spaces, Ollama, and OpenAI. It also offers automatic dataset recording and optional uploads to the HuggingFace hub.

allms

allms is a versatile and powerful library designed to streamline the process of querying Large Language Models (LLMs). Developed by Allegro engineers, it simplifies working with LLM applications by providing a user-friendly interface, asynchronous querying, automatic retrying mechanism, error handling, and output parsing. It supports various LLM families hosted on different platforms like OpenAI, Google, Azure, and GCP. The library offers features for configuring endpoint credentials, batch querying with symbolic variables, and forcing structured output format. It also provides documentation, quickstart guides, and instructions for local development, testing, updating documentation, and making new releases.

supallm

Supallm is a Python library for super resolution of images using deep learning techniques. It provides pre-trained models for enhancing image quality by increasing resolution. The library is easy to use and allows users to upscale images with high fidelity and detail. Supallm is suitable for tasks such as enhancing image quality, improving visual appearance, and increasing the resolution of low-quality images. It is a valuable tool for researchers, photographers, graphic designers, and anyone looking to enhance image quality using AI technology.

oasis

OASIS is a scalable, open-source social media simulator that integrates large language models with rule-based agents to realistically mimic the behavior of up to one million users on platforms like Twitter and Reddit. It facilitates the study of complex social phenomena such as information spread, group polarization, and herd behavior, offering a versatile tool for exploring diverse social dynamics and user interactions in digital environments. With features like scalability, dynamic environments, diverse action spaces, and integrated recommendation systems, OASIS provides a comprehensive platform for simulating social media interactions at a large scale.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

flow-prompt

Flow Prompt is a dynamic library for managing and optimizing prompts for large language models. It facilitates budget-aware operations, dynamic data integration, and efficient load distribution. Features include CI/CD testing, dynamic prompt development, multi-model support, real-time insights, and prompt testing and evolution.

langchain

LangChain is a framework for developing Elixir applications powered by language models. It enables applications to connect language models to other data sources and interact with the environment. The library provides components for working with language models and off-the-shelf chains for specific tasks. It aims to assist in building applications that combine large language models with other sources of computation or knowledge. LangChain is written in Elixir and is not aimed for parity with the JavaScript and Python versions due to differences in programming paradigms and design choices. The library is designed to make it easy to integrate language models into applications and expose features, data, and functionality to the models.

DemoGPT

DemoGPT is an all-in-one agent library that provides tools, prompts, frameworks, and LLM models for streamlined agent development. It leverages GPT-3.5-turbo to generate LangChain code, creating interactive Streamlit applications. The tool is designed for creating intelligent, interactive, and inclusive solutions in LLM-based application development. It offers model flexibility, iterative development, and a commitment to user engagement. Future enhancements include integrating Gorilla for autonomous API usage and adding a publicly available database for refining the generation process.

chroma

Chroma is an open-source embedding database that provides a simple, scalable, and feature-rich way to build Python or JavaScript LLM apps with memory. It offers a fully-typed, fully-tested, and fully-documented API that makes it easy to get started and scale your applications. Chroma also integrates with popular tools like LangChain and LlamaIndex, and supports a variety of embedding models, including Sentence Transformers, OpenAI embeddings, and Cohere embeddings. With Chroma, you can easily add documents to your database, query relevant documents with natural language, and compose documents into the context window of an LLM like GPT3 for additional summarization or analysis.

Easy-Translate

Easy-Translate is a script designed for translating large text files with a single command. It supports various models like M2M100, NLLB200, SeamlessM4T, LLaMA, and Bloom. The tool is beginner-friendly and offers seamless and customizable features for advanced users. It allows acceleration on CPU, multi-CPU, GPU, multi-GPU, and TPU, with support for different precisions and decoding strategies. Easy-Translate also provides an evaluation script for translations. Built on HuggingFace's Transformers and Accelerate library, it supports prompt usage and loading huge models efficiently.

generative-models

Generative Models by Stability AI is a repository that provides various generative models for research purposes. It includes models like Stable Video 4D (SV4D) for video synthesis, Stable Video 3D (SV3D) for multi-view synthesis, SDXL-Turbo for text-to-image generation, and more. The repository focuses on modularity and implements a config-driven approach for building and combining submodules. It supports training with PyTorch Lightning and offers inference demos for different models. Users can access pre-trained models like SDXL-base-1.0 and SDXL-refiner-1.0 under a CreativeML Open RAIL++-M license. The codebase also includes tools for invisible watermark detection in generated images.

Pixel-Reasoner

Pixel Reasoner is a framework that introduces reasoning in the pixel-space for Vision-Language Models (VLMs), enabling them to directly inspect, interrogate, and infer from visual evidences. This enhances reasoning fidelity for visual tasks by equipping VLMs with visual reasoning operations like zoom-in and select-frame. The framework addresses challenges like model's imbalanced competence and reluctance to adopt pixel-space operations through a two-phase training approach involving instruction tuning and curiosity-driven reinforcement learning. With these visual operations, VLMs can interact with complex visual inputs such as images or videos to gather necessary information, leading to improved performance across visual reasoning benchmarks.

LeanCopilot

Lean Copilot is a tool that enables the use of large language models (LLMs) in Lean for proof automation. It provides features such as suggesting tactics/premises, searching for proofs, and running inference of LLMs. Users can utilize built-in models from LeanDojo or bring their own models to run locally or on the cloud. The tool supports platforms like Linux, macOS, and Windows WSL, with optional CUDA and cuDNN for GPU acceleration. Advanced users can customize behavior using Tactic APIs and Model APIs. Lean Copilot also allows users to bring their own models through ExternalGenerator or ExternalEncoder. The tool comes with caveats such as occasional crashes and issues with premise selection and proof search. Users can get in touch through GitHub Discussions for questions, bug reports, feature requests, and suggestions. The tool is designed to enhance theorem proving in Lean using LLMs.

For similar tasks

StableSwarmUI

StableSwarmUI is a modular Stable Diffusion web user interface that emphasizes making power tools easily accessible, high performance, and extensible. It is designed to be a one-stop-shop for all things Stable Diffusion, providing a wide range of features and capabilities to enhance the user experience.

upscayl

Upscayl is a free and open-source AI image upscaler that uses advanced AI algorithms to enlarge and enhance low-resolution images without losing quality. It is a cross-platform application built with the Linux-first philosophy, available on all major desktop operating systems. Upscayl utilizes Real-ESRGAN and Vulkan architecture for image enhancement, and its backend is fully open-source under the AGPLv3 license. It is important to note that a Vulkan compatible GPU is required for Upscayl to function effectively.

ailia-models

The collection of pre-trained, state-of-the-art AI models. ailia SDK is a self-contained, cross-platform, high-speed inference SDK for AI. The ailia SDK provides a consistent C++ API across Windows, Mac, Linux, iOS, Android, Jetson, and Raspberry Pi platforms. It also supports Unity (C#), Python, Rust, Flutter(Dart) and JNI for efficient AI implementation. The ailia SDK makes extensive use of the GPU through Vulkan and Metal to enable accelerated computing. # Supported models 323 models as of April 8th, 2024

models

This repository contains self-trained single image super resolution (SISR) models. The models are trained on various datasets and use different network architectures. They can be used to upscale images by 2x, 4x, or 8x, and can handle various types of degradation, such as JPEG compression, noise, and blur. The models are provided as safetensors files, which can be loaded into a variety of deep learning frameworks, such as PyTorch and TensorFlow. The repository also includes a number of resources, such as examples, results, and a website where you can compare the outputs of different models.

adobe-photoshopCRCK

Adobe PhotoshopCRCK is a tool designed to provide users with the latest version of Adobe Photoshop for free on Windows. It allows users to access advanced photo editing features and functionalities without the need for a paid subscription. The tool is intended for individuals looking to explore professional photo editing capabilities without incurring additional costs. With Adobe PhotoshopCRCK, users can enhance their images, create stunning graphics, and unleash their creativity through a wide range of editing tools and options.

lassxToolkit

lassxToolkit is a versatile tool designed for file processing tasks. It allows users to manipulate files and folders based on specified configurations in a strict .json format. The tool supports various AI models for tasks such as image upscaling and denoising. Users can customize settings like input/output paths, error handling, file selection, and plugin integration. lassxToolkit provides detailed instructions on configuration options, default values, and model selection. It also offers features like tree restoration, recursive processing, and regex-based file filtering. The tool is suitable for users looking to automate file processing tasks with AI capabilities.

clarity-upscaler

Clarity AI is a free and open-source AI image upscaler and enhancer, providing an alternative to Magnific. It offers various features such as multi-step upscaling, resemblance fixing, speed improvements, support for custom safetensors checkpoints, anime upscaling, LoRa support, pre-downscaling, and fractality. Users can access the tool through the ClarityAI.co app, ComfyUI manager, API, or by deploying and running locally or in the cloud with cog or A1111 webUI. The tool aims to enhance image quality and resolution using advanced AI algorithms and models.

AI-Lossless-Zoomer

AI-Lossless-Zoomer is a tool that utilizes the Real-ESRGAN model provided by Tencent ARC Lab to enhance images, particularly portraits and anime pictures, with fast processing. It supports multi-thread processing, batch image processing, customizable options, output formats, output paths, AI engine selection, and batch cleaning tasks. The tool is designed for Windows 7 or later with .NET Framework 4.6+. Users can choose between the installable version (.exe) and the portable version (.zip) that includes the latest AI engine. The tool is efficient for enlarging images while maintaining quality.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.