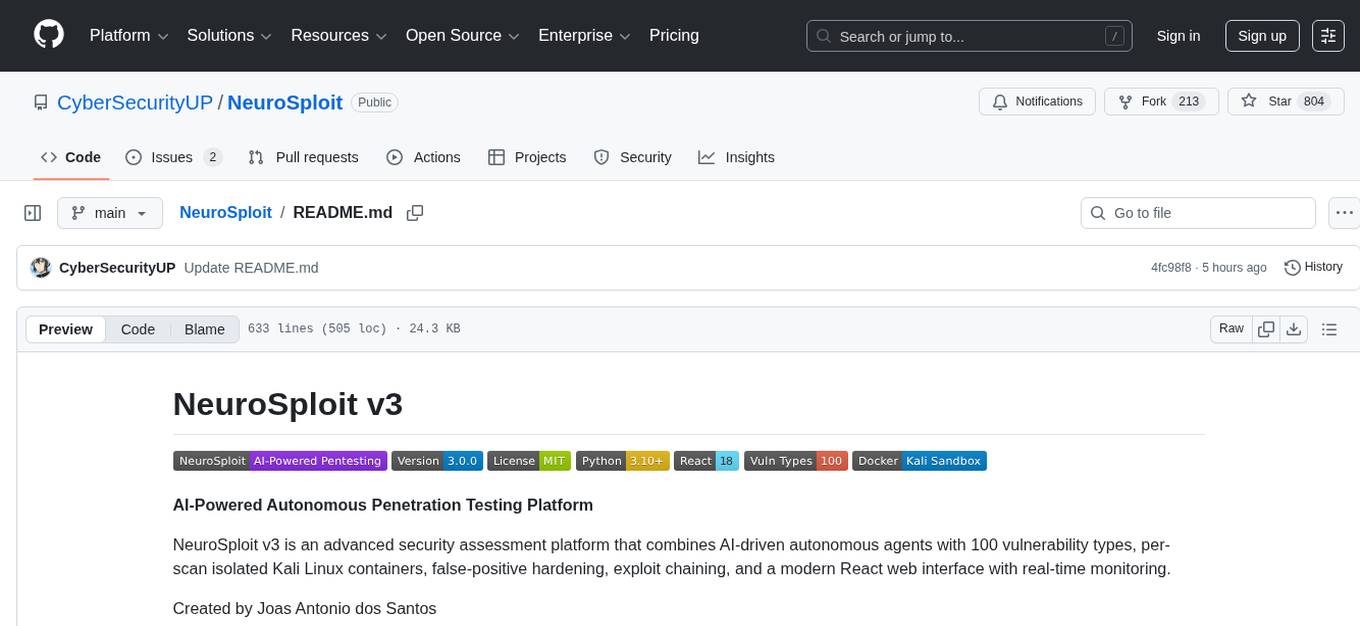

NeuroSploit

NeuroSploit is an advanced, AI-powered penetration testing framework designed to automate and augment various aspects of offensive security operations, leveraging the capabilities of large language models (LLMs).

Stars: 804

NeuroSploit v3 is an advanced security assessment platform that combines AI-driven autonomous agents with 100 vulnerability types, per-scan isolated Kali Linux containers, false-positive hardening, exploit chaining, and a modern React web interface with real-time monitoring. Its features include 100 Vulnerability Types, an Autonomous Agent with 3-stream parallel pentesting, Per-Scan Kali Containers, an Anti-Hallucination Pipeline, an Exploit Chain Engine, WAF Detection & Bypass, Smart Strategy Adaptation, Multi-Provider LLM support, a Real-Time Dashboard, and a Sandbox Dashboard. The tool is intended for authorized security testing only and must be used in compliance with applicable laws and regulations.

README:

AI-Powered Autonomous Penetration Testing Platform

NeuroSploit v3 is an advanced security assessment platform that combines AI-driven autonomous agents with 100 vulnerability types, per-scan isolated Kali Linux containers, false-positive hardening, exploit chaining, and a modern React web interface with real-time monitoring.

Created by Joas Antonio dos Santos

- 100 Vulnerability Types across 10 categories with AI-driven testing prompts

- Autonomous Agent - 3-stream parallel pentest (recon + junior tester + tool runner)

- Per-Scan Kali Containers - Each scan runs in its own isolated Docker container

- Anti-Hallucination Pipeline - Negative controls, proof-of-execution, confidence scoring

- Exploit Chain Engine - Automatically chains findings (SSRF->internal, SQLi->DB-specific, etc.)

- WAF Detection & Bypass - 16 WAF signatures, 12 bypass techniques

- Smart Strategy Adaptation - Dead endpoint detection, diminishing returns, priority recomputation

- Multi-Provider LLM - Claude, GPT, Gemini, Ollama, LMStudio, OpenRouter

- Real-Time Dashboard - WebSocket-powered live scan progress, findings, and reports

- Sandbox Dashboard - Monitor running Kali containers, tools, health checks in real-time

- Quick Start

- Architecture

- Autonomous Agent

- 100 Vulnerability Types

- Kali Sandbox System

- Anti-Hallucination & Validation

- Web GUI

- API Reference

- Configuration

- Development

- Security Notice

```bash
# Clone repository
git clone https://github.com/CyberSecurityUP/NeuroSploit/
cd NeuroSploit

# Copy environment file and add your API keys
cp .env.example .env
nano .env  # Add ANTHROPIC_API_KEY, OPENAI_API_KEY, or GEMINI_API_KEY

# Build the Kali sandbox image (first time only, ~5 min)
./scripts/build-kali.sh

# Start backend
uvicorn backend.main:app --host 0.0.0.0 --port 8000
```

```bash
# Backend
pip install -r requirements.txt
uvicorn backend.main:app --host 0.0.0.0 --port 8000 --reload

# Frontend (new terminal)
cd frontend
npm install
npm run dev
```

```bash
# Normal build (uses Docker cache)
./scripts/build-kali.sh

# Full rebuild (no cache)
./scripts/build-kali.sh --fresh

# Build + run health check
./scripts/build-kali.sh --test

# Or via docker-compose
docker compose -f docker/docker-compose.kali.yml build
```

Access the web interface at http://localhost:8000 (production build) or http://localhost:5173 (dev mode).

```
NeuroSploitv3/
├── backend/                          # FastAPI Backend
│   ├── api/v1/                       # REST API (13 routers)
│   │   ├── scans.py                  # Scan CRUD + pause/resume/stop
│   │   ├── agent.py                  # AI Agent control
│   │   ├── agent_tasks.py            # Scan task tracking
│   │   ├── dashboard.py              # Stats + activity feed
│   │   ├── reports.py                # Report generation (HTML/PDF/JSON)
│   │   ├── scheduler.py              # Cron/interval scheduling
│   │   ├── vuln_lab.py               # Per-type vulnerability lab
│   │   ├── terminal.py               # Terminal agent (10 endpoints)
│   │   ├── sandbox.py                # Sandbox container monitoring
│   │   ├── targets.py                # Target validation
│   │   ├── prompts.py                # Preset prompts
│   │   ├── vulnerabilities.py        # Vulnerability management
│   │   └── settings.py               # Runtime settings
│   ├── core/
│   │   ├── autonomous_agent.py       # Main AI agent (~7000 lines)
│   │   ├── vuln_engine/              # 100-type vulnerability engine
│   │   │   ├── registry.py           # 100 VULNERABILITY_INFO entries
│   │   │   ├── payload_generator.py  # 526 payloads across 95 libraries
│   │   │   ├── ai_prompts.py         # Per-vuln AI decision prompts
│   │   │   ├── system_prompts.py     # 12 anti-hallucination prompts
│   │   │   └── testers/              # 10 category tester modules
│   │   ├── validation/               # False-positive hardening
│   │   │   ├── negative_control.py   # Benign request control engine
│   │   │   ├── proof_of_execution.py # Per-type proof checks (25+ methods)
│   │   │   ├── confidence_scorer.py  # Numeric 0-100 scoring
│   │   │   └── validation_judge.py   # Sole authority for finding approval
│   │   ├── request_engine.py         # Retry, rate limit, circuit breaker
│   │   ├── waf_detector.py           # 16 WAF signatures + bypass
│   │   ├── strategy_adapter.py       # Mid-scan strategy adaptation
│   │   ├── chain_engine.py           # 10 exploit chain rules
│   │   ├── auth_manager.py           # Multi-user auth management
│   │   ├── xss_context_analyzer.py   # 8-context XSS analysis
│   │   ├── poc_generator.py          # 20+ per-type PoC generators
│   │   ├── execution_history.py      # Cross-scan learning
│   │   ├── access_control_learner.py # Adaptive BOLA/BFLA/IDOR learning
│   │   ├── response_verifier.py      # 4-signal response verification
│   │   ├── agent_memory.py           # Bounded dedup agent memory
│   │   └── report_engine/            # OHVR report generator
│   ├── models/                       # SQLAlchemy ORM models
│   ├── db/                           # Database layer
│   ├── config.py                     # Pydantic settings
│   └── main.py                       # FastAPI app entry
│
├── core/                             # Shared core modules
│   ├── llm_manager.py                # Multi-provider LLM routing
│   ├── sandbox_manager.py            # BaseSandbox ABC + legacy shared sandbox
│   ├── kali_sandbox.py               # Per-scan Kali container manager
│   ├── container_pool.py             # Global container pool coordinator
│   ├── tool_registry.py              # 56 tool install recipes for Kali
│   ├── mcp_server.py                 # MCP server (12 tools, stdio)
│   ├── scheduler.py                  # APScheduler scan scheduling
│   └── browser_validator.py          # Playwright browser validation
│
├── frontend/                         # React + TypeScript Frontend
│   ├── src/
│   │   ├── pages/
│   │   │   ├── HomePage.tsx          # Dashboard with stats
│   │   │   ├── AutoPentestPage.tsx   # 3-stream auto pentest
│   │   │   ├── VulnLabPage.tsx       # Per-type vulnerability lab
│   │   │   ├── TerminalAgentPage.tsx # AI terminal chat
│   │   │   ├── SandboxDashboardPage.tsx  # Container monitoring
│   │   │   ├── ScanDetailsPage.tsx   # Findings + validation
│   │   │   ├── SchedulerPage.tsx     # Cron/interval scheduling
│   │   │   ├── SettingsPage.tsx      # Configuration
│   │   │   └── ReportsPage.tsx       # Report management
│   │   ├── components/               # Reusable UI components
│   │   ├── services/api.ts           # API client layer
│   │   └── types/index.ts            # TypeScript interfaces
│   └── package.json
│
├── docker/
│   ├── Dockerfile.kali               # Multi-stage Kali sandbox (11 Go tools)
│   ├── Dockerfile.sandbox            # Legacy Debian sandbox
│   ├── Dockerfile.backend            # Backend container
│   ├── Dockerfile.frontend           # Frontend container
│   ├── docker-compose.kali.yml       # Kali sandbox build
│   └── docker-compose.sandbox.yml    # Legacy sandbox
│
├── config/config.json                # Profiles, tools, sandbox, MCP
├── data/
│   ├── vuln_knowledge_base.json      # 100 vuln type definitions
│   ├── execution_history.json        # Cross-scan learning data
│   └── access_control_learning.json  # BOLA/BFLA adaptive data
│
├── scripts/
│   └── build-kali.sh                 # Build/rebuild Kali image
├── tools/
│   └── benchmark_runner.py           # 104 CTF challenges
├── agents/base_agent.py              # BaseAgent class
├── neurosploit.py                    # CLI entry point
└── requirements.txt
```

The AI agent (autonomous_agent.py) orchestrates the entire penetration test autonomously.

```
                ┌─────────────────────┐
                │     Auto Pentest    │
                │    Target URL(s)    │
                └──────────┬──────────┘
                           │
         ┌─────────────────┼─────────────────┐
         ▼                 ▼                 ▼
  ┌──────────────┐  ┌──────────────┐  ┌──────────────┐
  │   Stream 1   │  │   Stream 2   │  │   Stream 3   │
  │    Recon     │  │ Junior Test  │  │ Tool Runner  │
  │ ──────────── │  │ ──────────── │  │ ──────────── │
  │ Crawl pages  │  │ Test target  │  │ Nuclei scan  │
  │ Find params  │  │ AI-priority  │  │ Naabu ports  │
  │ Tech detect  │  │ 3 payloads   │  │ AI decides   │
  │ WAF detect   │  │ per endpoint │  │ extra tools  │
  └──────┬───────┘  └──────┬───────┘  └──────┬───────┘
         │                 │                 │
         └─────────────────┼─────────────────┘
                           ▼
                ┌─────────────────────┐
                │    Deep Analysis    │
                │   100 vuln types    │
                │  Full payload sets  │
                │ Chain exploitation  │
                └──────────┬──────────┘
                           ▼
                ┌─────────────────────┐
                │  Report Generation  │
                │ AI executive brief  │
                │  PoC code per find  │
                └─────────────────────┘
```
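The README does not show how `autonomous_agent.py` coordinates the three streams. As a minimal sketch, assuming the streams are asyncio coroutines writing into a shared state object (`ScanState`, `recon_stream`, `junior_tester_stream`, `tool_runner_stream` and `deep_analysis` are all hypothetical names), the fan-out/fan-in shape of the diagram looks like this:

```python
import asyncio
from dataclasses import dataclass, field
from typing import List


@dataclass
class ScanState:
    """Hypothetical shared state that the three streams write into."""
    target: str
    endpoints: List[str] = field(default_factory=list)
    quick_findings: List[str] = field(default_factory=list)
    tool_output: List[str] = field(default_factory=list)


async def recon_stream(state: ScanState) -> None:
    # Stand-in for crawling, parameter discovery, tech and WAF detection.
    await asyncio.sleep(0)
    state.endpoints.extend([f"{state.target}/login", f"{state.target}/search?q="])


async def junior_tester_stream(state: ScanState) -> None:
    # Stand-in for quick, AI-prioritized tests (a few payloads per endpoint).
    await asyncio.sleep(0)
    state.quick_findings.append(f"candidate on {state.target}")


async def tool_runner_stream(state: ScanState) -> None:
    # Stand-in for launching tools such as nuclei or naabu inside the sandbox.
    await asyncio.sleep(0)
    state.tool_output.append("nuclei: done")


async def deep_analysis(state: ScanState) -> dict:
    # Runs only after all three streams have finished (fan-in).
    return {
        "endpoints": len(state.endpoints),
        "candidates": len(state.quick_findings),
        "tool_runs": len(state.tool_output),
    }


async def auto_pentest(target: str) -> dict:
    state = ScanState(target=target)
    # Fan-out: the three streams run concurrently; gather waits for all of them.
    await asyncio.gather(
        recon_stream(state),
        junior_tester_stream(state),
        tool_runner_stream(state),
    )
    return await deep_analysis(state)


if __name__ == "__main__":
    print(asyncio.run(auto_pentest("https://example.test")))
```

In this sketch the streams are independent; a real junior tester would presumably consume endpoints as recon discovers them, for example through an `asyncio.Queue`.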

| Module | Description |
|---|---|
| Request Engine | Retry with backoff, per-host rate limiting, circuit breaker, adaptive timeouts |
| WAF Detector | 16 WAF signatures (Cloudflare, AWS, Akamai, Imperva, etc.), 12 bypass techniques |
| Strategy Adapter | Dead endpoint detection, diminishing returns, 403 bypass, priority recomputation |
| Chain Engine | 10 chain rules (SSRF->internal, SQLi->DB-specific, LFI->config, IDOR pattern transfer) |
| Auth Manager | Multi-user contexts (user_a, user_b, admin), login form detection, session management |
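The Request Engine row combines retry with backoff and a circuit breaker. The following is a generic sketch of those two patterns, not NeuroSploit's code: `CircuitBreaker`, `call_with_retry` and all thresholds are hypothetical, and per-host rate limiting and adaptive timeouts are left out.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when the breaker refuses to send more requests to a failing host."""


class CircuitBreaker:
    """Opens after `threshold` consecutive failures; lets a probe through after `cooldown` seconds."""

    def __init__(self, threshold: int = 3, cooldown: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.threshold = threshold
        self.cooldown = cooldown
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def allow(self) -> bool:
        if self.opened_at is None:
            return True  # closed: requests flow normally
        # Open: refuse until the cooldown elapses, then allow a half-open probe.
        return self.clock() - self.opened_at >= self.cooldown

    def record_success(self) -> None:
        self.failures = 0
        self.opened_at = None

    def record_failure(self) -> None:
        self.failures += 1
        if self.failures >= self.threshold:
            self.opened_at = self.clock()


def call_with_retry(fn: Callable[[], T], breaker: CircuitBreaker, retries: int = 3,
                    base_delay: float = 0.5,
                    sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `fn`, retrying with exponential backoff (base_delay * 2**attempt)."""
    last_exc: Optional[Exception] = None
    for attempt in range(retries + 1):
        if not breaker.allow():
            raise CircuitOpenError("circuit open; skipping request")
        try:
            result = fn()
        except Exception as exc:  # a real engine would retry only transient errors
            breaker.record_failure()
            last_exc = exc
            if attempt < retries:
                sleep(base_delay * 2 ** attempt)
            continue
        breaker.record_success()
        return result
    assert last_exc is not None
    raise last_exc
```

Keeping one breaker per host (for example a `dict` keyed by hostname) would give the per-host behaviour the table describes: a dead host stops consuming requests while other hosts keep being tested.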

- Pause / Resume / Stop with checkpoints

- Manual Validation - Confirm or reject AI findings

- Screenshot Capture on confirmed findings (Playwright)

- Cross-Scan Learning - Historical success rates influence future priorities

- CVE Testing - Regex detection + AI-generated payloads

| Category | Types | Examples |
|---|---|---|
| Injection | 38 | XSS (reflected/stored/DOM), SQLi, NoSQLi, Command Injection, SSTI, LDAP, XPath, CRLF, Header Injection, Log Injection, GraphQL Injection |
| Inspection | 21 | Security Headers, CORS, Clickjacking, Info Disclosure, Debug Endpoints, Error Disclosure, Source Code Exposure |
| AI-Driven | 41 | BOLA, BFLA, IDOR, Race Condition, Business Logic, JWT Manipulation, OAuth Flaws, Prototype Pollution, WebSocket Hijacking, Cache Poisoning, HTTP Request Smuggling |
| Authentication | 8 | Auth Bypass, Session Fixation, Credential Stuffing, Password Reset Flaws, MFA Bypass, Default Credentials |
| Authorization | 6 | BOLA, BFLA, IDOR, Privilege Escalation, Forced Browsing, Function-Level Access Control |
| File Access | 5 | LFI, RFI, Path Traversal, File Upload, XXE |
| Request Forgery | 4 | SSRF, CSRF, Cloud Metadata, DNS Rebinding |
| Client-Side | 8 | CORS, Clickjacking, Open Redirect, DOM Clobbering, Prototype Pollution, PostMessage, CSS Injection |
| Infrastructure | 6 | SSL/TLS, HTTP Methods, Subdomain Takeover, Host Header, CNAME Hijacking |
| Cloud/Supply | 4 | Cloud Metadata, S3 Bucket Misconfiguration, Dependency Confusion, Third-Party Script |

- 526 payloads across 95 libraries

- 73 XSS stored payloads + 5 context-specific sets

- Per-type AI decision prompts with anti-hallucination directives

- WAF-adaptive payload transformation (12 techniques)
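The README does not list the 12 WAF bypass techniques. As an illustration only, the sketch below applies four common, generic encodings (URL encoding, double URL encoding, alternating case, HTML decimal entities) to produce payload variants; the function names and the `TRANSFORMS` table are assumptions, not NeuroSploit's implementation.

```python
from typing import Dict, List
from urllib.parse import quote


def url_encode(p: str) -> str:
    # Percent-encode every reserved character, including "/".
    return quote(p, safe="")


def double_url_encode(p: str) -> str:
    # Encode twice, so "%" itself becomes "%25".
    return quote(quote(p, safe=""), safe="")


def alternate_case(p: str) -> str:
    # Alternate upper/lower case on letters only, e.g. "script" -> "ScRiPt".
    out, upper = [], True
    for ch in p:
        if ch.isalpha():
            out.append(ch.upper() if upper else ch.lower())
            upper = not upper
        else:
            out.append(ch)
    return "".join(out)


def html_decimal_entities(p: str) -> str:
    # Replace every character with its decimal HTML entity.
    return "".join(f"&#{ord(ch)};" for ch in p)


TRANSFORMS = {
    "url": url_encode,
    "double_url": double_url_encode,
    "alternate_case": alternate_case,
    "html_entities": html_decimal_entities,
}


def variants(payload: str, techniques: List[str]) -> Dict[str, str]:
    """Return one transformed payload per requested technique name."""
    return {name: TRANSFORMS[name](payload) for name in techniques}
```

A WAF-adaptive loop would typically pick the technique list based on the detected WAF and on which variants were previously blocked; that selection logic is not shown here.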

Each scan runs in its own isolated Kali Linux Docker container, providing:

- Complete Isolation - No interference between concurrent scans

- On-Demand Tools - 56 tools installed only when needed

- Auto Cleanup - Containers destroyed when scan completes

- Resource Limits - Per-container memory (2GB) and CPU (2 cores) limits

Pre-installed in the Kali image:

| Category | Tools |
|---|---|
| Scanners | nuclei, naabu, httpx, nmap, nikto, masscan, whatweb |
| Discovery | subfinder, katana, dnsx, uncover, ffuf, gobuster, waybackurls |
| Exploitation | dalfox, sqlmap |
| System | curl, wget, git, python3, pip3, go, jq, dig, whois, openssl, netcat, bash |

Installed automatically inside the container when first requested:

- APT: wpscan, dirb, hydra, john, hashcat, testssl, sslscan, enum4linux, dnsrecon, amass, medusa, crackmapexec, etc.

- Go: gau, gitleaks, anew, httprobe

- Pip: dirsearch, wfuzz, arjun, wafw00f, sslyze, commix, trufflehog, retire

```
ContainerPool (global coordinator, max 5 concurrent)
├── KaliSandbox(scan_id="abc") → docker: neurosploit-abc
├── KaliSandbox(scan_id="def") → docker: neurosploit-def
└── KaliSandbox(scan_id="ghi") → docker: neurosploit-ghi
```

- TTL enforcement - Containers auto-destroyed after 60 min

- Orphan cleanup - Stale containers removed on server startup

- Graceful fallback - Falls back to shared container if Docker unavailable
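A minimal bookkeeping sketch of the pool rules above (at most 5 concurrent containers, 60-minute TTL, one `neurosploit-<scan_id>` container per scan). `ContainerPoolSketch` is hypothetical and makes no Docker calls; the real `ContainerPool` in `core/container_pool.py` may work differently.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ContainerRecord:
    scan_id: str
    name: str
    created_at: float


class PoolFullError(RuntimeError):
    pass


class ContainerPoolSketch:
    """Bookkeeping only: enforces a concurrency cap and a TTL; no Docker calls."""

    def __init__(self, max_concurrent: int = 5, ttl_minutes: int = 60,
                 clock: Callable[[], float] = time.time) -> None:
        self.max_concurrent = max_concurrent
        self.ttl_seconds = ttl_minutes * 60
        self.clock = clock
        self.active: Dict[str, ContainerRecord] = {}

    def acquire(self, scan_id: str) -> ContainerRecord:
        if scan_id in self.active:
            return self.active[scan_id]  # one container per scan
        if len(self.active) >= self.max_concurrent:
            raise PoolFullError(f"pool at capacity ({self.max_concurrent})")
        rec = ContainerRecord(scan_id, f"neurosploit-{scan_id}", self.clock())
        self.active[scan_id] = rec
        return rec

    def release(self, scan_id: str) -> None:
        # Called when a scan completes (auto cleanup).
        self.active.pop(scan_id, None)

    def cleanup_expired(self) -> List[str]:
        # TTL enforcement: drop every container older than the TTL.
        now = self.clock()
        expired = [sid for sid, rec in self.active.items()
                   if now - rec.created_at >= self.ttl_seconds]
        for sid in expired:
            self.release(sid)
        return expired
```

Injecting `clock` keeps the TTL logic testable without waiting an hour; a real implementation would also stop and remove the Docker container in `release`.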

NeuroSploit uses a multi-layered validation pipeline to eliminate false positives:

```
Finding Candidate
           │
           ▼
┌──────────────────────┐
│  Negative Controls   │  Send benign/empty requests as controls
│  Same behavior = FP  │  -60 confidence if same response
└──────────┬───────────┘
           ▼
┌──────────────────────┐
│  Proof of Execution  │  25+ per-vuln-type proof methods
│  XSS: context check  │  SSRF: metadata markers
│  SQLi: DB errors     │  BOLA: data comparison
└──────────┬───────────┘
           ▼
┌──────────────────────┐
│  AI Interpretation   │  LLM with anti-hallucination prompts
│ Per-type system msgs │  12 composable prompt templates
└──────────┬───────────┘
           ▼
┌──────────────────────┐
│  Confidence Scorer   │  0-100 numeric score
│  ≥90 = confirmed     │  +proof, +impact, +controls
│  ≥60 = likely        │  -baseline_only, -same_behavior
│  <60 = rejected      │  Breakdown visible in UI
└──────────┬───────────┘
           ▼
┌──────────────────────┐
│   Validation Judge   │  Final verdict authority
│  approve / reject    │  Records for adaptive learning
└──────────────────────┘
```
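The thresholds in the Confidence Scorer box can be written as a small function. Only the 0-100 range, the ≥90/≥60 cutoffs and the -60 same-behavior penalty come from the README; the other weights in `WEIGHTS` and the `Signals` fields are hypothetical placeholders, not values from `confidence_scorer.py`.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    has_proof: bool        # proof-of-execution check passed
    has_impact: bool       # demonstrated impact
    controls_differ: bool  # response differed from the benign negative controls
    baseline_only: bool    # evidence appears only in baseline-like responses


# Hypothetical weights: only the -60 same-behavior penalty comes from the README.
WEIGHTS = {"proof": 40, "impact": 30, "controls": 30,
           "baseline_only": -30, "same_behavior": -60}


def score(s: Signals) -> int:
    total = 0
    if s.has_proof:
        total += WEIGHTS["proof"]
    if s.has_impact:
        total += WEIGHTS["impact"]
    if s.controls_differ:
        total += WEIGHTS["controls"]
    else:
        # Same behavior as a benign request: likely a false positive.
        total += WEIGHTS["same_behavior"]
    if s.baseline_only:
        total += WEIGHTS["baseline_only"]
    return max(0, min(100, total))  # clamp to the 0-100 range


def verdict(confidence: int) -> str:
    if confidence >= 90:
        return "confirmed"
    if confidence >= 60:
        return "likely"
    return "rejected"
```

With these weights, a finding that reproduces identically on a benign control request cannot reach the "likely" band even with proof and impact, which matches the intent of the negative-control stage.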

12 composable prompts applied across 7 task contexts, including:

- anti_hallucination - Core truthfulness directives

- proof_of_execution - Require concrete evidence

- negative_controls - Compare with benign requests

- anti_severity_inflation - Accurate severity ratings

- access_control_intelligence - BOLA/BFLA data comparison methodology

- Records TP/FP outcomes per domain for BOLA/BFLA/IDOR

- 9 default response patterns, 6 known FP patterns (WSO2, Keycloak, etc.)

- Historical FP rate influences future confidence scoring
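One way historical false-positive rates could feed back into confidence, sketched under assumptions: outcomes are counted per (domain, vuln type), and the confidence is reduced in proportion to the observed FP rate, capped by a hypothetical `max_penalty`. None of these names come from `access_control_learner.py`.

```python
from collections import defaultdict
from typing import Dict, Tuple


class AccessControlLearnerSketch:
    """Tracks true/false positives per (domain, vuln_type) and penalizes noisy ones."""

    def __init__(self, max_penalty: int = 30) -> None:
        self.max_penalty = max_penalty  # hypothetical cap on the confidence reduction
        self.counts: Dict[Tuple[str, str], Dict[str, int]] = defaultdict(
            lambda: {"tp": 0, "fp": 0})

    def record(self, domain: str, vuln_type: str, is_true_positive: bool) -> None:
        key = "tp" if is_true_positive else "fp"
        self.counts[(domain, vuln_type)][key] += 1

    def fp_rate(self, domain: str, vuln_type: str) -> float:
        # .get() avoids creating empty entries in the defaultdict.
        c = self.counts.get((domain, vuln_type))
        if not c or c["tp"] + c["fp"] == 0:
            return 0.0  # no history: no adjustment
        return c["fp"] / (c["tp"] + c["fp"])

    def adjust(self, domain: str, vuln_type: str, confidence: int) -> int:
        penalty = round(self.max_penalty * self.fp_rate(domain, vuln_type))
        return max(0, confidence - penalty)
```

Keying by domain lets a framework-specific pattern (for example a gateway that returns the same body to every user) lower confidence only on the targets where it was observed.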

| Page | Route | Description |
|---|---|---|
| Dashboard | / | Stats overview, severity distribution, recent activity feed |
| Auto Pentest | /auto | One-click autonomous pentest with 3-stream live display |
| Vuln Lab | /vuln-lab | Per-type vulnerability testing (100 types, 11 categories) |
| Terminal Agent | /terminal | AI-powered interactive security chat + tool execution |
| Sandboxes | /sandboxes | Real-time Docker container monitoring + management |
| AI Agent | /scan/new | Manual scan creation with prompt selection |
| Scan Details | /scan/:id | Findings with confidence badges, pause/resume/stop |
| Scheduler | /scheduler | Cron/interval automated scan scheduling |
| Reports | /reports | HTML/PDF/JSON report generation and viewing |
| Settings | /settings | LLM providers, model routing, feature toggles |

Real-time monitoring of per-scan Kali containers:

- Pool stats - Active/max containers, Docker status, TTL

- Capacity bar - Visual utilization indicator

- Per-container cards - Name, scan link, uptime, installed tools, status

- Actions - Health check, destroy (with confirmation), cleanup expired/orphans

- 5-second auto-polling for real-time updates

Base URL: http://localhost:8000/api/v1

| Method | Endpoint | Description |
|---|---|---|
| POST | /scans | Create new scan |
| GET | /scans | List all scans |
| GET | /scans/{id} | Get scan details |
| POST | /scans/{id}/start | Start scan |
| POST | /scans/{id}/stop | Stop scan |
| POST | /scans/{id}/pause | Pause scan |
| POST | /scans/{id}/resume | Resume scan |
| DELETE | /scans/{id} | Delete scan |

| Method | Endpoint | Description |
|---|---|---|
| POST | /agent/run | Launch autonomous agent |
| GET | /agent/status/{id} | Get agent status + findings |
| GET | /agent/by-scan/{scan_id} | Get agent by scan ID |
| POST | /agent/stop/{id} | Stop agent |
| POST | /agent/pause/{id} | Pause agent |
| POST | /agent/resume/{id} | Resume agent |
| GET | /agent/findings/{id} | Get findings with details |
| GET | /agent/logs/{id} | Get agent logs |

| Method | Endpoint | Description |
|---|---|---|
| GET | /sandbox | List containers + pool status |
| GET | /sandbox/{scan_id} | Health check container |
| DELETE | /sandbox/{scan_id} | Destroy container |
| POST | /sandbox/cleanup | Remove expired containers |
| POST | /sandbox/cleanup-orphans | Remove orphan containers |

| Method | Endpoint | Description |
|---|---|---|
| GET | /scheduler | List scheduled jobs |
| POST | /scheduler | Create scheduled job |
| DELETE | /scheduler/{id} | Delete job |
| POST | /scheduler/{id}/pause | Pause job |
| POST | /scheduler/{id}/resume | Resume job |

| Method | Endpoint | Description |
|---|---|---|
| GET | /vuln-lab/types | List 100 vuln types by category |
| POST | /vuln-lab/run | Run per-type vulnerability test |
| GET | /vuln-lab/challenges | List challenge runs |
| GET | /vuln-lab/stats | Detection rate stats |

| Method | Endpoint | Description |
|---|---|---|
| POST | /reports | Generate report |
| POST | /reports/ai-generate | AI-powered report |
| GET | /reports/{id}/view | View HTML report |
| GET | /dashboard/stats | Dashboard statistics |
| GET | /dashboard/activity-feed | Recent activity |
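A small client sketch using only the Python standard library. It builds requests for the endpoints listed in the tables, relative to the base URL; the JSON fields sent to POST /scans (`name`, `targets`) are assumptions, so check the Swagger UI for the actual request schema before use.

```python
import json
import urllib.request
from typing import Any, List, Optional

BASE_URL = "http://localhost:8000/api/v1"


def build_request(method: str, path: str,
                  body: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) a JSON request against the REST API."""
    data = json.dumps(body).encode() if body is not None else None
    headers = {"Content-Type": "application/json"} if data is not None else {}
    return urllib.request.Request(BASE_URL + path, data=data,
                                  headers=headers, method=method)


def send(req: urllib.request.Request) -> Any:
    # Requires a running backend; returns the decoded JSON response body.
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.loads(resp.read().decode())


def lifecycle_requests(scan_id: str) -> List[urllib.request.Request]:
    # Start, pause, resume, then fetch details for an existing scan.
    return [
        build_request("POST", f"/scans/{scan_id}/start"),
        build_request("POST", f"/scans/{scan_id}/pause"),
        build_request("POST", f"/scans/{scan_id}/resume"),
        build_request("GET", f"/scans/{scan_id}"),
    ]


# Hypothetical body for POST /scans; the real field names may differ.
create = build_request("POST", "/scans",
                       {"name": "demo", "targets": ["https://example.test"]})
```

Separating request construction from `send` keeps the URL and payload logic testable without a running server.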

WebSocket: ws://localhost:8000/ws/scan/{scan_id}

Events: scan_started, progress_update, finding_discovered, scan_completed, scan_error
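The README lists the event names but not the message format. Assuming each frame is a JSON object whose `type` field holds one of those names (an assumption, not documented), a client could route events to handlers like this; `EventRouter` is a hypothetical helper.

```python
import json
from typing import Callable, Dict, List

KNOWN_EVENTS = {"scan_started", "progress_update", "finding_discovered",
                "scan_completed", "scan_error"}


class EventRouter:
    """Route decoded WebSocket messages to handlers by event name."""

    def __init__(self) -> None:
        self.handlers: Dict[str, List[Callable[[dict], None]]] = {}

    def on(self, event: str, handler: Callable[[dict], None]) -> None:
        if event not in KNOWN_EVENTS:
            raise ValueError(f"unknown event: {event}")
        self.handlers.setdefault(event, []).append(handler)

    def dispatch(self, raw: str) -> bool:
        # Assumes each frame is a JSON object with a "type" field naming the event.
        msg = json.loads(raw)
        handlers = self.handlers.get(msg.get("type"), [])
        for h in handlers:
            h(msg)
        return bool(handlers)  # True if at least one handler ran
```

Frames could come from any WebSocket client, for example the third-party `websockets` package (`async with websockets.connect(url) as ws:` followed by `async for raw in ws: router.dispatch(raw)`).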

Interactive docs available at:

- Swagger UI: http://localhost:8000/api/docs

- ReDoc: http://localhost:8000/api/redoc

```bash
# LLM API Keys (at least one required)
ANTHROPIC_API_KEY=your-key
OPENAI_API_KEY=your-key
GEMINI_API_KEY=your-key

# Local LLM (optional)
OLLAMA_BASE_URL=http://localhost:11434
LMSTUDIO_BASE_URL=http://localhost:1234

# OpenRouter (optional)
OPENROUTER_API_KEY=your-key

# Database
DATABASE_URL=sqlite+aiosqlite:///./data/neurosploit.db

# Server
HOST=0.0.0.0
PORT=8000
DEBUG=false
```
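Since at least one hosted LLM key is required, a startup check can catch a `.env` that still contains the placeholder values. This sketch treats only the three keys under the "at least one required" comment as satisfying the requirement; whether a local Ollama or LM Studio endpoint alone is enough is not stated in the README. `parse_env` and `configured_llm_keys` are hypothetical helpers, not part of the project.

```python
from typing import Dict, Iterable, List

REQUIRED_ANY = ("ANTHROPIC_API_KEY", "OPENAI_API_KEY", "GEMINI_API_KEY")
PLACEHOLDER = "your-key"


def parse_env(lines: Iterable[str]) -> Dict[str, str]:
    """Minimal KEY=VALUE parser: skips blanks and comments, strips ' # ...' suffixes."""
    env: Dict[str, str] = {}
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        env[key.strip()] = value.split(" #", 1)[0].strip()
    return env


def configured_llm_keys(env: Dict[str, str]) -> List[str]:
    # A key counts only if it is set to something other than the example placeholder.
    return [k for k in REQUIRED_ANY if env.get(k, "") not in ("", PLACEHOLDER)]
```

Usage: `with open(".env") as f: keys = configured_llm_keys(parse_env(f))`, then fail fast with a clear message when `keys` is empty.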

```json
{
  "llm": {
    "default_profile": "gemini_pro_default",
    "profiles": { ... }
  },
  "agent_roles": {
    "pentest_generalist": { "vuln_coverage": 100 },
    "bug_bounty_hunter": { "vuln_coverage": 100 }
  },
  "sandbox": {
    "mode": "per_scan",
    "kali": {
      "enabled": true,
      "image": "neurosploit-kali:latest",
      "max_concurrent": 5,
      "container_ttl_minutes": 60
    }
  },
  "mcp_servers": {
    "neurosploit_tools": {
      "transport": "stdio",
      "command": "python3",
      "args": ["-m", "core.mcp_server"]
    }
  }
}
```
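A sketch of reading the sandbox section of `config/config.json` with defaults that mirror the values shown above. The `{ ... }` under `profiles` is elided in the README, so that snippet is not valid JSON as printed; the real file must be. `KaliSandboxSettings` and `load_sandbox_settings` are hypothetical names, and the project's own defaults may differ.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class KaliSandboxSettings:
    mode: str
    enabled: bool
    image: str
    max_concurrent: int
    container_ttl_minutes: int


def load_sandbox_settings(raw_json: str) -> KaliSandboxSettings:
    """Read the "sandbox" section, falling back to the values shown in the example config."""
    cfg = json.loads(raw_json)
    sandbox = cfg.get("sandbox", {})
    kali = sandbox.get("kali", {})
    return KaliSandboxSettings(
        mode=sandbox.get("mode", "per_scan"),
        enabled=bool(kali.get("enabled", True)),
        image=kali.get("image", "neurosploit-kali:latest"),
        max_concurrent=int(kali.get("max_concurrent", 5)),
        container_ttl_minutes=int(kali.get("container_ttl_minutes", 60)),
    )
```

Usage: `with open("config/config.json") as f: settings = load_sandbox_settings(f.read())`.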

```bash
# Backend
pip install -r requirements.txt
uvicorn backend.main:app --reload --host 0.0.0.0 --port 8000
# API docs: http://localhost:8000/api/docs
```

```bash
# Frontend
cd frontend
npm install
npm run dev    # Dev server at http://localhost:5173
npm run build  # Production build
```

```bash
# From the repository root
./scripts/build-kali.sh --test  # Build + health check
python3 -m core.mcp_server      # Starts stdio MCP server (12 tools)
```

This tool is for authorized security testing only.

- Only test systems you own or have explicit written permission to test

- Follow responsible disclosure practices

- Comply with all applicable laws and regulations

- Unauthorized access to computer systems is illegal

MIT License - See LICENSE for details.

| Layer | Technologies |
|---|---|
| Backend | Python, FastAPI, SQLAlchemy, Pydantic, aiohttp |
| Frontend | React 18, TypeScript, TailwindCSS, Vite |
| AI/LLM | Anthropic Claude, OpenAI GPT, Google Gemini, Ollama, LMStudio, OpenRouter |
| Sandbox | Docker, Kali Linux, ProjectDiscovery suite, Nmap, SQLMap, Nikto |
| Tools | Nuclei, Naabu, httpx, Subfinder, Katana, FFuf, Gobuster, Dalfox |
| Infra | Docker Compose, MCP Protocol, Playwright, APScheduler |



Alternative AI tools for NeuroSploit

Similar Open Source Tools


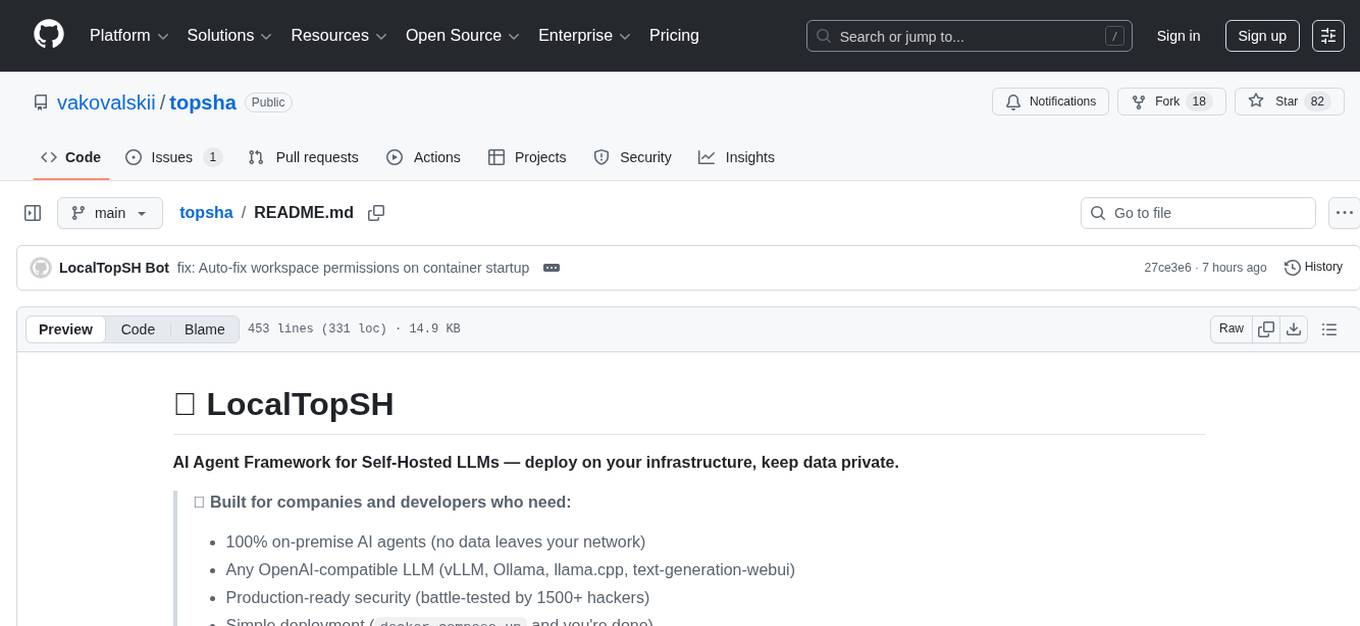

topsha

LocalTopSH is an AI Agent Framework designed for companies and developers who require 100% on-premise AI agents with data privacy. It supports various OpenAI-compatible LLM backends and offers production-ready security features. The framework allows simple deployment using Docker compose and ensures that data stays within the user's network, providing full control and compliance. With cost-effective scaling options and compatibility in regions with restrictions, LocalTopSH is a versatile solution for deploying AI agents on self-hosted infrastructure.

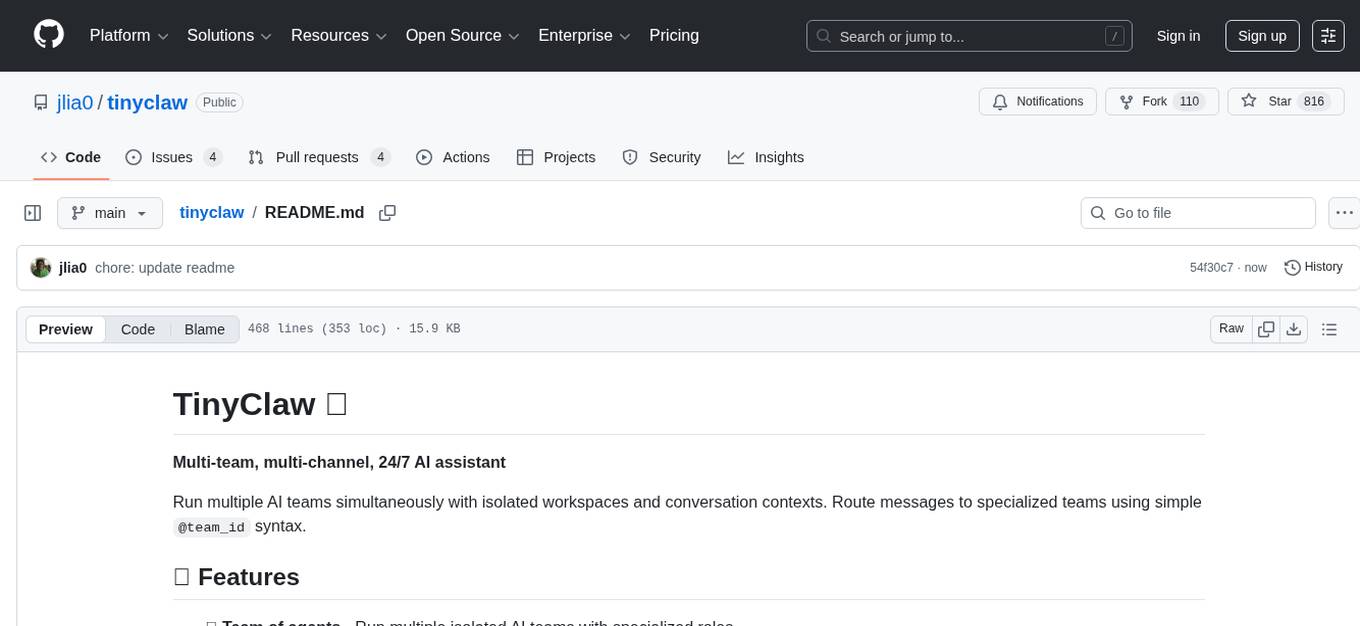

tinyclaw

TinyClaw is a lightweight wrapper around Claude Code that connects WhatsApp via QR code, processes messages sequentially, maintains conversation context, runs 24/7 in tmux, and is ready for multi-channel support. Its key innovation is the file-based queue system that prevents race conditions and enables multi-channel support. TinyClaw consists of components like whatsapp-client.js for WhatsApp I/O, queue-processor.js for message processing, heartbeat-cron.sh for health checks, and tinyclaw.sh as the main orchestrator with a CLI interface. It ensures no race conditions, is multi-channel ready, provides clean responses using claude -c -p, and supports persistent sessions. Security measures include local storage of WhatsApp session and queue files, channel-specific authentication, and running Claude with user permissions.

MediCareAI

MediCareAI is an intelligent disease management system powered by AI, designed for patient follow-up and disease tracking. It integrates medical guidelines, AI-powered diagnosis, and document processing to provide comprehensive healthcare support. The system includes features like user authentication, patient management, AI diagnosis, document processing, medical records management, knowledge base system, doctor collaboration platform, and admin system. It ensures privacy protection through automatic PII detection and cleaning for document sharing.

myclaw

myclaw is a personal AI assistant built on agentsdk-go that offers a CLI agent for single message or interactive REPL mode, full orchestration with channels, cron, and heartbeat, support for various messaging channels like Telegram, Feishu, WeCom, WhatsApp, and a web UI, multi-provider support for Anthropic and OpenAI models, image recognition and document processing, scheduled tasks with JSON persistence, long-term and daily memory storage, custom skill loading, and more. It provides a comprehensive solution for interacting with AI models and managing tasks efficiently.

Shannon

Shannon is a battle-tested infrastructure for AI agents that solves problems at scale, such as runaway costs, non-deterministic failures, and security concerns. It offers features like intelligent caching, deterministic replay of workflows, time-travel debugging, WASI sandboxing, and hot-swapping between LLM providers. Shannon allows users to ship faster with zero configuration multi-agent setup, multiple AI patterns, time-travel debugging, and hot configuration changes. It is production-ready with features like WASI sandbox, token budget control, policy engine (OPA), and multi-tenancy. Shannon helps scale without breaking by reducing costs, being provider agnostic, observable by default, and designed for horizontal scaling with Temporal workflow orchestration.

distill

Distill is a reliability layer for LLM context that provides deterministic deduplication to remove redundancy before reaching the model. It aims to reduce redundant data, lower costs, provide faster responses, and offer more efficient and deterministic results. The tool works by deduplicating, compressing, summarizing, and caching context to ensure reliable outputs. It offers various installation methods, including binary download, Go install, Docker usage, and building from source. Distill can be used for tasks like deduplicating chunks, connecting to vector databases, integrating with AI assistants, analyzing files for duplicates, syncing vectors to Pinecone, querying from the command line, and managing configuration files. The tool supports self-hosting via Docker, Docker Compose, building from source, Fly.io deployment, Render deployment, and Railway integration. Distill also provides monitoring capabilities with Prometheus-compatible metrics, Grafana dashboard, and OpenTelemetry tracing.

pilot

Pilot is an AI tool designed to streamline the process of handling tickets from GitHub, Linear, Jira, or Asana. It plans the implementation, writes the code, runs tests, and opens a PR for you to review and merge. With features like Autopilot, Epic Decomposition, Self-Review, and more, Pilot aims to automate the ticket handling process and reduce the time spent on prioritizing and completing tasks. It integrates with various platforms, offers intelligence features, and provides real-time visibility through a dashboard. Pilot is free to use, with costs associated with Claude API usage. It is designed for bug fixes, small features, refactoring, tests, docs, and dependency updates, but may not be suitable for large architectural changes or security-critical code.

vllm-mlx

vLLM-MLX is a tool that brings native Apple Silicon GPU acceleration to vLLM by integrating Apple's ML framework with unified memory and Metal kernels. It offers optimized LLM inference with KV cache and quantization, vision-language models for multimodal inference, speech-to-text and text-to-speech with native voices, text embeddings for semantic search and RAG, and more. Users can benefit from features like multimodal support for text, image, video, and audio, native GPU acceleration on Apple Silicon, compatibility with OpenAI API, Anthropic Messages API, reasoning models extraction, integration with external tools via Model Context Protocol, memory-efficient caching, and high throughput for multiple concurrent users.

mesh

MCP Mesh is an open-source control plane for MCP traffic that provides a unified layer for authentication, routing, and observability. It replaces multiple integrations with a single production endpoint, simplifying configuration management. Built for multi-tenant organizations, it offers workspace/project scoping for policies, credentials, and logs. With core capabilities like MeshContext, AccessControl, and OpenTelemetry, it ensures fine-grained RBAC, full tracing, and metrics for tools and workflows. Users can define tools with input/output validation, access control checks, audit logging, and OpenTelemetry traces. The project structure includes apps for full-stack MCP Mesh, encryption, observability, and more, with deployment options ranging from Docker to Kubernetes. The tech stack includes Bun/Node runtime, TypeScript, Hono API, React, Kysely ORM, and Better Auth for OAuth and API keys.

starknet-agentic

Open-source stack for giving AI agents wallets, identity, reputation, and execution rails on Starknet. `starknet-agentic` is a monorepo with Cairo smart contracts for agent wallets, identity, reputation, and validation, TypeScript packages for MCP tools, A2A integration, and payment signing, reusable skills for common Starknet agent capabilities, and examples and docs for integration. It provides contract primitives + runtime tooling in one place for integrating agents. The repo includes various layers such as Agent Frameworks / Apps, Integration + Runtime Layer, Packages / Tooling Layer, Cairo Contract Layer, and Starknet L2. It aims for portability of agent integrations without giving up Starknet strengths, with a cross-chain interop strategy and skills marketplace. The repository layout consists of directories for contracts, packages, skills, examples, docs, and website.

vibium

Vibium is a browser automation infrastructure designed for AI agents, providing a single binary that manages browser lifecycle, WebDriver BiDi protocol, and an MCP server. It offers zero configuration, AI-native capabilities, and is lightweight with no runtime dependencies. It is suitable for AI agents, test automation, and any tasks requiring browser interaction.

solo-server

Solo Server is a lightweight server designed for managing hardware-aware inference. It provides seamless setup through a simple CLI and HTTP servers, an open model registry for pulling models from platforms like Ollama and Hugging Face, cross-platform compatibility for effortless deployment of AI models on hardware, and a configurable framework that auto-detects hardware components (CPU, GPU, RAM) and sets optimal configurations.

open-computer-use

Open Computer Use is an open-source platform that enables AI agents to control computers through browser automation, terminal access, and desktop interaction. It is designed for developers to create autonomous AI workflows. The platform allows agents to browse the web, run terminal commands, control desktop applications, orchestrate multi-agents, stream execution, and is 100% open-source and self-hostable. It provides capabilities similar to Anthropic's Claude Computer Use but is fully open-source and extensible.

fluid.sh

fluid.sh is a tool designed to manage and debug VMs using AI agents in isolated environments before applying changes to production. It provides a workflow where AI agents work autonomously in sandbox VMs, and human approval is required before any changes are made to production. The tool offers features like autonomous execution, full VM isolation, human-in-the-loop approval workflow, Ansible export, and a Python SDK for building autonomous agents.

z.ai2api_python

Z.AI2API Python is a lightweight OpenAI API proxy service that integrates seamlessly with existing applications. It supports the full functionality of GLM-4.5 series models and features high-performance streaming responses, enhanced tool invocation, support for thinking mode, integration with search models, Docker deployment, session isolation for privacy protection, flexible configuration via environment variables, and intelligent upstream model routing.

For similar tasks

hexstrike-ai

HexStrike AI is an advanced AI-powered penetration testing MCP framework with 150+ security tools and 12+ autonomous AI agents. It features a multi-agent architecture with intelligent decision-making, vulnerability intelligence, and modern visual engine. The platform allows for AI agent connection, intelligent analysis, autonomous execution, real-time adaptation, and advanced reporting. HexStrike AI offers a streamlined installation process, Docker container support, 250+ specialized AI agents/tools, native desktop client, advanced web automation, memory optimization, enhanced error handling, and bypassing limitations.


watchtower

AIShield Watchtower is a tool designed to fortify the security of AI/ML models and Jupyter notebooks by automating model and notebook discoveries, conducting vulnerability scans, and categorizing risks into 'low,' 'medium,' 'high,' and 'critical' levels. It supports scanning of public GitHub repositories, Hugging Face repositories, AWS S3 buckets, and local systems. The tool generates comprehensive reports, offers a user-friendly interface, and aligns with industry standards like OWASP, MITRE, and CWE. It aims to address the security blind spots surrounding Jupyter notebooks and AI models, providing organizations with a tailored approach to enhancing their security efforts.

LLM-PLSE-paper

LLM-PLSE-paper is a repository focused on the applications of Large Language Models (LLMs) in Programming Language and Software Engineering (PL/SE) domains. It covers a wide range of topics including bug detection, specification inference and verification, code generation, fuzzing and testing, code model and reasoning, code understanding, IDE technologies, prompting for reasoning tasks, and agent/tool usage and planning. The repository provides a comprehensive collection of research papers, benchmarks, empirical studies, and frameworks related to the capabilities of LLMs in various PL/SE tasks.

invariant

Invariant Analyzer is an open-source scanner designed for LLM-based AI agents to find bugs, vulnerabilities, and security threats. It scans agent execution traces to identify issues like looping behavior, data leaks, prompt injections, and unsafe code execution. The tool offers:

- a library of built-in checkers
- an expressive policy language
- data flow analysis
- real-time monitoring
- an extensible architecture for custom checkers

It helps developers debug AI agents, scan for security violations, and prevent security issues and data breaches at runtime. The analyzer relies on deep contextual understanding and a purpose-built rule-matching engine to enforce security policies.
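As an illustration of what a "looping behavior" check over an agent trace might look like, the sketch below flags runs in which the same tool call (tool name plus arguments) repeats consecutively at least `max_repeats` times. Several details are assumptions for illustration: the trace format (a list of dicts with `tool` and `args` keys), the threshold, and the `find_loops` helper name. This is not Invariant's policy language or API.

```python
import json
from typing import Any


def find_loops(trace: list[dict[str, Any]], max_repeats: int = 3) -> list[tuple[int, str]]:
    """Return (start_index, tool) for each run of >= max_repeats identical
    consecutive tool calls. Each step is assumed to look like
    {"tool": str, "args": dict} (hypothetical trace format)."""
    loops: list[tuple[int, str]] = []
    run_start = 0
    prev_key = None
    for i, step in enumerate(trace):
        # Canonicalize arguments so dict key order does not affect equality.
        key = (step["tool"], json.dumps(step.get("args", {}), sort_keys=True))
        if key != prev_key:
            # A new run starts here; check whether the previous run was a loop.
            if prev_key is not None and i - run_start >= max_repeats:
                loops.append((run_start, prev_key[0]))
            run_start, prev_key = i, key
    # The final run has no successor to trigger the check above.
    if prev_key is not None and len(trace) - run_start >= max_repeats:
        loops.append((run_start, prev_key[0]))
    return loops


if __name__ == "__main__":
    trace = [{"tool": "search", "args": {"q": "docs"}}] * 3 + [
        {"tool": "read_file", "args": {"path": "a.txt"}}
    ]
    print(find_loops(trace))  # [(0, 'search')]
```

Comparing canonicalized arguments, rather than just tool names, avoids flagging legitimate repeated use of a tool with different inputs.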

OpenRedTeaming

OpenRedTeaming is a repository focused on red teaming generative models, specifically large language models (LLMs). It provides a comprehensive survey of potential attacks on GenAI and of robust safeguards. The survey covers attack strategies, evaluation metrics, benchmarks, defensive approaches, taxonomies, and risks related to LLMs. The repository also implements over 30 automated red-teaming methods. The goal is to understand the vulnerabilities of large language models and develop defenses against adversarial attacks on them.

Awesome-LLM4Cybersecurity

The repository 'Awesome-LLM4Cybersecurity' provides a comprehensive overview of the applications of Large Language Models (LLMs) in cybersecurity. It includes a systematic literature review covering topics such as constructing cybersecurity-oriented domain LLMs, potential applications of LLMs in cybersecurity, and research directions in the field. The repository analyzes various benchmarks, datasets, and applications of LLMs in cybersecurity tasks like threat intelligence, fuzzing, vulnerability detection, insecure code generation, program repair, anomaly detection, and LLM-assisted attacks.

quark-engine

Quark Engine is an AI-powered tool designed for analyzing Android APK files. It focuses on enhancing the detection process through auto-suggestion, enabling users to create detection workflows without writing code. The tool offers an intuitive drag-and-drop interface for adjusting and updating workflows. Quark Agent, the core component, generates Quark Script code from natural-language input and feedback. The project aims to provide a user-friendly experience for designing detection workflows through both textual and visual methods. Several features are still under development and will be rolled out gradually.

For similar jobs

hackingBuddyGPT

hackingBuddyGPT is a framework for testing LLM-based agents for security testing. It aims to establish a common ground truth in three ways:

- building shared security testbeds and benchmarks
- evaluating multiple LLMs and techniques against them
- publishing prototypes and findings as open-source/open-access reports

The initial focus is on evaluating how efficiently LLMs perform Linux privilege escalation attacks. The framework is being expanded to evaluate LLMs for web penetration testing and web API testing. hackingBuddyGPT is released as open source to level the playing field for blue teams against APTs that have access to more sophisticated resources.

aircrackauto

AirCrackAuto is a tool that automates the aircrack-ng process for Wi-Fi hacking. It aims to make cracking Wi-Fi passwords easier by automating packet capture, wordlist generation, and attack launching. According to its description, it can crack Wi-Fi passwords in a matter of minutes.

AIMr

AIMr is an AI aimbot tool written in Python that uses modern technologies with the aim of being undetected and visually polished. It works on any game that uses human-shaped models. For best performance, users should build OpenCV with CUDA support. For Valorant, an Arduino Leonardo R3 and additional perks from the project's Discord are required.

aircrack-ng

Aircrack-ng is a comprehensive suite of tools designed to evaluate the security of WiFi networks. It covers various aspects of WiFi security:

- monitoring
- attacking (replay attacks, deauthentication, fake access points)
- testing WiFi cards and driver capabilities
- cracking WEP and WPA PSK

The tools are command-line based, which allows extensive scripting, and many GUIs have been built on top of them. Aircrack-ng primarily works on Linux but also supports Windows, macOS, FreeBSD, OpenBSD, NetBSD, Solaris, and eComStation 2.

Awesome_GPT_Super_Prompting

Awesome_GPT_Super_Prompting is a repository that provides resources related to Jailbreaks, Leaks, Injections, Libraries, Attack, Defense, and Prompt Engineering. It includes information on ChatGPT Jailbreaks, GPT Assistants Prompt Leaks, GPTs Prompt Injection, LLM Prompt Security, Super Prompts, Prompt Hack, Prompt Security, AI Prompt Engineering, and Adversarial Machine Learning. The repository contains curated lists of repositories, tools, and resources related to GPTs, prompt engineering, prompt libraries, and secure prompting. It also offers insights into Cyber-Albsecop GPT Agents and Super Prompts for custom GPT usage.

ai-exploits

AI Exploits is a repository that showcases practical attacks against AI/machine learning infrastructure, aiming to raise awareness of vulnerabilities in the AI/ML ecosystem. It contains exploits and scanning templates for responsibly disclosed vulnerabilities affecting machine learning tools, including Metasploit modules, Nuclei templates, and CSRF templates. A provided Docker image makes it easy to run the modules and templates. The repository also includes guidelines for using the Metasploit modules, Nuclei templates, and CSRF templates to exploit vulnerabilities in machine learning tools.

airgeddon

Airgeddon is a versatile bash script designed for Linux systems to conduct wireless network audits. It provides a comprehensive set of features and tools for auditing and securing wireless networks. The script is user-friendly and offers functionalities such as scanning, capturing handshakes, deauth attacks, and more. Airgeddon is regularly updated and supported, making it a valuable tool for both security professionals and enthusiasts.

PentestGPT

PentestGPT is a penetration testing tool powered by ChatGPT, designed to automate the penetration testing process. It works interactively, guiding penetration testers through both overall progress and specific operations. The tool can solve easy-to-medium HackTheBox machines and other CTF challenges. Users can use PentestGPT to:

- test connections
- switch between reasoning models
- hold discussions with the tool
- search Google
- generate reports

It also supports local LLMs with custom parsers for advanced users.