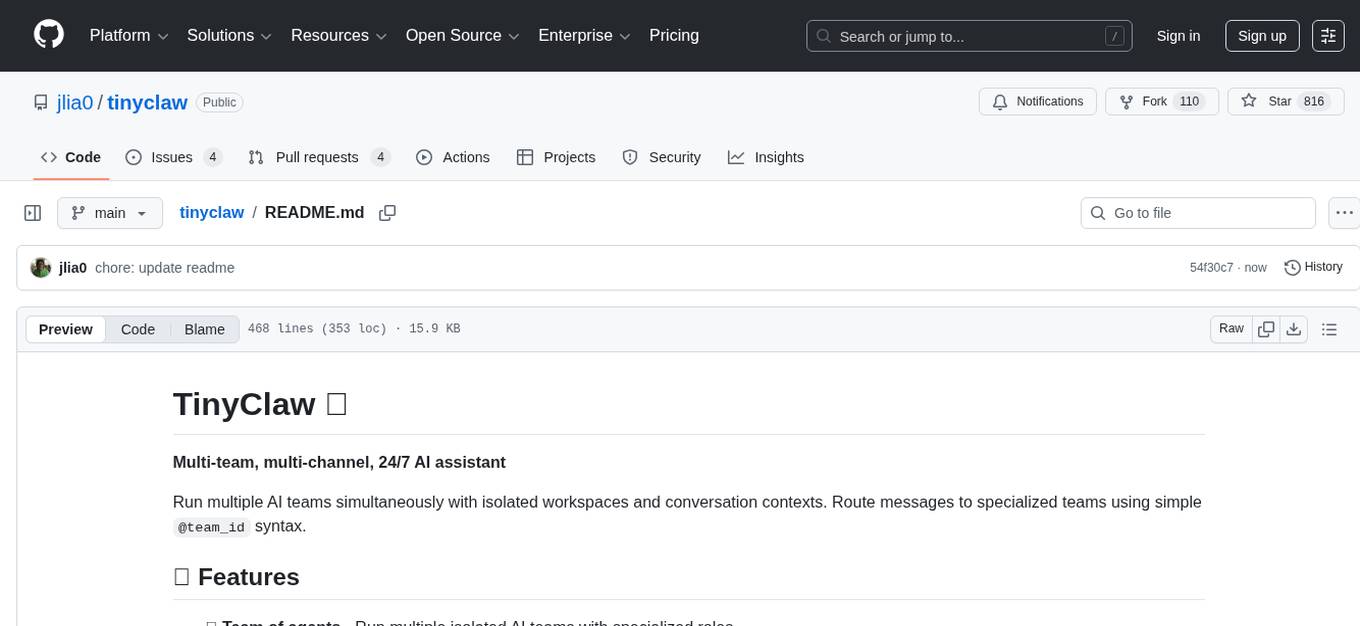

tinyclaw

TinyClaw is a tiny wrapper around Claude Code that runs as your 24/7 personal AI agent.

Stars: 273

TinyClaw is a lightweight wrapper around Claude Code that connects to WhatsApp via a QR code, processes messages sequentially, maintains conversation context, runs 24/7 in tmux, and is built to support more channels. Its key innovation is a file-based queue that serializes message handling, which prevents race conditions and lets new channels plug in without changes to the processor. The main components are whatsapp-client.js (WhatsApp I/O), queue-processor.js (message processing), heartbeat-cron.sh (periodic health checks), and tinyclaw.sh (the orchestrator and CLI). Responses come from claude -c -p, which keeps the output clean, and sessions persist across restarts. For security, the WhatsApp session and queue files are stored locally, each channel handles its own authentication, and Claude runs with your user permissions.

README:

Minimal multi-channel AI assistant with WhatsApp integration and queue-based architecture.

TinyClaw is a lightweight wrapper around Claude Code that:

- ✅ Connects WhatsApp (via QR code)

- ✅ Processes messages sequentially (no race conditions)

- ✅ Maintains conversation context

- ✅ Runs 24/7 in tmux

- ✅ Ready for multi-channel (Telegram, etc.)

Key innovation: File-based queue system prevents race conditions and enables multi-channel support.

```
┌─────────────────┐
│    WhatsApp     │──┐
│     Client      │  │
└─────────────────┘  │
                     ├──→ Queue (incoming/)
┌─────────────────┐  │            ↓
│    Telegram     │──┤    ┌──────────────┐
│    (future)     │  │    │    Queue     │
└─────────────────┘  │    │  Processor   │
                     │    └──────────────┘
Other Channels ──────┘            ↓
             claude --dangerously-skip-permissions -c -p
                                  ↓
                          Queue (outgoing/)
                                  ↓
                         ┌─────────────────┐
                         │  Channels send  │
                         │    responses    │
                         └─────────────────┘
```
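A channel client's half of the queue can be sketched in a few lines of Node.js. This is a minimal sketch, not TinyClaw's actual code: enqueueMessage and the payload fields (sender, message, timestamp) are assumptions; only the <channel>_<id>.json naming comes from the message flow described in this README. The point it illustrates is writing to a temporary name and then renaming into incoming/, so a processor polling for .json files never reads a half-written message.

```javascript
const fs = require("fs");
const path = require("path");

// Hypothetical helper (not TinyClaw's code): add one message to
// <queueRoot>/incoming/ as <channel>_<id>.json.
function enqueueMessage(queueRoot, channel, id, payload) {
  const incoming = path.join(queueRoot, "incoming");
  fs.mkdirSync(incoming, { recursive: true });

  const finalPath = path.join(incoming, `${channel}_${id}.json`);
  // Write under a name that a ".json" poller ignores, then rename into place,
  // so the processor never reads a half-written file (rename is atomic
  // within one filesystem).
  const tmpPath = `${finalPath}.tmp`;
  const body = { ...payload, channel, timestamp: payload.timestamp ?? Date.now() };
  fs.writeFileSync(tmpPath, JSON.stringify(body));
  fs.renameSync(tmpPath, finalPath);
  return finalPath;
}

// Example (hypothetical values):
// enqueueMessage(".tinyclaw/queue", "whatsapp", "abc123", { sender: "alice", message: "Hello Claude!" });
```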

The tmux session is split into four panes:

```
┌──────────────┬──────────────┐
│  WhatsApp    │    Queue     │
│  Client      │  Processor   │
├──────────────┼──────────────┤
│  Heartbeat   │    Logs      │
└──────────────┴──────────────┘
```

Prerequisites:

- macOS or Linux

- Claude Code installed

- Node.js v14+

- tmux

```bash
cd /path/to/tinyclaw

# Install dependencies
npm install

# Make scripts executable
chmod +x *.sh *.js

# Start TinyClaw
./tinyclaw.sh start
```

A QR code will appear in your terminal:

```
━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━
WhatsApp QR Code
━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━
[QR CODE HERE]

📱 Scan with WhatsApp:
   Settings → Linked Devices → Link a Device
```

Scan it with your phone. Done! 🎉

Send a WhatsApp message to your linked number from a different WhatsApp account:

"Hello Claude!"

You'll get a response! 🤖

Commands:

```bash
# Start TinyClaw
./tinyclaw.sh start

# Check status
./tinyclaw.sh status

# Send manual message
./tinyclaw.sh send "What's the weather?"

# Reset conversation
./tinyclaw.sh reset

# View logs
./tinyclaw.sh logs whatsapp
./tinyclaw.sh logs queue

# Attach to tmux
./tinyclaw.sh attach

# Stop
./tinyclaw.sh stop
```

whatsapp-client.js:

- Connects to WhatsApp via QR code

- Writes incoming messages to queue

- Reads responses from queue

- Sends replies back

queue-processor.js:

- Polls incoming queue

- Processes ONE message at a time

- Calls claude -c -p

- Writes responses to outgoing queue

heartbeat-cron.sh:

- Runs every 5 minutes

- Sends heartbeat via queue

- Keeps conversation active

tinyclaw.sh:

- Main orchestrator

- Manages tmux session

- CLI interface
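The queue processor's duties listed above (poll, take one message, call claude, write the response) fit into a single step like the one below. It is a hedged sketch rather than queue-processor.js itself: processOne is a hypothetical name, runClaude is injected so the example runs without Claude Code, and using .tinyclaw/queue/processing/ as a claim area is an assumption based on the project's directory layout.

```javascript
const fs = require("fs");
const path = require("path");

// Hypothetical single step of a queue processor (not TinyClaw's code).
// runClaude(text) returns the reply text; in practice it would wrap `claude -c -p`.
// If runClaude throws, the message stays in processing/ for inspection.
function processOne(queueRoot, runClaude) {
  const dir = (name) => path.join(queueRoot, name);
  for (const d of ["incoming", "processing", "outgoing"]) {
    fs.mkdirSync(dir(d), { recursive: true });
  }

  // Pick the oldest finished file (by modification time, then by name).
  const next = fs
    .readdirSync(dir("incoming"))
    .filter((f) => f.endsWith(".json"))
    .map((f) => ({ f, t: fs.statSync(path.join(dir("incoming"), f)).mtimeMs }))
    .sort((a, b) => a.t - b.t || a.f.localeCompare(b.f))[0];
  if (!next) return null; // nothing queued

  // Claim it by moving it to processing/, so it cannot be picked up twice.
  const claimed = path.join(dir("processing"), next.f);
  fs.renameSync(path.join(dir("incoming"), next.f), claimed);

  const msg = JSON.parse(fs.readFileSync(claimed, "utf8"));
  const reply = runClaude(msg.message);

  // The reply keeps the same <channel>_<id>.json name; write-then-rename again.
  const out = path.join(dir("outgoing"), next.f);
  fs.writeFileSync(`${out}.tmp`, JSON.stringify({ channel: msg.channel, message: reply }));
  fs.renameSync(`${out}.tmp`, out);
  fs.unlinkSync(claimed);
  return next.f;
}
```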

```
WhatsApp message arrives
        ↓
whatsapp-client.js writes to:
  .tinyclaw/queue/incoming/whatsapp_<id>.json
        ↓
queue-processor.js picks it up
        ↓
Runs: claude -c -p "message"
        ↓
Writes to:
  .tinyclaw/queue/outgoing/whatsapp_<id>.json
        ↓
whatsapp-client.js sends response
        ↓
User receives reply
```
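The last steps of this flow, picking up a reply and sending it, might look like this on the client side. deliverResponses and its send(id, text) callback are hypothetical; send is synchronous here to keep the example self-contained, whereas a real WhatsApp client sends asynchronously and should finish sending before the file is deleted. Filtering on the <channel>_ prefix is what lets several channel clients share one outgoing/ directory.

```javascript
const fs = require("fs");
const path = require("path");

// Hypothetical client-side pickup: deliver this channel's replies from
// outgoing/ and delete each file once send() has returned without throwing.
function deliverResponses(queueRoot, channel, send) {
  const outgoing = path.join(queueRoot, "outgoing");
  if (!fs.existsSync(outgoing)) return [];
  const delivered = [];
  for (const file of fs.readdirSync(outgoing).sort()) {
    // Only this channel's finished files; *.tmp files are still being written.
    if (!file.startsWith(`${channel}_`) || !file.endsWith(".json")) continue;
    const full = path.join(outgoing, file);
    const reply = JSON.parse(fs.readFileSync(full, "utf8"));
    const id = file.slice(channel.length + 1, -".json".length);
    send(id, reply.message);
    fs.unlinkSync(full);
    delivered.push(id);
  }
  return delivered;
}
```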

```
tinyclaw/
├── .claude/              # Claude Code config
│   ├── settings.json     # Hooks config
│   └── hooks/            # Hook scripts
├── .tinyclaw/            # TinyClaw data
│   ├── queue/
│   │   ├── incoming/     # New messages
│   │   ├── processing/   # Being processed
│   │   └── outgoing/     # Responses
│   ├── logs/
│   ├── whatsapp-session/
│   └── heartbeat.md
├── tinyclaw.sh           # Main script
├── whatsapp-client.js    # WhatsApp I/O
├── queue-processor.js    # Message processing
└── heartbeat-cron.sh     # Health checks
```
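A sketch of creating the .tinyclaw/ part of this layout on first start, assuming TinyClaw's scripts do something equivalent (the README does not say how): initDataDir is a hypothetical name, and the 0o700 mode is an extra hardening choice that keeps the session and queue files private to your user. The default heartbeat.md text is the example prompt from the configuration section.

```javascript
const fs = require("fs");
const path = require("path");

// Hypothetical initializer for the .tinyclaw/ data directory.
// Mode 0o700 = owner-only; the process umask can remove bits but not add them.
function initDataDir(root) {
  const dirs = [
    "queue/incoming",
    "queue/processing",
    "queue/outgoing",
    "logs",
    "whatsapp-session",
  ];
  for (const d of dirs) {
    fs.mkdirSync(path.join(root, d), { recursive: true, mode: 0o700 });
  }
  // Seed the heartbeat prompt only if the user has not created one yet.
  const heartbeat = path.join(root, "heartbeat.md");
  if (!fs.existsSync(heartbeat)) {
    fs.writeFileSync(
      heartbeat,
      "Check for:\n1. Pending tasks\n2. Errors\n3. Unread messages\n\nTake action if needed.\n"
    );
  }
  return dirs.map((d) => path.join(root, d));
}
```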

Reset the conversation from the CLI:

```bash
./tinyclaw.sh reset
```

Or send !reset or /reset as a message.

The next message starts fresh (no conversation history).
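The README does not say how a reset takes effect inside the processor. One plausible approach, sketched here as an assumption, is to remember the reset and call claude without -c for the next message, which starts a new conversation. isResetCommand, createConversation and the case-insensitive matching are hypothetical.

```javascript
// Hypothetical reset handling (the README does not specify the mechanism).
// Assumption: a message that is exactly !reset or /reset (ignoring case and
// surrounding spaces) is a command and is not forwarded to Claude.
const RESET_COMMANDS = new Set(["!reset", "/reset"]);

function isResetCommand(text) {
  return RESET_COMMANDS.has(String(text).trim().toLowerCase());
}

function createConversation() {
  let startFresh = false; // set by a reset, consumed by the next real message

  return {
    // Returns null for a reset command; otherwise the prompt plus whether to
    // pass -c (continue the previous conversation) to claude.
    route(text) {
      if (isResetCommand(text)) {
        startFresh = true;
        return null;
      }
      const continueConversation = !startFresh;
      startFresh = false;
      return { prompt: text, continueConversation };
    },
  };
}
```

Because ./tinyclaw.sh reset runs in a separate process, a real implementation would have to persist this flag (for example as a file under .tinyclaw/) rather than keep it in memory as this sketch does.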

To change the heartbeat interval, edit heartbeat-cron.sh:

```bash
INTERVAL=300  # seconds (5 minutes)
```

To change the heartbeat prompt, edit .tinyclaw/heartbeat.md:

```markdown
Check for:

1. Pending tasks
2. Errors
3. Unread messages

Take action if needed.
```

Logs:

```bash
# WhatsApp activity
tail -f .tinyclaw/logs/whatsapp.log

# Queue processing
tail -f .tinyclaw/logs/queue.log

# Heartbeat checks
tail -f .tinyclaw/logs/heartbeat.log

# All logs
./tinyclaw.sh logs daemon
```

Queue contents:

```bash
# Incoming messages
watch -n 1 'ls -lh .tinyclaw/queue/incoming/'

# Outgoing responses
watch -n 1 'ls -lh .tinyclaw/queue/outgoing/'
```

Messages are processed sequentially, one at a time:

```
Message 1 → Process → Done
Message 2 → Wait → Process → Done
Message 3 → Wait → Process → Done
```
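Sequential processing only holds if a new poll never starts while the previous message is still being handled. A minimal way to get that guarantee in one Node.js process, sketched here as an assumption rather than TinyClaw's actual loop, is a busy flag checked on every tick. createSerialPoller, takeNext and handle are hypothetical names.

```javascript
// Hypothetical single-consumer poller: at most one message is in flight.
// takeNext() returns the next queued item or undefined; handle(item) may be async.
function createSerialPoller(takeNext, handle) {
  let busy = false;

  function tick() {
    if (busy) return false; // previous message still running: wait
    const item = takeNext();
    if (item === undefined) return false; // queue is empty
    busy = true;
    Promise.resolve()
      .then(() => handle(item))
      .catch((err) => console.error("handler failed:", err))
      .finally(() => {
        busy = false; // the next tick may take the following message
      });
    return true;
  }

  return { tick, isBusy: () => busy };
}
```

In a long-running processor, tick would be driven by a timer, for example setInterval(poller.tick, 1000); a tick that fires while a message is still in flight simply returns false and leaves the queue untouched.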

Add Telegram by creating telegram-client.js:

```javascript
const fs = require('fs');

// Write to queue (id and message are assumed to come from the Telegram update)
fs.writeFileSync(
  `.tinyclaw/queue/incoming/telegram_${id}.json`,
  JSON.stringify({ channel: 'telegram', message /* , ... */ })
);

// Read responses
// Same format as WhatsApp
```

The queue processor handles it automatically!

Uses claude -c -p:

- -c = continue conversation

- -p = print mode (clean output)

- No tmux capture needed
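Invoking claude from Node.js can be sketched with child_process.spawnSync. claudeArgv and askClaude are hypothetical helpers; only the -c and -p flags come from this README. Passing the prompt as its own argv element avoids shell quoting problems with user text. Because Claude Code's -c continues the most recent conversation in the working directory, the sketch accepts a cwd option; keeping it fixed means every message continues the same conversation. The architecture diagram also passes --dangerously-skip-permissions, which this sketch leaves out.

```javascript
const { spawnSync } = require("child_process");

// argv for one turn: -c continues the most recent conversation, -p prints the
// response and exits. The prompt is a separate argument, so no shell quoting.
function claudeArgv(prompt, { continueConversation = true } = {}) {
  return [...(continueConversation ? ["-c"] : []), "-p", prompt];
}

// Hypothetical wrapper; `bin` and `cwd` are options of this sketch only.
function askClaude(prompt, opts = {}) {
  const bin = opts.bin ?? "claude";
  const result = spawnSync(bin, claudeArgv(prompt, opts), {
    cwd: opts.cwd, // -c resumes the latest conversation for this directory
    encoding: "utf8",
    maxBuffer: 10 * 1024 * 1024,
  });
  if (result.error) throw result.error; // e.g. ENOENT if the binary is missing
  if (result.status !== 0) {
    throw new Error(`${bin} exited with status ${result.status}: ${result.stderr}`);
  }
  return result.stdout.trim();
}
```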

WhatsApp session persists across restarts:

```bash
# First time: scan the QR code
./tinyclaw.sh start

# Subsequent starts: auto-connects
./tinyclaw.sh restart
```

Security:

- WhatsApp session stored locally in .tinyclaw/whatsapp-session/

- Queue files are local (no network exposure)

- Each channel handles its own authentication

- Claude runs with your user permissions; with --dangerously-skip-permissions (as in the architecture diagram) it also skips Claude Code's per-action permission prompts

WhatsApp not connecting:

```bash
# Check logs
./tinyclaw.sh logs whatsapp

# Re-authenticate
rm -rf .tinyclaw/whatsapp-session/
./tinyclaw.sh restart
```

Messages not processing:

```bash
# Check queue processor
./tinyclaw.sh status

# Check queue
ls -la .tinyclaw/queue/incoming/

# View queue logs
./tinyclaw.sh logs queue
```

QR code not showing:

```bash
# Attach to tmux to see the QR code
tmux attach -t tinyclaw
```

Running with systemd (requires a tinyclaw systemd unit file to be installed first):

```bash
sudo systemctl enable tinyclaw
sudo systemctl start tinyclaw
```

Running with PM2:

```bash
pm2 start tinyclaw.sh --name tinyclaw
pm2 save
```

Running with supervisor:

```ini
[program:tinyclaw]
command=/path/to/tinyclaw/tinyclaw.sh start
autostart=true
autorestart=true
```

Personal assistant:

```
You: "Remind me to call mom"
Claude: "I'll remind you!"
[5 minutes later via heartbeat]
Claude: "Don't forget to call mom!"
```
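The reminder works because the heartbeat pushes the heartbeat.md prompt through the same incoming queue as user messages, so it is handled in turn rather than concurrently. heartbeat-cron.sh is a shell script; the following is only a Node.js sketch of the same idea, and the heartbeat_<timestamp>.json file name, the payload fields, enqueueHeartbeat and startHeartbeat are all hypothetical.

```javascript
const fs = require("fs");
const path = require("path");

// Hypothetical: turn heartbeat.md into a queued message, like any other channel.
function enqueueHeartbeat(dataDir, now = Date.now()) {
  const prompt = fs.readFileSync(path.join(dataDir, "heartbeat.md"), "utf8");
  const incoming = path.join(dataDir, "queue", "incoming");
  fs.mkdirSync(incoming, { recursive: true });
  const file = path.join(incoming, `heartbeat_${now}.json`);
  // Write-then-rename so the processor never sees a partial file.
  fs.writeFileSync(`${file}.tmp`, JSON.stringify({ channel: "heartbeat", message: prompt, timestamp: now }));
  fs.renameSync(`${file}.tmp`, file);
  return file;
}

// Enqueue every intervalSeconds (300 = 5 minutes, the default INTERVAL).
// Returns a function that stops the timer.
function startHeartbeat(dataDir, intervalSeconds = 300) {
  const timer = setInterval(() => enqueueHeartbeat(dataDir), intervalSeconds * 1000);
  return () => clearInterval(timer);
}
```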

Code helper:

```
You: "Review my code"
Claude: [reads files, provides feedback]
You: "Fix the bug"
Claude: [fixes and commits]
```

Multi-device:

- WhatsApp on phone

- Telegram on desktop (once a Telegram client is added)

- CLI for scripts

All share the same Claude conversation!

- Inspired by OpenClaw by Peter Steinberger

- Built on Claude Code

- Uses whatsapp-web.js

License: MIT

TinyClaw - Small but mighty! 🦞✨


Alternative AI tools for tinyclaw

Similar Open Source Tools

tinyclaw

TinyClaw is a lightweight wrapper around Claude Code that connects WhatsApp via QR code, processes messages sequentially, maintains conversation context, runs 24/7 in tmux, and is ready for multi-channel support. Its key innovation is the file-based queue system that prevents race conditions and enables multi-channel support. TinyClaw consists of components like whatsapp-client.js for WhatsApp I/O, queue-processor.js for message processing, heartbeat-cron.sh for health checks, and tinyclaw.sh as the main orchestrator with a CLI interface. It ensures no race conditions, is multi-channel ready, provides clean responses using claude -c -p, and supports persistent sessions. Security measures include local storage of WhatsApp session and queue files, channel-specific authentication, and running Claude with user permissions.

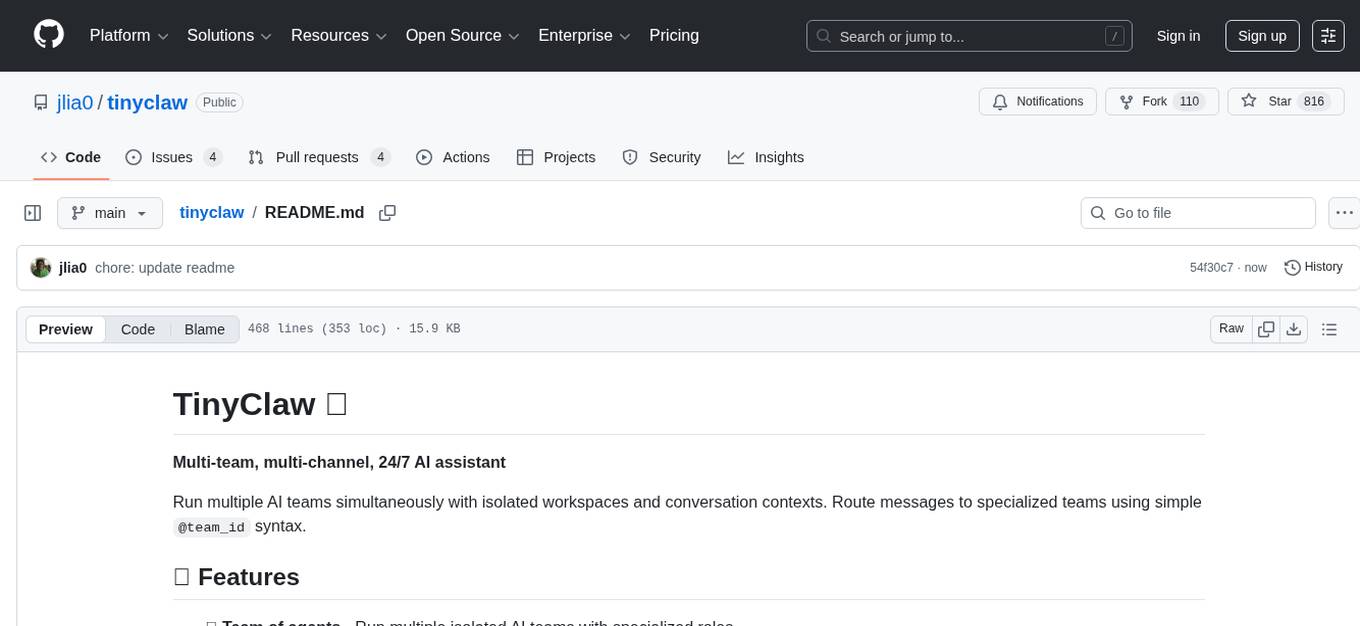

myclaw

myclaw is a personal AI assistant built on agentsdk-go that offers a CLI agent for single message or interactive REPL mode, full orchestration with channels, cron, and heartbeat, support for various messaging channels like Telegram, Feishu, WeCom, WhatsApp, and a web UI, multi-provider support for Anthropic and OpenAI models, image recognition and document processing, scheduled tasks with JSON persistence, long-term and daily memory storage, custom skill loading, and more. It provides a comprehensive solution for interacting with AI models and managing tasks efficiently.

solo-server

Solo Server is a lightweight server designed for managing hardware-aware inference. It provides seamless setup through a simple CLI and HTTP servers, an open model registry for pulling models from platforms like Ollama and Hugging Face, cross-platform compatibility for effortless deployment of AI models on hardware, and a configurable framework that auto-detects hardware components (CPU, GPU, RAM) and sets optimal configurations.

mimiclaw

MimiClaw is a pocket AI assistant that runs on a $5 chip, specifically designed for the ESP32-S3 board. It operates without Linux or Node.js, using pure C language. Users can interact with MimiClaw through Telegram, enabling it to handle various tasks and learn from local memory. The tool is energy-efficient, running on USB power 24/7. With MimiClaw, users can have a personal AI assistant on a chip the size of a thumb, making it convenient and accessible for everyday use.

Shannon

Shannon is a battle-tested infrastructure for AI agents that solves problems at scale, such as runaway costs, non-deterministic failures, and security concerns. It offers features like intelligent caching, deterministic replay of workflows, time-travel debugging, WASI sandboxing, and hot-swapping between LLM providers. Shannon allows users to ship faster with zero configuration multi-agent setup, multiple AI patterns, time-travel debugging, and hot configuration changes. It is production-ready with features like WASI sandbox, token budget control, policy engine (OPA), and multi-tenancy. Shannon helps scale without breaking by reducing costs, being provider agnostic, observable by default, and designed for horizontal scaling with Temporal workflow orchestration.

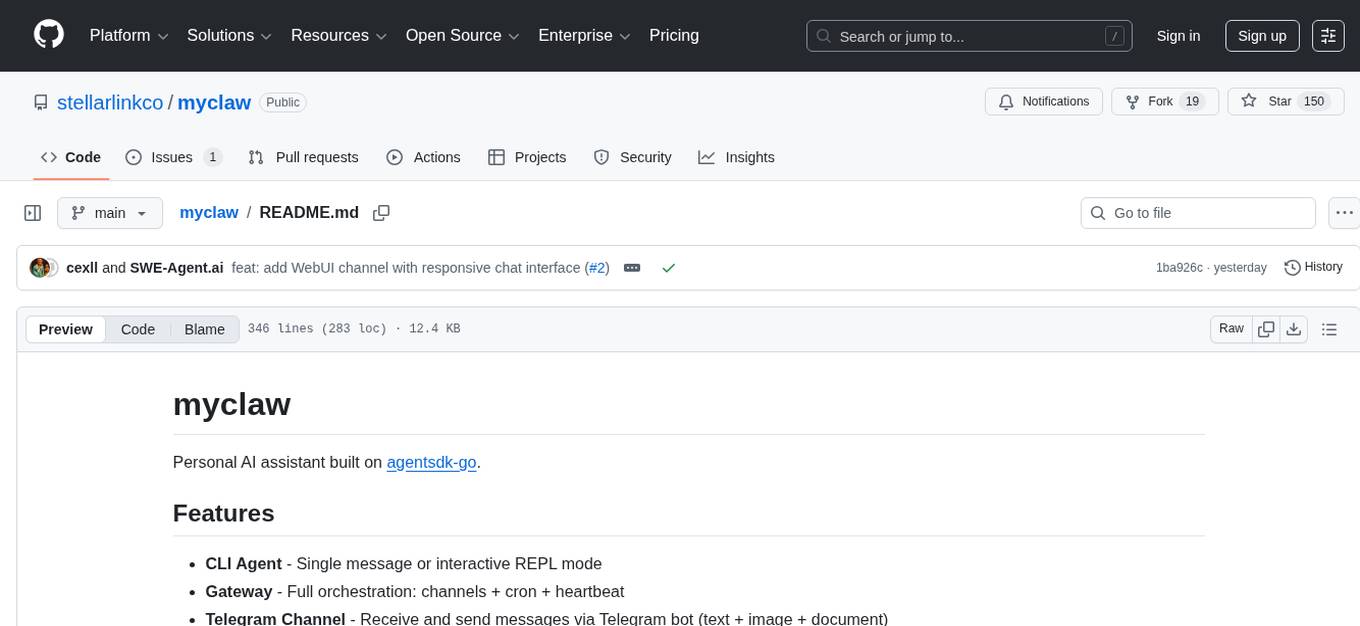

claudex

Claudex is an open-source, self-hosted Claude Code UI that runs entirely on your machine. It provides multiple sandboxes, allows users to use their own plans, offers a full IDE experience with VS Code in the browser, and is extensible with skills, agents, slash commands, and MCP servers. Users can run AI agents in isolated environments, view and interact with a browser via VNC, switch between multiple AI providers, automate tasks with Celery workers, and enjoy various chat features and preview capabilities. Claudex also supports marketplace plugins, secrets management, integrations like Gmail, and custom instructions. The tool is configured through providers and supports various providers like Anthropic, OpenAI, OpenRouter, and Custom. It has a tech stack consisting of React, FastAPI, Python, PostgreSQL, Celery, Redis, and more.

shell_gpt

ShellGPT is a command-line productivity tool powered by AI large language models (LLMs). This command-line tool offers streamlined generation of shell commands, code snippets, documentation, eliminating the need for external resources (like Google search). Supports Linux, macOS, Windows and compatible with all major Shells like PowerShell, CMD, Bash, Zsh, etc.

httpjail

httpjail is a cross-platform tool designed for monitoring and restricting HTTP/HTTPS requests from processes using network isolation and transparent proxy interception. It provides process-level network isolation, HTTP/HTTPS interception with TLS certificate injection, script-based and JavaScript evaluation for custom request logic, request logging, default deny behavior, and zero-configuration setup. The tool operates on Linux and macOS, creating an isolated network environment for target processes and intercepting all HTTP/HTTPS traffic through a transparent proxy enforcing user-defined rules.

gpt-all-star

GPT-All-Star is an AI-powered code generation tool designed for scratch development of web applications with team collaboration of autonomous AI agents. The primary focus of this research project is to explore the potential of autonomous AI agents in software development. Users can organize their team, choose leaders for each step, create action plans, and work together to complete tasks. The tool supports various endpoints like OpenAI, Azure, and Anthropic, and provides functionalities for project management, code generation, and team collaboration.

hub

Hub is an open-source, high-performance LLM gateway written in Rust. It serves as a smart proxy for LLM applications, centralizing control and tracing of all LLM calls. Built for efficiency, it provides a single API to connect to any LLM provider. The tool is designed to be fast, efficient, and completely open-source under the Apache 2.0 license.

vibium

Vibium is a browser automation infrastructure designed for AI agents, providing a single binary that manages browser lifecycle, WebDriver BiDi protocol, and an MCP server. It offers zero configuration, AI-native capabilities, and is lightweight with no runtime dependencies. It is suitable for AI agents, test automation, and any tasks requiring browser interaction.

mesh

MCP Mesh is an open-source control plane for MCP traffic that provides a unified layer for authentication, routing, and observability. It replaces multiple integrations with a single production endpoint, simplifying configuration management. Built for multi-tenant organizations, it offers workspace/project scoping for policies, credentials, and logs. With core capabilities like MeshContext, AccessControl, and OpenTelemetry, it ensures fine-grained RBAC, full tracing, and metrics for tools and workflows. Users can define tools with input/output validation, access control checks, audit logging, and OpenTelemetry traces. The project structure includes apps for full-stack MCP Mesh, encryption, observability, and more, with deployment options ranging from Docker to Kubernetes. The tech stack includes Bun/Node runtime, TypeScript, Hono API, React, Kysely ORM, and Better Auth for OAuth and API keys.

Whimbox

Whimbox is a game AI agent based on large language models and image recognition technology, providing users with a new gaming experience. It automates daily tasks such as mining, material collection, and wish checking, as well as features like route recording, image recognition, and AI dialogue. The tool does not modify game files or memory, only captures screenshots and simulates mouse and keyboard actions. It is designed for games running in a 1920x1080 windowed mode on mid to high-end PCs, with plans for future cloud gaming support. Whimbox is grateful to open-source projects like GIA and BetterGI, as well as AI models and programming tools like chatgpt and cursor. Developers interested in contributing to the project can join the development community and explore various functionalities that need development and adaptation.

mcp-prompts

mcp-prompts is a Python library that provides a collection of prompts for generating creative writing ideas. It includes a variety of prompts such as story starters, character development, plot twists, and more. The library is designed to inspire writers and help them overcome writer's block by offering unique and engaging prompts to spark creativity. With mcp-prompts, users can access a wide range of writing prompts to kickstart their imagination and enhance their storytelling skills.

sandbox

AIO Sandbox is an all-in-one agent sandbox environment that combines Browser, Shell, File, MCP operations, and VSCode Server in a single Docker container. It provides a unified, secure execution environment for AI agents and developers, with features like unified file system, multiple interfaces, secure execution, zero configuration, and agent-ready MCP-compatible APIs. The tool allows users to run shell commands, perform file operations, automate browser tasks, and integrate with various development tools and services.

boxlite

BoxLite is an embedded, lightweight micro-VM runtime designed for AI agents running OCI containers with hardware-level isolation. It is built for high concurrency with no daemon required, offering features like lightweight VMs, high concurrency, hardware isolation, embeddability, and OCI compatibility. Users can spin up 'Boxes' to run containers for AI agent sandboxes and multi-tenant code execution scenarios where Docker alone is insufficient and full VM infrastructure is too heavy. BoxLite supports Python, Node.js, and Rust with quick start guides for each, along with features like CPU/memory limits, storage options, networking capabilities, security layers, and image registry configuration. The tool provides SDKs for Python and Node.js, with Go support coming soon. It offers detailed documentation, examples, and architecture insights for users to understand how BoxLite works under the hood.

For similar tasks

UltraContextAI

UltraContextAI is a comprehensive system for managing AI interactions through memory management, lessons learned tracking, and dual-mode operation (Plan/Agent). It ensures consistent, high-quality development while maintaining detailed project documentation and knowledge retention. The system includes core components like Memory System, Lessons Learned, and Scratchpad. It operates in Plan Mode for information gathering and planning, and Agent Mode for execution. Users can create new features, fix bugs, set up projects, and update documentation using the system. Real-time updates, version control, and cross-referencing are key aspects of the system. Best practices include memory management, task tracking, and documentation standards. Tips and tricks are provided for handling AI and Cursor issues. Contributions to the system are welcome, and it is licensed under MIT License.


ask-astro

Ask Astro is an open-source reference implementation of Andreessen Horowitz's LLM Application Architecture built by Astronomer. It provides an end-to-end example of a Q&A LLM application used to answer questions about Apache Airflow® and Astronomer. Ask Astro includes Airflow DAGs for data ingestion, an API for business logic, a Slack bot, a public UI, and DAGs for processing user feedback. The tool is divided into data retrieval & embedding, prompt orchestration, and feedback loops.

sourcegraph

Sourcegraph is a code search and navigation tool that helps developers read, write, and fix code in large, complex codebases. It provides features such as code search across all repositories and branches, code intelligence for navigation and refactoring, and the ability to fix and refactor code across multiple repositories at once.

pr-agent

PR-Agent is a tool that helps to efficiently review and handle pull requests by providing AI feedbacks and suggestions. It supports various commands such as generating PR descriptions, providing code suggestions, answering questions about the PR, and updating the CHANGELOG.md file. PR-Agent can be used via CLI, GitHub Action, GitHub App, Docker, and supports multiple git providers and models. It emphasizes real-life practical usage, with each tool having a single GPT-4 call for quick and affordable responses. The PR Compression strategy enables effective handling of both short and long PRs, while the JSON prompting strategy allows for modular and customizable tools. PR-Agent Pro, the hosted version by CodiumAI, provides additional benefits such as full management, improved privacy, priority support, and extra features.

code-review-gpt

Code Review GPT uses Large Language Models to review code in your CI/CD pipeline. It helps streamline the code review process by providing feedback on code that may have issues or areas for improvement. It should pick up on common issues such as exposed secrets, slow or inefficient code, and unreadable code. It can also be run locally in your command line to review staged files. Code Review GPT is in alpha and should be used for fun only. It may provide useful feedback but please check any suggestions thoroughly.

CodeGPT

CodeGPT is a CLI tool written in Go that helps you write git commit messages or do a code review brief using ChatGPT AI (gpt-3.5-turbo, gpt-4 model) and automatically installs a git prepare-commit-msg hook. It supports Azure OpenAI Service or OpenAI API, conventional commits specification, Git prepare-commit-msg Hook, customizing the number of lines of context in diffs, excluding files from the git diff command, translating commit messages into different languages, using socks or custom network HTTP proxies, specifying model lists, and doing brief code reviews.

gpt-pilot

GPT Pilot is a core technology for the Pythagora VS Code extension, aiming to provide the first real AI developer companion. It goes beyond autocomplete, helping with writing full features, debugging, issue discussions, and reviews. The tool utilizes LLMs to generate production-ready apps, with developers overseeing the implementation. GPT Pilot works step by step like a developer, debugging issues as they arise. It can work at any scale, filtering out code to show only relevant parts to the AI during tasks. Contributions are welcome, with debugging and telemetry being key areas of focus for improvement.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per-user and per-organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.