cherry-studio

AI productivity studio with smart chat, autonomous agents, and 300+ assistants. Unified access to frontier LLMs

Stars: 39716

Cherry Studio is a desktop client that supports multiple LLM providers on Windows, Mac, and Linux. It offers diverse LLM provider support, AI assistants & conversations, document & data processing, practical tools integration, and enhanced user experience. The tool includes features like support for major LLM cloud services, AI web service integration, local model support, pre-configured AI assistants, document processing for text, images, and more, global search functionality, topic management system, AI-powered translation, and cross-platform support with ready-to-use features and themes for a better user experience.

README:

English | 中文 | Official Site | Documents | Development | Feedback

Cherry Studio is a desktop client that supports multiple LLM providers, available on Windows, Mac and Linux.

👏 Join Telegram Group|Discord | QQ Group(575014769)

❤️ Like Cherry Studio? Give it a star 🌟 or Sponsor to support the development!

- Diverse LLM Provider Support:

- ☁️ Major LLM Cloud Services: OpenAI, Gemini, Anthropic, and more

- 🔗 AI Web Service Integration: Claude, Perplexity, Poe, and others

- 💻 Local Model Support with Ollama, LM Studio

- AI Assistants & Conversations:

- 📚 300+ Pre-configured AI Assistants

- 🤖 Custom Assistant Creation

- 💬 Multi-model Simultaneous Conversations

- Document & Data Processing:

- 📄 Supports Text, Images, Office, PDF, and more

- ☁️ WebDAV File Management and Backup

- 📊 Mermaid Chart Visualization

- 💻 Code Syntax Highlighting

- Practical Tools Integration:

- 🔍 Global Search Functionality

- 📝 Topic Management System

- 🔤 AI-powered Translation

- 🎯 Drag-and-drop Sorting

- 🔌 Mini Program Support

- ⚙️ MCP(Model Context Protocol) Server

- Enhanced User Experience:

- 🖥️ Cross-platform Support for Windows, Mac, and Linux

- 📦 Ready to Use - No Environment Setup Required

- 🎨 Light/Dark Themes and Transparent Window

- 📝 Complete Markdown Rendering

- 🤲 Easy Content Sharing

We're actively working on the following features and improvements:

- 🎯 Core Features

- Selection Assistant with smart content selection enhancement

- Deep Research with advanced research capabilities

- Memory System with global context awareness

- Document Preprocessing with improved document handling

- MCP Marketplace for Model Context Protocol ecosystem

- 🗂 Knowledge Management

- Notes and Collections

- Dynamic Canvas visualization

- OCR capabilities

- TTS (Text-to-Speech) support

- 📱 Platform Support

- HarmonyOS Edition (PC)

- Android App (Phase 1)

- iOS App (Phase 1)

- Multi-Window support

- Window Pinning functionality

- Intel AI PC (Core Ultra) Support

- 🔌 Advanced Features

- Plugin System

- ASR (Automatic Speech Recognition)

- Assistant and Topic Interaction Refactoring

Track our progress and contribute on our project board.

Want to influence our roadmap? Join our GitHub Discussions to share your ideas and feedback!

- Theme Gallery: https://cherrycss.com

- Aero Theme: https://github.com/hakadao/CherryStudio-Aero

- PaperMaterial Theme: https://github.com/rainoffallingstar/CherryStudio-PaperMaterial

- Claude dynamic-style: https://github.com/bjl101501/CherryStudio-Claudestyle-dynamic

- Maple Neon Theme: https://github.com/BoningtonChen/CherryStudio_themes

Welcome PR for more themes

We welcome contributions to Cherry Studio! Here are some ways you can contribute:

- Contribute Code: Develop new features or optimize existing code.

- Fix Bugs: Submit fixes for any bugs you find.

- Maintain Issues: Help manage GitHub issues.

- Product Design: Participate in design discussions.

- Write Documentation: Improve user manuals and guides.

- Community Engagement: Join discussions and help users.

- Promote Usage: Spread the word about Cherry Studio.

Refer to the Branching Strategy for contribution guidelines

- Fork the Repository: Fork and clone it to your local machine.

- Create a Branch: For your changes.

- Submit Changes: Commit and push your changes.

- Open a Pull Request: Describe your changes and reasons.

For more detailed guidelines, please refer to our Contributing Guide.

Thank you for your support and contributions!

We are launching the Cherry Studio Developer Co-creation Program to foster a healthy and positive-feedback loop within the open-source ecosystem. We believe that great software is built collaboratively, and every merged pull request breathes new life into the project.

We sincerely invite you to join our ranks of contributors and shape the future of Cherry Studio with us.

To give back to our core contributors and create a virtuous cycle, we have established the following long-term incentive plan.

The inaugural tracking period for this program will be Q3 2025 (July, August, September). Rewards for this cycle will be distributed on October 1st.

Within any tracking period (e.g., July 1st to September 30th for the first cycle), any developer who contributes more than 30 meaningful commits to any of Cherry Studio's open-source projects on GitHub will be eligible for the following benefits:

- Cursor Subscription Sponsorship: Receive a $70 USD credit or reimbursement for your Cursor subscription, making AI your most efficient coding partner.

- Unlimited Model Access: Get unlimited API calls for the DeepSeek and Qwen models.

- Cutting-Edge Tech Access: Enjoy occasional perks, including API access to models like Claude, Gemini, and OpenAI, keeping you at the forefront of technology.

A vibrant community is the driving force behind any sustainable open-source project. As Cherry Studio grows, so will our rewards program. We are committed to continuously aligning our benefits with the best-in-class tools and resources in the industry. This ensures our core contributors receive meaningful support, creating a positive cycle where developers, the community, and the project grow together.

Moving forward, the project will also embrace an increasingly open stance to give back to the entire open-source community.

We look forward to your first Pull Request!

You can start by exploring our repositories, picking up a good first issue, or proposing your own enhancements. Every commit is a testament to the spirit of open source.

Thank you for your interest and contributions.

Let's build together.

Building on the Community Edition, we are proud to introduce Cherry Studio Enterprise Edition—a privately-deployable AI productivity and management platform designed for modern teams and enterprises.

The Enterprise Edition addresses core challenges in team collaboration by centralizing the management of AI resources, knowledge, and data. It empowers organizations to enhance efficiency, foster innovation, and ensure compliance, all while maintaining 100% control over their data in a secure environment.

- Unified Model Management: Centrally integrate and manage various cloud-based LLMs (e.g., OpenAI, Anthropic, Google Gemini) and locally deployed private models. Employees can use them out-of-the-box without individual configuration.

- Enterprise-Grade Knowledge Base: Build, manage, and share team-wide knowledge bases. Ensures knowledge retention and consistency, enabling team members to interact with AI based on unified and accurate information.

- Fine-Grained Access Control: Easily manage employee accounts and assign role-based permissions for different models, knowledge bases, and features through a unified admin backend.

- Fully Private Deployment: Deploy the entire backend service on your on-premises servers or private cloud, ensuring your data remains 100% private and under your control to meet the strictest security and compliance standards.

- Reliable Backend Services: Provides stable API services and enterprise-grade data backup and recovery mechanisms to ensure business continuity.

| Feature | Community Edition | Enterprise Edition |

|---|---|---|

| Open Source | ✅ Yes | ⭕️ Partially released to customers |

| Cost | AGPL-3.0 License | Buyout / Subscription Fee |

| Admin Backend | — | ● Centralized Model Access ● Employee Management ● Shared Knowledge Base ● Access Control ● Data Backup |

| Server | — | ✅ Dedicated Private Deployment |

We believe the Enterprise Edition will become your team's AI productivity engine. If you are interested in Cherry Studio Enterprise Edition and would like to learn more, request a quote, or schedule a demo, please feel free to contact us.

- For Business Inquiries & Purchasing: 📧 [email protected]

-

new-api: The next-generation LLM gateway and AI asset management system supports multiple languages.

-

one-api: LLM API management and distribution system supporting mainstream models like OpenAI, Azure, and Anthropic. Features a unified API interface, suitable for key management and secondary distribution.

-

Poe: Poe gives you access to the best AI, all in one place. Explore GPT-5, Claude Opus 4.1, DeepSeek-R1, Veo 3, ElevenLabs, and millions of others.

-

ublacklist: Blocks specific sites from appearing in Google search results

The Cherry Studio Community Edition is governed by the standard GNU Affero General Public License v3.0 (AGPL-3.0), available at https://www.gnu.org/licenses/agpl-3.0.html.

Use of the Cherry Studio Community Edition for commercial purposes is permitted, subject to full compliance with the terms and conditions of the AGPL-3.0 license.

Should you require a commercial license that provides an exemption from the AGPL-3.0 requirements, please contact us at [email protected].

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for cherry-studio

Similar Open Source Tools

cherry-studio

Cherry Studio is a desktop client that supports multiple LLM providers on Windows, Mac, and Linux. It offers diverse LLM provider support, AI assistants & conversations, document & data processing, practical tools integration, and enhanced user experience. The tool includes features like support for major LLM cloud services, AI web service integration, local model support, pre-configured AI assistants, document processing for text, images, and more, global search functionality, topic management system, AI-powered translation, and cross-platform support with ready-to-use features and themes for a better user experience.

meeting-minutes

An open-source AI assistant for taking meeting notes that captures live meeting audio, transcribes it in real-time, and generates summaries while ensuring user privacy. Perfect for teams to focus on discussions while automatically capturing and organizing meeting content without external servers or complex infrastructure. Features include modern UI, real-time audio capture, speaker diarization, local processing for privacy, and more. The tool also offers a Rust-based implementation for better performance and native integration, with features like live transcription, speaker diarization, and a rich text editor for notes. Future plans include database connection for saving meeting minutes, improving summarization quality, and adding download options for meeting transcriptions and summaries. The backend supports multiple LLM providers through a unified interface, with configurations for Anthropic, Groq, and Ollama models. System architecture includes core components like audio capture service, transcription engine, LLM orchestrator, data services, and API layer. Prerequisites for setup include Node.js, Python, FFmpeg, and Rust. Development guidelines emphasize project structure, testing, documentation, type hints, and ESLint configuration. Contributions are welcome under the MIT License.

BMAD-METHOD

BMAD-METHOD™ is a universal AI agent framework that revolutionizes Agile AI-Driven Development. It offers specialized AI expertise across various domains, including software development, entertainment, creative writing, business strategy, and personal wellness. The framework introduces two key innovations: Agentic Planning, where dedicated agents collaborate to create detailed specifications, and Context-Engineered Development, which ensures complete understanding and guidance for developers. BMAD-METHOD™ simplifies the development process by eliminating planning inconsistency and context loss, providing a seamless workflow for creating AI agents and expanding functionality through expansion packs.

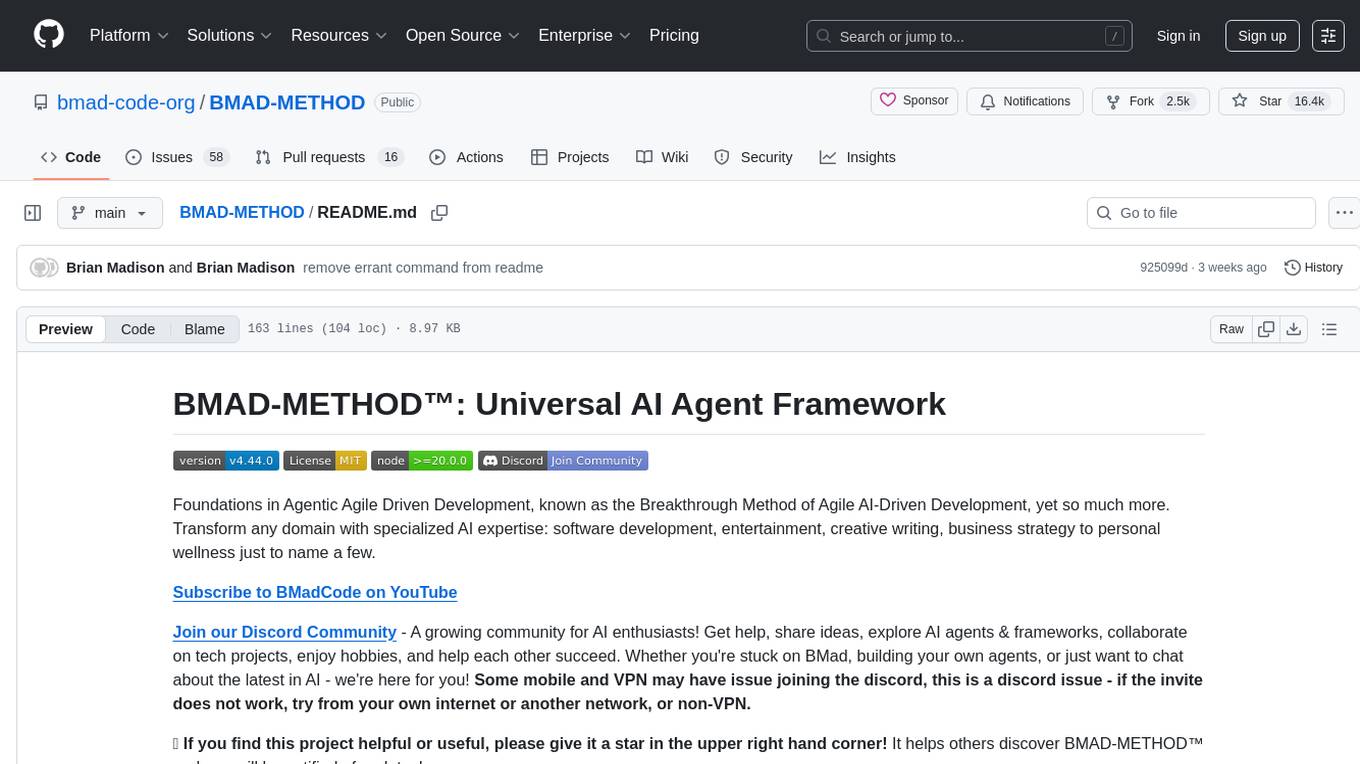

cherry-studio

Cherry Studio is a desktop client that supports multiple Large Language Model (LLM) providers, available on Windows, Mac, and Linux. It allows users to create multiple Assistants and topics, use multiple models to answer questions in the same conversation, and supports drag-and-drop sorting, code highlighting, and Mermaid chart. The tool is designed to enhance productivity and streamline the process of interacting with various language models.

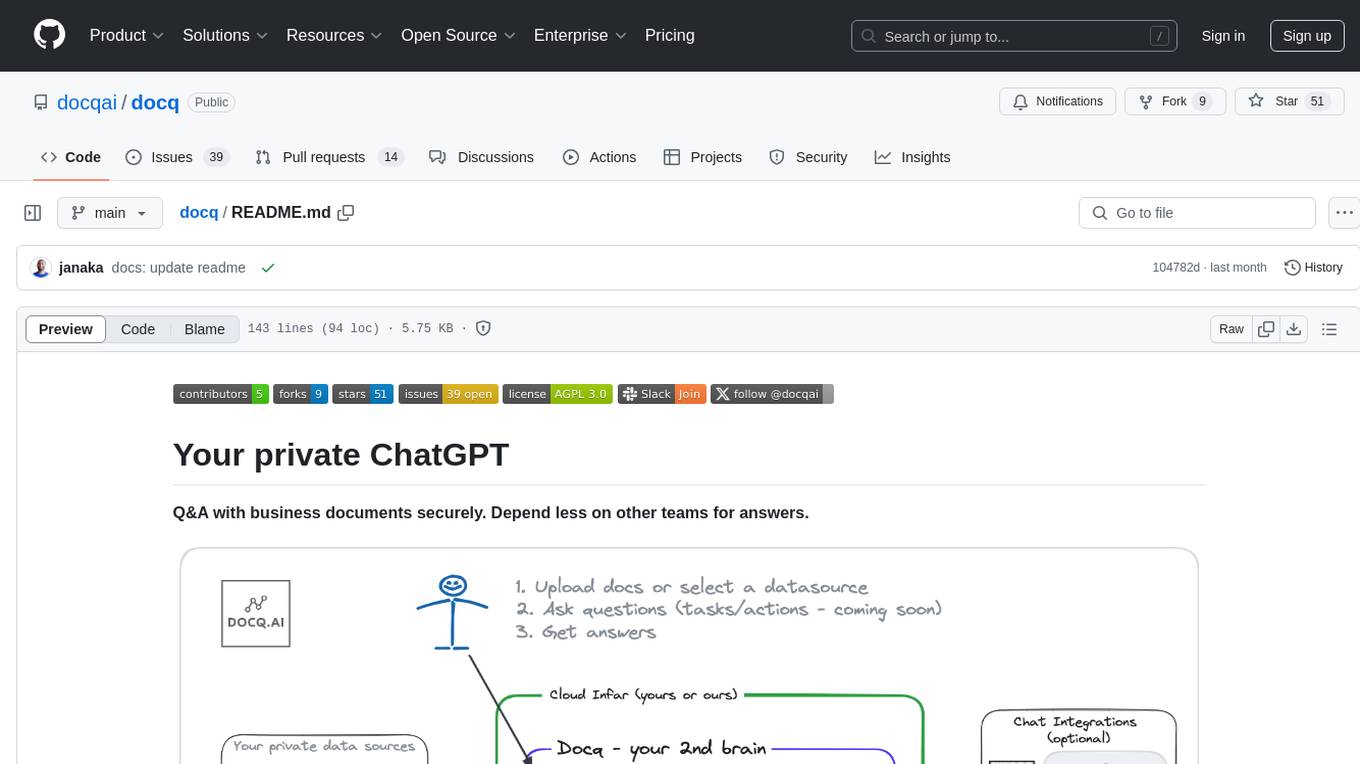

docq

Docq is a private and secure GenAI tool designed to extract knowledge from business documents, enabling users to find answers independently. It allows data to stay within organizational boundaries, supports self-hosting with various cloud vendors, and offers multi-model and multi-modal capabilities. Docq is extensible, open-source (AGPLv3), and provides commercial licensing options. The tool aims to be a turnkey solution for organizations to adopt AI innovation safely, with plans for future features like more data ingestion options and model fine-tuning.

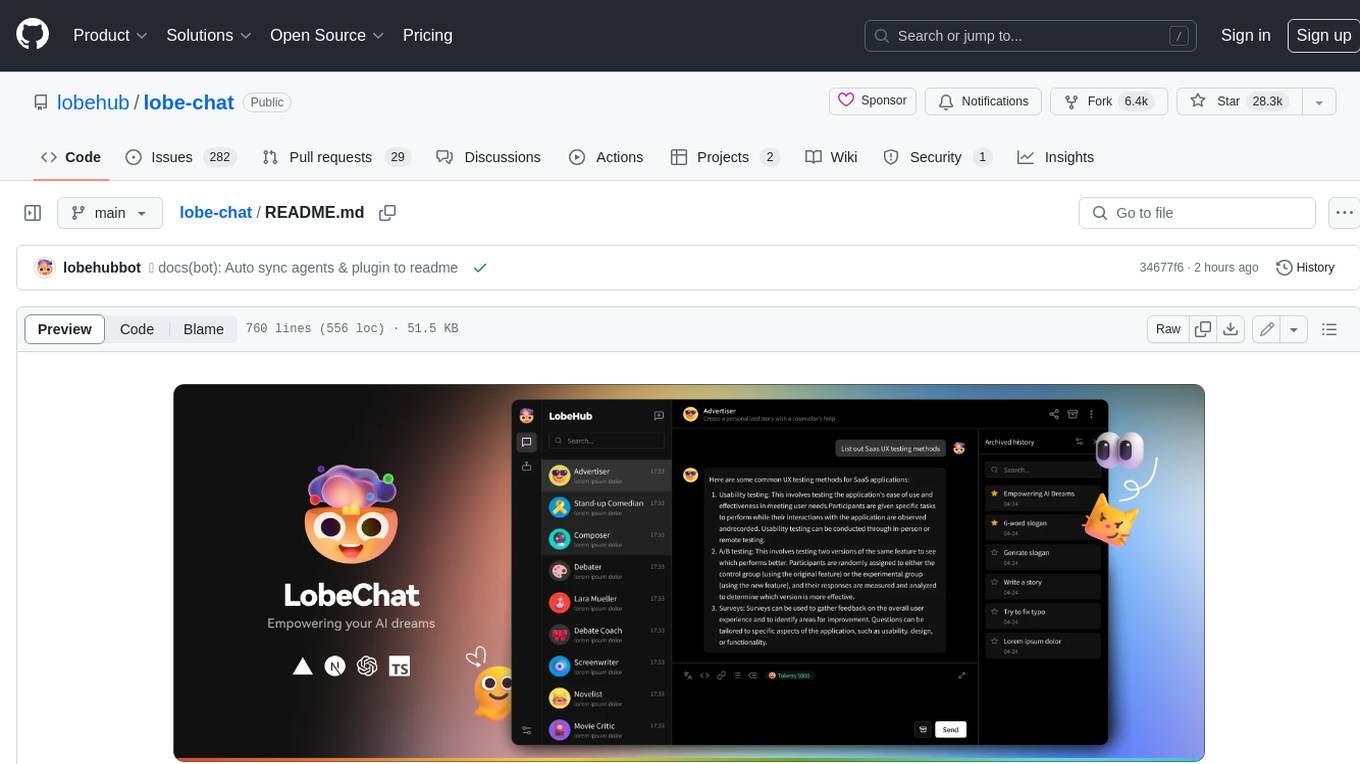

lobe-chat

Lobe Chat is an open-source, modern-design ChatGPT/LLMs UI/Framework. Supports speech-synthesis, multi-modal, and extensible ([function call][docs-functionc-call]) plugin system. One-click **FREE** deployment of your private OpenAI ChatGPT/Claude/Gemini/Groq/Ollama chat application.

memU

MemU is an open-source memory framework designed for AI companions, offering high accuracy, fast retrieval, and cost-effectiveness. It serves as an intelligent 'memory folder' that adapts to various AI companion scenarios. With MemU, users can create AI companions that remember them, learn their preferences, and evolve through interactions. The framework provides advanced retrieval strategies, 24/7 support, and is specialized for AI companions. MemU offers cloud, enterprise, and self-hosting options, with features like memory organization, interconnected knowledge graph, continuous self-improvement, and adaptive forgetting mechanism. It boasts high memory accuracy, fast retrieval, and low cost, making it suitable for building intelligent agents with persistent memory capabilities.

sealos

Sealos is a cloud operating system distribution based on the Kubernetes kernel, designed for a seamless development lifecycle. It allows users to spin up full-stack environments in seconds, effortlessly push releases, and scale production seamlessly. With core features like easy application management, quick database creation, and cloud universality, Sealos offers efficient and economical cloud management with high universality and ease of use. The platform also emphasizes agility and security through its multi-tenancy sharing model. Sealos is supported by a community offering full documentation, Discord support, and active development roadmap.

tuff

Tuff is a local-first, AI-native, and infinitely extensible desktop command center designed to enhance workflow efficiency. It offers a seamless integration of core utilities, AI-powered search, contextual intelligence, and extensibility through custom plugins. With a beautiful UI design, rich functionality, simple operations, and a focus on security and reliability, Tuff provides users with a cross-platform desktop software that is easy to use and offers a good user experience.

refact

This repository contains Refact WebUI for fine-tuning and self-hosting of code models, which can be used inside Refact plugins for code completion and chat. Users can fine-tune open-source code models, self-host them, download and upload Lloras, use models for code completion and chat inside Refact plugins, shard models, host multiple small models on one GPU, and connect GPT-models for chat using OpenAI and Anthropic keys. The repository provides a Docker container for running the self-hosted server and supports various models for completion, chat, and fine-tuning. Refact is free for individuals and small teams under the BSD-3-Clause license, with custom installation options available for GPU support. The community and support include contributing guidelines, GitHub issues for bugs, a community forum, Discord for chatting, and Twitter for product news and updates.

agentgateway

Agentgateway is an open source data plane optimized for agentic AI connectivity within or across any agent framework or environment. It provides drop-in security, observability, and governance for agent-to-agent and agent-to-tool communication, supporting leading interoperable protocols like Agent2Agent (A2A) and Model Context Protocol (MCP). Highly performant, security-first, multi-tenant, dynamic, and supporting legacy API transformation, agentgateway is designed to handle any scale and run anywhere with any agent framework.

chatbox

Chatbox is a desktop client for ChatGPT, Claude, and other LLMs, providing a user-friendly interface for AI copilot assistance on Windows, Mac, and Linux. It offers features like local data storage, multiple LLM provider support, image generation with Dall-E-3, enhanced prompting, keyboard shortcuts, and more. Users can collaborate, access the tool on various platforms, and enjoy multilingual support. Chatbox is constantly evolving with new features to enhance the user experience.

chatbox

Chatbox is a desktop client for ChatGPT, Claude, and other LLMs, providing features like local data storage, multiple LLM provider support, image generation, enhanced prompting, keyboard shortcuts, and more. It offers a user-friendly interface with dark theme, team collaboration, cross-platform availability, web version access, iOS & Android apps, multilingual support, and ongoing feature enhancements. Developed for prompt and API debugging, it has gained popularity for daily chatting and professional role-playing with AI assistance.

RWKV_APP

RWKV App is an experimental application that enables users to run Large Language Models (LLMs) offline on their edge devices. It offers a privacy-first, on-device LLM experience for everyday devices. Users can engage in multi-turn conversations, text-to-speech, visual understanding, and more, all without requiring an internet connection. The app supports switching between different models, running locally without internet, and exploring various AI tasks such as chat, speech generation, and visual understanding. It is built using Flutter and Dart FFI for cross-platform compatibility and efficient communication with the C++ inference engine. The roadmap includes integrating features into the RWKV Chat app, supporting more model weights, hardware, operating systems, and devices.

onyx

Onyx is an open-source Gen-AI and Enterprise Search tool that serves as an AI Assistant connected to company documents, apps, and people. It provides a chat interface, can be deployed anywhere, and offers features like user authentication, role management, chat persistence, and UI for configuring AI Assistants. Onyx acts as an Enterprise Search tool across various workplace platforms, enabling users to access team-specific knowledge and perform tasks like document search, AI answers for natural language queries, and integration with common workplace tools like Slack, Google Drive, Confluence, etc.

aibrix

AIBrix is an open-source initiative providing essential building blocks for scalable GenAI inference infrastructure. It delivers a cloud-native solution optimized for deploying, managing, and scaling large language model (LLM) inference, tailored to enterprise needs. Key features include High-Density LoRA Management, LLM Gateway and Routing, LLM App-Tailored Autoscaler, Unified AI Runtime, Distributed Inference, Distributed KV Cache, Cost-efficient Heterogeneous Serving, and GPU Hardware Failure Detection.

For similar tasks

cherry-studio

Cherry Studio is a desktop client that supports multiple LLM providers on Windows, Mac, and Linux. It offers diverse LLM provider support, AI assistants & conversations, document & data processing, practical tools integration, and enhanced user experience. The tool includes features like support for major LLM cloud services, AI web service integration, local model support, pre-configured AI assistants, document processing for text, images, and more, global search functionality, topic management system, AI-powered translation, and cross-platform support with ready-to-use features and themes for a better user experience.

LocalAGI

LocalAGI is a powerful, self-hostable AI Agent platform that allows you to design AI automations without writing code. It provides a complete drop-in replacement for OpenAI's Responses APIs with advanced agentic capabilities. With LocalAGI, you can create customizable AI assistants, automations, chat bots, and agents that run 100% locally, without the need for cloud services or API keys. The platform offers features like no-code agents, web-based interface, advanced agent teaming, connectors for various platforms, comprehensive REST API, short & long-term memory capabilities, planning & reasoning, periodic tasks scheduling, memory management, multimodal support, extensible custom actions, fully customizable models, observability, and more.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

kollektiv

Kollektiv is a Retrieval-Augmented Generation (RAG) system designed to enable users to chat with their favorite documentation easily. It aims to provide LLMs with access to the most up-to-date knowledge, reducing inaccuracies and improving productivity. The system utilizes intelligent web crawling, advanced document processing, vector search, multi-query expansion, smart re-ranking, AI-powered responses, and dynamic system prompts. The technical stack includes Python/FastAPI for backend, Supabase, ChromaDB, and Redis for storage, OpenAI and Anthropic Claude 3.5 Sonnet for AI/ML, and Chainlit for UI. Kollektiv is licensed under a modified version of the Apache License 2.0, allowing free use for non-commercial purposes.

OpenContracts

OpenContracts is an Apache-2 licensed enterprise document analytics tool that supports multiple formats, including PDF and txt-based formats. It features multiple document ingestion pipelines with a pluggable architecture for easy format and ingestion engine support. Users can create custom document analytics tools with beautiful result displays, support mass document data extraction with a LlamaIndex wrapper, and manage document collections, layout parsing, automatic vector embeddings, and human annotation. The tool also offers pluggable parsing pipelines, human annotation interface, LlamaIndex integration, data extraction capabilities, and custom data extract pipelines for bulk document querying.

simba

Simba is an open source, portable Knowledge Management System (KMS) designed to seamlessly integrate with any Retrieval-Augmented Generation (RAG) system. It features a modern UI and modular architecture, allowing developers to focus on building advanced AI solutions without the complexities of knowledge management. Simba offers a user-friendly interface to visualize and modify document chunks, supports various vector stores and embedding models, and simplifies knowledge management for developers. It is community-driven, extensible, and aims to enhance AI functionality by providing a seamless integration with RAG-based systems.

Kori

Kori is a unified note-taking app with AI capabilities, providing a consistent experience across Android, iOS, Windows, macOS, and Linux. It supports various formats like Drawing, Markdown, TXT, LaTeX, Mermaid diagrams, and Todo.txt lists. Users can benefit from AI co-writing features, note outline generation, find and replace, note templates, local media support, and export options. The app follows Material Design 3 guidelines, offers comprehensive mouse and keyboard support, and is optimized for different screen sizes and orientations.

docs-mcp-server

The docs-mcp-server repository contains the server-side code for the documentation management system. It provides functionalities for managing, storing, and retrieving documentation files. Users can upload, update, and delete documents through the server. The server also supports user authentication and authorization to ensure secure access to the documentation system. Additionally, the server includes APIs for integrating with other systems and tools, making it a versatile solution for managing documentation in various projects and organizations.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.