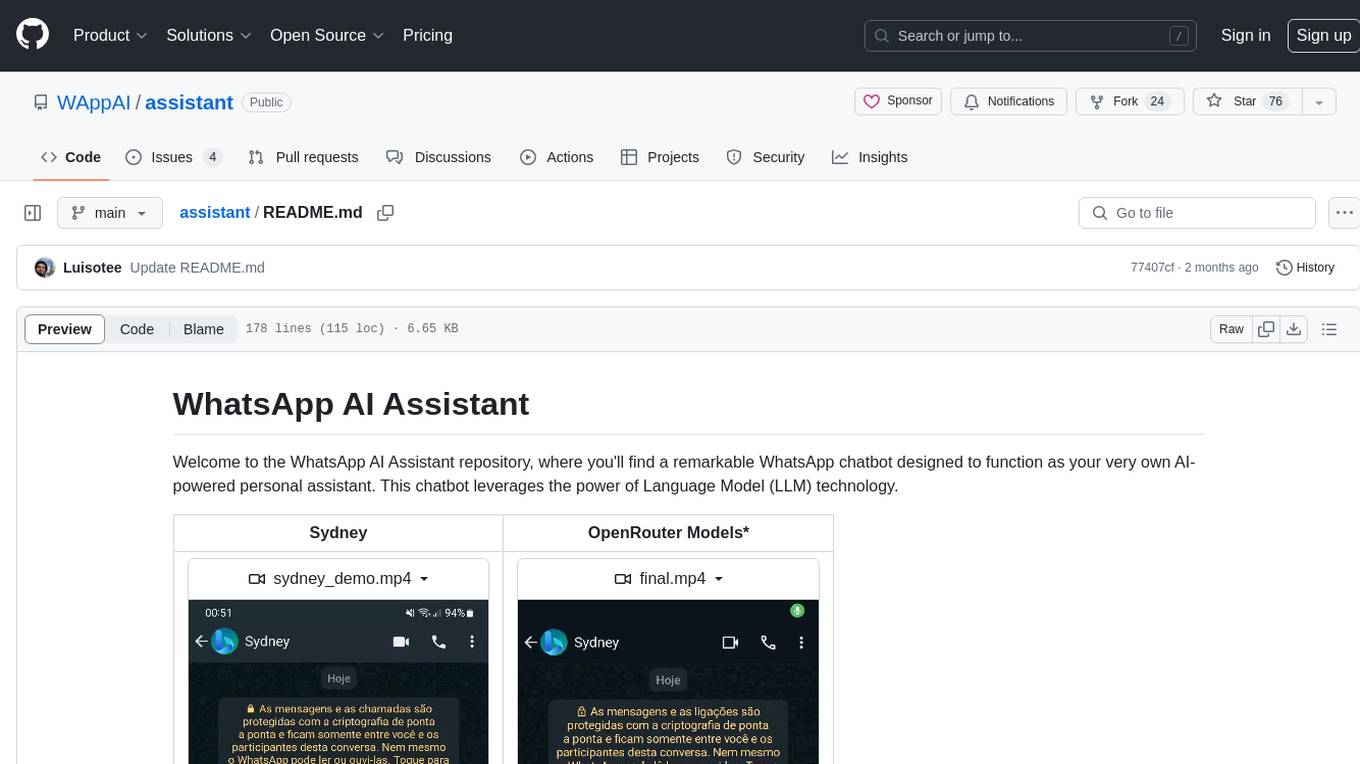

assistant

A WhatsApp chatbot that leverages Bing AI's and others LLMs conversational capabilities.

Stars: 99

The WhatsApp AI Assistant repository offers a chatbot named Sydney that serves as an AI-powered personal assistant. It utilizes Language Model (LLM) technology to provide various features such as Google/Bing searching, Google Calendar integration, communication capabilities, group chat compatibility, voice message support, basic text reminders, image recognition, and more. Users can interact with Sydney through natural language queries and voice messages. The chatbot can transcribe voice messages using either the Whisper API or a local method. Additionally, Sydney can be used in group chats by mentioning her username or replying to her last message. The repository welcomes contributions in the form of issue reports, pull requests, and requests for new tools. The creators of the project, Veigamann and Luisotee, are open to job opportunities and can be contacted through their GitHub profiles.

README:

Welcome to the WhatsApp AI Assistant repository, where you'll find a remarkable WhatsApp chatbot designed to function as your very own AI-powered personal assistant. This chatbot leverages the power of Language Model (LLM) technology.

| Sydney | LangChain |

|---|---|

| Feature | Sydney (BingAI Jailbreak) | LangChain |

|---|---|---|

| Google/Bing Searching | ✅ | ✅ |

| Google Calendar | ❌ | ✅ |

| Google Routes | ❌ | ✅ |

| Gmail | ❌ | ❌ |

| Communication Capability | ✅ | ✅ |

| Group Chat Compatibility | ✅ | ✅ |

| Voice Message Capability | ✅ | ✅ |

| Create Basic Text Reminders | ✅ | ✅ |

| Image Recognition | ✅ | ❌ |

| Image Generation | ❌ | ✅ |

| PDF Reading | ❌ | ❌ |

- Node.js >= 18.15.0

- A spare WhatsApp number

Sydney/BingChat

- Clone this repository

git clone https://github.com/WAppAI/assistant.git

- Install the dependencies

pnpm install

- Rename

.env.exampleto.env

cp .env.example .env

-

Login with your Bing account and edit

.env'sBING_COOKIESenvironment variable to the cookies string from bing.com. For detailed instructions here.NOTE: Occasionally, you might encounter an error stating,

User needs to solve CAPTCHA to continue.To resolve this issue, please solve the captcha [here]https://www.bing.com/turing/captcha/challenge, while logged in with the same account associated with your BING_COOKIES. -

Read and fill in the remaining information in the

.envfile. -

Run

pnpm build

- Start the bot

pnpm start

-

Connect your WhatsApp account to the bot by scanning the generated QR Code in the CLI.

-

Send a message to your WhatsApp account to start a conversation with Sydney!

LangChain

- Clone this repository

git clone https://github.com/WAppAI/assistant.git

- Install the dependencies

pnpm install

- Rename

.env.exampleto.env

cp .env.example .env

-

Read and fill in the remaining information in the

.envfile. -

Instructions on how to use langchain tools like Google Calendar and search will be in the

.env -

Run

pnpm build

- Start the bot

pnpm start

-

Connect your WhatsApp account to the bot by scanning the generated QR Code in the CLI.

-

Send a message to your WhatsApp account to start a conversation with the bot!

Deploying with Docker

- Clone this repository

git clone https://github.com/WAppAI/assistant.git

- Rename

.env.exampleto.env

cp .env.example .env

-

Read and fill in the remaining information in the

.envfile. -

Instructions on how to use langchain tools like Google Calendar and search will be in the

.env -

Build and start the Docker container

pnpm docker:build:start

- Access the container logs to read the QR code.

docker logs whatsapp-assistant

-

Scan the QR code with your WhatsApp account to connect the bot.

-

Send a message to your WhatsApp account to start a conversation with the bot!

The AI's are designed to respond to natural language queries from users. You can ask them questions, or just have a casual conversation.

When dealing with voice messages, you have 3 options for transcription: using groq's Whisper API for free (recommended), utilizing the Whisper API or the local method. Each option has its own considerations, including cost and performance.

Groq API:

-

Setup:

- Obtain a Groq API key from Groq Console.

- In the

.envfile, setTRANSCRIPTION_ENABLEDto"true"andTRANSCRIPTION_METHODto"whisper-groq".

Whisper API:

- Cost: Utilizing the Whisper API incurs a cost of US$0.06 per 10 minutes of audio.

-

Setup:

- Obtain an OpenAI API key and place it in the

.envfile under theOPENAI_API_KEYvariable. - In the

.envfile, setTRANSCRIPTION_ENABLEDto"true"andTRANSCRIPTION_METHODto"whisper-api".

- Obtain an OpenAI API key and place it in the

Local Mode:

- Cost: The local method is free but may be slower and less precise.

-

Setup:

- Download a model of your choice from here. Download any

.binfile and place it in the./whisper/modelsfolder. - Modify the

.envfile by changingTRANSCRIPTION_ENABLEDto"true",TRANSCRIPTION_METHODto"local", and"TRANSCRIPTION_MODEL"with the name of the model you downloaded. While setting a language inTRANSCRIPTION_LANGUAGEis not mandatory, it is recommended for better performance.

- Download a model of your choice from here. Download any

To utilize it in a group chat, you will need to either mention it by using her username with the "@" symbol (e.g., @Sydney) or reply directly to her last message.

-

!help: Displays a message listing all available commands. -

!helpfollowed by a specific command, e.g.,!help reset: Provides detailed information about the selected command. - If you wish to customize the command prefix, you can do so in the

.envfile to better suit your preferences.

Your contributions to Sydney are welcome in any form. Whether you'd like to:

-

Report Issues: If you come across bugs or have ideas for new features, please open an issue to discuss and track these items.

-

Submit Pull Requests (PRs): Feel free to contribute directly by opening pull requests. Your contributions are greatly appreciated and help improve Sydney.

-

If you want us to add a feature open an issue asking for it.

-

In the Projects tab, you'll find a Kanban board that outlines our current objectives and progress.

Your involvement is valued, and you're encouraged to contribute in the way that suits you best.

I, Luisotee am currently open to new job opportunities, including freelance work, contract roles, or permanent positions.

If you have any opportunities, feel free to contact me via the emails provided on my GitHub or LinkedIn profiles.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for assistant

Similar Open Source Tools

assistant

The WhatsApp AI Assistant repository offers a chatbot named Sydney that serves as an AI-powered personal assistant. It utilizes Language Model (LLM) technology to provide various features such as Google/Bing searching, Google Calendar integration, communication capabilities, group chat compatibility, voice message support, basic text reminders, image recognition, and more. Users can interact with Sydney through natural language queries and voice messages. The chatbot can transcribe voice messages using either the Whisper API or a local method. Additionally, Sydney can be used in group chats by mentioning her username or replying to her last message. The repository welcomes contributions in the form of issue reports, pull requests, and requests for new tools. The creators of the project, Veigamann and Luisotee, are open to job opportunities and can be contacted through their GitHub profiles.

garak

Garak is a free tool that checks if a Large Language Model (LLM) can be made to fail in a way that is undesirable. It probes for hallucination, data leakage, prompt injection, misinformation, toxicity generation, jailbreaks, and many other weaknesses. Garak's a free tool. We love developing it and are always interested in adding functionality to support applications.

garak

Garak is a vulnerability scanner designed for LLMs (Large Language Models) that checks for various weaknesses such as hallucination, data leakage, prompt injection, misinformation, toxicity generation, and jailbreaks. It combines static, dynamic, and adaptive probes to explore vulnerabilities in LLMs. Garak is a free tool developed for red-teaming and assessment purposes, focusing on making LLMs or dialog systems fail. It supports various LLM models and can be used to assess their security and robustness.

chatgpt-cli

ChatGPT CLI provides a powerful command-line interface for seamless interaction with ChatGPT models via OpenAI and Azure. It features streaming capabilities, extensive configuration options, and supports various modes like streaming, query, and interactive mode. Users can manage thread-based context, sliding window history, and provide custom context from any source. The CLI also offers model and thread listing, advanced configuration options, and supports GPT-4, GPT-3.5-turbo, and Perplexity's models. Installation is available via Homebrew or direct download, and users can configure settings through default values, a config.yaml file, or environment variables.

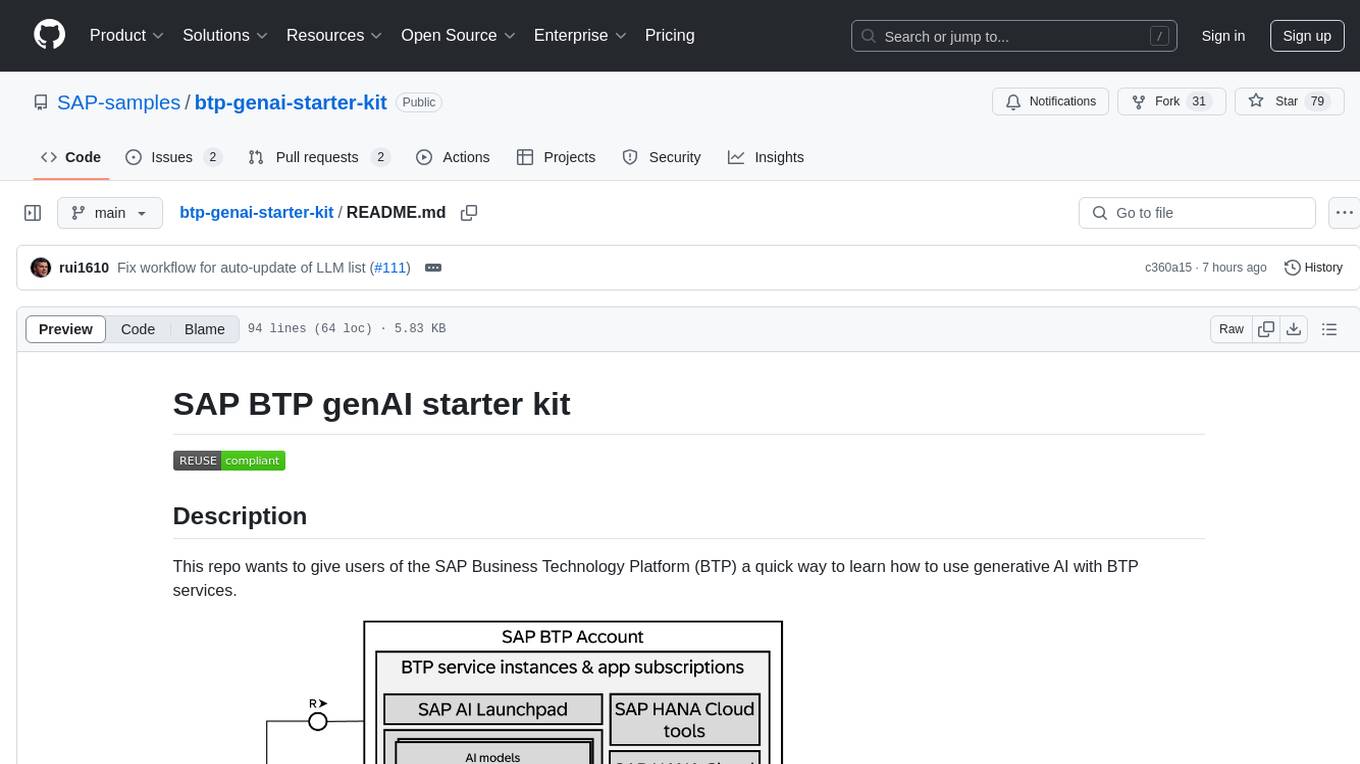

btp-genai-starter-kit

This repository provides a quick way for users of the SAP Business Technology Platform (BTP) to learn how to use generative AI with BTP services. It guides users through setting up the necessary infrastructure, deploying AI models, and running genAI experiments on SAP BTP. The repository includes scripts, examples, and instructions to help users get started with generative AI on the SAP BTP platform.

chatlab

ChatLab is a Python package that simplifies experimenting with OpenAI's chat models. It provides an interactive interface for chatting with the models and registering custom functions. Users can easily create chat experiments, visualize color palettes, work with function registry, create knowledge graphs, and perform direct parallel function calling. The tool enables users to interact with chat models and customize functionalities for various tasks.

SirChatalot

A Telegram bot that proves you don't need a body to have a personality. It can use various text and image generation APIs to generate responses to user messages. For text generation, the bot can use: * OpenAI's ChatGPT API (or other compatible API). Vision capabilities can be used with GPT-4 models. Function calling can be used with Function calling. * Anthropic's Claude API. Vision capabilities can be used with Claude 3 models. Function calling can be used with tool use. * YandexGPT API Bot can also generate images with: * OpenAI's DALL-E * Stability AI * Yandex ART This bot can also be used to generate responses to voice messages. Bot will convert the voice message to text and will then generate a response. Speech recognition can be done using the OpenAI's Whisper model. To use this feature, you need to install the ffmpeg library. This bot is also support working with files, see Files section for more details. If function calling is enabled, bot can generate images and search the web (limited).

vectorflow

VectorFlow is an open source, high throughput, fault tolerant vector embedding pipeline. It provides a simple API endpoint for ingesting large volumes of raw data, processing, and storing or returning the vectors quickly and reliably. The tool supports text-based files like TXT, PDF, HTML, and DOCX, and can be run locally with Kubernetes in production. VectorFlow offers functionalities like embedding documents, running chunking schemas, custom chunking, and integrating with vector databases like Pinecone, Qdrant, and Weaviate. It enforces a standardized schema for uploading data to a vector store and supports features like raw embeddings webhook, chunk validation webhook, S3 endpoint, and telemetry. The tool can be used with the Python client and provides detailed instructions for running and testing the functionalities.

chat-ui

A chat interface using open source models, eg OpenAssistant or Llama. It is a SvelteKit app and it powers the HuggingChat app on hf.co/chat.

WindowsAgentArena

Windows Agent Arena (WAA) is a scalable Windows AI agent platform designed for testing and benchmarking multi-modal, desktop AI agents. It provides researchers and developers with a reproducible and realistic Windows OS environment for AI research, enabling testing of agentic AI workflows across various tasks. WAA supports deploying agents at scale using Azure ML cloud infrastructure, allowing parallel running of multiple agents and delivering quick benchmark results for hundreds of tasks in minutes.

llm2sh

llm2sh is a command-line utility that leverages Large Language Models (LLMs) to translate plain-language requests into shell commands. It provides a convenient way to interact with your system using natural language. The tool supports multiple LLMs for command generation, offers a customizable configuration file, YOLO mode for running commands without confirmation, and is easily extensible with new LLMs and system prompts. Users can set up API keys for OpenAI, Claude, Groq, and Cerebras to use the tool effectively. llm2sh does not store user data or command history, and it does not record or send telemetry by itself, but the LLM APIs may collect and store requests and responses for their purposes.

bolna

Bolna is an open-source platform for building voice-driven conversational applications using large language models (LLMs). It provides a comprehensive set of tools and integrations to handle various aspects of voice-based interactions, including telephony, transcription, LLM-based conversation handling, and text-to-speech synthesis. Bolna simplifies the process of creating voice agents that can perform tasks such as initiating phone calls, transcribing conversations, generating LLM-powered responses, and synthesizing speech. It supports multiple providers for each component, allowing users to customize their setup based on their specific needs. Bolna is designed to be easy to use, with a straightforward local setup process and well-documented APIs. It is also extensible, enabling users to integrate with other telephony providers or add custom functionality.

smartcat

Smartcat is a CLI interface that brings language models into the Unix ecosystem, allowing power users to leverage the capabilities of LLMs in their daily workflows. It features a minimalist design, seamless integration with terminal and editor workflows, and customizable prompts for specific tasks. Smartcat currently supports OpenAI, Mistral AI, and Anthropic APIs, providing access to a range of language models. With its ability to manipulate file and text streams, integrate with editors, and offer configurable settings, Smartcat empowers users to automate tasks, enhance code quality, and explore creative possibilities.

llm-foundry

LLM Foundry is a codebase for training, finetuning, evaluating, and deploying LLMs for inference with Composer and the MosaicML platform. It is designed to be easy-to-use, efficient _and_ flexible, enabling rapid experimentation with the latest techniques. You'll find in this repo: * `llmfoundry/` - source code for models, datasets, callbacks, utilities, etc. * `scripts/` - scripts to run LLM workloads * `data_prep/` - convert text data from original sources to StreamingDataset format * `train/` - train or finetune HuggingFace and MPT models from 125M - 70B parameters * `train/benchmarking` - profile training throughput and MFU * `inference/` - convert models to HuggingFace or ONNX format, and generate responses * `inference/benchmarking` - profile inference latency and throughput * `eval/` - evaluate LLMs on academic (or custom) in-context-learning tasks * `mcli/` - launch any of these workloads using MCLI and the MosaicML platform * `TUTORIAL.md` - a deeper dive into the repo, example workflows, and FAQs

litlyx

Litlyx is a single-line code analytics solution that integrates with every JavaScript/TypeScript framework. It allows you to track 10+ KPIs and custom events for your website or web app. The tool comes with an AI Data Analyst Assistant that can analyze your data, compare data, query metadata, visualize charts, and more. Litlyx is open-source, allowing users to self-host it and create their own version of the dashboard. The tool is user-friendly and supports various JavaScript/TypeScript frameworks, making it versatile for different projects.

termax

Termax is an LLM agent in your terminal that converts natural language to commands. It is featured by: - Personalized Experience: Optimize the command generation with RAG. - Various LLMs Support: OpenAI GPT, Anthropic Claude, Google Gemini, Mistral AI, and more. - Shell Extensions: Plugin with popular shells like `zsh`, `bash` and `fish`. - Cross Platform: Able to run on Windows, macOS, and Linux.

For similar tasks

assistant

The WhatsApp AI Assistant repository offers a chatbot named Sydney that serves as an AI-powered personal assistant. It utilizes Language Model (LLM) technology to provide various features such as Google/Bing searching, Google Calendar integration, communication capabilities, group chat compatibility, voice message support, basic text reminders, image recognition, and more. Users can interact with Sydney through natural language queries and voice messages. The chatbot can transcribe voice messages using either the Whisper API or a local method. Additionally, Sydney can be used in group chats by mentioning her username or replying to her last message. The repository welcomes contributions in the form of issue reports, pull requests, and requests for new tools. The creators of the project, Veigamann and Luisotee, are open to job opportunities and can be contacted through their GitHub profiles.

lettabot

LettaBot is a personal AI assistant that operates across multiple messaging platforms including Telegram, Slack, Discord, WhatsApp, and Signal. It offers features like unified memory, persistent memory, local tool execution, voice message transcription, scheduling, and real-time message updates. Users can interact with LettaBot through various commands and setup wizards. The tool can be used for controlling smart home devices, managing background tasks, connecting to Letta Code, and executing specific operations like file exploration and internet queries. LettaBot ensures security through outbound connections only, restricted tool execution, and access control policies. Development and releases are automated, and troubleshooting guides are provided for common issues.

ChatChat

Chat Chat is a unified chat and search to AI platform with a simple and easy-to-use interface. It supports major AI providers such as Anthropic, OpenAI, Cohere, and Google Gemini, and is easy to self-host. Chat Chat can be used for a variety of tasks, including searching for information, getting help with writing, and translating languages.

databerry

Chaindesk is a no-code platform that allows users to easily set up a semantic search system for personal data without technical knowledge. It supports loading data from various sources such as raw text, web pages, files (Word, Excel, PowerPoint, PDF, Markdown, Plain Text), and upcoming support for web sites, Notion, and Airtable. The platform offers a user-friendly interface for managing datastores, querying data via a secure API endpoint, and auto-generating ChatGPT Plugins for each datastore. Chaindesk utilizes a Vector Database (Qdrant), Openai's text-embedding-ada-002 for embeddings, and has a chunk size of 1024 tokens. The technology stack includes Next.js, Joy UI, LangchainJS, PostgreSQL, Prisma, and Qdrant, inspired by the ChatGPT Retrieval Plugin.

metaso-free-api

Metaso AI Free service supports high-speed streaming output, secret tower AI super network search (full network or academic as well as concise, in-depth, research three modes), zero-configuration deployment, multi-token support. Fully compatible with ChatGPT interface. It also has seven other free APIs available for use. The tool provides various deployment options such as Docker, Docker-compose, Render, Vercel, and native deployment. Users can access the tool for chat completions and token live checks. Note: Reverse API is unstable, it is recommended to use the official Metaso AI website to avoid the risk of banning. This project is for research and learning purposes only, not for commercial use.

AI0x0.com

AI 0x0 is a versatile AI query generation desktop floating assistant application that supports MacOS and Windows. It allows users to utilize AI capabilities in any desktop software to query and generate text, images, audio, and video data, helping them work more efficiently. The application features a dynamic desktop floating ball, floating dialogue bubbles, customizable presets, conversation bookmarking, preset packages, network acceleration, query mode, input mode, mouse navigation, deep customization of ChatGPT Next Web, support for full-format libraries, online search, voice broadcasting, voice recognition, voice assistant, application plugins, multi-model support, online text and image generation, image recognition, frosted glass interface, light and dark theme adaptation for each language model, and free access to all language models except Chat0x0 with a key.

discourse-chatbot

The discourse-chatbot is an original AI chatbot for Discourse forums that allows users to converse with the bot in posts or chat channels. Users can customize the character of the bot, enable RAG mode for expert answers, search Wikipedia, news, and Google, provide market data, perform accurate math calculations, and experiment with vision support. The bot uses cutting-edge Open AI API and supports Azure and proxy server connections. It includes a quota system for access management and can be used in RAG mode or basic bot mode. The setup involves creating embeddings to make the bot aware of forum content and setting up bot access permissions based on trust levels. Users must obtain an API token from Open AI and configure group quotas to interact with the bot. The plugin is extensible to support other cloud bots and content search beyond the provided set.

ChatGPT-Telegram-Bot

The ChatGPT Telegram Bot is a powerful Telegram bot that utilizes various GPT models, including GPT3.5, GPT4, GPT4 Turbo, GPT4 Vision, DALL·E 3, Groq Mixtral-8x7b/LLaMA2-70b, and Claude2.1/Claude3 opus/sonnet API. It enables users to engage in efficient conversations and information searches on Telegram. The bot supports multiple AI models, online search with DuckDuckGo and Google, user-friendly interface, efficient message processing, document interaction, Markdown rendering, and convenient deployment options like Zeabur, Replit, and Docker. Users can set environment variables for configuration and deployment. The bot also provides Q&A functionality, supports model switching, and can be deployed in group chats with whitelisting. The project is open source under GPLv3 license.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.