instructor-go

structured outputs for llms

Stars: 167

Instructor Go is a library that simplifies working with structured outputs from large language models (LLMs). Built on top of `invopop/jsonschema` and utilizing `jsonschema` Go struct tags, it provides a user-friendly API for managing validation, retries, and streaming responses without changing code logic. The library supports LLM provider APIs such as OpenAI, Anthropic, Cohere, and Google, capturing and returning usage data in responses. Users can easily add metadata to struct fields using `jsonschema` tags to enhance model awareness and streamline workflows.

README:

Instructor Go is a library that makes it a breeze to work with structured outputs from large language models (LLMs).

Built on top of invopop/jsonschema and utilizing jsonschema Go struct tags (so no changing code logic), it provides a simple and user-friendly API to manage validation, retries, and streaming responses. Get ready to supercharge your LLM workflows!

Install the package into your code with:

go get "github.com/instructor-ai/instructor-go/pkg/instructor"Import in your code:

import (

"github.com/instructor-ai/instructor-go/pkg/instructor"

)As shown in the example below, by adding extra metadata to each struct field (via jsonschema tag) we want the model to be made aware of:

For more information on the

jsonschematags available, see thejsonschemagodoc.

Running

export OPENAI_API_KEY=<Your OpenAI API Key>

go run examples/user/main.gopackage main

import (

"context"

"fmt"

"os"

"github.com/instructor-ai/instructor-go/pkg/instructor"

openai "github.com/sashabaranov/go-openai"

)

type Person struct {

Name string `json:"name" jsonschema:"title=the name,description=The name of the person,example=joe,example=lucy"`

Age int `json:"age,omitempty" jsonschema:"title=the age,description=The age of the person,example=25,example=67"`

}

func main() {

ctx := context.Background()

client := instructor.FromOpenAI(

openai.NewClient(os.Getenv("OPENAI_API_KEY")),

instructor.WithMode(instructor.ModeJSON),

instructor.WithMaxRetries(3),

)

var person Person

resp, err := client.CreateChatCompletion(

ctx,

openai.ChatCompletionRequest{

Model: openai.GPT4o,

Messages: []openai.ChatCompletionMessage{

{

Role: openai.ChatMessageRoleUser,

Content: "Extract Robby is 22 years old.",

},

},

},

&person,

)

_ = resp // sends back original response so no information loss from original API

if err != nil {

panic(err)

}

fmt.Printf(`

Name: %s

Age: %d

`, person.Name, person.Age)

/*

Name: Robby

Age: 22

*/

}See all examples here examples/README.md

Instructor Go supports the following LLM provider APIs:

These provider APIs include usage data (input and output token counts) in their responses, which Instructor Go captures and returns in the response object.

Usage is summed for retries. If multiple requests are needed to get a valid response, the usage from all requests is summed and returned. Even if Instructor fails to get a valid response after the maximum number of retries, the usage sum from all attempts is still returned.

Usage counting with OpenAI

resp, err := client.CreateChatCompletion(

ctx,

openai.ChatCompletionRequest{

Model: openai.GPT4o,

Messages: []openai.ChatCompletionMessage{

{

Role: openai.ChatMessageRoleUser,

Content: "Extract Robby is 22 years old.",

},

},

},

&person,

)

fmt.Printf("Input tokens: %d\n", resp.Usage.PromptTokens)

fmt.Printf("Output tokens: %d\n", resp.Usage.CompletionTokens)

fmt.Printf("Total tokens: %d\n", resp.Usage.TotalTokens)Usage counting with Anthropic

resp, err := client.CreateMessages(

ctx,

anthropic.MessagesRequest{

Model: anthropic.ModelClaude3Haiku20240307,

Messages: []anthropic.Message{

anthropic.NewUserTextMessage("Classify the following support ticket: My account is locked and I can't access my billing info."),

},

MaxTokens: 500,

},

&prediction,

)

fmt.Printf("Input tokens: %d\n", resp.Usage.InputTokens)

fmt.Printf("Output tokens: %d\n", resp.Usage.OutputTokens)Usage counting with Cohere

resp, err := client.Chat(

ctx,

&cohere.ChatRequest{

Model: "command-r-plus",

Message: "Tell me about the history of artificial intelligence up to year 2000",

MaxTokens: 2500,

},

&historicalFact,

)

fmt.Printf("Input tokens: %d\n", int(*resp.Meta.Tokens.InputTokens))

fmt.Printf("Output tokens: %d\n", int(*resp.Meta.Tokens.OutputTokens))For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for instructor-go

Similar Open Source Tools

instructor-go

Instructor Go is a library that simplifies working with structured outputs from large language models (LLMs). Built on top of `invopop/jsonschema` and utilizing `jsonschema` Go struct tags, it provides a user-friendly API for managing validation, retries, and streaming responses without changing code logic. The library supports LLM provider APIs such as OpenAI, Anthropic, Cohere, and Google, capturing and returning usage data in responses. Users can easily add metadata to struct fields using `jsonschema` tags to enhance model awareness and streamline workflows.

LlmTornado

LLM Tornado is a .NET library designed to simplify the consumption of various large language models (LLMs) from providers like OpenAI, Anthropic, Cohere, Google, Azure, Groq, and self-hosted APIs. It acts as an aggregator, allowing users to easily switch between different LLM providers with just a change in argument. Users can perform tasks such as chatting with documents, voice calling with AI, orchestrating assistants, generating images, and more. The library exposes capabilities through vendor extensions, making it easy to integrate and use multiple LLM providers simultaneously.

bellman

Bellman is a unified interface to interact with language and embedding models, supporting various vendors like VertexAI/Gemini, OpenAI, Anthropic, VoyageAI, and Ollama. It consists of a library for direct interaction with models and a service 'bellmand' for proxying requests with one API key. Bellman simplifies switching between models, vendors, and common tasks like chat, structured data, tools, and binary input. It addresses the lack of official SDKs for major players and differences in APIs, providing a single proxy for handling different models. The library offers clients for different vendors implementing common interfaces for generating and embedding text, enabling easy interchangeability between models.

LightRAG

LightRAG is a PyTorch library designed for building and optimizing Retriever-Agent-Generator (RAG) pipelines. It follows principles of simplicity, quality, and optimization, offering developers maximum customizability with minimal abstraction. The library includes components for model interaction, output parsing, and structured data generation. LightRAG facilitates tasks like providing explanations and examples for concepts through a question-answering pipeline.

gollm

gollm is a Go package designed to simplify interactions with Large Language Models (LLMs) for AI engineers and developers. It offers a unified API for multiple LLM providers, easy provider and model switching, flexible configuration options, advanced prompt engineering, prompt optimization, memory retention, structured output and validation, provider comparison tools, high-level AI functions, robust error handling and retries, and extensible architecture. The package enables users to create AI-powered golems for tasks like content creation workflows, complex reasoning tasks, structured data generation, model performance analysis, prompt optimization, and creating a mixture of agents.

whetstone.chatgpt

Whetstone.ChatGPT is a simple light-weight library that wraps the Open AI API with support for dependency injection. It supports features like GPT 4, GPT 3.5 Turbo, chat completions, audio transcription and translation, vision completions, files, fine tunes, images, embeddings, moderations, and response streaming. The library provides a video walkthrough of a Blazor web app built on it and includes examples such as a command line bot. It offers quickstarts for dependency injection, chat completions, completions, file handling, fine tuning, image generation, and audio transcription.

go-utcp

The Universal Tool Calling Protocol (UTCP) is a modern, flexible, and scalable standard for defining and interacting with tools across various communication protocols. It emphasizes scalability, interoperability, and ease of use. It provides built-in transports for HTTP, CLI, Server-Sent Events, streaming HTTP, GraphQL, MCP, and UDP. Users can use the library to construct a client and call tools using the available transports. The library also includes utilities for variable substitution, in-memory repository for storing providers and tools, and OpenAPI conversion to UTCP manuals.

UniChat

UniChat is a pipeline tool for creating online and offline chat-bots in Unity. It leverages Unity.Sentis and text vector embedding technology to enable offline mode text content search based on vector databases. The tool includes a chain toolkit for embedding LLM and Agent in games, along with middleware components for Text to Speech, Speech to Text, and Sub-classifier functionalities. UniChat also offers a tool for invoking tools based on ReActAgent workflow, allowing users to create personalized chat scenarios and character cards. The tool provides a comprehensive solution for designing flexible conversations in games while maintaining developer's ideas.

modelfusion

ModelFusion is an abstraction layer for integrating AI models into JavaScript and TypeScript applications, unifying the API for common operations such as text streaming, object generation, and tool usage. It provides features to support production environments, including observability hooks, logging, and automatic retries. You can use ModelFusion to build AI applications, chatbots, and agents. ModelFusion is a non-commercial open source project that is community-driven. You can use it with any supported provider. ModelFusion supports a wide range of models including text generation, image generation, vision, text-to-speech, speech-to-text, and embedding models. ModelFusion infers TypeScript types wherever possible and validates model responses. ModelFusion provides an observer framework and logging support. ModelFusion ensures seamless operation through automatic retries, throttling, and error handling mechanisms. ModelFusion is fully tree-shakeable, can be used in serverless environments, and only uses a minimal set of dependencies.

uzu-swift

Swift package for uzu, a high-performance inference engine for AI models on Apple Silicon. Deploy AI directly in your app with zero latency, full data privacy, and no inference costs. Key features include a simple, high-level API, specialized configurations for performance boosts, broad model support, and an observable model manager. Easily set up projects, obtain an API key, choose a model, and run it with corresponding identifiers. Examples include chat, speedup with speculative decoding, chat with dynamic context, chat with static context, summarization, classification, cloud, and structured output. Troubleshooting available via Discord or email. Licensed under MIT.

azure-functions-openai-extension

Azure Functions OpenAI Extension is a project that adds support for OpenAI LLM (GPT-3.5-turbo, GPT-4) bindings in Azure Functions. It provides NuGet packages for various functionalities like text completions, chat completions, assistants, embeddings generators, and semantic search. The project requires .NET 6 SDK or greater, Azure Functions Core Tools v4.x, and specific settings in Azure Function or local settings for development. It offers features like text completions, chat completion, assistants with custom skills, embeddings generators for text relatedness, and semantic search using vector databases. The project also includes examples in C# and Python for different functionalities.

instructor-js

Instructor is a Typescript library for structured extraction in Typescript, powered by llms, designed for simplicity, transparency, and control. It stands out for its simplicity, transparency, and user-centric design. Whether you're a seasoned developer or just starting out, you'll find Instructor's approach intuitive and steerable.

instructor

Instructor is a tool that provides structured outputs from Large Language Models (LLMs) in a reliable manner. It simplifies the process of extracting structured data by utilizing Pydantic for validation, type safety, and IDE support. With Instructor, users can define models and easily obtain structured data without the need for complex JSON parsing, error handling, or retries. The tool supports automatic retries, streaming support, and extraction of nested objects, making it production-ready for various AI applications. Trusted by a large community of developers and companies, Instructor is used by teams at OpenAI, Google, Microsoft, AWS, and YC startups.

ChatRex

ChatRex is a Multimodal Large Language Model (MLLM) designed to seamlessly integrate fine-grained object perception and robust language understanding. By adopting a decoupled architecture with a retrieval-based approach for object detection and leveraging high-resolution visual inputs, ChatRex addresses key challenges in perception tasks. It is powered by the Rexverse-2M dataset with diverse image-region-text annotations. ChatRex can be applied to various scenarios requiring fine-grained perception, such as object detection, grounded conversation, grounded image captioning, and region understanding.

agentic-rag-for-dummies

Agentic RAG for Dummies is a production-ready system that demonstrates how to build an Agentic RAG (Retrieval-Augmented Generation) system using LangGraph with minimal code. It bridges the gap between basic RAG tutorials and production readiness by providing learning materials and deployable code. The system includes features like conversation memory, hierarchical indexing, query clarification, agent orchestration, multi-agent map-reduce, self-correction, and context compression. Users can interact with the system through an interactive notebook for learning or a modular project for production-ready architecture.

generative-ai

The 'Generative AI' repository provides a C# library for interacting with Google's Generative AI models, specifically the Gemini models. It allows users to access and integrate the Gemini API into .NET applications, supporting functionalities such as listing available models, generating content, creating tuned models, working with large files, starting chat sessions, and more. The repository also includes helper classes and enums for Gemini API aspects. Authentication methods include API key, OAuth, and various authentication modes for Google AI and Vertex AI. The package offers features for both Google AI Studio and Google Cloud Vertex AI, with detailed instructions on installation, usage, and troubleshooting.

For similar tasks

instructor-go

Instructor Go is a library that simplifies working with structured outputs from large language models (LLMs). Built on top of `invopop/jsonschema` and utilizing `jsonschema` Go struct tags, it provides a user-friendly API for managing validation, retries, and streaming responses without changing code logic. The library supports LLM provider APIs such as OpenAI, Anthropic, Cohere, and Google, capturing and returning usage data in responses. Users can easily add metadata to struct fields using `jsonschema` tags to enhance model awareness and streamline workflows.

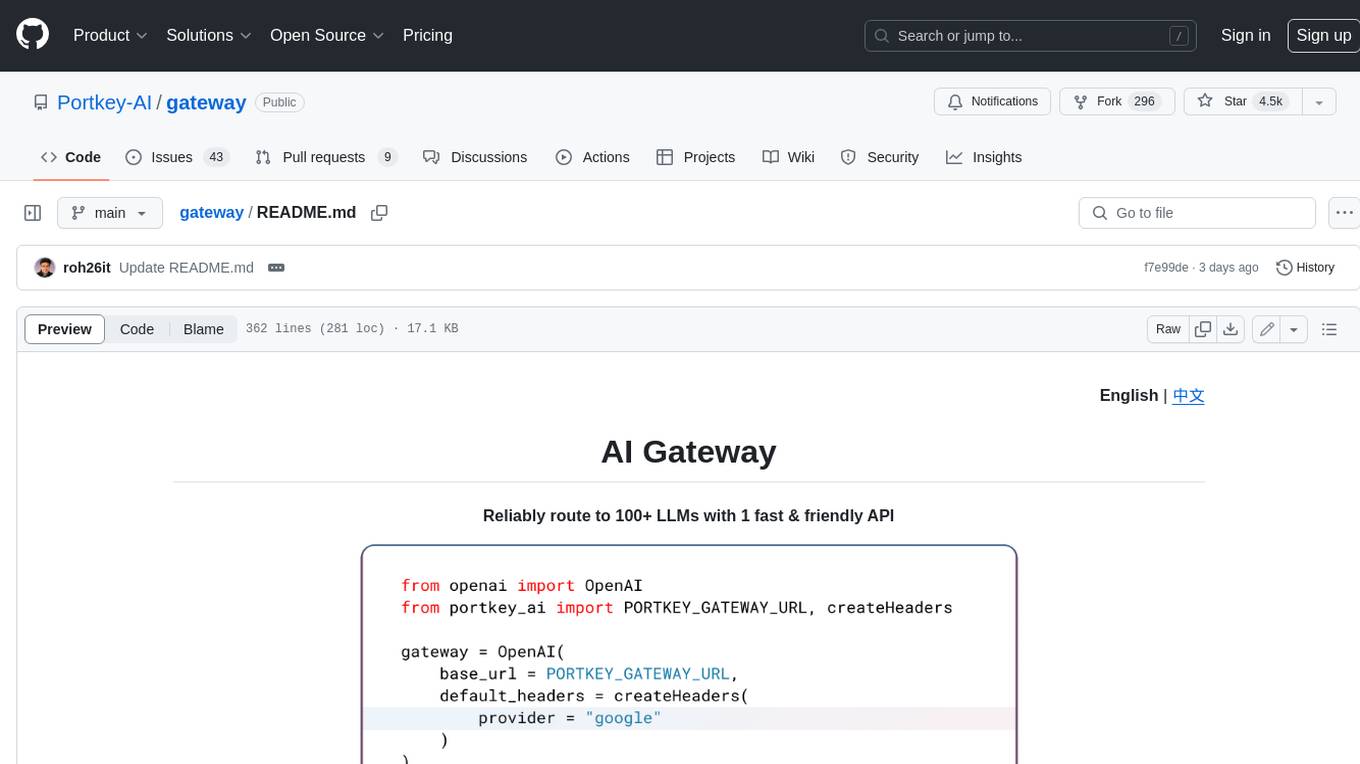

gateway

Gateway is a tool that streamlines requests to 100+ open & closed source models with a unified API. It is production-ready with support for caching, fallbacks, retries, timeouts, load balancing, and can be edge-deployed for minimum latency. It is blazing fast with a tiny footprint, supports load balancing across multiple models, providers, and keys, ensures app resilience with fallbacks, offers automatic retries with exponential fallbacks, allows configurable request timeouts, supports multimodal routing, and can be extended with plug-in middleware. It is battle-tested over 300B tokens and enterprise-ready for enhanced security, scale, and custom deployments.

gemini-ai

Gemini AI is a Ruby Gem designed to provide low-level access to Google's generative AI services through Vertex AI, Generative Language API, or AI Studio. It allows users to interact with Gemini to build abstractions on top of it. The Gem provides functionalities for tasks such as generating content, embeddings, predictions, and more. It supports streaming capabilities, server-sent events, safety settings, system instructions, JSON format responses, and tools (functions) calling. The Gem also includes error handling, development setup, publishing to RubyGems, updating the README, and references to resources for further learning.

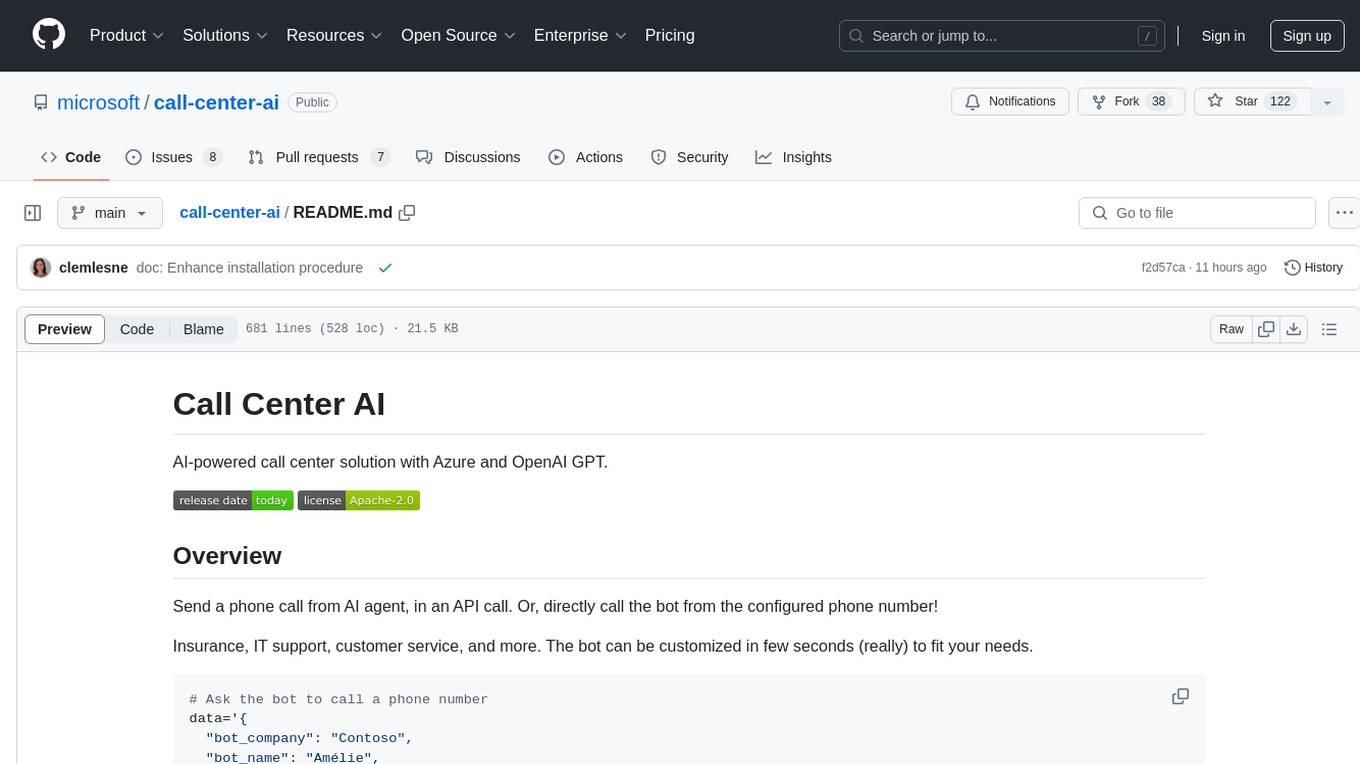

call-center-ai

Call Center AI is an AI-powered call center solution leveraging Azure and OpenAI GPT. It allows for AI agent-initiated phone calls or direct calls to the bot from a configured phone number. The bot is customizable for various industries like insurance, IT support, and customer service, with features such as accessing claim information, conversation history, language change, SMS sending, and more. The project is a proof of concept showcasing the integration of Azure Communication Services, Azure Cognitive Services, and Azure OpenAI for an automated call center solution.

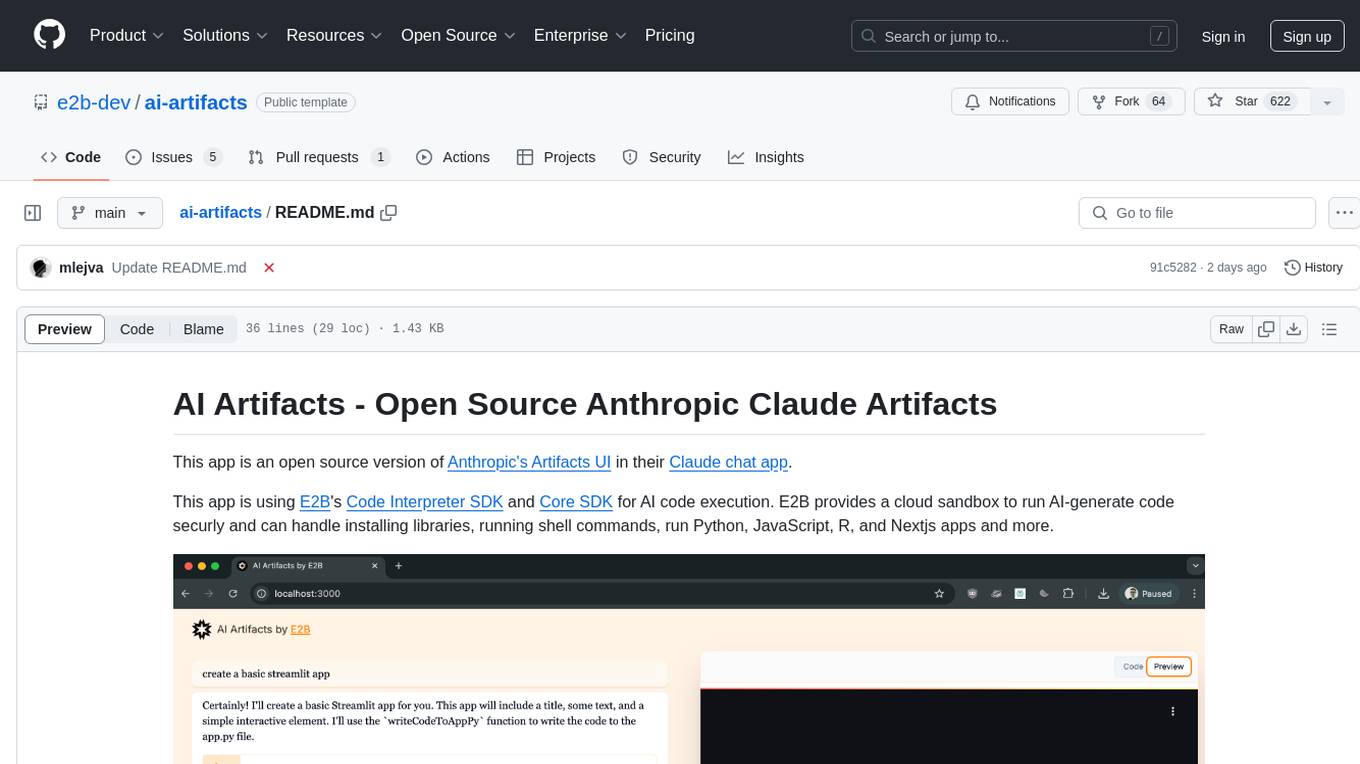

ai-artifacts

AI Artifacts is an open source tool that replicates Anthropic's Artifacts UI in the Claude chat app. It utilizes E2B's Code Interpreter SDK and Core SDK for secure AI code execution in a cloud sandbox environment. Users can run AI-generated code in various languages such as Python, JavaScript, R, and Nextjs apps. The tool also supports running AI-generated Python in Jupyter notebook, Next.js apps, and Streamlit apps. Additionally, it offers integration with Vercel AI SDK for tool calling and streaming responses from the model.

tambo

tambo ai is a React library that simplifies the process of building AI assistants and agents in React by handling thread management, state persistence, streaming responses, AI orchestration, and providing a compatible React UI library. It eliminates React boilerplate for AI features, allowing developers to focus on creating exceptional user experiences with clean React hooks that seamlessly integrate with their codebase.

dive

Dive is an AI toolkit for Go that enables the creation of specialized teams of AI agents and seamless integration with leading LLMs. It offers a CLI and APIs for easy integration, with features like creating specialized agents, hierarchical agent systems, declarative configuration, multiple LLM support, extended reasoning, model context protocol, advanced model settings, tools for agent capabilities, tool annotations, streaming, CLI functionalities, thread management, confirmation system, deep research, and semantic diff. Dive also provides semantic diff analysis, unified interface for LLM providers, tool system with annotations, custom tool creation, and support for various verified models. The toolkit is designed for developers to build AI-powered applications with rich agent capabilities and tool integrations.

aegra

Aegra is a self-hosted AI agent backend platform that provides LangGraph power without vendor lock-in. Built with FastAPI + PostgreSQL, it offers complete control over agent orchestration for teams looking to escape vendor lock-in, meet data sovereignty requirements, enable custom deployments, and optimize costs. Aegra is Agent Protocol compliant and perfect for teams seeking a free, self-hosted alternative to LangGraph Platform with zero lock-in, full control, and compatibility with existing LangGraph Client SDK.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.