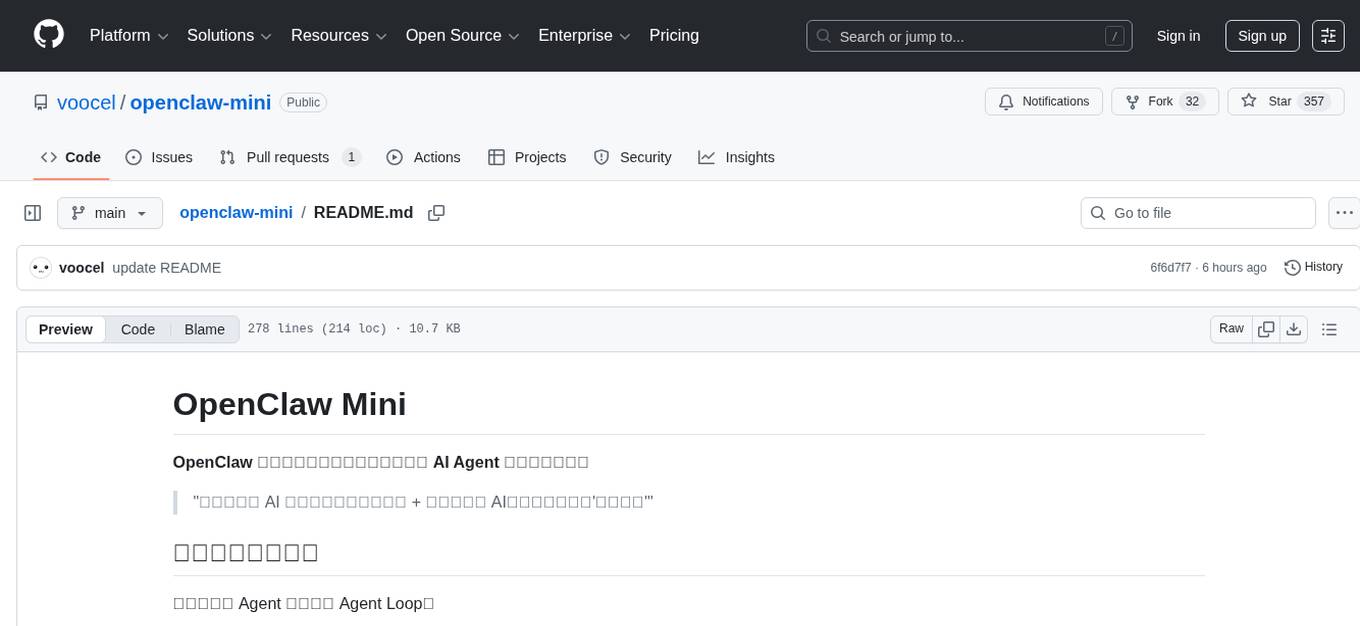

openclaw-mini

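🦞 A minimal reproduction of the OpenClaw core architecture, covering sessionKey session scoping, serial queuing, tool-based memory retrieval, on-demand context loading, extensible skills, and proactive heartbeat wake-up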

Stars: 357

OpenClaw Mini is a simplified reproduction of the core architecture of OpenClaw, designed for learning system-level design of AI agents. It focuses on understanding the Agent Loop, session persistence, context management, long-term memory, skill systems, and active awakening. The project provides a minimal implementation to help users grasp the core design concepts of a production-level AI agent system.

README:

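A streamlined reproduction of the OpenClaw core architecture, for learning the system-level design of AI agents.

"An AI without memory is just a function mapping; an AI with memory plus proactive wake-up is a 'living system' that can evolve."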

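Most agent tutorials online cover only the Agent Loop:

```
while tool_calls:
    response = llm.generate(messages)
    for tool in tools:
        result = tool.execute()
        messages.append(result)
```

This is not a real agent architecture. What a production-grade agent needs is "system-level best practices".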

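OpenClaw is a complex agent system of more than 430,000 lines of code. This project distills its core design into a minimal implementation to help you understand: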

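- The two-layer Agent Loop and the EventStream event flow
- Session persistence and context management (pruning + summary compaction)
- Working implementations of long-term memory, the skill system, and proactive wake-up
- Multi-provider support (22+ providers, including Anthropic / OpenAI / Google / Groq)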

The project is organized into three layers by learning value. We recommend reading them in the order Core → Extended → Engineering:

```text
┌──────────────────────────────────────────────────────────────────┐
│ [Engineering layer] Production                                   │
│ Production-grade safeguards and controls; optional for learning  │
│                                                                  │
│ session-key · tool-policy · command-queue                        │
│ sandbox-paths · context-window-guard · tool-result-guard         │
├──────────────────────────────────────────────────────────────────┤
│ [Extended layer] Extended                                        │
│ Advanced openclaw-specific features, not needed by every agent   │
│                                                                  │
│ Memory (long-term) · Skills (skill system) · Heartbeat (wake-up) │
├──────────────────────────────────────────────────────────────────┤
│ [Core layer] Core                                                │
│ Basics every agent needs  ← read this first                      │
│                                                                  │
│ Agent Loop (two-layer loop)      EventStream (18 typed events)   │
│ Session (JSONL persistence)      Context (load+prune+compact)    │
│ Tools (abstraction + built-ins)  Provider (multi-model adapter)  │
└──────────────────────────────────────────────────────────────────┘
```

Core layer modules:

| Module | File | Core responsibility | openclaw counterpart |
|---|---|---|---|
| Agent | agent.ts | Entry point + subscribe/emit event dispatch | agent.js |
| Agent Loop | agent-loop.ts | Two-layer loop (outer = follow-up, inner = tools + steering) | agent-loop.js |
| EventStream | agent-events.ts | Discriminated union of 18 MiniAgentEvent types + async push/pull | types.d.ts AgentEvent |
| Session | session.ts | JSONL persistence, history management | session-manager.ts |
| Context | context/loader.ts | On-demand loading of bootstrap files such as AGENTS.md | bootstrap-files.ts |
| Pruning | context/pruning.ts | Three-stage progressive pruning (tool_result → assistant → keep most recent) | context-pruning/pruner.ts |
| Compaction | context/compaction.ts | Adaptive chunked summary compaction | compaction.ts |
| Tools | tools/*.ts | Tool abstraction + 7 built-in tools | src/tools/ |
| Provider | provider/*.ts | Multi-model adapter layer (based on pi-ai, 22+ providers) | pi-ai |
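The subscribe/emit dispatch in the Agent row is a plain observer pattern. Below is a minimal sketch of it. It is not agent.ts itself. The `EventHub` class and the two-variant `MiniEvent` type are hypothetical stand-ins for the real 18-variant `MiniAgentEvent`. Listeners are kept in a `Set`, and `subscribe` returns a closure that unsubscribes.

```typescript
// Hypothetical, trimmed-down event union; the real MiniAgentEvent has 18 variants.
type MiniEvent =
  | { type: "message_delta"; delta: string }
  | { type: "agent_error"; error: string };

type Listener = (event: MiniEvent) => void;

class EventHub {
  // A Set ignores duplicate registrations and makes removal cheap.
  private listeners = new Set<Listener>();

  subscribe(listener: Listener): () => void {
    this.listeners.add(listener);
    // The returned closure removes exactly this listener.
    return () => {
      this.listeners.delete(listener);
    };
  }

  emit(event: MiniEvent): void {
    // Iterate over a snapshot so listeners added during dispatch
    // do not receive the in-flight event.
    for (const listener of Array.from(this.listeners)) {
      try {
        listener(event);
      } catch (err) {
        // One failing listener should not stop the others.
        console.error("listener failed:", err);
      }
    }
  }
}

// Usage: collect streamed text, then unsubscribe.
const hub = new EventHub();
let text = "";
const unsubscribe = hub.subscribe((e) => {
  if (e.type === "message_delta") text += e.delta;
});
hub.emit({ type: "message_delta", delta: "Hello, " });
hub.emit({ type: "message_delta", delta: "world" });
unsubscribe();
hub.emit({ type: "message_delta", delta: "!" }); // not received after unsubscribe
```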

Extended layer modules:

| Module | File | Core responsibility | openclaw counterpart |
|---|---|---|---|
| Memory | memory.ts | Long-term memory (keyword retrieval + time decay) | memory/manager.ts |
| Skills | skills.ts | SKILL.md frontmatter + trigger-word matching | agents/skills/ |
| Heartbeat | heartbeat.ts | Two-layer architecture: wake-request coalescing + runner scheduling | heartbeat-runner.ts + heartbeat-wake.ts |
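The Skills row pairs SKILL.md frontmatter with trigger-word matching. Below is a minimal sketch of that idea. It rests on several assumptions:

- The frontmatter keys `name` and `triggers` (a comma-separated list) are hypothetical.
- The `Skill` interface and both function names are hypothetical.
- The parser reads only flat `key: value` lines, not full YAML.

```typescript
// Hypothetical skill shape; field names are assumptions, not openclaw's schema.
interface Skill {
  name: string;
  triggers: string[];
  body: string; // Markdown instructions after the frontmatter
}

// Minimal frontmatter parser: flat `key: value` lines only, no full YAML.
function parseSkillMd(source: string): Skill | null {
  const match = /^---\r?\n([\s\S]*?)\r?\n---\r?\n?([\s\S]*)$/.exec(source);
  if (!match) return null;
  const fields: Record<string, string> = {};
  for (const line of match[1].split(/\r?\n/)) {
    const idx = line.indexOf(":");
    if (idx > 0) fields[line.slice(0, idx).trim()] = line.slice(idx + 1).trim();
  }
  if (!fields.name) return null;
  const triggers = (fields.triggers ?? "")
    .split(",")
    .map((t) => t.trim().toLowerCase())
    .filter((t) => t.length > 0);
  return { name: fields.name, triggers, body: match[2].trim() };
}

// Return the skills whose trigger words appear in the message (case-insensitive).
function matchSkills(skills: Skill[], message: string): Skill[] {
  const lower = message.toLowerCase();
  return skills.filter((s) => s.triggers.some((t) => lower.includes(t)));
}

// Usage
const skill = parseSkillMd(
  "---\nname: git-helper\ntriggers: git, commit, rebase\n---\nUse `git status` first.\n",
);
const hits = skill ? matchSkills([skill], "How do I rebase my branch?") : [];
```

Plain substring matching is deliberately naive. A trigger like `git` also fires on "digit", so a fuller implementation might match on word boundaries instead.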

Engineering layer modules:

| Module | File | Core responsibility |
|---|---|---|
| Session Key | session-key.ts | Multi-agent session key normalization (agent:id:session) |
| Tool Policy | tool-policy.ts | Three-level tool access control (allow/deny/none) |
| Command Queue | command-queue.ts | Concurrency lane control (serial within a session, parallel globally) |
| Tool Result Guard | session-tool-result-guard.ts | Automatically fills in missing tool_result entries |
| Context Window Guard | context-window-guard.ts | Context window overflow protection |
| Sandbox Paths | sandbox-paths.ts | Path safety checks |
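The lane model in the Command Queue row (serial within a session, parallel across sessions) can be sketched with one promise chain per lane. This is an illustration, not command-queue.ts. The `CommandQueue` class is hypothetical. `toSessionKey` borrows only the `agent:id:session` shape from the Session Key row, and its normalization rules are also hypothetical.

```typescript
// Hypothetical helper following the `agent:id:session` shape;
// the trim/lowercase rules are assumptions.
function toSessionKey(agentId: string, sessionId: string): string {
  return `agent:${agentId.trim().toLowerCase()}:${sessionId.trim()}`;
}

// Each lane is a promise chain: tasks in the same lane run one at a time,
// while different lanes proceed concurrently.
class CommandQueue {
  private tails = new Map<string, Promise<unknown>>();

  enqueue<T>(lane: string, task: () => Promise<T>): Promise<T> {
    const previous = this.tails.get(lane) ?? Promise.resolve();
    // Start only after the previous task in this lane has settled.
    const run = previous.then(() => task());
    // Store a never-rejecting tail so one failure does not block the lane.
    const tail = run.catch(() => undefined);
    this.tails.set(lane, tail);
    // Drop idle lanes so the map does not grow without bound.
    tail.then(() => {
      if (this.tails.get(lane) === tail) this.tails.delete(lane);
    });
    return run;
  }
}

// Usage: two tasks in one session lane, one task in another lane.
const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(() => resolve(), ms));
const queue = new CommandQueue();
const log: string[] = [];
const keyA = toSessionKey("Main", "s1");
const keyB = toSessionKey("main", "s2");

const done = Promise.all([
  queue.enqueue(keyA, async () => { log.push("a1 start"); await sleep(20); log.push("a1 end"); }),
  queue.enqueue(keyA, async () => { log.push("a2 start"); log.push("a2 end"); }),
  queue.enqueue(keyB, async () => { log.push("b1 start"); await sleep(5); log.push("b1 end"); }),
]);
done.then(() => console.log(log.join(" | ")));
// a1 start | b1 start | b1 end | a1 end | a2 start | a2 end
```

In the output, b1 runs alongside a1, but a2 waits for a1 to finish. That is the "session serial + global parallel" behavior the table describes.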

Problem: a simple while loop cannot handle complex scenarios such as follow-up turns, steering injection, and context overflow.

openclaw's approach: a two-layer loop plus an EventStream of events.

```typescript
// agent-loop.ts: returns an EventStream; an IIFE pushes events into it
function runAgentLoop(params): EventStream<MiniAgentEvent, MiniAgentResult> {
  const stream = createMiniAgentStream();

  (async () => {
    // outer loop: follow-up loop (handles end_turn / continues after tool_use)
    while (outerTurn < maxOuterTurns) {
      // inner loop: tool execution + steering injection
      // stream.push({ type: "tool_execution_start", ... })
    }
    stream.end({ text, turns, toolCalls });
  })();

  return stream; // the caller consumes it with for-await
}
```

Event subscription (aligned with pi-agent-core's Agent.subscribe):

```typescript
const agent = new Agent({ apiKey, provider: "anthropic" });

const unsubscribe = agent.subscribe((event) => {
  switch (event.type) {
    case "message_delta": // streamed text
      process.stdout.write(event.delta);
      break;
    case "tool_execution_start": // a tool starts running
      console.log(`[${event.toolName}]`, event.args);
      break;
    case "agent_error": // run error
      console.error(event.error);
      break;
  }
});

const result = await agent.run(sessionKey, "List the files in the current directory");
unsubscribe();
```

Problem: how does the agent recover the conversation context after a restart?

```typescript
// session.ts: append-only writes, one message per line
async append(sessionId: string, message: Message): Promise<void> {
  const filePath = this.getFilePath(sessionId);
  await fs.mkdir(path.dirname(filePath), { recursive: true });
  await fs.appendFile(filePath, JSON.stringify(message) + "\n");
}
```

Problem: the context window is limited. How do you keep its size under control without losing key information?

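A three-stage progressive strategy:

- Pruning: trim old tool_result entries, keeping the most recent N intact
- Compaction: once a threshold is exceeded, compress older messages into a "history summary"
- Bootstrap: load configuration files such as AGENTS.md on demand, truncating overlong files to head + tail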
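The pruning and bootstrap steps can be sketched as two small pure functions. These are illustrations under stated assumptions, not the code in context/pruning.ts or context/loader.ts:

- The `Message` shape is hypothetical.
- Only the tool_result stage of pruning is shown.
- The placeholder text and the 70/30 head/tail split are arbitrary choices.

```typescript
// Hypothetical message shape; the real Message type may differ.
interface Message {
  role: "user" | "assistant" | "tool_result";
  content: string;
}

// tool_result stage only: replace the content of all but the most recent
// `keepRecent` tool_result messages with a short placeholder.
// Message order and count are preserved.
function pruneToolResults(messages: Message[], keepRecent: number): Message[] {
  let seen = 0;
  const out: Message[] = new Array(messages.length);
  // Walk backwards so "most recent" is counted from the end.
  for (let i = messages.length - 1; i >= 0; i--) {
    const msg = messages[i];
    if (msg.role === "tool_result") {
      seen++;
      if (seen > keepRecent) {
        out[i] = { ...msg, content: `[pruned tool result: ${msg.content.length} chars]` };
        continue;
      }
    }
    out[i] = msg;
  }
  return out;
}

// Keep the beginning and end of an oversized bootstrap file, dropping the middle.
// Output length equals maxChars as long as maxChars exceeds the marker length.
function truncateHeadTail(text: string, maxChars: number): string {
  if (text.length <= maxChars) return text;
  const marker = "\n...[truncated]...\n";
  const budget = Math.max(0, maxChars - marker.length);
  const head = Math.ceil(budget * 0.7); // assumed 70/30 split
  const tail = budget - head;
  return text.slice(0, head) + marker + (tail > 0 ? text.slice(-tail) : "");
}
```

Pruning rewrites content instead of deleting messages, so the sequence of tool calls and results keeps its shape. Compaction would run as a separate step when pruning alone is not enough.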

Problem: how can the agent "remember" information across sessions?

```typescript
// memory.ts: keyword matching + time decay
// (excerpt: how `text` and `ageHours` are computed, and how results are collected, is elided)
async search(query: string, limit = 5): Promise<MemorySearchResult[]> {
  const queryTerms = query.toLowerCase().split(/\s+/);

  for (const entry of this.entries) {
    let score = 0;
    for (const term of queryTerms) {
      if (text.includes(term)) score += 1;
      if (entry.tags.some(t => t.includes(term))) score += 0.5;
    }
    const recencyBoost = Math.max(0, 1 - ageHours / (24 * 30));
    score += recencyBoost * 0.3;
  }
}
```

openclaw uses SQLite-vec for vector semantic search plus BM25 keyword search; this project simplifies that to keyword matching only.
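The excerpt above leaves out three pieces: how `text` is derived, how `ageHours` is derived, and how results are collected. The self-contained sketch below fills those gaps under assumptions, so it is not memory.ts's actual code:

- The `MemoryEntry` fields (`content`, `tags`, `createdAt`) are hypothetical.
- So is the standalone `searchMemory` function.
- Dropping entries with no keyword hit is an assumption.
- Tags are lowercased here, unlike in the excerpt.

The scoring weights follow the excerpt:

- +1 per term found in the content.
- +0.5 per term that matches a tag.
- Up to +0.3 for recency, fading to zero over 30 days.

```typescript
// Hypothetical entry shape; field names are assumptions.
interface MemoryEntry {
  content: string;
  tags: string[];
  createdAt: number; // epoch milliseconds
}

interface MemorySearchResult {
  entry: MemoryEntry;
  score: number;
}

function searchMemory(entries: MemoryEntry[], query: string, limit = 5, now = Date.now()): MemorySearchResult[] {
  const queryTerms = query.toLowerCase().split(/\s+/).filter((t) => t.length > 0);
  const results: MemorySearchResult[] = [];
  for (const entry of entries) {
    const text = entry.content.toLowerCase();
    let keywordScore = 0;
    for (const term of queryTerms) {
      if (text.includes(term)) keywordScore += 1; // content hit
      if (entry.tags.some((t) => t.toLowerCase().includes(term))) keywordScore += 0.5; // tag hit
    }
    // Assumption: recency alone never surfaces a memory.
    if (keywordScore === 0) continue;
    // Linear decay: full boost when new, zero after 30 days (720 hours).
    const ageHours = (now - entry.createdAt) / (3600 * 1000);
    const recencyBoost = Math.max(0, 1 - ageHours / (24 * 30));
    results.push({ entry, score: keywordScore + recencyBoost * 0.3 });
  }
  // Highest score first, capped at `limit`.
  return results.sort((a, b) => b.score - a.score).slice(0, limit);
}

// Usage
const DAY_MS = 24 * 3600 * 1000;
const now = Date.UTC(2024, 0, 31);
const memories: MemoryEntry[] = [
  { content: "User prefers pnpm over npm", tags: ["tooling"], createdAt: now - 40 * DAY_MS },
  { content: "Project uses pnpm workspaces", tags: ["pnpm"], createdAt: now },
  { content: "Favorite color is blue", tags: [], createdAt: now },
];
const hits = searchMemory(memories, "pnpm", 5, now);
// hits[0] is "Project uses pnpm workspaces" (1 + 0.5 + 0.3); hits[1] is the
// 40-day-old entry (1, no recency boost); the unrelated entry is dropped.
```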

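Problem: how can the agent work "proactively" instead of only responding passively?

A two-layer architecture:

- HeartbeatWake (request-coalescing layer): multiple trigger sources (interval/cron/exec/requested) → 250 ms coalescing window → double buffering
- HeartbeatRunner (scheduling layer): active-hours check → parse HEARTBEAT.md → skip if empty → duplicate suppression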

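| Design point | Why |
|---|---|
| setTimeout instead of setInterval | Computes the exact time of the next run, avoiding drift |
| 250 ms coalescing window | Prevents several near-simultaneous events from each triggering a run |
| Double buffering | Requests received while a run is in progress are not lost |
| Duplicate suppression | The same message is not sent again within 24 h |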
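The wake layer's coalescing window and double buffering can be sketched as a small class. This is a hypothetical illustration, not heartbeat.ts:

- The `HeartbeatWake` name and its constructor signature are assumptions.
- The `WakeReason` union is taken from the trigger sources listed above.
- The runner's active-hours check, HEARTBEAT.md parsing and duplicate suppression are left out.

Requests collect in a `pending` set, and the first request arms a single `setTimeout`. Anything that arrives while a run is in flight waits in a fresh set, which triggers one follow-up run.

```typescript
type WakeReason = "interval" | "cron" | "exec" | "requested";

// Hypothetical sketch of the request-coalescing layer; names are illustrative.
class HeartbeatWake {
  private pending = new Set<WakeReason>(); // buffer collecting requests
  private timer: ReturnType<typeof setTimeout> | null = null;
  private running = false;
  private readonly run: (reasons: WakeReason[]) => Promise<void>;
  private readonly coalesceMs: number;

  constructor(run: (reasons: WakeReason[]) => Promise<void>, coalesceMs = 250) {
    this.run = run;
    this.coalesceMs = coalesceMs;
  }

  // Any trigger source calls this; a burst within the window becomes one run.
  request(reason: WakeReason): void {
    this.pending.add(reason);
    // Mid-run requests stay buffered; flush() reschedules when the run ends.
    if (this.running || this.timer !== null) return;
    this.schedule();
  }

  // A one-shot setTimeout per wake, not a free-running setInterval.
  private schedule(): void {
    this.timer = setTimeout(() => {
      this.timer = null;
      this.flush().catch((err) => console.error("heartbeat run failed:", err));
    }, this.coalesceMs);
  }

  private async flush(): Promise<void> {
    if (this.pending.size === 0) return;
    // Swap buffers: hand the current batch to the runner, start a fresh set.
    const batch = Array.from(this.pending);
    this.pending = new Set<WakeReason>();
    this.running = true;
    try {
      await this.run(batch);
    } finally {
      this.running = false;
      // Requests that arrived during the run trigger one follow-up pass.
      if (this.pending.size > 0 && this.timer === null) this.schedule();
    }
  }
}

// Usage with a shortened window for the demo.
const batches: WakeReason[][] = [];
const wake = new HeartbeatWake(async (reasons) => {
  batches.push(reasons);
  await new Promise<void>((resolve) => setTimeout(() => resolve(), 50)); // simulated work
}, 20);
wake.request("interval");
wake.request("cron");
wake.request("cron"); // duplicate collapses into the same batch
setTimeout(() => wake.request("exec"), 30); // lands while the first run is busy
```

After about 150 ms, `batches` holds two entries: `["interval", "cron"]` from the coalesced burst, then `["exec"]` from the buffered mid-run request.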

| Pattern | File | Description |
|---|---|---|
| EventStream async push/pull | agent-events.ts | push/asyncIterator/end/result |
| Subscribe/Emit observer | agent.ts | listeners Set + subscribe returns unsubscribe |
| Two-layer loop | agent-loop.ts | outer (follow-up) + inner (tools + steering) |
| JSONL append-only log | session.ts | One message per line, append-only writes |
| Three-stage progressive pruning | context/pruning.ts | tool_result → assistant → keep most recent |
| Adaptive chunked summaries | context/compaction.ts | Split into chunks by token count, summarize chunk by chunk |
| Double-buffered scheduling | heartbeat.ts | running + scheduled state machine |
| Three-level policy compilation | tool-policy.ts | allow/deny/none → filter the tool list |
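The EventStream pattern in the first row (push / async iterator / end / result) can be sketched generically. This is an illustration, not agent-events.ts. The class below is hypothetical, and the `produce` demo only mimics the IIFE shape of `runAgentLoop`. The producer pushes events without waiting, the consumer pulls them with `for await`, and `result()` resolves once `end()` is called.

```typescript
// Minimal async push/pull stream (hypothetical sketch).
class EventStream<E, R> implements AsyncIterable<E> {
  private queue: E[] = []; // events pushed before anyone pulled them
  private waiters: Array<(r: IteratorResult<E>) => void> = []; // pending next() calls
  private done = false;
  private resolveResult!: (r: R) => void;
  private readonly resultPromise: Promise<R>;

  constructor() {
    this.resultPromise = new Promise<R>((resolve) => {
      this.resolveResult = resolve;
    });
  }

  push(event: E): void {
    if (this.done) return; // ignore events after end()
    const waiter = this.waiters.shift();
    if (waiter) waiter({ value: event, done: false }); // hand straight to a waiting consumer
    else this.queue.push(event); // otherwise buffer until pulled
  }

  end(result: R): void {
    if (this.done) return;
    this.done = true;
    this.resolveResult(result);
    // Release consumers still waiting for another event.
    for (const waiter of this.waiters.splice(0)) waiter({ value: undefined, done: true });
  }

  result(): Promise<R> {
    return this.resultPromise;
  }

  [Symbol.asyncIterator](): AsyncIterator<E> {
    return {
      next: async (): Promise<IteratorResult<E>> => {
        if (this.queue.length > 0) return { value: this.queue.shift() as E, done: false };
        if (this.done) return { value: undefined, done: true };
        return new Promise<IteratorResult<E>>((resolve) => {
          this.waiters.push(resolve);
        });
      },
    };
  }
}

// Usage: a producer IIFE in the style of runAgentLoop, consumed with for-await.
type DemoEvent = { type: "message_delta"; delta: string };

function produce(): EventStream<DemoEvent, { turns: number }> {
  const stream = new EventStream<DemoEvent, { turns: number }>();
  (async () => {
    for (const delta of ["Hel", "lo"]) {
      await Promise.resolve(); // simulate async work between events
      stream.push({ type: "message_delta", delta });
    }
    stream.end({ turns: 1 });
  })();
  return stream;
}

async function main(): Promise<string> {
  const stream = produce();
  let text = "";
  for await (const event of stream) text += event.delta;
  const result = await stream.result();
  return `${text} (${result.turns} turn)`;
}
```

Buffering in `queue` lets the producer run ahead of a slow consumer. When a `next()` call is already waiting, the next `push()` resolves it directly instead. Errors thrown inside the producer are not propagated in this sketch.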

```bash
cd examples/openclaw-mini
pnpm install

# Anthropic (default)
export ANTHROPIC_API_KEY=sk-xxx
pnpm dev

# OpenAI (requires the OPENAI_API_KEY environment variable)
pnpm dev -- --provider openai

# Google (requires the GEMINI_API_KEY environment variable)
pnpm dev -- --provider google

# Specify a model
pnpm dev -- --provider openai --model gpt-4o

# Specify an agentId
pnpm dev -- --agent my-agent
```

Using it from code:

```typescript
import { Agent } from "openclaw-mini";

const agent = new Agent({
  provider: "anthropic", // 22+ providers supported
  // if apiKey is omitted, it is read from environment variables
  agentId: "main",
  workspaceDir: process.cwd(),
  enableMemory: true,
  enableContext: true,
  enableSkills: true,
  enableHeartbeat: false,
});

// event subscription
const unsubscribe = agent.subscribe((event) => {
  if (event.type === "message_delta") {
    process.stdout.write(event.delta);
  }
});

const result = await agent.run("session-1", "Please list the files in the current directory");
console.log(`${result.turns} turns, ${result.toolCalls} tool calls`);
unsubscribe();
```

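Suggested learning path:

- Core layer first: agent-loop.ts → agent.ts → agent-events.ts → session.ts → context/
- Understand the event flow: the subscribe/emit pattern + EventStream async push/pull
- Extended layer, read selectively: memory.ts → skills.ts → heartbeat.ts (as your interest leads)
- Compare against the openclaw source: check whether the simplified version captures the essentials
- Skip the engineering layer: unless you are building a production-grade agent, you can ignore it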

License: MIT


Alternative AI tools for openclaw-mini

Similar Open Source Tools


WenShape

WenShape is a context engineering system for creating long novels. It addresses the challenge of narrative consistency over thousands of words by using an orchestrated writing process, dynamic fact tracking, and precise token budget management. All project data is stored in YAML/Markdown/JSONL text format, naturally supporting Git version control.

topsha

LocalTopSH is an AI Agent Framework designed for companies and developers who require 100% on-premise AI agents with data privacy. It supports various OpenAI-compatible LLM backends and offers production-ready security features. The framework allows simple deployment using Docker compose and ensures that data stays within the user's network, providing full control and compliance. With cost-effective scaling options and compatibility in regions with restrictions, LocalTopSH is a versatile solution for deploying AI agents on self-hosted infrastructure.

NeuroSploit

NeuroSploit v3 is an advanced security assessment platform that combines AI-driven autonomous agents with 100 vulnerability types, per-scan isolated Kali Linux containers, false-positive hardening, exploit chaining, and a modern React web interface with real-time monitoring. It offers features like 100 Vulnerability Types, Autonomous Agent with 3-stream parallel pentest, Per-Scan Kali Containers, Anti-Hallucination Pipeline, Exploit Chain Engine, WAF Detection & Bypass, Smart Strategy Adaptation, Multi-Provider LLM, Real-Time Dashboard, and Sandbox Dashboard. The tool is designed for authorized security testing purposes only, ensuring compliance with laws and regulations.

z.ai2api_python

Z.AI2API Python is a lightweight OpenAI API proxy service that integrates seamlessly with existing applications. It supports the full functionality of GLM-4.5 series models and features high-performance streaming responses, enhanced tool invocation, support for thinking mode, integration with search models, Docker deployment, session isolation for privacy protection, flexible configuration via environment variables, and intelligent upstream model routing.

openai-forward

OpenAI-Forward is an efficient forwarding service implemented for large language models. Its core features include user request rate control, token rate limiting, intelligent prediction caching, log management, and API key management, aiming to provide efficient and convenient model forwarding services. Whether proxying local language models or cloud-based language models like LocalAI or OpenAI, OpenAI-Forward makes it easy. Thanks to support from libraries like uvicorn, aiohttp, and asyncio, OpenAI-Forward achieves excellent asynchronous performance.

AIxVuln

AIxVuln is an automated vulnerability discovery and verification system based on large models (LLM) + function calling + Docker sandbox. The system manages 'projects' through a web UI/desktop client, automatically organizing multiple 'digital humans' for environment setup, code auditing, vulnerability verification, and report generation. It utilizes an isolated Docker environment for dependency installation, service startup, PoC verification, and evidence collection, ultimately producing downloadable vulnerability reports. The system has already discovered dozens of vulnerabilities in real open-source projects.

MediCareAI

MediCareAI is an intelligent disease management system powered by AI, designed for patient follow-up and disease tracking. It integrates medical guidelines, AI-powered diagnosis, and document processing to provide comprehensive healthcare support. The system includes features like user authentication, patient management, AI diagnosis, document processing, medical records management, knowledge base system, doctor collaboration platform, and admin system. It ensures privacy protection through automatic PII detection and cleaning for document sharing.

AI-CloudOps

AI+CloudOps is a cloud-native operations management platform designed for enterprises. It aims to integrate artificial intelligence technology with cloud-native practices to significantly improve the efficiency and level of operations work. The platform offers features such as AIOps for monitoring data analysis and alerts, multi-dimensional permission management, visual CMDB for resource management, efficient ticketing system, deep integration with Prometheus for real-time monitoring, and unified Kubernetes management for cluster optimization.

tinyclaw

TinyClaw is a lightweight wrapper around Claude Code that connects WhatsApp via QR code, processes messages sequentially, maintains conversation context, runs 24/7 in tmux, and is ready for multi-channel support. Its key innovation is the file-based queue system that prevents race conditions and enables multi-channel support. TinyClaw consists of components like whatsapp-client.js for WhatsApp I/O, queue-processor.js for message processing, heartbeat-cron.sh for health checks, and tinyclaw.sh as the main orchestrator with a CLI interface. It ensures no race conditions, is multi-channel ready, provides clean responses using claude -c -p, and supports persistent sessions. Security measures include local storage of WhatsApp session and queue files, channel-specific authentication, and running Claude with user permissions.

tradecat

TradeCat is a comprehensive data analysis and trading platform designed for cryptocurrency, stock, and macroeconomic data. It offers a wide range of features including multi-market data collection, technical indicator modules, AI analysis, signal detection engine, Telegram bot integration, and more. The platform utilizes technologies like Python, TimescaleDB, TA-Lib, Pandas, NumPy, and various APIs to provide users with valuable insights and tools for trading decisions. With a modular architecture and detailed documentation, TradeCat aims to empower users in making informed trading decisions across different markets.

goclaw

goclaw is a powerful AI Agent framework written in Go language. It provides a complete tool system for FileSystem, Shell, Web, and Browser with Docker sandbox support and permission control. The framework includes a skill system compatible with OpenClaw and AgentSkills specifications, supporting automatic discovery and environment gating. It also offers persistent session storage, multi-channel support for Telegram, WhatsApp, Feishu, QQ, and WeWork, flexible configuration with YAML/JSON support, multiple LLM providers like OpenAI, Anthropic, and OpenRouter, WebSocket Gateway, Cron scheduling, and Browser automation based on Chrome DevTools Protocol.

torch-rechub

Torch-RecHub is a lightweight, efficient, and user-friendly PyTorch recommendation system framework. It provides easy-to-use solutions for industrial-level recommendation systems, with features such as generative recommendation models, modular design for adding new models and datasets, PyTorch-based implementation for GPU acceleration, a rich library of 30+ classic and cutting-edge recommendation algorithms, standardized data loading, training, and evaluation processes, easy configuration through files or command-line parameters, reproducibility of experimental results, ONNX model export for production deployment, cross-engine data processing with PySpark support, and experiment visualization and tracking with integrated tools like WandB, SwanLab, and TensorBoardX.

banana-slides

Banana-slides is a native AI-powered PPT generation application based on the nano banana pro model. It supports generating complete PPT presentations from ideas, outlines, and page descriptions. The app automatically extracts attachment charts, uploads any materials, and allows verbal modifications, aiming to truly 'Vibe PPT'. It lowers the threshold for creating PPTs, enabling everyone to quickly create visually appealing and professional presentations.

adnify

Adnify is an advanced code editor with ultimate visual experience and deep integration of AI Agent. It goes beyond traditional IDEs, featuring Cyberpunk glass morphism design style and a powerful AI Agent supporting full automation from code generation to file operations.

For similar tasks

Agently

Agently is a development framework that helps developers build AI-agent-native applications very quickly. Creating an agent takes very little code: make an instance, then interact with it like calling a function:

```python
# Import and Init Settings
import Agently
agent = Agently.create_agent()
agent\
    .set_settings("current_model", "OpenAI")\
    .set_settings("model.OpenAI.auth", {"api_key": ""})
# Interact with the agent instance like calling a function
result = agent\
    .input("Give me 3 words")\
    .output([("String", "one word")])\
    .start()
print(result)
# ['apple', 'banana', 'carrot']
```

The printed value of `result` is a `list`, matching the format of the parameter passed to `.output()`. Agently does a lot of work like this to make agent instances easier to integrate into business code. Developers can then focus on their business logic instead of figuring out how to cater to language models or keep them satisfied.

alan-sdk-web

Alan AI is a comprehensive AI solution that acts as a 'unified brain' for enterprises, interconnecting applications, APIs, and data sources to streamline workflows. It offers tools like Alan AI Studio for designing dialog scenarios, lightweight SDKs for embedding AI Agents, and a backend powered by advanced AI technologies. With Alan AI, users can create conversational experiences with minimal UI changes, benefit from a serverless environment, receive on-the-fly updates, and access dialog testing and analytics tools. The platform supports various frameworks like JavaScript, React, Angular, Vue, Ember, and Electron, and provides example web apps for different platforms. Users can also explore Alan AI SDKs for iOS, Android, Flutter, Ionic, Apache Cordova, and React Native.

archgw

Arch is an intelligent Layer 7 gateway designed to protect, observe, and personalize AI agents with APIs. It handles tasks related to prompts, including detecting jailbreak attempts, calling backend APIs, routing between LLMs, and managing observability. Built on Envoy Proxy, it offers features like function calling, prompt guardrails, traffic management, and observability. Users can build fast, observable, and personalized AI agents using Arch to improve speed, security, and personalization of GenAI apps.

llm-dev

The 'llm-dev' repository contains source code and resources for the book 'Practical Projects of Large Models: Multi-Domain Intelligent Application Development'. It covers topics such as language model basics, application architecture, working modes, environment setup, model installation, fine-tuning, quantization, multi-modal model applications, chat applications, programming large model applications, VS Code plugin development, enhanced generation applications, translation applications, intelligent agent applications, speech model applications, digital human applications, model training applications, and AI town applications.

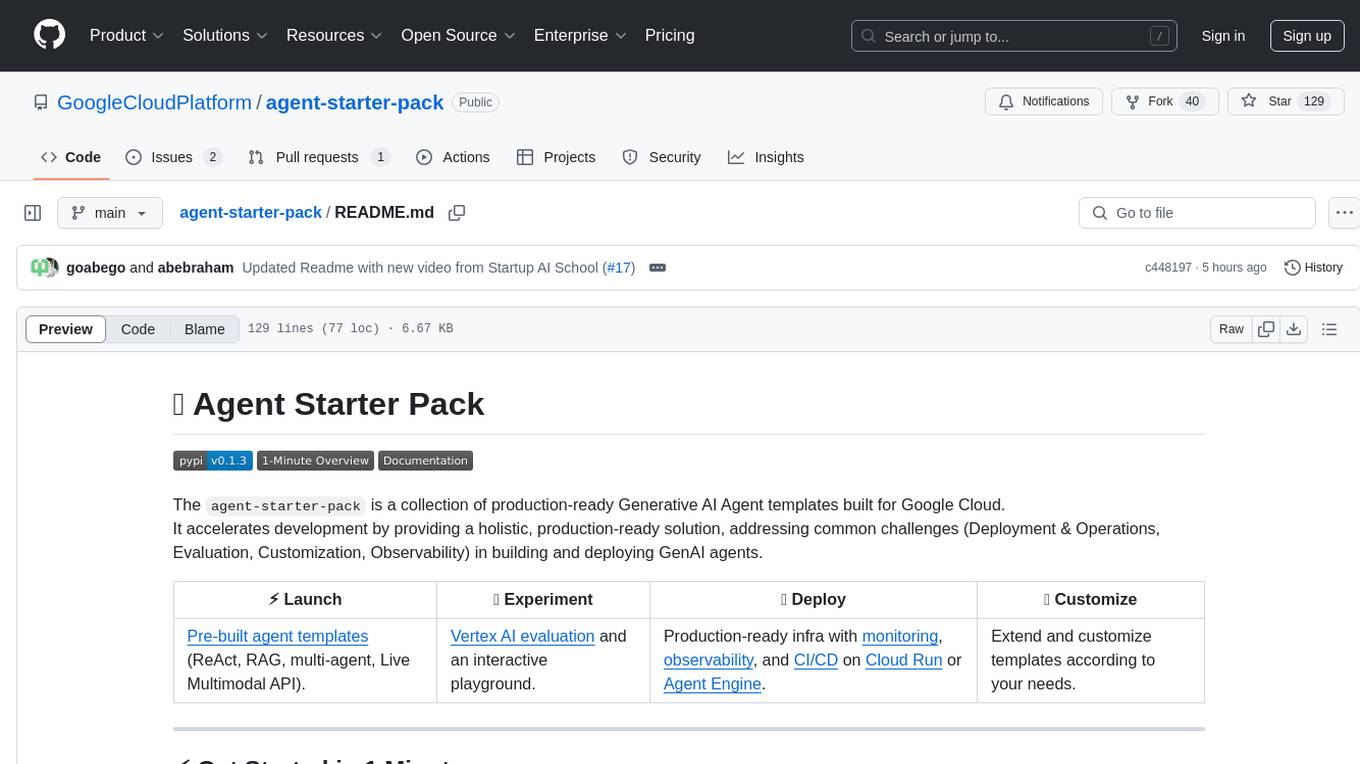

agent-starter-pack

The agent-starter-pack is a collection of production-ready Generative AI Agent templates built for Google Cloud. It accelerates development by providing a holistic, production-ready solution, addressing common challenges in building and deploying GenAI agents. The tool offers pre-built agent templates, evaluation tools, production-ready infrastructure, and customization options. It also provides CI/CD automation and data pipeline integration for RAG agents. The starter pack covers all aspects of agent development, from prototyping and evaluation to deployment and monitoring. It is designed to simplify project creation, template selection, and deployment for agent development on Google Cloud.

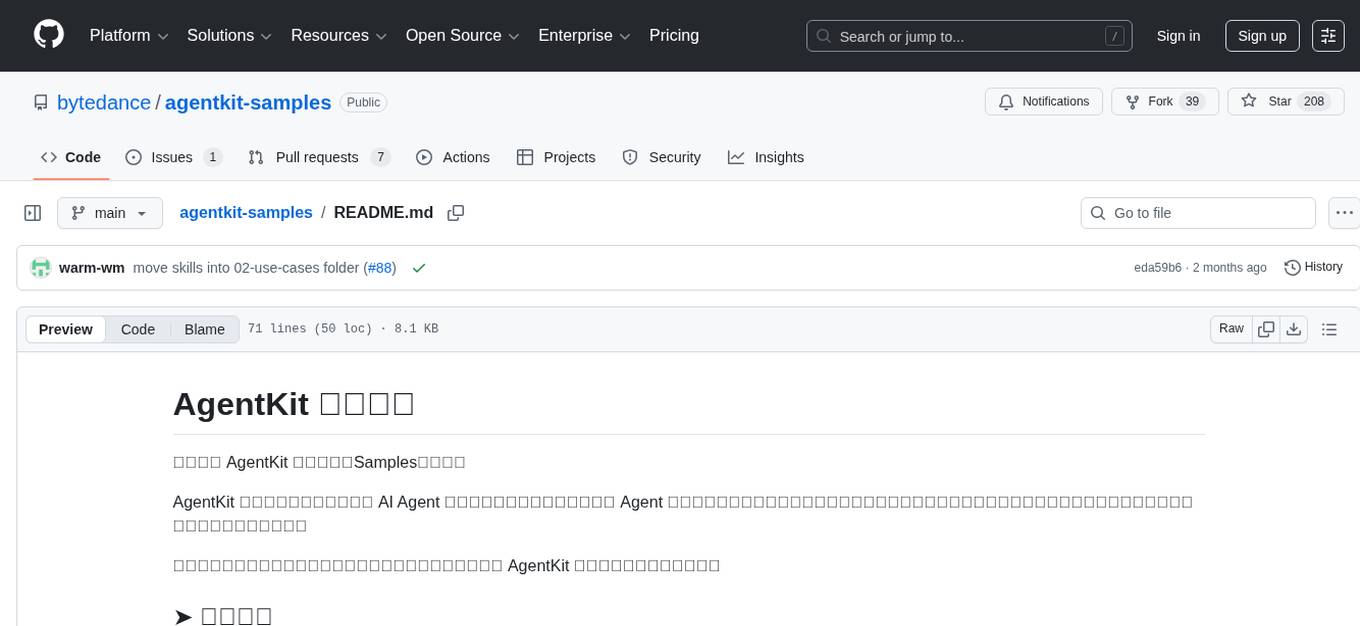

agentkit-samples

AgentKit Samples is a repository containing a series of examples and tutorials to help users understand, implement, and integrate various functionalities of AgentKit into their applications. The platform offers a complete solution for building, deploying, and maintaining AI agents, significantly reducing the complexity of developing intelligent applications. The repository provides different levels of examples and tutorials, including basic tutorials for understanding AgentKit's concepts and use cases, as well as more complex examples for experienced developers.


For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.