dspy.rb

The Ruby framework for programming—rather than prompting—language models.

Stars: 63

DSPy.rb is a Ruby framework for building reliable LLM applications using composable, type-safe modules. It enables developers to define typed signatures and compose them into pipelines, offering a more structured approach compared to traditional prompting. The framework embraces Ruby conventions and adds innovations like CodeAct agents and enhanced production instrumentation, resulting in scalable LLM applications that are robust and efficient. DSPy.rb is actively developed, with a focus on stability and real-world feedback through the 0.x series before reaching a stable v1.0 API.

README:

Build reliable LLM applications in Ruby using composable, type-safe modules.

DSPy.rb brings structured LLM programming to Ruby developers. Instead of wrestling with prompt strings and parsing responses, you define typed signatures and compose them into pipelines that just work.

Traditional prompting is like writing code with string concatenation: it works until it doesn't. DSPy.rb brings you the programming approach pioneered by dspy.ai: instead of crafting fragile prompts, you define modular signatures and let the framework handle the messy details.

DSPy.rb is an idiomatic Ruby port of Stanford's DSPy framework. While implementing the core concepts of signatures, predictors, and optimization from the original Python library, DSPy.rb embraces Ruby conventions and adds Ruby-specific innovations like CodeAct agents and enhanced production instrumentation.

The result? LLM applications that actually scale and don't break when you sneeze.

    # Define a signature for sentiment classification
    class Classify < DSPy::Signature
      description "Classify sentiment of a given sentence."

      class Sentiment < T::Enum
        enums do
          Positive = new('positive')
          Negative = new('negative')
          Neutral = new('neutral')
        end
      end

      input do
        const :sentence, String
      end

      output do
        const :sentiment, Sentiment
        const :confidence, Float
      end
    end

    # Configure DSPy with your LLM
    DSPy.configure do |c|
      c.lm = DSPy::LM.new('openai/gpt-4o-mini',
                          api_key: ENV['OPENAI_API_KEY'],
                          structured_outputs: true) # Enable OpenAI's native JSON mode
    end

    # Create the predictor and run inference
    classify = DSPy::Predict.new(Classify)
    result = classify.call(sentence: "This book was super fun to read!")

    puts result.sentiment # => #<Sentiment::Positive>
    puts result.confidence # => 0.85

Core Building Blocks:

- Signatures - Define input/output schemas using Sorbet types with T::Enum and union type support

- Predict - LLM completion with structured data extraction and multimodal support

- Chain of Thought - Step-by-step reasoning for complex problems with automatic prompt optimization

- ReAct - Tool-using agents with type-safe tool definitions and error recovery

- CodeAct - Dynamic code execution agents for programming tasks

- Module Composition - Combine multiple LLM calls into production-ready workflows
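The "Module Composition" item above can be pictured with a plain-Ruby sketch. It does not call DSPy.rb or any LLM: `Pipeline`, `classify_stub`, and `route_stub` are hypothetical stand-ins, assuming only that each step accepts keyword inputs and returns a hash of named outputs. The point is to show how the outputs of one step become the inputs of the next.

```ruby
# Hypothetical sketch: not DSPy.rb's API. Each step is any object that
# responds to #call(**inputs) and returns a Hash of named outputs.
class Pipeline
  def initialize(*steps)
    @steps = steps
  end

  # Runs the steps in order, merging each step's outputs into the running
  # state so that later steps can read earlier results.
  def call(**inputs)
    @steps.reduce(inputs) do |state, step|
      state.merge(step.call(**state))
    end
  end
end

# Stand-in for an LLM-backed classifier (a real one would call the model).
classify_stub = lambda do |sentence:, **|
  sentiment = sentence.match?(/fun|great|love/i) ? :positive : :neutral
  { sentiment: sentiment, confidence: 0.85 }
end

# Stand-in for a second step that consumes the classifier's output.
route_stub = lambda do |sentiment:, confidence:, **|
  { queue: sentiment == :positive && confidence > 0.8 ? "testimonials" : "inbox" }
end

pipeline = Pipeline.new(classify_stub, route_stub)
result = pipeline.call(sentence: "This book was super fun to read!")
puts result[:queue]
```

Because every step shares the same calling convention, a stub can later be swapped for a real predictor without touching the pipeline itself.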

Optimization & Evaluation:

- Prompt Objects - Manipulate prompts as first-class objects instead of strings

- Typed Examples - Type-safe training data with automatic validation

- Evaluation Framework - Advanced metrics beyond simple accuracy with error-resilient pipelines

- MIPROv2 Optimization - Automatic prompt optimization with storage and persistence
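To make "metrics beyond simple accuracy" and "error-resilient pipelines" concrete, here is a minimal plain-Ruby sketch. It is not DSPy.rb's evaluation API: `Example`, `evaluate`, the metric, and the stub program are all hypothetical names chosen for illustration. The assumed behaviour is that a metric is a Proc scoring one prediction against one example, and that a failing example is recorded rather than aborting the run.

```ruby
# Hypothetical sketch: not DSPy.rb's evaluation API.
Example = Struct.new(:input, :expected, keyword_init: true)

# Scores a program over examples with a caller-supplied metric Proc.
# A failing example is recorded with score 0.0 and its error message
# instead of aborting the whole run.
def evaluate(program, examples, metric)
  return { mean_score: 0.0, errors: 0 } if examples.empty?

  results = examples.map do |example|
    begin
      prediction = program.call(**example.input)
      { score: metric.call(example, prediction).to_f, error: nil }
    rescue StandardError => e
      { score: 0.0, error: e.message }
    end
  end

  {
    mean_score: results.sum { |r| r[:score] } / results.size,
    errors: results.count { |r| r[:error] }
  }
end

# Full credit for a correct, confident answer; half credit when the answer
# is correct but the reported confidence is low.
metric = lambda do |example, prediction|
  next 0.0 unless prediction[:sentiment] == example.expected[:sentiment]
  prediction[:confidence] >= 0.7 ? 1.0 : 0.5
end

# Stub program standing in for an LLM predictor.
program = lambda do |sentence:|
  raise ArgumentError, "empty sentence" if sentence.empty?
  { sentiment: sentence.include?("fun") ? :positive : :negative,
    confidence: sentence.length > 20 ? 0.9 : 0.6 }
end

examples = [
  Example.new(input: { sentence: "This book was super fun to read!" },
              expected: { sentiment: :positive }),
  Example.new(input: { sentence: "So fun" }, expected: { sentiment: :positive }),
  Example.new(input: { sentence: "" }, expected: { sentiment: :neutral })
]

report = evaluate(program, examples, metric)
puts report
```

Keeping the metric as a Proc means the scoring rule can change without touching the evaluation loop.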

Production Features:

- Reliable JSON Extraction - Native OpenAI structured outputs, Anthropic extraction patterns, and automatic strategy selection with fallback

- Type-Safe Configuration - Strategy enums with automatic provider optimization (Strict/Compatible modes)

- Smart Retry Logic - Progressive fallback with exponential backoff for handling transient failures

- Zero-Config Langfuse Integration - Set env vars and get automatic OpenTelemetry traces in Langfuse

- Performance Caching - Schema and capability caching for faster repeated operations

- File-based Storage - Optimization result persistence with versioning

- Structured Logging - JSON and key=value formats with span tracking
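The "Smart Retry Logic" item describes progressive fallback combined with exponential backoff. The sketch below shows one way that combination can work in plain Ruby; it is not DSPy.rb's internal implementation. `with_fallback`, `TransientError`, and the strategy names are hypothetical, and the `sleeper` parameter exists only so the delays can be observed without actually waiting.

```ruby
# Hypothetical sketch: not DSPy.rb's internal retry implementation.
class TransientError < StandardError; end

# Tries each strategy in order (progressive fallback). Within a strategy,
# transient failures are retried with exponentially growing delays.
def with_fallback(strategies, max_attempts: 3, base_delay: 0.5,
                  sleeper: Kernel.method(:sleep))
  last_error = nil
  strategies.each do |name, strategy|
    max_attempts.times do |attempt|
      begin
        return [name, strategy.call]
      rescue TransientError => e
        last_error = e
        # Delay doubles on each attempt: base, 2 * base, 4 * base, ...
        # No sleep after the final attempt; move on to the next strategy.
        sleeper.call(base_delay * (2**attempt)) if attempt < max_attempts - 1
      end
    end
  end
  raise last_error || ArgumentError.new("no strategies given")
end

calls = 0
delays = []
strategies = {
  # Illustrative strategy that always fails, forcing a fallback.
  strict: -> { raise TransientError, "schema rejected" },
  # Illustrative strategy that fails once, then succeeds.
  compatible: lambda do
    calls += 1
    raise TransientError, "timeout" if calls < 2
    '{"sentiment":"positive"}'
  end
}

name, payload = with_fallback(strategies, sleeper: ->(seconds) { delays << seconds })
puts "#{name}: #{payload} (delays: #{delays.inspect})"
```

Only errors classed as transient are retried; any other exception propagates immediately, which keeps genuine bugs from being masked by the retry loop.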

Developer Experience:

- LLM provider support using official Ruby clients:

  - OpenAI Ruby with vision model support

  - Anthropic Ruby SDK with multimodal capabilities

  - Ollama via OpenAI compatibility layer for local models

- Multimodal Support - Complete image analysis with DSPy::Image, type-safe bounding boxes, vision-capable models

- Runtime type checking with Sorbet including T::Enum and union types

- Type-safe tool definitions for ReAct agents

- Comprehensive instrumentation and observability

DSPy.rb is actively developed and approaching stability. The core framework is production-ready with comprehensive documentation, but I'm battle-testing features through the 0.x series before committing to a stable v1.0 API.

Real-world usage feedback is invaluable - if you encounter issues or have suggestions, please open a GitHub issue!

📖 Complete Documentation Website

For LLMs and AI assistants working with DSPy.rb:

- llms.txt - Concise reference optimized for LLMs

- llms-full.txt - Comprehensive API documentation

- Installation & Setup - Detailed installation and configuration

- Quick Start Guide - Your first DSPy programs

- Core Concepts - Understanding signatures, predictors, and modules

- Signatures & Types - Define typed interfaces for LLM operations

- Predictors - Predict, ChainOfThought, ReAct, and more

- Modules & Pipelines - Compose complex multi-stage workflows

- Multimodal Support - Image analysis with vision-capable models

- Examples & Validation - Type-safe training data

- Evaluation Framework - Advanced metrics beyond simple accuracy

- Prompt Optimization - Manipulate prompts as objects

- MIPROv2 Optimizer - Automatic optimization algorithms

- Storage System - Persistence and optimization result storage

- Observability - Zero-config Langfuse integration and structured logging

- Complex Types - Sorbet type integration with automatic coercion for structs, enums, and arrays

- Manual Pipelines - Manual module composition patterns

- RAG Patterns - Manual RAG implementation with external services

- Custom Metrics - Proc-based evaluation logic

Add to your Gemfile:

    gem 'dspy'

Then run:

    bundle install

If you need to compile the polars-df dependency from source (used for data processing in evaluations), install these system packages:

    # Update package list
    sudo apt-get update

    # Install Ruby development files (if not already installed)
    sudo apt-get install ruby-full ruby-dev

    # Install essential build tools
    sudo apt-get install build-essential

    # Install Rust and Cargo (required for polars-df compilation)
    curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh
    source $HOME/.cargo/env

    # Install CMake (often needed for Rust projects)
    sudo apt-get install cmake

Note: The polars-df gem compilation can take 15-20 minutes. Pre-built binaries are available for most platforms, so compilation is only needed if a pre-built binary isn't available for your system.

DSPy.rb has rapidly evolved from experimental to production-ready:

- ✅ JSON Parsing Reliability - Native OpenAI structured outputs, strategy selection, retry logic

- ✅ Type-Safe Strategy Configuration - Provider-optimized automatic strategy selection

- ✅ Core Module System - Predict, ChainOfThought, ReAct, CodeAct with type safety

- ✅ Production Observability - OpenTelemetry, New Relic, and Langfuse integration

- ✅ Optimization Framework - MIPROv2 algorithm with storage & persistence

- ✅ Comprehensive Multimodal Framework - Complete image analysis with DSPy::Image, type-safe bounding boxes, vision model integration

- ✅ Advanced Type System - T::Enum integration, union types for agentic workflows, complex type coercion

- ✅ Production-Ready Evaluation - Multi-factor metrics beyond accuracy, error-resilient evaluation pipelines

- ✅ Documentation Ecosystem - llms.txt for AI assistants, ADRs, blog articles, comprehensive examples

- ✅ API Maturation - Simplified idiomatic patterns, better error handling, production-proven designs

DSPy.rb has transitioned from feature building to production validation. The core framework is feature-complete and stable - now I'm focusing on real-world usage patterns, performance optimization, and ecosystem integration.

Current Focus Areas:

- 🚧 Production Patterns - Real-world usage validation and performance optimization

- 🚧 Ruby Ecosystem Integration - Rails integration, Sidekiq compatibility, deployment patterns

- 🚧 Scale Testing - High-volume usage, memory management, connection pooling

- 🚧 Error Recovery - Robust failure handling patterns for production environments

- 🚧 Model Context Protocol (MCP) - Integration with MCP ecosystem

- 🚧 Additional Provider Support - Azure OpenAI, local models beyond Ollama

- 🚧 Tool Ecosystem - Expanded tool integrations for ReAct agents

- 🚧 Community Examples - Real-world applications and case studies

- 🚧 Contributor Experience - Making it easier to contribute and extend

- 🚧 Performance Benchmarks - Comparative analysis vs other frameworks

v1.0 Philosophy: v1.0 will be released after extensive production battle-testing, not after checking off features. The API is already stable - v1.0 represents confidence in production reliability backed by real-world validation.

This project is licensed under the MIT License.

Alternative AI tools for dspy.rb

Similar Open Source Tools

transformerlab-app

Transformer Lab is an app that allows users to experiment with Large Language Models by providing features such as one-click download of popular models, finetuning across different hardware, RLHF and Preference Optimization, working with LLMs across different operating systems, chatting with models, using different inference engines, evaluating models, building datasets for training, calculating embeddings, providing a full REST API, running in the cloud, converting models across platforms, supporting plugins, embedded Monaco code editor, prompt editing, inference logs, all through a simple cross-platform GUI.

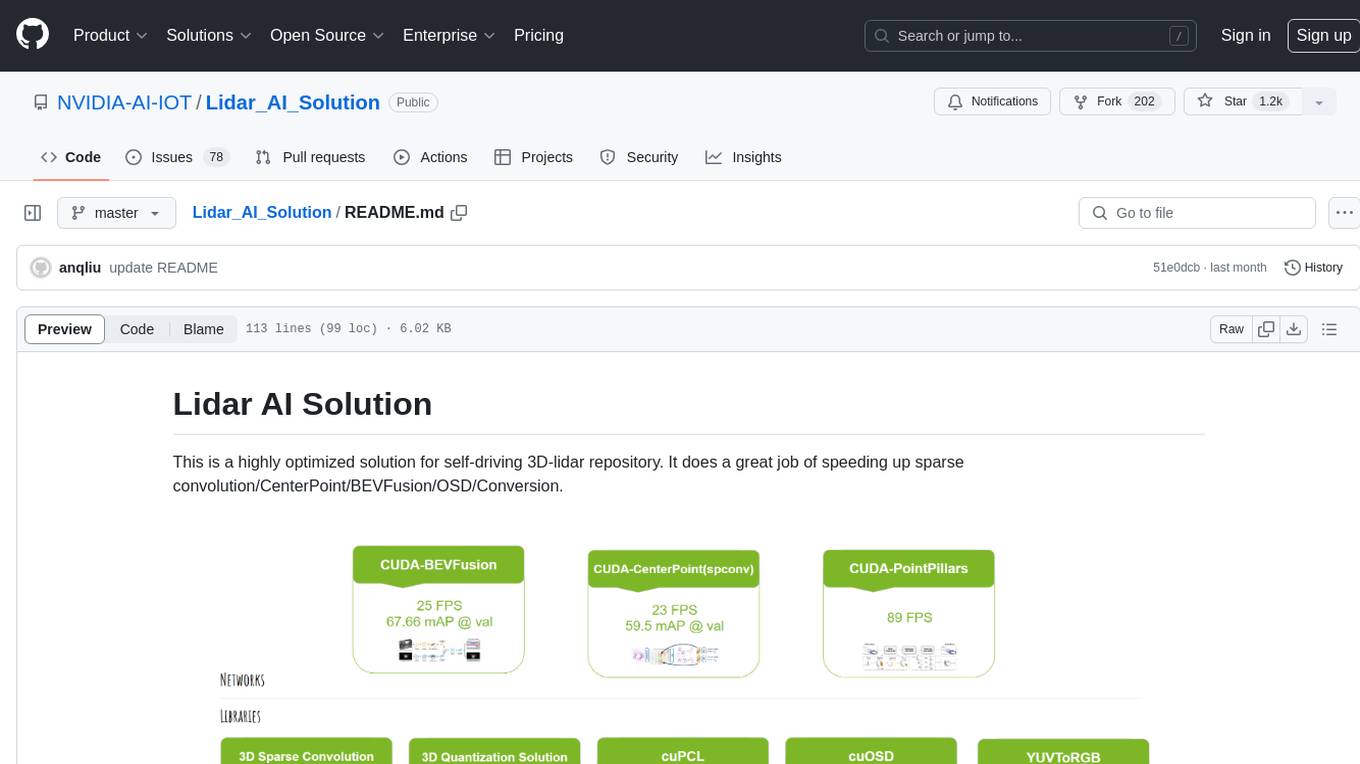

Lidar_AI_Solution

Lidar AI Solution is a highly optimized repository for self-driving 3D lidar, providing solutions for sparse convolution, BEVFusion, CenterPoint, OSD, and Conversion. It includes CUDA and TensorRT implementations for various tasks such as 3D sparse convolution, BEVFusion, CenterPoint, PointPillars, V2XFusion, cuOSD, cuPCL, and YUV to RGB conversion. The repository offers easy-to-use solutions, high accuracy, low memory usage, and quantization options for different tasks related to self-driving technology.

eureka-framework

The Eureka Framework is an open-source toolkit that leverages advanced Artificial Intelligence and Decentralized Science principles to revolutionize scientific discovery. It enables researchers, developers, and decentralized organizations to explore scientific papers, conduct AI-driven experiments, monetize research contributions, provide token-gated access to AI agents, and customize AI agents for specific research domains. The framework also offers features like a RESTful API, robust scheduler for task automation, and webhooks for real-time notifications, empowering users to automate research tasks, enhance productivity, and foster a committed research community.

llmchat

LLMChat is an all-in-one AI chat interface that supports multiple language models, offers a plugin library for enhanced functionality, enables web search capabilities, allows customization of AI assistants, provides text-to-speech conversion, ensures secure local data storage, and facilitates data import/export. It also includes features like knowledge spaces, prompt library, personalization, and can be installed as a Progressive Web App (PWA). The tech stack includes Next.js, TypeScript, Pglite, LangChain, Zustand, React Query, Supabase, Tailwind CSS, Framer Motion, Shadcn, and Tiptap. The roadmap includes upcoming features like speech-to-text and knowledge spaces.

MM-RLHF

MM-RLHF is a comprehensive project for aligning Multimodal Large Language Models (MLLMs) with human preferences. It includes a high-quality MLLM alignment dataset, a Critique-Based MLLM reward model, a novel alignment algorithm MM-DPO, and benchmarks for reward models and multimodal safety. The dataset covers image understanding, video understanding, and safety-related tasks with model-generated responses and human-annotated scores. The reward model generates critiques of candidate texts before assigning scores for enhanced interpretability. MM-DPO is an alignment algorithm that achieves performance gains with simple adjustments to the DPO framework. The project enables consistent performance improvements across 10 dimensions and 27 benchmarks for open-source MLLMs.

nodetool

NodeTool is a platform designed for AI enthusiasts, developers, and creators, providing a visual interface to access a variety of AI tools and models. It simplifies access to advanced AI technologies, offering resources for content creation, data analysis, automation, and more. With features like a visual editor, seamless integration with leading AI platforms, model manager, and API integration, NodeTool caters to both newcomers and experienced users in the AI field.

Ivy-Framework

Ivy-Framework is a powerful tool for building internal applications with AI assistance using C# codebase. It provides a CLI for project initialization, authentication integrations, database support, LLM code generation, secrets management, container deployment, hot reload, dependency injection, state management, routing, and external widget framework. Users can easily create data tables for sorting, filtering, and pagination. The framework offers a seamless integration of front-end and back-end development, making it ideal for developing robust internal tools and dashboards.

kelivo

Kelivo is a Flutter LLM Chat Client with modern design, dark mode, multi-language support, multi-provider support, custom assistants, multimodal input, markdown rendering, voice functionality, MCP support, web search integration, prompt variables, QR code sharing, data backup, and custom requests. It is built with Flutter and Dart, utilizes Provider for state management, Hive for local data storage, and supports dynamic theming and Markdown rendering. Kelivo is a versatile tool for creating and managing personalized AI assistants, supporting various input formats, and integrating with multiple search engines and AI providers.

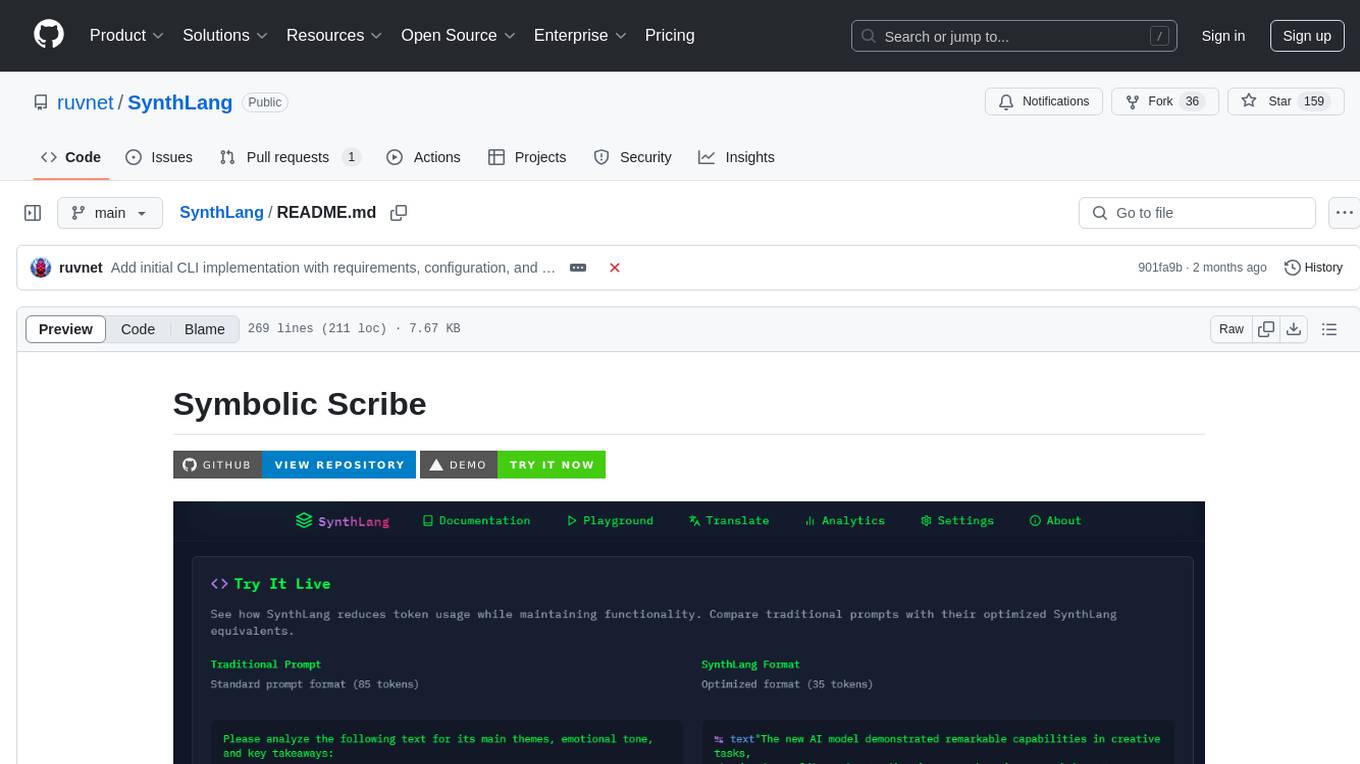

SynthLang

SynthLang is a tool designed to optimize AI prompts by reducing costs and improving processing speed. It brings academic rigor to prompt engineering, creating precise and powerful AI interactions. The tool includes core components like a Translator Engine, Performance Optimization, Testing Framework, and Technical Architecture. It offers mathematical precision, academic rigor, enhanced security, a modern interface, and instant testing. Users can integrate mathematical frameworks, model complex relationships, and apply structured prompts to various domains. Security features include API key management and data privacy. The tool also provides a CLI for prompt engineering and optimization capabilities.

tensorzero

TensorZero is an open-source platform that helps LLM applications graduate from API wrappers into defensible AI products. It enables a data & learning flywheel for LLMs by unifying inference, observability, optimization, and experimentation. The platform includes a high-performance model gateway, structured schema-based inference, observability, experimentation, and data warehouse for analytics. TensorZero Recipes optimize prompts and models, and the platform supports experimentation features and GitOps orchestration for deployment.

modern_ai_for_beginners

This repository provides a comprehensive guide to modern AI for beginners, covering both theoretical foundations and practical implementation. It emphasizes the importance of understanding both the mathematical principles and the code implementation of AI models. The repository includes resources on PyTorch, deep learning fundamentals, mathematical foundations, transformer-based LLMs, diffusion models, software engineering, and full-stack development. It also features tutorials on natural language processing with transformers, reinforcement learning, and practical deep learning for coders.

kitchenai

KitchenAI is an open-source toolkit designed to simplify AI development by serving as an AI backend and LLMOps solution. It aims to empower developers to focus on delivering results without being bogged down by AI infrastructure complexities. With features like simplifying AI integration, providing an AI backend, and empowering developers, KitchenAI streamlines the process of turning AI experiments into production-ready APIs. It offers built-in LLMOps features, is framework-agnostic and extensible, and enables faster time-to-production. KitchenAI is suitable for application developers, AI developers & data scientists, and platform & infra engineers, allowing them to seamlessly integrate AI into apps, deploy custom AI techniques, and optimize AI services with a modular framework. The toolkit eliminates the need to build APIs and infrastructure from scratch, making it easier to deploy AI code as production-ready APIs in minutes. KitchenAI also provides observability, tracing, and evaluation tools, and offers a Docker-first deployment approach for scalability and confidence.

chipper

Chipper provides a web interface, CLI, and architecture for pipelines, document chunking, web scraping, and query workflows. It is built with Haystack, Ollama, Hugging Face, Docker, Tailwind, and ElasticSearch, running locally or as a Dockerized service. Originally created to assist in creative writing, it now offers features like local Ollama and Hugging Face API, ElasticSearch embeddings, document splitting, web scraping, audio transcription, user-friendly CLI, and Docker deployment. The project aims to be educational, beginner-friendly, and a playground for AI exploration and innovation.

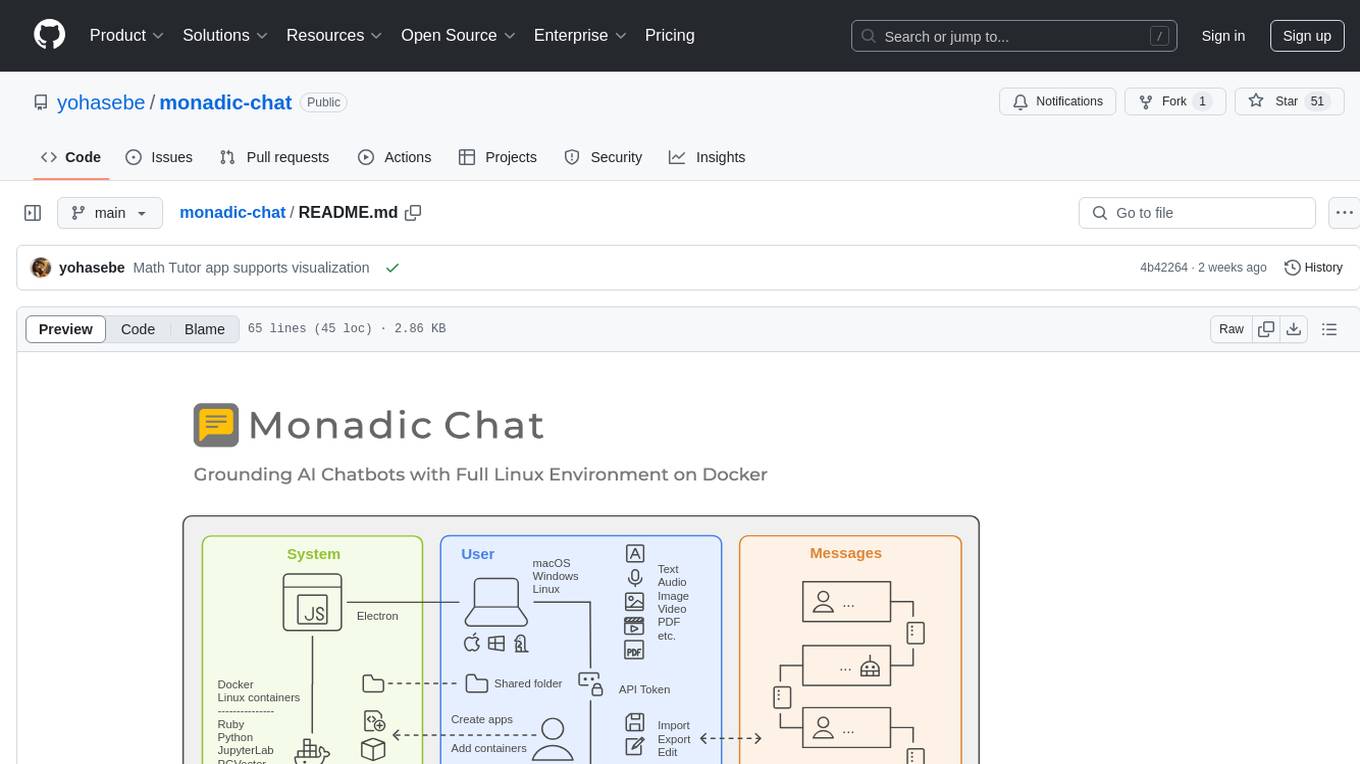

monadic-chat

Monadic Chat is a locally hosted web application designed to create and utilize intelligent chatbots. It provides a Linux environment on Docker to GPT and other LLMs, enabling the execution of advanced tasks that require external tools. The tool supports voice interaction, image and video recognition and generation, and AI-to-AI chat, making it useful for using AI and developing various applications. It is available for Mac, Windows, and Linux (Debian/Ubuntu) with easy-to-use installers.

ComfyUI-Copilot

ComfyUI-Copilot is an intelligent assistant built on the Comfy-UI framework that simplifies and enhances the AI algorithm debugging and deployment process through natural language interactions. It offers intuitive node recommendations, workflow building aids, and model querying services to streamline development processes. With features like interactive Q&A bot, natural language node suggestions, smart workflow assistance, and model querying, ComfyUI-Copilot aims to lower the barriers to entry for beginners, boost development efficiency with AI-driven suggestions, and provide real-time assistance for developers.

For similar tasks

tuui

TUUI is a desktop MCP client designed for accelerating AI adoption through the Model Context Protocol (MCP) and enabling cross-vendor LLM API orchestration. It is an LLM chat desktop application based on MCP, created using AI-generated components with strict syntax checks and naming conventions. The tool integrates AI tools via MCP, orchestrates LLM APIs, supports automated application testing, TypeScript, multilingual, layout management, global state management, and offers quick support through the GitHub community and official documentation.

log10

Log10 is a one-line Python integration to manage your LLM data. It helps you log both closed and open-source LLM calls, compare and identify the best models and prompts, store feedback for fine-tuning, collect performance metrics such as latency and usage, and perform analytics and monitor compliance for LLM powered applications. Log10 offers various integration methods, including a python LLM library wrapper, the Log10 LLM abstraction, and callbacks, to facilitate its use in both existing production environments and new projects. Pick the one that works best for you. Log10 also provides a copilot that can help you with suggestions on how to optimize your prompt, and a feedback feature that allows you to add feedback to your completions. Additionally, Log10 provides prompt provenance, session tracking and call stack functionality to help debug prompt chains. With Log10, you can use your data and feedback from users to fine-tune custom models with RLHF, and build and deploy more reliable, accurate and efficient self-hosted models. Log10 also supports collaboration, allowing you to create flexible groups to share and collaborate over all of the above features.

LMOps

LMOps is a research initiative focusing on fundamental research and technology for building AI products with foundation models, particularly enabling AI capabilities with Large Language Models (LLMs) and Generative AI models. The project explores various aspects such as prompt optimization, longer context handling, LLM alignment, acceleration of LLMs, LLM customization, and understanding in-context learning. It also includes tools like Promptist for automatic prompt optimization, Structured Prompting for efficient long-sequence prompts consumption, and X-Prompt for extensible prompts beyond natural language. Additionally, LLMA accelerators are developed to speed up LLM inference by referencing and copying text spans from documents. The project aims to advance technologies that facilitate prompting language models and enhance the performance of LLMs in various scenarios.

awesome-llm-json

This repository is an awesome list dedicated to resources for using Large Language Models (LLMs) to generate JSON or other structured outputs. It includes terminology explanations, hosted and local models, Python libraries, blog articles, videos, Jupyter notebooks, and leaderboards related to LLMs and JSON generation. The repository covers various aspects such as function calling, JSON mode, guided generation, and tool usage with different providers and models.

PromptAgent

PromptAgent is a repository for a novel automatic prompt optimization method that crafts expert-level prompts using language models. It provides a principled framework for prompt optimization by unifying prompt sampling and rewarding using MCTS algorithm. The tool supports different models like openai, palm, and huggingface models. Users can run PromptAgent to optimize prompts for specific tasks by strategically sampling model errors, generating error feedbacks, simulating future rewards, and searching for high-reward paths leading to expert prompts.

Magic_Words

Magic_Words is a repository containing code for the paper 'What's the Magic Word? A Control Theory of LLM Prompting'. It implements greedy back generation and greedy coordinate gradient (GCG) to find optimal control prompts (magic words). Users can set up a virtual environment, install the package and dependencies, and run example scripts for pointwise control and optimizing prompts for datasets. The repository provides scripts for finding optimal control prompts for question-answer pairs and dataset optimization using the GCG algorithm.

app_generative_ai

This repository contains course materials for T81 559: Applications of Generative Artificial Intelligence at Washington University in St. Louis. The course covers practical applications of Large Language Models (LLMs) and text-to-image networks using Python. Students learn about generative AI principles, LangChain, Retrieval-Augmented Generation (RAG) model, image generation techniques, fine-tuning neural networks, and prompt engineering. Ideal for students, researchers, and professionals in computer science, the course offers a transformative learning experience in the realm of Generative AI.

For similar jobs

alan-sdk-ios

Alan AI SDK for iOS is a powerful tool that allows developers to quickly create AI agents for their iOS apps. With Alan AI Platform, users can easily design, embed, and host conversational experiences in their applications. The platform offers a web-based IDE called Alan AI Studio for creating dialog scenarios, lightweight SDKs for embedding AI agents, and a backend powered by top-notch speech recognition and natural language understanding technologies. Alan AI enables human-like conversations and actions through voice commands, with features like on-the-fly updates, dialog flow testing, and analytics.

EvoMaster

EvoMaster is an open-source AI-driven tool that automatically generates system-level test cases for web/enterprise applications. It uses an Evolutionary Algorithm and Dynamic Program Analysis to evolve test cases, maximizing code coverage and fault detection. The tool supports REST, GraphQL, and RPC APIs, with whitebox testing for JVM-compiled languages. It generates JUnit tests, detects faults, handles SQL databases, and supports authentication. EvoMaster has been funded by the European Research Council and the Research Council of Norway.

nous

Nous is an open-source TypeScript platform for autonomous AI agents and LLM based workflows. It aims to automate processes, support requests, review code, assist with refactorings, and more. The platform supports various integrations, multiple LLMs/services, CLI and web interface, human-in-the-loop interactions, flexible deployment options, observability with OpenTelemetry tracing, and specific agents for code editing, software engineering, and code review. It offers advanced features like reasoning/planning, memory and function call history, hierarchical task decomposition, and control-loop function calling options. Nous is designed to be a flexible platform for the TypeScript community to expand and support different use cases and integrations.

melodisco

Melodisco is an AI music player that allows users to listen to music and manage playlists. It provides a user-friendly interface for music playback and organization. Users can deploy Melodisco with Vercel or Docker for easy setup. Local development instructions are provided for setting up the project environment. The project credits various tools and libraries used in its development, such as Next.js, Tailwind CSS, and Stripe. Melodisco is a versatile tool for music enthusiasts looking for an AI-powered music player with features like authentication, payment integration, and multi-language support.

kobold_assistant

Kobold-Assistant is a fully offline voice assistant interface to KoboldAI's large language model API. It can work online with the KoboldAI horde and online speech-to-text and text-to-speech models. The assistant, called Jenny by default, uses the latest coqui 'jenny' text to speech model and openAI's whisper speech recognition. Users can customize the assistant name, speech-to-text model, text-to-speech model, and prompts through configuration. The tool requires system packages like GCC, portaudio development libraries, and ffmpeg, along with Python >=3.7, <3.11, and runs on Ubuntu/Debian systems. Users can interact with the assistant through commands like 'serve' and 'list-mics'.

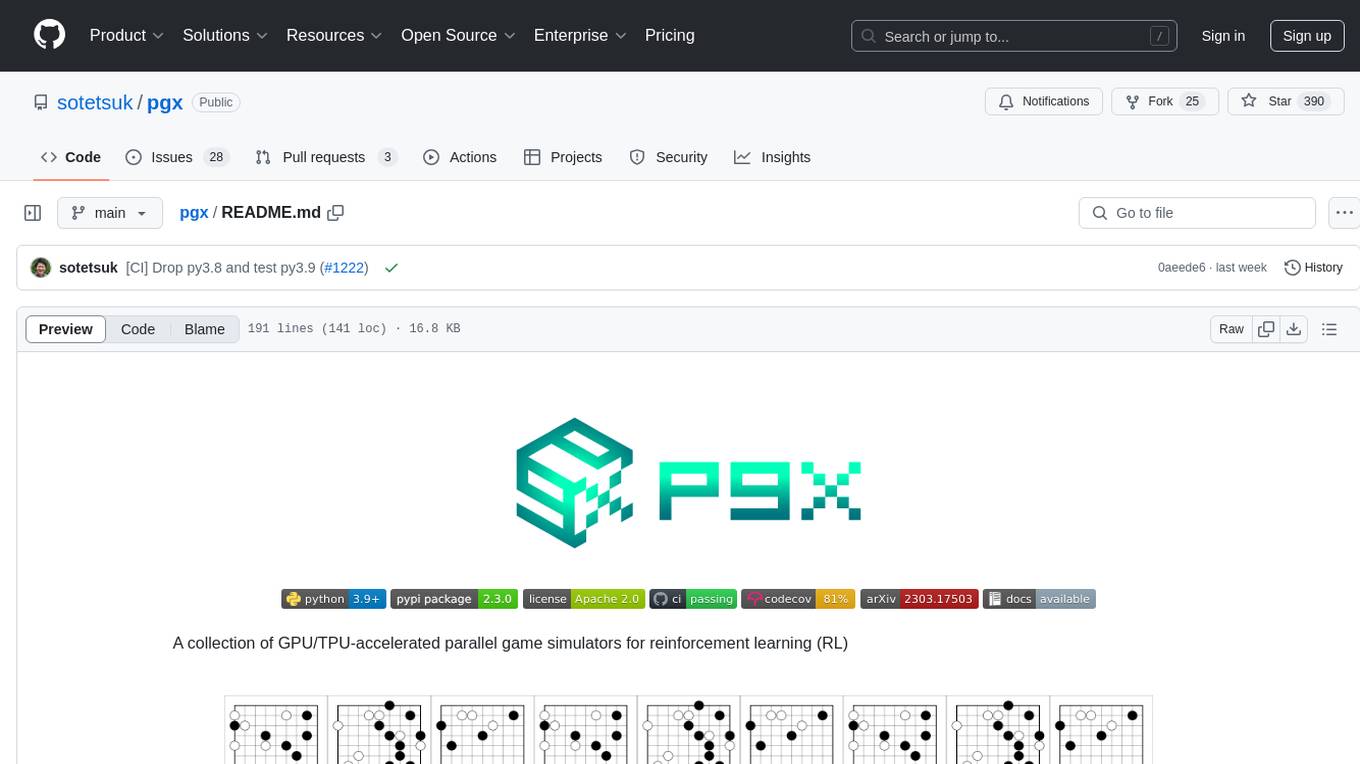

pgx

Pgx is a collection of GPU/TPU-accelerated parallel game simulators for reinforcement learning (RL). It provides JAX-native game simulators for various games like Backgammon, Chess, Shogi, and Go, offering super fast parallel execution on accelerators and beautiful visualization in SVG format. Pgx focuses on faster implementations while also being sufficiently general, allowing environments to be converted to the AEC API of PettingZoo for running Pgx environments through the PettingZoo API.

sophia

Sophia is an open-source TypeScript platform designed for autonomous AI agents and LLM based workflows. It aims to automate processes, review code, assist with refactorings, and support various integrations. The platform offers features like advanced autonomous agents, reasoning/planning inspired by Google's Self-Discover paper, memory and function call history, adaptive iterative planning, and more. Sophia supports multiple LLMs/services, CLI and web interface, human-in-the-loop interactions, flexible deployment options, observability with OpenTelemetry tracing, and specific agents for code editing, software engineering, and code review. It provides a flexible platform for the TypeScript community to expand and support various use cases and integrations.

skyeye

SkyEye is an AI-powered Ground Controlled Intercept (GCI) bot designed for the flight simulator Digital Combat Simulator (DCS). It serves as an advanced replacement for the in-game E-2, E-3, and A-50 AI aircraft, offering modern voice recognition, natural-sounding voices, real-world brevity and procedures, a wide range of commands, and intelligent battlespace monitoring. The tool uses Speech-To-Text and Text-To-Speech technology, can run locally or on a cloud server, and is production-ready software used by various DCS communities.