mcp-for-beginners

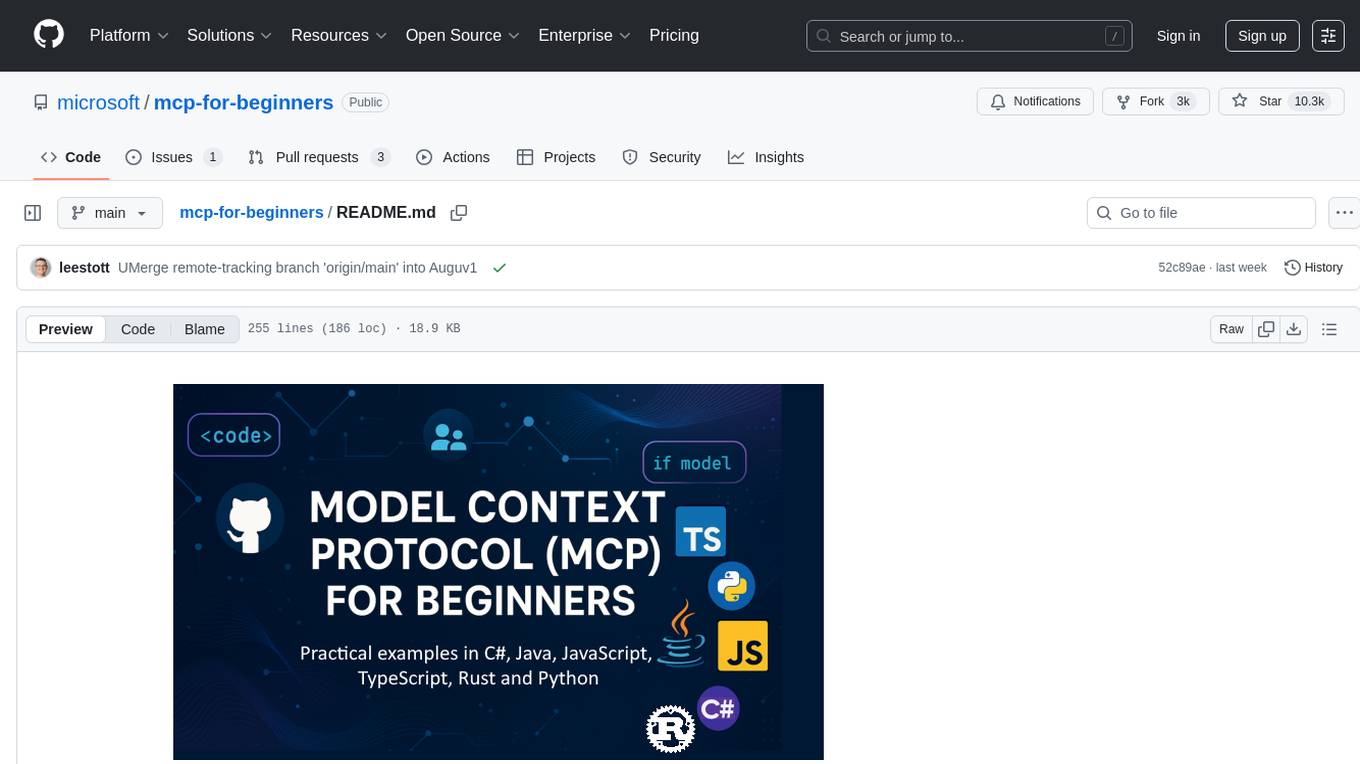

This open-source curriculum introduces the fundamentals of Model Context Protocol (MCP) through real-world, cross-language examples in .NET, Java, TypeScript, JavaScript, Rust and Python. Designed for developers, it focuses on practical techniques for building modular, scalable, and secure AI workflows from session setup to service orchestration.

Stars: 10696

The Model Context Protocol (MCP) Curriculum for Beginners is an open-source curriculum that teaches MCP, a protocol designed to standardize interactions between AI models and client applications. It offers a structured learning path with practical coding examples and real-world use cases in popular programming languages like C#, Java, JavaScript, Rust, Python, and TypeScript. Whether you're an AI developer, system architect, or software engineer, this guide provides comprehensive resources for mastering MCP fundamentals and implementation strategies.

README:

Follow these steps to get started using these resources:

- Fork the Repository: Click

- Clone the Repository: `git clone https://github.com/microsoft/mcp-for-beginners.git`

- Join The Azure AI Foundry Discord and meet experts and fellow developers

Arabic | Bengali | Bulgarian | Burmese (Myanmar) | Chinese (Simplified) | Chinese (Traditional, Hong Kong) | Chinese (Traditional, Macau) | Chinese (Traditional, Taiwan) | Croatian | Czech | Danish | Dutch | Finnish | French | German | Greek | Hebrew | Hindi | Hungarian | Indonesian | Italian | Japanese | Korean | Malay | Marathi | Nepali | Norwegian | Persian (Farsi) | Polish | Portuguese (Brazil) | Portuguese (Portugal) | Punjabi (Gurmukhi) | Romanian | Russian | Serbian (Cyrillic) | Slovak | Slovenian | Spanish | Swahili | Swedish | Tagalog (Filipino) | Thai | Turkish | Ukrainian | Urdu | Vietnamese

The Model Context Protocol (MCP) is a cutting-edge framework designed to standardize interactions between AI models and client applications. This open-source curriculum offers a structured learning path, complete with practical coding examples and real-world use cases, across popular programming languages including C#, Java, JavaScript, TypeScript, and Python.

Whether you're an AI developer, system architect, or software engineer, this guide is your comprehensive resource for mastering MCP fundamentals and implementation strategies.
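To make the idea of "standardized interactions" concrete, here is a minimal sketch of the message shapes involved. MCP messages are JSON-RPC 2.0, and tool access uses the `tools/list` and `tools/call` methods. Everything else below is an assumption made for illustration: the `add` tool, the `TOOLS` registry, and the `handle_request` helper are hypothetical, and the sketch skips the initialization handshake, input schemas, and transports that a real server built with an MCP SDK would handle.

```python
import json

# Hypothetical in-memory tool registry (illustration only; real servers
# register tools through an MCP SDK and also publish an input schema).
TOOLS = {
    "add": {
        "description": "Add two numbers",
        "handler": lambda args: args["a"] + args["b"],
    },
}


def _response(request_id, *, result=None, error=None):
    """Build a JSON-RPC 2.0 response string with either a result or an error."""
    body = {"jsonrpc": "2.0", "id": request_id}
    if error is not None:
        body["error"] = error
    else:
        body["result"] = result
    return json.dumps(body)


def handle_request(raw):
    """Handle one JSON-RPC 2.0 request string and return the response string."""
    request = json.loads(raw)
    request_id = request.get("id")
    method = request.get("method")
    params = request.get("params", {})

    if method == "tools/list":
        tools = [
            {"name": name, "description": tool["description"]}
            for name, tool in TOOLS.items()
        ]
        return _response(request_id, result={"tools": tools})

    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            # -32602 is the JSON-RPC "invalid params" code.
            return _response(
                request_id, error={"code": -32602, "message": "Unknown tool"}
            )
        value = tool["handler"](params.get("arguments", {}))
        # Tool results are returned as a list of content items.
        return _response(
            request_id, result={"content": [{"type": "text", "text": str(value)}]}
        )

    # -32601 is the JSON-RPC "method not found" code.
    return _response(
        request_id, error={"code": -32601, "message": "Method not found"}
    )


if __name__ == "__main__":
    call = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "add", "arguments": {"a": 2, "b": 3}},
    }
    print(handle_request(json.dumps(call)))
```

A client written in any language can send the same request and parse the same response, which is the point of agreeing on a protocol. The curriculum's own samples use the official SDKs rather than hand-written handlers like this one.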

- 📘 MCP Documentation – Detailed tutorials and user guides

- 📜 MCP Specification – Protocol architecture and technical references

- 📜 Original MCP Specification – Legacy technical references (may contain additional details)

- 🧑💻 MCP GitHub Repository – Open-source SDKs, tools, and code samples

- 🌐 MCP Community – Join discussions and contribute to the community

| Module | Topic | Description | Link |
|---|---|---|---|
| Module 1-3: Fundamentals | | | |
| 00 | Introduction to MCP | Overview of the Model Context Protocol and its significance in AI pipelines | Read more |
| 01 | Core Concepts Explained | In-depth exploration of core MCP concepts | Read more |
| 02 | Security in MCP | Security threats and best practices | Read more |
| 03 | Getting Started with MCP | Environment setup, basic servers/clients, integration | Read more |
| Module 3: Building Your First Server & Client | | | |
| 3.1 | First Server | Create your first MCP server | Guide |
| 3.2 | First Client | Develop a basic MCP client | Guide |
| 3.3 | Client with LLM | Integrate large language models | Guide |
| 3.4 | VS Code Integration | Consume MCP servers in VS Code | Guide |
| 3.5 | stdio Server | Create servers using stdio transport | Guide |
| 3.6 | HTTP Streaming | Implement HTTP streaming in MCP | Guide |
| 3.7 | AI Toolkit | Use AI Toolkit with MCP | Guide |
| 3.8 | Testing | Test your MCP server implementation | Guide |
| 3.9 | Deployment | Deploy MCP servers to production | Guide |
| Module 4-5: Practical & Advanced | | | |
| 04 | Practical Implementation | SDKs, debugging, testing, reusable prompt templates | Read more |
| 05 | Advanced Topics in MCP | Multi-modal AI, scaling, enterprise use | Read more |
| 5.1 | Azure Integration | MCP Integration with Azure | Guide |
| 5.2 | Multi-modality | Working with multiple modalities | Guide |
| 5.3 | OAuth2 Demo | Implement OAuth2 authentication | Guide |
| 5.4 | Root Contexts | Understand and implement root contexts | Guide |
| 5.5 | Routing | MCP routing strategies | Guide |
| 5.6 | Sampling | Sampling techniques in MCP | Guide |
| 5.7 | Scaling | Scale MCP implementations | Guide |
| 5.8 | Security | Advanced security considerations | Guide |
| 5.9 | Web Search | Implement web search capabilities | Guide |
| 5.10 | Realtime Streaming | Build realtime streaming functionality | Guide |
| 5.11 | Realtime Search | Implement realtime search | Guide |
| 5.12 | Entra ID Auth | Authentication with Microsoft Entra ID | Guide |
| 5.13 | Foundry Integration | Integrate with Azure AI Foundry | Guide |
| 5.14 | Context Engineering | Techniques for effective context engineering | Guide |
| 5.15 | MCP Custom Transport | Custom Transport implementations | Guide |
| Module 6-10: Community & Best Practices | | | |
| 06 | Community Contributions | How to contribute to the MCP ecosystem | Guide |
| 07 | Insights from Early Adoption | Real-world implementation stories | Guide |
| 08 | Best Practices for MCP | Performance, fault-tolerance, resilience | Guide |
| 09 | MCP Case Studies | Practical implementation examples | Guide |
| 10 | Hands-on Workshop | Building an MCP Server with AI Toolkit | Lab |

| Language | Description | Link |
|---|---|---|
| C# | MCP Server Example | View Code |
| Java | MCP Calculator | View Code |
| JavaScript | MCP Demo | View Code |
| Python | MCP Server | View Code |
| TypeScript | MCP Example | View Code |
| Rust | MCP Example | View Code |

| Language | Description | Link |
|---|---|---|
| C# | Advanced Sample | View Code |
| Java with Spring | Container App Example | View Code |
| JavaScript | Advanced Sample | View Code |
| Python | Complex Implementation | View Code |
| TypeScript | Container Sample | View Code |

To get the most out of this curriculum, you should have:

- Basic knowledge of programming in at least one of the following languages: C#, Java, JavaScript, Python, or TypeScript

- Understanding of client-server model and APIs

- Familiarity with REST and HTTP concepts

- (Optional) Background in AI/ML concepts

- Joining our community discussions for support

This repository includes several resources to help you navigate and learn effectively:

A comprehensive Study Guide is available to help you navigate this repository effectively. The guide includes:

- A visual curriculum map showing all topics covered

- Detailed breakdown of each repository section

- Guidance on how to use sample projects

- Recommended learning paths for different skill levels

- Additional resources to complement your learning journey

We maintain a detailed Changelog that tracks all significant updates to the curriculum materials, including:

- New content additions

- Structural changes

- Feature improvements

- Documentation updates

Each lesson in this guide includes:

- Clear explanations of MCP concepts

- Live code examples in multiple languages

- Exercises to build real MCP applications

- Extra resources for advanced learners

Get ready for two days of deep technical insight, community connection, and hands-on learning at MCP Dev Days, a virtual event dedicated to the Model Context Protocol (MCP) — the emerging standard that bridges AI models and the tools they rely on. You can watch MCP Dev Days by registering on our event page: https://aka.ms/mcpdevdays.

Day 1 is all about empowering developers to use MCP in their developer workflow and celebrating the amazing MCP community. We’ll be joined by community members and partners such as Arcade, Block, Okta, and Neon to see how they are collaborating with Microsoft to shape an open, extensible MCP ecosystem.

- Real-world demos across VS Code, Visual Studio, GitHub Copilot, and popular community tools

- Practical, context-driven dev workflows

- Community-led sessions and insights

Whether you’re just getting started with MCP or already building with it, Day 1 will set the stage with inspiration and actionable takeaways.

Day 2 is for MCP builders. We’ll go deep into implementation strategies and best practices for creating MCP servers and integrating MCP into your AI workflows.

- Building MCP Servers and integrating them into agent experiences

- Prompt-driven development

- Security best practices

- Using building blocks like Functions, ACA, and API Management

- Registry alignment and tooling (1P + 3P)

If you’re a developer, tool builder, or AI product strategist, this day is packed with the insights you need to build scalable, secure, and future-ready MCP solutions.

Learn in intensive video sessions how to create MCP servers, integrate with VS Code, and deploy professionally on Azure based on content from the MCP for Beginners curriculum. Walk away with practical skills in a technology that major companies are already using.

Let's learn about the Model Context Protocol (MCP), a cutting-edge framework designed to standardize interactions between AI models and client applications. Through this beginner-friendly session, we'll introduce you to MCP and guide you through creating your first MCP server.

JavaScript: https://aka.ms/letslearnmcp-javascript

Thanks to Microsoft Valued Professional Shivam Goyal for contributing important code samples.

This content is licensed under the MIT License. For terms and conditions, see the LICENSE.

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact [email protected] with any additional questions or comments.

The repository is organized as follows:

- Core Curriculum (00-10): The main content organized in eleven sequential modules, numbered 00 through 10

- images/: Diagrams and illustrations used throughout the curriculum

- translations/: Multi-language support with automated translations

- translated_images/: Localized versions of diagrams and illustrations

- study_guide.md: Comprehensive guide to navigating the repository

- changelog.md: Record of all significant changes to the curriculum materials

- mcp.json: Configuration file for MCP specification

- CODE_OF_CONDUCT.md, LICENSE, SECURITY.md, SUPPORT.md: Project governance documents

Our team produces other courses! Check out:

- AI Agents For Beginners

- Generative AI for Beginners using .NET

- Generative AI for Beginners using JavaScript

- Generative AI for Beginners

- Generative AI for Beginners using Java

- ML for Beginners

- Data Science for Beginners

- AI for Beginners

- Cybersecurity for Beginners

- Web Dev for Beginners

- IoT for Beginners

- XR Development for Beginners

- Mastering GitHub Copilot for AI Paired Programming

- Mastering GitHub Copilot for C#/.NET Developers

- Choose Your Own Copilot Adventure

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos is subject to those third-parties' policies.

Alternative AI tools for mcp-for-beginners

Similar Open Source Tools

ai-agents-for-beginners

AI Agents for Beginners is a course that covers the fundamentals of building AI Agents. It consists of 10 lessons with code examples using Azure AI Foundry and GitHub Model Catalogs. The course utilizes AI Agent frameworks and services from Microsoft, such as Azure AI Agent Service, Semantic Kernel, and AutoGen. Learners can access written lessons, Python code samples, and additional learning resources for each lesson. The course encourages contributions and suggestions from the community and provides multi-language support for learners worldwide.

AI-For-Beginners

AI-For-Beginners is a comprehensive 12-week, 24-lesson curriculum designed by experts at Microsoft to introduce beginners to the world of Artificial Intelligence (AI). The curriculum covers various topics such as Symbolic AI, Neural Networks, Computer Vision, Natural Language Processing, Genetic Algorithms, and Multi-Agent Systems. It includes hands-on lessons, quizzes, and labs using popular frameworks like TensorFlow and PyTorch. The focus is on providing a foundational understanding of AI concepts and principles, making it an ideal starting point for individuals interested in AI.

awesome-generative-ai-data-scientist

A curated list of 50+ resources to help you become a Generative AI Data Scientist. This repository includes resources on building GenAI applications with Large Language Models (LLMs), and deploying LLMs and GenAI with Cloud-based solutions.

MiniCPM-V-CookBook

MiniCPM-V & o Cookbook is a comprehensive repository for building multimodal AI applications effortlessly. It provides easy-to-use documentation, supports a wide range of users, and offers versatile deployment scenarios. The repository includes live demonstrations, inference recipes for vision and audio capabilities, fine-tuning recipes, serving recipes, quantization recipes, and a framework support matrix. Users can customize models, deploy them efficiently, and compress models to improve efficiency. The repository also showcases awesome works using MiniCPM-V & o and encourages community contributions.

generative-ai-for-beginners

This course has 18 lessons. Each lesson covers its own topic, so start wherever you like! Lessons are labeled either "Learn" lessons explaining a Generative AI concept or "Build" lessons that explain a concept and code examples in both **Python** and **TypeScript** when possible. Each lesson also includes a "Keep Learning" section with additional learning tools.

**What You Need**

- Access to the Azure OpenAI Service **OR** OpenAI API - _Only required to complete coding lessons_

- Basic knowledge of Python or TypeScript is helpful - For absolute beginners, check out these Python and TypeScript courses.

- A GitHub account to fork this entire repo to your own GitHub account

We have created a **Course Setup** lesson to help you with setting up your development environment. Don't forget to star (🌟) this repo to find it easier later.

## 🧠 Ready to Deploy?

If you are looking for more advanced code samples, check out our collection of Generative AI Code Samples in both **Python** and **TypeScript**.

## 🗣️ Meet Other Learners, Get Support

Join our official AI Discord server to meet and network with other learners taking this course and get support.

## 🚀 Building a Startup?

Sign up for Microsoft for Startups Founders Hub to receive **free OpenAI credits** and up to **$150k towards Azure credits to access OpenAI models through Azure OpenAI Services**.

## 🙏 Want to help?

Do you have suggestions or found spelling or code errors? Raise an issue or create a pull request.

## 📂 Each lesson includes:

- A short video introduction to the topic

- A written lesson located in the README

- Python and TypeScript code samples supporting Azure OpenAI and OpenAI API

- Links to extra resources to continue your learning

## 🗃️ Lessons

| | Lesson Link | Description | Additional Learning |
| :-: | :-: | :-: | --- |
| 00 | Course Setup | **Learn:** How to Setup Your Development Environment | Learn More |
| 01 | Introduction to Generative AI and LLMs | **Learn:** Understanding what Generative AI is and how Large Language Models (LLMs) work. | Learn More |
| 02 | Exploring and comparing different LLMs | **Learn:** How to select the right model for your use case | Learn More |
| 03 | Using Generative AI Responsibly | **Learn:** How to build Generative AI Applications responsibly | Learn More |
| 04 | Understanding Prompt Engineering Fundamentals | **Learn:** Hands-on Prompt Engineering Best Practices | Learn More |
| 05 | Creating Advanced Prompts | **Learn:** How to apply prompt engineering techniques that improve the outcome of your prompts. | Learn More |
| 06 | Building Text Generation Applications | **Build:** A text generation app using Azure OpenAI | Learn More |
| 07 | Building Chat Applications | **Build:** Techniques for efficiently building and integrating chat applications. | Learn More |
| 08 | Building Search Apps Vector Databases | **Build:** A search application that uses Embeddings to search for data. | Learn More |
| 09 | Building Image Generation Applications | **Build:** An image generation application | Learn More |
| 10 | Building Low Code AI Applications | **Build:** A Generative AI application using Low Code tools | Learn More |
| 11 | Integrating External Applications with Function Calling | **Build:** What is function calling and its use cases for applications | Learn More |
| 12 | Designing UX for AI Applications | **Learn:** How to apply UX design principles when developing Generative AI Applications | Learn More |
| 13 | Securing Your Generative AI Applications | **Learn:** The threats and risks to AI systems and methods to secure these systems. | Learn More |
| 14 | The Generative AI Application Lifecycle | **Learn:** The tools and metrics to manage the LLM Lifecycle and LLMOps | Learn More |
| 15 | Retrieval Augmented Generation (RAG) and Vector Databases | **Build:** An application using a RAG Framework to retrieve embeddings from a Vector Database | Learn More |
| 16 | Open Source Models and Hugging Face | **Build:** An application using open source models available on Hugging Face | Learn More |
| 17 | AI Agents | **Build:** An application using an AI Agent Framework | Learn More |
| 18 | Fine-Tuning LLMs | **Learn:** The what, why and how of fine-tuning LLMs | Learn More |

redis-ai-resources

A curated repository of code recipes, demos, and resources for basic and advanced Redis use cases in the AI ecosystem. It includes demos for ArxivChatGuru, Redis VSS, Vertex AI & Redis, Agentic RAG, ArXiv Search, and Product Search. Recipes cover topics like Getting started with RAG, Semantic Cache, Advanced RAG, and Recommendation systems. The repository also provides integrations/tools like RedisVL, AWS Bedrock, LangChain Python, LangChain JS, LlamaIndex, Semantic Kernel, RelevanceAI, and DocArray. Additional content includes blog posts, talks, reviews, and documentation related to Vector Similarity Search, AI-Powered Document Search, Vector Databases, Real-Time Product Recommendations, and more. Benchmarks compare Redis against other Vector Databases and ANN benchmarks. Documentation includes QuickStart guides, official literature for Vector Similarity Search, Redis-py client library docs, Redis Stack documentation, and Redis client list.

ai-samples

AI Samples for .NET is a repository containing various samples demonstrating how to use AI in .NET applications. It provides quickstarts using Semantic Kernel and Azure OpenAI SDK, covers LLM Core Concepts, End to End Examples, Local Models, Local Embedding Models, Tokenizers, Vector Databases, and Reference Examples. The repository showcases different AI-related projects and tools for developers to explore and learn from.

RustySEO

RustySEO is a free, modern SEO/GEO toolkit designed to help users crawl and analyze websites and server logs without crawl limits. It is an all-in-one, cross-platform marketing toolkit for comprehensive SEO & GEO analysis, providing actionable insights into marketing and SEO strategies. The tool offers features such as shallow & deep crawl, technical diagnostics, on-page SEO analysis, dashboards, reporting, topic and keyword generators, AI chatbot, crawl history, image conversion and optimization, and more. RustySEO aims to be a robust, free alternative to costly commercial SEO tools, with integrations like Google PageSpeed Insights, Google Gemini, and more.

opik

Comet Opik is a repository containing two main services: a frontend and a backend. It provides a Python SDK for easy installation. Users can run the full application locally with minikube, following specific installation prerequisites. The repository structure includes directories for applications like Opik backend, with detailed instructions available in the README files. Users can manage the installation using simple k8s commands and interact with the application via URLs for checking the running application and API documentation. The repository aims to facilitate local development and testing of Opik using Kubernetes technology.

kodus-ai

Kodus AI is an open-source AI agent designed to review code like a real teammate, providing personalized, context-aware code reviews to help teams catch bugs, enforce best practices, and maintain a clean codebase. It seamlessly integrates with Git workflows, learns team coding patterns, and offers custom review policies. Kodus supports all programming languages with semantic and AST analysis, enhancing code review accuracy and providing actionable feedback. The tool is available in Cloud and Self-Hosted editions, offering features like self-hosting, unlimited users, custom integrations, and advanced compliance support.

together-cookbook

The Together Cookbook is a collection of code and guides designed to help developers build with open source models using Together AI. The recipes provide examples on how to chain multiple LLM calls, create agents that route tasks to specialized models, run multiple LLMs in parallel, break down tasks into parallel subtasks, build agents that iteratively improve responses, perform LoRA fine-tuning and inference, fine-tune LLMs for repetition, improve summarization capabilities, fine-tune LLMs on multi-step conversations, implement retrieval-augmented generation, conduct multimodal search and conditional image generation, visualize vector embeddings, improve search results with rerankers, implement vector search with embedding models, extract structured text from images, summarize and evaluate outputs with LLMs, generate podcasts from PDF content, and get LLMs to generate knowledge graphs.

Groma

Groma is a grounded multimodal assistant that excels in region understanding and visual grounding. It can process user-defined region inputs and generate contextually grounded long-form responses. The tool presents a unique paradigm for multimodal large language models, focusing on visual tokenization for localization. Groma achieves state-of-the-art performance in referring expression comprehension benchmarks. The tool provides pretrained model weights and instructions for data preparation, training, inference, and evaluation. Users can customize training by starting from intermediate checkpoints. Groma is designed to handle tasks related to detection pretraining, alignment pretraining, instruction finetuning, instruction following, and more.

CS7320-AI

CS7320-AI is a repository containing lecture materials, simple Python code examples, and assignments for the course CS 5/7320 Artificial Intelligence. The code examples cover various chapters of the textbook 'Artificial Intelligence: A Modern Approach' by Russell and Norvig. The repository focuses on basic AI concepts rather than advanced implementation techniques. It includes HOWTO guides for installing Python, working on assignments, and using AI with Python.

LLM-PowerHouse-A-Curated-Guide-for-Large-Language-Models-with-Custom-Training-and-Inferencing

LLM-PowerHouse is a comprehensive and curated guide designed to empower developers, researchers, and enthusiasts to harness the true capabilities of Large Language Models (LLMs) and build intelligent applications that push the boundaries of natural language understanding. This GitHub repository provides in-depth articles, codebase mastery, LLM PlayLab, and resources for cost analysis and network visualization. It covers various aspects of LLMs, including NLP, models, training, evaluation metrics, open LLMs, and more. The repository also includes a collection of code examples and tutorials to help users build and deploy LLM-based applications.

Awesome-AITools

This repo collects AI-related utilities.

All Categories:

- ChatGPT and other closed-source LLMs

- AI Search engine

- Open Source LLMs

- GPT/LLMs Applications

- LLM training platform

- Applications that integrate multiple LLMs

- AI Agent

- Writing

- Programming Development

- Translation

- AI Conversation or AI Voice Conversation

- Image Creation

- Speech Recognition

- Text To Speech

- Voice Processing

- AI generated music or sound effects

- Speech translation

- Video Creation

- Video Content Summary

- OCR (Optical Character Recognition)

For similar tasks

mcp-go

MCP Go is a Go implementation of the Model Context Protocol (MCP), facilitating seamless integration between LLM applications and external data sources and tools. It handles complex protocol details and server management, allowing developers to focus on building tools. The tool is designed to be fast, simple, and complete, aiming to provide a high-level and easy-to-use interface for developing MCP servers. MCP Go is currently under active development, with core features working and advanced capabilities in progress.

airswap-web

AirSwap Web is a peer-to-peer web frontend for AirSwap, an open developer community focused on decentralized trading systems. The repository utilizes React and NodeJS v16, with Yarn as the package manager. Designers and developers can contribute and earn through this platform, with detailed instructions available in the CONTRIBUTING file and the Discord server. The tool aims to facilitate decentralized trading and foster collaboration within the community.

blog

VNTechies Blog is a platform dedicated to sharing open-source resources and providing knowledge and career guidance for the Cloud and DevOps community. The blog encourages contributions and support through donations. All content on the blog and repository is licensed under Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

E2B

E2B Sandbox is a secure sandboxed cloud environment made for AI agents and AI apps. Sandboxes allow AI agents and apps to have long-running, secure cloud environments. In these environments, large language models can use the same tools as humans do. For example:

- Cloud browsers

- GitHub repositories and CLIs

- Coding tools like linters, autocomplete, "go-to definition"

- Running LLM generated code

- Audio & video editing

The E2B sandbox can be connected to any LLM and any AI agent or app.

LlamaIndexTS

LlamaIndex.TS is a data framework for your LLM application. Use your own data with large language models (LLMs, OpenAI ChatGPT and others) in Typescript and Javascript.

floneum

Floneum is a graph editor that makes it easy to develop your own AI workflows. It uses large language models (LLMs) to run AI models locally, without any external dependencies or even a GPU. This makes it easy to use LLMs with your own data, without worrying about privacy. Floneum also has a plugin system that allows you to improve the performance of LLMs and make them work better for your specific use case. Plugins can be used in any language that supports web assembly, and they can control the output of LLMs with a process similar to JSONformer or guidance.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions: truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, using over 30 datasets. The documentation explains how to use the trustllm Python package to assess the trustworthiness of your LLM more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual streamers (VTubers), in a version for NVIDIA GPUs. It supports knowledge-base chat through fastgpt as part of a complete LLM stack: [fastgpt] + [one-api] + [Xinference]. For live streaming, it can reply to bilibili live-stream comments (danmaku) and greet viewers as they enter the room. Speech synthesis is available through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS. It supports expression control with Vtuber Studio, image generation with stable-diffusion-webui output to the OBS live room, and NSFW image detection with public-NSFW-y-distinguish. Search and image search are provided through duckduckgo (requires a proxy or VPN) and Baidu image search (no proxy needed). Further features include an AI reply chat box [html plug-in], AI singing with Auto-Convert-Music, a playlist [html plug-in], dancing, expression video playback, a head-patting action, a gift-smashing action, automatic dancing when singing starts, automatic looping sway motions during chat and singing, multi-scene switching, background music switching, and automatic day and night scene switching. It also supports open-ended singing and painting requests, letting the AI automatically judge the content.