Acontext

Context Data Platform for AI Agents

Stars: 2905

Acontext is a context data platform for production AI agents, offering unified storage, built-in context management, and observability. It helps agents scale from local demos to production without rebuilding context infrastructure. The platform addresses challenges such as scattered context data, context management for long-running agents, and state tracking for multi-modal agents. Core features include context storage, session management, disk storage, agent skills management, and a sandbox for code execution and analysis. Users can connect to Acontext, install the SDKs, initialize a client, store and retrieve messages, perform context engineering, and use agent storage tools. The platform also supports building agents from end-to-end scripts in Python and TypeScript, with various templates available. Acontext's architecture consists of a client layer, a backend with API and core components, infrastructure built on PostgreSQL, S3, Redis, and RabbitMQ, and a web dashboard. Join the Acontext community on Discord and follow updates on GitHub.

README:

Acontext is a context data platform for production AI agents. Think of it as Supabase, but purpose-built for agent context.

We help agents scale from local demos to production without rebuilding context infrastructure — giving you unified storage, built-in context engineering, and context observability out of the box.

Common challenges when building agents:

- Context data such as LLM messages, files, and skills is scattered across different storage systems.

- Long-running agents need context management, and you have to build it yourself.

- Tracking state across multi-modal, multi-LLM agents is a nightmare. How do you know your agent is performing well?

What Acontext provides:

- One unified storage for messages, files, skills, and more, integrated with the Claude Agent SDK, AI-SDK, OpenAI SDK, and others.

- Built-in context management methods: just one argument, zero code.

- Agent trajectory replay in the Dashboard.

- A background monitor that observes the agent to estimate its success rate.

- Context Storage

  - Session: save agent history from any LLM and any modality.

    - Context Editing: edit the context window with one API call.

  - Disk: a virtual, persistent filesystem.

  - Agent Skills: manage skills on the server side.

  - Sandbox: run code, analyze data, export artifacts.

- Context Observability

  - Session Summary: asynchronously summarize the agent's progress and user feedback.

  - State Tracking: collect the agent's working status in near real time.

- View everything in one dashboard.

- Go to Acontext.io, claim your free credits.

- Go through the one-click onboarding to get your API key (it starts with sk-ac).

💻 Self-host Acontext

The acontext-cli helps you run a quick proof of concept. Install it from your terminal:

curl -fsSL https://install.acontext.io | sh

You need Docker installed and an OpenAI API key to start an Acontext backend on your computer:

mkdir acontext_server && cd acontext_server
acontext server up

Make sure your LLM can call tools. By default, Acontext uses gpt-4.1.

acontext server up creates or reuses .env and config.yaml for Acontext, and creates a db folder to persist data.

Once it's done, you can access the following endpoints:

- Acontext API Base URL: http://localhost:8029/api/v1

- Acontext Dashboard: http://localhost:3000/
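The cloud and self-hosted setups differ only in the base URL and the API key, so the choice can be kept in environment variables. The following is a minimal sketch under that assumption: `client_settings` is a hypothetical helper and `ACONTEXT_BASE_URL` is a variable name invented for this example, not something the CLI or SDK is known to read.

```python
from typing import Mapping

LOCAL_API_BASE_URL = "http://localhost:8029/api/v1"  # self-hosted API base URL listed above


def client_settings(env: Mapping[str, str]) -> dict[str, str]:
    """Pick connection settings for the cloud or a self-hosted backend.

    ACONTEXT_BASE_URL is a hypothetical variable name used only in this sketch.
    """
    api_key = env.get("ACONTEXT_API_KEY", "")
    if not api_key.startswith("sk-ac"):
        raise ValueError("expected an API key starting with 'sk-ac'")
    settings = {"api_key": api_key}
    base_url = env.get("ACONTEXT_BASE_URL")
    if base_url:
        # Only set base_url for self-hosted backends; the cloud uses the SDK default.
        settings["base_url"] = base_url.rstrip("/")
    return settings


cloud = client_settings({"ACONTEXT_API_KEY": "sk-ac-demo"})
local = client_settings({
    "ACONTEXT_API_KEY": "sk-ac-demo",
    "ACONTEXT_BASE_URL": LOCAL_API_BASE_URL + "/",
})
```

The resulting dictionary uses the `api_key` and `base_url` argument names that the SDK client accepts, so it could be passed as keyword arguments when constructing the client.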

We maintain Python and TypeScript SDKs. The snippets below use Python.

Click the doc link to see the TS SDK Quickstart.

pip install acontext

import os
from acontext import AcontextClient

# For cloud:
client = AcontextClient(
    api_key=os.getenv("ACONTEXT_API_KEY"),
)

# For self-hosted:
client = AcontextClient(
    base_url="http://localhost:8029/api/v1",
    api_key="sk-ac-your-root-api-bearer-token",
)

Store messages in OpenAI, Anthropic, or Gemini format. Messages are converted automatically on retrieval.
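To make the idea of format conversion concrete, here is a minimal sketch of how a text-only OpenAI-style message list could be mapped to the shape used by the Anthropic Messages API (system text as a top-level field, `user` and `assistant` turns in `messages`). The helper `openai_to_anthropic` is hypothetical and is not Acontext's converter, which also has to cover images, files, and tool calls.

```python
from typing import Any


def openai_to_anthropic(messages: list[dict[str, Any]]) -> dict[str, Any]:
    """Convert text-only OpenAI chat messages to an Anthropic-style payload.

    Hypothetical helper for illustration; only plain string content is handled.
    """
    system_parts: list[str] = []
    turns: list[dict[str, Any]] = []
    for msg in messages:
        role, content = msg["role"], msg["content"]
        if not isinstance(content, str):
            raise TypeError("this sketch only supports plain string content")
        if role == "system":
            # Anthropic takes system text as a top-level field, not as a turn.
            system_parts.append(content)
        elif role in ("user", "assistant"):
            turns.append({"role": role, "content": [{"type": "text", "text": content}]})
        else:
            raise ValueError(f"unsupported role in this sketch: {role!r}")
    payload: dict[str, Any] = {"messages": turns}
    if system_parts:
        payload["system"] = "\n\n".join(system_parts)
    return payload


example = openai_to_anthropic([
    {"role": "system", "content": "You are helpful."},
    {"role": "user", "content": "Hello!"},
])
```

Storing one canonical copy and converting on read is what lets the same session be replayed against different providers without rewriting the stored history.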

# Create a session and store messages
session = client.sessions.create()

# Store text, image, file, etc.
client.sessions.store_message(
    session_id=session.id,
    blob={"role": "user", "content": "Hello!"},
    format="openai",
)

# Retrieve in any format (auto-converts)
result = client.sessions.get_messages(session_id=session.id, format="anthropic")

Compress context with summaries and edit strategies. The original messages remain unchanged.
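As a rough mental model of edit strategies, the sketch below applies two client-side transformations to a copy of a message list: blanking out older tool results and dropping the oldest messages until a token budget is met. The function names, the placeholder text, and the 4-characters-per-token estimate are all assumptions made for illustration; Acontext applies its strategies server-side and may behave differently.

```python
import copy
from typing import Any

Message = dict[str, Any]


def remove_tool_results(messages: list[Message], keep_recent_n: int) -> list[Message]:
    """Blank out all but the most recent `keep_recent_n` tool results."""
    edited = copy.deepcopy(messages)  # never mutate the stored history
    tool_indexes = [i for i, m in enumerate(edited) if m["role"] == "tool"]
    cutoff = len(tool_indexes) - keep_recent_n
    for i in tool_indexes[:max(cutoff, 0)]:
        edited[i]["content"] = "[tool result removed]"
    return edited


def estimate_tokens(message: Message) -> int:
    """Very rough estimate (about 4 characters per token); an assumption."""
    return max(1, len(str(message["content"])) // 4)


def apply_token_limit(messages: list[Message], limit_tokens: int) -> list[Message]:
    """Drop the oldest messages until the estimated total fits the limit."""
    kept: list[Message] = []
    total = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if total + cost > limit_tokens:
            break
        kept.append(message)
        total += cost
    return list(reversed(kept))


history = [
    {"role": "user", "content": "List the files"},
    {"role": "tool", "content": "a.txt b.txt " * 50},
    {"role": "tool", "content": "todo.md"},
    {"role": "assistant", "content": "Done."},
]
edited = remove_tool_results(history, keep_recent_n=1)
trimmed = apply_token_limit(edited, limit_tokens=6)
```

Because both functions return new lists, `history` is left untouched, mirroring the point that the original messages remain unchanged.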

# Session summary for prompt injection
session_id = session.id  # the session created above
summary = client.sessions.get_session_summary(session_id)
system_prompt = f"Previous tasks:\n{summary}\n\nContinue helping."

# Context editing: limit tokens on retrieval
result = client.sessions.get_messages(
    session_id=session_id,
    edit_strategies=[
        {"type": "remove_tool_result", "params": {"keep_recent_n_tool_results": 3}},
        {"type": "token_limit", "params": {"limit_tokens": 30000}},
    ],
)

Disk Tool

Persistent file storage for agents. Supports read, write, grep, glob.
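The four operations can be pictured with a toy in-memory stand-in. This sketch only illustrates what read, write, grep, and glob mean for an agent; the class `InMemoryDisk` is hypothetical, keeps nothing persistent, and says nothing about how Acontext stores files.

```python
import fnmatch
import re


class InMemoryDisk:
    """Toy stand-in for a disk: a dict mapping paths to text content."""

    def __init__(self) -> None:
        self._files: dict[str, str] = {}

    def write(self, path: str, content: str) -> None:
        self._files[path] = content

    def read(self, path: str) -> str:
        return self._files[path]  # raises KeyError for a missing path

    def glob(self, pattern: str) -> list[str]:
        """Return paths matching a shell-style pattern, sorted."""
        return sorted(p for p in self._files if fnmatch.fnmatchcase(p, pattern))

    def grep(self, regex: str) -> list[tuple[str, int, str]]:
        """Return (path, line number, line) for every line matching `regex`."""
        compiled = re.compile(regex)
        hits = []
        for path in sorted(self._files):
            for number, line in enumerate(self._files[path].splitlines(), start=1):
                if compiled.search(line):
                    hits.append((path, number, line))
        return hits


toy_disk = InMemoryDisk()
toy_disk.write("/todo.md", "- [ ] write docs\n- [x] ship v1\n")
toy_disk.write("/notes/ideas.txt", "ship v2 next\n")
markdown_files = toy_disk.glob("*.md")
ship_hits = toy_disk.grep("ship")
```

Giving an agent search operations such as grep and glob, and not only read and write, lets it locate relevant files without loading every file into the context window.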

import json

from acontext.agent.disk import DISK_TOOLS
from openai import OpenAI

disk = client.disks.create()
ctx = DISK_TOOLS.format_context(client, disk.id)

# Pass to LLM
response = OpenAI().chat.completions.create(
    model="gpt-4.1",
    messages=[
        {"role": "system", "content": f"You have disk access.\n\n{ctx.get_context_prompt()}"},
        {"role": "user", "content": "Create a todo.md with 3 tasks"},
    ],
    tools=DISK_TOOLS.to_openai_tool_schema(),
)

# Execute tool calls (tool_calls is None when the model replies with plain text)
for tc in response.choices[0].message.tool_calls or []:
    result = DISK_TOOLS.execute_tool(ctx, tc.function.name, json.loads(tc.function.arguments))

Sandbox Tool

Isolated code execution environment with bash, Python, and common tools.
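For intuition about what "run code and capture the result" involves, here is a local sketch that executes a snippet in a separate Python process inside a throwaway directory, with a timeout. The helper `run_python_snippet` is hypothetical, and a child process is not a security boundary; it is a much weaker stand-in for a real server-side sandbox.

```python
import subprocess
import sys
import tempfile


def run_python_snippet(code: str, timeout_s: float = 10.0) -> dict[str, object]:
    """Run `code` in a separate Python process inside a throwaway directory."""
    with tempfile.TemporaryDirectory() as workdir:
        try:
            proc = subprocess.run(
                [sys.executable, "-I", "-c", code],  # -I: isolated mode, ignores user site and env vars
                cwd=workdir,
                capture_output=True,
                text=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            return {"exit_code": None, "stdout": "", "stderr": "timed out"}
    return {"exit_code": proc.returncode, "stdout": proc.stdout, "stderr": proc.stderr}


outcome = run_python_snippet("print('hello world')")
failure = run_python_snippet("raise SystemExit(3)")
```

Returning the exit code, stdout, and stderr as plain data is what makes the result easy to feed back to the model as a tool result.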

import json

from acontext.agent.sandbox import SANDBOX_TOOLS
from openai import OpenAI

sandbox = client.sandboxes.create()
disk = client.disks.create()
ctx = SANDBOX_TOOLS.format_context(client, sandbox.sandbox_id, disk.id)

# Pass to LLM
response = OpenAI().chat.completions.create(
    model="gpt-4.1",
    messages=[
        {"role": "system", "content": f"You have sandbox access.\n\n{ctx.get_context_prompt()}"},
        {"role": "user", "content": "Run a Python hello world script"},
    ],
    tools=SANDBOX_TOOLS.to_openai_tool_schema(),
)

# Execute tool calls (tool_calls is None when the model replies with plain text)
for tc in response.choices[0].message.tool_calls or []:
    result = SANDBOX_TOOLS.execute_tool(ctx, tc.function.name, json.loads(tc.function.arguments))

Sandbox with Skills

Mount reusable Agent Skills into the sandbox at /skills/{name}/. Download xlsx skill.
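If you do not have a skill archive at hand, a small one can be assembled in memory. The sketch below assumes the common Agent Skills layout with a `SKILL.md` at the archive root; the helpers `build_skill_zip` and `mount_path` and the skill content are hypothetical, and the exact layout the server expects should be checked against the Acontext docs.

```python
import io
import zipfile


def build_skill_zip(files: dict[str, str]) -> bytes:
    """Pack a mapping of relative paths to text content into ZIP bytes."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for relative_path, text in sorted(files.items()):
            archive.writestr(relative_path, text)
    return buffer.getvalue()


def mount_path(skill_name: str) -> str:
    """Sandbox path where a mounted skill is expected to appear."""
    return f"/skills/{skill_name}/"


# Hypothetical skill content; a real skill ships its own instructions and scripts.
skill_bytes = build_skill_zip({
    "SKILL.md": "---\nname: hello-skill\ndescription: Says hello.\n---\nRun scripts/hello.py.\n",
    "scripts/hello.py": "print('hello from a skill')\n",
})
archive_names = zipfile.ZipFile(io.BytesIO(skill_bytes)).namelist()
```

The resulting bytes could be used as the `content` of a `FileUpload` in place of reading a ZIP file from disk.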

import json

from acontext import FileUpload

# Reuses client, sandbox, disk, SANDBOX_TOOLS, and OpenAI from the previous snippets

# Upload a skill ZIP (e.g., xlsx.zip)
with open("xlsx.zip", "rb") as f:
    skill = client.skills.create(file=FileUpload(filename="xlsx.zip", content=f.read()))

# Mount into sandbox
ctx = SANDBOX_TOOLS.format_context(
    client, sandbox.sandbox_id, disk.id,
    mount_skills=[skill.id],  # Available at /skills/{skill.name}/
)

# Context prompt includes skill instructions
response = OpenAI().chat.completions.create(
    model="gpt-4.1",
    messages=[
        {"role": "system", "content": f"You have sandbox access.\n\n{ctx.get_context_prompt()}"},
        {"role": "user", "content": "Create an Excel file with a simple budget spreadsheet and export it"},
    ],
    tools=SANDBOX_TOOLS.to_openai_tool_schema(),
)

# Execute tool calls (tool_calls is None when the model replies with plain text)
for tc in response.choices[0].message.tool_calls or []:
    result = SANDBOX_TOOLS.execute_tool(ctx, tc.function.name, json.loads(tc.function.arguments))

You can download a full interactive skill demo with acontext-cli:

acontext create my-skill --template-path "python/interactive-agent-skill"

Download end-to-end scripts with acontext:

Python

acontext create my-proj --template-path "python/openai-basic"

More Python examples:

- python/openai-agent-basic: OpenAI agent SDK template

- python/openai-agent-artifacts: agent can edit and download artifacts

- python/claude-agent-sdk: Claude Agent SDK with ClaudeAgentStorage

- python/agno-basic: agno framework template

- python/smolagents-basic: smolagents (Hugging Face) template

- python/interactive-agent-skill: interactive sandbox with mountable agent skills

TypeScript

acontext create my-proj --template-path "typescript/openai-basic"

More TypeScript examples:

- typescript/vercel-ai-basic: agent in @vercel/ai-sdk

- typescript/claude-agent-sdk: Claude Agent SDK with ClaudeAgentStorage

- typescript/interactive-agent-skill: interactive sandbox with mountable agent skills

Note: Check our example repo for more templates: Acontext-Examples.

We're cooking more full-stack Agent Applications! Tell us what you want!

To better understand what Acontext can do, please see our docs.

Star Acontext on GitHub to support the project and get notified of new releases.

Architecture

graph TB

subgraph "Client Layer"

PY["pip install acontext"]

TS["npm i @acontext/acontext"]

end

subgraph "Acontext Backend"

subgraph " "

API["API<br/>localhost:8029"]

CORE["Core"]

API -->|FastAPI & MQ| CORE

end

subgraph " "

Infrastructure["Infrastructures"]

PG["PostgreSQL"]

S3["S3"]

REDIS["Redis"]

MQ["RabbitMQ"]

end

end

subgraph "Dashboard"

UI["Web Dashboard<br/>localhost:3000"]

end

PY -->|RESTFUL API| API

TS -->|RESTFUL API| API

UI -->|RESTFUL API| API

API --> Infrastructure

CORE --> Infrastructure

Infrastructure --> PG

Infrastructure --> S3

Infrastructure --> REDIS

Infrastructure --> MQ

style PY fill:#3776ab,stroke:#fff,stroke-width:2px,color:#fff

style TS fill:#3178c6,stroke:#fff,stroke-width:2px,color:#fff

style API fill:#00add8,stroke:#fff,stroke-width:2px,color:#fff

style CORE fill:#ffd43b,stroke:#333,stroke-width:2px,color:#333

style UI fill:#000,stroke:#fff,stroke-width:2px,color:#fff

style PG fill:#336791,stroke:#fff,stroke-width:2px,color:#fff

style S3 fill:#ff9900,stroke:#fff,stroke-width:2px,color:#fff

style REDIS fill:#dc382d,stroke:#fff,stroke-width:2px,color:#fff

style MQ fill:#ff6600,stroke:#fff,stroke-width:2px,color:#fff

Join the community for support and discussions. If you plan to contribute:

- Check our roadmap.md first.

- Read contributing.md

This project is currently licensed under Apache License 2.0.

Alternative AI tools for Acontext

Similar Open Source Tools

agentops

AgentOps is a toolkit for evaluating and developing robust and reliable AI agents. It provides benchmarks, observability, and replay analytics to help developers build better agents. AgentOps is open beta and can be signed up for here. Key features of AgentOps include: - Session replays in 3 lines of code: Initialize the AgentOps client and automatically get analytics on every LLM call. - Time travel debugging: (coming soon!) - Agent Arena: (coming soon!) - Callback handlers: AgentOps works seamlessly with applications built using Langchain and LlamaIndex.

instructor

Instructor is a tool that provides structured outputs from Large Language Models (LLMs) in a reliable manner. It simplifies the process of extracting structured data by utilizing Pydantic for validation, type safety, and IDE support. With Instructor, users can define models and easily obtain structured data without the need for complex JSON parsing, error handling, or retries. The tool supports automatic retries, streaming support, and extraction of nested objects, making it production-ready for various AI applications. Trusted by a large community of developers and companies, Instructor is used by teams at OpenAI, Google, Microsoft, AWS, and YC startups.

LocalAGI

LocalAGI is a powerful, self-hostable AI Agent platform that allows you to design AI automations without writing code. It provides a complete drop-in replacement for OpenAI's Responses APIs with advanced agentic capabilities. With LocalAGI, you can create customizable AI assistants, automations, chat bots, and agents that run 100% locally, without the need for cloud services or API keys. The platform offers features like no-code agents, web-based interface, advanced agent teaming, connectors for various platforms, comprehensive REST API, short & long-term memory capabilities, planning & reasoning, periodic tasks scheduling, memory management, multimodal support, extensible custom actions, fully customizable models, observability, and more.

UnrealGenAISupport

The Unreal Engine Generative AI Support Plugin is a tool designed to integrate various cutting-edge LLM/GenAI models into Unreal Engine for game development. It aims to simplify the process of using AI models for game development tasks, such as controlling scene objects, generating blueprints, running Python scripts, and more. The plugin currently supports models from organizations like OpenAI, Anthropic, XAI, Google Gemini, Meta AI, Deepseek, and Baidu. It provides features like API support, model control, generative AI capabilities, UI generation, project file management, and more. The plugin is still under development but offers a promising solution for integrating AI models into game development workflows.

open-edison

OpenEdison is a secure MCP control panel that connects AI to data/software with additional security controls to reduce data exfiltration risks. It helps address the lethal trifecta problem by providing visibility, monitoring potential threats, and alerting on data interactions. The tool offers features like data leak monitoring, controlled execution, easy configuration, visibility into agent interactions, a simple API, and Docker support. It integrates with LangGraph, LangChain, and plain Python agents for observability and policy enforcement. OpenEdison helps gain observability, control, and policy enforcement for AI interactions with systems of records, existing company software, and data to reduce risks of AI-caused data leakage.

obsei

Obsei is an open-source, low-code, AI powered automation tool that consists of an Observer to collect unstructured data from various sources, an Analyzer to analyze the collected data with various AI tasks, and an Informer to send analyzed data to various destinations. The tool is suitable for scheduled jobs or serverless applications as all Observers can store their state in databases. Obsei is still in alpha stage, so caution is advised when using it in production. The tool can be used for social listening, alerting/notification, automatic customer issue creation, extraction of deeper insights from feedbacks, market research, dataset creation for various AI tasks, and more based on creativity.

hud-python

hud-python is a Python library for creating interactive heads-up displays (HUDs) in video games. It provides a simple and flexible way to overlay information on the screen, such as player health, score, and notifications. The library is designed to be easy to use and customizable, allowing game developers to enhance the user experience by adding dynamic elements to their games. With hud-python, developers can create engaging HUDs that improve gameplay and provide important feedback to players.

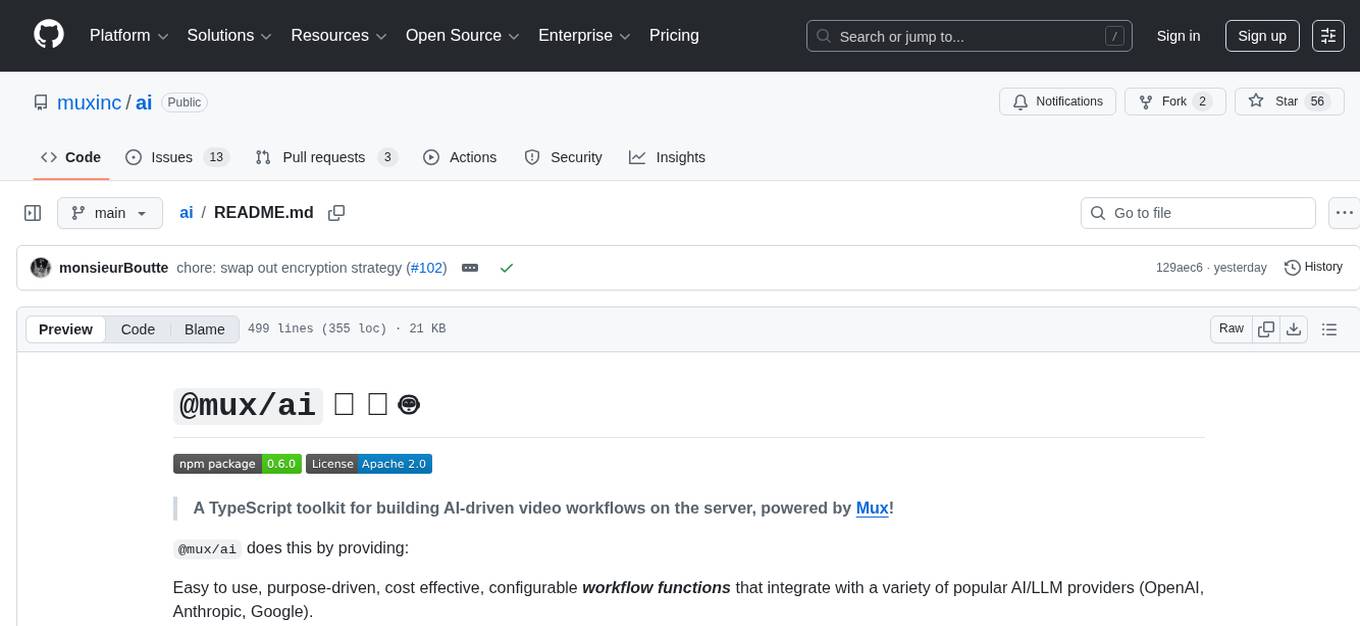

ai

A TypeScript toolkit for building AI-driven video workflows on the server, powered by Mux! @mux/ai provides purpose-driven workflow functions and primitive functions that integrate with popular AI/LLM providers like OpenAI, Anthropic, and Google. It offers pre-built workflows for tasks like generating summaries and tags, content moderation, chapter generation, and more. The toolkit is cost-effective, supports multi-modal analysis, tone control, and configurable thresholds, and provides full TypeScript support. Users can easily configure credentials for Mux and AI providers, as well as cloud infrastructure like AWS S3 for certain workflows. @mux/ai is production-ready, offers composable building blocks, and supports universal language detection.

client-python

The Mistral Python Client is a tool inspired by cohere-python that allows users to interact with the Mistral AI API. It provides functionalities to access and utilize the AI capabilities offered by Mistral. Users can easily install the client using pip and manage dependencies using poetry. The client includes examples demonstrating how to use the API for various tasks, such as chat interactions. To get started, users need to obtain a Mistral API Key and set it as an environment variable. Overall, the Mistral Python Client simplifies the integration of Mistral AI services into Python applications.

mcphub.nvim

MCPHub.nvim is a powerful Neovim plugin that integrates MCP (Model Context Protocol) servers into your workflow. It offers a centralized config file for managing servers and tools, with an intuitive UI for testing resources. Ideal for LLM integration, it provides programmatic API access and interactive testing through the `:MCPHub` command.

quantalogic

QuantaLogic is a ReAct framework for building advanced AI agents that seamlessly integrates large language models with a robust tool system. It aims to bridge the gap between advanced AI models and practical implementation in business processes by enabling agents to understand, reason about, and execute complex tasks through natural language interaction. The framework includes features such as ReAct Framework, Universal LLM Support, Secure Tool System, Real-time Monitoring, Memory Management, and Enterprise Ready components.

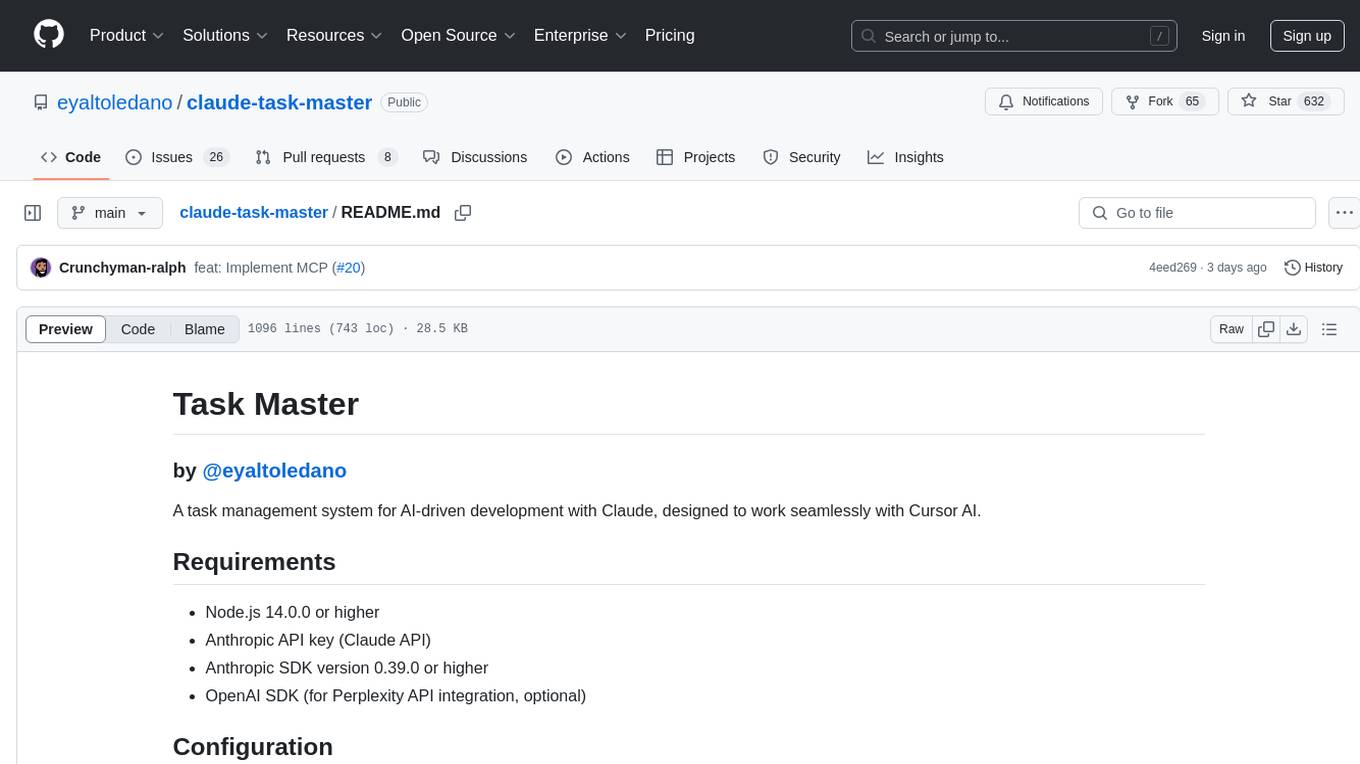

claude-task-master

Claude Task Master is a task management system designed for AI-driven development with Claude, seamlessly integrating with Cursor AI. It allows users to configure tasks through environment variables, parse PRD documents, generate structured tasks with dependencies and priorities, and manage task status. The tool supports task expansion, complexity analysis, and smart task recommendations. Users can interact with the system through CLI commands for task discovery, implementation, verification, and completion. It offers features like task breakdown, dependency management, and AI-driven task generation, providing a structured workflow for efficient development.

pixeltable

Pixeltable is a Python library designed for ML Engineers and Data Scientists to focus on exploration, modeling, and app development without the need to handle data plumbing. It provides a declarative interface for working with text, images, embeddings, and video, enabling users to store, transform, index, and iterate on data within a single table interface. Pixeltable is persistent, acting as a database unlike in-memory Python libraries such as Pandas. It offers features like data storage and versioning, combined data and model lineage, indexing, orchestration of multimodal workloads, incremental updates, and automatic production-ready code generation. The tool emphasizes transparency, reproducibility, cost-saving through incremental data changes, and seamless integration with existing Python code and libraries.

hayhooks

Hayhooks is a tool that simplifies the deployment and serving of Haystack pipelines as REST APIs. It allows users to wrap their pipelines with custom logic and expose them via HTTP endpoints, including OpenAI-compatible chat completion endpoints. With Hayhooks, users can easily convert their Haystack pipelines into API services with minimal boilerplate code.

llm-sandbox

LLM Sandbox is a lightweight and portable sandbox environment designed to securely execute large language model (LLM) generated code in a safe and isolated manner using Docker containers. It provides an easy-to-use interface for setting up, managing, and executing code in a controlled Docker environment, simplifying the process of running code generated by LLMs. The tool supports multiple programming languages, offers flexibility with predefined Docker images or custom Dockerfiles, and allows scalability with support for Kubernetes and remote Docker hosts.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO: * The `dags` directory in this repository contains some custom DAG definitions * Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl * The Data SRE team maintains a WTMO Developer Guide (behind SSO)

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.