lerobotdepot

LeRobotDepot is a community-driven repository listing open-source hardware, components, and 3D-printable projects compatible with the LeRobot library. It helps users easily discover, build, and contribute to affordable, accessible robotics solutions powered by state-of-the-art AI.

Stars: 69

LeRobotDepot is a repository listing open-source hardware, components, and 3D-printable projects compatible with the LeRobot library. It helps users discover, build, and contribute to affordable robotics solutions powered by AI. The repository includes various robot arms, grippers, cameras, and accessories, along with detailed information on pricing, compatibility, and additional components. Users can find kits for assembling arms, wrist cameras, haptic sensors, and other modules. The repository also features mobile arms, bi-manual arms, humanoid robots, and task kits for specific tasks like push T task and handling a toaster. Additionally, there are resources for teleoperation, cameras, and common accessories like self-fusing silicone rubber for increasing grip friction.

README:

Welcome to LeRobotDepot. This repository lists open-source hardware, components, and 3D-printable projects compatible with the LeRobot library. It helps users easily discover, build, and contribute to affordable, accessible robotics solutions powered by state-of-the-art AI.

Hardware in this family uses Feetech motors—specifically, the STS3215 series available in both 7.4V and 12V variants. These motors are popular for their balance between performance and cost:

- 7.4V Version: Typically offers a stall torque of approximately 16.5 kg·cm at 6V. This option is often sufficient for basic robotics applications.

- 12V Version: Delivers around 30 kg·cm of stall torque, providing increased power for more demanding tasks.

By standardizing on the STS3215, projects in the Feetech Family maintain similar power and control characteristics, ensuring compatibility across accessories and modules.
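For readers more used to SI units, the stall torques quoted above can be converted to newton-metres. The sketch below is only a unit conversion (1 kgf·cm = 0.0980665 N·m); the function and variable names are illustrative, and the inputs are the approximate figures quoted in this section, not measured data.

```python
# Standard gravity in m/s^2; 1 kgf·cm corresponds to G * 0.01 N·m.
STANDARD_GRAVITY = 9.80665


def kgcm_to_nm(torque_kgcm: float) -> float:
    """Convert a torque given in kgf·cm to N·m."""
    return torque_kgcm * STANDARD_GRAVITY * 0.01


# Approximate stall torques quoted above for the two STS3215 variants.
stall_torque_kgcm = {"7.4V": 16.5, "12V": 30.0}

stall_torque_nm = {
    variant: kgcm_to_nm(value) for variant, value in stall_torque_kgcm.items()
}

for variant, torque in stall_torque_nm.items():
    print(f"{variant}: {torque:.2f} N·m")
```

This gives roughly 1.62 N·m for the 7.4V version and 2.94 N·m for the 12V version.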

The SO-ARM100 is a 5 DOF arm and the recommended arm to get started with LeRobot, especially the 7.4V version.

| Price | US | EU | RMB |
|---|---|---|---|
| Follower and Leader arms | $232 | €244 | ¥1343.16 |
| One Arm | $123 | €128 | ¥682.23 |

For detailed information on the various accessories available for the SO-ARM100, including mounting options and additional components, please refer to the SO-ARM100 repository’s hardware documentation.

The SO-ARM100 supports multiple wrist camera options to suit a variety of applications. There are three officially supported options and one community-developed alternative:

| Camera Name | Reference Link | Notes |
|---|---|---|
| Vinmooog Webcam | SO-ARM100 Instructions | |
| 32x32mm UVC Module | SO-ARM100 Instructions | |
| Arducam 5MP Wide Angle | Le Kiwi STL File | It can also be used with 32x32mm UVC modules, but if you don't use a wide-angle camera, the gripper will not appear in the camera view. |
| RealSense™ D405 | SO-ARM100 Instructions | |
| RealSense™ D435 | STL File | |

You can find kits for the SO100 arms here:

Both assembled and non-assembled kits are available, depending on the supplier.

The Moss v1 is a 5 DOF arm similar to the SO-ARM100, but its only 3D-printed part is the gripper. Building or purchasing the SO100 arm instead is recommended: the Moss v1 robot is still supported, but it will be deprecated. 3D-printed parts for the SO-ARM100 are also available for purchase if you don't have a printer.

| Price | US | EU | RMB |
|---|---|---|---|
| Follower and Leader arms | $288 | €274 | ¥1631.46 |
| One Arm | $159 | €153 | ¥868.13 |

See SO-ARM100 Accessories for compatible components and mounts.

This is a 6 DOF arm, based on the SO-ARM100 leader and follower arms, with an additional joint enabled by one more STS3215 servo motor.

The price is roughly equivalent to the SO-ARM100, plus the cost of one extra Feetech servo motor—either 7.4V or 12V, depending on your chosen configuration.
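As a rough illustration of that estimate, the sketch below adds the price of extra servos to the SO-ARM100 US prices listed earlier. The servo price is a placeholder, not a quoted figure, and treating a leader and follower pair as needing one extra servo per arm is an assumption made for this example; check current supplier listings before budgeting.

```python
# US prices taken from the SO-ARM100 table earlier in this document.
SO_ARM100_USD = {"pair": 232.0, "one_arm": 123.0}

# Placeholder value for illustration only; not a real quoted price.
HYPOTHETICAL_SERVO_USD = 15.0


def six_dof_estimate(base_price: float, servo_price: float, extra_servos: int = 1) -> float:
    """Estimate the 6 DOF price as the base arm price plus the extra servos."""
    return base_price + extra_servos * servo_price


one_arm_estimate = six_dof_estimate(SO_ARM100_USD["one_arm"], HYPOTHETICAL_SERVO_USD)

# Assumption: a leader and follower pair needs one extra servo per arm.
pair_estimate = six_dof_estimate(
    SO_ARM100_USD["pair"], HYPOTHETICAL_SERVO_USD, extra_servos=2
)

print(f"One arm: ~${one_arm_estimate:.2f}")
print(f"Follower and leader: ~${pair_estimate:.2f}")
```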

For wrist cameras, haptic sensors, and other modules, see SO-ARM100 Accessories for compatible components.

This is a 6 DOF arm, developed by the community around the SimpleAutomation repository. It is a refined version of the SO-ARM100, offering enhanced movement precision and a gripper better optimized for handling small objects.

| Price | US |
|---|---|
| Follower and Leader arms | ± $450 |

AB-SO-BOT is built using a combination of 3D-printed parts and standard 4040-T-slot aluminium extrusion to create a flexible and modular body for the SO-ARM100 robotic arm. This modularity allows for easy customization, expansion, and adaptation for different robotic applications.

Mobile version of the SO-ARM100.

| Price | US | EU |
|---|---|---|
| 12V | $488.21 | €542.56 |
| 5V | $524.95 | €525.9 |
| Base only (5V) | $251.95 | €306.9 |
| Base only (12V) | $257.43 | €305 |
| Base only wired | $174 | €233.3 |

Mobile version of the SO-ARM100 with two arms.

| Price | US | EU | RMB |
|---|---|---|---|
| Total | ~ $300 | ~ €300 | ~ ¥2000 |

Miniature version of the BDX Droid by Disney.

~€410

A project for a full body robot—currently featuring the torso and arms.

Parallel-finger gripper compatible with SO-ARM100.

~€25

Precise gripper compatible with SO-ARM100.

It provides an additional axis to the SO-ARM100 robot arm.

Hardware in this family uses Dynamixel servo motors, which are considered more of an industry standard than Feetech motors.

The Koch-v1-1 is a 5 DOF robotic arm. If you want to familiarise yourself with the more industry-standard Dynamixel servo motors, this project is a good starting point. Compared to the SO-ARM100, it offers less torque and a more limited range of movement at its base.

| Price | US | EU | UK | RMB | JPY |
|---|---|---|---|---|---|
| Follower and Leader arms | $477 | €673 | £507 | ¥3947 | ¥22439 |
| Leader Arm | $278 | €368 | £285 | ¥2251 | ¥15446 |
| Follower Arm | $199 | €305 | £222 | ¥1696 | ¥6993 |
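The Koch-v1-1 prices appear to be additive: in each currency, the leader and follower prices sum to the combined price. A short check, with the figures copied from the table above (the variable names are only for illustration):

```python
# Prices copied from the Koch-v1-1 table: (leader, follower, both arms).
koch_prices = {
    "USD": (278, 199, 477),
    "EUR": (368, 305, 673),
    "GBP": (285, 222, 507),
    "RMB": (2251, 1696, 3947),
    "JPY": (15446, 6993, 22439),
}

# Collect any currency where leader + follower differs from the listed total.
mismatches = {
    currency: (leader + follower, both)
    for currency, (leader, follower, both) in koch_prices.items()
    if leader + follower != both
}

print(mismatches or "all totals consistent")
```

The SO-ARM100 table does not follow the same pattern: two single arms at $123 each come to $246, more than the $232 listed for the follower and leader pair.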

The Koch-v1-1 supports 2 wrist camera options:

| Camera Name | Reference Link | Notes |
|---|---|---|
| SVPRO 1080P | Discord Message with STL file | |
| N/A | WOWROBO Gripper-Camera Kit | Kit including the gripper, the camera mount and the camera |

- Robotic arm inspired by Koch-v1-1: WOWROBO Twinarm

ALOHA 2 is a bimanual teleoperation system that uses two types of arms—a pair of smaller, ergonomically designed leader arms and two robust follower arms—to support coordinated dual-arm manipulation. Each arm offers 6 degrees of freedom (6 DOF), which provides an extensive range of motion for accessing various positions and orientations.

The system is designed for research in fine-grained bimanual manipulation. Its construction includes enhanced gripper mechanisms, a passive gravity compensation system, and a rigid frame that supports precise and repeatable operations for complex tasks. These advanced features and components are reflected in its higher cost compared to more basic robotic arm solutions.

~$27,000

- "T" for push T task.

- A "toaster" with 2 pieces of "toast".

- A paper towel base & rod + paper towel roll.

- Cube.

- Ring.

Self-fusing silicone rubber: used to increase friction on the gripper.

Hardware that attaches to the back of your hand and fingertips and tracks 16 degrees of freedom. Compatible with HOPEJr hands.

| Name | Price Range | Link | Resolution | FPS | Wide Angle | Microphone |
|---|---|---|---|---|---|---|
| Innomaker 1080P USB2.0 | ± $18, €16 | Innomaker Link | 1920×1080 | 30 | FOV (D) = 130°, FOV (H) = 103° | No |
| Innomaker 720p USB2.0 | ± $10, €14 | Innomaker Link | 1280×720 | 30 | FOV (D) = 120°, FOV (H) = 102° | No |
| Innomaker OV9281 USB 2.0 | ± $36, €42 | Innomaker Link | 1280×800 | 120 | FOV up to 148° | No |
| Vinmooog Webcam | ± $14, €12 | Amazon Link | 1920×1080 | N/A | N/A | Yes |
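To compare the USB cameras above programmatically, the table can be held as a small list of records and filtered. This is a minimal sketch: the `Camera` class and `pick` helper are hypothetical names introduced for this example, and only the fields needed for the filter are transcribed from the table.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Camera:
    name: str
    width: int
    height: int
    fps: Optional[int]  # None where the table lists N/A
    microphone: bool


# Values transcribed from the camera table above.
CAMERAS = [
    Camera("Innomaker 1080P USB2.0", 1920, 1080, 30, False),
    Camera("Innomaker 720p USB2.0", 1280, 720, 30, False),
    Camera("Innomaker OV9281 USB 2.0", 1280, 800, 120, False),
    Camera("Vinmooog Webcam", 1920, 1080, None, True),
]


def pick(cameras: List[Camera], min_fps: int = 0, min_height: int = 0) -> List[str]:
    """Return names of cameras meeting the given frame-rate and height minimums.

    Cameras with an unknown frame rate are only excluded when a minimum
    frame rate is actually requested.
    """
    return [
        c.name
        for c in cameras
        if c.height >= min_height
        and (min_fps == 0 or (c.fps is not None and c.fps >= min_fps))
    ]


high_speed = pick(CAMERAS, min_fps=60)
full_hd = pick(CAMERAS, min_height=1080)

print("60+ FPS:", high_speed)
print("1080p:", full_hd)
```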

- https://www.amazon.co.uk/ELP-Conferencing-Fisheye-0-01Lux-Computer/dp/B08Y1KY5T9?th=1

- https://www.amazon.com/dp/B07CSJN2KH

Interested in contributing? Please take a moment to review our CONTRIBUTING.md for guidelines on how to get started.

Similar Open Source Tools

TokenPacker

TokenPacker is a novel visual projector that compresses visual tokens by 75%∼89% with high efficiency. It adopts a 'coarse-to-fine' scheme to generate condensed visual tokens, achieving comparable or better performance across diverse benchmarks. The tool includes TokenPacker for general use and TokenPacker-HD for high-resolution image understanding. It provides training scripts, checkpoints, and supports various compression ratios and patch numbers.

ColossalAI

Colossal-AI is a deep learning system for large-scale parallel training. It provides a unified interface to scale sequential code of model training to distributed environments. Colossal-AI supports parallel training methods such as data, pipeline, tensor, and sequence parallelism and is integrated with heterogeneous training and zero redundancy optimizer.

MiniCPM-V

MiniCPM-V is a series of end-side multimodal LLMs designed for vision-language understanding. The models take image and text inputs to provide high-quality text outputs. The series includes models like MiniCPM-Llama3-V 2.5 with 8B parameters surpassing proprietary models, and MiniCPM-V 2.0, a lighter model with 2B parameters. The models support over 30 languages, efficient deployment on end-side devices, and have strong OCR capabilities. They achieve state-of-the-art performance on various benchmarks and prevent hallucinations in text generation. The models can process high-resolution images efficiently and support multilingual capabilities.

BitBLAS

BitBLAS is a library for mixed-precision BLAS operations on GPUs, for example, the $W_{wdtype}A_{adtype}$ mixed-precision matrix multiplication where $C_{cdtype}[M, N] = A_{adtype}[M, K] \times W_{wdtype}[N, K]$. BitBLAS aims to support efficient mixed-precision DNN model deployment, especially the $W_{wdtype}A_{adtype}$ quantization in large language models (LLMs), for example, the $W_{UINT4}A_{FP16}$ in GPTQ, the $W_{INT2}A_{FP16}$ in BitDistiller, the $W_{INT2}A_{INT8}$ in BitNet-b1.58. BitBLAS is based on techniques from our accepted submission at OSDI'24.

off-grid-mobile

Off Grid is a complete offline AI suite that allows users to perform various tasks such as text generation, image generation, vision AI, voice transcription, and document analysis on their mobile devices without sending any data out. The tool offers high performance on flagship devices and supports a wide range of models for different tasks. Users can easily install the tool on Android by downloading the APK from GitHub Releases or build it from source with Node.js and JDK. The documentation provides detailed information on the system architecture, codebase, design system, visual hierarchy, test flows, and more. Contributions are welcome, and the tool is built with a focus on user privacy and data security, ensuring no cloud, subscription, or data harvesting.

BharatMLStack

BharatMLStack is a comprehensive, production-ready machine learning infrastructure platform designed to democratize ML capabilities across India and beyond. It provides a robust, scalable, and accessible ML stack empowering organizations to build, deploy, and manage machine learning solutions at massive scale. It includes core components like Horizon, Trufflebox UI, Online Feature Store, Go SDK, Python SDK, and Numerix, offering features such as control plane, ML management console, real-time features, mathematical compute engine, and more. The platform is production-ready, cloud agnostic, and offers observability through built-in monitoring and logging.

inference

Xorbits Inference (Xinference) is a powerful and versatile library designed to serve language, speech recognition, and multimodal models. With Xorbits Inference, you can effortlessly deploy and serve your own models or state-of-the-art built-in models using just a single command. Whether you are a researcher, developer, or data scientist, Xorbits Inference empowers you to unleash the full potential of cutting-edge AI models.

InternLM-XComposer

InternLM-XComposer2 is a groundbreaking vision-language large model (VLLM) based on InternLM2-7B excelling in free-form text-image composition and comprehension. It boasts several amazing capabilities and applications:

- **Free-form Interleaved Text-Image Composition**: InternLM-XComposer2 can effortlessly generate coherent and contextual articles with interleaved images following diverse inputs like outlines, detailed text requirements and reference images, enabling highly customizable content creation.
- **Accurate Vision-language Problem-solving**: InternLM-XComposer2 accurately handles diverse and challenging vision-language Q&A tasks based on free-form instructions, excelling in recognition, perception, detailed captioning, visual reasoning, and more.
- **Awesome performance**: InternLM-XComposer2 based on InternLM2-7B not only significantly outperforms existing open-source multimodal models in 13 benchmarks but also **matches or even surpasses GPT-4V and Gemini Pro in 6 benchmarks**.

We release the InternLM-XComposer2 series in the following versions:

- **InternLM-XComposer2-4KHD-7B** 🤗: The high-resolution multi-task trained VLLM model with InternLM-7B as the initialization of the LLM for _High-resolution understanding_, _VL benchmarks_ and _AI assistant_.
- **InternLM-XComposer2-VL-7B** 🤗: The multi-task trained VLLM model with InternLM-7B as the initialization of the LLM for _VL benchmarks_ and _AI assistant_. **It ranks as the most powerful vision-language model based on 7B-parameter level LLMs, leading across 13 benchmarks.**
- **InternLM-XComposer2-VL-1.8B** 🤗: A lightweight version of InternLM-XComposer2-VL based on InternLM-1.8B.
- **InternLM-XComposer2-7B** 🤗: The further instruction tuned VLLM for _Interleaved Text-Image Composition_ with free-form inputs.

Please refer to Technical Report and 4KHD Technical Report for more details.

rai

RAI is a framework designed to bring general multi-agent system capabilities to robots, enhancing human interactivity, flexibility in problem-solving, and out-of-the-box AI features. It supports multi-modalities, incorporates an advanced database for agent memory, provides ROS 2-oriented tooling, and offers a comprehensive task/mission orchestrator. The framework includes features such as voice interaction, customizable robot identity, camera sensor access, reasoning through ROS logs, and integration with LangChain for AI tools. RAI aims to support various AI vendors, improve human-robot interaction, provide an SDK for developers, and offer a user interface for configuration.

Vision-Agents

Vision Agents is an open-source project by Stream that provides building blocks for creating intelligent, low-latency video experiences powered by custom models and infrastructure. It offers multi-modal AI agents that watch, listen, and understand video in real-time. The project includes SDKs for various platforms and integrates with popular AI services like Gemini and OpenAI. Vision Agents can be used for tasks such as sports coaching, security camera systems with package theft detection, and building invisible assistants for various applications. The project aims to simplify the development of real-time vision AI applications by providing a range of processors, integrations, and out-of-the-box features.

MiniCPM-V-CookBook

MiniCPM-V & o Cookbook is a comprehensive repository for building multimodal AI applications effortlessly. It provides easy-to-use documentation, supports a wide range of users, and offers versatile deployment scenarios. The repository includes live demonstrations, inference recipes for vision and audio capabilities, fine-tuning recipes, serving recipes, quantization recipes, and a framework support matrix. Users can customize models, deploy them efficiently, and compress models to improve efficiency. The repository also showcases awesome works using MiniCPM-V & o and encourages community contributions.

llm4ad

LLM4AD is an open-source Python-based platform leveraging Large Language Models (LLMs) for Automatic Algorithm Design (AD). It provides unified interfaces for methods, tasks, and LLMs, along with features like evaluation acceleration, secure evaluation, logs, GUI support, and more. The platform was originally developed for optimization tasks but is versatile enough to be used in other areas such as machine learning, science discovery, game theory, and engineering design. It offers various search methods and algorithm design tasks across different domains. LLM4AD supports remote LLM API, local HuggingFace LLM deployment, and custom LLM interfaces. The project is licensed under the MIT License and welcomes contributions, collaborations, and issue reports.

Pallaidium

Pallaidium is a generative AI movie studio integrated into the Blender video editor. It allows users to AI-generate video, image, and audio from text prompts or existing media files. The tool provides various features such as text to video, text to audio, text to speech, text to image, image to image, image to video, video to video, image to text, and more. It requires a Windows system with a CUDA-supported Nvidia card and at least 6 GB VRAM. Pallaidium offers batch processing capabilities, text to audio conversion using Bark, and various performance optimization tips. Users can install the tool by downloading the add-on and following the installation instructions provided. The tool comes with a set of restrictions on usage, prohibiting the generation of harmful, pornographic, violent, or false content.

ai-hands-on

A complete, hands-on guide to becoming an AI Engineer. This repository is designed to help you learn AI from first principles, build real neural networks, and understand modern LLM systems end-to-end. Progress through math, PyTorch, deep learning, transformers, RAG, and OCR with clean, intuitive Jupyter notebooks guiding you at every step. Suitable for beginners and engineers leveling up, providing clarity, structure, and intuition to build real AI systems.

For similar tasks

sunone_aimbot

Sunone Aimbot is an AI-powered aim bot for first-person shooter games. It leverages YOLOv8 and YOLOv10 models, PyTorch, and various tools to automatically target and aim at enemies within the game. The AI model has been trained on more than 30,000 images from popular first-person shooter games like Warface, Destiny 2, Battlefield 2042, CS:GO, Fortnite, The Finals, CS2, and more. The aimbot can be configured through the `config.ini` file to adjust various settings related to object search, capture methods, aiming behavior, hotkeys, mouse settings, shooting options, Arduino integration, AI model parameters, overlay display, debug window, and more. Users are advised to follow specific recommendations to optimize performance and avoid potential issues while using the aimbot.

Everything-LLMs-And-Robotics

The Everything-LLMs-And-Robotics repository is the world's largest GitHub repository focusing on the intersection of Large Language Models (LLMs) and Robotics. It provides educational resources, research papers, project demos, and Twitter threads related to LLMs, Robotics, and their combination. The repository covers topics such as reasoning, planning, manipulation, instructions and navigation, simulation frameworks, perception, and more, showcasing the latest advancements in the field.

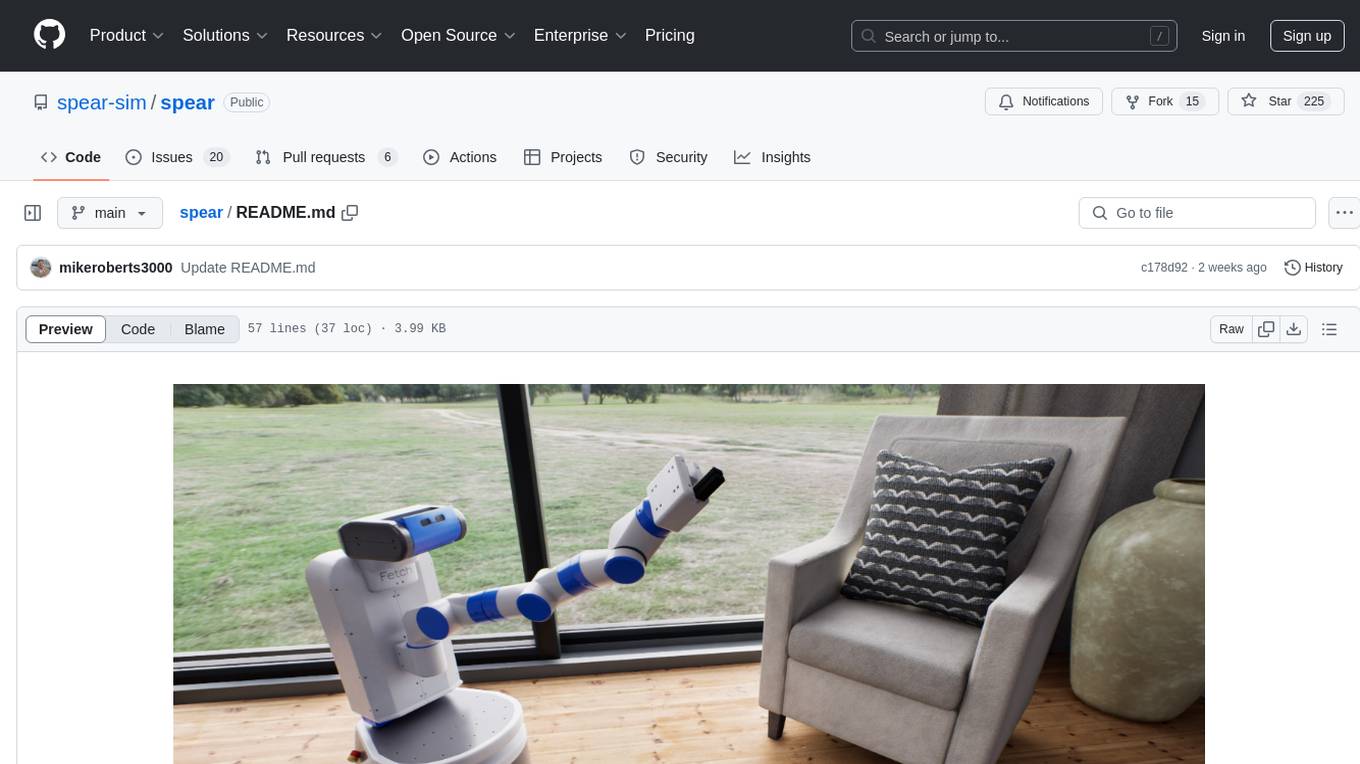

spear

SPEAR is a Simulator for Photorealistic Embodied AI Research that addresses limitations in existing simulators by offering 300 unique virtual indoor environments with detailed geometry, photorealistic materials, and unique floor plans. It provides an OpenAI Gym interface for interaction via Python, released under an MIT License. The simulator was developed with support from the Intelligent Systems Lab at Intel and Kujiale.

anthrax-ai

AnthraxAI is a Vulkan-based game engine that allows users to create and develop 3D games. The engine provides features such as scene selection, camera movement, object manipulation, debugging tools, audio playback, and real-time shader code updates. Users can build and configure the project using CMake and compile shaders using the glslc compiler. The engine supports building on both Linux and Windows platforms, with specific dependencies for each. Visual Studio Code integration is available for building and debugging the project, with instructions provided in the readme for setting up the workspace and required extensions.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g. Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.