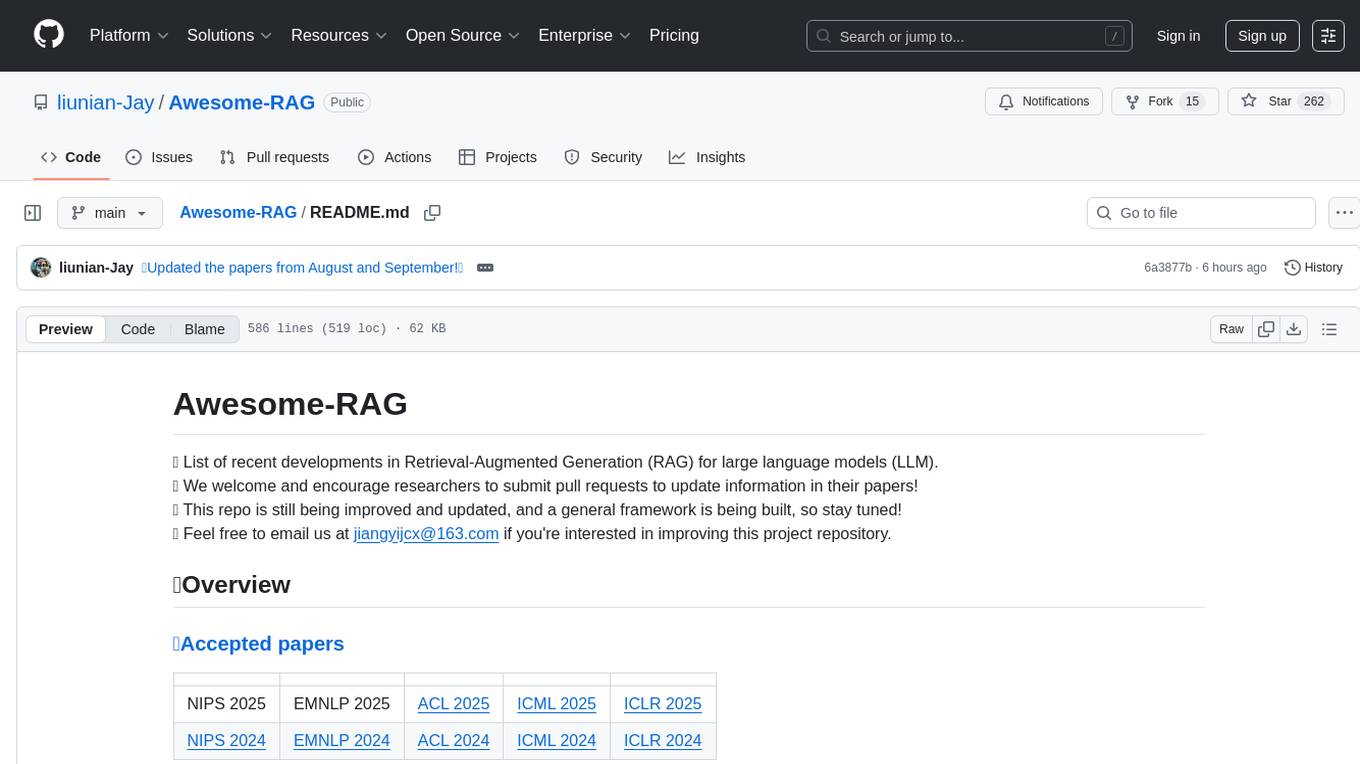

Awesome-RAG

💡 Awesome RAG: A collection of resources on Retrieval-Augmented Generation (RAG) for LLMs, focusing on technical developments.

Stars: 425

Awesome-RAG is a repository that lists recent developments in Retrieval-Augmented Generation (RAG) for large language models (LLMs). It includes accepted papers, evaluation datasets, latest news, and papers from conferences such as NIPS, EMNLP, ACL, ICML, and ICLR. The repository is continuously updated and aims to build a general framework for RAG. Researchers are encouraged to submit pull requests to update the information about their papers. The repository covers a wide range of RAG topics, including knowledge-enhanced generation, contrastive reasoning, self-alignment, and mobile agents.

README:

💡 List of recent developments in Retrieval-Augmented Generation (RAG) for large language models (LLMs).

🤗 We welcome and encourage researchers to submit pull requests to update the information about their papers!

📫 Repo under active development. Collaborations welcome on Framework & Survey. Contact: [email protected].

| NIPS 2025 | EMNLP 2025 | ACL 2025 | ICML 2025 | ICLR 2025 |

| NIPS 2024 | EMNLP 2024 | ACL 2024 | ICML 2024 | ICLR 2024 |

| 2026.01 |

| 2025.12 | 2025.11 | 2025.10 | 2025.09 | 2025.08 | 2025.07 |

| 2025.06 | 2025.05 | 2025.04 | 2025.03 | 2025.02 | 2025.01 |

| 2024.12 | 2024.11 | 2024.10 | 2024.09 | 2024.08 | 2024.07 |

| 2024.06 | 2024.05 | 2024.04 | 2024.03 | 2024.02 | 2024.01 |

| HotpotQA | 2WikiMultiHopQA | WebQuestions | TriviaQA | MuSiQue | NaturalQA |

| SQuAD | PopQA | ASQA | Bamboogle | ARC_Challenge | PubHealth |

- [26.1]: Our ArcAligner released — designed for long memory!🚀 [Code]

- [26.1]: Our OptiSet released — unified selection and ranking!🚀 [Code]

- [25.10]: Updated the recent papers from September and October!📅

- [25.10]: Our QAgent released — an agentic RAG framework!🚀 [Code]

- [25.08]: Our CoCoA released — studying knowledge synergy!🚀 [Code]

- [25.06]: We built AgenticRAG-RL — a minimal RL-RAG! Feel free to contribute!🤝

- [25.05]: Our GainRAG released — studying preference alignment!🚀 [Code]

- [25.05]: Our GainRAG accepted at ACL2025 Main! 🎉 [Paper][Code]

- [25.01-05]: Updated the papers from 2025! 📄

- [24.10]: We built MU-GOT — a PDF parsing tool! Feel free to contribute!🤝

- [24.06-12]: Updated the papers from 2024! 📄

- [2025.05] A Survey on Knowledge-Oriented Retrieval-Augmented Generation [Link]

- [2025.01] Agentic Retrieval-Augmented Generation: A Survey on Agentic RAG [Link]

- [2024.09] Trustworthiness in Retrieval-Augmented Generation Systems: A Survey [Link]

- [2024.09] Retrieval Augmented Generation (RAG) and Beyond: A Comprehensive Survey on How to Make your LLMs use External Data More Wisely [Link]

- [2024.07] Retrieval-Augmented Generation for Natural Language Processing: A Survey [Link]

- [2024.05] A Survey on RAG Meeting LLMs: Towards Retrieval-Augmented Large Language Models [Link]

- [2024.02] Retrieval-Augmented Generation for AI-Generated Content: A Survey [Link]

- [2023.12] Retrieval-Augmented Generation for Large Language Models: A Survey [Link]

- Feb 13 LIR^3AG: A Lightweight Rerank Reasoning Strategy Framework for Retrieval-Augmented Generation

- Jan 30 Bounding Hallucinations: Information-Theoretic Guarantees for RAG Systems via Merlin-Arthur Protocols

- Jan 30 DIVERGE: Diversity-Enhanced RAG for Open-Ended Information Seeking

- Jan 30 RAGRouter-Bench: A Dataset and Benchmark for Adaptive RAG Routing

- Jan 29 ProRAG: Process-Supervised Reinforcement Learning for Retrieval-Augmented Generation

- Jan 29 EHR-RAG: Bridging Long-Horizon Structured Electronic Health Records and Large Language Models via Enhanced Retrieval-Augmented Generation

- Jan 27 LURE-RAG: Lightweight Utility-driven Reranking for Efficient RAG

- Jan 27 RPO-RAG: Aligning Small LLMs with Relation-aware Preference Optimization for Knowledge Graph Question Answering

- Jan 24 Less is More for RAG: Information Gain Pruning for Generator-Aligned Reranking and Evidence Selection

- Jan 23 DeepEra: A Deep Evidence Reranking Agent for Scientific Retrieval-Augmented Generated Question Answering

- Jan 23 DF-RAG: Query-Aware Diversity for Retrieval-Augmented Generation

- Jan 22 SPARC-RAG: Adaptive Sequential-Parallel Scaling with Context Management for Retrieval-Augmented Generation

- Jan 21 ManuRAG: Multi-modal Retrieval Augmented Generation for Manufacturing Question Answering (Early Version)

- Jan 21 MiRAGE: A Multiagent Framework for Generating Multimodal Multihop Question-Answer Dataset for RAG Evaluation

- Jan 20 Predicting Retrieval Utility and Answer Quality in Retrieval-Augmented Generation

- Jan 19 Augmenting Question Answering with A Hybrid RAG Approach

- Jan 16 NAACL: Noise-AwAre Verbal Confidence Calibration for LLMs in RAG Systems

- Jan 16 PruneRAG: Confidence-Guided Query Decomposition Trees for Efficient Retrieval-Augmented Generation

- Jan 16 Reasoning in Trees: Improving Retrieval-Augmented Generation for Multi-Hop Question Answering

- Jan 16 Unlocking the Potentials of Retrieval-Augmented Generation for Diffusion Language Models

- Jan 16 Deep GraphRAG: A Balanced Approach to Hierarchical Retrieval and Adaptive Integration

- Jan 16 Predict the Retrieval! Test time adaptation for Retrieval Augmented Generation

- Jan 15 RoutIR: Fast Serving of Retrieval Pipelines for Retrieval-Augmented Generation

- Jan 13 RAGShaper: Eliciting Sophisticated Agentic RAG Skills via Automated Data Synthesis

- Jan 12 Relink: Constructing Query-Driven Evidence Graph On-the-Fly for GraphRAG

- Jan 12 BayesRAG: Probabilistic Mutual Evidence Corroboration for Multimodal Retrieval-Augmented Generation

- Jan 12 FROAV: A Framework for RAG Observation and Agent Verification - Lowering the Barrier to LLM Agent Research

- Jan 12 Is Agentic RAG worth it? An experimental comparison of RAG approaches

- Jan 11 TreePS-RAG: Tree-based Process Supervision for Reinforcement Learning in Agentic RAG

- Jan 11 Seeing through the Conflict: Transparent Knowledge Conflict Handling in Retrieval-Augmented Generation

- Jan 11 Fine-Tuning vs. RAG for Multi-Hop Question Answering with Novel Knowledge

- Jan 10 Attribution Techniques for Mitigating Hallucinated Information in RAG Systems: A Survey

- Jan 10 MedRAGChecker: Claim-Level Verification for Biomedical Retrieval-Augmented Generation

- Jan 10 L-RAG: Balancing Context and Retrieval with Entropy-Based Lazy Loading

- Jan 8 Self-MedRAG: a Self-Reflective Hybrid Retrieval-Augmented Generation Framework for Reliable Medical Question Answering

- Jan 8 Orion-RAG: Path-Aligned Hybrid Retrieval for Graphless Data

- Jan 8 OptiSet: Unified Optimizing Set Selection and Ranking for Retrieval-Augmented Generation

- Jan 8 ArcAligner: Adaptive Recursive Aligner for Compressed Context Embeddings in RAG

- Jan 6 Enhancing Multilingual RAG Systems with Debiased Language Preference-Guided Query Fusion

- Jan 6 Stable-RAG: Mitigating Retrieval-Permutation-Induced Hallucinations in Retrieval-Augmented Generation

- Jan 6 Detecting Hallucinations in Retrieval-Augmented Generation via Semantic-level Internal Reasoning Graph

- Jan 6 Tackling the Inherent Difficulty of Noise Filtering in RAG

- Jan 7 Disco-RAG: Discourse-Aware Retrieval-Augmented Generation

- Jan 5 Clinical Knowledge Graph Construction and Evaluation with Multi-LLMs via Retrieval-Augmented Generation

- Jan 4 A Dynamic Retrieval-Augmented Generation System with Selective Memory and Remembrance

- Jan 2 Improving Multi-step RAG with Hypergraph-based Memory for Long-Context Complex Relational Modeling

- Jan 2 RAG-BioQA: A Retrieval-Augmented Generation Framework for Long-Form Biomedical Question Answering

- Dec 31 Enhancing Retrieval-Augmented Generation with Topic-Enriched Embeddings: A Hybrid Approach Integrating Traditional NLP Techniques

- Dec 29 Retrieval Augmented Question Answering: When Should LLMs Admit Ignorance?

- Dec 27 DICE: Discrete Interpretable Comparative Evaluation with Probabilistic Scoring for Retrieval-Augmented Generation

- Dec 27 HiFi-RAG: Hierarchical Content Filtering and Two-Pass Generation for Open-Domain RAG

- Dec 25 FVA-RAG: Falsification-Verification Alignment for Mitigating Sycophantic Hallucinations

- Dec 22 QuCo-RAG: Quantifying Uncertainty from the Pre-training Corpus for Dynamic Retrieval-Augmented Generation

- Dec 20 Bidirectional RAG: Safe Self-Improving Retrieval-Augmented Generation Through Multi-Stage Validation

- Dec 19 MMRAG-RFT: Two-stage Reinforcement Fine-tuning for Explainable Multi-modal Retrieval-augmented Generation

- Dec 17 The Semantic Illusion: Certified Limits of Embedding-Based Hallucination Detection in RAG Systems

- Dec 16 DrugRAG: Enhancing Pharmacy LLM Performance Through A Novel Retrieval-Augmented Generation Pipeline

- Dec 16 Dynamic Context Selection for Retrieval-Augmented Generation: Mitigating Distractors and Positional Bias

- Dec 16 Cog-RAG: Cognitive-Inspired Dual-Hypergraph with Theme Alignment Retrieval-Augmented Generation

- Dec 15 Semantic Grounding Index: Geometric Bounds on Context Engagement in RAG Systems

- Dec 12 LOOPRAG: Enhancing Loop Transformation Optimization with Retrieval-Augmented Large Language Models

- Dec 11 Cooperative Retrieval-Augmented Generation for Question Answering: Mutual Information Exchange and Ranking by Contrasting Layers

- Dec 10 MedBioRAG: Semantic Search and Retrieval-Augmented Generation with Large Language Models for Medical and Biological QA

- Dec 10 RouteRAG: Efficient Retrieval-Augmented Generation from Text and Graph via Reinforcement Learning

- Dec 10 Leveraging Language Models and RAG for Efficient Knowledge Discovery in Clinical Environments

- Dec 9 Detecting Hallucinations in Graph Retrieval-Augmented Generation via Attention Patterns and Semantic Alignment

- Dec 9 Toward Faithful Retrieval-Augmented Generation with Sparse Autoencoders

- Dec 5 Optimizing Medical Question-Answering Systems: A Comparative Study of Fine-Tuned and Zero-Shot Large Language Models with RAG Framework

- Dec 3 RAGVUE: A Diagnostic View for Explainable and Automated Evaluation of Retrieval-Augmented Generation

- Dec 3 BookRAG: A Hierarchical Structure-aware Index-based Approach for Retrieval-Augmented Generation on Complex Documents

- Nov 29 Breaking It Down: Domain-Aware Semantic Segmentation for Retrieval Augmented Generation

- Nov 28 Autonomous QA Agent: A Retrieval-Augmented Framework for Reliable Selenium Script Generation

- Nov 27 Unlocking Electronic Health Records: A Hybrid Graph RAG Approach to Safe Clinical AI for Patient QA

- Nov 26 MegaRAG: Multimodal Knowledge Graph-Based Retrieval Augmented Generation

- Nov 25 HKRAG: Holistic Knowledge Retrieval-Augmented Generation Over Visually-Rich Documents

- Nov 24 HyperbolicRAG: Enhancing Retrieval-Augmented Generation with Hyperbolic Representations

- Nov 22 Agent-as-a-Graph: Knowledge Graph-Based Tool and Agent Retrieval for LLM Multi-Agent Systems

- Nov 22 Rethinking Retrieval: From Traditional Retrieval Augmented Generation to Agentic and Non-Vector Reasoning Systems in the Financial Domain for Large Language Models

- Nov 21 Beyond Component Strength: Synergistic Integration and Adaptive Calibration in Multi-Agent RAG Systems

- Nov 20 Comparison of Text-Based and Image-Based Retrieval in Multimodal Retrieval Augmented Generation Large Language Model Systems

- Nov 19 CARE-RAG - Clinical Assessment and Reasoning in RAG

- Nov 19 ItemRAG: Item-Based Retrieval-Augmented Generation for LLM-Based Recommendation

- Nov 19 Noise-Robust Abstractive Compression in Retrieval-Augmented Language Models

- Nov 18 LiveRAG: A diverse Q&A dataset with varying difficulty level for RAG evaluation

- Nov 17 TelcoAI: Advancing 3GPP Technical Specification Search through Agentic Multi-Modal Retrieval-Augmented Generation

- Nov 16 TAdaRAG: Task Adaptive Retrieval-Augmented Generation via On-the-Fly Knowledge Graph Construction

- Nov 15 MME-RAG: Multi-Manager-Expert Retrieval-Augmented Generation for Fine-Grained Entity Recognition in Task-Oriented Dialogues

- Nov 13 Modeling Uncertainty Trends for Timely Retrieval in Dynamic RAG

- Nov 13 TruthfulRAG: Resolving Factual-level Conflicts in Retrieval-Augmented Generation with Knowledge Graphs

- Nov 13 RAGFort: Dual-Path Defense Against Proprietary Knowledge Base Extraction in Retrieval-Augmented Generation

- Nov 12 BarrierBench: Evaluating Large Language Models for Safety Verification in Dynamical Systems

- Nov 10 Q-RAG: Long Context Multi-step Retrieval via Value-based Embedder Training

- Nov 10 A survey: Information search time optimization based on RAG (Retrieval Augmentation Generation) chatbot

- Nov 8 Cross-Document Topic-Aligned Chunking for Retrieval-Augmented Generation

- Nov 8 Retrieval-Augmented Generation in Medicine: A Scoping Review of Technical Implementations, Clinical Applications, and Ethical Considerations

- Nov 7 TeaRAG: A Token-Efficient Agentic Retrieval-Augmented Generation Framework

- Nov 6 RAGalyst: Automated Human-Aligned Agentic Evaluation for Domain-Specific RAG

- Nov 5 RAGBoost: Efficient Retrieval-Augmented Generation with Accuracy-Preserving Context Reuse

- Nov 1 Zero-RAG: Towards Retrieval-Augmented Generation with Zero Redundant Knowledge

- Oct 29 DIRC-RAG: Accelerating Edge RAG with Robust High-Density and High-Loading-Bandwidth Digital In-ReRAM Computation

- Oct 28 Mitigating Hallucination in Large Language Models (LLMs): An Application-Oriented Survey on RAG, Reasoning, and Agentic Systems

- Oct 28 META-RAG: Meta-Analysis-Inspired Evidence-Re-Ranking Method for Retrieval-Augmented Generation in Evidence-Based Medicine

- Oct 28 PICOs-RAG: PICO-supported Query Rewriting for Retrieval-Augmented Generation in Evidence-Based Medicine

- Oct 25 FAIR-RAG: Faithful Adaptive Iterative Refinement for Retrieval-Augmented Generation

- Oct 24 InterpDetect: Interpretable Signals for Detecting Hallucinations in Retrieval-Augmented Generation

- Oct 24 SUBQRAG: Sub-Question Driven Dynamic Graph RAG

- Oct 21 Is Implicit Knowledge Enough for LLMs? A RAG Approach for Tree-based Structures

- Oct 21 Query Decomposition for RAG: Balancing Exploration-Exploitation

- Oct 17 RAG vs. GraphRAG: A Systematic Evaluation and Key Insights

- Oct 17 Stop-RAG: Value-Based Retrieval Control for Iterative RAG

- Oct 16 Multimodal RAG for Unstructured Data: Leveraging Modality-Aware Knowledge Graphs with Hybrid Retrieval

- Oct 16 MoM: Mixtures of Scenario-Aware Document Memories for Retrieval-Augmented Generation Systems

- Oct 15 ReMindRAG: Low-Cost LLM-Guided Knowledge Graph Traversal for Efficient RAG

- Oct 15 RAG Meets Temporal Graphs: Time-Sensitive Modeling and Retrieval for Evolving Knowledge

- Oct 15 SeCon-RAG: A Two-Stage Semantic Filtering and Conflict-Free Framework for Trustworthy RAG

- Oct 14 PRoH: Dynamic Planning and Reasoning over Knowledge Hypergraphs for Retrieval-Augmented Generation

- Oct 14 RAG-Anything: All-in-One RAG Framework

- Oct 13 Domain-Specific Data Generation Framework for RAG Adaptation

- Oct 12 RECON: Reasoning with Condensation for Efficient Retrieval-Augmented Generation

- Oct 12 Multimodal Retrieval-Augmented Generation with Large Language Models for Medical VQA

- Oct 11 LinearRAG: Linear Graph Retrieval Augmented Generation on Large-scale Corpora

- Oct 11 RAG-IGBench: Innovative Evaluation for RAG-based Interleaved Generation in Open-domain Question Answering

- Oct 10 Use of Retrieval-Augmented Large Language Model Agent for Long-Form COVID-19 Fact-Checking

- Oct 10 Chain-of-Retrieval Augmented Generation

- Oct 10 When Retrieval Succeeds and Fails: Rethinking Retrieval-Augmented Generation for LLMs

- Oct 9 QAgent: A modular Search Agent with Interactive Query Understanding

- Oct 9 STEPER: Step-wise Knowledge Distillation for Enhancing Reasoning Ability in Multi-Step Retrieval-Augmented Language Models

- Oct 7 HiPRAG: Hierarchical Process Rewards for Efficient Agentic Retrieval Augmented Generation

- Oct 6 MHA-RAG: Improving Efficiency, Accuracy, and Consistency by Encoding Exemplars as Soft Prompts

- Oct 4 Beyond Outcome Reward: Decoupling Search and Answering Improves LLM Agents

- Oct 4 Equipping Retrieval-Augmented Large Language Models with Document Structure Awareness

- Oct 2 Less LLM, More Documents: Searching for Improved RAG

- Oct 2 Learning to Route: A Rule-Driven Agent Framework for Hybrid-Source Retrieval-Augmented Generation

- Oct 2 Training Dynamics of Parametric and In-Context Knowledge Utilization in Language Models

- Oct 2 AccurateRAG: A Framework for Building Accurate Retrieval-Augmented Question-Answering Applications

- Oct 1 A Comparison of Independent and Joint Fine-tuning Strategies for Retrieval-Augmented Generation

- Oct 1 Fine-tuning with RAG for Improving LLM Learning of New Skills

- Oct 1 GRAD: Generative Retrieval-Aligned Demonstration Sampler for Efficient Few-Shot Reasoning

- Oct 1 HalluGuard: Evidence-Grounded Small Reasoning Models to Mitigate Hallucinations in Retrieval-Augmented Generation

- Sep 30 RAGferee: Building Contextual Reward Models for Retrieval-Augmented Generation

- Sep 27 From Evidence to Trajectory: Abductive Reasoning Path Synthesis for Training Retrieval-Augmented Generation Agents

- Sep 26 Beyond RAG vs. Long-Context: Learning Distraction-Aware Retrieval for Efficient Knowledge Grounding

- Sep 26 Can Synthetic Query Rewrites Capture User Intent Better than Humans in Retrieval-Augmented Generation?

- Sep 25 Concise and Sufficient Sub-Sentence Citations for Retrieval-Augmented Generation

- Sep 24 RAR2: Retrieval-Augmented Medical Reasoning via Thought-Driven Retrieval

- Sep 22 AttnComp: Attention-Guided Adaptive Context Compression for Retrieval-Augmented Generation

- Sep 21 Influence Guided Context Selection for Effective Retrieval-Augmented Generation

- Sep 20 SKILL-RAG: Self-Knowledge Induced Learning and Filtering for Retrieval-Augmented Generation

- Sep 19 Relevance to Utility: Process-Supervised Rewrite for RAG

- Sep 17 Improving Context Fidelity via Native Retrieval-Augmented Reasoning

- Sep 9 Rethinking LLM Parametric Knowledge as Post-retrieval Confidence for Dynamic Retrieval and Reranking

- Sep 8 HANRAG: Heuristic Accurate Noise-resistant Retrieval-Augmented Generation for Multi-hop Question Answering

- Sep 8 Domain-Aware RAG: MoL-Enhanced RL for Efficient Training and Scalable Retrieval

- Sep 8 HAVE: Head-Adaptive Gating and ValuE Calibration for Hallucination Mitigation in Large Language Models

- Sep 5 Fishing for Answers: Exploring One-shot vs. Iterative Retrieval Strategies for Retrieval Augmented Generation

- Sep 5 KERAG: Knowledge-Enhanced Retrieval-Augmented Generation for Advanced Question Answering

- Sep 4 SelfAug: Mitigating Catastrophic Forgetting in Retrieval-Augmented Generation via Distribution Self-Alignment

- Sep 4 MobileRAG: Enhancing Mobile Agent with Retrieval-Augmented Generation

- Sep 2 Better by Comparison: Retrieval-Augmented Contrastive Reasoning for Automatic Prompt Optimization

- Sep 1 REFRAG: Rethinking RAG based Decoding

- Sep 1 Towards Open-World Retrieval-Augmented Generation on Knowledge Graph: A Multi-Agent Collaboration Framework

- Aug 29 Atom-Searcher: Enhancing Agentic Deep Research via Fine-Grained Atomic Thought Reward

- Aug 27 Understanding and Leveraging the Expert Specialization of Context Faithfulness in Mixture-of-Experts LLMs

- Aug 27 Can Compact Language Models Search Like Agents? Distillation-Guided Policy Optimization for Preserving Agentic RAG Capabilities

- Aug 27 LFD: Layer Fused Decoding to Exploit External Knowledge in Retrieval-Augmented Generation

- Aug 26 Context-Adaptive Synthesis and Compression for Enhanced Retrieval-Augmented Generation in Complex Domains

- Aug 25 Improving End-to-End Training of Retrieval-Augmented Generation Models via Joint Stochastic Approximation

- Aug 24 CORE: Lossless Compression for Retrieval-Augmented LLMs via Reinforcement Learning

- Aug 24 SEFRQO: A Self-Evolving Fine-Tuned RAG-Based Query Optimizer

- Aug 24 SSFO: Self-Supervised Faithfulness Optimization for Retrieval-Augmented Generation

- Aug 21 Conflict-Aware Soft Prompting for Retrieval-Augmented Generation

- Aug 21 Select to Know: An Internal-External Knowledge Self-Selection Framework for Domain-Specific Question Answering

- Aug 18 LeanRAG: Knowledge-Graph-Based Generation with Semantic Aggregation and Hierarchical Retrieval

- Aug 15 Cross-Granularity Hypergraph Retrieval-Augmented Generation for Multi-hop Question Answering

- Aug 14 SSRL: Self-Search Reinforcement Learning

- Aug 14 ComoRAG: A Cognitive-Inspired Memory-Organized RAG for Stateful Long Narrative Reasoning

- Aug 13 Towards Self-cognitive Exploration: Metacognitive Knowledge Graph Retrieval Augmented Generation

- Aug 13 Transforming Questions and Documents for Semantically Aligned Retrieval-Augmented Generation

- Aug 12 READER: Retrieval-Assisted Drafter for Efficient LLM Inference

- Aug 12 REX-RAG: Reasoning Exploration with Policy Correction in Retrieval-Augmented Generation

- Aug 11 LAG: Logic-Augmented Generation from a Cartesian Perspective

- Aug 11 Careful Queries, Credible Results: Teaching RAG Models Advanced Web Search Tools with Reinforcement Learning

- Aug 10 PrLM: Learning Explicit Reasoning for Personalized RAG via Contrastive Reward Optimization

- Aug 8 Guided Decoding and Its Critical Role in Retrieval-Augmented Generation

- Aug 8 UR2: Unify RAG and Reasoning through Reinforcement Learning

- Aug 8 Spectrum Projection Score: Aligning Retrieved Summaries with Reader Models in Retrieval-Augmented Generation

- Aug 7 BEE-RAG: Balanced Entropy Engineering for Retrieval-Augmented Generation

- Aug 6 PAIRS: Parametric–Verified Adaptive Information Retrieval and Selection for Efficient RAG

- Aug 5 Collaborative Chain-of-Agents for Parametric-Retrieved Knowledge Synergy

- Aug 1 MAO-ARAG: Multi-Agent Orchestration for Adaptive Retrieval-Augmented Generation

- Jul 29 FrugalRAG: Learning to retrieve and reason for multi-hop QA

- Jul 25 Injecting External Knowledge into the Reasoning Process Enhances Retrieval-Augmented Generation

- Jul 25 Distilling a Small Utility-Based Passage Selector to Enhance Retrieval-Augmented Generation

- Jul 25 Query-Aware Graph Neural Networks for Enhanced Retrieval-Augmented Generation

- Jul 23 HiRAG: Retrieval-Augmented Generation with Hierarchical Knowledge

- Jul 15 RAG-R1: Incentivize the Search and Reasoning Capabilities of LLMs through Multi-query Parallelism

- Jun 20 PreQRAG -- Classify and Rewrite for Enhanced RAG

- Jun 15 Intra-Trajectory Consistency for Reward Modeling

- Jun 5 Knowledgeable-r1: Policy Optimization for Knowledge Exploration in Retrieval-Augmented Generation

- Jun 4 R-Search: Empowering LLM Reasoning with Search via Multi-Reward Reinforcement Learning

- Jun 2 ImpRAG: Retrieval-Augmented Generation with Implicit Queries

Main

Methods & Pipeline & Framework

- GainRAG: Preference Alignment in Retrieval-Augmented Generation through Gain Signal Synthesis [Code]

- FaithfulRAG: Fact-Level Conflict Modeling for Context-Faithful Retrieval-Augmented Generation [Code]

- RPO: Retrieval Preference Optimization for Robust Retrieval-Augmented Generation

- RankCoT: Refining Knowledge for Retrieval-Augmented Generation through Ranking Chain-of-Thoughts

- Parenting: Optimizing Knowledge Selection of Retrieval-Augmented Language Models with Parameter Decoupling and Tailored Tuning

- RARE: Retrieval-Augmented Reasoning Enhancement for Large Language Models

- Divide-Then-Align: Honest Alignment based on the Knowledge Boundary of RAG

- MAIN-RAG: Multi-Agent Filtering Retrieval-Augmented Generation

- DualRAG: A Dual-Process Approach to Integrate Reasoning and Retrieval for Multi-Hop Question Answering

- DioR: Adaptive Cognitive Detection and Contextual Retrieval Optimization for Dynamic Retrieval-Augmented Generation

- Hierarchical Document Refinement for Long-context Retrieval-augmented Generation

- KiRAG: Knowledge-Driven Iterative Retriever for Enhancing Retrieval-Augmented Generation

- Enhancing Retrieval-Augmented Generation via Evidence Tree Search

- Mitigating Lost-in-Retrieval Problems in Retrieval Augmented Multi-Hop Question Answering

- SeaKR: Self-aware Knowledge Retrieval for Adaptive Retrieval Augmented Generation

- TC–RAG: Turing–Complete RAG’s Case study on Medical LLM Systems

- Removal of Hallucination on Hallucination: Debate-Augmented RAG

- Can We Further Elicit Reasoning in LLMs? Critic-Guided Planning with Retrieval-Augmentation for Solving Challenging Tasks

- Optimizing Question Semantic Space for Dynamic Retrieval-Augmented Multi-hop Question Answering

- UniRAG: Unified Query Understanding Method for Retrieval Augmented Generation

- DRAG: Distilling RAG for SLMs from LLMs to Transfer Knowledge and Mitigate Hallucination via Evidence and Graph-based Distillation

- Lexical Diversity-aware Relevance Assessment for Retrieval-Augmented Generation

- RAG-Critic: Leveraging Automated Critic-Guided Agentic Workflow for Retrieval Augmented Generation

- Sparse Latents Steer Retrieval-Augmented Generation

- GRAT: Guiding Retrieval-Augmented Reasoning through Process Rewards Tree Search

- LLMs Trust Humans More, That’s a Problem! Unveiling and Mitigating the Authority Bias in Retrieval-Augmented Generation

- Shifting from Ranking to Set Selection for Retrieval Augmented Generation

- Dialogue-RAG: Enhancing Retrieval for LLMs via Node-Linking Utterance Rewriting

- SGIC: A Self-Guided Iterative Calibration Framework for RAG

Benchmark & Evaluation & Analysis

- SafeRAG: Benchmarking Security in Retrieval-Augmented Generation of Large Language Model

- HybGRAG: Hybrid Retrieval-Augmented Generation on Textual and Relational Knowledge Bases

- RAGEval: Scenario Specific RAG Evaluation Dataset Generation Framework

- Unanswerability Evaluation for Retrieval Augmented Generation

- MEMERAG: A Multilingual End-to-End Meta-Evaluation Benchmark for Retrieval Augmented Generation

- Astute RAG: Overcoming Imperfect Retrieval Augmentation and Knowledge Conflicts for Large Language Models

- The Distracting Effect: Understanding Irrelevant Passages in RAG

- Pandora’s Box or Aladdin’s Lamp: A Comprehensive Analysis Revealing the Role of RAG Noise in Large Language Models

- A Reality Check on Context Utilisation for Retrieval-Augmented Generation

- MT-RAIG: Novel Benchmark and Evaluation Framework for Retrieval-Augmented Insight Generation over Multiple Tables

- On the Robustness of RAG Systems in Educational Question Answering under Knowledge Discrepancies

Chunk & Database

- MoC: Mixtures of Text Chunking Learners for Retrieval-Augmented Generation System

- HoH: A Dynamic Benchmark for Evaluating the Impact of Outdated Information on Retrieval-Augmented Generation

- Graph of Records: Boosting Retrieval Augmented Generation for Long-context Summarization with Graphs

Application

- Towards Omni-RAG: Comprehensive Retrieval-Augmented Generation for Large Language Models in Medical Applications

- Knowledge Graph Retrieval-Augmented Generation for LLM-based Recommendation

- Medical Graph RAG: Evidence-based Medical Large Language Model via Graph Retrieval-Augmented Generation

- VISA: Retrieval Augmented Generation with Visual Source Attribution

- The Efficiency vs. Accuracy Trade-off: Optimizing RAG-Enhanced LLM Recommender Systems Using Multi-Head Early Exit

- HyKGE: A Hypothesis Knowledge Graph Enhanced RAG Framework for Accurate and Reliable Medical LLMs Responses

- NeuSym-RAG: Hybrid Neural Symbolic Retrieval with Multiview Structuring for PDF Question Answering

- CoRe-MMRAG: Cross-Source Knowledge Reconciliation for Multimodal RAG

- Retrieval-Augmented Fine-Tuning With Preference Optimization For Visual Program Generation

Findings

- Bridging Relevance and Reasoning: Rationale Distillation in Retrieval-Augmented Generation

- SimGRAG: Leveraging Similar Subgraphs for Knowledge Graphs Driven Retrieval-Augmented Generation

- EXIT: Context-Aware Extractive Compression for Enhancing Retrieval-Augmented Generation

- Judge as A Judge: Improving the Evaluation of Retrieval-Augmented Generation through the Judge-Consistency of Large Language Models

- RASD: Retrieval-Augmented Speculative Decoding

- FRAG: A Flexible Modular Framework for Retrieval-Augmented Generation based on Knowledge Graphs

- Towards Adaptive Memory-Based Optimization for Enhanced Retrieval-Augmented Generation

- Retrieval-Augmented Process Reward Model for Generalizable Mathematical Reasoning

- Fine-grained Knowledge Enhancement for Retrieval-Augmented Generation

- GeAR: Graph-enhanced Agent for Retrieval-augmented Generation

- CtrlA: Adaptive Retrieval-Augmented Generation via Inherent Control

- RoseRAG: Robust Retrieval-augmented Generation with Small-scale LLMs via Margin-aware Preference Optimization

- The Silent Saboteur: Imperceptible Adversarial Attacks against Black-Box Retrieval-Augmented Generation Systems

- PISCO: Pretty Simple Compression for Retrieval-Augmented Generation

- RAPID: Efficient Retrieval-Augmented Long Text Generation with Writing Planning and Information Discovery

- Ask in Any Modality: A Comprehensive Survey on Multimodal Retrieval-Augmented Generation

- CausalRAG: Integrating Causal Graphs into Retrieval-Augmented Generation

- Mitigating Bias in RAG: Controlling the Embedder

- HopRAG: Multi-Hop Reasoning for Logic-Aware Retrieval Augmented Generation

- SynapticRAG: Enhancing Temporal Memory Retrieval in Large Language Models through Synaptic Mechanisms

- HASH-RAG: Bridging Deep Hashing with Retriever for Efficient, Fine Retrieval and Augmented Generation

- RAG-RewardBench: Benchmarking Reward Models in Retrieval Augmented Generation for Preference Alignment

- LLMs are Biased Evaluators But Not Biased for Retrieval Augmented Generation

- Accelerating Adaptive Retrieval Augmented Generation via Instruction-Driven Representation Reduction of Retrieval Overlaps

- Evaluation of Attribution Bias in Generator-Informed Retrieval-Augmented Large Language Models

- Axiomatic Analysis of Uncertainty Estimation For Retrieval Augmented Generation

- ECoRAG: Evidentiality-guided Compression for Long Context RAG

- GNN-RAG: Graph Neural Retrieval for Efficient Large Language Model Reasoning on Knowledge Graphs

- LTRAG: Enhancing autoformalization and self-refinement for logical reasoning with Thought-Guided RAG

- Toward Structured Knowledge Reasoning: Contrastive Retrieval-Augmented Generation on Experience

- Document Segmentation Matters for Retrieval-Augmented Generation

- Exploring Knowledge Filtering for Retrieval-Augmented Discriminative Tasks

- TreeRAG: Unleashing the Power of Hierarchical Storage for Enhanced Knowledge Retrieval in Long Documents

- EC-RAFT: Automated Generation of Clinical Trial Eligibility Criteria through Retrieval-Augmented Fine-Tuning

- RASPberry: Retrieval-Augmented Monte Carlo Tree Self-Play with Reasoning Consistency for Multi-Hop Question Answering

- Safeguarding RAG Pipelines with GMTP: A Gradient-based Masked Token Probability Method for Poisoned Document Detection

- All That Glitters is Not Gold: Improving Robust Retrieval-Augmented Language Models with Fact-Centric Preference Alignment

- May 30 ComposeRAG: A Modular and Composable RAG for Corpus-Grounded Multi-Hop Question Answering

- May 30 Pangu DeepDiver: Adaptive Search Intensity Scaling via Open-Web Reinforcement Learning

- May 30 ClueAnchor: Clue-Anchored Knowledge Reasoning Exploration and Optimization for Retrieval-Augmented Generation

- May 26 R3-RAG: Learning Step-by-Step Reasoning and Retrieval for LLMs via Reinforcement Learning

- May 26 Iterative Self-Incentivization Empowers Large Language Models as Agentic Searchers

- May 24 GainRAG: Preference Alignment in Retrieval-Augmented Generation through Gain Signal Synthesis [Code]

- May 23 Curriculum Guided Reinforcement Learning for Efficient Multi Hop Retrieval Augmented Generation

- May 22 C-3PO: Compact Plug-and-Play Proxy Optimization to Achieve Human-like Retrieval-Augmented Generation

- May 22 R1-Searcher++: Incentivizing the Dynamic Knowledge Acquisition of LLMs via Reinforcement Learning

- May 22 SimpleDeepSearcher: Deep Information Seeking via Web-Powered Reasoning Trajectory Synthesis

- May 22 O2-Searcher: A Searching-based Agent Model for Open-Domain Open-Ended Question Answering

- May 22 Attributing Response to Context: A Jensen–Shannon Divergence Driven Mechanistic Study of Context Attribution in Retrieval-Augmented Generation

- May 21 Ranking Free RAG: Replacing Re-ranking with Selection in RAG for Sensitive Domains

- May 20 s3: You Don’t Need That Much Data to Train a Search Agent via RL

- May 19 Finetune-RAG: Fine-Tuning Language Models to Resist Hallucination in Retrieval-Augmented Generation

- May 15 CL-RAG: Bridging the Gap in Retrieval-Augmented Generation with Curriculum Learning

- May 8 Rank-R1: Enhancing Reasoning in LLM-based Document Rerankers via Reinforcement Learning

- May 6 An Analysis of Hyper-Parameter Optimization Methods for Retrieval Augmented Generation

- May 5 Direct Retrieval-augmented Optimization: Synergizing Knowledge Selection and Language Models

- May 2 Retrieval Augmented Learning: A Retrial-based Large Language Model Self-Supervised Learning and Autonomous Knowledge Generation

- Apr 25 DualRAG: A Dual-Process Approach to Integrate Reasoning and Retrieval for Multi-Hop Question Answering

- Apr 23 Credible plan-driven RAG method for Multi-hop Question Answering

- Apr 22 Exploiting Contextual Knowledge in LLMs through V-usable Information based Layer Enhancement

- Apr 21 AlignRAG: An Adaptable Framework for Resolving Misalignments in Retrieval-Aware Reasoning of RAG

- Apr 17 DeepResearcher: Scaling Deep Research via Reinforcement Learning in Real-world Environments

- Apr 17 CDF-RAG: Causal Dynamic Feedback for Adaptive Retrieval-Augmented Generation

- Apr 17 Accommodate Knowledge Conflicts in Retrieval-augmented LLMs: Towards Reliable Response Generation in the Wild

- Apr 17 ACoRN: Noise-Robust Abstractive Compression in Retrieval-Augmented Language Models

- Apr 15 Preference-based Learning with Retrieval Augmented Generation for Conversational Question Answering

- Apr 10 Plan-and-Refine: Diverse and Comprehensive Retrieval-Augmented Generation

- Apr 8 Search-R1: Training LLMs to Reason and Leverage Search Engines with Reinforcement Learning

- Apr 7 Collab-RAG: Boosting Retrieval-Augmented Generation for Complex Question Answering via White-Box and Black-Box LLM Collaboration

- Apr 4 Efficient Dynamic Clustering-Based Document Compression for Retrieval-Augmented-Generation

- Apr 3 HyperRAG: Enhancing Quality-Efficiency Tradeoffs in Retrieval-Augmented Generation with Reranker KV-Cache Reuse

- Apr 3 Scaling Test-Time Inference with Policy-Optimized, Dynamic Retrieval-Augmented Generation via KV Caching and Decoding

- Apr 1 CoRanking: Collaborative Ranking with Small and Large Ranking Agents

- Mar 31 Insight-RAG: Enhancing LLMs with Insight-Driven Augmentation

- Mar 31 UltraRAG: A Modular and Automated Toolkit for Adaptive Retrieval-Augmented Generation

- Mar 31 Better wit than wealth: Dynamic Parametric Retrieval Augmented Generation for Test-time Knowledge Enhancement

- Mar 30 RARE: Retrieval-Augmented Reasoning Modeling

- Mar 28 Preference-based Learning with Retrieval Augmented Generation for Conversational Question Answering

- Mar 27 ReaRAG: Knowledge-guided Reasoning Enhances Factuality of Large Reasoning Models with Iterative Retrieval Augmented Generation

- Mar 23 ExpertRAG: Efficient RAG with Mixture of Experts -- Optimizing Context Retrieval for Adaptive LLM Responses

- Mar 20 Parameters vs. Context: Fine-Grained Control of Knowledge Reliance in Language Models

- Mar 11 OpenRAG: Optimizing RAG End-to-End via In-Context Retrieval Learning

- Feb 26 Judge as A Judge: Improving the Evaluation of Retrieval-Augmented Generation through the Judge-Consistency of Large Language Models

- Feb 25 RankCoT: Refining Knowledge for Retrieval-Augmented Generation through Ranking Chain-of-Thoughts

- Feb 25 Say Less, Mean More: Leveraging Pragmatics in Retrieval-Augmented Generation

- Feb 25 DRAMA: Diverse Augmentation from Large Language Models to Smaller Dense Retrievers

- Feb 20 Mitigating Lost-in-Retrieval Problems in Retrieval Augmented Multi-Hop Question Answering

- Feb 19 RAG-Gym: Optimizing Reasoning and Search Agents with Process Supervision

- Feb 19 Towards Context-Robust LLMs: A Gated Representation Fine-tuning Approach

- Feb 19 Towards Adaptive Memory-Based Optimization for Enhanced Retrieval-Augmented Generation

- Feb 18 RAG-Reward: Optimizing RAG with Reward Modeling and RLHF

- Feb 17 Revisiting Robust RAG: Do We Still Need Complex Robust Training in the Era of Powerful LLMs?

- Feb 16 RoseRAG: Robust Retrieval-augmented Generation with Small-scale LLMs via Margin-aware Preference Optimization

- Feb 14 Post-training an LLM for RAG? Train on Self-Generated Demonstrations

- Feb 3 DeepRAG: Thinking to Retrieval Step by Step for Large Language Models

- Jan 30 Can we Retrieve Everything All at Once? ARM: An Alignment-Oriented LLM-based Retrieval Method

- Jan 30 RbFT: Robust Fine-tuning for Retrieval-Augmented Generation against Retrieval Defects

- Jan 27 Parametric Retrieval Augmented Generation

- Jan 14 ReARTeR: Retrieval-Augmented Reasoning with Trustworthy Process Rewarding

- Jan 9 SUGAR: Leveraging Contextual Confidence for Smarter Retrieval

- Jan 7 Retrieval-Augmented Generation by Evidence Retroactivity in LLMs

- Jan 2 Synergistic Multi-Agent Framework with Trajectory Learning for Knowledge-Intensive Tasks

- From RAG to Memory: Non-Parametric Continual Learning for Large Language Models

- LaRA: Benchmarking Retrieval-Augmented Generation and Long-Context LLMs -- No Silver Bullet for LC or RAG Routing

- RAGGED: Towards Informed Design of Retrieval Augmented Generation Systems

- DocKS-RAG: Optimizing Document-Level Relation Extraction through LLM-Enhanced Hybrid Prompt Tuning

- Hierarchical Planning for Complex Tasks with Knowledge Graph-RAG and Symbolic Verification

- On the Vulnerability of Applying Retrieval-Augmented Generation within Knowledge-Intensive Application Domains

- C-3PO: Compact Plug-and-Play Proxy Optimization to Achieve Human-like Retrieval-Augmented Generation

- Long-Context Inference with Retrieval-Augmented Speculative Decoding

- Position: Retrieval-augmented systems are currently dangerous medical communicators

- SePer: Measure Retrieval Utility Through The Lens Of Semantic Perplexity Reduction

- Inference Scaling for Long-Context Retrieval Augmented Generation

- Measuring and Enhancing Trustworthiness of LLMs in RAG through Grounded Attributions and Learning to Refuse

- Sufficient Context: A New Lens on Retrieval Augmented Generation Systems

- Enhancing Large Language Models' Situated Faithfulness to External Contexts

- RAG-SR: Retrieval-Augmented Generation for Neural Symbolic Regression

- SmartRAG: Jointly Learn RAG-Related Tasks From the Environment Feedback

- InstructRAG: Instructing Retrieval-Augmented Generation via Self-Synthesized Rationales

- RAG-DDR: Optimizing Retrieval-Augmented Generation Using Differentiable Data Rewards

- TrojanRAG: Retrieval-Augmented Generation Can Be Backdoor Driver in Large Language Models

- Provence: efficient and robust context pruning for retrieval-augmented generation

- Long-Context LLMs Meet RAG: Overcoming Challenges for Long Inputs in RAG

- LongMemEval: Benchmarking Chat Assistants on Long-Term Interactive Memory

- MMed-RAG: Versatile Multimodal RAG System for Medical Vision Language Models

- A Theory for Token-Level Harmonization in Retrieval-Augmented Generation

- SiReRAG: Indexing Similar and Related Information for Multihop Reasoning

- VisRAG: Vision-based Retrieval-augmented Generation on Multi-modality Documents

- ReDeEP: Detecting Hallucination in Retrieval-Augmented Generation via Mechanistic Interpretability

- ChatQA 2: Bridging the Gap to Proprietary LLMs in Long Context and RAG Capabilities

- Accelerating Inference of Retrieval-Augmented Generation via Sparse Context Selection

- Retrieval or Reasoning: The Roles of Graphs and Large Language Models in Efficient Knowledge-Graph-Based Retrieval-Augmented Generation

- Think-on-Graph 2.0: Deep and Faithful Large Language Model Reasoning with Knowledge-guided Retrieval Augmented Generation

- DRoC: Elevating Large Language Models for Complex Vehicle Routing via Decomposed Retrieval of Constraints

- RAPID: Retrieval Augmented Training of Differentially Private Diffusion Models

- Auto-GDA: Automatic Domain Adaptation for Efficient Grounding Verification in Retrieval Augmented Generation

- Speculative RAG: Enhancing Retrieval Augmented Generation through Drafting

- Follow My Instruction and Spill the Beans: Scalable Data Extraction from Retrieval-Augmented Generation Systems

- Chunk-Distilled Language Modeling

- Benchmarking Multimodal Retrieval Augmented Generation with Dynamic VQA Dataset and Self-adaptive Planning Agent

- RAGraph: A General Retrieval-Augmented Graph Learning Framework

- RAGChecker: A Fine-grained Framework for Diagnosing Retrieval-Augmented Generation

- G-Retriever: Retrieval-Augmented Generation for Textual Graph Understanding and Question Answering

- RankRAG: Unifying Context Ranking with Retrieval-Augmented Generation in LLMs

- ChatQA: Surpassing GPT-4 on Conversational QA and RAG

- HippoRAG: Neurobiologically Inspired Long-Term Memory for Large Language Models

- BABILong: Testing the Limits of LLMs with Long Context Reasoning-in-a-Haystack

- Self-Retrieval: End-to-End Information Retrieval with One Large Language Model

- UDA: A Benchmark Suite for Retrieval Augmented Generation in Real-World Document Analysis

- Ad Auctions for LLMs via Retrieval Augmented Generation

- ReFIR: Grounding Large Restoration Models with Retrieval Augmentation

- TableRAG: Million-Token Table Understanding with Language Models

- xRAG: Extreme Context Compression for Retrieval-augmented Generation with One Token

- Scaling Retrieval-Based Language Models with a Trillion-Token Datastore

- Fine-grained Analysis of In-context Linear Estimation: Data, Architecture, and Beyond

- Molecule Generation with Fragment Retrieval Augmentation

- WikiContradict: A Benchmark for Evaluating LLMs on Real-World Knowledge Conflicts from Wikipedia

- Beyond Prompts: Dynamic Conversational Benchmarking of Large Language Models

- ConflictBank: A Benchmark for Evaluating the Influence of Knowledge Conflicts in LLMs

- CRAG - Comprehensive RAG Benchmark

- Dec 19 PA-RAG: RAG Alignment via Multi-Perspective Preference Optimization

- Dec 11 DADIO: Bridging Relevance and Reasoning: Rationale Distillation in Retrieval-Augmented Generation

- Dec 3 RARE: Retrieval-Augmented Reasoning Enhancement for Large Language Models

- Oct 12 Toward General Instruction-Following Alignment for Retrieval-Augmented Generation

- Oct 11 StructRAG: Boosting Knowledge Intensive Reasoning of LLMs via Inference-time Hybrid Information Structurization

- Oct 11 Retriever-and-Memory: Towards Adaptive Note-Enhanced Retrieval-Augmented Generation

- Oct 9 Astute RAG: Overcoming Imperfect Retrieval Augmentation and Knowledge Conflicts for Large Language Models

- Oct 7 TableRAG: Million-Token Table Understanding with Language Models

- Oct 6 Inference Scaling for Long-Context Retrieval Augmented Generation

- Oct 4 How Much Can RAG Help the Reasoning of LLM?

- Oct 2 OneGen: Efficient One-Pass Unified Generation and Retrieval for LLMs

- Oct 2 OPEN-RAG: Enhanced Retrieval-Augmented Reasoning with Open-Source Large Language Models

- Sep 23 Retrieval Augmented Generation (RAG) and Beyond: A Comprehensive Survey on How to Make your LLMs use External Data More Wisely

- Sep 4 Diversify-verify-adapt: Efficient and Robust Retrieval-Augmented Ambiguous Question Answering

- Aug 30 MaFeRw: Query Rewriting with Multi-Aspect Feedbacks for Retrieval-Augmented Large Language Models

- Aug 29 LRP4RAG: Detecting Hallucinations in Retrieval-Augmented Generation via Layer-wise Relevance Propagation

- Aug 21 RAGLAB: A Modular and Research-Oriented Unified Framework for Retrieval-Augmented Generation

- Aug 21 Evidence-backed Fact Checking using RAG and Few-Shot In-Context Learning with LLMs

- Aug 20 Analysis of Plan-based Retrieval for Grounded Text Generation

- Aug 20 Hierarchical Retrieval-Augmented Generation Model with Rethink for Multi-hop Question Answering

- Aug 19 KaPO: Knowledge-aware Preference Optimization for Controllable Knowledge Selection in Retrieval-Augmented Language Models

- Aug 17 TC-RAG: Turing-Complete RAG's Case Study on Medical LLM Systems

- Aug 16 Meta Knowledge for Retrieval Augmented Large Language Models

- Aug 7 EfficientRAG: Efficient Retriever for Multi-Hop Question Answering

- Aug 7 Medical Graph RAG: Towards Safe Medical Large Language Model via Graph Retrieval-Augmented Generation

- Aug 7 Exploring RAG-based Vulnerability Augmentation with LLMs

- Aug 7 Wiping out the limitations of Large Language Models -- A Taxonomy for Retrieval Augmented Generation

- Aug 2 BioRAG: A RAG-LLM Framework for Biological Question Reasoning

- Aug 2 Adaptive Contrastive Decoding in Retrieval-Augmented Generation for Handling Noisy Contexts

- Jul 29 Improving Retrieval Augmented Language Model with Self-Reasoning

- Jul 29 Enhancing Code Translation in Language Models with Few-Shot Learning via Retrieval-Augmented Generation

- Jul 28 Enhancing Code Translation in Language Models with Few-Shot Learning via Retrieval-Augmented Generation

- Jul 25 Modular RAG: Transforming RAG Systems into LEGO-like Reconfigurable Frameworks

- Jul 20 Golden-Retriever: High-Fidelity Agentic Retrieval Augmented Generation for Industrial Knowledge Base

- Jul 19 RAG-QA Arena: Evaluating Domain Robustness for Long-form Retrieval Augmented Question Answering

- Jul 17 Optimizing Query Generation for Enhanced Document Retrieval in RAG

- Jul 11 Speculative RAG: Enhancing Retrieval Augmented Generation through Drafting

- Jul 2 RankRAG: Unifying Context Ranking with Retrieval-Augmented Generation in LLMs

- Jul 1 Searching for Best Practices in Retrieval-Augmented Generation

- Jun 29 From RAG to RICHES: Retrieval Interlaced with Sequence Generation

- Jun 27 SEAKR: Self-aware Knowledge Retrieval for Adaptive Retrieval Augmented Generation

- Jun 27 Unified Active Retrieval for Retrieval Augmented Generation

- Jun 27 CrAM: Credibility-Aware Attention Modification in LLMs for Combating Misinformation in RAG

- Jun 25 Entropy-Based Decoding for Retrieval-Augmented Large Language Models

- Jun 21 RichRAG: Crafting Rich Responses for Multi-faceted Queries in Retrieval-Augmented Generation

- Jun 21 LongRAG: Enhancing Retrieval-Augmented Generation with Long-context LLMs

- Jun 19 R2AG: Incorporating Retrieval Information into Retrieval Augmented Generation

- Jun 19 InstructRAG: Instructing Retrieval-Augmented Generation with Explicit Denoising

- Jun 18 Retrieve, Summarize, Plan: Advancing Multi-hop Question Answering with an Iterative Approach

- Jun 18 PlanRAG: A Plan-then-Retrieval Augmented Generation for Generative Large Language Models as Decision Makers

- Jun 18 Retrieval Meets Reasoning: Dynamic In-Context Editing for Long-Text Understanding

- Jun 12 Unsupervised Information Refinement Training of Large Language Models for Retrieval-Augmented Generation

- Jun 7 Multi-Head RAG: Solving Multi-Aspect Problems with LLMs

- Jun 7 CRAG - Comprehensive RAG Benchmark

- Jun 1 Mix-of-Granularity: Optimize the Chunking Granularity for Retrieval-Augmented Generation

- May 30 GNN-RAG: Graph Neural Retrieval for Large Language Model Reasoning

- May 26 Cocktail: A Comprehensive Information Retrieval Benchmark with LLM-Generated Documents Integration

- May 23 HippoRAG: Neurobiologically Inspired Long-Term Memory for Large Language Models

- May 22 xRAG: Extreme Context Compression for Retrieval-augmented Generation with One Token

- May 14 ERATTA: Extreme RAG for Table To Answers with Large Language Models

- May 13 Evaluation of Retrieval-Augmented Generation: A Survey

- May 12 DuetRAG: Collaborative Retrieval-Augmented Generation

- May 6 ERAGent: Enhancing Retrieval-Augmented Language Models with Improved Accuracy, Efficiency, and Personalization

- Apr 26 Better Synthetic Data by Retrieving and Transforming Existing Datasets

- Apr 22 LLMs Know What They Need: Leveraging a Missing Information Guided Framework to Empower Retrieval-Augmented Generation

- Apr 16 How faithful are RAG models? Quantifying the tug-of-war between RAG and LLMs' internal prior

- Apr 12 Reducing hallucination in structured outputs via Retrieval-Augmented Generation

- Apr 1 ARAGOG: Advanced RAG Output Grading

- Mar 21 FIT-RAG: Black-Box RAG with Factual Information and Token Reduction

- Mar 15 RAFT: Adapting Language Model to Domain Specific RAG

- Mar 14 G-Retriever: Retrieval-Augmented Generation for Textual Graph Understanding and Question Answering

- Mar 8 RAT: Retrieval Augmented Thoughts Elicit Context-Aware Reasoning in Long-Horizon Generation

- Feb 27 REAR: A Relevance-Aware Retrieval-Augmented Framework for Open-Domain Question Answering

- Feb 22 Tug-of-War Between Knowledge: Exploring and Resolving Knowledge Conflicts in Retrieval-Augmented Language Models

- Feb 21 ActiveRAG: Revealing the Treasures of Knowledge via Active Learning

- Feb 16 Retrieve Only When It Needs: Adaptive Retrieval Augmentation for Hallucination Mitigation in Large Language Models

- Feb 16 Corrective Retrieval Augmented Generation

- Jan 27 Enhancing Large Language Model Performance To Answer Questions and Extract Information More Accurately

- Jan 24 UniMS-RAG: A Unified Multi-source Retrieval-Augmented Generation for Personalized Dialogue Systems

$main$

- BlendFilter: Advancing Retrieval-Augmented Large Language Models via Query Generation Blending and Knowledge Filtering

- “Glue pizza and eat rocks” - Exploiting Vulnerabilities in Retrieval-Augmented Generative Models

- SEER: Self-Aligned Evidence Extraction for Retrieval-Augmented Generation

- Crafting Personalized Agents through Retrieval-Augmented Generation on Editable Memory Graphs

- REAR: A Relevance-Aware Retrieval-Augmented Framework for Open-Domain Question Answering

- Model Internals-based Answer Attribution for Trustworthy Retrieval-Augmented Generation

- TimeR4: Time-aware Retrieval-Augmented Large Language Models for Temporal Knowledge Graph Question Answering

- Synchronous Faithfulness Monitoring for Trustworthy Retrieval-Augmented Generation

- ATM: Adversarial Tuning Multi-agent System Makes a Robust Retrieval-Augmented Generator

- Lifelong Knowledge Editing for LLMs with Retrieval-Augmented Continuous Prompt Learning

- Chain-of-Note: Enhancing Robustness in Retrieval-Augmented Language Models

- Searching for Best Practices in Retrieval-Augmented Generation

- Unveiling and Consulting Core Experts in Retrieval-Augmented MoE-based LLMs

- RE-RAG: Improving Open-Domain QA Performance and Interpretability with Relevance Estimator in Retrieval-Augmented Generation

- LongRAG: A Dual-perspective Retrieval-Augmented Generation Paradigm for Long-Context Question Answering

- ZEBRA: Zero-Shot Example-Based Retrieval Augmentation for Commonsense Question Answering

- RULE: Reliable Multimodal RAG for Factuality in Medical Vision Language Models

- From RAG to Riches: Retrieval Interlaced with Sequence Generation

- Summary of a Haystack: A Challenge to Long-Context LLMs and RAG Systems

- Deciphering the Interplay of Parametric and Non-Parametric Memory in RAG Models

- DynamicER: Resolving Emerging Mentions to Dynamic Entities for RAG

- RAG-QA Arena: Evaluating Domain Robustness for Long-form Retrieval Augmented Question Answering

- Do You Know What You Are Talking About? Characterizing Query-Knowledge Relevance For Reliable Retrieval Augmented Generation

$findings$

- RaFe: Ranking Feedback Improves Query Rewriting for RAG

- Adaptive Contrastive Decoding in Retrieval-Augmented Generation for Handling Noisy Contexts

- BSharedRAG: Backbone Shared Retrieval-Augmented Generation for the E-commerce Domain

- LONG2RAG: Evaluating Long-Context & Long-Form Retrieval-Augmented Generation with Key Point Recall

- Open-RAG: Enhanced Retrieval Augmented Reasoning with Open-Source Large Language Models

- TRACE the Evidence: Constructing Knowledge-Grounded Reasoning Chains for Retrieval-Augmented Generation

- BERGEN: A Benchmarking Library for Retrieval-Augmented Generation

- Learning to Plan for Retrieval-Augmented Large Language Models from Knowledge Graphs

- Retrieval-Augmented Code Generation for Situated Action Generation: A Case Study on Minecraft

- “Knowing When You Don’t Know”: A Multilingual Relevance Assessment Dataset for Robust Retrieval-Augmented Generation

- LLMs as Collaborator: Demands-Guided Collaborative Retrieval-Augmented Generation for Commonsense Knowledge-Grounded Open-Domain Dialogue Systems

- Retrieving, Rethinking and Revising: The Chain-of-Verification Can Improve Retrieval Augmented Generation

- R2AG: Incorporating Retrieval Information into Retrieval Augmented Generation

- RAG-Studio: Towards In-Domain Adaptation Of Retrieval Augmented Generation Through Self-Alignment

- Unified Active Retrieval for Retrieval Augmented Generation

- SeRTS: Self-Rewarding Tree Search for Biomedical Retrieval-Augmented Generation

- Controlling Risk of Retrieval-augmented Generation: A Counterfactual Prompting Framework

- AutoRAG-HP: Automatic Online Hyper-Parameter Tuning for Retrieval-Augmented Generation

- Typos that Broke the RAG’s Back: Genetic Attack on RAG Pipeline by Simulating Documents in the Wild via Low-level Perturbations

$main$

- Unsupervised Information Refinement Training of Large Language Models for Retrieval-Augmented Generation

- An Information Bottleneck Perspective for Effective Noise Filtering on Retrieval-Augmented Generation

- Bridging the Preference Gap between Retrievers and LLMs

- ARL2: Aligning Retrievers with Black-box Large Language Models via Self-guided Adaptive Relevance Labeling

- M-RAG: Reinforcing Large Language Model Performance through Retrieval-Augmented Generation with Multiple Partitions

- Generate-then-Ground in Retrieval-Augmented Generation for Multi-hop Question Answering

- Enhancing Noise Robustness of Retrieval-Augmented Language Models with Adaptive Adversarial Training

- RAGTruth: A Hallucination Corpus for Developing Trustworthy Retrieval-Augmented Language Models

- Grounding Language Model with Chunking-Free In-Context Retrieval

- On the Role of Long-tail Knowledge in Retrieval Augmented Large Language Models

- Landmark Embedding: A Chunking-Free Embedding Method For Retrieval Augmented Long-Context Large Language Models

- A Multi-Task Embedder For Retrieval Augmented LLM

- To Generate or to Retrieve? On the Effectiveness of Artificial Contexts for Medical Open-Domain Question Answering

- Blinded by Generated Contexts: How Language Models Merge Generated and Retrieved Contexts When Knowledge Conflicts?

- Small Models, Big Insights: Leveraging Slim Proxy Models To Decide When and What to Retrieve for LLMs

- RAM-EHR: Retrieval Augmentation Meets Clinical Predictions on Electronic Health Records

- DRAGIN: Dynamic Retrieval Augmented Generation based on the Real-time Information Needs of Large Language Models

- Retrieval Augmented Fact Verification by Synthesizing Contrastive Arguments

- Dataflow-Guided Retrieval Augmentation for Repository-Level Code Completion

- Understanding Retrieval Robustness for Retrieval-augmented Image Captioning

- Spiral of Silence: How is Large Language Model Killing Information Retrieval?—A Case Study on Open Domain Question Answering

- REANO: Optimising Retrieval-Augmented Reader Models through Knowledge Graph Generation

- Synergistic Interplay between Search and Large Language Models for Information Retrieval

$findings$

- MORE: Multi-mOdal REtrieval Augmented Generative Commonsense Reasoning

- RA-ISF: Learning to Answer and Understand from Retrieval Augmentation via Iterative Self-Feedback

- Improving Retrieval Augmented Open-Domain Question-Answering with Vectorized Contexts

- When Do LLMs Need Retrieval Augmentation? Mitigating LLMs’ Overconfidence Helps Retrieval Augmentation

- RetrievalQA: Assessing Adaptive Retrieval-Augmented Generation for Short-form Open-Domain Question Answering

- Retrieval-Augmented Retrieval: Large Language Models are Strong Zero-Shot Retriever

- Benchmarking Retrieval-Augmented Generation for Medicine

- Unraveling and Mitigating Retriever Inconsistencies in Retrieval-Augmented Large Language Models

- ChatKBQA: A Generate-then-Retrieve Framework for Knowledge Base Question Answering with Fine-tuned Large Language Models

- The Good and The Bad: Exploring Privacy Issues in Retrieval-Augmented Generation (RAG)

- C-RAG: Certified Generation Risks for Retrieval-Augmented Language Models

- DFA-RAG: Conversational Semantic Router for Large Language Model with Definite Finite Automaton

- InstructRetro: Instruction Tuning post Retrieval-Augmented Pretraining

- A Statistical Framework for Data-dependent Retrieval-Augmented Models

- Superposition Prompting: Improving and Accelerating Retrieval-Augmented Generation

- Trustworthy Alignment of Retrieval-Augmented Large Language Models via Reinforcement Learning

- Understanding Retrieval-Augmented Task Adaptation for Vision-Language Models

- Bottleneck-Minimal Indexing for Generative Document Retrieval

- PinNet: Pinpoint Instructive Information for Retrieval Augmented Code-to-Text Generation

- Retrieval-Augmented Score Distillation for Text-to-3D Generation

- Automated Evaluation of Retrieval-Augmented Language Models with Task-Specific Exam Generation

- Accelerating Iterative Retrieval-augmented Language Model Serving with Speculation

- Self-RAG: Learning to Retrieve, Generate, and Critique through Self-Reflection

- BTR: Binary Token Representations for Efficient Retrieval Augmented Language Models

- Making Retrieval-Augmented Language Models Robust to Irrelevant Context

- RA-DIT: Retrieval-Augmented Dual Instruction Tuning

- RAPTOR: Recursive Abstractive Processing for Tree-Organized Retrieval

- RECOMP: Improving Retrieval-Augmented LMs with Context Compression and Selective Augmentation

- Retrieval meets Long Context Large Language Models

- SuRe: Summarizing Retrievals using Answer Candidates for Open-domain QA of LLMs

You are welcome to contact us by email at [email protected].


Similar Open Source Tools


rag-in-action

rag-in-action is a GitHub repository that provides a practical course structure for developing a RAG system based on DeepSeek. The repository likely contains resources, code samples, and tutorials to guide users through the process of building and implementing a RAG system using DeepSeek technology. Users interested in learning about RAG systems and their development may find this repository helpful in gaining hands-on experience and practical knowledge in this area.

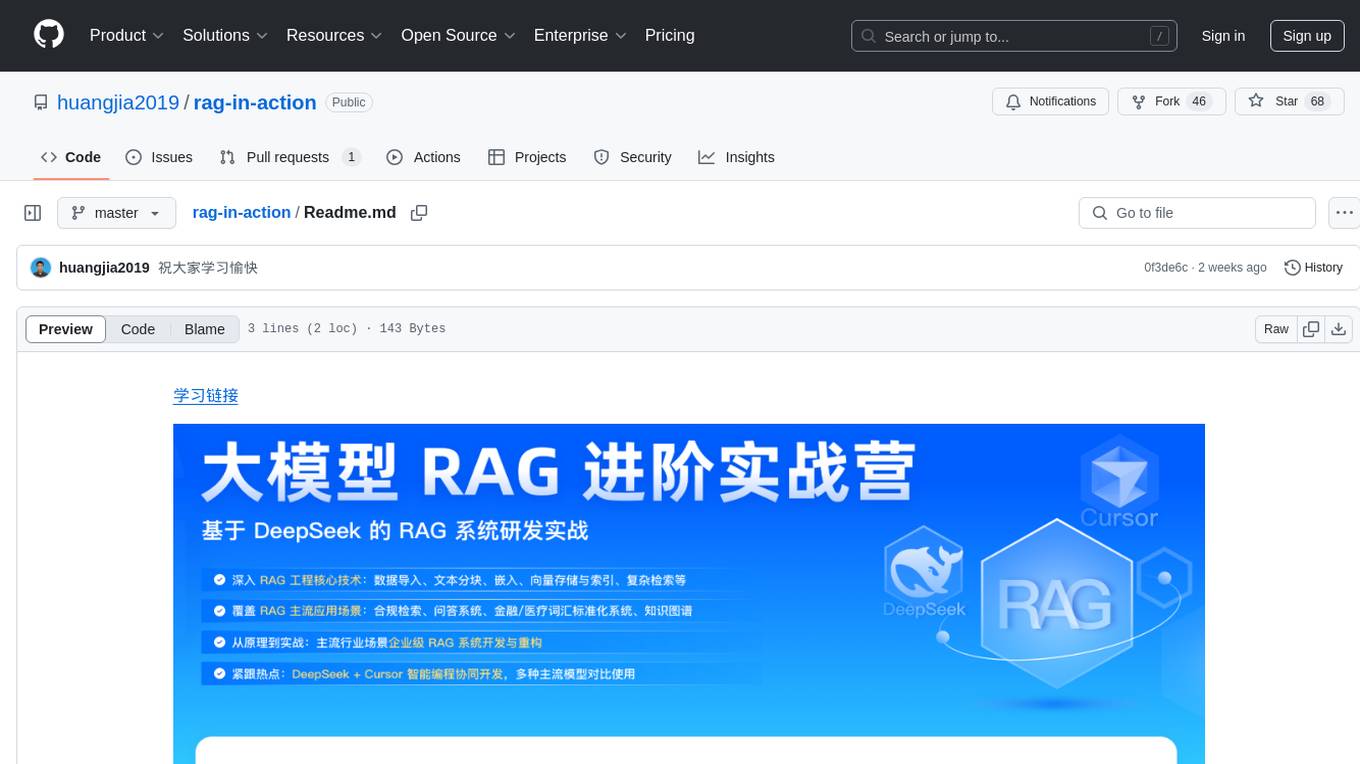

LLMs-playground

LLMs-playground is a repository containing code examples and tutorials for learning and experimenting with Large Language Models (LLMs). It provides a hands-on approach to understanding how LLMs work and how to fine-tune them for specific tasks. The repository covers various LLM architectures, pre-training techniques, and fine-tuning strategies, making it a valuable resource for researchers, students, and practitioners interested in natural language processing and machine learning. By exploring the code and following the tutorials, users can gain practical insights into working with LLMs and apply their knowledge to real-world projects.

God-Level-AI

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This repository is designed for individuals aiming to excel in the field of Data and AI, providing video sessions and text content for learning. It caters to those in leadership positions, professionals, and students, emphasizing the need for dedicated effort to achieve excellence in the tech field. The content covers various topics with a focus on practical application.

awesome-ai-agent-papers

This repository contains a curated list of papers related to artificial intelligence agents. It includes research papers, articles, and resources covering various aspects of AI agents, such as reinforcement learning, multi-agent systems, natural language processing, and more. Whether you are a researcher, student, or practitioner in the field of AI, this collection of papers can serve as a valuable reference to stay updated with the latest advancements and trends in AI agent technologies.

ai-tutor-rag-system

The AI Tutor RAG System repository contains Jupyter notebooks supporting the RAG course, focusing on enhancing AI models with retrieval-based methods. It covers foundational and advanced concepts in retrieval-augmented generation, including data retrieval techniques, model integration with retrieval systems, and practical applications of RAG in real-world scenarios.

enterprise-h2ogpte

Enterprise h2oGPTe - GenAI RAG is a repository containing code examples, notebooks, and benchmarks for the enterprise version of h2oGPTe, a powerful AI tool for generating text based on the RAG (Retrieval-Augmented Generation) architecture. The repository provides resources for leveraging h2oGPTe in enterprise settings, including implementation guides, performance evaluations, and best practices. Users can explore various applications of h2oGPTe in natural language processing tasks, such as text generation, content creation, and conversational AI.

RAG-To-Know

RAG-To-Know is a versatile tool for knowledge extraction and summarization. It leverages the RAG (Retrieval-Augmented Generation) framework to provide a seamless way to retrieve and summarize information from various sources. With RAG-To-Know, users can easily extract key insights and generate concise summaries from large volumes of text data. The tool is designed to streamline the process of information retrieval and summarization, making it ideal for researchers, students, journalists, and anyone looking to quickly grasp the essence of complex information.

intro-llm.github.io

Large Language Models (LLM) are language models built by deep neural networks containing hundreds of billions of weights, trained on a large amount of unlabeled text using self-supervised learning methods. Since 2018, companies and research institutions including Google, OpenAI, Meta, Baidu, and Huawei have released various models such as BERT, GPT, etc., which have performed well in almost all natural language processing tasks. Starting in 2021, large models have shown explosive growth, especially after the release of ChatGPT in November 2022, attracting worldwide attention. Users can interact with systems using natural language to achieve various tasks from understanding to generation, including question answering, classification, summarization, translation, and chat. Large language models demonstrate powerful knowledge of the world and understanding of language. This repository introduces the basic theory of large language models including language models, distributed model training, and reinforcement learning, and uses the Deepspeed-Chat framework as an example to introduce the implementation of large language models and ChatGPT-like systems.

ai-workshop-code

The ai-workshop-code repository contains code examples and tutorials for various artificial intelligence concepts and algorithms. It serves as a practical resource for individuals looking to learn and implement AI techniques in their projects. The repository covers a wide range of topics, including machine learning, deep learning, natural language processing, computer vision, and reinforcement learning. By exploring the code and following the tutorials, users can gain hands-on experience with AI technologies and enhance their understanding of how these algorithms work in practice.

cs-self-learning

This repository serves as an archive for computer science learning notes, code, and materials. It covers a wide range of topics including basic knowledge, AI, backend & big data, tools, and other related areas. The content is organized into sections and subsections for easy navigation and reference. Users can find learning resources, programming practices, and tutorials on various subjects such as languages, data structures & algorithms, AI, frameworks, databases, development tools, and more. The repository aims to support self-learning and skill development in the field of computer science.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

osaurus

Osaurus is a versatile open-source tool designed for data scientists and machine learning engineers. It provides a wide range of functionalities for data preprocessing, feature engineering, model training, and evaluation. With Osaurus, users can easily clean and transform raw data, extract relevant features, build and tune machine learning models, and analyze model performance. The tool supports various machine learning algorithms and techniques, making it suitable for both beginners and experienced practitioners in the field. Osaurus is actively maintained and updated to incorporate the latest advancements in the machine learning domain, ensuring users have access to state-of-the-art tools and methodologies for their projects.

deeppowers

Deeppowers is a powerful Python library for deep learning applications. It provides a wide range of tools and utilities to simplify the process of building and training deep neural networks. With Deeppowers, users can easily create complex neural network architectures, perform efficient training and optimization, and deploy models for various tasks. The library is designed to be user-friendly and flexible, making it suitable for both beginners and experienced deep learning practitioners.

artificial-intelligence

This repository contains a collection of AI projects implemented in Python, primarily in Jupyter notebooks. The projects cover various aspects of artificial intelligence, including machine learning, deep learning, natural language processing, computer vision, and more. Each project is designed to showcase different AI techniques and algorithms, providing a hands-on learning experience for users interested in exploring the field of artificial intelligence.

Awesome-LLM-Agent-Optimization-Papers

This repository contains a curated list of papers related to agent optimization in reinforcement learning. It includes research papers, articles, and resources that focus on improving the performance of agents in various environments through optimization techniques. The collection covers a wide range of topics such as policy optimization, reward shaping, exploration strategies, and more. Whether you are a researcher, student, or practitioner in the field of reinforcement learning, this repository serves as a valuable resource to stay updated on the latest advancements and best practices in agent optimization.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PIIs
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.