LangBot

Production-grade platform for building agentic IM bots on multiple platforms. Provides Agent, knowledge base orchestration, and a plugin system. Bots for Discord / Slack / LINE / Telegram / WeChat (WeCom, WeCom AI Bot, Official Account) / Feishu / DingTalk / QQ. Integrated with, e.g., ChatGPT (GPT), DeepSeek, Dify, n8n, Langflow, Coze, Claude, Gemini, MiniMax, Ollama, SiliconFlow, Moonshot, GLM, clawdbot / moltbot / openclaw

Stars: 15290

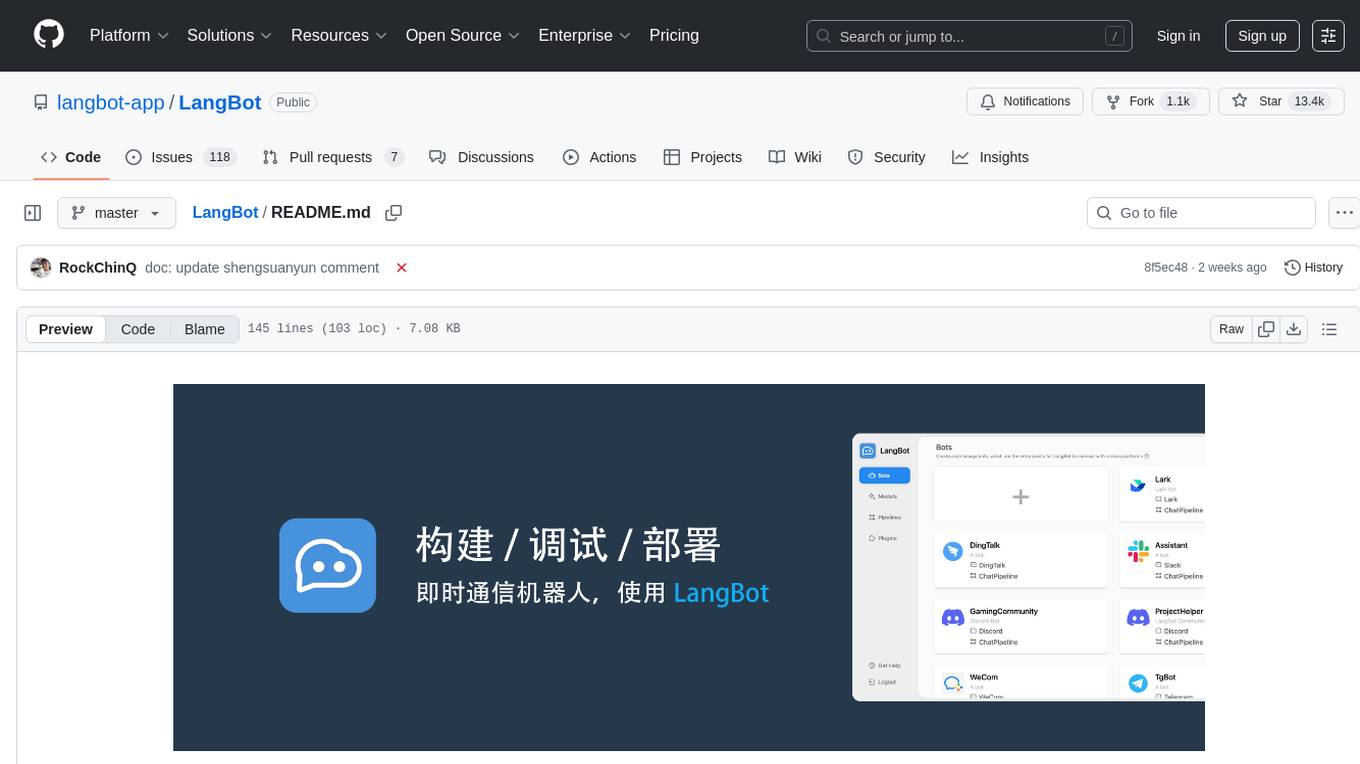

LangBot is an open-source, LLM-native platform for developing instant messaging bots. It aims to provide a plug-and-play development experience, offers LLM application features such as Agent, RAG, and MCP, adapts to mainstream instant messaging platforms worldwide, and provides rich APIs to support custom development.

README:

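Start with a single command using uvx (uv must be installed first):

uvx langbot

Then visit http://localhost:5300 to get started.

To deploy with Docker Compose instead:

git clone https://github.com/langbot-app/LangBot

cd LangBot/docker

docker compose up -d

Then visit http://localhost:5300 to get started.

For details, see the Docker deployment documentation.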
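After either start-up method, the WebUI may take a moment to come up. The following is a minimal sketch, not part of LangBot itself, of how one might wait for the address mentioned above to respond. The function name `wait_for_webui` and the `timeout` and `interval` parameters are hypothetical and chosen for illustration; only the URL http://localhost:5300 comes from the README.

```python
import time
import urllib.error
import urllib.request


def wait_for_webui(url: str = "http://localhost:5300",
                   timeout: float = 60.0,
                   interval: float = 2.0) -> bool:
    """Poll `url` until it answers over HTTP or `timeout` seconds elapse.

    Hypothetical helper: the name and parameters are illustrative only.
    """
    # The target is a local service, so bypass any system-wide proxy settings.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    deadline = time.monotonic() + timeout
    while True:
        try:
            with opener.open(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            # The server answered with an error status, so it is up.
            return True
        except (urllib.error.URLError, OSError):
            # Connection refused or timed out: not ready yet.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

Calling `wait_for_webui()` after `uvx langbot` or `docker compose up -d` returns `True` once the port answers, and `False` if nothing responds within the timeout. It only checks that an HTTP server is listening; it does not verify that LangBot has finished initializing.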

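LangBot is listed on BaoTa Panel (宝塔面板). If you already have BaoTa Panel installed, follow the documentation to use it.

A community-contributed Zeabur template is available.

To run a release build directly, see the manual deployment documentation.

See the Kubernetes deployment documentation.

Click the Star and Watch buttons in the upper-right corner of the repository to get the latest updates.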

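- 💬 LLM chat and Agent: supports many large models and works in both group chats and private chats; offers multi-turn conversation, tool calling, multimodal input, and streaming output; ships with a built-in RAG (knowledge base) implementation; and integrates deeply with LLMOps platforms such as Dify, Coze, n8n, and Langflow.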

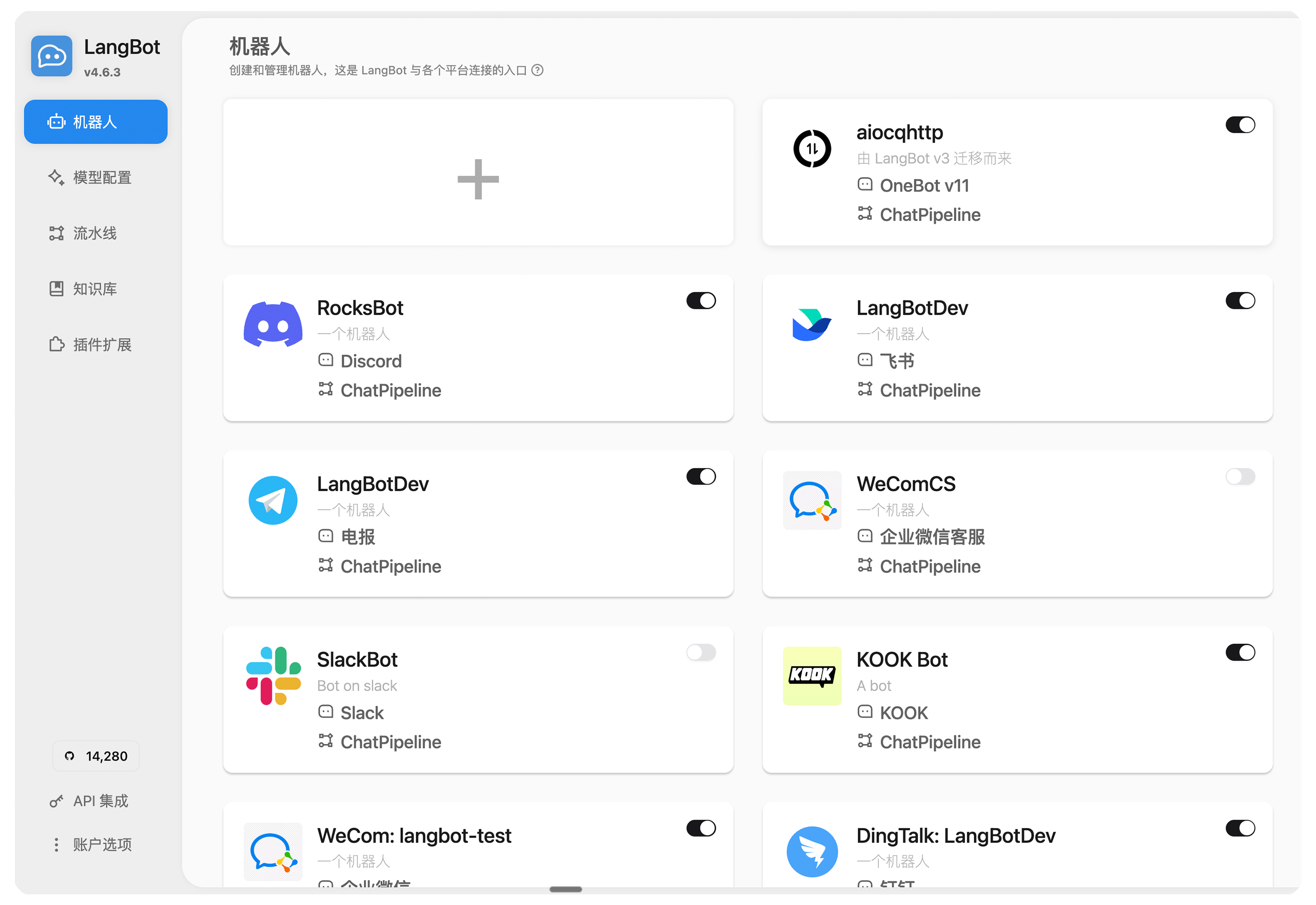

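- 🤖 Multi-platform support: currently supports QQ, QQ Channels, WeCom, personal WeChat, Feishu, Discord, Telegram, KOOK, Slack, LINE, and other platforms.

- 🛠️ High stability, full-featured: native support for access control, rate limiting, and sensitive-word filtering; simple configuration with multiple deployment options.

- 🧩 Plugin extensions, active community: a production-grade plugin system with high stability and security that supports event-driven and component-extension plugin mechanisms; compatible with Anthropic's MCP protocol; hundreds of plugins are already available.

- 😻 Web management panel: an advanced WebUI panel for configuring, managing, and monitoring bots in the most intuitive way.

- 📊 Production-grade features: supports multiple pipeline configurations, so different bots can serve different scenarios. Provides comprehensive monitoring and exception handling. Already adopted by multiple enterprises.

For detailed specifications and features, see the documentation.

Or visit the demo environment: https://demo.langbot.dev/

- Login: email: [email protected], password: langbot123456

- Note: this is a public environment that only showcases the WebUI. Do not enter any sensitive information there.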

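| Platform | Status | Notes |
|---|---|---|
| QQ personal account | ✅ | Private and group chats on QQ personal accounts |
| QQ official bot | ✅ | QQ official bot; supports channels, private chats, and group chats |
| WeCom | ✅ | |
| WeCom external customer service | ✅ | |
| WeCom AI Bot | ✅ | |
| Personal WeChat | ✅ | |
| WeChat Official Account | ✅ | |
| Feishu | ✅ | |
| DingTalk | ✅ | |
| KOOK | ✅ | |
| Discord | ✅ | |
| Telegram | ✅ | |
| Slack | ✅ | |
| LINE | ✅ | |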

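| Model | Status | Notes |
|---|---|---|
| OpenAI | ✅ | Works with any model that exposes an OpenAI-format API |
| DeepSeek | ✅ | |
| Moonshot | ✅ | |
| Anthropic | ✅ | |
| xAI | ✅ | |
| Zhipu AI (智谱AI) | ✅ | |
| 胜算云 | ✅ | Can call large models from around the world (recommended) |
| 优云智算 | ✅ | LLM and GPU resource platform |
| PPIO | ✅ | LLM and GPU resource platform |
| 接口 AI | ✅ | LLM aggregation platform focused on access to models worldwide |
| 302.AI | ✅ | LLM aggregation platform |
| Google Gemini | ✅ | |
| Dify | ✅ | LLMOps platform |
| Ollama | ✅ | Local LLM runtime platform |
| LMStudio | ✅ | Local LLM runtime platform |
| GiteeAI | ✅ | LLM API aggregation platform |
| SiliconFlow | ✅ | LLM aggregation platform |
| 小马算力 | ✅ | LLM aggregation platform |
| Alibaba Cloud Bailian (阿里云百炼) | ✅ | LLM aggregation platform, LLMOps platform |
| Volcengine Ark (火山方舟) | ✅ | LLM aggregation platform, LLMOps platform |
| ModelScope | ✅ | LLM aggregation platform |
| MCP | ✅ | Supports obtaining tools via the MCP protocol |
| 百宝箱Tbox | ✅ | Ant Group's Tbox agent platform; 1 billion free LLM tokens per month |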

| Platform/Model | Notes |
|---|---|
| FishAudio | Plugin |
| 海豚 AI | Plugin |
| AzureTTS | Plugin |

| Platform/Model | Notes |
|---|---|
| Alibaba Cloud Bailian (阿里云百炼) | Plugin |

Thanks to the following code contributors and other community members for their contributions to LangBot:


Similar Open Source Tools


LangBot

LangBot is a highly stable, extensible, and multimodal instant messaging chatbot platform based on large language models. It supports various large models, adapts to group chats and private chats, and has capabilities for multi-turn conversations, tool invocation, and multimodal interactions. It is deeply integrated with Dify and currently supports QQ and QQ channels, with plans to support platforms like WeChat, WhatsApp, and Discord. The platform offers high stability, comprehensive functionality, native support for access control, rate limiting, sensitive word filtering mechanisms, and simple configuration with multiple deployment options. It also features plugin extension capabilities, an active community, and a new web management panel for managing LangBot instances through a browser.

yudao-ui-admin-vue3

The yudao-ui-admin-vue3 repository is an open-source project focused on building a fast development platform for developers in China. It utilizes Vue3 and Element Plus to provide features such as configurable themes, internationalization, dynamic route permission generation, common component encapsulation, and rich examples. The project supports the latest front-end technologies like Vue3 and Vite4, and also includes tools like TypeScript, pinia, vueuse, vue-i18n, vue-router, unocss, iconify, and wangeditor. It offers a range of development tools and features for system functions, infrastructure, workflow management, payment systems, member centers, data reporting, e-commerce systems, WeChat public accounts, ERP systems, and CRM systems.

JiwuChat

JiwuChat is a lightweight multi-platform chat application built on Tauri2 and Nuxt3, with various real-time messaging features, AI group chat bots (such as 'iFlytek Spark', 'KimiAI' etc.), WebRTC audio-video calling, screen sharing, and AI shopping functions. It supports seamless cross-device communication, covering text, images, files, and voice messages, also supporting group chats and customizable settings. It provides light/dark mode for efficient social networking.

litellm

LiteLLM is a tool that allows you to call all LLM APIs using the OpenAI format. This includes Bedrock, Huggingface, VertexAI, TogetherAI, Azure, OpenAI, and more. LiteLLM manages translating inputs to provider's `completion`, `embedding`, and `image_generation` endpoints, providing consistent output, and retry/fallback logic across multiple deployments. It also supports setting budgets and rate limits per project, api key, and model.

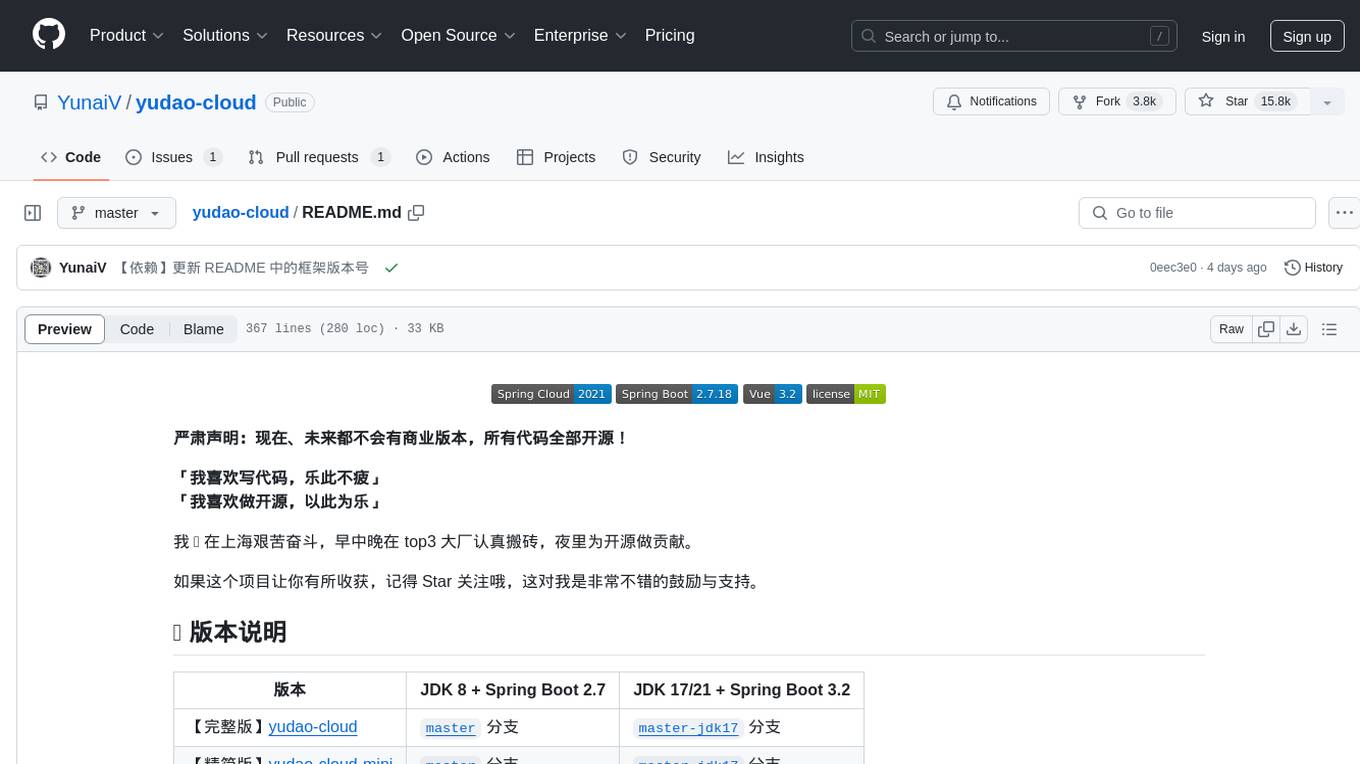

yudao-cloud

Yudao-cloud is an open-source project designed to provide a fast development platform for developers in China. It includes various system functions, infrastructure, member center, data reports, workflow, mall system, WeChat public account, CRM, ERP, etc. The project is based on Java backend with Spring Boot and Spring Cloud Alibaba microservices architecture. It supports multiple databases, message queues, authentication systems, dynamic menu loading, SaaS multi-tenant system, code generator, real-time communication, integration with third-party services like WeChat, Alipay, and more. The project is well-documented and follows the Alibaba Java development guidelines, ensuring clean code and architecture.

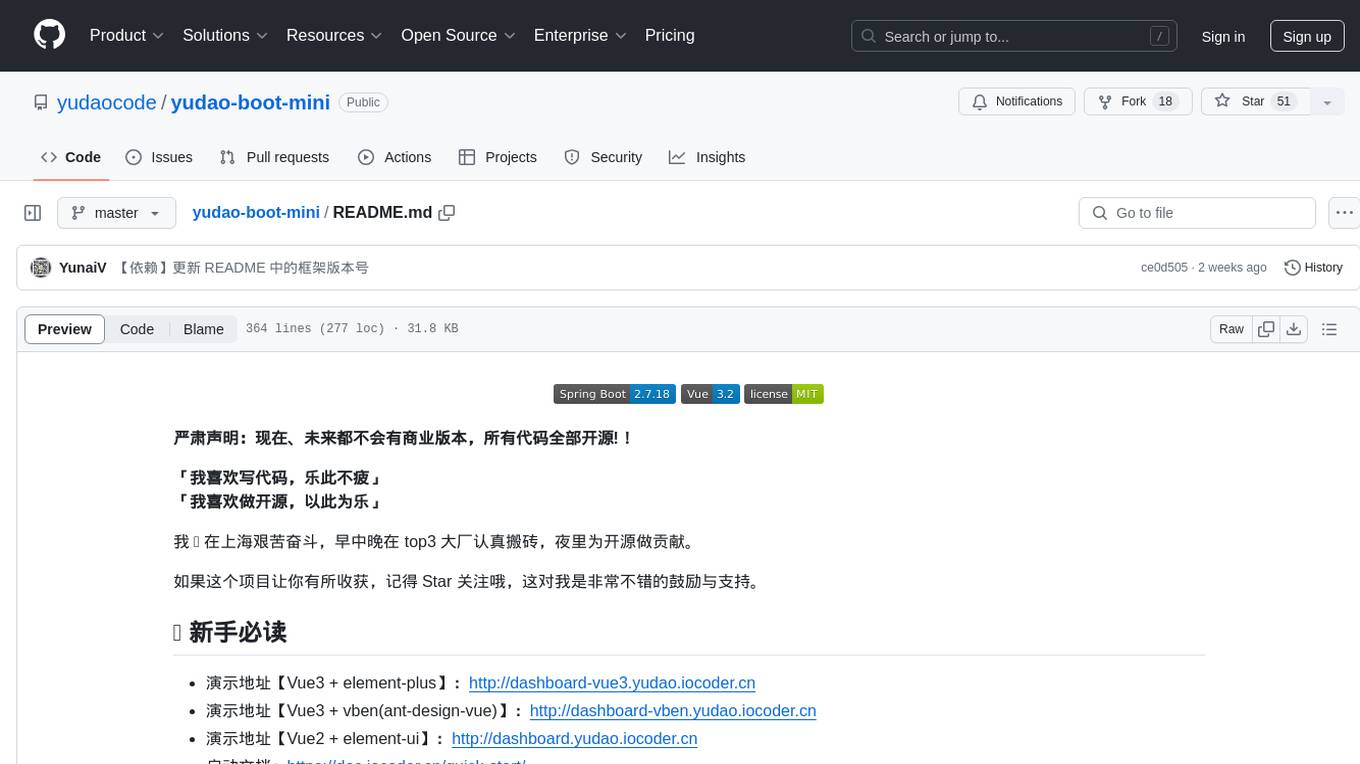

yudao-boot-mini

yudao-boot-mini is an open-source project focused on developing a rapid development platform for developers in China. It includes features like system functions, infrastructure, member center, data reports, workflow, mall system, WeChat official account, CRM, ERP, etc. The project is based on Spring Boot with Java backend and Vue for frontend. It offers various functionalities such as user management, role management, menu management, department management, workflow management, payment system, code generation, API documentation, database documentation, file service, WebSocket integration, message queue, Java monitoring, and more. The project is licensed under the MIT License, allowing both individuals and enterprises to use it freely without restrictions.

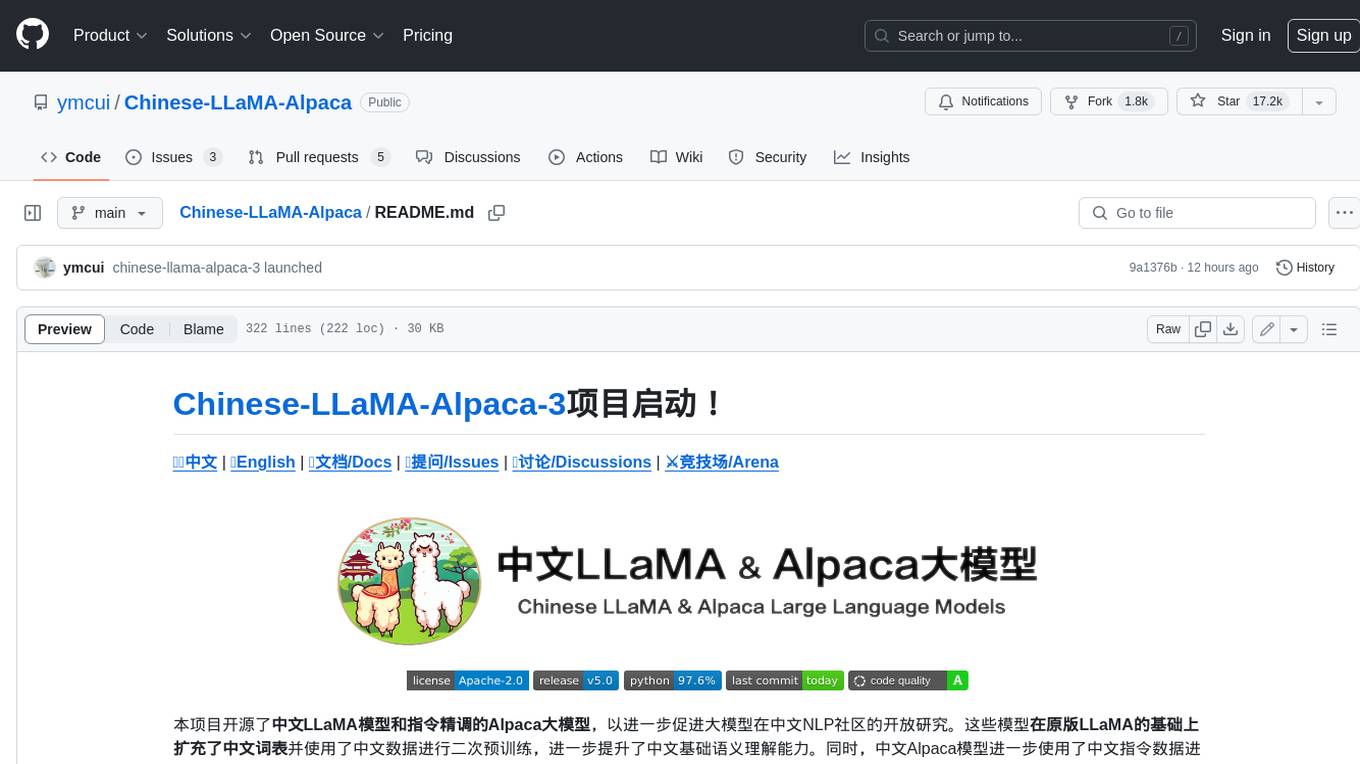

Chinese-LLaMA-Alpaca

This project open sources the **Chinese LLaMA model and the Alpaca large model fine-tuned with instructions**, to further promote the open research of large models in the Chinese NLP community. These models **extend the Chinese vocabulary based on the original LLaMA** and use Chinese data for secondary pre-training, further enhancing the basic Chinese semantic understanding ability. At the same time, the Chinese Alpaca model further uses Chinese instruction data for fine-tuning, significantly improving the model's understanding and execution of instructions.

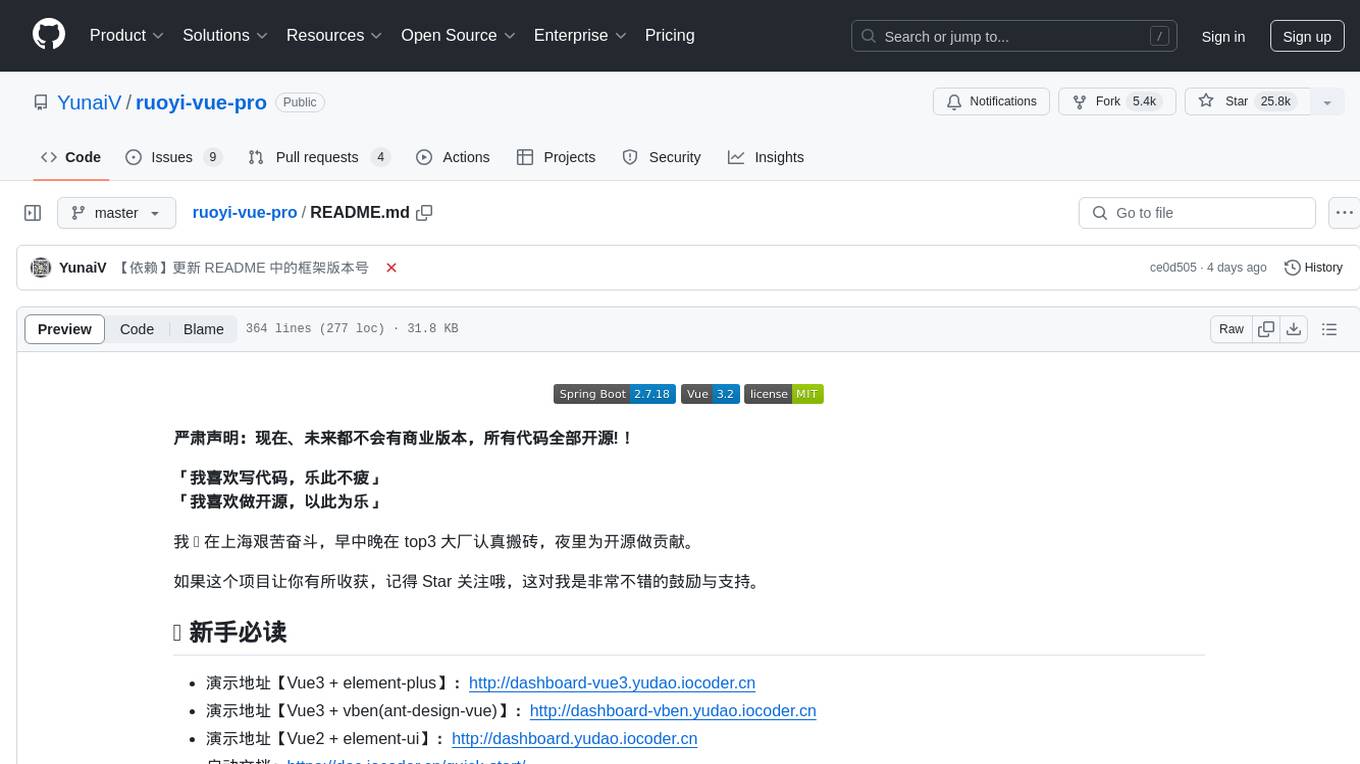

ruoyi-vue-pro

The ruoyi-vue-pro repository is an open-source project that provides a comprehensive development platform with various functionalities such as system features, infrastructure, member center, data reports, workflow, payment system, mall system, ERP system, CRM system, and AI big model. It is built using Java backend with Spring Boot framework and Vue frontend with different versions like Vue3 with element-plus, Vue3 with vben(ant-design-vue), and Vue2 with element-ui. The project aims to offer a fast development platform for developers and enterprises, supporting features like dynamic menu loading, button-level access control, SaaS multi-tenancy, code generator, real-time communication, integration with third-party services like WeChat, Alipay, and cloud services, and more.

adata

AData is a free and open-source A-share database that focuses on transaction-related data. It provides comprehensive data on stocks, including basic information, market data, and sentiment analysis. AData is designed to be easy to use and integrate with other applications, making it a valuable tool for quantitative trading and AI training.

teaching-boyfriend-llm

The 'teaching-boyfriend-llm' repository contains study notes on LLM (Large Language Models) for the purpose of advancing towards AGI (Artificial General Intelligence). The notes are a collaborative effort towards understanding and implementing LLM technology.

llm-scaler

LLM Scaler is a GenAI solution for text, image, and video generation running on Intel® Arc™ Pro B60 GPUs. It builds on standard frameworks such as vLLM, ComfyUI, SGLang Diffusion, and Xinference, and targets optimal performance for state-of-the-art GenAI models on Arc Pro B60 GPUs.

PaddleScience

PaddleScience is a scientific computing suite developed based on the deep learning framework PaddlePaddle. It utilizes the learning ability of deep neural networks and the automatic (higher-order) differentiation mechanism of PaddlePaddle to solve problems in physics, chemistry, meteorology, and other fields. It supports three solving methods: physics mechanism-driven, data-driven, and mathematical fusion, and provides basic APIs and detailed documentation for users to use and further develop.

XiaoFeiShu

XiaoFeiShu is a specialized automation software developed closely following the quality user rules of Xiaohongshu. It provides a set of automation workflows for Xiaohongshu operations, avoiding the issues of traditional RPA being mechanical, rule-based, and easily detected. The software is easy to use, with simple operation and powerful functionality.

sanic-web

Sanic-Web is a lightweight, end-to-end, and easily customizable large model application project built on technologies such as Dify, Ollama and vLLM, Sanic, and Text2SQL. It provides a one-stop solution for developing large model applications: it supports graphical data-driven Q&A using ECharts, table-based Q&A over CSV files, and integration with third-party RAG systems for general knowledge Q&A. As a lightweight framework, Sanic-Web enables rapid iteration and extension so that large model projects can be implemented quickly.

For similar tasks

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

superagent-js

Superagent is an open source framework that enables any developer to integrate production ready AI Assistants into any application in a matter of minutes.

chainlit

Chainlit is an open-source async Python framework which allows developers to build scalable Conversational AI or agentic applications. It enables users to create ChatGPT-like applications, embedded chatbots, custom frontends, and API endpoints. The framework provides features such as multi-modal chats, chain of thought visualization, data persistence, human feedback, and an in-context prompt playground. Chainlit is compatible with various Python programs and libraries, including LangChain, Llama Index, Autogen, OpenAI Assistant, and Haystack. It offers a range of examples and a cookbook to showcase its capabilities and inspire users. Chainlit welcomes contributions and is licensed under the Apache 2.0 license.

neo4j-generative-ai-google-cloud

This repo contains sample applications that show how to use Neo4j with the generative AI capabilities in Google Cloud Vertex AI. We explore how to leverage Google generative AI to build and consume a knowledge graph in Neo4j.

MemGPT

MemGPT is a system that intelligently manages different memory tiers in LLMs in order to effectively provide extended context within the LLM's limited context window. For example, MemGPT knows when to push critical information to a vector database and when to retrieve it later in the chat, enabling perpetual conversations. MemGPT can be used to create perpetual chatbots with self-editing memory, chat with your data by talking to your local files or SQL database, and more.

py-gpt

Py-GPT is a Python library that provides an easy-to-use interface for OpenAI's GPT-3 API. It allows users to interact with the powerful GPT-3 model for various natural language processing tasks. With Py-GPT, developers can quickly integrate GPT-3 capabilities into their applications, enabling them to generate text, answer questions, and more with just a few lines of code.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PIIs
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.