Stellar-Chat

A versatile multi-modal chat application that enables users to develop custom agents, create images, leverage visual recognition, and engage in voice interactions. It integrates seamlessly with local LLMs and commercial models like OpenAI, Gemini, Perplexity, and Claude, and allows to converse with uploaded documents and websites.

Stars: 97

Stellar Chat is a multi-modal chat application that enables users to create custom agents and integrate with local language models and OpenAI models. It provides capabilities for generating images, visual recognition, text-to-speech, and speech-to-text functionalities. Users can engage in multimodal conversations, create custom agents, search messages and conversations, and integrate with various applications for enhanced productivity. The project is part of the '100 Commits' competition, challenging participants to make meaningful commits daily for 100 consecutive days.

README:

A powerful multi-modal chat application that empowers users to create custom agents, generate images, utilize visual recognition, and engage in voice conversations. It seamlessly integrates with local LLMs and commercial models like OpenAI, Gemini, Perplexity, and Claude, while also offering the capability to converse with uploaded documents and websites.

Documentation | Report Bug | Request Feature

[!NOTE]

This project is part of the "100 Commits" competition, which challenges participants to commit to their projects by making at least one meaningful commit every day for 100 consecutive days.

Table of Contents

- 🎥 Demo

-

✨ Features

1.Support for Local Open Source Models2.Support for Commercial Models3.Visual Recognition4.Support for TTS & STT5.Text to Image Generation6.Multimodal Chat7.Prompt Store8.Custom Agent Creation (GPTs)9.Message and Conversation Search10.Custom Action Creation for App Integration11.Multi-Agent Chat Capability

- 🚀 Self-Hosted

- ⌨️ Local Development

- ⭐ Enjoying the Project?

- 🚧 Issues

- 📝 License

https://github.com/ktutak1337/Stellar-Chat/assets/49451143/3482d401-70cb-4ce8-bf2e-ec69f5859367

[!IMPORTANT]

Planned Features

This is a list of planned features to be implemented in the future. Please note that the list may change over time as the project progresses and new priorities emerge.

1. Support for Local Open Source Models

Integrate and utilize local open source models through the OLLAMA platform.

2. Support for Commercial Models

Easily use commercial models like OpenAI, Gemini, Perplexity, and Claude.

3. Visual Recognition

Utilize the powerful visual recognition capabilities of the GPT-4-Vision model and Gemini Vision.

4. Support for TTS & STT

Enable text-to-speech (TTS) and speech-to-text (STT) functionalities within the application.

5. Text to Image Generation

Generate images from text inputs using advanced models such as Stable Diffusion and DALL-E 3.

6. Multimodal Chat

Analyze text, image, and audio files and engage in conversations with uploaded files.

7. Prompt Store

Create and manage your own repository of predefined prompts to easily use, modify, and enhance interactions with the models.

8. Custom Agent Creation (GPTs)

Easily create and customize your own agents to tailor the interactions and responses according to your specific needs.

9. Message and Conversation Search

Easily search through all messages and conversations to quickly find relevant information or previous interactions.

10. Custom Action Creation for App Integration

Create custom actions to seamlessly integrate with your favorite applications such as Gmail, Todoist, Spotify, and more, enhancing productivity and workflow efficiency.

11. Multi-Agent Chat Capability

Engage in conversations with multiple agents simultaneously within a single chat interface, enabling diverse interactions and enhanced collaboration.

Choose the deployment method that best suits your needs and get started with Stellar Chat today!

Explore our deployment options to get started quickly:

You have the option to utilize GitHub Codespaces for online development:

Or clone it for local development:

git clone https://github.com/ktutak1337/Stellar-Chat.git

# It is recommended to use Docker to run the infrastructure components (MongoDB, Qdrant, Seq):

cd src

docker compose up -d

# configrure API:

cd src/Server/StellarChat.Server.Api

# set all api keys (more details in docs):

dotnet user-secrets init

dotnet user-secrets set openAI:api_key [your API KEY]

# Run API:

dotnet run watch

# Run web app:

cd src/Client/StellarChat.Client.Web

dotnet run watchIf you want to delve deeper into setting up your local development environment, please feel free to consult our 📘 Development Guide.

If you find this project helpful, learned something new, or using it to kickstart your own solution, consider showing your appreciation by giving it a star! Your support means a lot. Thank you! 🚀

If you have discovered a bug or having some issues, please let me know by reporting a new issue.

This project is licensed under the AGPL-3.0 license - see the LICENSE file for details.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Stellar-Chat

Similar Open Source Tools

Stellar-Chat

Stellar Chat is a multi-modal chat application that enables users to create custom agents and integrate with local language models and OpenAI models. It provides capabilities for generating images, visual recognition, text-to-speech, and speech-to-text functionalities. Users can engage in multimodal conversations, create custom agents, search messages and conversations, and integrate with various applications for enhanced productivity. The project is part of the '100 Commits' competition, challenging participants to make meaningful commits daily for 100 consecutive days.

Revornix

Revornix is an information management tool designed for the AI era. It allows users to conveniently integrate all visible information and generates comprehensive reports at specific times. The tool offers cross-platform availability, all-in-one content aggregation, document transformation & vectorized storage, native multi-tenancy, localization & open-source features, smart assistant & built-in MCP, seamless LLM integration, and multilingual & responsive experience for users.

slidev-ai

Slidev AI is a web app that leverages LLM (Large Language Model) technology to make creating Slidev-based online presentations elegant and effortless. It is designed to help engineers and academics quickly produce content-focused, minimalist PPTs that are easily shareable online. This project serves as a reference implementation for OpenMCP agent development, a production-ready presentation generation solution, and a template for creating domain-specific AI agents.

refly

Refly.AI is an open-source AI-native creation engine that empowers users to transform ideas into production-ready content. It features a free-form canvas interface with multi-threaded conversations, knowledge base integration, contextual memory, intelligent search, WYSIWYG AI editor, and more. Users can leverage AI-powered capabilities, context memory, knowledge base integration, quotes, and AI document editing to enhance their content creation process. Refly offers both cloud and self-hosting options, making it suitable for individuals, enterprises, and organizations. The tool is designed to facilitate human-AI collaboration and streamline content creation workflows.

dify

Dify is an open-source LLM app development platform that combines AI workflow, RAG pipeline, agent capabilities, model management, observability features, and more. It allows users to quickly go from prototype to production. Key features include: 1. Workflow: Build and test powerful AI workflows on a visual canvas. 2. Comprehensive model support: Seamless integration with hundreds of proprietary / open-source LLMs from dozens of inference providers and self-hosted solutions. 3. Prompt IDE: Intuitive interface for crafting prompts, comparing model performance, and adding additional features. 4. RAG Pipeline: Extensive RAG capabilities that cover everything from document ingestion to retrieval. 5. Agent capabilities: Define agents based on LLM Function Calling or ReAct, and add pre-built or custom tools. 6. LLMOps: Monitor and analyze application logs and performance over time. 7. Backend-as-a-Service: All of Dify's offerings come with corresponding APIs for easy integration into your own business logic.

letmedoit

LetMeDoIt AI is a virtual assistant designed to revolutionize the way you work. It goes beyond being a mere chatbot by offering a unique and powerful capability - the ability to execute commands and perform computing tasks on your behalf. With LetMeDoIt AI, you can access OpenAI ChatGPT-4, Google Gemini Pro, and Microsoft AutoGen, local LLMs, all in one place, to enhance your productivity.

DocsGPT

DocsGPT is an open-source documentation assistant powered by GPT models. It simplifies the process of searching for information in project documentation by allowing developers to ask questions and receive accurate answers. With DocsGPT, users can say goodbye to manual searches and quickly find the information they need. The tool aims to revolutionize project documentation experiences and offers features like live previews, Discord community, guides, and contribution opportunities. It consists of a Flask app, Chrome extension, similarity search index creation script, and a frontend built with Vite and React. Users can quickly get started with DocsGPT by following the provided setup instructions and can contribute to its development by following the guidelines in the CONTRIBUTING.md file. The project follows a Code of Conduct to ensure a harassment-free community environment for all participants. DocsGPT is licensed under MIT and is built with LangChain.

ocular

Ocular is a set of modules and tools that allow you to build rich, reliable, and performant Generative AI-Powered Search Platforms without the need to reinvent Search Architecture. We help you build you spin up customized internal search in days not months.

nexent

Nexent is a powerful tool for analyzing and visualizing network traffic data. It provides comprehensive insights into network behavior, helping users to identify patterns, anomalies, and potential security threats. With its user-friendly interface and advanced features, Nexent is suitable for network administrators, cybersecurity professionals, and anyone looking to gain a deeper understanding of their network infrastructure.

gptme

GPTMe is a tool that allows users to interact with an LLM assistant directly in their terminal in a chat-style interface. The tool provides features for the assistant to run shell commands, execute code, read/write files, and more, making it suitable for various development and terminal-based tasks. It serves as a local alternative to ChatGPT's 'Code Interpreter,' offering flexibility and privacy when using a local model. GPTMe supports code execution, file manipulation, context passing, self-correction, and works with various AI models like GPT-4. It also includes a GitHub Bot for requesting changes and operates entirely in GitHub Actions. In progress features include handling long contexts intelligently, a web UI and API for conversations, web and desktop vision, and a tree-based conversation structure.

OmAgent

OmAgent is an open-source agent framework designed to streamline the development of on-device multimodal agents. It enables agents to empower various hardware devices, integrates speed-optimized SOTA multimodal models, provides SOTA multimodal agent algorithms, and focuses on optimizing the end-to-end computing pipeline for real-time user interaction experience. Key features include easy connection to diverse devices, scalability, flexibility, and workflow orchestration. The architecture emphasizes graph-based workflow orchestration, native multimodality, and device-centricity, allowing developers to create bespoke intelligent agent programs.

beeai

BeeAI is an open platform that helps users discover, run, and compose AI agents from any framework and language. It offers a framework-agnostic approach, allowing seamless integration of AI agents regardless of the language or platform. Users can build complex workflows using simple building blocks, explore a catalog of powerful agents with integrated search, and benefit from the BeeAI ecosystem with first-class support for Python and TypeScript agent developers.

tuff

Tuff is a local-first, AI-native, and infinitely extensible desktop command center designed to enhance workflow efficiency. It offers a seamless integration of core utilities, AI-powered search, contextual intelligence, and extensibility through custom plugins. With a beautiful UI design, rich functionality, simple operations, and a focus on security and reliability, Tuff provides users with a cross-platform desktop software that is easy to use and offers a good user experience.

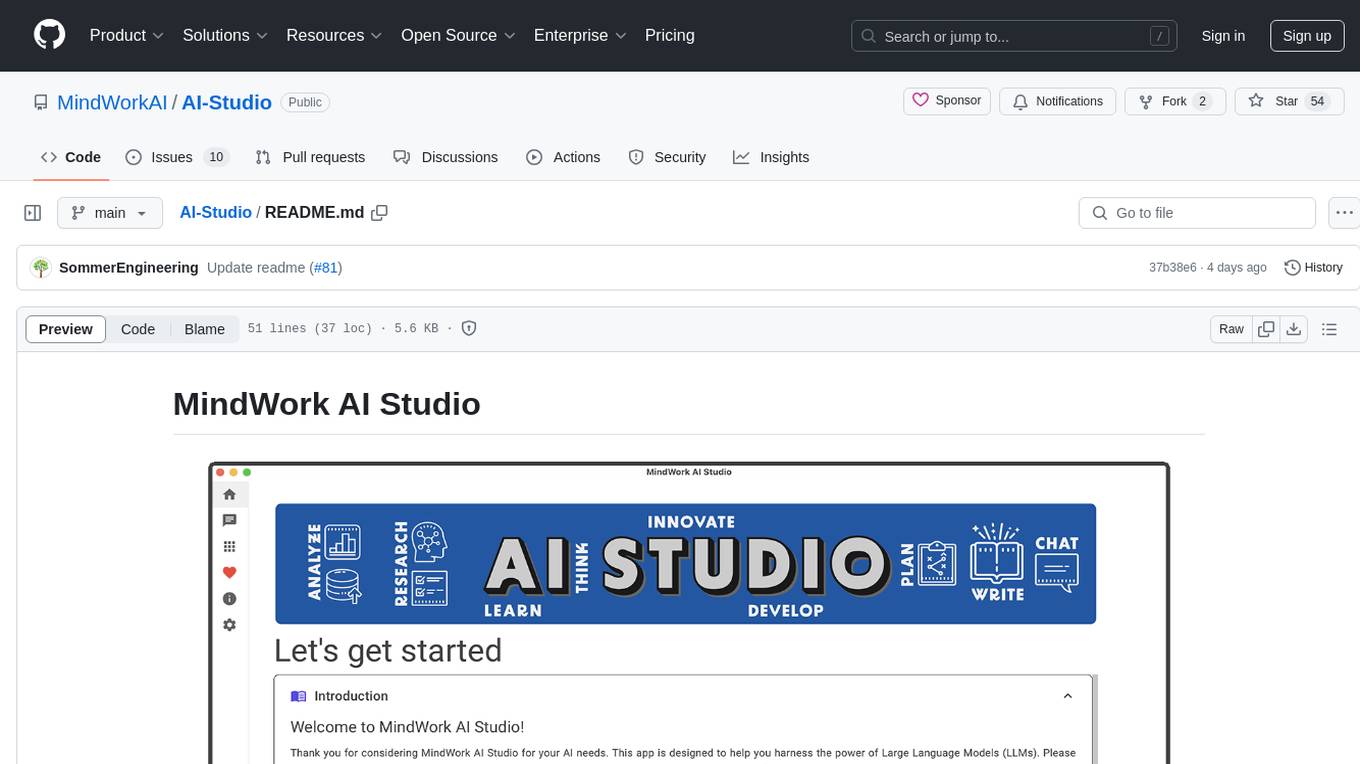

AI-Studio

MindWork AI Studio is a desktop application that provides a unified chat interface for Large Language Models (LLMs). It is free to use for personal and commercial purposes, offers independence in choosing LLM providers, provides unrestricted usage through the providers API, and is cost-effective with pay-as-you-go pricing. The app prioritizes privacy, flexibility, minimal storage and memory usage, and low impact on system resources. Users can support the project through monthly contributions or one-time donations, with opportunities for companies to sponsor the project for public relations and marketing benefits. Planned features include support for more LLM providers, system prompts integration, text replacement for privacy, and advanced interactions tailored for various use cases.

openroleplay.ai

Open Roleplay is an open-source alternative to Character.ai. It allows users to create their own AI characters, customize them, and generate images and voices for them. Open Roleplay also supports group chat and automatic translation. The tool is built with Next.js, React.js, Tailwind CSS, Vercel, Convex, and Clerk.

co-op-translator

Co-op Translator is a tool designed to facilitate communication between team members working on cooperative projects. It allows users to easily translate messages and documents in real-time, enabling seamless collaboration across language barriers. The tool supports multiple languages and provides accurate translations to ensure clear and effective communication within the team. With Co-op Translator, users can improve efficiency, productivity, and teamwork in their cooperative endeavors.

For similar tasks

Stellar-Chat

Stellar Chat is a multi-modal chat application that enables users to create custom agents and integrate with local language models and OpenAI models. It provides capabilities for generating images, visual recognition, text-to-speech, and speech-to-text functionalities. Users can engage in multimodal conversations, create custom agents, search messages and conversations, and integrate with various applications for enhanced productivity. The project is part of the '100 Commits' competition, challenging participants to make meaningful commits daily for 100 consecutive days.

neuron-ai

Neuron AI is a PHP framework that provides an Agent class for creating fully functional agents to perform tasks like analyzing text for SEO optimization. The framework manages advanced mechanisms such as memory, tools, and function calls. Users can extend the Agent class to create custom agents and interact with them to get responses based on the underlying LLM. Neuron AI aims to simplify the development of AI-powered applications by offering a structured framework with documentation and guidelines for contributions under the MIT license.

dbt-mcp

The dbt MCP Server is a Model Context Protocol server that provides tools to interact with dbt. It allows users to provide AI agents with context of their project in dbt Core, dbt Fusion, and dbt Platform. The server architecture enables agents to connect to various tools, and users can refer to the documentation for more details on its capabilities. Users can also contribute to the project by following the instructions in the CONTRIBUTING.md file.

Wegent

Wegent is an open-source AI-native operating system designed to define, organize, and run intelligent agent teams. It offers various core features such as a chat agent with multi-model support, conversation history, group chat, attachment parsing, follow-up mode, error correction mode, long-term memory, sandbox execution, and extensions. Additionally, Wegent includes a code agent for cloud-based code execution, AI feed for task triggers, AI knowledge for document management, and AI device for running tasks locally. The platform is highly extensible, allowing for custom agents, agent creation wizard, organization management, collaboration modes, skill support, MCP tools, execution engines, YAML config, and an API for easy integration with other systems.

ChatGPT

ChatGPT is a desktop application available on Mac, Windows, and Linux that provides a powerful AI wrapper experience. It allows users to interact with AI models for various tasks such as generating text, answering questions, and engaging in conversations. The application is designed to be user-friendly and accessible to both beginners and advanced users. ChatGPT aims to enhance the user experience by offering a seamless interface for leveraging AI capabilities in everyday scenarios.

LARS

LARS is an application that enables users to run Large Language Models (LLMs) locally on their devices, upload their own documents, and engage in conversations where the LLM grounds its responses with the uploaded content. The application focuses on Retrieval Augmented Generation (RAG) to increase accuracy and reduce AI-generated inaccuracies. LARS provides advanced citations, supports various file formats, allows follow-up questions, provides full chat history, and offers customization options for LLM settings. Users can force enable or disable RAG, change system prompts, and tweak advanced LLM settings. The application also supports GPU-accelerated inferencing, multiple embedding models, and text extraction methods. LARS is open-source and aims to be the ultimate RAG-centric LLM application.

Open-LLM-VTuber

Open-LLM-VTuber is a voice-interactive AI companion supporting real-time voice conversations and featuring a Live2D avatar. It can run offline on Windows, macOS, and Linux, offering web and desktop client modes. Users can customize appearance and persona, with rich LLM inference, text-to-speech, and speech recognition support. The project is highly customizable, extensible, and actively developed with exciting features planned. It provides privacy with offline mode, persistent chat logs, and various interaction features like voice interruption, touch feedback, Live2D expressions, pet mode, and more.

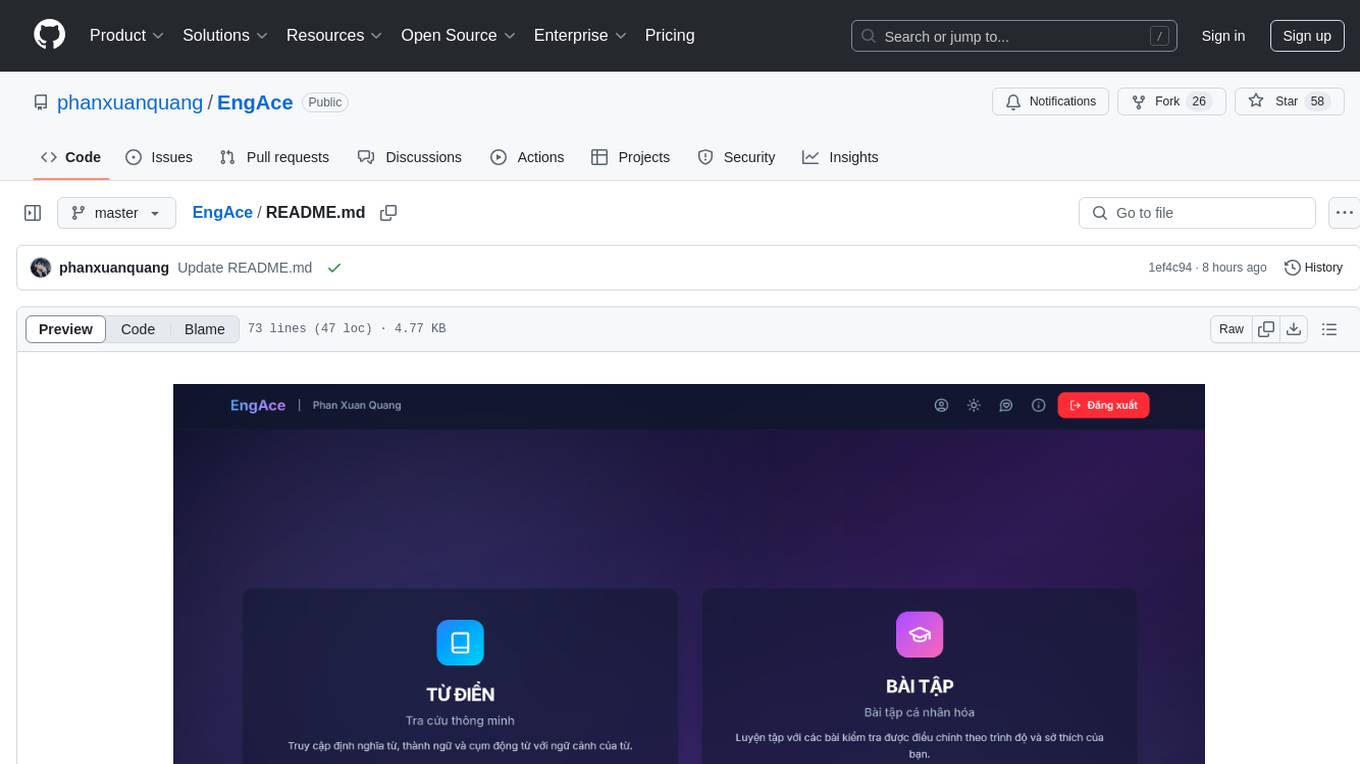

EngAce

EngAce is a cutting-edge, generative AI-powered application revolutionizing Vietnamese English learning. It offers personalized learning experiences combining AI with comprehensive features. The repository contains source code, documentation, and resources for the app.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.