science-codeevolve

CodeEvolve is an open-source evolutionary coding agent for algorithm discovery and optimization.

Stars: 55

CodeEvolve is an open-source framework that combines large language models with evolutionary algorithms to discover and optimize high-performing code solutions. It democratizes algorithmic discovery by making LLM-driven evolutionary search transparent, reproducible, and accessible. CodeEvolve provides a modular foundation for automated code synthesis guided by quantifiable metrics, addressing meta-optimization tasks where complex optimization problems need to be solved. The framework features islands-based genetic algorithm, modular evolutionary operators, quality-diversity optimization, flexible LLM integration, and distributed islands for efficient exploration. Core components include CLI entry point, process runner, evolution engine, program database, exploration schedulers, evaluator, islands coordinator, LLM interface, prompt sampler, and utilities. CodeEvolve demonstrates superior performance on algorithm-discovery benchmarks and is suitable for mathematical discovery, algorithm design, scientific discovery, and software optimization. Reproducibility and determinism are emphasized, with seedable internal algorithmic decisions. Contributions from the community are welcome, focusing on new selection policies, LLM integrations, benchmark problems, documentation, performance optimizations, and bug fixes.

README:

An open-source framework that combines large language models with evolutionary algorithms to discover and optimize high-performing code solutions.

CodeEvolve democratizes algorithmic discovery by making LLM-driven evolutionary search transparent, reproducible, and accessible. Whether you're tackling combinatorial optimization, discovering novel algorithms, or optimizing computational kernels, CodeEvolve provides a modular foundation for automated code synthesis guided by quantifiable metrics.

- Why CodeEvolve?

- Key Features

- How It Works

- Architecture

- Performance Highlights

- Quick Start

- Use Cases

- Reproducing Research Results

- Documentation

- Contributing

- Citation

State-of-the-art performance with transparency. CodeEvolve matches or exceeds the performance of closed-source systems like Google DeepMind's AlphaEvolve on established algorithm-discovery benchmarks, while remaining fully open and reproducible.

Cost-effective solutions. Open-weight models like Qwen often match or outperform expensive closed-source LLMs at a fraction of the compute cost, making cutting-edge algorithmic discovery accessible to researchers and practitioners with limited budgets.

Designed for real problems. CodeEvolve addresses meta-optimization tasks where you need to discover programs that solve complex optimization problems—from mathematical constructions to scientific discovery.

Multiple populations evolve independently and periodically exchange top performers, maintaining diversity while propagating successful solutions across the search space. This parallel architecture enables efficient exploration and scales naturally to concurrent evaluation.

Inspiration-based Crossover: Contextual recombination that combines successful solution patterns while preserving semantic coherence. Parent solutions are presented to the LLM along with high-performing "inspiration" programs, allowing it to synthesize novel combinations.

Meta-prompting Exploration: Evolves the prompts themselves, enabling the LLM to reflect on and rewrite its own instructions for more diverse search trajectories. The system maintains a population of prompts that co-evolve with solutions.

Depth-based Exploitation: Targeted refinement mechanism that makes precise edits to promising solutions by maintaining conversation history. The LLM sees the full evolutionary lineage, enabling incremental improvements while preserving working components.

MAP-Elites Integration: Optional quality-diversity archive that maintains behavioral diversity across the solution space. Supports both grid-based and CVT-based (Centroidal Voronoi Tessellation) feature maps.
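The grid-based variant of a quality-diversity archive can be illustrated with a minimal sketch. The class name `GridArchive`, its method names, and the assumption that features lie in a fixed `[low, high]` range are all hypothetical and chosen for illustration; they are not taken from CodeEvolve's code.

```python
from typing import Dict, Sequence, Tuple

Cell = Tuple[int, ...]


class GridArchive:
    """Keeps the best-scoring solution per cell of a discretized feature space."""

    def __init__(self, bins_per_dim: int, low: float = 0.0, high: float = 1.0):
        self.bins = bins_per_dim
        self.low, self.high = low, high
        self.cells: Dict[Cell, Tuple[float, object]] = {}

    def _cell(self, features: Sequence[float]) -> Cell:
        # Map each feature value to a bin index, clamped to the grid bounds.
        span = self.high - self.low
        idx = []
        for f in features:
            i = int((f - self.low) / span * self.bins)
            idx.append(min(max(i, 0), self.bins - 1))
        return tuple(idx)

    def add(self, solution: object, fitness: float, features: Sequence[float]) -> bool:
        # Insert only if the cell is empty or the newcomer beats the incumbent.
        cell = self._cell(features)
        incumbent = self.cells.get(cell)
        if incumbent is None or fitness > incumbent[0]:
            self.cells[cell] = (fitness, solution)
            return True
        return False


archive = GridArchive(bins_per_dim=4)
archive.add("program_a", fitness=1.0, features=(0.1, 0.9))
archive.add("program_b", fitness=0.5, features=(0.2, 0.8))  # same cell, lower fitness: rejected
archive.add("program_c", fitness=2.0, features=(0.6, 0.1))  # new cell: kept
```

Because each cell competes only with itself, a weaker program in an unexplored region survives alongside a stronger one elsewhere, which is what preserves behavioral diversity.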

Ensemble Support: Mix and match multiple LLMs with weighted selection. Use different models for exploration vs. exploitation phases, or combine open-weight and proprietary models.
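Weighted selection over an ensemble can be sketched as follows. The model names are placeholders and `pick_model` is a hypothetical helper; only the `model_name` and `weight` fields mirror the configuration keys shown in this README.

```python
import random

# Placeholder ensemble; the names are illustrative, not real endpoints.
ensemble = [
    {"model_name": "model-a", "weight": 3},
    {"model_name": "model-b", "weight": 1},
]


def pick_model(ensemble, rng):
    """Draw one model with probability proportional to its weight."""
    weights = [m["weight"] for m in ensemble]
    return rng.choices(ensemble, weights=weights, k=1)[0]


rng = random.Random(42)  # seeded so the draw sequence is repeatable
counts = {m["model_name"]: 0 for m in ensemble}
for _ in range(1000):
    counts[pick_model(ensemble, rng)["model_name"]] += 1
```

With weights 3 and 1, roughly three quarters of the calls should go to the first model.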

OpenAI-Compatible APIs: Works with any OpenAI-compatible endpoint including vLLM, Ollama, Together AI, and cloud providers.

CodeEvolve operates as a distributed evolutionary algorithm where code itself is the evolving entity:

1. Initialization: Start with an initial code template and system prompt that defines the task

2. Selection: Choose parent and inspiration programs based on fitness
   - Exploration mode: Random or uniform selection for broad search
   - Exploitation mode: Tournament or roulette selection for refinement

3. Variation: Generate new candidates through LLM-driven operations
   - Exploration: Broad modifications with meta-prompting
   - Exploitation: Targeted improvements with conversation history

4. Code Generation: LLM produces SEARCH/REPLACE diffs
   - Only specified code blocks are modified (between markers)
   - Preserves working code outside evolution zones
   - Applies structured diffs rather than regenerating entire files

5. Evaluation: Execute in sandboxed environment
   - Resource limits (time, memory)
   - Capture metrics and errors
   - Extract fitness from evaluation results

6. Migration: Periodically exchange top solutions between islands
   - Maintains diversity while spreading innovations
   - Prevents premature convergence

7. Archiving (optional): MAP-Elites maintains diverse solutions
   - Preserves behavioral variety
   - Increases diversity in a multi-objective setting
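The code generation step can be sketched as applying SEARCH/REPLACE edits only inside the marked evolution zone. The conflict-marker diff syntax below is an assumption, since the exact format CodeEvolve expects is not shown here. `apply_diffs` is a hypothetical helper that handles a single evolve block.

```python
import re

START, END = "# EVOLVE-BLOCK-START", "# EVOLVE-BLOCK-END"

# Assumed diff syntax; the real format may differ.
DIFF_RE = re.compile(
    r"<<<<<<< SEARCH\n(.*?)\n=======\n(.*?)\n>>>>>>> REPLACE", re.DOTALL
)


def apply_diffs(source: str, llm_output: str) -> str:
    """Apply each SEARCH/REPLACE pair to the text between the evolve markers."""
    head, rest = source.split(START, 1)
    block, tail = rest.split(END, 1)
    for search, replace in DIFF_RE.findall(llm_output):
        if search not in block:
            # Edits that target code outside the evolve block are rejected.
            raise ValueError(f"SEARCH text not found in evolve block: {search!r}")
        block = block.replace(search, replace, 1)
    return head + START + block + END + tail


source = (
    "import math\n"
    "# EVOLVE-BLOCK-START\n"
    "def f(x):\n"
    "    return x + 1\n"
    "# EVOLVE-BLOCK-END\n"
    "print(f(1))\n"
)
reply = "<<<<<<< SEARCH\n    return x + 1\n=======\n    return x * 2\n>>>>>>> REPLACE"
patched = apply_diffs(source, reply)
```

Only the function body changes; the import and the final `print` call sit outside the markers and are carried over untouched.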

The system dynamically balances exploration and exploitation:

| Phase | Selection | LLM Context | Operators |

|---|---|---|---|

| Exploration | Random | Random Inspirations | Meta-prompting exploration |

| Exploitation | Fitness | Best Inspirations + Full lineage | Depth exploitation |
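The two selection styles in the table can be sketched in a few lines. The population layout (a list of dicts with a `fitness` field) and the function names are assumptions made for illustration.

```python
import random


def random_select(population, rng):
    """Exploration: every program is equally likely to become a parent."""
    return rng.choice(population)


def tournament_select(population, tournament_size, rng, fitness_key="fitness"):
    """Exploitation: sample a few contenders and keep the fittest one."""
    k = min(tournament_size, len(population))
    contenders = rng.sample(population, k=k)
    return max(contenders, key=lambda p: p[fitness_key])


population = [
    {"id": "p0", "fitness": 0.2},
    {"id": "p1", "fitness": 0.9},
    {"id": "p2", "fitness": 0.5},
    {"id": "p3", "fitness": 0.7},
]
rng = random.Random(42)
parent = tournament_select(population, tournament_size=3, rng=rng)
```

A larger `tournament_size` raises selection pressure: when it equals the population size, the best program always wins.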

The exploration rate is controlled by a scheduler (e.g., exponential decay) and can adapt based on fitness improvements.
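As a rough illustration of exponential decay, the sketch below multiplies the exploration rate by a constant factor each epoch and uses the current rate to pick a phase. `ExplorationDecay` and `choose_phase` are hypothetical names, and the `min_rate` floor is an added assumption; the values 0.3 and 0.995 match the example configuration in this README.

```python
import random


class ExplorationDecay:
    """Shrinks the exploration rate geometrically, one step per epoch."""

    def __init__(self, initial_rate: float, decay_rate: float, min_rate: float = 0.0):
        self.rate = initial_rate
        self.decay_rate = decay_rate
        self.min_rate = min_rate

    def step(self) -> float:
        # rate_{t+1} = max(min_rate, rate_t * decay_rate)
        self.rate = max(self.min_rate, self.rate * self.decay_rate)
        return self.rate


def choose_phase(rate: float, rng: random.Random) -> str:
    """Explore with probability `rate`, otherwise exploit."""
    return "exploration" if rng.random() < rate else "exploitation"


scheduler = ExplorationDecay(initial_rate=0.3, decay_rate=0.995)
rng = random.Random(42)
phases = []
for _ in range(100):
    phases.append(choose_phase(scheduler.rate, rng))
    scheduler.step()
```

After `n` epochs the rate equals `0.3 * 0.995**n` (as long as it stays above `min_rate`), so the search gradually shifts from broad exploration toward refinement.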

┌─────────┐     ┌─────────┐     ┌─────────┐
│Island 0 │────▶│Island 1 │────▶│Island 2 │
│Pop: 20  │     │Pop: 20  │     │Pop: 20  │
└────┬────┘     └────┬────┘     └────┬────┘
     │               │               │
     └───────────────┴───────────────┘
             Periodic Migration

Each island maintains:

- Solution population

- Prompt population

- Local fitness rankings

- Migration history
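Periodic migration on a directed ring can be sketched as each island sending copies of its top programs to the next island. The data layout (lists of `(fitness, program)` tuples) and the function name are assumptions; the real implementation coordinates separate processes, which this single-process sketch ignores.

```python
def migrate_ring(islands, num_migrants):
    """Copy each island's top programs to its successor on a directed ring."""
    # Pick all emigrants first so a program cannot hop twice in one round.
    outgoing = [
        sorted(pop, key=lambda s: s[0], reverse=True)[:num_migrants]
        for pop in islands
    ]
    for src, migrants in enumerate(outgoing):
        dst = (src + 1) % len(islands)  # last island wraps around to island 0
        islands[dst].extend(migrants)


islands = [
    [(1.0, "a"), (5.0, "b")],  # island 0
    [(2.0, "c")],              # island 1
    [(3.0, "d")],              # island 2
]
migrate_ring(islands, num_migrants=1)
```

After one round, island 1 holds a copy of island 0's best program, island 2 holds island 1's, and island 0 receives island 2's.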

CLI entry point:

- Parses command-line arguments and orchestrates execution flow
- Validates environment, paths, and configuration
- Creates shared memory and synchronization primitives
- Coordinates island spawning, monitoring, and shutdown

Process runner:

- Process Management: Spawns and monitors island processes
- Signal Handling: Handles SIGTERM, SIGTSTP, SIGQUIT
- Failure Recovery: Detects crashed islands and terminates remaining processes
- Log Daemon: Manages centralized logging process

Evolution engine:

- Modular Design: Main loop decomposed into specialized helper functions for selection, meta-prompting, code generation, evaluation, and migration
- Coordinates exploration/exploitation scheduling with adaptive rates
- Handles checkpoint creation and restoration with state consistency

Program database:

- Manages populations with genealogical tracking and fitness rankings
- Implements multiple selection strategies
- Implements the MAP-Elites algorithm for improved diversity, with a classic grid-based archive and a Centroidal Voronoi Tessellation (CVT) variant

Exploration schedulers:

- ExponentialDecayScheduler: Reduces exploration rate over epochs
- PlateauScheduler: Adapts rate based on fitness improvements
- CosineScheduler: Cycles exploration rate using cosine annealing

Evaluator:

- Sandboxed program execution with resource limits (time, memory)
- Multi-threaded memory monitoring with process tree management
- Isolated execution in temporary directories
- Structured metrics extraction from JSON results
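A simplified version of this evaluation flow is sketched below: run an evaluation script in a temporary working directory under a time limit, then read metrics from the JSON file it writes. `run_evaluator` is a hypothetical helper. Memory limits and process-tree monitoring are deliberately left out, so this is not a real sandbox.

```python
import json
import subprocess
import sys
import tempfile
from pathlib import Path


def run_evaluator(eval_script: str, code_path: str, timeout_s: float = 10.0) -> dict:
    """Run `eval_script code_path results_path` in a temp dir and return its metrics."""
    # Resolve to absolute paths because the child runs in a different directory.
    eval_script = str(Path(eval_script).resolve())
    code_path = str(Path(code_path).resolve())
    with tempfile.TemporaryDirectory() as workdir:
        results_path = Path(workdir) / "results.json"
        try:
            proc = subprocess.run(
                [sys.executable, eval_script, code_path, str(results_path)],
                cwd=workdir,
                capture_output=True,
                text=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            return {"error": "timeout"}
        if proc.returncode != 0 or not results_path.exists():
            return {"error": proc.stderr.strip() or "no results file"}
        return json.loads(results_path.read_text())
```

Returning an error dictionary instead of raising keeps a single failing candidate from stopping the surrounding search loop; the caller can treat a missing fitness key as an invalid program.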

Islands coordinator:

- Synchronization (sync.py): Barriers, locks, and shared state for distributed coordination
- Communication Graph (graph.py): Flexible topologies (ring, star, complete, etc.)
- Migration Logic (migration.py): Threaded send/receive with barrier synchronization

LLM interface:

- Abstract Base Classes (base.py): Clean interface for plugging in different LLM providers
- OpenAI Wrapper (openai.py): Async client with retry logic and ensemble support
- Mock Models: Set model_name to "MOCK" for debugging without API calls
- Ensemble Support: Weighted random selection from multiple models
- Embedding generation support for semantic search (optional)

Prompt sampler:

- Sampler (sampler.py): Builds conversation histories from program lineages for context-aware generation
- Templates (template.py): Predefined prompt templates for evolution and meta-prompting
- Incorporates inspiration programs for crossover operations
- Meta-prompting: Evolves the system prompts themselves for adaptive search
- Dynamic depth control for managing context length

Utilities:

- Constants (constants.py): Centralized magic constants (markers, file names, defaults)
- Checkpointing (ckpt.py): Save and load algorithm state
- Configuration (cli_setup.py): Validation, loading, and island argument setup
- Parsing (parsing.py): SEARCH/REPLACE diff application
- Logging (logging.py): Distributed logging with real-time CLI dashboard
- Locking (lock.py): Directory-level locks to prevent concurrent runs

CodeEvolve demonstrates superior performance on several benchmarks previously used to assess AlphaEvolve:

- Competitive or better results across diverse algorithm-discovery tasks including autocorrelation inequalities, packing problems, and Heilbronn problems

- Open-weight models (e.g., Qwen) matching closed-source performance at significantly lower cost

- Extensive ablations quantifying each component's contribution to search efficiency

For comprehensive evaluation details and specific results, see CodeEvolve's paper.

Clone this repository and create the conda environment:

git clone https://github.com/inter-co/science-codeevolve.git
cd science-codeevolve
conda env create -f environment.yml
conda activate codeevolve

Configure your LLM provider by setting environment variables:

export API_KEY=your_api_key_here
export API_BASE=your_api_base_url

API_BASE must point to an OpenAI-compatible API base URL (hosted provider, gateway, or local inference server).

Run CodeEvolve via the command line:

codeevolve \
  --inpt_dir=INPT_DIR \
  --cfg_path=CFG_PATH \
  --out_dir=RESULTS_DIR \
  --load_ckpt=LOAD_CKPT \
  --terminal_logging

Arguments:

- --inpt_dir: Directory containing the evaluation script and the initial codebase
- --cfg_path: Path to YAML configuration file (required for new runs)
- --out_dir: Directory where results will be saved
- --load_ckpt: Checkpoint to load (0 for new run, -1 for latest, or specific epoch)
- --terminal_logging: Enable live progress display (optional)

scripts/run.sh is a bash script that runs CodeEvolve with taskset to limit CPU usage. See src/codeevolve/cli.py for further details.

Here's a complete minimal example for optimizing a simple function:

1. Create problem directory:

mkdir -p my_problem/input

2. Create initial solution (my_problem/input/solution.py):

# EVOLVE-BLOCK-START
def objective(x):
    """Function to maximize."""
    return -(x - 3)**2 + 10
# EVOLVE-BLOCK-END

if __name__ == '__main__':
    x = 0.0  # Initial guess
    result = objective(x)
    print(f"Result: {result}")

3. Create evaluator (my_problem/input/evaluate.py):

import json
import subprocess
import sys

def evaluate(code_path, results_path):
    # Run the solution in a separate interpreter with a 10-second limit
    result = subprocess.run(
        [sys.executable, code_path],
        capture_output=True,
        text=True,
        timeout=10
    )
    # Extract fitness from the "Result: <value>" line printed by solution.py
    fitness = float(result.stdout.split(':')[1].strip())
    # Save results as JSON; the 'fitness' key matches fitness_key in the config
    with open(results_path, 'w') as f:
        json.dump({'fitness': fitness}, f)

if __name__ == '__main__':
    evaluate(sys.argv[1], sys.argv[2])

4. Create config (my_problem/config.yaml):

SEED: 42
CODEBASE_PATH: "."
EVAL_FILE_NAME: "evaluate.py"
INIT_FILE_DATA:
  filename: "solution.py"
  language: "python"
SYS_MSG: |
  # PROMPT-BLOCK-START
  You are an expert optimization algorithm designer. Your goal is to modify
  the given code to maximize the objective function.
  # PROMPT-BLOCK-END
ENSEMBLE:
  - model_name: "meta-llama/Meta-Llama-3.1-8B-Instruct-Turbo"
    temp: 0.8
    weight: 1
    seed: 42
SAMPLER_AUX_LM:
  model_name: "meta-llama/Meta-Llama-3.1-8B-Instruct-Turbo"
  temp: 0.7
  seed: 42
EVOLVE_CONFIG:
  num_epochs: 50
  num_islands: 2
  migration_topology: "directed_ring"
  migration_interval: 10
  selection_policy: "tournament"
  selection_kwargs:
    tournament_size: 3
  num_inspirations: 2
  exploration_rate: 0.3
  fitness_key: "fitness"
  ckpt: 10
  early_stopping_rounds: 20

5. Run:

codeevolve \
  --inpt_dir=my_problem/input \
  --cfg_path=my_problem/config.yaml \
  --out_dir=my_problem/results \
  --terminal_logging

CodeEvolve is designed for algorithmic problems with quantifiable metrics. To apply it to your domain:

1. Define your evaluation function that measures solution quality
   - Must accept: code file path, results file path
   - Must output: JSON with at least one metric field

2. Specify the initial codebase or problem structure
   - Mark evolution zones with # EVOLVE-BLOCK-START and # EVOLVE-BLOCK-END
   - Code outside these blocks is preserved

3. Configure evolutionary parameters
   - Population size, mutation rates, selection policy
   - Exploration/exploitation balance
   - Migration topology and frequency

4. Choose your LLM ensemble composition
   - Single model or multiple models
   - Separate ensembles for exploration vs exploitation
   - Weight distribution across models

See problems/problem_template for a general template. Comprehensive tutorials and example notebooks will be released soon.

The framework is suitable for any domain where solutions can be represented as code and evaluated programmatically:

- Finding solutions to open problems in mathematics

- Discovering new inequalities or bounds

- Constructing optimal geometric configurations

- Optimizing computational kernels and scheduling algorithms

- Discovering novel heuristics for NP-hard problems

- Automated algorithm configuration

- Exploring hypothesis spaces expressed as executable code

- Parameter optimization for scientific models

- Automated experimental design

- Performance tuning of critical code paths

- Automatic parallelization strategies

- Resource allocation optimization

CodeEvolve is designed to be seedable for all internal algorithmic decisions (selection, scheduling, migration, etc.). However, exact end-to-end reproducibility depends on your LLM provider:

- Framework-level seeding:
  - Set SEED in the YAML config to seed internal randomness.
  - In multi-island runs, each island derives a deterministic island seed (SEED + island_id) for its local stochastic decisions.

- LLM seeding:
  - Model configs can include a seed field, and CodeEvolve forwards it to OpenAI-compatible APIs.
  - Some providers do not support deterministic sampling and may ignore seed or still return nondeterministic outputs.

For hosted LLM APIs, treat results as statistical—run multiple seeds and compare distributions. If you use a fully controlled inference stack that supports deterministic decoding, you may get much closer to exact replay.
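The framework-level seeding rule (SEED + island_id) can be illustrated with Python's standard `random` module. `island_rng` is a hypothetical helper; which random number generator CodeEvolve uses internally is not stated here.

```python
import random


def island_rng(seed: int, island_id: int) -> random.Random:
    """Build a per-island generator from the global seed and the island index."""
    return random.Random(seed + island_id)


SEED = 42

# Replaying the same island with the same seed reproduces its draws exactly.
first_run = [island_rng(SEED, 1).random() for _ in range(3)]
second_run = [island_rng(SEED, 1).random() for _ in range(3)]

# Different islands get different streams, so they explore differently.
island0_draw = island_rng(SEED, 0).random()
island1_draw = island_rng(SEED, 1).random()
```

One consequence of an additive rule like this is that island 1 under SEED=42 shares its seed with island 0 under SEED=43, so when comparing several runs it may help to space the global seeds by at least the number of islands.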

For complete experimental configurations, benchmark implementations, and step-by-step examples demonstrating how to run CodeEvolve on various problems, visit the experiments repository:

github.com/inter-co/science-codeevolve-experiments

This companion repository contains all code necessary to reproduce the results from CodeEvolve's paper, including:

- All benchmark problem implementations

- Experimental configurations for each problem

- Raw results and checkpoints from paper runs

- Analysis notebooks with visualizations

- Comparisons with AlphaEvolve

Key configuration parameters in your YAML file:

EVOLVE_CONFIG:
  # Basic settings
  num_epochs: 100                      # Total iterations
  num_islands: 4                       # Parallel populations
  init_pop: 20                         # Initial population per island
  max_size: 50                         # Max population size (null = unlimited)

  # Selection
  selection_policy: "tournament"       # or "roulette", "random", "best"
  selection_kwargs:
    tournament_size: 3

  # Exploration/Exploitation
  exploration_rate: 0.3                # Probability of exploration
  use_scheduler: true                  # Use adaptive scheduling
  type: "ExponentialDecayScheduler"
  scheduler_kwargs:
    decay_rate: 0.995

  # Operators
  num_inspirations: 3                  # Inspiration programs for crossover
  meta_prompting: true                 # Enable prompt evolution
  max_chat_depth: 5                    # Conversation history depth

  # Migration
  migration_topology: "directed_ring"  # Island topology
  migration_interval: 20               # Epochs between migrations
  migration_rate: 0.1                  # Fraction to migrate

  # Quality-Diversity (optional)
  use_map_elites: false                # Enable MAP-Elites

  # Checkpointing
  ckpt: 10                             # Checkpoint frequency
  early_stopping_rounds: 50            # Stop after N epochs without improvement

  # Fitness
  fitness_key: "fitness"               # Metric name from evaluation JSON

codeevolve [OPTIONS]

Required:
  --inpt_dir PATH      Input directory with solution and evaluator
  --out_dir PATH       Output directory for results

Optional:
  --cfg_path PATH      Config file (required for new runs)
  --load_ckpt INT      Checkpoint: 0=new, -1=latest, N=epoch N
  --terminal_logging   Show live progress in terminal

output_directory/
├── config.yaml          # Copy of configuration used
├── 0/                   # Island 0 results
│   ├── island.log       # Detailed execution log
│   ├── best_sol.py      # Best solution found
│   ├── best_prompt.txt  # Best prompt evolved
│   └── ckpt/            # Checkpoints
│       ├── ckpt_10.pkl
│       ├── ckpt_20.pkl
│       └── ...
├── 1/                   # Island 1 results
│   └── ...
└── ...

We organize the different versions of CodeEvolve as releases in both this repository and its companion experiments repository. Currently, we have the following releases:

- v0.1.0: Initial version of CodeEvolve, corresponds to v1 of CodeEvolve's paper.

- v0.2.0 / v0.2.1: Corresponds to v3 of CodeEvolve's paper with minor bug fixes.

- v0.3.0: Most recent release, with major quality of life improvements and code refactoring.

We welcome contributions from the community! Here's how to get involved:

- Start with an issue: Browse existing issues or create a new one describing your proposed change

- Submit a pull request:
  - Fork the repository
  - Create a feature branch
  - Make your changes with tests
  - Reference the issue in your PR description

- Keep PRs focused: Avoid massive changes; smaller, well-tested contributions are easier to review

- Maintain quality: Ensure code is tested, documented, and follows existing style

Please refer to CONTRIBUTING.md for detailed guidelines.

- New selection policies or evolutionary operators

- Additional LLM providers and integrations

- Benchmark problems from your domain

- Documentation improvements and tutorials

- Performance optimizations

- Bug fixes and test coverage

@article{assumpção2025codeevolveopensourceevolutionary,
  title={CodeEvolve: An open source evolutionary coding agent for algorithm discovery and optimization},
  author={Henrique Assumpção and Diego Ferreira and Leandro Campos and Fabricio Murai},
  year={2025},
  eprint={2510.14150},
  archivePrefix={arXiv},
  primaryClass={cs.AI},
  url={https://arxiv.org/abs/2510.14150},
}

The authors thank Bruno Grossi for his continuous support during the development of this project. We thank Fernando Augusto and Tiago Machado for useful conversations about possible applications of CodeEvolve. We also thank the OpenEvolve community for their inspiration and discussion about evolutionary coding agents.

All software is licensed under the Apache License, Version 2.0 (Apache 2.0); you may not use this file except in compliance with the Apache 2.0 license. You may obtain a copy of the Apache 2.0 license at: https://www.apache.org/licenses/LICENSE-2.0.

This is not an official Inter product.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for science-codeevolve

Similar Open Source Tools

science-codeevolve

CodeEvolve is an open-source framework that combines large language models with evolutionary algorithms to discover and optimize high-performing code solutions. It democratizes algorithmic discovery by making LLM-driven evolutionary search transparent, reproducible, and accessible. CodeEvolve provides a modular foundation for automated code synthesis guided by quantifiable metrics, addressing meta-optimization tasks where complex optimization problems need to be solved. The framework features islands-based genetic algorithm, modular evolutionary operators, quality-diversity optimization, flexible LLM integration, and distributed islands for efficient exploration. Core components include CLI entry point, process runner, evolution engine, program database, exploration schedulers, evaluator, islands coordinator, LLM interface, prompt sampler, and utilities. CodeEvolve demonstrates superior performance on algorithm-discovery benchmarks and is suitable for mathematical discovery, algorithm design, scientific discovery, and software optimization. Reproducibility and determinism are emphasized, with seedable internal algorithmic decisions. Contributions from the community are welcome, focusing on new selection policies, LLM integrations, benchmark problems, documentation, performance optimizations, and bug fixes.

llmxcpg

LLMxCPG is a framework for vulnerability detection using Code Property Graphs (CPG) and Large Language Models (LLM). It involves a two-phase process: Slice Construction where an LLM generates queries for a CPG to extract a code slice, and Vulnerability Detection where another LLM classifies the code slice as vulnerable or safe. The repository includes implementations of baseline models, information on datasets, scripts for running models, prompt templates, query generation examples, and configurations for fine-tuning models.

paelladoc

PAELLADOC is an intelligent documentation system that uses AI to analyze code repositories and generate comprehensive technical documentation. It offers a modular architecture with MECE principles, interactive documentation process, key features like Orchestrator and Commands, and a focus on context for successful AI programming. The tool aims to streamline documentation creation, code generation, and product management tasks for software development teams, providing a definitive standard for AI-assisted development documentation.

llmos

LLMos is an operating system designed for physical AI agents, providing a hybrid runtime environment where AI agents can perceive, reason, act on hardware, and evolve over time locally without cloud dependency. It allows natural language programming, dual-brain architecture for fast instinct and deep planner brains, markdown-as-code for defining agents and skills, and supports swarm intelligence and cognitive world models. The tool is built on a tech stack including Next.js, Electron, Python, and WebAssembly, and is structured around a dual-brain cognitive architecture, volume system, HAL for hardware abstraction, applet system for dynamic UI, and dreaming & evolution for robot improvement. The project is in Phase 1 (Foundation) and aims to move into Phase 2 (Dual-Brain & Local Intelligence), with contributions welcomed under the Apache 2.0 license by Evolving Agents Labs.

probe

Probe is an AI-friendly, fully local, semantic code search tool designed to power the next generation of AI coding assistants. It combines the speed of ripgrep with the code-aware parsing of tree-sitter to deliver precise results with complete code blocks, making it perfect for large codebases and AI-driven development workflows. Probe is fully local, keeping code on the user's machine without relying on external APIs. It supports multiple languages, offers various search options, and can be used in CLI mode, MCP server mode, AI chat mode, and web interface. The tool is designed to be flexible, fast, and accurate, providing developers and AI models with full context and relevant code blocks for efficient code exploration and understanding.

BioAgents

BioAgents AgentKit is an advanced AI agent framework tailored for biological and scientific research. It offers powerful conversational AI capabilities with specialized knowledge in biology, life sciences, and scientific research methodologies. The framework includes state-of-the-art analysis agents, configurable research agents, and a variety of specialized agents for tasks such as file parsing, research planning, literature search, data analysis, hypothesis generation, research reflection, and user-facing responses. BioAgents also provides support for LLM libraries, multiple search backends for literature agents, and two backends for data analysis. The project structure includes backend source code, services for chat, job queue system, real-time notifications, and JWT authentication, as well as a frontend UI built with Preact.

graphiti

Graphiti is a framework for building and querying temporally-aware knowledge graphs, tailored for AI agents in dynamic environments. It continuously integrates user interactions, structured and unstructured data, and external information into a coherent, queryable graph. The framework supports incremental data updates, efficient retrieval, and precise historical queries without complete graph recomputation, making it suitable for developing interactive, context-aware AI applications.

persistent-ai-memory

Persistent AI Memory System is a comprehensive tool that offers persistent, searchable storage for AI assistants. It includes features like conversation tracking, MCP tool call logging, and intelligent scheduling. The system supports multiple databases, provides enhanced memory management, and offers various tools for memory operations, schedule management, and system health checks. It also integrates with various platforms like LM Studio, VS Code, Koboldcpp, Ollama, and more. The system is designed to be modular, platform-agnostic, and scalable, allowing users to handle large conversation histories efficiently.

shannon

Shannon is an AI pentester that delivers actual exploits, not just alerts. It autonomously hunts for attack vectors in your code, then uses its built-in browser to execute real exploits, such as injection attacks, and auth bypass, to prove the vulnerability is actually exploitable. Shannon closes the security gap by acting as your on-demand whitebox pentester, providing concrete proof of vulnerabilities to let you ship with confidence. It is a core component of the Keygraph Security and Compliance Platform, automating penetration testing and compliance journey. Shannon Lite achieves a 96.15% success rate on a hint-free, source-aware XBOW benchmark.

CyberStrikeAI

CyberStrikeAI is an AI-native security testing platform built in Go that integrates 100+ security tools, an intelligent orchestration engine, role-based testing with predefined security roles, a skills system with specialized testing skills, and comprehensive lifecycle management capabilities. It enables end-to-end automation from conversational commands to vulnerability discovery, attack-chain analysis, knowledge retrieval, and result visualization, delivering an auditable, traceable, and collaborative testing environment for security teams. The platform features an AI decision engine with OpenAI-compatible models, native MCP implementation with various transports, prebuilt tool recipes, large-result pagination, attack-chain graph, password-protected web UI, knowledge base with vector search, vulnerability management, batch task management, role-based testing, and skills system.

biomcp

BioMCP is an open source toolkit that empowers AI assistants and agents with specialized biomedical knowledge. It follows the Model Context Protocol (MCP) to connect AI systems to authoritative biomedical data sources, enabling precise answers about clinical trials, scientific literature, and genomic variants. It provides structured access to various biomedical data sources, supports natural language queries, and functions as an MCP server for AI assistants and agents. BioMCP integrates with literature sources like PubMed and bioRxiv, clinical and genomic sources like ClinicalTrials.gov and MyVariant.info, and regulatory and safety sources like OpenFDA. It offers 24 specialized tools for biomedical research, including core tools like Think Tool, Search Tool, and Fetch Tool, as well as individual tools for articles, trials, variants, NCI-specific data, genes, diseases, and drugs. BioMCP can be run locally or as an HTTP server, supports Docker deployment, and provides a comprehensive CLI for database interaction.

empirica

Empirica is an epistemic self-awareness framework for AI agents to understand their knowledge boundaries. It introduces epistemic vectors to measure knowledge state and uncertainty, enabling honest communication. The tool emerged from 600+ real working sessions across various AI systems, providing cognitive infrastructure for distinguishing between confident knowledge and guessing. Empirica's 13 foundational vectors cover engagement, domain knowledge depth, execution capability, information access, understanding clarity, coherence, signal-to-noise ratio, information richness, working state, progress rate, task completion level, work significance, and explicit doubt tracking. It is applicable across industries like software development, research, healthcare, legal, education, and finance, aiding in tasks such as code review, hypothesis testing, diagnostic confidence, case analysis, learning assessment, and risk assessment.

SMRY

SMRY.ai is a Next.js application that bypasses paywalls and generates AI-powered summaries by fetching content from multiple sources simultaneously. It provides a distraction-free reader with summary builder, cleans articles, offers multi-source fetching, built-in AI summaries in 14 languages, rich debug context, soft paywall access, smart extraction using Diffbot's AI, multi-source parallel fetching, type-safe error handling, dual caching strategy, intelligent source routing, content parsing pipeline, multilingual summaries, and more. The tool aims to make referencing reporting easier, provide original articles alongside summaries, and offer concise summaries in various languages.

TranslateBookWithLLM

TranslateBookWithLLM is a Python application designed for large-scale text translation, such as entire books (.EPUB), subtitle files (.SRT), and plain text. It leverages local LLMs via the Ollama API or Gemini API. The tool offers both a web interface for ease of use and a command-line interface for advanced users. It supports multiple format translations, provides a user-friendly browser-based interface, CLI support for automation, multiple LLM providers including local Ollama models and Google Gemini API, and Docker support for easy deployment.

lyraios

LYRAIOS (LLM-based Your Reliable AI Operating System) is an advanced AI assistant platform built with FastAPI and Streamlit, designed to serve as an operating system for AI applications. It offers core features such as AI process management, memory system, and I/O system. The platform includes built-in tools like Calculator, Web Search, Financial Analysis, File Management, and Research Tools. It also provides specialized assistant teams for Python and research tasks. LYRAIOS is built on a technical architecture comprising FastAPI backend, Streamlit frontend, Vector Database, PostgreSQL storage, and Docker support. It offers features like knowledge management, process control, and security & access control. The roadmap includes enhancements in core platform, AI process management, memory system, tools & integrations, security & access control, open protocol architecture, multi-agent collaboration, and cross-platform support.

For similar tasks

LLM-as-HH

LLM-as-HH is a codebase that accompanies the paper ReEvo: Large Language Models as Hyper-Heuristics with Reflective Evolution. It introduces Language Hyper-Heuristics (LHHs) that leverage LLMs for heuristic generation with minimal manual intervention and open-ended heuristic spaces. Reflective Evolution (ReEvo) is presented as a searching framework that emulates the reflective design approach of human experts while surpassing human capabilities with scalable LLM inference, Internet-scale domain knowledge, and powerful evolutionary search. The tool can improve various algorithms on problems like Traveling Salesman Problem, Capacitated Vehicle Routing Problem, Orienteering Problem, Multiple Knapsack Problems, Bin Packing Problem, and Decap Placement Problem in both black-box and white-box settings.

open-ai

Open AI is a powerful tool for artificial intelligence research and development. It provides a wide range of machine learning models and algorithms, making it easier for developers to create innovative AI applications. With Open AI, users can explore cutting-edge technologies such as natural language processing, computer vision, and reinforcement learning. The platform offers a user-friendly interface and comprehensive documentation to support users in building and deploying AI solutions. Whether you are a beginner or an experienced AI practitioner, Open AI offers the tools and resources you need to accelerate your AI projects and stay ahead in the rapidly evolving field of artificial intelligence.

universal

The Universal Numbers Library is a header-only C++ template library designed for universal number arithmetic, offering alternatives to native integer and floating-point for mixed-precision algorithm development and optimization. It tailors arithmetic types to the application's precision and dynamic range, enabling improved application performance and energy efficiency. The library provides fast implementations of special IEEE-754 formats like quarter precision, half-precision, and quad precision, as well as vendor-specific extensions. It supports static and elastic integers, decimals, fixed-points, rationals, linear floats, tapered floats, logarithmic, interval, and adaptive-precision integers, rationals, and floats. The library is suitable for AI, DSP, HPC, and HFT algorithms.

how-to-optim-algorithm-in-cuda

This repository documents how to optimize common algorithms based on CUDA. It includes subdirectories with code implementations for specific optimizations. The optimizations cover topics such as compiling PyTorch from source, NVIDIA's reduce optimization, OneFlow's elementwise template, fast atomic add for half data types, upsample nearest2d optimization in OneFlow, optimized indexing in PyTorch, OneFlow's softmax kernel, linear attention optimization, and more. The repository also includes learning resources related to deep learning frameworks, compilers, and optimization techniques.

data-scientist-roadmap2024

The Data Scientist Roadmap2024 provides a comprehensive guide to the essential tools for data science. It covers programming languages, machine learning libraries, cloud platforms, and web development frameworks, with concepts categorized by difficulty. Topics include supervised and unsupervised learning, NLP, deep learning, reinforcement learning, and statistics, along with DevOps/MLOps tools like Airflow and MLflow, data visualization tools like Tableau and Matplotlib, ETL processes, optimization algorithms, and financial modeling.

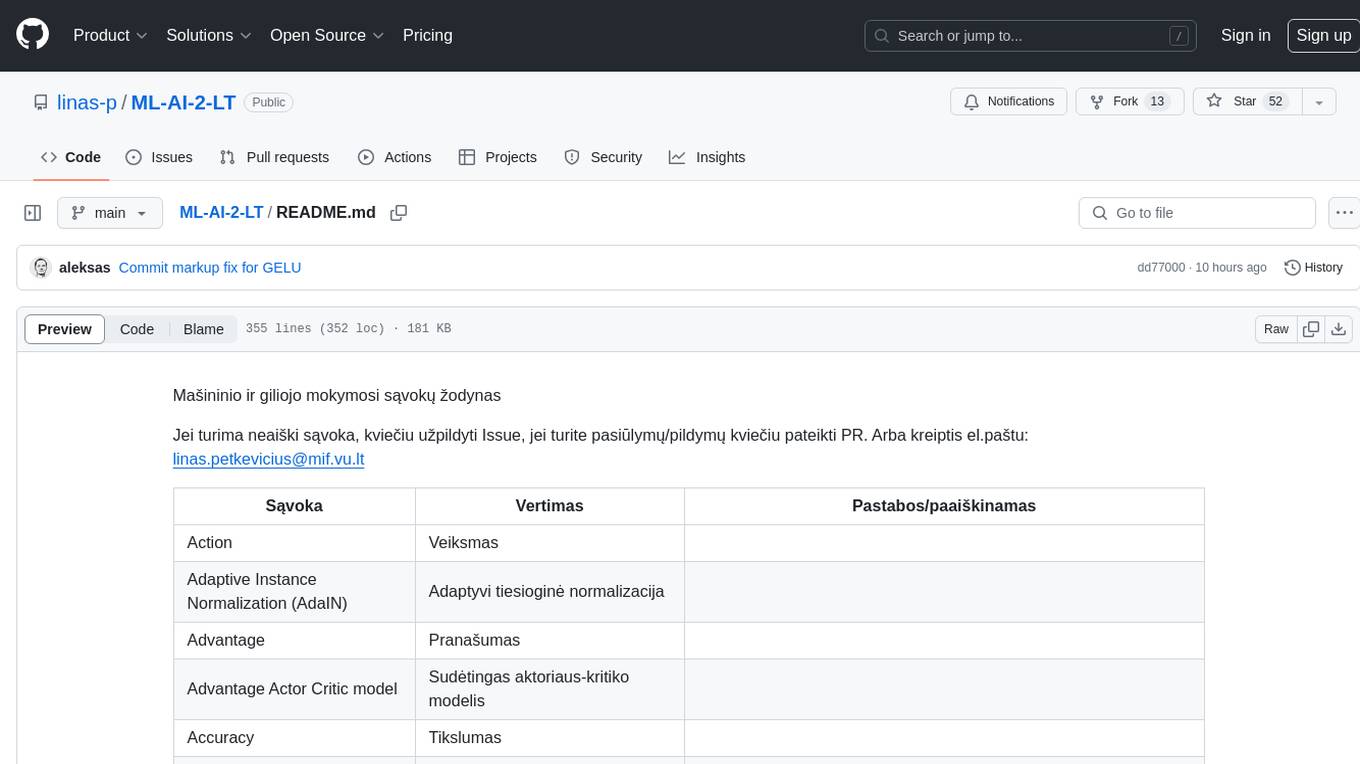

ML-AI-2-LT

ML-AI-2-LT is a repository that serves as a glossary for machine learning and deep learning concepts. It contains translations and explanations of various terms related to artificial intelligence, including definitions and notes. Users can contribute by filing issues for unclear concepts or by submitting pull requests with suggestions or additions. The repository aims to provide a comprehensive resource for understanding key terminology in the field of AI and machine learning.

sciml.ai

SciML.ai is an open-source software organization dedicated to unifying packages for scientific machine learning. It focuses on developing modular scientific simulation support software, including differential equation solvers, inverse problem methodologies, and automated model discovery. The organization aims to provide a diverse set of tools with a common interface, creating a modular, easily extendable, and highly performant ecosystem for scientific simulations. The website showcases the SciML organization's packages and shares news from the ecosystem. Pull requests are encouraged for contributions.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per-user and per-organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.