ai-development-patterns

A comprehensive collection of AI development patterns for building software with AI assistance, organized by implementation maturity and development lifecycle phases. Includes Foundation, Development, and Operations patterns with practical examples and anti-patterns.


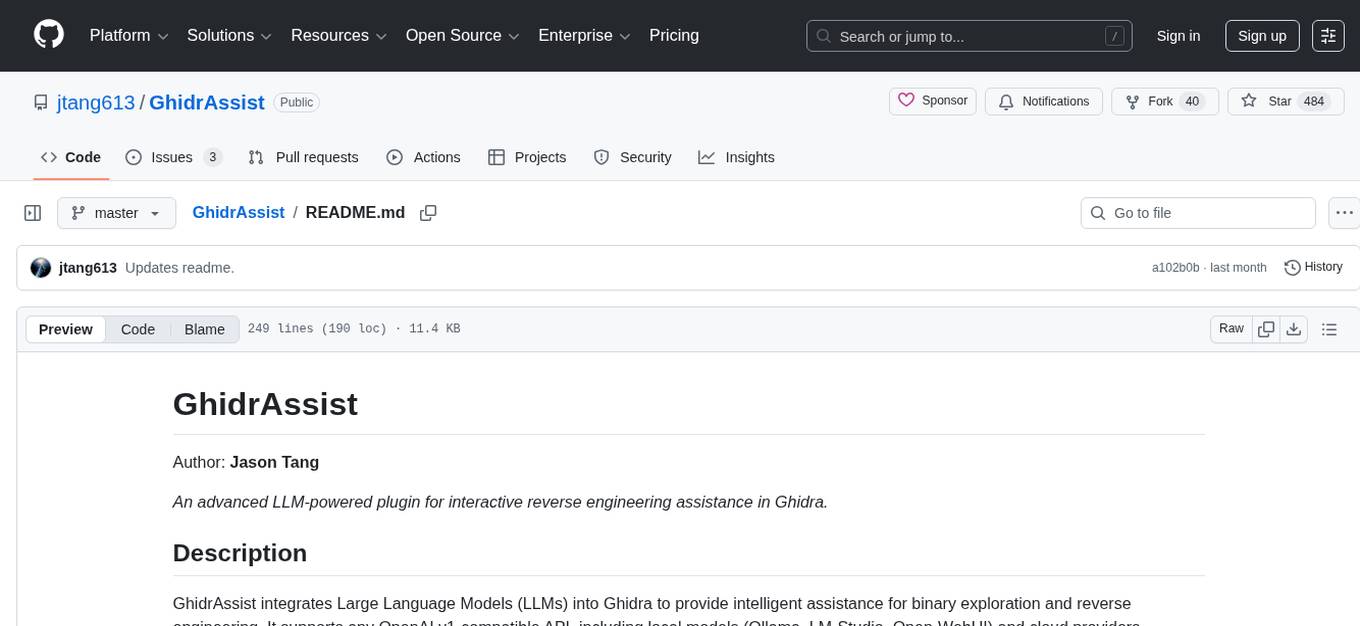


A comprehensive collection of patterns, drawn from my experience, for building software with AI assistance, organized by implementation maturity and development lifecycle phases. These patterns are subject to change as the field evolves.

This repository provides a structured approach to AI-assisted development through four pattern categories:

- Foundation Patterns - Essential patterns for team readiness and basic AI integration

- Development Patterns - Daily practice patterns for AI-assisted coding workflows

- Operations Patterns - CI/CD, security, and production management with AI

- Experimental Patterns - Advanced and experimental patterns under active development and/or consideration.

Important: These phases represent a learning progression for teams new to AI development, not a waterfall approach. Teams with existing DevOps/security expertise should implement patterns continuously across all phases from day one, following a "continuous everything" model.

graph TD

subgraph "Phase 1: Foundation (Weeks 1-2)"

A[AI Readiness Assessment] --> B[Rules as Code]

B --> C[AI Security Sandbox]

C --> D[AI Developer Lifecycle]

A --> E[AI Issue Generation]

D --> F[AI Tool Integration]

end

subgraph "Phase 2: Development (Weeks 3-4)"

D --> G[Specification Driven Development]

D --> H[AI Plan-First Development]

G --> I[Progressive AI Enhancement]

H --> I

I --> J[AI Choice Generation]

G --> K[Atomic Task Decomposition]

K --> L[Parallelized AI Coding Agents]

end

subgraph "Phase 3: Operations (Weeks 5-6)"

C --> M[Policy-as-Code Generation]

M --> N[Security Scanning Orchestration]

L --> O[Performance Baseline Management]

D --> P[AI-Driven Traceability]

end

Continuous Implementation Note: Security patterns (AI Security Sandbox, Security & Compliance) and deployment patterns should be implemented continuously throughout development, not delayed until specific phases. The dependencies shown represent learning prerequisites, not deployment gates.

| Pattern | Maturity | Type | Description | Dependencies |
|---|---|---|---|---|
| AI Readiness Assessment | Beginner | Foundation | Systematic evaluation of codebase and team readiness for AI integration | None |
| Rules as Code | Beginner | Foundation | Version and maintain AI coding standards as explicit configuration files | AI Readiness Assessment |
| AI Security Sandbox | Beginner | Foundation | Run AI tools in isolated environments without access to secrets or sensitive data | Rules as Code |
| AI Developer Lifecycle | Intermediate | Workflow | Structured 9-stage process from problem definition through deployment with AI assistance | Rules as Code, AI Security Sandbox |
| AI Tool Integration | Intermediate | Foundation | Connect AI systems to external data sources, APIs, and tools for enhanced capabilities beyond prompt-only interactions | AI Security Sandbox, AI Developer Lifecycle |
| AI Issue Generation | Intermediate | Foundation | Generate Kanban-optimized work items (4-8 hours max) from requirements using AI to ensure continuous flow with clear acceptance criteria and dependencies | AI Readiness Assessment |
| Specification Driven Development | Intermediate | Development | Use executable specifications to guide AI code generation with clear acceptance criteria before implementation | AI Developer Lifecycle |
| AI Plan-First Development | Beginner | Development | Generate explicit implementation plans before writing code to improve quality, reduce iterations, and enable better collaboration | None |
| Progressive AI Enhancement | Beginner | Development | Build complex features through small, deployable iterations rather than big-bang generation | None |
| AI Choice Generation | Intermediate | Development | Generate multiple implementation options for exploration and comparison rather than accepting the first AI solution | Progressive AI Enhancement |
| Atomic Task Decomposition | Intermediate | Development | Break complex features into atomic, independently implementable tasks for parallel AI agent execution | Progressive AI Enhancement |
| Parallelized AI Coding Agents | Advanced | Development | Run multiple AI agents concurrently on isolated tasks or environments to maximize development speed and exploration | Atomic Task Decomposition |
| AI Knowledge Persistence | Intermediate | Development | Capture successful patterns and failed attempts as versioned knowledge for future sessions | Rules as Code |
| Constraint-Based AI Development | Beginner | Development | Give AI specific constraints to prevent over-engineering and ensure focused solutions | None |
| Observable AI Development | Intermediate | Development | Strategic logging and debugging that makes system behavior visible to AI | AI Developer Lifecycle |
| AI-Driven Refactoring | Intermediate | Development | Systematic code improvement using AI to detect and resolve code smells with measurable quality metrics | Rules as Code |
| AI-Driven Architecture Design | Intermediate | Development | Apply architectural frameworks (DDD, Well-Architected, 12-Factor) using AI to ensure sound system design | AI Developer Lifecycle, Rules as Code |
| AI-Driven Traceability | Intermediate | Development | Maintain automated links between requirements, specifications, tests, implementation, and documentation using AI | AI Developer Lifecycle |
| Security & Compliance | | Operations | Category containing security and compliance patterns | |
| Policy-as-Code Generation | Advanced | Operations | Transform compliance requirements into executable Cedar/OPA policy files with AI assistance | AI Security Sandbox |
| Security Scanning Orchestration | Intermediate | Workflow | Aggregate multiple security tools and use AI to summarize findings for actionable insights | AI Security Sandbox |
| Deployment Automation | | Operations | Category containing deployment and pipeline patterns | |
| Monitoring & Maintenance | | Operations | Category containing monitoring and maintenance patterns | |
| Performance Baseline Management | Advanced | Operations | Establish intelligent performance baselines and configure monitoring thresholds automatically | AI Tool Integration |

Patterns are classified by implementation complexity and prerequisite knowledge:

Beginner: Basic AI tool usage with minimal setup required

- Prerequisites: Basic programming skills, access to AI tools

- Complexity: Single tool usage, straightforward prompts

- Examples: Simple code generation, basic constraint setting

Intermediate: Multi-tool coordination and process integration

- Prerequisites: Development workflow experience, team coordination

- Complexity: Multiple tools, orchestration patterns, quality gates

- Examples: Testing strategies, parallel workflows, choice generation

Advanced: Complex systems with enterprise concerns

- Prerequisites: Architecture experience, security/compliance knowledge

- Complexity: Multi-agent systems, advanced safety, compliance automation

- Examples: Enterprise security, compliance automation, chaos engineering

The patterns use different task sizing approaches based on their purpose and context:

graph TD

A[Feature Request] --> B[AI Issue Generation]

B --> C[4-8 Hour Work Items]

C --> D{Parallel Implementation?}

D -->|Yes| E[Atomic Task Decomposition]

D -->|No| F[Progressive Enhancement]

E --> G[1-2 Hour Atomic Tasks]

F --> H[Daily Deployment Cycles]

G --> I[Parallel Agent Execution]

H --> J[Sequential Enhancement]

C --> K[Standard Kanban Flow]

Task Sizing Hierarchy:

- AI Issue Generation (4-8 hours): Standard Kanban work items for continuous flow and rapid feedback

- Atomic Task Decomposition (1-2 hours): Ultra-small tasks for parallel agent execution without conflicts

- Progressive AI Enhancement (Daily cycles): Deployment-focused iterations that may contain multiple work items

When to Use Each Approach:

- Use AI Issue Generation for standard team development with human developers

- Use Atomic Task Decomposition when implementing with parallel AI agents

- Use Progressive Enhancement when prioritizing rapid market feedback over task granularity

Pattern Differentiation:

- AI Issue Generation: Creates Kanban work items (4-8 hours) for human team workflows

- Atomic Task Decomposition: Creates ultra-small tasks (1-2 hours) for parallel AI agents

- Progressive AI Enhancement: Creates deployment cycles (daily) focused on user feedback
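The routing in the task sizing diagram can be sketched as two small functions. This is an illustration under stated assumptions: the function names are hypothetical, and the upper bounds come straight from the hierarchy above (8 hours for work items, 2 hours for atomic tasks, no hour limit for daily enhancement cycles).

```python
from typing import Optional

# Upper bound in hours for one item under each approach (from the task sizing hierarchy).
# Progressive AI Enhancement is sized by daily deployment cycles, so it has no hour limit.
MAX_HOURS: dict[str, Optional[int]] = {
    "AI Issue Generation": 8,
    "Atomic Task Decomposition": 2,
    "Progressive AI Enhancement": None,
}


def choose_approach(parallel_agents: bool) -> str:
    """Mirror the diagram: 4-8 hour work items branch on 'Parallel Implementation?'."""
    return "Atomic Task Decomposition" if parallel_agents else "Progressive AI Enhancement"


def needs_split(estimated_hours: float, approach: str) -> bool:
    """True when an item is larger than the upper bound for its approach."""
    limit = MAX_HOURS[approach]
    return limit is not None and estimated_hours > limit


# Usage: a 3-hour task is fine as a Kanban work item but too big for a parallel agent.
print(needs_split(3, "AI Issue Generation"))                  # False
print(needs_split(3, choose_approach(parallel_agents=True)))  # True
```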

Choose the right patterns based on your team's context, project requirements, and AI development maturity:

graph TD

A[Starting AI Development] --> B{Team AI Experience?}

B -->|New to AI| C[Start with Foundation Patterns]

B -->|Some Experience| D[Focus on Development Patterns]

B -->|Advanced| E[Implement Operations Patterns]

C --> F[AI Readiness Assessment]

F --> G[Rules as Code]

G --> H[AI Security Sandbox]

H --> I{Need Structured Development?}

I -->|Yes| J[AI Developer Lifecycle]

I -->|No| K[AI Plan-First Development]

K --> L[Progressive AI Enhancement]

D --> M{Multiple Developers/Agents?}

M -->|Yes| N[Parallelized AI Coding Agents]

M -->|No| O[Specification Driven Development]

N --> P[Atomic Task Decomposition]

O --> Q[AI-Driven Traceability]

E --> R{Enterprise Requirements?}

R -->|Compliance| S[Policy-as-Code Generation]

R -->|Scale| T[Performance Baseline Management]

R -->|Quality| U[Technical Debt Forecasting]

For New Teams (First 2 weeks):

- AI Readiness Assessment - Evaluate current state

- Rules as Code - Establish consistent standards

- AI Security Sandbox - Ensure safe experimentation

- AI Plan-First Development - Learn structured planning approaches

- Progressive AI Enhancement - Start with simple iterations

For Development Teams (Weeks 3-8):

- AI Developer Lifecycle - Structured development process

- Specification Driven Development - Quality-focused development

- AI Issue Generation - Organized work breakdown

- Comprehensive AI Testing Strategy - Quality assurance

For Parallel Implementation:

- Atomic Task Decomposition - Ultra-small independent tasks

- AI Workflow Orchestration - Agent coordination

- AI Review Automation - Automated integration

- AI Security Sandbox - Enhanced with parallel safety

For Enterprise/Production (Month 2+):

- Policy-as-Code Generation - Compliance automation

- Security Scanning Orchestration - Integrated security

- Performance Baseline Management - Production monitoring

- Technical Debt Forecasting - Proactive maintenance

MVP/Startup Projects:

- Primary: Progressive AI Enhancement, AI Choice Generation

- Secondary: AI Security Sandbox, Constraint-Based AI Development

- Avoid: Complex orchestration patterns until scale demands

Enterprise Applications:

- Primary: AI Developer Lifecycle, Policy-as-Code Generation

- Secondary: AI-Driven Traceability, Security Scanning Orchestration

- Essential: All foundation patterns before development patterns

Research/Experimental Projects:

- Primary: AI Choice Generation, Observable AI Development

- Secondary: AI Knowledge Persistence, Context Window Optimization

- Focus: Learning and exploration over production readiness

High-Scale Production:

- Primary: Parallelized AI Coding Agents, Performance Baseline Management

- Secondary: Chaos Engineering Scenarios, Incident Response Automation

- Critical: All security and monitoring patterns

Solo Teams:

- Focus on Progressive AI Enhancement and AI Choice Generation

- Add Observable AI Development for debugging

- Skip parallel orchestration patterns

Two-Pizza Teams (small, autonomous teams):

- Implement AI Issue Generation for coordination

- Use Specification Driven Development for quality

- Consider AI Tool Integration for role clarity

- Full AI Developer Lifecycle implementation

- Parallelized AI Coding Agents for complex features

- AI-Driven Traceability for quality gates

Multi Two-Pizza Team Organizations:

- Atomic Task Decomposition for parallel work across teams

- AI-Driven Traceability for coordination at scale

- All Operations Patterns for organizational management

Cloud-Native Applications:

- Emphasize Policy-as-Code Generation and Compliance Evidence Automation

- Implement Drift Detection & Remediation for infrastructure

- Use AI-Guided Blue-Green Deployment for safe releases

On-Premises Systems:

- Focus on AI Security Sandbox with network isolation

- Implement AI Knowledge Persistence for institutional knowledge

- Use Technical Debt Forecasting for maintenance planning

Microservices Architecture:

- Parallelized AI Coding Agents for service coordination

- Observable AI Development across service boundaries

- Performance Baseline Management for distributed monitoring

Monolithic Applications:

- Progressive AI Enhancement for gradual modernization

- AI-Driven Refactoring for code quality improvement

- Constraint-Based AI Development to prevent over-engineering

Foundation patterns establish the essential infrastructure and team readiness required for successful AI-assisted development. They come first in the learning progression because they enable all subsequent patterns.

AI Readiness Assessment

Maturity: Beginner

Description: Systematic evaluation of codebase and team readiness for AI-assisted development before implementing AI patterns.

Related Patterns: Rules as Code, AI Issue Generation

Assessment Framework

graph TD

A[Codebase Assessment] --> B[Team Assessment]

B --> C[Infrastructure Assessment]

C --> D[Readiness Score]

D --> E[Implementation Plan]

Codebase Readiness Checklist

## Code Quality Prerequisites

□ Consistent code formatting and style guide

□ Comprehensive test coverage (>80% for critical paths)

□ Clear separation of concerns and modular architecture

□ Documented APIs and interfaces

□ Version-controlled configuration, with secrets kept in a secrets manager rather than in the repository

## Documentation Standards

□ README with setup and development instructions

□ API documentation (OpenAPI/Swagger)

□ Architecture decision records (ADRs)

□ Coding standards and conventions documented

□ Deployment and operational procedures

Anti-pattern: Rushing Into AI. Starting AI adoption without proper assessment leads to inconsistent practices, security vulnerabilities, and team frustration.
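To turn the checklist into the Readiness Score in the assessment framework, one option is a weighted share of satisfied items per category. This is a hypothetical scoring scheme: the equal weights, the 0.8 threshold, and the function names are assumptions to adapt, not part of the pattern.

```python
def category_ratio(items: list[bool]) -> float:
    """Share of checklist items satisfied in one category."""
    return sum(items) / len(items)


def readiness_score(results: dict[str, list[bool]], weights: dict[str, float]) -> float:
    """Weighted average of category ratios, from 0.0 (not ready) to 1.0 (ready)."""
    total = sum(weights[name] for name in results)
    return sum(weights[name] * category_ratio(items) for name, items in results.items()) / total


def plan_gaps(results: dict[str, list[bool]], threshold: float = 0.8) -> list[str]:
    """Categories below the threshold become the first steps of the implementation plan."""
    return [name for name, items in results.items() if category_ratio(items) < threshold]


# Usage: one box unchecked in the first category, two in the second.
results = {
    "Code Quality Prerequisites": [True, True, True, True, False],
    "Documentation Standards": [True, True, True, False, False],
}
weights = {"Code Quality Prerequisites": 1.0, "Documentation Standards": 1.0}
print(round(readiness_score(results, weights), 2))  # 0.7
print(plan_gaps(results))  # ['Documentation Standards']
```

Team and infrastructure checklists can be added as further categories with their own weights.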

Rules as Code

Maturity: Beginner

Description: Version and maintain AI coding standards as explicit configuration files that persist across sessions and team members.

Related Patterns: AI Developer Lifecycle, AI Knowledge Persistence

Standardized Project Structure

project/
├── .ai/                        # AI configuration directory
│   ├── rules/                  # Modular rule sets
│   │   ├── security.md         # Security standards
│   │   ├── testing.md          # Testing requirements
│   │   ├── style.md            # Code style guide
│   │   └── architecture.md     # Architectural patterns
│   ├── prompts/                # Reusable prompt templates
│   │   ├── implementation.md   # Implementation prompts
│   │   ├── review.md           # Code review prompts
│   │   └── testing.md          # Test generation prompts
│   └── knowledge/              # Captured patterns and gotchas
│       ├── successful.md       # Proven successful patterns
│       └── failures.md         # Known failure patterns
├── .cursorrules                # Cursor IDE configuration
├── CLAUDE.md                   # Claude Code session context
└── .windsurf/                  # Windsurf configuration
    └── rules.md

Anti-pattern: Context Drift. Each developer maintains their own prompts and preferences, leading to inconsistent code across the team.
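One way to keep the tool-specific files in sync with the shared rules is to generate them from `.ai/rules/`. This is a minimal sketch under assumptions: `build_context` is a hypothetical helper, and it overwrites its target files, so any hand-written session notes in `CLAUDE.md` would need to move into a rule file first.

```python
from pathlib import Path

HEADER = "<!-- Generated from .ai/rules/ by build_context; edit the rule files instead -->\n\n"
TARGETS = ("CLAUDE.md", ".cursorrules", ".windsurf/rules.md")


def build_context(project_root: str, targets: tuple = TARGETS) -> str:
    """Concatenate .ai/rules/*.md and write the result to every tool-specific file."""
    root = Path(project_root)
    rule_files = sorted((root / ".ai" / "rules").glob("*.md"))  # sorted for stable diffs
    sections = [f"## {p.stem}\n\n{p.read_text(encoding='utf-8').strip()}\n" for p in rule_files]
    content = HEADER + "\n".join(sections)
    for target in targets:
        out = root / target
        out.parent.mkdir(parents=True, exist_ok=True)  # e.g. creates .windsurf/
        out.write_text(content, encoding="utf-8")
    return content
```

Running it from a pre-commit hook or CI job, and failing when the regenerated files differ from what is committed, gives every developer's assistant the same rules.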

AI Security Sandbox

Maturity: Beginner

Description: Run AI tools in isolated environments without access to secrets or sensitive data to prevent credential leaks and maintain security compliance.

Related Patterns: Security & Compliance Patterns, Rules as Code

Core Security Implementation

# Basic AI sandbox with complete network isolation
services:
  ai-development:
    build: .                    # Assumes a Dockerfile for the AI tooling in the project root
    network_mode: none          # Zero network access
    cap_drop: [ALL]             # Drop all Linux capabilities
    volumes:
      - ./src:/workspace/src:ro # Read-only source code
      # DO NOT mount ~/.aws, .env, secrets/, etc.

Complete Example: See examples/ai-security-sandbox/ for:

- Complete Docker isolation configurations for single and multi-agent setups

- Resource locking and emergency shutdown procedures

- Security monitoring and violation detection

- Multi-agent coordination with conflict resolution

Production Implementation: Amazon Bedrock AgentCore provides an enterprise-grade implementation of these security controls:

- Isolated runtimes: microVM session isolation for complete workload separation

- Identity layer: IAM integration for fine-grained access control

- Secure tool gateway: MCP-compatible interface with controlled tool access

- Code execution sandbox: Safe environment for AI-generated code execution

- Controlled web browsing: Network access limited to approved domains

- Observability & guardrails: CloudWatch/CloudTrail logging with Bedrock Guardrails integration

Anti-pattern: Unrestricted Access. Allowing AI tools full system access risks credential leaks, data breaches, and security compliance violations.

Anti-pattern: Shared Agent Workspaces. Allowing multiple parallel agents to write to the same directories creates race conditions, file conflicts, and unpredictable behavior that can corrupt the development environment.
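The compose file can only document what not to mount; a pre-flight check can enforce it before the container starts. A minimal sketch follows. The deny-list of names and suffixes is an assumption and deliberately incomplete, so treat it as a starting point alongside a dedicated secret scanner.

```python
import sys
from pathlib import Path

# Hypothetical deny-list; extend it for your stack.
SENSITIVE_NAMES = {".aws", ".ssh", "secrets", "credentials", "id_rsa", "id_ed25519"}
SENSITIVE_SUFFIXES = {".pem", ".key", ".p12"}


def find_sensitive_paths(mount_dir: str) -> list[Path]:
    """Return every file or directory under mount_dir that looks like a secret."""
    hits = []
    for path in Path(mount_dir).rglob("*"):
        name = path.name
        if name in SENSITIVE_NAMES or name.startswith(".env") or path.suffix in SENSITIVE_SUFFIXES:
            hits.append(path)
    return sorted(hits)


def preflight(mount_dir: str) -> None:
    """Exit non-zero so a launcher script refuses to start the sandbox."""
    hits = find_sensitive_paths(mount_dir)
    if hits:
        print("Refusing to mount; sensitive paths found:", *hits, sep="\n  ", file=sys.stderr)
        sys.exit(1)
```

A launcher would call `preflight("./src")` before running `docker compose up`, so a stray `.env` or key file stops the sandbox instead of being mounted.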

AI Developer Lifecycle

Maturity: Intermediate

Description: Structured 9-stage process from problem definition through deployment with AI assistance.

Related Patterns: Rules as Code, Specification Driven Development, Observable AI Development

Workflow Interaction Sequence

sequenceDiagram

participant D as Developer

participant AI as AI Assistant

participant S as System/CI

participant T as Tests

participant M as Monitoring

Note over D,M: Stage 1-3: Problem → Plan → Requirements

D->>AI: Problem Definition (e.g., JWT Authentication)

AI->>D: Technical Architecture Plan

D->>AI: Requirements Clarification

AI->>D: API Specs + Kanban Tasks + Security Requirements

Note over D,M: Stage 4-5: Issues → Specifications

D->>AI: Generate Executable Tests

AI->>T: Gherkin Scenarios + API Tests + Security Tests

T->>D: Test Suite Ready (Performance Criteria: <200ms)

Note over D,M: Stage 6: Implementation

D->>AI: Implement Following Specifications

AI->>S: Code + Tests + Error Handling + Logging

S->>D: Implementation Results

Note over D,M: Stage 7-9: Testing → Deployment → Monitoring

D->>S: Run All Tests

S->>D: Test Results + Security Scan + Performance Benchmark

alt Tests Pass

S->>S: Deploy to Production

S->>M: Setup Monitoring Alerts

M->>D: Deployment Complete + Monitoring Active

else Tests Fail

S->>D: Failure Report

D->>AI: Fix Issues

AI->>S: Updated Implementation

end

Note over D,M: Continuous Monitoring

M->>D: Performance Alerts + Security Events

Core Workflow Implementation

# Stage 1-3: Problem → Plan → Requirements

ai "Analyze request → Generate architecture, tasks, API specs"

# Stage 4-5: Issues → Specifications

ai "Generate executable tests → Gherkin scenarios, API tests, security tests"

# Stage 6: Implementation

ai "Implement following specifications → Use tests as guide, security best practices"

# Stage 7-9: Testing → Deployment → Monitoring

ai "Complete QA → Run tests, security scan, deploy, monitor"

Complete Implementation: See examples/ai-development-lifecycle/ for full 9-stage workflow scripts, detailed prompts for each stage, and integration with CI/CD pipelines.

Anti-pattern: Ad-Hoc AI Development. Jumping straight to coding with AI without proper planning, requirements, or testing strategy.
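The stage order and the fix loop from the sequence diagram can be captured as a small driver. This is a sketch, not the scripts in examples/ai-development-lifecycle/: `run_stage` is a hypothetical callback that would invoke your AI tool or CI for one stage, and the limit of 3 fix attempts is an assumption.

```python
from typing import Callable

# The nine stages from the sequence diagram, in order.
STAGES = [
    "Problem Definition", "Plan", "Requirements",  # Stages 1-3
    "Issues", "Specifications",                   # Stages 4-5
    "Implementation",                             # Stage 6
    "Testing", "Deployment", "Monitoring",        # Stages 7-9
]


def run_lifecycle(run_stage: Callable[[str], bool], max_fix_attempts: int = 3) -> list[str]:
    """Run each stage in order; a failed Testing stage loops back to Implementation."""
    log: list[str] = []
    index, fix_attempts = 0, 0
    while index < len(STAGES):
        stage = STAGES[index]
        passed = run_stage(stage)
        log.append(f"{stage}: {'ok' if passed else 'failed'}")
        if passed:
            index += 1
        elif stage == "Testing" and fix_attempts < max_fix_attempts:
            fix_attempts += 1  # "Tests Fail" branch: send the failure report back for fixes
            index = STAGES.index("Implementation")
        else:
            raise RuntimeError(f"Lifecycle stopped at {stage} after {fix_attempts} fix attempts")
    return log
```

Any other failing stage stops the run immediately, which keeps deployment gated on passing tests as in the diagram.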

AI Tool Integration

Maturity: Intermediate

Description: Connect AI systems to external data sources, APIs, and tools for enhanced capabilities beyond prompt-only interactions.

Related Patterns: AI Security Sandbox, AI Developer Lifecycle, Observable AI Development

Core Concept

Modern AI development requires more than chat-based interactions. AI systems become significantly more capable when connected to real-world data sources and tools. This pattern demonstrates the architectural shift from isolated prompt-only AI to tool-augmented AI systems.

Implementation Overview

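# Core tool-augmented AI system with security controls
class ToolAugmentedAI:
    def __init__(self, config_path: str = ".ai/tools.json"):
        self.config_path = config_path  # Allowlists and limits (see Configuration Example)
        self.available_tools = {
            "database_query": self._query_database,    # Read-only SQL queries
            "file_operations": self._file_operations,  # Controlled file access
            "api_requests": self._api_requests,        # Allowlisted HTTP requests
            "system_info": self._system_info,          # Safe system information
        }

    def execute_with_tools(self, ai_request: str, tool_calls: list) -> dict:
        """Execute AI request with secure tool access"""
        results = []
        for call in tool_calls:
            name = call.get("tool")
            try:
                if name not in self.available_tools:  # Only registered tools may run
                    raise PermissionError(f"tool not allowed: {name}")
                results.append({"tool": name, "result": self.available_tools[name](**call.get("args", {}))})
            except Exception as exc:  # Return errors as structured data
                results.append({"tool": name, "error": str(exc)})
        return {"request": ai_request, "results": results}

    def _query_database(self, **kwargs): raise NotImplementedError  # Stubs: full versions in examples/ai-tool-integration/
    def _file_operations(self, **kwargs): raise NotImplementedError
    def _api_requests(self, **kwargs): raise NotImplementedError
    def _system_info(self, **kwargs): raise NotImplementedError

Tool Categories & Security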

- Database Access: Read-only queries with operation allowlisting (`SELECT`, `WITH` only)

- File Operations: Path-restricted read/write within configured directories

- API Integration: HTTP requests limited to allowlisted domains with timeouts

- System Information: Safe environment data without sensitive details
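The restrictions in this list translate into small guard functions that a tool layer such as `ToolAugmentedAI` could call before touching a real resource. A minimal sketch, assuming SQLite as the database and reusing the `max_query_results` and `allowed_apis` ideas from the configuration example in this pattern; the function names are hypothetical. Because a `WITH` clause can still introduce a write in SQLite, the keyword check is only a first filter and the connection itself is opened read-only.

```python
import sqlite3
from pathlib import Path
from urllib.parse import urlparse

READ_ONLY_KEYWORDS = {"SELECT", "WITH"}  # "SELECT, WITH only"


def check_query(sql: str) -> None:
    """First filter: one statement that starts with SELECT or WITH."""
    body = sql.strip().rstrip(";")
    if ";" in body:  # conservative: also rejects semicolons inside string literals
        raise PermissionError("multiple statements are not allowed")
    first = body.split(None, 1)[0].upper() if body else ""
    if first not in READ_ONLY_KEYWORDS:
        raise PermissionError(f"only SELECT/WITH queries are allowed, got {first or 'nothing'}")


def run_read_only_query(db_path: str, sql: str, max_results: int = 100) -> list:
    """Run a vetted query on a connection that SQLite itself enforces as read-only."""
    check_query(sql)
    conn = sqlite3.connect(f"file:{db_path}?mode=ro", uri=True)
    try:
        return conn.execute(sql).fetchmany(max_results)
    finally:
        conn.close()


def check_path(path: str, allowed_dirs: list[str]) -> Path:
    """Resolve symlinks and '..' before comparing against the allowed directories."""
    resolved = Path(path).resolve()
    if any(resolved.is_relative_to(Path(d).resolve()) for d in allowed_dirs):
        return resolved
    raise PermissionError(f"path outside allowed directories: {path}")


def check_url(url: str, allowed_hosts: list[str]) -> str:
    """Allow only https URLs whose host exactly matches an allowlisted domain."""
    parsed = urlparse(url)
    if parsed.scheme != "https" or parsed.hostname not in allowed_hosts:
        raise PermissionError(f"host not allowlisted: {parsed.hostname}")
    return url
```

Exact host matching is intentional: a suffix or substring check would accept look-alike hosts such as `api.github.com.evil.example`.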

Configuration Example

{
  "allowed_apis": ["api.github.com", "api.openweathermap.org"],
  "file_access_paths": ["./data/", "./logs/", "./generated/"],
  "max_query_results": 100,
  "security": {
    "read_only_database": true,
    "api_rate_limits": true,
    "file_size_limits": "10MB"
  }
}

Model Context Protocol (MCP) Integration

This pattern can be implemented using Anthropic's Model Context Protocol (MCP) for standardized tool integration across AI systems:

{
  "mcpServers": {
    "filesystem": {
      "command": "npx",
      "args": ["-y", "@modelcontextprotocol/server-filesystem", "./data"]
    },
    "sqlite": {
      "command": "uvx",
      "args": ["mcp-server-sqlite", "--db-path", "app_data.db"]
    }
  }
}

What Tool Integration Enables

- Real-time data access: AI queries current database state, not training data

- File system interaction: Read logs, write generated code, manage project files

- API integration: Fetch live data from external services and APIs

- System awareness: Access to current environment state and configuration

- Enhanced context: AI decisions based on actual system state, not assumptions

Complete Implementation

See examples/ai-tool-integration/ for:

- Full Python implementation with security controls

- Configuration examples and MCP integration

- Usage patterns and deployment guidelines

- Integration with AI Security Sandbox

Anti-pattern: Prompt-Only AI Development. Attempting to solve complex data analysis, system integration, or real-time problems using only natural language prompts without providing AI access to actual data sources, APIs, or system tools. This leads to hallucinated responses, outdated information, and inability to interact with real systems.

AI Issue Generation

Maturity: Intermediate

Description: Generate Kanban-optimized work items (4-8 hours max) from requirements using AI to ensure continuous flow with clear acceptance criteria and dependencies.

Related Patterns: AI Readiness Assessment, Specification Driven Development

Issue Generation Framework

graph TD

A[Requirements Document] --> B[AI Feature Analysis]

B --> C[Work Item Splitting]

C --> D{<8 hours?}

D -->|No| E[Split Further]

E --> C

D -->|Yes| F[Story Generation]

F --> G[Acceptance Criteria]

G --> H[Cycle Time Target]

H --> I[Dependency Mapping]

I --> J[Kanban Card Creation]

Examples

Input: High-level requirement

## Feature Request

"Users need to be able to reset their passwords via email"

AI Prompt for Kanban-Ready Task Generation

ai "Break down this feature into small Kanban tasks:

Feature: Password reset via email

Create work items following Kanban principles:

- Ensure each task can be completed in less than a day

- Clear titles and descriptions

- Specific acceptance criteria

- Labels (frontend, backend, testing)

- Dependencies between tasks

- If any task would take more than a day (8 hours), split it further

Output structured work items ready for your issue tracker."

Generated Kanban-Ready Work Items

{
  "title": "Backend: Implement password reset token generation",
  "description": "## Acceptance Criteria\n- [ ] Generate secure reset tokens\n- [ ] Set 15-minute expiration\n## Cycle Time Target\n4-8 hours",
  "labels": ["backend", "security", "kanban-ready"],
  "milestone": "Password Reset MVP"
}

Complete Implementation: See examples/ai-issue-generation/ai-prompts-for-epic-management.md for practical AI prompts that directly interface with issue tracking systems to create epics, manage subissue relationships, and automate progress tracking.

Kanban Epic Breakdown

ai "Break down this epic for optimal Kanban flow:

Epic: User Dashboard with Analytics

Kanban task requirements:

- Maximum 4-8 hours per task (1 day)

- If a task would take longer, split it

- Each task independently deployable

- Focus on flow over estimates

Break down into:

- Database migrations (each table/index separately)

- Individual API endpoints (one endpoint per task)

- UI components (one component per task)

- Test suites (by feature area)

- Security checks (per component)

- Performance optimizations (targeted improvements)

Goal: Continuous flow with rapid feedback cycles."

Epic-Subissue Relationship Management

AI-assisted establishment and maintenance of bidirectional links between epics and their constituent work items for comprehensive progress tracking and dependency management.

graph TD

A[Epic Creation] --> B[AI Decomposition]

B --> C[Subissue Generation]

C --> D[Cross-Linking]

D --> E[Progress Tracking]

E --> F[Epic Status Updates]

F --> G[Dependency Resolution]

G --> H[Completion Validation]

AI-Driven Cross-Linking Strategy

ai "Generate epic-subissue relationships for:

Epic: User Authentication System

Requirements:

- Create parent-child references in both directions

- Establish dependency chains between subissues

- Include progress tracking mechanisms

- Generate status rollup criteria

- Add completion validation rules

Output universal relationship structure:

- Reference IDs for linking

- Status propagation rules

- Dependency validation

- Progress calculation logic"

Generated Relationship Structure

{

"epic": {

"id": "AUTH-001",

"title": "Epic: User Authentication System",

"subissues": ["AUTH-002", "AUTH-003", "AUTH-004", "AUTH-005"],

"completion_criteria": "all_subissues_closed",

"status_calculation": "percentage_complete"

},

"subissues": [

{

"id": "AUTH-002",

"title": "Password validation service",

"parent_epic": "AUTH-001",

"blocks": [],

"blocked_by": [],

"completion_updates_epic": true

}

],

"relationships": {

"AUTH-002": {"depends_on": [], "enables": ["AUTH-005"]},

"AUTH-003": {"depends_on": [], "enables": ["AUTH-005"]},

"AUTH-004": {"depends_on": [], "enables": ["AUTH-005"]},

"AUTH-005": {"depends_on": ["AUTH-002", "AUTH-003", "AUTH-004"], "enables": []}

}

}

Automated Progress Tracking

# AI-generated progress monitoring

ai "Create progress tracking for epic AUTH-001:

Monitor subissue completion and update epic status:

- Calculate completion percentage

- Identify blocking dependencies

- Update epic description with progress

- Generate status reports

- Alert on timeline risks

Track these metrics:

- Subissues completed vs total

- Cycle time per subissue

- Dependency chain health

- Epic velocity trends"

Universal Linking Implementation

ai "Generate relationship linking strategy:

Common platform capabilities to leverage:

- Reference linking between work items

- Custom fields for parent/child relationships

- Labels for epic grouping

- Milestones for epic tracking

- Comments for progress updates

Create linking strategy that:

1. Works with platform limitations

2. Maintains bidirectional references

3. Survives tool migrations

4. Enables automated reporting"

Dynamic Epic Status Management

ai "Update epic AUTH-001 status based on subissue changes:

Current state:

- AUTH-002: Completed

- AUTH-003: In Progress (60% done)

- AUTH-004: Not Started

- AUTH-005: Blocked (waiting for AUTH-002, AUTH-003, AUTH-004)

Generate:

- Epic progress summary

- Updated epic description

- Risk assessment

- Next action recommendations

- Stakeholder communication"

Dependency Chain Validation

ai "Validate dependency relationships for epic AUTH-001:

Check for:

- Circular dependencies

- Missing prerequisite work

- Parallel work opportunities

- Critical path identification

- Resource contention

Output:

- Dependency graph validation

- Suggested scheduling optimizations

- Risk mitigation recommendations

- Parallel execution opportunities"

Work Item Integration

# Epic-aware work item creation
ai "Create linked work items maintaining epic relationships"

# Universal linking approach
epic_id="EPIC-123"
parent_reference="Relates to: ${epic_id}"

# Standard relationship format; original_description is assumed to hold the item's existing body.
# printf expands \n, whereas "\n" inside plain double quotes stays a literal backslash-n in bash.
item_description="$(printf '%s\n\n## Epic Relationship\nParent Epic: %s\nDependencies: [see epic for full context]\nProgress contributes to: Epic completion' "$original_description" "$epic_id")"

Anti-pattern: Orphaned Work Items. Creating subissues without establishing clear parent-child relationships, leading to epic progress that cannot be tracked and completed work that doesn't contribute to measurable outcomes.

Anti-pattern: Manual Relationship Maintenance. Relying on humans to manually update epic progress and maintain cross-references as subissues change, resulting in stale information and broken dependency chains when teams are under pressure.
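The status rollup and dependency checks that the prompts above ask for can also be computed directly from the generated relationship structure, which keeps epic status from going stale. A minimal sketch, assuming the `relationships` shape from the JSON example and a hypothetical status map keyed by subissue ID; treating only "Completed" as done is an assumption.

```python
def find_cycle(relationships: dict[str, dict]) -> list[str]:
    """Depth-first search over depends_on edges; returns one cycle, or [] if none."""
    state: dict[str, str] = {}  # absent = unvisited, "active" = on current path, "done"
    path: list[str] = []

    def visit(node: str) -> list[str]:
        state[node] = "active"
        path.append(node)
        for dep in relationships.get(node, {}).get("depends_on", []):
            if state.get(dep) == "active":  # back edge: dep is already on the path
                return path[path.index(dep):] + [dep]
            if dep in relationships and dep not in state:
                cycle = visit(dep)
                if cycle:
                    return cycle
        path.pop()
        state[node] = "done"
        return []

    for node in relationships:
        if node not in state:
            cycle = visit(node)
            if cycle:
                return cycle
    return []


def epic_progress(statuses: dict[str, str], relationships: dict[str, dict]) -> dict:
    """Roll subissue status up to the epic and validate its dependency chain."""
    done = {item for item, status in statuses.items() if status == "Completed"}
    blocked = {}
    for item, rel in relationships.items():
        waiting_on = sorted(set(rel.get("depends_on", [])) - done)
        if item not in done and waiting_on:
            blocked[item] = waiting_on
    referenced = {dep for rel in relationships.values() for dep in rel.get("depends_on", [])}
    return {
        "percent_complete": round(100 * len(done) / len(statuses), 1),
        "blocked": blocked,
        "missing_prerequisites": sorted(referenced - set(relationships)),
        "cycle": find_cycle(relationships),
    }
```

With the AUTH-001 state from the status example (only AUTH-002 completed), this reports 25.0 percent complete and AUTH-005 still waiting on AUTH-003 and AUTH-004, with no cycles or missing prerequisites.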

Kanban Work Item Splitting

ai "Apply Kanban principles to split these work items:

Kanban splitting rules:

- Maximum cycle time: 4-8 hours (1 day)

- If >8 hours, must split into smaller items

- Each item independently deployable

- Measure actual cycle time, not estimates

Historical cycle times for reference:

- Authentication token generation: 8 hours

- Email template setup: 4 hours

- Password reset form: 4 hours

- API endpoint creation: 6 hours

- Database migration: 3 hours per table

For each task:

1. Can it be completed in <8 hours?

2. If no, how to split it?

3. What's the smallest valuable increment?

Remember: Flow over estimates, rapid feedback over perfect planning."

"If a task takes more than one day, split it."

– Kanban Guide, Lean Kanban University

"Small, frequent deliveries expose issues early and keep teams aligned."

– Agile Alliance, Kanban Glossary
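The splitting rules and the anti-pattern examples can double as an automated check on AI-generated work items before they reach the board. A minimal sketch, assuming items shaped like the generated JSON earlier in this pattern (a Markdown checklist for acceptance criteria and a "## Cycle Time Target" line in the description); the vague-title phrases are illustrative heuristics, not a complete list.

```python
import re
from typing import Optional

MAX_CYCLE_HOURS = 8  # "If >8 hours, must split into smaller items"
VAGUE_PHRASES = ("fix the", "make the", "better", "some tests")  # heuristic, from the ❌ examples


def cycle_time_upper_bound(description: str) -> Optional[int]:
    """Read '## Cycle Time Target' followed by 'N hours' or 'N-M hours'; return the upper bound."""
    match = re.search(r"## Cycle Time Target\n(\d+)(?:-(\d+))? hours", description)
    if match is None:
        return None
    return int(match.group(2) or match.group(1))


def review_work_item(item: dict) -> list[str]:
    """Return the reasons an item is not Kanban-ready; an empty list means ready."""
    problems = []
    description = item.get("description", "")
    hours = cycle_time_upper_bound(description)
    if hours is None:
        problems.append("no cycle time target")
    elif hours > MAX_CYCLE_HOURS:
        problems.append(f"cycle time up to {hours}h exceeds {MAX_CYCLE_HOURS}h; split it")
    if "- [ ]" not in description:
        problems.append("no acceptance criteria checklist")
    if any(phrase in item.get("title", "").lower() for phrase in VAGUE_PHRASES):
        problems.append("vague title")
    return problems
```

Items that come back with problems can be sent to the AI again with the splitting prompt rather than fixed by hand.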

Anti-pattern: Vague Issue Generation. Creating generic tasks without specific acceptance criteria, proper sizing, or clear dependencies leads to scope creep and estimation errors.

Anti-pattern Examples:

❌ "Fix the login page"

❌ "Make the dashboard better"

❌ "Add some tests"

✅ "Add OAuth 2.0 token validation endpoint (8 hours)"

✅ "Implement dashboard metric WebSocket connection (6 hours)"

✅ "Write unit tests for user service login method (4 hours)"

Development patterns provide tactical approaches for day-to-day AI-assisted coding workflows, focusing on quality, maintainability, and team collaboration.

Specification Driven Development

Maturity: Intermediate

Description: Use executable specifications to guide AI code generation with clear acceptance criteria before implementation.

Core Principle: Precision Enables Productivity

SpecDriven AI combines three key elements:

- Machine-readable specifications with unique identifiers and authority levels

- Rigorous Test-Driven Development with coverage tracking and automated validation

- AI-powered implementation with persistent context through structured specifications

Key Innovation: Authority Level System

Specifications use authority levels to resolve conflicts and establish precedence:

- `authority=system`: Core business logic and security requirements (highest precedence)

- `authority=platform`: Infrastructure and technical architecture decisions

- `authority=feature`: User interface and experience requirements (lowest precedence)

When requirements conflict, higher authority levels take precedence, enabling clear decision-making for AI implementation.
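The precedence rule can be made mechanical so an AI agent (or a reviewer) resolves conflicts the same way every time. A minimal sketch; the `Requirement` type and `resolve_conflict` function are hypothetical, and refusing to pick between two requirements at the same authority level is an added assumption, since the rule above only orders different levels.

```python
from dataclasses import dataclass

# Higher number wins: system > platform > feature.
AUTHORITY_RANK = {"system": 3, "platform": 2, "feature": 1}


@dataclass(frozen=True)
class Requirement:
    spec_id: str    # anchor ID from the spec, e.g. "cli_requirements"
    authority: str  # "system", "platform", or "feature"
    text: str


def resolve_conflict(conflicting: list[Requirement]) -> Requirement:
    """Return the requirement with the highest authority; escalate ties to a human."""
    ranked = sorted(conflicting, key=lambda r: AUTHORITY_RANK[r.authority], reverse=True)
    if len(ranked) > 1 and ranked[0].authority == ranked[1].authority:
        raise ValueError(f"conflict at authority={ranked[0].authority} needs a human decision")
    return ranked[0]


# Usage: a hypothetical feature-level request versus a platform-level rule.
winner = resolve_conflict([
    Requirement("quick_paste", "feature", "Accept ARNs without format checks for faster input"),
    Requirement("input_validation", "platform", "Validate resource ARN format before policy generation"),
])
print(winner.spec_id)  # input_validation
```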

Related Patterns: AI Developer Lifecycle, AI Tool Integration, Comprehensive AI Testing Strategy, Observable AI Development

SpecDriven AI Workflow

graph TD

A[Machine-Readable Specifications<br/>with Authority Levels] --> B[Coverage Tracking<br/>& Validation]

B --> C[AI Implementation<br/>with Ephemeral Prompts]

C --> D[Automated Testing<br/>& Compliance Check]

D --> E{Specs Pass?}

E -->|No| F[Refine Prompts<br/>Not Specs]

F --> C

E -->|Yes| G[Coverage Report<br/>& Deployment]

G --> H[Specification Persistence<br/>for Regression]

style A fill:#e1f5e1

style B fill:#e1f5e1

style H fill:#e1f5e1

style C fill:#ffe6e6

style F fill:#ffe6e6

Core Implementation

Machine-Readable Specification with Authority Levels

# IAM Policy Generator Specification {#iam_policy_gen}

## CLI Requirements {#cli_requirements authority=system}

The system MUST provide a command-line interface that:

- Accepts policy type via `--policy-type` flag

- Validates input parameters against AWS IAM constraints

- Generates syntactically correct IAM policy JSON [^test_iam_syntax]

- Returns exit code 0 for success, 1 for validation errors

## Input Validation {#input_validation authority=platform}

The system MUST:

- Reject invalid AWS service names with clear error messages

- Validate resource ARN format before policy generation

- Implement rate limiting for API calls [^test_rate_limit]

[^test_iam_syntax]: tests/test_iam_policy_syntax.py

[^test_rate_limit]: tests/test_rate_limiting.py

Automated Coverage Tracking

# Run specification compliance validation

pytest --cov=src --cov-report=html --spec-coverage

python spec_validator.py --check-coverage --authority-conflicts

# Output shows specification coverage

# Specification Coverage Report:

# ✅ cli_requirements: 100% (3/3 tests linked)

# ✅ input_validation: 85% (6/7 tests linked)

# ⚠️ Missing test: [^test_malformed_arn] in line 23

Tooling Integration

# Pre-commit hook validates specification compliance

# .pre-commit-config.yaml

repos:
  - repo: local
    hooks:
      - id: spec-coverage
        name: Specification Coverage Check
        entry: python spec_validator.py --check-coverage
        language: python
        pass_filenames: false

# Git workflow with specification traceability

git commit -m "feat: implement rate limiting [spec:rl2c]

Implements rate limiting requirement from input_validation

section. Closes specification anchor #failed_auth.

Coverage: 94% (31/33 spec requirements covered)"

Key Benefits

- Authority-based conflict resolution prevents requirement ambiguity

- Automated coverage tracking ensures no specifications are missed

- AI tool independence through persistent, machine-readable requirements

- Precise traceability from specification anchors to test implementations

- Living documentation that evolves with the system

Complete Implementation

See examples/specification-driven-development/ for:

- Complete IAM Policy Generator implementation

- spec_validator.py tool for automated compliance checking

- Pre-commit hooks and Git workflow integration

- Full specification examples with authority levels

- Coverage tracking and reporting tools

Anti-pattern: Implementation-First AI

Writing code with AI first and retrofitting tests afterward produces tests that mirror the implementation rather than specify behavior.

Anti-pattern: Prompt Hoarding

Saving collections of prompts as if they were specifications. Prompts are implementation details; specifications are behavioral contracts.

Maturity: Beginner

Description: Generate explicit implementation plans before writing code to improve quality, reduce iterations, and enable better collaboration.

Related Patterns: Specification Driven Development, Progressive AI Enhancement, AI Choice Generation

Core Principle: Think Before You Code

Modern AI coding tools provide planning capabilities that allow developers to iterate on implementation approaches before writing any code. This pattern leverages these planning features to:

- Reduce implementation iterations by validating approach upfront

- Improve code quality through structured thinking

- Enable better collaboration via shareable plans

- Minimize context switching between planning and execution

Planning Workflow

graph TD

A[Problem Statement] --> B[Generate Initial Plan]

B --> C[Review & Refine Plan]

C --> D{Plan Approved?}

D -->|No| E[Iterate on Plan]

E --> C

D -->|Yes| F[Execute Implementation]

F --> G[Validate Against Plan]

G --> H{Meets Plan?}

H -->|No| I[Adjust Implementation]

I --> F

H -->|Yes| J[Complete]

style A fill:#e1f5e1

style C fill:#e1f5e1

style F fill:#ffe6e6

style J fill:#e1f5e1

Core Implementation

1. Plan Generation Phase

# Example planning prompt structure

CONTEXT: "Building user authentication for SaaS application"

REQUIREMENTS: "JWT tokens, password reset, rate limiting"

CONSTRAINTS: "Must integrate with existing user table, 2-hour time limit"

REQUEST: "Generate step-by-step implementation plan with:

- Database changes needed

- API endpoints to create/modify

- Security considerations

- Testing approach

- Rollback strategy"

2. Plan Review and Iteration

# Generated Plan Review Checklist

### Technical Approach

- [ ] Database schema changes are backwards compatible

- [ ] API design follows existing conventions

- [ ] Security measures address the OWASP Top 10

- [ ] Performance impact is minimal

### Implementation Strategy

- [ ] Tasks are broken into deployable increments

- [ ] Dependencies are clearly identified

- [ ] Rollback plan is feasible

- [ ] Testing strategy covers edge cases

### Resource Requirements

- [ ] Time estimate is realistic

- [ ] Required permissions are available

- [ ] External dependencies are identified

3. Execution with Plan Validation

# During implementation, validate against plan

echo "✓ Step 1: Created user_sessions table (matches plan)"

echo "✓ Step 2: Added JWT service (matches plan)"

echo "⚠ Step 3: Rate limiting - using Redis instead of in-memory (plan deviation documented)"

Tool-Agnostic Planning Approach

Planning Session Structure

## 1. Problem Definition (2-3 sentences)

Clear statement of what needs to be built and why

## 2. Constraints & Requirements

- Technical constraints (existing systems, performance, security)

- Business requirements (timeline, user experience, compliance)

- Resource constraints (team size, expertise, budget)

## 3. Implementation Options

- Option A: [Brief description, pros/cons, time estimate]

- Option B: [Brief description, pros/cons, time estimate]

- Recommended: [Choice with justification]

## 4. Detailed Plan

- [ ] Step 1: [Concrete action with acceptance criteria]

- [ ] Step 2: [Concrete action with acceptance criteria]

- [ ] Step 3: [Concrete action with acceptance criteria]

## 5. Validation Strategy

How to verify that each step works and that the overall solution meets requirements

When to Use Plan-First Development

- Complex Features: Multi-step implementations requiring coordination

- Unknown Domains: Working in unfamiliar technologies or business areas

- Team Collaboration: When multiple developers need to understand the approach

- High-Stakes Changes: Security, performance, or business-critical modifications

- Learning Contexts: When using AI to explore new implementation approaches

Complete Implementation

See examples/ai-plan-first-development/ for:

- Tool-specific planning examples (Claude Code, Cursor)

- Planning templates and checklists

- Integration with existing development workflows

- Plan validation and iteration strategies
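The "Validate Against Plan" step can be partly automated by recording each implemented step and diffing it against the approved plan. The following is a minimal sketch. Step names and the convention that a deviation carries a written reason are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PlanCheck:
    matched: list = field(default_factory=list)     # done exactly as planned
    deviations: dict = field(default_factory=dict)  # step -> documented reason
    missing: list = field(default_factory=list)     # planned but not done
    unplanned: list = field(default_factory=list)   # done but never planned


def validate_against_plan(plan_steps, executed):
    """Compare an approved plan with what was actually implemented.

    plan_steps: ordered step names from the approved plan.
    executed: step name -> None (done as planned) or a string documenting
              why the implementation deviated.
    """
    result = PlanCheck()
    for step in plan_steps:
        if step not in executed:
            result.missing.append(step)
        elif executed[step] is None:
            result.matched.append(step)
        else:
            result.deviations[step] = executed[step]
    result.unplanned = [s for s in executed if s not in plan_steps]
    return result


plan = ["create user_sessions table", "add JWT service", "add rate limiting", "write tests"]
done = {
    "create user_sessions table": None,
    "add JWT service": None,
    "add rate limiting": "Redis instead of in-memory",
}
check = validate_against_plan(plan, done)
print(check.missing)  # ['write tests']
```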

Anti-pattern: Blind Code Generation

Jumping straight to code generation without understanding the problem scope, constraints, or implementation options, which leads to over-engineered or incorrect solutions.

Anti-pattern: Analysis Paralysis

Spending excessive time refining plans without moving to implementation, missing opportunities for rapid feedback and iterative improvement.

Maturity: Beginner

Description: Build complex features through small, deployable iterations rather than big-bang generation.

Related Patterns: AI Plan-First Development, AI Developer Lifecycle, Constraint-Based AI Development, AI Choice Generation, AI-Driven Architecture Design

Examples

Building authentication progressively:

# Day 1: Minimal login

"Create POST /login that returns 200 for admin/admin, 401 otherwise"

→ Deploy

# Day 2: Real password check

"Modify login to check passwords against users table. Keep existing API."

→ Deploy

# Day 3: Add security

"Add bcrypt hashing to login. Support both hashed and plain passwords temporarily."

→ Deploy

# Day 4: Modern tokens

"Replace session with JWT. Keep session endpoint for backward compatibility."

→ Deploy

When to Use Progressive AI Enhancement

- MVP Development: When you need to get to market quickly with minimal features

- Uncertain Requirements: When requirements are likely to change based on user feedback

- Risk Mitigation: When you want to reduce the risk of large, complex implementations

- Continuous Delivery: When you have automated deployment and want rapid iterations

- Learning Projects: When the team is learning new technologies or domains
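Day 3 of the progressive example above ("support both hashed and plain passwords temporarily") is the step most likely to go wrong. One common approach is lazy migration: verify a legacy plaintext value once, then return a hash the caller can write back. The sketch below uses the standard library's PBKDF2 (`hashlib.pbkdf2_hmac`) as a dependency-free stand-in for bcrypt. The `pbkdf2$salt$hash` storage format and the function names are assumptions.

```python
import hashlib
import hmac
import os

PREFIX = "pbkdf2$"     # marks values already migrated to hashed storage (assumed format)
ITERATIONS = 600_000


def hash_password(password: str) -> str:
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return f"{PREFIX}{salt.hex()}${digest.hex()}"


def check_password(password: str, stored: str):
    """Return (is_valid, upgraded_value_or_None).

    Hashed values are verified normally. Legacy plaintext values are compared
    in constant time; on success a hashed replacement is returned so the
    caller can overwrite the row (lazy migration).
    """
    if stored.startswith(PREFIX):
        salt_hex, digest_hex = stored[len(PREFIX):].split("$")
        digest = hashlib.pbkdf2_hmac("sha256", password.encode(),
                                     bytes.fromhex(salt_hex), ITERATIONS)
        return hmac.compare_digest(digest.hex(), digest_hex), None
    ok = hmac.compare_digest(password.encode(), stored.encode())
    return ok, (hash_password(password) if ok else None)
```

Once every row carries the prefix, the plaintext branch can be deleted in the next small deploy, which keeps the enhancement progressive.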

Anti-pattern: Big Bang Generation

Asking AI to "create a complete user management system" results in 5000 lines of coupled, untested code that takes days to review and debug.

Maturity: Intermediate

Description: Generate multiple implementation options for exploration and comparison rather than accepting the first AI solution.

Related Patterns: AI Plan-First Development, Progressive AI Enhancement, Context Window Optimization

Multi-Option Implementation Comparison

# Generate and compare multiple implementation approaches

ai "Generate 3 different authentication approaches for user management:

Option 1 (Performance-focused):

- In-memory JWT with Redis caching

- Sub-10ms token validation, horizontal scaling ready

- Trade-off: Memory intensive, Redis dependency

- Best for: High-traffic APIs (>10k req/sec)

Option 2 (Security-focused):

- Database-backed sessions with audit trail

- Immediate revocation, multi-factor authentication

- Trade-off: Higher latency, complex session management

- Best for: Banking, healthcare, government

Option 3 (Simplicity-focused):

- Standard JWT with established libraries

- Well-documented patterns, minimal custom code

- Trade-off: Fewer optimization opportunities

- Best for: Startups, MVPs, small teams

For each option provide:

- 30-minute working prototype

- Performance benchmarks (response time, memory usage)

- Implementation complexity assessment (LOC, dependencies)

- Specific trade-offs and when to choose this approach

Recommend best option based on project constraints and team experience."

Anti-pattern: Analysis Paralysis

Generating too many choices or spending more time evaluating options than implementing them.
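One lightweight guard against that is to score each prototype against a small, fixed set of weighted criteria and take the top result. The criteria, weights, and scores below are illustrative team judgments, not benchmarks.

```python
def rank_options(options, weights):
    """Rank implementation options by a weighted score.

    options: option name -> {criterion: score from 1 (poor) to 5 (good)}
    weights: criterion -> relative importance for this project
    """
    total = sum(weights.values())
    scores = {
        name: sum(w * crit[c] for c, w in weights.items()) / total
        for name, crit in options.items()
    }
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Scores are hypothetical judgments made after reviewing each prototype.
options = {
    "performance": {"performance": 5, "security": 3, "simplicity": 2},
    "security":    {"performance": 2, "security": 5, "simplicity": 2},
    "simplicity":  {"performance": 3, "security": 3, "simplicity": 5},
}
startup_weights = {"performance": 1, "security": 2, "simplicity": 3}
print(rank_options(options, startup_weights)[0])  # ('simplicity', 4.0)
```

Fixing the criteria and weights before the prototypes exist keeps the comparison from growing each time a new option appears.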

Maturity: Advanced

Description: Run multiple AI agents concurrently on isolated tasks or environments to maximize development speed and exploration.

Related Patterns: AI Workflow Orchestration, Atomic Task Decomposition, AI Security Sandbox

Agent Coordination Lifecycle

sequenceDiagram

participant M as Manager

participant A1 as Auth Agent

participant A2 as API Agent

participant A3 as Test Agent

participant SM as Shared Memory

participant CS as Conflict Scanner

M->>A1: Start (OAuth2 Task)

M->>A2: Start (REST API Task)

M->>A3: Start (Test Suite Task)

par Parallel Development

A1->>A1: Implement OAuth2 Flow

A1->>SM: Record Learning

and

A2->>A2: Implement REST Endpoints

A2->>SM: Record API Patterns

and

A3->>A3: Generate Integration Tests

A3->>SM: Record Test Patterns

end

SM->>CS: Trigger Conflict Analysis

CS->>M: Report Conflicts/All Clear

M->>M: Merge Components & Cleanup

Core Implementation Approaches

# Container-based isolation

# docker-compose.parallel-agents.yml

services:
  agent-auth:
    image: ai-dev-environment:latest
    volumes:
      - ./feature-auth:/workspace:rw
      - shared-memory:/shared:ro
    environment:
      - AGENT_ID=auth-feature
      - TASK_ID=implement-oauth2
    networks:
      - agent-network
  agent-api:
    image: ai-dev-environment:latest
    volumes:
      - ./feature-api:/workspace:rw
      - shared-memory:/shared:ro
    environment:
      - AGENT_ID=api-feature
      - TASK_ID=implement-rest-endpoints
volumes:
  shared-memory:
    driver: local
networks:
  agent-network:
    driver: bridge
    internal: true

Git Worktree Parallelization

# Create isolated branches for parallel work
git worktree add -b agent/auth ../agent-auth
git worktree add -b agent/api ../agent-api
git worktree add -b agent/tests ../agent-tests

# Launch agents in parallel (GNU parallel reads one command per line from stdin)
parallel --jobs 3 << EOF
cd ../agent-auth && ai-agent implement-oauth2
cd ../agent-api && ai-agent implement-rest-endpoints
cd ../agent-tests && ai-agent generate-integration-tests
EOF

# Automated conflict detection: trial-merge each local agent branch, then undo it
for branch in $(git branch --list 'agent/*' --format='%(refname:short)'); do
  git checkout -b temp-merge main
  if git merge --no-commit --no-ff "$branch"; then
    echo "✓ No conflicts in $branch"
  else
    echo "⚠ Conflicts detected in $branch - using AI resolution"
    ai-agent resolve-conflicts --branch "$branch"
  fi
  git merge --abort 2>/dev/null || true   # leave a clean index so checkout succeeds
  git checkout main && git branch -D temp-merge
done

# Cleanup (git worktree remove takes one worktree per call)
for wt in ../agent-auth ../agent-api ../agent-tests; do
  git worktree remove "$wt"
done

Shared Memory & Coordination

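# Agent coordination with shared knowledge (POSIX only: uses fcntl file locks)
import fcntl
import json
from pathlib import Path

class AgentMemory:
    def __init__(self, path):
        self.path = Path(path)                       # JSON file holding all agents' learnings
        self.lock_path = self.path.with_suffix(".lock")

    def record_learning(self, agent_id, key, value):
        """Learning capture that is safe across threads and agent processes"""
        with open(self.lock_path, "a") as lock:
            fcntl.flock(lock, fcntl.LOCK_EX)         # released when the lock file closes
            memory = json.loads(self.path.read_text()) if self.path.exists() else {}
            memory.setdefault(agent_id, {})[key] = value
            self.path.write_text(json.dumps(memory, indent=2))

    def get_shared_knowledge(self):
        """Consolidated knowledge from all agents"""
        with open(self.lock_path, "a") as lock:
            fcntl.flock(lock, fcntl.LOCK_SH)         # shared lock: readers don't block each other
            return json.loads(self.path.read_text()) if self.path.exists() else {}

# Task definition
tasks = {
    "auth-service": {
        "agent_count": 1,
        "isolation": "container",
        "dependencies": [],
        "instructions": "Implement OAuth2 with JWT tokens",
    },
    "api-endpoints": {
        "agent_count": 2,
        "isolation": "worktree",
        "dependencies": ["auth-service"],
        "instructions": "REST endpoints: user mgmt + CRUD",
    },
}

Complete Implementation: See examples/parallelized-ai-agents/ for: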

- Full Docker isolation and coordination setup

- Git worktree management and conflict resolution

- Shared memory system with file locking

- Emergency shutdown and safety monitoring

- Task distribution and dependency management
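The Conflict Scanner in the lifecycle diagram could start as something as simple as flagging files touched by more than one agent branch before any merge is attempted. A minimal sketch follows, assuming the per-branch file lists come from `git diff --name-only main...<branch>`. A shared file does not guarantee a textual conflict, and agents can still conflict semantically without touching the same file.

```python
from itertools import combinations


def find_overlaps(changed_files):
    """Map each pair of agent branches to the files both of them modified.

    changed_files: branch name -> iterable of paths touched by that agent.
    """
    overlaps = {}
    for (a, files_a), (b, files_b) in combinations(sorted(changed_files.items()), 2):
        shared = set(files_a) & set(files_b)
        if shared:
            overlaps[(a, b)] = sorted(shared)
    return overlaps


changes = {
    "agent/auth": ["src/auth.py", "src/config.py"],
    "agent/api": ["src/api.py", "src/config.py"],
    "agent/tests": ["tests/test_api.py"],
}
print(find_overlaps(changes))  # {('agent/api', 'agent/auth'): ['src/config.py']}
```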

When to Use Parallel Agents

- Complex features requiring multiple specialized implementations

- Time-critical projects where speed trumps coordination overhead

- Exploration phases testing multiple approaches simultaneously

- Large teams with strong DevOps and coordination processes

Source: AI Native Dev - How to Parallelize AI Coding Agents

Anti-pattern: Uncoordinated Parallel Execution

Running multiple agents without isolation, shared memory, or conflict resolution leads to race conditions, lost work, and system instability.

Maturity: Intermediate

Description: Capture successful patterns and failed attempts as versioned knowledge for future AI sessions to accelerate development and avoid repeating mistakes.

Related Patterns: Rules as Code, AI-Driven Traceability

Core Implementation

# Initialize organized knowledge structure

./knowledge-capture.sh --init

# Capture successful patterns with success rates

./knowledge-capture.sh --success \

--domain "auth" \

--pattern "JWT Auth" \

--prompt "JWT with RS256, 15min access, httpOnly cookie" \

--success-rate "95%"

# Document failures to avoid repeating

./knowledge-capture.sh --failure \

--domain "auth" \

--bad-prompt "Make auth secure" \

--problem "Too vague → AI over-engineers" \

--solution "Specify exact JWT requirements"

Complete Implementation: See examples/ai-knowledge-persistence/ for:

- Automated knowledge capture and maintenance scripts

- Structured templates for patterns, failures, and gotchas

- Success rate tracking and stale knowledge detection

- Team knowledge sharing and export tools

Anti-pattern: Knowledge Hoarding

Creating extensive knowledge bases that become maintenance burdens instead of accelerating development through selective, actionable knowledge capture.

Why it's problematic:

- Knowledge bases become outdated and misleading

- Developers spend more time documenting than developing

- Overly detailed entries are ignored in favor of quick experimentation

- Knowledge becomes siloed and not easily discoverable

Signs of Knowledge Hoarding:

- Knowledge files haven't been accessed in months

- Entries are extremely detailed but rarely referenced

- Multiple overlapping knowledge bases with conflicting information

- Knowledge capture takes longer than the original development work

Instead, focus on:

- Capture only high-impact patterns (>80% success rate)

- Document failures that wasted significant time (>30 minutes)

- Keep entries concise and immediately actionable

- Review and prune knowledge quarterly
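These criteria are simple enough to encode, so a capture script can apply them automatically. The following is a sketch in which `KnowledgeEntry` and its fields are hypothetical and "quarterly" is approximated as 90 days.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class KnowledgeEntry:
    title: str
    kind: str                  # "success" or "failure"
    success_rate: float = 0.0  # successes only, 0.0-1.0
    minutes_lost: int = 0      # failures only
    last_reviewed: date = field(default_factory=date.today)


def should_capture(entry):
    """Keep only high-impact patterns and costly failures."""
    if entry.kind == "success":
        return entry.success_rate > 0.80
    return entry.minutes_lost > 30


def is_stale(entry, today, max_age_days=90):
    """Flag entries not reviewed within roughly a quarter."""
    return today - entry.last_reviewed > timedelta(days=max_age_days)


jwt = KnowledgeEntry("JWT Auth", "success", success_rate=0.95)
vague = KnowledgeEntry("Make auth secure", "failure", minutes_lost=45)
print(should_capture(jwt), should_capture(vague))  # True True
```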

# Good: Focused, actionable knowledge

echo "### JWT Auth Success" >> .ai/knowledge/auth.md

echo "Prompt: 'JWT with RS256, 15min expiry' - 95% success" >> .ai/knowledge/auth.md

# Bad: Exhaustive documentation

echo "### Complete JWT Implementation Guide" >> .ai/knowledge/auth.md

echo "Full JWT specification with 47 configuration options..." >> .ai/knowledge/auth.md

Maturity: Beginner

Description: Give AI specific constraints to prevent over-engineering and ensure focused solutions.

Related Patterns: Progressive AI Enhancement, Human-AI Handoff Protocol, AI Choice Generation

Examples

Bad: "Create user service"

Good: "Create user service: <100 lines, 3 methods max, only bcrypt dependency"

Bad: "Add caching"

Good: "Add caching using Map, max 1000 entries, LRU eviction"

Bad: "Improve performance"

Good: "Reduce p99 latency to <50ms without new dependencies"
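Constraints stated this precisely can also be checked mechanically after generation. The sketch below uses Python's `ast` module to verify line count, methods per class, and imported packages in a Python output. The function name and its treatment of every import (stdlib included) as a dependency are assumptions.

```python
import ast


def check_constraints(source, max_lines, max_methods, allowed_imports):
    """Return human-readable violations of prompt constraints in generated code."""
    violations = []
    line_count = len(source.strip().splitlines())
    if line_count > max_lines:
        violations.append(f"{line_count} lines > {max_lines}")

    imported = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.ClassDef):
            methods = [n for n in node.body
                       if isinstance(n, (ast.FunctionDef, ast.AsyncFunctionDef))]
            if len(methods) > max_methods:
                violations.append(f"{node.name} has {len(methods)} methods > {max_methods}")
        elif isinstance(node, ast.Import):
            imported.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            imported.add(node.module.split(".")[0])

    extra = sorted(imported - set(allowed_imports))
    if extra:
        violations.append(f"disallowed imports: {extra}")
    return violations


generated = '''
import bcrypt

class UserService:
    def create(self, name, pw):
        return {"name": name, "hash": bcrypt.hashpw(pw, bcrypt.gensalt())}

    def verify(self, user, pw):
        return bcrypt.checkpw(pw, user["hash"])
'''
# "<100 lines, 3 methods max, only bcrypt dependency"
print(check_constraints(generated, 100, 3, {"bcrypt"}))  # []
```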

Anti-pattern: Unconstrained Generation

Giving AI vague instructions like "make it better" or "add features" leads to over-engineered solutions that are hard to maintain and review.

Anti-pattern: Constraint Overload

Adding too many constraints ("use exactly 50 lines, 2 methods, no dependencies, 100% test coverage, sub-10ms response time") paralyzes AI decision-making and produces suboptimal solutions.

Maturity: Intermediate

Description: Break complex features into atomic, independently implementable tasks for parallel AI agent execution.

Related Patterns: AI Workflow Orchestration, Progressive AI Enhancement, AI Issue Generation

Atomic Task Criteria

graph TD

A[Feature Requirement] --> B[Task Analysis]

B --> C{Atomic Task Check}

C -->|✓ Independent| D[Can run in parallel]

C -->|✓ <2 hours| E[Rapid feedback cycle]

C -->|✓ Clear I/O| F[Testable interface]

C -->|✓ No shared state| G[Conflict-free]

C -->|✗ Fails check| H[Split Further]

H --> B

D --> I[Ready for Agent]

E --> I

F --> I

G --> I

Core Decomposition Process

# Feature: User Authentication System

# Bad: Monolithic task

❌ "Implement complete user authentication with JWT, password hashing, rate limiting, and email verification"

# Good: Atomic breakdown with AI validation

ai_decompose "Break down user authentication into atomic tasks:

Task 1: Password validation service (1.5h)

- Input: plain text password, validation rules

- Output: validation result object

- Dependencies: None (pure function)

Task 2: JWT token generation service (1h)

- Input: user ID, role, expiration config

- Output: signed JWT token

- Dependencies: None (crypto operations only)

Task 3: Rate limiting middleware (2h)

- Input: request metadata, current request count, rate limit config

- Output: allow/deny decision

- Dependencies: None (counter storage injected by the caller, so the decision logic stays pure)

Task 4: Login endpoint integration (1h)

- Input: credentials, services from tasks 1-3

- Output: HTTP response with token/error

- Dependencies: Tasks 1-3 (integration only)"

# Validate atomicity

ai_task_validator "Check each task for:

1. <2 hour completion time

2. No shared mutable state

3. Clear input/output contracts

4. Testable in isolation

5. No circular dependencies"

Agent Assignment & Coordination

# .ai/task-assignment.yml

authentication_feature:
  parallel_tasks:
    - id: "auth-001"  # Password validation
      agent: "backend-specialist-1"
      estimated_hours: 1.5
      dependencies: []
    - id: "auth-002"  # JWT generation
      agent: "security-specialist"
      estimated_hours: 1
      dependencies: []
    - id: "auth-003"  # Rate limiting
      agent: "backend-specialist-2"
      estimated_hours: 2
      dependencies: []
  integration_tasks:
    - id: "auth-004"  # Login endpoint
      agent: "integration-specialist"
      estimated_hours: 1
      dependencies: ["auth-001", "auth-002", "auth-003"]

Task Contract Validation

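# Ensure tasks meet atomic criteria
from dataclasses import dataclass, field
from typing import List

@dataclass
class TaskContract:
    task_id: str
    estimated_hours: float = 0.0
    inputs: dict = field(default_factory=dict)
    outputs: dict = field(default_factory=dict)
    side_effects: list = field(default_factory=list)

    def has_clear_io_contract(self) -> bool:
        # Both inputs and outputs must be declared
        return bool(self.inputs) and bool(self.outputs)

    def validate_atomic(self) -> bool:
        return all([
            len(self.side_effects) == 0,   # No side effects
            self.estimated_hours <= 2,     # Rapid completion
            self.has_clear_io_contract(),  # Testable interface
        ])

class PasswordRules:  # placeholder for the project's password rules type
    pass

# Example validation
task = TaskContract("auth-001", estimated_hours=1.5)
task.inputs = {"password": str, "rules": PasswordRules}
task.outputs = {"is_valid": bool, "errors": List[str]}
assert task.validate_atomic()  # ✓ Passes atomic criteria

Complete Implementation: See examples/atomic-task-decomposition/ for: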

- Contract validation system with automated checking

- Task dependency resolution and scheduling

- Parallel execution coordination and monitoring

- Agent assignment and resource management
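Given a task file like `.ai/task-assignment.yml`, dependencies determine which tasks can run concurrently. Here is a minimal scheduling sketch using the standard library's `graphlib.TopologicalSorter` (Python 3.9+), which groups tasks into waves. The input shape (task id mapped to its dependency list) mirrors the YAML above but is an assumption.

```python
from graphlib import TopologicalSorter  # Python 3.9+


def schedule_waves(tasks):
    """Group tasks into waves; all tasks in one wave can run in parallel.

    tasks: task id -> list of task ids it depends on.
    Raises ValueError for unknown dependencies, and graphlib.CycleError
    (a ValueError subclass) for circular ones.
    """
    unknown = {d for deps in tasks.values() for d in deps} - set(tasks)
    if unknown:
        raise ValueError(f"unknown dependencies: {sorted(unknown)}")
    sorter = TopologicalSorter(tasks)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # mark the wave finished so dependents become ready
    return waves


assignment = {
    "auth-001": [], "auth-002": [], "auth-003": [],
    "auth-004": ["auth-001", "auth-002", "auth-003"],
}
print(schedule_waves(assignment))
# [['auth-001', 'auth-002', 'auth-003'], ['auth-004']]
```

Rejecting unknown dependencies up front matters because `TopologicalSorter` would otherwise silently add them as nodes with no work attached.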

When to Use Atomic Decomposition

- Parallel Agent Implementation: Multiple AI agents working simultaneously

- Complex Feature Development: Large features benefiting from parallel work

- Time-Critical Projects: Speed through parallelization essential

- Risk Mitigation: Reduce blast radius of individual task failures

Anti-pattern: Pseudo-Atomic Tasks

Creating tasks that appear independent but secretly share state, require specific execution order, or have hidden dependencies on other concurrent work.

Anti-pattern: Over-Decomposition

Breaking tasks so small that coordination overhead exceeds the benefits of parallelization, leading to more complexity than value.

Maturity: Intermediate

Description: Design systems with comprehensive logging, tracing, and debugging capabilities that enable AI to understand system behavior and diagnose issues effectively.

Related Patterns: AI Developer Lifecycle, AI Tool Integration, Comprehensive AI Testing Strategy, AI-Driven Traceability

Core Implementation

# AI-friendly structured logging
import json
import logging
from datetime import datetime, timezone

def log_operation(operation, **context):
    logging.info(json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),  # utcnow() is deprecated
        "operation": operation,
        "context": context,
    }, default=str))  # default=str keeps values like Decimal or UUID loggable

# Observable business logic with comprehensive context
# (validate_order, charge_payment, and PaymentError are application-specific)
def process_order(order):
    log_operation("order_start", order_id=order.id, total=order.total)
    try:
        validate_order(order)
        log_operation("validation_success", order_id=order.id)
        result = charge_payment(order)
        log_operation("payment_success", order_id=order.id, transaction_id=result.id)
        return result
    except PaymentError as e:
        log_operation("payment_error", order_id=order.id, error=str(e), code=e.code)
        raise

Complete Implementation: See examples/observable-ai-development/ for:

- Full structured logging framework with correlation IDs

- Performance monitoring decorators and utilities

- AI-friendly debug tools and log analysis scripts

- Integration examples for e-commerce and authentication systems
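Correlation IDs let AI (and humans) stitch together every log line from one request. The following is a minimal sketch using the standard library's `contextvars`, written as a variant of `log_operation` above. The `correlation_id` field, the `start_request` helper, and returning the record (only to make it easy to inspect) are hypothetical choices.

```python
import contextvars
import json
import logging
import uuid
from datetime import datetime, timezone

# Holds the current request's correlation ID; contextvars keeps it isolated
# per thread and per asyncio task.
correlation_id = contextvars.ContextVar("correlation_id", default=None)
logger = logging.getLogger("app")


def start_request():
    """Assign a fresh correlation ID at the entry point of a request."""
    cid = uuid.uuid4().hex
    correlation_id.set(cid)
    return cid


def log_operation(operation, **context):
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "correlation_id": correlation_id.get(),
        "operation": operation,
        "context": context,
    }
    logger.info(json.dumps(record, default=str))
    return record
```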

Anti-pattern: Black Box Development

Building systems with minimal observability that provide insufficient context for AI to understand system behavior, diagnose issues, or suggest improvements.

Why it's problematic: AI cannot debug systems with generic logs like "Payment failed" or "Something went wrong" - it needs specific context, timing, and error details.

# Bad: Black box logging
def process_payment(amount):
    try:
        result = payment_service.charge(amount)
        logger.info("Payment processed")
        return result
    except Exception:
        logger.error("Payment failed")
        raise

# Good: Observable implementation
def process_payment(amount):
    log_operation("payment_start", amount=amount)
    try:
        result = payment_service.charge(amount)
        log_operation("payment_success", transaction_id=result.id)
        return result
    except Exception as e:
        log_operation("payment_error", error=str(e), amount=amount)
        raise

Maturity: Intermediate

Description: Systematic code improvement using AI to detect and resolve code smells with measurable quality metrics, following established refactoring rules and maintaining test coverage throughout the process.

Related Patterns: Rules as Code, Comprehensive AI Testing Strategy, Technical Debt Forecasting

Code Smell Detection Framework

graph TD

A[Code Analysis] --> B[Smell Detection]

B --> C[Refactoring Strategy]

C --> D[AI Implementation]

D --> E[Test Validation]

E --> F[Quality Metrics]

F --> G{Improvement?}

G -->|Yes| H[Commit Changes]

G -->|No| I[Revert & Retry]

H --> J[Update Knowledge Base]

I --> C

Core Workflow

# 1. Define refactoring rules

cat > .ai/rules/refactoring.md << 'EOF'

## Long Method Smell

- Max lines: 20 (excluding docstrings)

- Max cyclomatic complexity: 10

- Detection: flake8 C901, pylint R0915

## Large Class Smell

- Max class lines: 250, Max methods: 20

- Detection: pylint R0902, R0904

EOF

# 2. Detect code smells with AI

flake8 --select=C901 --max-complexity=10 src/ > smells.txt  # C901 reports nothing unless max-complexity is set

pylint src/ --disable=all --enable=R0915,R0902,R0904 >> smells.txt

ai "Analyze smells.txt using .ai/rules/refactoring.md:

1. Prioritize by impact and complexity

2. Suggest specific refactoring strategy for each smell

3. Generate implementation plan with risk assessment"

# 3. Apply refactoring with test preservation

pytest --cov=src tests/ # Baseline coverage

ai "Refactor process_user_data() method (35 lines, exceeds threshold):

- Apply Extract Method pattern for validation, database, notifications

- Maintain test coverage >90% and API contract

- Create atomic commits for each extracted method"

# 4. Validate and track improvements

pytest --cov=src tests/

flake8 src/ && pylint src/

ai "Generate refactoring impact report:

Before: complexity=12, length=35 lines, coverage=85%

After: complexity=4+2+2, length=8+6+7 lines, coverage=92%

Document lessons learned in .ai/knowledge/refactoring.md"

Common Refactoring Patterns

- Extract Method: Break down long methods (>20 lines)

- Extract Class: Split large classes (>250 lines, >20 methods)

- Replace Primitive: Convert strings/dicts to value objects

- Consolidate Duplicates: Merge similar code patterns
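As an illustration of Extract Method on the `process_user_data()` case from the workflow above, here is a hypothetical before and after. The function bodies are invented, and plain lists stand in for the database and notification service.

```python
# Before: one function mixes validation, persistence, and notification.
def process_user_data_before(data, db, outbox):
    if "@" not in data.get("email", ""):
        raise ValueError("invalid email")
    if len(data.get("name", "").strip()) < 2:
        raise ValueError("name too short")
    user = {"name": data["name"].strip(), "email": data["email"].lower()}
    db.append(user)
    outbox.append(f"Welcome, {user['name']}!")
    return user


# After: Extract Method gives each responsibility its own small function.
def validate_user_data(data):
    if "@" not in data.get("email", ""):
        raise ValueError("invalid email")
    if len(data.get("name", "").strip()) < 2:
        raise ValueError("name too short")


def save_user(data, db):
    user = {"name": data["name"].strip(), "email": data["email"].lower()}
    db.append(user)
    return user


def send_welcome(user, outbox):
    outbox.append(f"Welcome, {user['name']}!")


def process_user_data(data, db, outbox):
    validate_user_data(data)
    user = save_user(data, db)
    send_welcome(user, outbox)
    return user
```

The public function keeps its signature, return value, side effects, and errors. Existing tests should therefore pass unchanged, and step 4 of the workflow relies on exactly that check.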

Complete Implementation: See examples/ai-driven-refactoring/ for:

- Automated refactoring pipeline with CI integration

- Quality metrics tracking and reporting

- Risk assessment guidelines and rollback procedures

- Knowledge base templates for refactoring outcomes

Anti-pattern: Shotgun Surgery

Making widespread changes without systematic analysis introduces bugs and degrades code quality.

Anti-pattern: Speculative Refactoring

Refactoring code for hypothetical future requirements rather than addressing current code smells and quality issues.

Maturity: Intermediate

Description: Apply architectural frameworks (DDD, Well-Architected, 12-Factor) using AI to ensure sound system design and maintainable code structure.

Related Patterns: AI Developer Lifecycle, Rules as Code, AI-Driven Refactoring

Example Frameworks

- Domain-Driven Design (DDD): Bounded contexts, entities, value objects

- AWS Well-Architected: 6 pillars compliance assessment

- 12-Factor App: Cloud-native application principles

- Event-Driven Architecture: Event sourcing and saga patterns

- ADRs: Architecture Decision Records generation

Core Implementation: Domain-Driven Design Analysis

# Create DDD analysis prompt

cat > .ai/prompts/ddd-analysis.md << 'EOF'

# Domain-Driven Design Analysis

Analyze user stories and generate:

1. Bounded context boundaries

2. Core entities and value objects

3. Domain services and repositories

4. Integration patterns between contexts

Return bounded context map and suggested code structure.

EOF

# Run domain analysis

ai-assistant analyze-domain \

--input requirements/user-stories.md \

--framework ddd \

--output architecture/domain-model.md

Example Output: E-commerce Domain Model

Bounded Contexts:

- Order Management: Order, OrderItem, OrderStatus

- Payment: Payment, PaymentMethod, Transaction

- Inventory: Product, Stock, Warehouse

- Customer: Customer, Address, Preferences

Integration:

- Order → Payment (via PaymentRequested event)

- Order → Inventory (via StockReservation command)
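Here is a minimal sketch of how those building blocks might look in code for the Order → Payment integration: a `Money` value object, a `PaymentRequested` domain event, and an in-process event bus standing in for real messaging. The `EventBus` class and the status strings are illustrative and do not come from the model above.

```python
from collections import defaultdict
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Money:  # value object: immutable, compared by value
    amount: Decimal
    currency: str


@dataclass(frozen=True)
class PaymentRequested:  # domain event published by Order Management
    order_id: str
    total: Money


class EventBus:  # in-process stand-in for a message broker
    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def publish(self, event):
        for handler in self._handlers[type(event)]:
            handler(event)


class Order:  # entity in the Order Management context
    def __init__(self, order_id, total, bus):
        self.order_id, self.total, self._bus = order_id, total, bus
        self.status = "NEW"

    def checkout(self):
        self.status = "PAYMENT_PENDING"
        self._bus.publish(PaymentRequested(self.order_id, self.total))


received = []
bus = EventBus()
bus.subscribe(PaymentRequested, received.append)  # Payment context listens
order = Order("o-1", Money(Decimal("19.99"), "USD"), bus)
order.checkout()
```

The Order context never imports Payment code; the event is the only contract between the two bounded contexts.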

Anti-pattern: Architecture Astronaut AI

Letting AI generate over-engineered solutions with complex patterns and frameworks without considering business constraints, team capabilities, or actual requirements.

Why it's problematic: AI creates over-complex solutions (microservices + CQRS + event sourcing) when simple CRUD would suffice.

# Good: Constrained architecture

ai-assistant design-architecture \

--requirements requirements/user-stories.md \

--constraints "team_size=5,experience=intermediate,timeline=3months"

# Bad: Unconstrained

ai-assistant design-architecture \

--requirements requirements/user-stories.md \

--generate "enterprise_patterns,microservices,event_sourcing,cqrs"

Maturity: Intermediate

Description: Maintain automated links between requirements, specifications, tests, implementation, and documentation using AI.

Related Patterns: AI Developer Lifecycle, Specification Driven Development, Comprehensive AI Testing Strategy

Core Implementation

# Automated traceability maintenance

./maintain_traceability.sh

# Check new code for requirement links and validate existing ones

git diff --name-only HEAD~1 | while read file; do

ai "Analyze $file and suggest requirement traceability links"

done

# Generate impact analysis for recent changes

ai "Map recent changes to affected requirements and tests"

Complete Implementation: See examples/ai-driven-traceability/ for:

- Complete traceability maintenance automation system

- Link validation and gap analysis tools

- Impact analysis and reporting scripts

- Integration with project management tools (GitHub, JIRA)
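One cheap traceability source already exists in the Git workflow shown earlier: `[spec:...]` tags in commit messages. The sketch below builds spec-to-commit links and reports gaps in both directions. The tag format follows the `[spec:rl2c]` example, and how you collect `(sha, message)` pairs from `git log` is left open.

```python
import re

SPEC_TAG = re.compile(r"\[spec:([\w-]+)\]")


def trace_commits(commits, known_specs):
    """Return (spec -> commit shas, untraced specs, unknown spec tags).

    commits: iterable of (sha, message) pairs.
    known_specs: spec IDs that should each be traced to at least one commit.
    """
    links = {}
    for sha, message in commits:
        for spec_id in SPEC_TAG.findall(message):
            links.setdefault(spec_id, []).append(sha)
    untraced = sorted(set(known_specs) - set(links))  # requirements with no commit
    unknown = sorted(set(links) - set(known_specs))   # tags pointing at no requirement
    return links, untraced, unknown


commits = [
    ("a1", "feat: implement rate limiting [spec:rl2c]"),
    ("b2", "fix: typo"),
    ("c3", "feat: cli flags [spec:cli-01] [spec:rl2c]"),
    ("d4", "chore: cleanup [spec:old-9]"),
]
links, untraced, unknown = trace_commits(commits, ["rl2c", "cli-01", "arn-03"])
print(untraced, unknown)  # ['arn-03'] ['old-9']
```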

Anti-pattern: Manual Traceability Management

Maintaining requirement links in spreadsheets or manual documentation that becomes stale and inaccurate over time.

Operations patterns focus on CI/CD, security, compliance, and production management with AI assistance, building on the foundation and development patterns.

Maturity: Advanced

Description: Transform compliance requirements into executable Cedar/OPA policy files with AI assistance, ensuring regulatory requirements become testable code.

Related Patterns: AI Security Sandbox, Rules as Code

# Transform compliance requirements into executable policies
# (opa test only understands Rego; validate Cedar output with the Cedar tooling instead)
ai "Convert this compliance requirement into an OPA Rego policy with Rego unit tests:
SOC 2 control: Data at rest must be AES-256 encrypted" > encryption.rego

# Test generated policies
opa test -v encryption.rego

Complete Implementation: See examples/policy-as-code-generation/ for:

- Complete policy generation pipeline with AI assistance

- Cedar/OPA policy templates and compliance mapping

- Policy testing and validation frameworks

- CI/CD integration examples
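Because an AI-generated policy can misread a requirement, it helps to keep a small hand-written reference check. The same fixtures can then go through both it and the generated policy (for example via `opa eval`), and the decisions can be compared. The following is a sketch for the encryption rule, using a hypothetical resource schema.

```python
STORAGE_TYPES = {"bucket", "volume", "database"}


def encryption_violations(resources):
    """Reference check: IDs of storage resources not encrypted with AES-256.

    The resource schema ({"id", "type", "encryption"}) is hypothetical;
    align it with the input document your generated policy evaluates.
    """
    return sorted(
        r["id"] for r in resources
        if r["type"] in STORAGE_TYPES and r.get("encryption") != "AES256"
    )


fixtures = [
    {"id": "logs", "type": "bucket", "encryption": "AES256"},
    {"id": "tmp", "type": "volume"},                            # no encryption set
    {"id": "db1", "type": "database", "encryption": "none"},
    {"id": "fn", "type": "function"},                           # not storage: out of scope
]
print(encryption_violations(fixtures))  # ['db1', 'tmp']
```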

Anti-pattern: Manual Policy Translation

Hand-coding policies from written requirements introduces inconsistencies and interpretation errors.

Maturity: Intermediate

Description: Aggregate multiple security tools and use AI to summarize findings for actionable insights, reducing alert fatigue while maintaining security rigor.

Related Patterns: Policy-as-Code Generation

# Orchestrate multiple security tools
# (snyk and bandit exit non-zero when they find issues; "|| true" keeps every report)
snyk test --json > snyk.json || true
bandit -r src -f json > bandit.json || true
trivy fs --format json . > trivy.json

# AI-powered summarization for actionable insights
ai "Summarize the findings in snyk.json, bandit.json, and trivy.json; focus on CRITICAL issues" > pr-comment.txt
gh pr comment --body-file pr-comment.txt

Complete Implementation: See examples/security-scanning-orchestration/ for:

- Complete security scanning pipeline with tool orchestration

- AI-powered report summarization and prioritization

- CI/CD integration and automated PR commenting

- Custom security tool configurations and reporting
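Filtering findings before summarizing them keeps low-severity noise out of both the prompt and the PR comment. The sketch below flattens the three reports and keeps findings rated HIGH and above. The field names reflect these tools' JSON output as we understand it (Snyk `vulnerabilities[].severity`, Bandit `results[].issue_severity`, Trivy `Results[].Vulnerabilities[].Severity`), so check them against the versions you run.

```python
SEVERITY_RANK = {"LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


def normalize(snyk, bandit, trivy):
    """Flatten parsed JSON reports into (tool, severity, id, location) rows."""
    rows = []
    for v in snyk.get("vulnerabilities", []):
        rows.append(("snyk", v["severity"].upper(), v["id"], v.get("packageName", "")))
    for r in bandit.get("results", []):
        rows.append(("bandit", r["issue_severity"].upper(), r["test_id"],
                     f'{r["filename"]}:{r["line_number"]}'))
    for result in trivy.get("Results", []):
        for v in result.get("Vulnerabilities") or []:  # may be null when clean
            rows.append(("trivy", v["Severity"].upper(), v["VulnerabilityID"],
                         v.get("PkgName", "")))
    return rows


def actionable(rows, min_severity="HIGH"):
    """Keep rows at or above min_severity, most severe first."""
    floor = SEVERITY_RANK[min_severity]
    keep = [r for r in rows if SEVERITY_RANK.get(r[1], 0) >= floor]
    return sorted(keep, key=lambda r: -SEVERITY_RANK[r[1]])
```

Load each report with `json.load` and pass only the filtered rows to the `ai` summarization step.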

Anti-pattern: Alert Fatigue

Posting every low-severity finding buries real issues and frustrates developers.

Performance Baseline Management

Maturity: Advanced

Description: Use AI to establish performance baselines from historical metrics and configure monitoring thresholds automatically, minimizing false positives while still catching real performance issues.

Related Patterns: Observable AI Development

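# Collect performance metrics and generate intelligent baselines

# (namespace, metric, and the ALB/START/END variables are illustrative; get-metric-statistics prints JSON, not CSV)

aws cloudwatch get-metric-statistics --namespace AWS/ApplicationELB --metric-name TargetResponseTime --dimensions Name=LoadBalancer,Value="$ALB" --start-time "$START" --end-time "$END" --period 86400 --extended-statistics p50 p95 p99 --output json > perf.json

ai "From perf.json, recommend latency thresholds and autoscale policies" > perf-policy.json

# deploy-tool is a placeholder for your deployment tooling

deploy-tool apply perf-policy.json

Complete Implementation: See examples/performance-baseline-management/ for: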

- Complete performance monitoring setup with baseline establishment

- AI-powered threshold calculation and alert configuration

- Autoscaling policy generation and deployment automation

- Integration with multiple monitoring platforms (CloudWatch, Prometheus, etc.)
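A deterministic baseline gives you something to check AI-recommended thresholds against. This Python sketch reads the JSON printed by `aws cloudwatch get-metric-statistics --output json`. That output is a `Datapoints` list whose entries carry plain statistics such as `Average`, or an `ExtendedStatistics` map such as `{"p99": ...}`. The sketch proposes a threshold of the median plus k times the median absolute deviation (MAD). The choice of MAD, `k=3.0`, and the 20% floor are assumptions, not the author's method. `load_datapoints` and `recommend_threshold` are hypothetical names.

```python
import json
import statistics


def load_datapoints(raw_json, stat="p99"):
    """Pull one statistic out of `aws cloudwatch get-metric-statistics --output json`.

    Datapoints are not guaranteed to be in time order, so sort by Timestamp first.
    Plain statistics (e.g. "Average") sit on the datapoint itself; percentiles
    requested with --extended-statistics sit under "ExtendedStatistics".
    """
    data = json.loads(raw_json)
    values = []
    for point in sorted(data.get("Datapoints", []), key=lambda p: p["Timestamp"]):
        extended = point.get("ExtendedStatistics", {})
        if stat in extended:
            values.append(extended[stat])
        elif stat in point:
            values.append(point[stat])
    return values


def recommend_threshold(values, k=3.0, floor_ratio=1.2):
    """Robust baseline: median + k * MAD, but never below floor_ratio * median."""
    if len(values) < 2:
        raise ValueError("need at least two datapoints to establish a baseline")
    median = statistics.median(values)
    mad = statistics.median(abs(v - median) for v in values)
    return max(median + k * mad, median * floor_ratio)
```

For example, `recommend_threshold(load_datapoints(raw, "p99"))`, where `raw` is the saved CLI output, yields a candidate p99 alert level to compare with the AI's proposal. The median and MAD are used instead of the mean and standard deviation so that a single bad day does not inflate the threshold. The floor stops a very stable metric (MAD near zero) from producing a threshold so tight that normal jitter triggers alerts.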

Anti-pattern: One-Off Alerts. Manually set thresholds quickly become stale, causing alert storms or blind spots.

- Rushing Into AI: Starting AI adoption without proper assessment

- Context Drift: Inconsistent AI rules across team members

- Unrestricted Access: Allowing AI tools access to sensitive data

- Ad-Hoc Development: Skipping structured development lifecycle

- Implementation-First AI: Writing code before defining acceptance criteria

- Test Generation Without Strategy: Creating tests without coherent quality goals

- Big Bang Generation: Attempting complex features in single AI interaction

- Uncoordinated Multi-Tool Usage: Using multiple AI tools without orchestration

- Black Box Systems: Insufficient logging for AI debugging

- Unclear Boundaries: Ambiguous human-AI handoff points

- Fragmented Security: Isolated security tools without unified framework

- Alert Fatigue: Overwhelming developers with low-priority findings

- Static Deployment: Fixed scripts without AI adaptation

- Trusting AI Blue-Green Generation: Accepting AI-generated blue-green deployment configurations without validation

- Reactive Maintenance: Firefighting instead of proactive AI-assisted management

- Blind Chaos Testing: Random fault injection without understanding dependencies

Phase 1: Foundation (Weeks 1-2)

- AI Readiness Assessment - Evaluate team and codebase readiness

- Rules as Code - Establish consistent AI coding standards

- AI Security Sandbox - Implement secure AI tool isolation

- AI Developer Lifecycle - Define structured development process

- AI Issue Generation - Generate structured work items from requirements

Phase 2: Development (Weeks 3-4)

- Specification Driven Development - Implement specification-first approach

- Progressive AI Enhancement - Practice iterative development

- AI Choice Generation - Generate multiple implementation options

- Atomic Task Decomposition - Break down complex features

Phase 3: Operations (Weeks 5-6)

- Security & Compliance Patterns - Implement unified security framework

- Deployment Automation Patterns - Establish AI-powered CI/CD

- Monitoring & Maintenance Patterns - Deploy proactive system management

Note: For teams practicing continuous delivery, implement security (AI Security Sandbox, AI Security & Compliance) and deployment patterns (Deployment Automation) from week 1 alongside foundation patterns. The phases represent learning dependencies, not deployment sequences.

- Team readiness score improvement

- Consistent AI rule adherence across projects

- Zero credential leaks in AI-generated code

- Reduced onboarding time for new developers

- Test coverage maintenance (>90% for AI-generated code)

- Reduced code review cycles

- Faster feature delivery with maintained quality

- Decreased debugging time for AI-generated issues

- Automated policy compliance verification

- Reduced deployment failures

- Faster incident response with AI-generated runbooks

- Proactive technical debt management

Have a pattern that's working well for your team? Open an issue or PR to share your experience. The AI development landscape is evolving rapidly, and we're all learning together.

- Follow the established pattern template (Maturity, Description, Related Patterns, Examples, Anti-patterns)

- Include practical, runnable examples

- Specify clear success criteria and anti-patterns

- Reference related patterns appropriately

- Test patterns with multiple AI tools when applicable

MIT License - See LICENSE file for details.


Alternative AI tools for ai-development-patterns

Similar Open Source Tools

paelladoc

PAELLADOC is an intelligent documentation system that uses AI to analyze code repositories and generate comprehensive technical documentation. It offers a modular architecture with MECE principles, interactive documentation process, key features like Orchestrator and Commands, and a focus on context for successful AI programming. The tool aims to streamline documentation creation, code generation, and product management tasks for software development teams, providing a definitive standard for AI-assisted development documentation.

persistent-ai-memory

Persistent AI Memory System is a comprehensive tool that offers persistent, searchable storage for AI assistants. It includes features like conversation tracking, MCP tool call logging, and intelligent scheduling. The system supports multiple databases, provides enhanced memory management, and offers various tools for memory operations, schedule management, and system health checks. It also integrates with various platforms like LM Studio, VS Code, Koboldcpp, Ollama, and more. The system is designed to be modular, platform-agnostic, and scalable, allowing users to handle large conversation histories efficiently.

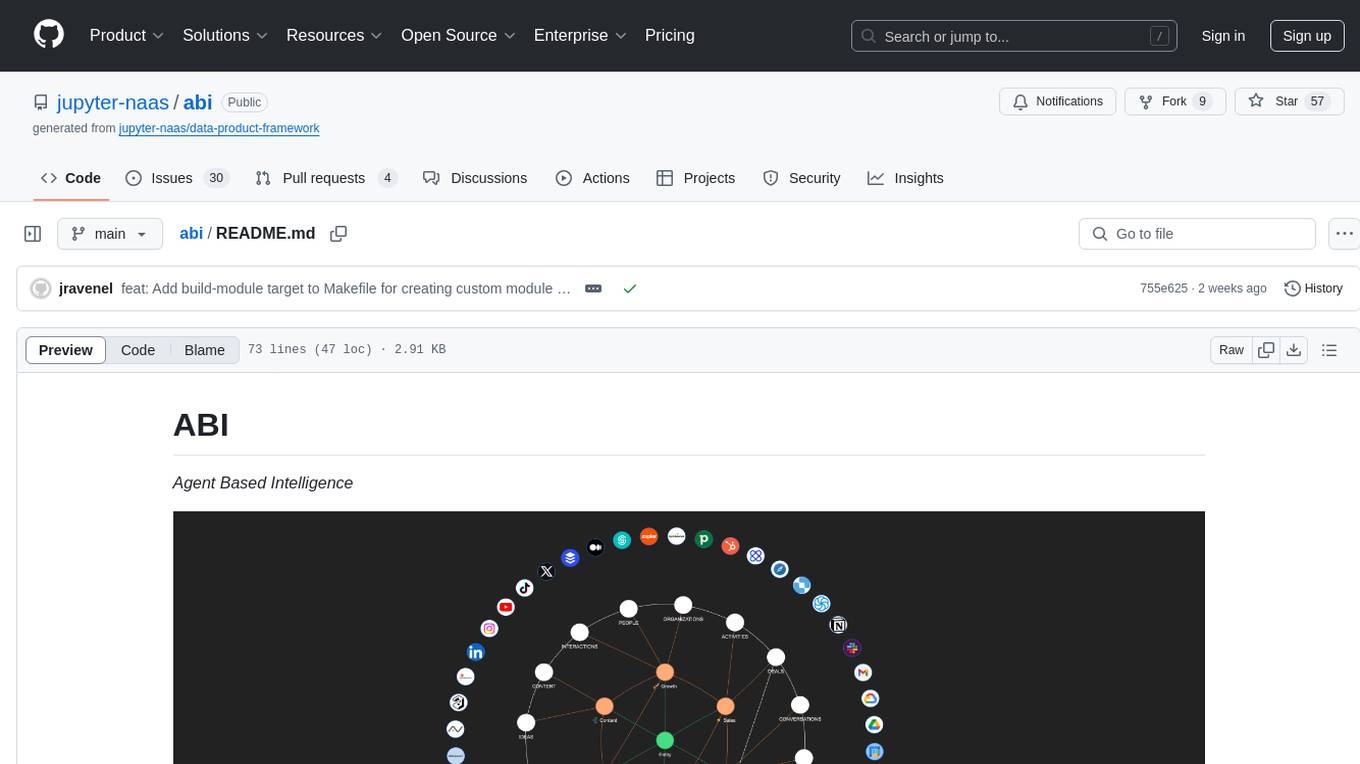

abi

ABI (Agentic Brain Infrastructure) is a Python-based AI Operating System designed to serve as the core infrastructure for building an Agentic AI Ontology Engine. It empowers organizations to integrate, manage, and scale AI-driven operations with multiple AI models, focusing on ontology, agent-driven workflows, and analytics. ABI emphasizes modularity and customization, providing a customizable framework aligned with international standards and regulatory frameworks. It offers features such as configurable AI agents, ontology management, integrations with external data sources, data processing pipelines, workflow automation, analytics, and data handling capabilities.

aider-desk

AiderDesk is a desktop application that enhances coding workflow by leveraging AI capabilities. It offers an intuitive GUI, project management, IDE integration, MCP support, settings management, cost tracking, structured messages, visual file management, model switching, code diff viewer, one-click reverts, and easy sharing. Users can install it by downloading the latest release and running the executable. AiderDesk also supports Python version detection and auto update disabling. It includes features like multiple project management, context file management, model switching, chat mode selection, question answering, cost tracking, MCP server integration, and MCP support for external tools and context. Development setup involves cloning the repository, installing dependencies, running in development mode, and building executables for different platforms. Contributions from the community are welcome following specific guidelines.

CyberStrikeAI

CyberStrikeAI is an AI-native security testing platform built in Go that integrates 100+ security tools, an intelligent orchestration engine, role-based testing with predefined security roles, a skills system with specialized testing skills, and comprehensive lifecycle management capabilities. It enables end-to-end automation from conversational commands to vulnerability discovery, attack-chain analysis, knowledge retrieval, and result visualization, delivering an auditable, traceable, and collaborative testing environment for security teams. The platform features an AI decision engine with OpenAI-compatible models, native MCP implementation with various transports, prebuilt tool recipes, large-result pagination, attack-chain graph, password-protected web UI, knowledge base with vector search, vulnerability management, batch task management, role-based testing, and skills system.

octocode-mcp

Octocode is a methodology and platform that empowers AI assistants with the skills of a Senior Staff Engineer. It transforms how AI interacts with code by moving from 'guessing' based on training data to 'knowing' based on deep, evidence-based research. The ecosystem includes the Manifest for Research Driven Development, the MCP Server for code interaction, Agent Skills for extending AI capabilities, a CLI for managing agent capabilities, and comprehensive documentation covering installation, core concepts, tutorials, and reference materials.

dotclaude