empirica

Cognitive Operating System for AI Agents - Git-native epistemic middleware enabling self-awareness, multi-agent coordination, and measurable learning through CASCADE workflow. Turns context loss into transparent uncertainty tracking.

Stars: 112

Empirica is an epistemic self-awareness framework for AI agents to understand their knowledge boundaries. It introduces epistemic vectors to measure knowledge state and uncertainty, enabling honest communication. The tool emerged from 600+ real working sessions across various AI systems, providing cognitive infrastructure for distinguishing between confident knowledge and guessing. Empirica's 13 foundational vectors cover engagement, domain knowledge depth, execution capability, information access, understanding clarity, coherence, signal-to-noise ratio, information richness, working state, progress rate, task completion level, work significance, and explicit doubt tracking. It is applicable across industries like software development, research, healthcare, legal, education, and finance, aiding in tasks such as code review, hypothesis testing, diagnostic confidence, case analysis, learning assessment, and risk assessment.

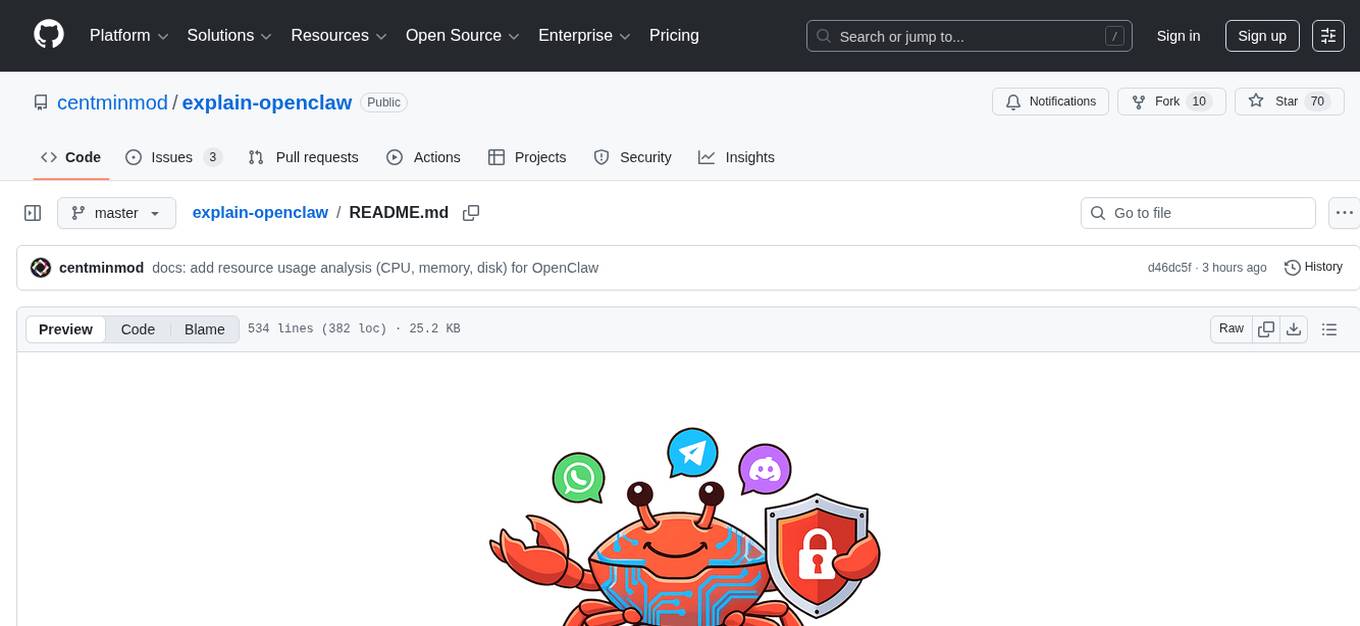

README:

Teaching AI to know what it knows—and what it doesn't

Empirica is an epistemic self-awareness framework that enables AI agents to genuinely understand the boundaries of their own knowledge. Instead of producing confident-sounding responses regardless of actual understanding, AI agents using Empirica can accurately assess what they know, identify gaps, and communicate uncertainty honestly.

The core insight: AI systems today lack functional self-awareness. They can't reliably distinguish between "I know this well" and "I'm guessing." Empirica provides the cognitive infrastructure to make this distinction measurable and actionable.

The Problem: AI agents exhibit "confident ignorance"—they generate plausible-sounding responses about topics they don't actually understand. This leads to:

- Hallucinated facts presented as truth

- Wasted time investigating already-explored dead ends

- Knowledge lost between sessions

- No way to tell when an AI is genuinely confident vs. bluffing

The Solution: Empirica introduces epistemic vectors—quantified measures of knowledge state that AI agents track in real-time. These vectors emerged from observing what information actually matters when assessing cognitive readiness.

These vectors weren't designed in a vacuum. They emerged from 600+ real working sessions across multiple AI systems (Claude, GPT-4, Gemini, Qwen, and others), with Claude serving as the primary development partner due to its reasoning capabilities.

The pattern proved universal: regardless of which AI system we tested, these same dimensions consistently predicted success or failure in complex tasks.

| Tier | Vector | What It Measures |
|---|---|---|
| Gate | engagement | Is the AI actively processing or disengaged? |
| Foundation | know | Domain knowledge depth (0.70 or higher = ready to act) |
| | do | Execution capability |
| | context | Access to relevant information |
| Comprehension | clarity | How clear is the understanding? |
| | coherence | Do the pieces fit together? |
| | signal | Signal-to-noise in available information |
| | density | Information richness |
| Execution | state | Current working state |
| | change | Rate of progress/change |
| | completion | Task completion level |
| | impact | Significance of the work |
| Meta | uncertainty | Explicit doubt tracking (0.35 or lower = ready to act) |
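As a rough illustration of how one snapshot of these 13 vectors could be represented in code, the sketch below groups them by the tiers in the table. The class name `EpistemicVectors`, the `TIERS` mapping, and the 0.0 to 1.0 range check are assumptions made for illustration; they are not Empirica's actual data model.

```python
from dataclasses import dataclass, fields
from typing import Dict

# Tier -> vector names, copied from the table above (the dict layout is illustrative).
TIERS = {
    "gate": ("engagement",),
    "foundation": ("know", "do", "context"),
    "comprehension": ("clarity", "coherence", "signal", "density"),
    "execution": ("state", "change", "completion", "impact"),
    "meta": ("uncertainty",),
}


@dataclass(frozen=True)
class EpistemicVectors:
    """Hypothetical snapshot of all 13 vectors, each assumed to lie in [0.0, 1.0]."""

    engagement: float
    know: float
    do: float
    context: float
    clarity: float
    coherence: float
    signal: float
    density: float
    state: float
    change: float
    completion: float
    impact: float
    uncertainty: float

    def __post_init__(self) -> None:
        # Reject any score outside the assumed 0-1 scale.
        for f in fields(self):
            value = getattr(self, f.name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{f.name}={value} is outside [0, 1]")

    def tier(self, name: str) -> Dict[str, float]:
        """Return only the vectors in one tier, e.g. tier("foundation")."""
        return {v: getattr(self, v) for v in TIERS[name]}
```

Freezing the dataclass means a snapshot cannot be edited after it is recorded, which suits before/after comparisons; a new assessment simply creates a new snapshot.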

Readiness Gate: Through empirical observation, we found that know ≥ 0.70 AND uncertainty ≤ 0.35 reliably predicts successful task execution. Below these thresholds, investigation is needed.
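The readiness gate reduces to a two-condition check with inclusive bounds. A minimal sketch using the thresholds quoted above; the function and constant names are hypothetical, and this is not Empirica's Sentinel implementation:

```python
KNOW_MIN = 0.70         # know must be at least this high
UNCERTAINTY_MAX = 0.35  # uncertainty must be no higher than this


def is_ready(know: float, uncertainty: float) -> bool:
    """Readiness gate from the text: know >= 0.70 AND uncertainty <= 0.35."""
    return know >= KNOW_MIN and uncertainty <= UNCERTAINTY_MAX


def next_action(know: float, uncertainty: float) -> str:
    """Act when the gate passes; otherwise keep investigating."""
    return "act" if is_ready(know, uncertainty) else "investigate"


print(next_action(0.75, 0.30))  # act
print(next_action(0.75, 0.40))  # investigate
```

Both conditions must hold: high knowledge with high uncertainty still fails the gate, which is the point of tracking doubt separately from knowledge.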

The Key Insight: The uncertainty vector is explicitly tracked because AI systems naturally underreport doubt. Making it a first-class metric forces honest assessment.

While the vectors emerged from software development work, they map to any domain requiring knowledge assessment:

| Industry | Primary Vectors | Use Case |
|---|---|---|
| Software Development | know, context, uncertainty, completion | Code review, architecture decisions, debugging |
| Research & Analysis | know, clarity, coherence, signal | Literature review, hypothesis testing |
| Healthcare | know, uncertainty, impact | Diagnostic confidence, treatment recommendations |
| Legal | context, clarity, coherence | Case analysis, precedent research |
| Education | know, do, completion | Learning assessment, curriculum design |
| Finance | know, uncertainty, impact | Risk assessment, investment analysis |

Software engineering provides an ideal testbed because:

- Measurable outcomes - Code either works or it doesn't

- Complex knowledge states - Requires synthesizing documentation, code, tests, and context

- Session continuity - Projects span days/weeks with context loss between sessions

- Multi-agent potential - Team collaboration benefits from shared epistemic state

Empirica was battle-tested here before expanding to other domains.

Visit getempirica.com for the guided setup experience with tutorials and support.

The installer sets up everything: Claude Code hooks, system prompts, environment configuration, and a demo project.

curl -fsSL https://raw.githubusercontent.com/Nubaeon/empirica/main/scripts/install.py | python3 -

Or download and run manually:

wget https://raw.githubusercontent.com/Nubaeon/empirica/main/scripts/install.py

python3 install.py

On Windows (PowerShell):

Invoke-WebRequest -Uri "https://raw.githubusercontent.com/Nubaeon/empirica/main/scripts/install.py" -OutFile "install.py"

python install.py

What the installer does:

- Installs Empirica via pip

- Sets up Claude Code hooks for automatic epistemic continuity

- Places CLAUDE.md in the correct location (~/.claude/CLAUDE.md)

- Configures environment variables for your shell

- Creates a demo project so you can try it immediately

- Optionally sets up Qdrant for semantic memory (local vector search)

If you prefer manual setup:

# Install from PyPI

pip install empirica

# Or with all features

pip install "empirica[all]"

# MCP Server (for Claude Desktop, Cursor, etc.)

pip install empirica-mcp

# Initialize in your project

cd your-project

empirica project-init
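The release notes list Python 3.10 as the minimum supported version, so a quick sanity check before or after a manual install might look like the sketch below. It uses only the standard library (`sys.version_info`, `importlib.metadata.version`); the helper names are hypothetical and this is not the installer's own check.

```python
import sys
from importlib.metadata import PackageNotFoundError, version
from typing import Optional, Tuple

MIN_PYTHON = (3, 10)  # minimum listed in the release notes


def python_supported(current: Optional[Tuple[int, int]] = None) -> bool:
    """True if the given (major, minor), or the running interpreter, meets the minimum."""
    if current is None:
        current = (sys.version_info.major, sys.version_info.minor)
    return current >= MIN_PYTHON


def installed_version(dist: str = "empirica") -> Optional[str]:
    """Installed version of a distribution, or None if it is not installed."""
    try:
        return version(dist)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    print("Python OK" if python_supported() else f"Python {MIN_PYTHON[0]}.{MIN_PYTHON[1]}+ required")
    print("empirica:", installed_version() or "not installed")
```

Tuple comparison handles versions such as 3.9 vs 3.10 correctly, which naive string comparison would not.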

⚠️ Important: System Prompt Required

Empirica requires a system prompt to function correctly. The CLI tools work without it, but the full epistemic workflow (CASCADE phases, calibration, Sentinel gates) requires the AI to understand the framework.

For manual installations, copy the system prompt:

# Create Claude Code config directory

mkdir -p ~/.claude

# Copy the system prompt (choose your AI)

curl -fsSL https://raw.githubusercontent.com/Nubaeon/empirica/main/docs/human/developers/system-prompts/CLAUDE.md \
  -o ~/.claude/CLAUDE.md

The installer handles this automatically. See System Prompts for versions of the prompt targeting other AI assistants (Copilot, etc.).

Homebrew:

brew tap nubaeon/tap

brew install empirica

Docker:

# Standard image (Debian slim, ~414MB)

docker pull nubaeon/empirica:1.5.0

# Security-hardened Alpine image (~276MB, recommended)

docker pull nubaeon/empirica:1.5.0-alpine

# Run

docker run -it -v "$(pwd)/.empirica:/data/.empirica" nubaeon/empirica:1.5.0 /bin/bash

Once installed, let Empirica teach you how it works:

# Start the guided onboarding experience

empirica onboard

This walks you through creating your first session, understanding vectors, and logging your first finding.

If you're using Claude Code or another AI with Empirica installed:

"Explain how Empirica works using docs-explain"

"What are epistemic vectors and how do I use them?"

"Help me set up Empirica for my project"

The AI can query Empirica's documentation semantically and explain concepts tailored to your context.

# Search documentation semantically

empirica docs-explain --topic "epistemic vectors"

empirica docs-explain --topic "CASCADE workflow"

empirica docs-explain --topic "session management"

# List all available topics

empirica docs-list

The installer creates a demo project at ~/empirica-demo/. Navigate there and follow WALKTHROUGH.md:

cd ~/empirica-demo

cat WALKTHROUGH.md

Once you understand the basics, add epistemic foundations to your existing projects:

cd your-existing-project

empirica project-init

# Create your first session

empirica session-create --ai-id claude-code --output json

# Start tracking what you know

empirica preflight-submit -

Start here based on your role:

| Role | Start With | Then Read |
|---|---|---|
| End User | Getting Started | Empirica Explained Simply |
| Developer | Developer README | Claude Code Setup |

Documentation Structure:

docs/
├── human/                    # Human-readable documentation
│   ├── end-users/            # Installation, concepts, troubleshooting
│   └── developers/           # Integration, system prompts, API
│       └── system-prompts/   # AI system prompts (Claude, Copilot, etc.)
│
└── architecture/             # Technical architecture (for AI context loading)

If you're integrating Empirica into an AI system:

- System Prompts: docs/human/developers/system-prompts/

- MCP Server: empirica-mcp/ (Model Context Protocol integration)

- Architecture Docs: docs/architecture/ (AI-optimized technical reference)

| Guide | Purpose |
|---|---|
| CASCADE Workflow | The PREFLIGHT → CHECK → POSTFLIGHT loop |
| Epistemic Vectors Explained | Deep dive into all 13 vectors |
| CLI Reference | Complete command documentation |
| Storage Architecture | Four-layer data persistence |

Every significant task follows this loop:

PREFLIGHT ────────► CHECK ────────► POSTFLIGHT
    │                  │                  │
 Baseline           Decision           Learning
 Assessment           Gate               Delta
    │                  │                  │
"What do I know    "Am I ready to     "What did I
 now?"               act?"              learn?"

PREFLIGHT: AI assesses its knowledge state before starting work. CHECK: Sentinel gate validates readiness (know ≥ 0.70, uncertainty ≤ 0.35). POSTFLIGHT: AI measures what it learned, creating a learning delta.
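To make the ordering concrete, here is a small state tracker that refuses to run the phases out of order and computes the POSTFLIGHT learning delta as final value minus baseline for each vector. It is a sketch under stated assumptions: the class and method names are hypothetical (they are not Empirica's `submit_preflight_assessment`/`submit_postflight_assessment` API), and requiring CHECK to pass before POSTFLIGHT is a design choice made here for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Optional

KNOW_MIN = 0.70
UNCERTAINTY_MAX = 0.35


@dataclass
class CascadeTask:
    """Hypothetical tracker that enforces PREFLIGHT -> CHECK -> POSTFLIGHT."""

    baseline: Optional[Dict[str, float]] = None
    gate_passed: bool = False

    def preflight(self, vectors: Dict[str, float]) -> None:
        # PREFLIGHT: record the baseline assessment before work starts.
        self.baseline = dict(vectors)
        self.gate_passed = False

    def check(self, vectors: Dict[str, float]) -> bool:
        # CHECK: decision gate; a baseline must exist first.
        if self.baseline is None:
            raise RuntimeError("run PREFLIGHT before CHECK")
        self.gate_passed = (
            vectors["know"] >= KNOW_MIN and vectors["uncertainty"] <= UNCERTAINTY_MAX
        )
        return self.gate_passed

    def postflight(self, vectors: Dict[str, float]) -> Dict[str, float]:
        # POSTFLIGHT: learning delta = final value minus baseline, per shared vector.
        if self.baseline is None or not self.gate_passed:
            raise RuntimeError("CHECK must pass before POSTFLIGHT")
        return {
            name: round(vectors[name] - start, 2)
            for name, start in self.baseline.items()
            if name in vectors
        }


task = CascadeTask()
task.preflight({"know": 0.40, "uncertainty": 0.60})
print(task.check({"know": 0.55, "uncertainty": 0.50}))  # False
print(task.check({"know": 0.75, "uncertainty": 0.30}))  # True
print(task.postflight({"know": 0.80, "uncertainty": 0.20}))  # {'know': 0.4, 'uncertainty': -0.4}
```

A failed CHECK leaves the task open: the agent investigates further and calls `check` again with updated vectors until the gate passes.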

Session 1: know=0.40 → know=0.65 (Δ +0.25)

↓ (findings persisted)

Session 2: know=0.70 → know=0.85 (Δ +0.15)

↓ (compound learning)

Session 3: know=0.82 → know=0.92 (Δ +0.10)

Each session starts higher because learnings persist. No more re-investigating the same questions.
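The learning deltas in this example are simply postflight minus preflight for `know`. The short sketch below recomputes them from the numbers above, rounding to two decimals to hide floating-point noise, and checks that each session's starting baseline is higher than the previous one; the helper names are hypothetical.

```python
# (preflight know, postflight know) for each session in the example above.
SESSIONS = [(0.40, 0.65), (0.70, 0.85), (0.82, 0.92)]


def learning_deltas(sessions):
    """Delta per session = postflight know - preflight know, rounded to 2 places."""
    return [round(post - pre, 2) for pre, post in sessions]


def baselines_rise(sessions):
    """True if every session starts from a higher know than the session before it."""
    starts = [pre for pre, _ in sessions]
    return all(a < b for a, b in zip(starts, starts[1:]))


print(learning_deltas(SESSIONS))  # [0.25, 0.15, 0.1]
print(baselines_rise(SESSIONS))   # True
```

Shrinking deltas alongside rising baselines is the expected shape of compound learning: there is less left to learn each time.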

With Claude Code hooks enabled, you see epistemic state in your terminal:

[empirica] ⚡94% │ 🎯3 ❓12/5 │ POSTFLIGHT │ K:95% U:5% C:92% │ Δ K:+0.07 U:-0.05 ✓:+0.90 │ ✓ stable

What this tells you:

- ⚡94% — Overall epistemic confidence (⚡ high, 💡 good, 💫 uncertain, 🌑 low)

- 🎯3 ❓12/5 — Open goals (3) and unknowns (12 total, 5 blocking goals)

- POSTFLIGHT — CASCADE phase (PREFLIGHT → CHECK → POSTFLIGHT)

- K:95% U:5% C:92% — Knowledge, Uncertainty, Context scores

- Δ K:+0.07 U:-0.05 ✓:+0.90 — Learning deltas (K=know, U=uncertainty, ✓=completion)

- ✓ stable — Drift indicator (✓ stable, ⚠ drifting, ✗ severe)
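If you want to consume this status line in a script (for example, to log K/U/C over time), a regex-based parser is one option. This assumes the exact segment layout of the sample line above, with segments separated by `│`; it is not a documented, stable interface, and `parse_statusline` is a hypothetical helper.

```python
import re
from typing import Dict

PHASES = {"PREFLIGHT", "CHECK", "POSTFLIGHT"}
# "K:95%"-style scores; layout assumed from the sample status line.
SCORE_RE = re.compile(r"\b([KUC]):(\d{1,3})%")
# Signed deltas such as "K:+0.07", "U:-0.05", "✓:+0.90".
DELTA_RE = re.compile(r"(K|U|✓):([+-]\d+(?:\.\d+)?)")


def parse_statusline(line: str) -> Dict[str, object]:
    """Split the status line on '│' and pull out phase, scores (0-1) and deltas."""
    result: Dict[str, object] = {"phase": None, "scores": {}, "deltas": {}}
    for segment in (s.strip() for s in line.split("│")):
        if segment in PHASES:
            result["phase"] = segment
        elif segment.startswith("Δ"):
            result["deltas"] = {k: float(v) for k, v in DELTA_RE.findall(segment)}
        else:
            scores = {k: int(p) / 100 for k, p in SCORE_RE.findall(segment)}
            if scores:
                result["scores"] = scores
    return result
```

Deltas are only read from the segment that starts with `Δ`, so the unsigned K/U/C percentages and the signed deltas never get mixed up.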

Projects using Empirica's epistemic foundations:

| Project | Description | Use Case |
|---|---|---|
| Docpistemic | Epistemic documentation system | Self-aware documentation that tracks what it explains well vs. poorly |
| Carapace | Defensive AI shell | Security-focused AI wrapper with epistemic safety gates |
| Empirica CRM | Customer relationship management | CRM where AI knows its confidence about customer insights |

Building something with Empirica? Open an issue to get listed here.

Recent changes:

- Simplified CASCADE Workflow — Direct submit_preflight_assessment/submit_postflight_assessment (removed execute_* theater)

- Python 3.10+ Support — Lowered minimum from 3.11 to 3.10 for broader compatibility

- Fixed Homebrew/Installer — Corrected checksums and version, added Python version check

- Plugin Auto-Discovery — Entry points for extending Empirica

- Qdrant Memory Integration — Full 4-collection semantic memory system

- Lessons System — Cold storage procedural knowledge with cognitive immune decay

- Cross-Platform Installer — One-command setup for Linux, macOS, Windows

- Sentinel Safety Gates — Human-in-the-loop gates bounding AI autonomy

Your data stays local:

- .empirica/ — Local SQLite database (gitignored by default)

- .git/refs/notes/empirica/* — Epistemic checkpoints (local unless you push)

- Qdrant runs locally if enabled

No cloud dependencies. No telemetry. Your epistemic data is yours.
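To see for yourself what is stored locally, you can open the SQLite files read-only with the standard library. This sketch assumes the database files under `.empirica/` use a `.db` extension; the actual file names and schema are not documented here, so treat `list_tables` as a hypothetical inspection helper.

```python
import sqlite3
from pathlib import Path
from typing import Dict, List


def list_tables(empirica_dir: str = ".empirica") -> Dict[str, List[str]]:
    """Map each *.db file under empirica_dir to the names of its tables."""
    found: Dict[str, List[str]] = {}
    for db_path in sorted(Path(empirica_dir).rglob("*.db")):
        # mode=ro opens the file read-only, so inspection cannot modify it.
        conn = sqlite3.connect(db_path.resolve().as_uri() + "?mode=ro", uri=True)
        try:
            rows = conn.execute(
                "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
            ).fetchall()
        finally:
            conn.close()
        found[str(db_path)] = [name for (name,) in rows]
    return found
```

Building the URI with `Path.as_uri()` percent-encodes spaces and other special characters in the path, which a hand-written `file:` string would not.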

- Website: getempirica.com

- Issues: GitHub Issues

- Discussions: GitHub Discussions

MIT License — Maximum adoption, aligned with Empirica's transparency principles.

See LICENSE for details.

Author: David S. L. Van Assche

Version: 1.5.0

Built through 800+ sessions of genuine epistemic collaboration between humans and AI.


Alternative AI tools for empirica

Similar Open Source Tools


explain-openclaw

Explain OpenClaw is a comprehensive documentation repository for the OpenClaw framework, a self-hosted AI assistant platform. It covers various aspects such as plain English explanations, technical architecture, deployment scenarios, privacy and safety measures, security audits, worst-case security scenarios, optimizations, and AI model comparisons. The repository serves as a living knowledge base with beginner-friendly explanations and detailed technical insights for contributors.

pollinations

pollinations.ai is an open-source generative AI platform based in Berlin, empowering community projects with accessible text, image, video, and audio generation APIs. It offers a unified API endpoint for various AI generation needs, including text, images, audio, and video. The platform provides features like image generation using models such as Flux, GPT Image, Seedream, and Kontext, video generation with Seedance and Veo, and audio generation with text-to-speech and speech-to-text capabilities. Users can access the platform through a web interface or API, and authentication is managed through API keys. The platform is community-driven, transparent, and ethical, aiming to make AI technology open, accessible, and interconnected while fostering innovation and responsible development.

RepoMaster

RepoMaster is an AI agent that leverages GitHub repositories to solve complex real-world tasks. It transforms how coding tasks are solved by automatically finding the right GitHub tools and making them work together seamlessly. Users can describe their tasks, and RepoMaster's AI analysis leads to auto discovery and smart execution, resulting in perfect outcomes. The tool provides a web interface for beginners and a command-line interface for advanced users, along with specialized agents for deep search, general assistance, and repository tasks.

EnvScaler

EnvScaler is an automated, scalable framework that creates tool-interactive environments for training LLM agents. It consists of SkelBuilder for environment description mining and quality inspection, ScenGenerator for synthesizing multiple environment scenarios, and modules for supervised fine-tuning and reinforcement learning. The tool provides data, models, and evaluation guides for users to build, generate scenarios, collect training data, train models, and evaluate performance. Users can interact with environments, build environments from scratch, and improve LLMs' task-solving abilities in complex environments.

DreamLayer

DreamLayer AI is an open-source Stable Diffusion WebUI designed for AI researchers, labs, and developers. It automates prompts, seeds, and metrics for benchmarking models, datasets, and samplers, enabling reproducible evaluations across multiple seeds and configurations. The tool integrates custom metrics and evaluation pipelines, providing a streamlined workflow for AI research. With features like automated benchmarking, reproducibility, built-in metrics, multi-modal readiness, and researcher-friendly interface, DreamLayer AI aims to simplify and accelerate the model evaluation process.

airunner

AI Runner is a multi-modal AI interface that allows users to run open-source large language models and AI image generators on their own hardware. The tool provides features such as voice-based chatbot conversations, text-to-speech, speech-to-text, vision-to-text, text generation with large language models, image generation capabilities, image manipulation tools, utility functions, and more. It aims to provide a stable and user-friendly experience with security updates, a new UI, and a streamlined installation process. The application is designed to run offline on users' hardware without relying on a web server, offering a smooth and responsive user experience.

jido_ai

Jido.AI is an AI integration layer for the Jido ecosystem, providing a toolkit for building intelligent agents with LLMs. It implements reasoning strategies for tool use, multi-step reasoning, and complex planning to enhance results from language models.

MassGen

MassGen is a cutting-edge multi-agent system that leverages the power of collaborative AI to solve complex tasks. It assigns a task to multiple AI agents who work in parallel, observe each other's progress, and refine their approaches to converge on the best solution to deliver a comprehensive and high-quality result. The system operates through an architecture designed for seamless multi-agent collaboration, with key features including cross-model/agent synergy, parallel processing, intelligence sharing, consensus building, and live visualization. Users can install the system, configure API settings, and run MassGen for various tasks such as question answering, creative writing, research, development & coding tasks, and web automation & browser tasks. The roadmap includes plans for advanced agent collaboration, expanded model, tool & agent integration, improved performance & scalability, enhanced developer experience, and a web interface.

EvoAgentX

EvoAgentX is an open-source framework for building, evaluating, and evolving LLM-based agents or agentic workflows in an automated, modular, and goal-driven manner. It enables developers and researchers to move beyond static prompt chaining or manual workflow orchestration by introducing a self-evolving agent ecosystem. The framework includes features such as agent workflow autoconstruction, built-in evaluation, self-evolution engine, plug-and-play compatibility, comprehensive built-in tools, memory module support, and human-in-the-loop interactions.

pocketpaw

PocketPaw is a lightweight and user-friendly tool designed for managing and organizing your digital assets. It provides a simple interface for users to easily categorize, tag, and search for files across different platforms. With PocketPaw, you can efficiently organize your photos, documents, and other files in a centralized location, making it easier to access and share them. Whether you are a student looking to organize your study materials, a professional managing project files, or a casual user wanting to declutter your digital space, PocketPaw is the perfect solution for all your file management needs.

octocode-mcp

Octocode is a methodology and platform that empowers AI assistants with the skills of a Senior Staff Engineer. It transforms how AI interacts with code by moving from 'guessing' based on training data to 'knowing' based on deep, evidence-based research. The ecosystem includes the Manifest for Research Driven Development, the MCP Server for code interaction, Agent Skills for extending AI capabilities, a CLI for managing agent capabilities, and comprehensive documentation covering installation, core concepts, tutorials, and reference materials.

spaCy

spaCy is an industrial-strength Natural Language Processing (NLP) library in Python and Cython. It incorporates the latest research and is designed for real-world applications. The library offers pretrained pipelines supporting 70+ languages, with advanced neural network models for tasks such as tagging, parsing, named entity recognition, and text classification. It also facilitates multi-task learning with pretrained transformers like BERT, along with a production-ready training system and streamlined model packaging, deployment, and workflow management. spaCy is commercial open-source software released under the MIT license.

llamafarm

LlamaFarm is a comprehensive AI framework that empowers users to build powerful AI applications locally, with full control over costs and deployment options. It provides modular components for RAG systems, vector databases, model management, prompt engineering, and fine-tuning. Users can create differentiated AI products without needing extensive ML expertise, using simple CLI commands and YAML configs. The framework supports local-first development, production-ready components, strategy-based configuration, and deployment anywhere from laptops to the cloud.

dotclaude

A sophisticated multi-agent configuration system for Claude Code that provides specialized agents and command templates to accelerate code review, refactoring, security audits, tech-lead-guidance, and UX evaluations. It offers essential commands, directory structure details, agent system overview, command templates, usage patterns, collaboration philosophy, sync management, advanced usage guidelines, and FAQ. The tool aims to streamline development workflows, enhance code quality, and facilitate collaboration between developers and AI agents.


For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.