tracking-aircraft

This repository provides a demo that tracks aircraft using Redis and Node.js by receiving aircraft transponder broadcasts through a software-defined radio (SDR) and storing them in Redis. The demo includes instructions for setting up the hardware and software components required for tracking aircraft. It consists of four main components: Radio Ingestor, Flight Server, Flight UI, and Redis. The Radio Ingestor captures transponder broadcasts and writes them to a Redis event stream, while the Flight Server consumes the event stream, enriches the data, and provides APIs to query aircraft status. The Flight UI presents flight data to users in map and detail views. Users can run the demo by setting up the hardware, installing SDR software, and running the components using Docker or Node.js.

README:

I wrote a rather elaborate demo that tracks aircraft using Redis and Node.js. It does this by receiving aircraft transponder broadcasts and shoving them into an event stream in Redis.

There is no Internet involved in receiving these broadcasts. Instead, a hardware device called a software-defined radio (SDR) receives these broadcasts from the air and converts them into data we can use. Which is pretty dang cool!

This repo contains the code and instructions you need to get it up and running for yourself.

I also have a talk about this. If you want to check out the slides, they're in here too.

This demo uses a software-defined radio. Despite the word "software" in its name, a software-defined radio is actually a hardware device. So, you'll need to buy an SDR and an antenna to use this demo. No worries, SDRs are cheap.

Here's the load-out I like to use. It includes the SDR dongle and an antenna specifically designed for picking up aircraft transponder broadcasts.

- RTL SDR: https://www.amazon.com/RTL-SDR-Blog-RTL2832U-Software-Defined/dp/B0CD745394/

- ADS-B Antenna: https://www.amazon.com/1090MHz-Antenna-Fiberglass-SMA-Male-Extension/dp/B09BQ4H26L/

If you want something cheaper and more general-purpose (and this is probably the better choice), there are bundles that include the same SDR along with more flexible antennas. This is a good way to go if you want to do other things with your SDR. Which will happen.

You can also make your own antenna if you are ambitious. I'll leave that up to your own googling.

If you choose to make an antenna or to use the kit above, you'll want both legs of the antenna extended/cut to a length of ~69mm. Arrange them so that they are in a line, 180 degrees apart. This, in radio terms, is called a dipole.
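The ~69 mm figure lines up with a quarter of the wavelength at 1090 MHz, the frequency ADS-B transponders broadcast on: each leg of a half-wave dipole is λ/4 = c / (4f). Here is that arithmetic as a small sketch, assuming free-space wavelength and ignoring the small end-effect trimming some builders apply:

```typescript
// Speed of light in a vacuum, in metres per second.
const SPEED_OF_LIGHT_M_PER_S = 299_792_458;

// ADS-B transponders broadcast on 1090 MHz.
const ADSB_FREQUENCY_HZ = 1_090_000_000;

// Length of one leg of a half-wave dipole: a quarter of the free-space wavelength.
// Assumption: no correction for end effects or velocity factor.
function quarterWaveLengthMm(frequencyHz: number): number {
  const wavelengthM = SPEED_OF_LIGHT_M_PER_S / frequencyHz;
  return (wavelengthM / 4) * 1000;
}

const legMm = quarterWaveLengthMm(ADSB_FREQUENCY_HZ);
console.log(`Each dipole leg: ${legMm.toFixed(1)} mm`); // Each dipole leg: 68.8 mm
```

Both legs together come to roughly 137.5 mm, half a wavelength, which is where the name "half-wave dipole" comes from.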

Regardless of which antenna option you go with, you'll want to mount it vertically, since that matches how transponder antennas are mounted on aircraft. Here's a picture of a homemade antenna (not mine) mounted vertically in an attic (not mine):

Thanks for the picture, random Internet stranger!

Installing SDR software can be a bit fiddly. You've been warned. That said, it has gotten easier over the years. Besides, like, Node.js and stuff, you'll need two pieces of software to make this code work: the RTL SDR drivers and dump1090.

The RTL SDR drivers allow your computer to talk to the SDR, and they provide lots of interesting command-line tools to boot. You'll need them to do, well, anything with your SDR.

On Linux:

sudo apt update

sudo apt install rtl-sdr

On macOS:

brew install rtl-sdr

On Windows, you can download the RTL SDR drivers and tools from https://ftp.osmocom.org/binaries/windows/rtl-sdr/. They are built weekly, so get the latest one for your platform, probably the 64-bit one. The download is simply a .ZIP file full of .EXE and .DLL files. Put these files in a folder somewhere on your system and add that folder to your PATH to get it working.

Details on the drivers themselves can be found at https://osmocom.org/projects/rtl-sdr/wiki/Rtl-sdr.

Regardless of your platform, testing is the same. Plug in your SDR and run the following command:

rtl_test

You should get back something like:

Found 1 device(s):
0: Realtek, RTL2838UHIDIR, SN: 00000001
Using device 0: Generic RTL2832U OEM
Detached kernel driver
Found Rafael Micro R820T tuner
Supported gain values (29): 0.0 0.9 1.4 2.7 3.7 7.7 8.7 12.5 14.4 15.7 16.6 19.7 20.7 22.9 25.4 28.0 29.7 32.8 33.8 36.4 37.2 38.6 40.2 42.1 43.4 43.9 44.5 48.0 49.6
[R82XX] PLL not locked!
Sampling at 2048000 S/s.
Info: This tool will continuously read from the device, and report if
samples get lost. If you observe no further output, everything is fine.
Reading samples in async mode...
Allocating 15 zero-copy buffers
lost at least 12 bytes

Hooray! Your drivers are working. Press Ctrl-C to stop and go to the next step.

Dump1090 is software that uses an RTL SDR to listen to aircraft transponder broadcasts, present them to the user, and make them available over a socket connection. The code in this repo uses that socket connection to read the broadcasts.
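One detail worth knowing if you read that socket yourself: TCP delivers a byte stream, not messages, so a single read can hold half a line or several lines at once. Below is a minimal, hypothetical line-buffering helper (not code from this repo) that accumulates chunks and hands back only complete lines, tolerating both `\n` and `\r\n` endings:

```typescript
// Hypothetical helper: turns arbitrary text chunks from a socket into whole lines.
class LineSplitter {
  // Text received so far that does not yet end in a newline.
  private buffer = "";

  // Feed one decoded chunk; returns every line it completed (empty lines dropped).
  push(chunk: string): string[] {
    this.buffer += chunk;
    const parts = this.buffer.split(/\r?\n/);
    // The last element is an unfinished line, or "" if the chunk ended on a newline.
    this.buffer = parts.pop() ?? "";
    return parts.filter((line) => line.length > 0);
  }
}
```

In Node.js this would typically be fed from a `net.Socket` connected to dump1090 with `socket.setEncoding("utf8")`, calling `push` from the socket's `"data"` handler. Keeping the unfinished tail in a buffer is what stops a message that straddles two reads from being parsed as two broken ones.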

On Linux:

sudo apt install dump1090-mutability

You might be asked if you want to install it so that it always runs. I've only ever said no.

On macOS:

brew install dump1090-mutability

Windows has a lot of ports of dump1090. I like to use this one: https://github.com/MalcolmRobb/dump1090.

Just download the .ZIP file in the root of the repository (yes, it's really 10 years old) and unzip it into a folder of your choice. From there, you can run it from that folder or, if you prefer, add it to your PATH.

You should now be able to run dump1090 using the following command:

dump1090 --net --interactive

If you installed on Linux, you'll need to run this instead:

dump1090-mutability --net --interactive

If you have your antenna attached, aircraft should start showing up. Here are some that I found today while writing this README:

Hex     Mode  Sqwk  Flight   Alt    Spd  Hdg  Lat     Long     RSSI   Msgs  Ti/
-------------------------------------------------------------------------------
AD4C2A  S                                                      -39.6  3     1
A097CE  S                    40000                             -32.5  11    0
06A1DA  S                    47000                             -33.4  7     0
A71ABB  S     7143           43000  476  272                   -33.1  18    1
A280FE  S                    40000  443  299  40.346  -83.589  -35.5  5     0
A1FF90  S                    4000   230  294  39.920  -83.152  -28.2  13    0
A59398  S     1512  AAL464   34975  472  082  40.013  -82.266  -27.4  55    0
A05544  S                    43000  474  107  40.163  -82.640  -29.5  21    2
A66312  S     1200  OSU51    1700   90   270  40.091  -83.082  -25.2  49    0
A537ED  S     6646  RPA4723  31000  439  103  39.867  -82.767  -28.0  100   0
A05AAC  S     6616  UCA4824  31000  431  102  39.984  -82.297  -23.8  39    0
A16B47  S                    41000  491  045  40.486  -83.588  -31.7  39    1
A69939  S     1546  EJA524   5925   240  275  39.909  -82.957  -25.0  64    0
AC0B74  S           SWA4635  36000  455  267  40.523  -83.089  -28.6  94    0
A0B990  S     6036           40000  423  322  39.488  -83.482  -26.7  56    0
C07C7A  S     7276           37000  467  053  40.124  -82.966  -19.3  56    0

Now that we have the fiddly bits working, we can get the demo running. The demo itself is made up of four components: the Radio Ingestor, the Flight Server, the Flight UI, and Redis.

graph LR
    subgraph "Atlanta"
        ANT1{Antenna} --> SDR1((RTL SDR)) --> DMP1[dump1090] --> ING1("Radio Ingestor")
    end
    subgraph "Columbus"
        ANT2{Antenna} --> SDR2((RTL SDR)) --> DMP2[dump1090] --> ING2("Radio Ingestor")
    end
    subgraph "Denver"
        ANT3{Antenna} --> SDR3((RTL SDR)) --> DMP3[dump1090] --> ING3("Radio Ingestor")
    end
    ING1 --> RED[Redis]
    ING2 --> RED
    ING3 --> RED
    RED <--> SRV("Flight Server")
    subgraph "Consumer"
        SRV <--> WEB("Flight UI")
    end

The purpose of the Radio Ingestor is to take transponder broadcasts and write them to a Redis event stream. It is designed so that multiple instances can run at the same time, feeding aircraft spots into Redis from multiple, geographically dispersed locations.
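To make the multi-ingestor idea concrete, here is a hedged sketch of the arguments an ingestor could send with Redis `XADD`. The stream key, the `station` field, and the event field names are all hypothetical, not the repo's actual schema. Passing `*` as the entry ID lets Redis assign IDs itself, so entries from Atlanta, Columbus, and Denver writing to the same stream still get strictly increasing IDs.

```typescript
// Hypothetical event shape: field names are illustrative, not the repo's schema.
type SpotEvent = { [field: string]: string | number | boolean | undefined };

// Build the XADD arguments (everything after the command name).
// "*" asks Redis to generate the entry ID; the hypothetical "station" field
// records which ingestor heard the broadcast.
function buildXaddArgs(streamKey: string, station: string, event: SpotEvent): string[] {
  const args: string[] = [streamKey, "*", "station", station];
  for (const field of Object.keys(event)) {
    const value = event[field];
    // Stream entries only hold field/value strings, so absent values are skipped.
    if (value !== undefined) args.push(field, String(value));
  }
  return args;
}
```

With a client such as node-redis, the result could be sent as `client.sendCommand(["XADD", ...args])`. Skipping undefined fields keeps each entry small, since most individual transponder messages carry only a few fields.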

The Flight Server consumes the event stream, enriches it, and saves current flight statuses to Redis. It also publishes the enriched data over a WebSocket and provides simple HTTP APIs to query aircraft status and stats.
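Saving a "current flight status" is less trivial than it sounds, because each transponder message usually carries only some of an aircraft's fields (an identification message has a callsign but no position; a position message has coordinates but no callsign). One plausible approach, sketched here with hypothetical types and not taken from the repo, is to fold each spot into the previous status and keep old values for anything the new spot lacks:

```typescript
// Hypothetical per-aircraft status; the repo's actual JSON document shape may differ.
interface AircraftStatus {
  icaoId: string;
  callsign?: string;
  altitude?: number;
  latitude?: number;
  longitude?: number;
  lastSeen: number; // epoch milliseconds of the most recent message
  messageCount: number;
}

// One decoded message: only the ICAO ID is guaranteed to be present.
interface Spot {
  icaoId: string;
  callsign?: string;
  altitude?: number;
  latitude?: number;
  longitude?: number;
}

// Merge a spot into the previous status. Missing fields keep their old values,
// so a position-only message does not erase a callsign learned earlier.
function mergeSpot(previous: AircraftStatus | undefined, spot: Spot, now: number): AircraftStatus {
  return {
    icaoId: spot.icaoId,
    callsign: spot.callsign ?? previous?.callsign,
    altitude: spot.altitude ?? previous?.altitude,
    latitude: spot.latitude ?? previous?.latitude,
    longitude: spot.longitude ?? previous?.longitude,
    lastSeen: now,
    messageCount: (previous?.messageCount ?? 0) + 1,
  };
}
```

Usage: `mergeSpot(undefined, { icaoId: "A59398", callsign: "AAL464" }, Date.now())` creates a status, and later position-only spots for the same ICAO ID fill in altitude and coordinates without losing the callsign.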

The Flight UI presents flight data to the end user, providing both map and detail views. It is designed to work alongside the Flight Server and is useless without it.

In a dedicated window, launch dump1090 with one of the following commands:

dump1090 --net --interactive # for Mac or Windows

dump1090-mutability --net --interactive # for Linux

The --net option tells dump1090 to publish transponder broadcasts on port 30003. The --interactive option just makes it prettier.
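What dump1090 serves on port 30003 is the SBS-1 ("BaseStation") text format: one comma-separated line per message, 22 fields, with fields left empty when a given message type doesn't carry them. The sketch below parses the fields most useful for tracking. It is an illustration, not the Radio Ingestor's actual parser, and the sample line in the test is hand-written, not a captured broadcast.

```typescript
// Subset of an SBS-1 (BaseStation) "MSG" line kept by this sketch.
// Empty fields mean the value was not carried by that particular message.
interface SbsMessage {
  transmissionType: number;
  icaoId: string;
  callsign?: string;
  altitude?: number;
  groundSpeed?: number;
  track?: number;
  latitude?: number;
  longitude?: number;
  verticalRate?: number;
  squawk?: string;
  onGround?: boolean;
}

// Empty or non-numeric field -> undefined; otherwise the parsed number.
function toNumber(field: string): number | undefined {
  const trimmed = field.trim();
  if (trimmed === "") return undefined;
  const value = Number(trimmed);
  return isNaN(value) ? undefined : value;
}

// Empty field -> undefined; otherwise trimmed text (callsigns may be space-padded).
function toText(field: string): string | undefined {
  const trimmed = field.trim();
  return trimmed === "" ? undefined : trimmed;
}

// Parse one line from port 30003; returns null for non-MSG or short lines.
function parseSbsLine(line: string): SbsMessage | null {
  const fields = line.trim().split(",");
  if (fields.length < 22 || fields[0] !== "MSG") return null;
  // Index helper that always yields a string.
  const at = (i: number): string => fields[i] ?? "";
  const ground = toText(at(21));
  return {
    transmissionType: Number(at(1)),
    icaoId: at(4).trim().toUpperCase(),
    callsign: toText(at(10)),
    altitude: toNumber(at(11)),
    groundSpeed: toNumber(at(12)),
    track: toNumber(at(13)),
    latitude: toNumber(at(14)),
    longitude: toNumber(at(15)),
    verticalRate: toNumber(at(16)),
    squawk: toText(at(17)), // kept as text so leading zeros survive
    // BaseStation flags are usually "0" (false) or "-1" (true).
    onGround: ground === undefined ? undefined : ground !== "0",
  };
}
```

Keeping absent fields as `undefined` rather than `0` matters: an altitude of 0 and "no altitude in this message" are very different things to whatever merges these messages later.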

Now, from the root of this repo, run:

docker compose up --build

This will download Redis, build all the components, and start them up with defaults that will work. No fuss. No muss.

Once it's started, point your browser at http://localhost:8000 and watch the aircraft move about.

Redis is where we're storing our aircraft spots. If you're not going with the quickstart, you'll need Redis somewhere. You can install it locally, run it in Docker, or use Redis Cloud.

- To install Redis locally, follow the instructions at https://redis.io/docs/latest/operate/oss_and_stack/install/install-stack/.

- To use Docker, run the following command:

  docker run -d --name redis-stack-server -p 6379:6379 redis/redis-stack-server:latest

- To use Redis Cloud, sign up for a free account at https://cloud.redis.io/.

You might also want to snag Redis Insight so you can see what Redis is doing. You can find that on the App Store, the Microsoft Store, or directly from Redis.

To run the Radio Ingestor, you'll need an existing Redis instance. This might be local, but will probably be a Redis Cloud instance.

Before you run the Radio Ingestor, it must be configured. Details are in the sample.env file in the radio-ingestor folder. However, the tl;dr is:

cd radio-ingestor

cp sample.env .env

Then edit the Redis options in the .env file to point to your Redis instance.

To run the Radio Ingestor, you can just use Docker:

cd radio-ingestor

docker compose up --build

If you'd rather run it using Node.js directly, make sure you have Node.js installed and run the following commands:

cd radio-ingestor

npm install

npm run build

npm start

If you'd like to run it in dev mode instead, you can skip the build:

cd radio-ingestor

npm install

npm run dev

You should be able to see an event stream in Redis (using Redis Insight, of course) populating with aircraft transponder events.

To run the Flight Server, you'll need an existing Redis instance. This could be a local instance of Redis, but will probably be a shared instance like Redis Cloud. This instance should be fed by one or more instances of a Radio Ingestor. Technically, this'll work even if the instance isn't being fed, but it won't be very interesting. Nothing in. Nothing out.

Before you run the Flight Server, it must be configured. Details are in the sample.env file in the flight-server folder. However, the tl;dr is:

cd flight-server

cp sample.env .env

Then edit the Redis options in the .env file to point to your Redis instance.

To run the Flight Server, you can just use Docker:

cd flight-server

docker compose up --build

If you'd rather run it using Node.js directly, make sure you have Node.js installed and run the following commands:

cd flight-server

npm install

npm run build

npm start

If you'd like to run it in dev mode instead, you can skip the build:

cd flight-server

npm install

npm run dev

If these instructions seem familiar, that's because I copied them directly from the Radio Ingestor instructions. It's the same process.

Regardless, if you look in Redis Insight, you should see a lot more keys, including JSON documents for each of the aircraft spotted; T-Digests gathering stats about altitude, velocity, and climb; a HyperLogLog counting unique aircraft; and a humble little string counting the number of messages received.
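Each of those key types corresponds to a specific Redis command: `JSON.SET` for the per-aircraft JSON documents (RedisJSON), `TDIGEST.ADD` for the T-Digests, `PFADD` for the HyperLogLog, and `INCR` for the message counter; the redis-stack-server image used above includes the JSON and T-Digest modules. The sketch below builds those commands for one updated aircraft status. Every key name is hypothetical; check Redis Insight for the names the Flight Server really uses.

```typescript
// Hypothetical status shape; "climb" is taken to mean vertical rate.
interface Status {
  icaoId: string;
  altitude?: number;
  groundSpeed?: number;
  verticalRate?: number;
}

// Build the Redis commands that would record one updated status (hypothetical keys).
// Note: TDIGEST.ADD expects the key to exist already (created with TDIGEST.CREATE).
function commandsForStatus(status: Status): string[][] {
  const commands: string[][] = [
    // One JSON document per aircraft, written at the root path "$".
    ["JSON.SET", `aircraft:${status.icaoId}`, "$", JSON.stringify(status)],
    // HyperLogLog: approximate count of distinct aircraft IDs.
    ["PFADD", "stats:unique-aircraft", status.icaoId],
    // Plain string counter of messages received.
    ["INCR", "stats:message-count"],
  ];
  // Feed each available measurement into its own T-Digest.
  const digests: Array<[string, number | undefined]> = [
    ["stats:altitude", status.altitude],
    ["stats:velocity", status.groundSpeed],
    ["stats:climb", status.verticalRate],
  ];
  for (const [key, value] of digests) {
    if (value !== undefined) commands.push(["TDIGEST.ADD", key, String(value)]);
  }
  return commands;
}
```

A HyperLogLog fits "unique aircraft" well because it counts distinct ICAO IDs in a small, fixed amount of memory at the cost of a small estimation error, and T-Digests likewise give approximate percentiles without storing every observation.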

The Flight UI does not need to be configured. It's all ready to go. However, it is hard-coded to look for the Flight Server on localhost:8080 and to expose itself on port 8000 when run in developer or preview mode. Once you compile it, you can serve up the files on any port you'd like.

At some point, I'd like to make the Flight Server host and port configurable. But this is what we have for now.

To run the Flight UI, you can just use Docker. Docker will compile the site and then host it internally on an NGINX instance:

cd flight-ui

docker compose up --build

If you'd rather run it using Node.js and Vite, make sure you have Node.js installed and run the following commands:

cd flight-ui

npm install

npm run build

npm run preview

If you'd like to run it in dev mode instead, you can skip the build:

cd flight-ui

npm install

npm run dev

In any of these cases, point your browser at http://localhost:8000 and you should see an aircraft map.

That's pretty much it. If you see a bug, a typo, or some small improvements, feel free to send a PR. If you see something big or would like to make major improvements, reach out and let's discuss it.

I hope you find this fun and instructive. Happy spotting!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for tracking-aircraft

Similar Open Source Tools

tracking-aircraft

This repository provides a demo that tracks aircraft using Redis and Node.js by receiving aircraft transponder broadcasts through a software-defined radio (SDR) and storing them in Redis. The demo includes instructions for setting up the hardware and software components required for tracking aircraft. It consists of four main components: Radio Ingestor, Flight Server, Flight UI, and Redis. The Radio Ingestor captures transponder broadcasts and writes them to a Redis event stream, while the Flight Server consumes the event stream, enriches the data, and provides APIs to query aircraft status. The Flight UI presents flight data to users in map and detail views. Users can run the demo by setting up the hardware, installing SDR software, and running the components using Docker or Node.js.

maxheadbox

Max Headbox is an open-source voice-activated LLM Agent designed to run on a Raspberry Pi. It can be configured to execute a variety of tools and perform actions. The project requires specific hardware and software setups, and provides detailed instructions for installation, configuration, and usage. Users can create custom tools by making JavaScript modules and backend API handlers. The project acknowledges the use of various open-source projects and resources in its development.

ai-voice-cloning

This repository provides a tool for AI voice cloning, allowing users to generate synthetic speech that closely resembles a target speaker's voice. The tool is designed to be user-friendly and accessible, with a graphical user interface that guides users through the process of training a voice model and generating synthetic speech. The tool also includes a variety of features that allow users to customize the generated speech, such as the pitch, volume, and speaking rate. Overall, this tool is a valuable resource for anyone interested in creating realistic and engaging synthetic speech.

aiarena-web

aiarena-web is a website designed for running the aiarena.net infrastructure. It consists of different modules such as core functionality, web API endpoints, frontend templates, and a module for linking users to their Patreon accounts. The website serves as a platform for obtaining new matches, reporting results, featuring match replays, and connecting with Patreon supporters. The project is licensed under GPLv3 in 2019.

AlwaysReddy

AlwaysReddy is a simple LLM assistant with no UI that you interact with entirely using hotkeys. It can easily read from or write to your clipboard, and voice chat with you via TTS and STT. Here are some of the things you can use AlwaysReddy for: - Explain a new concept to AlwaysReddy and have it save the concept (in roughly your words) into a note. - Ask AlwaysReddy "What is X called?" when you know how to roughly describe something but can't remember what it is called. - Have AlwaysReddy proofread the text in your clipboard before you send it. - Ask AlwaysReddy "From the comments in my clipboard, what do the r/LocalLLaMA users think of X?" - Quickly list what you have done today and get AlwaysReddy to write a journal entry to your clipboard before you shutdown the computer for the day.

llamafile

llamafile is a tool that enables users to distribute and run Large Language Models (LLMs) with a single file. It combines llama.cpp with Cosmopolitan Libc to create a framework that simplifies the complexity of LLMs into a single-file executable called a 'llamafile'. Users can run these executable files locally on most computers without the need for installation, making open LLMs more accessible to developers and end users. llamafile also provides example llamafiles for various LLM models, allowing users to try out different LLMs locally. The tool supports multiple CPU microarchitectures, CPU architectures, and operating systems, making it versatile and easy to use.

Open-LLM-VTuber

Open-LLM-VTuber is a project in early stages of development that allows users to interact with Large Language Models (LLM) using voice commands and receive responses through a Live2D talking face. The project aims to provide a minimum viable prototype for offline use on macOS, Linux, and Windows, with features like long-term memory using MemGPT, customizable LLM backends, speech recognition, and text-to-speech providers. Users can configure the project to chat with LLMs, choose different backend services, and utilize Live2D models for visual representation. The project supports perpetual chat, offline operation, and GPU acceleration on macOS, addressing limitations of existing solutions on macOS.

openui

OpenUI is a tool designed to simplify the process of building UI components by allowing users to describe UI using their imagination and see it rendered live. It supports converting HTML to React, Svelte, Web Components, etc. The tool is open source and aims to make UI development fun, fast, and flexible. It integrates with various AI services like OpenAI, Groq, Gemini, Anthropic, Cohere, and Mistral, providing users with the flexibility to use different models. OpenUI also supports LiteLLM for connecting to various LLM services and allows users to create custom proxy configs. The tool can be run locally using Docker or Python, and it offers a development environment for quick setup and testing.

polis

Polis is an AI powered sentiment gathering platform that offers a more organic approach than surveys and requires less effort than focus groups. It provides a comprehensive wiki, main deployment at https://pol.is, discussions, issue tracking, and project board for users. Polis can be set up using Docker infrastructure and offers various commands for building and running containers. Users can test their instance, update the system, and deploy Polis for production. The tool also provides developer conveniences for code reloading, type checking, and database connections. Additionally, Polis supports end-to-end browser testing using Cypress and offers troubleshooting tips for common Docker and npm issues.

ultravox

Ultravox is a fast multimodal Language Model (LLM) that can understand both text and human speech in real-time without the need for a separate Audio Speech Recognition (ASR) stage. By extending Meta's Llama 3 model with a multimodal projector, Ultravox converts audio directly into a high-dimensional space used by Llama 3, enabling quick responses and potential understanding of paralinguistic cues like timing and emotion in human speech. The current version (v0.3) has impressive speed metrics and aims for further enhancements. Ultravox currently converts audio to streaming text and plans to emit speech tokens for direct audio conversion. The tool is open for collaboration to enhance this functionality.

AI-Horde-Worker

AI-Horde-Worker is a repository containing the original reference implementation for a worker that turns your graphics card(s) into a worker for the AI Horde. It allows users to generate or alchemize images for others. The repository provides instructions for setting up the worker on Windows and Linux, updating the worker code, running with multiple GPUs, and stopping the worker. Users can configure the worker using a WebUI to connect to the horde with their username and API key. The repository also includes information on model usage and running the Docker container with specified environment variables.

fasttrackml

FastTrackML is an experiment tracking server focused on speed and scalability, fully compatible with MLFlow. It provides a user-friendly interface to track and visualize your machine learning experiments, making it easy to compare different models and identify the best performing ones. FastTrackML is open source and can be easily installed and run with pip or Docker. It is also compatible with the MLFlow Python package, making it easy to integrate with your existing MLFlow workflows.

python-sc2

python-sc2 is an easy-to-use library for writing AI Bots for StarCraft II in Python 3. It aims for simplicity and ease of use while providing both high and low level abstractions. The library covers only the raw scripted interface and intends to help new bot authors with added functions. Users can install the library using pip and need a StarCraft II executable to run bots. The API configuration options allow users to customize bot behavior and performance. The community provides support through Discord servers, and users can contribute to the project by creating new issues or pull requests following style guidelines.

Demucs-Gui

Demucs GUI is a graphical user interface for the music separation project Demucs. It aims to allow users without coding experience to easily separate tracks. The tool provides a user-friendly interface for running the Demucs project, which originally used the scientific library torch. The GUI simplifies the process of separating tracks and provides support for different platforms such as Windows, macOS, and Linux. Users can donate to support the development of new models for the project, and the tool has specific system requirements including minimum system versions and hardware specifications.

ai-town

AI Town is a virtual town where AI characters live, chat, and socialize. This project provides a deployable starter kit for building and customizing your own version of AI Town. It features a game engine, database, vector search, auth, text model, deployment, pixel art generation, background music generation, and local inference. You can customize your own simulation by creating characters and stories, updating spritesheets, changing the background, and modifying the background music.

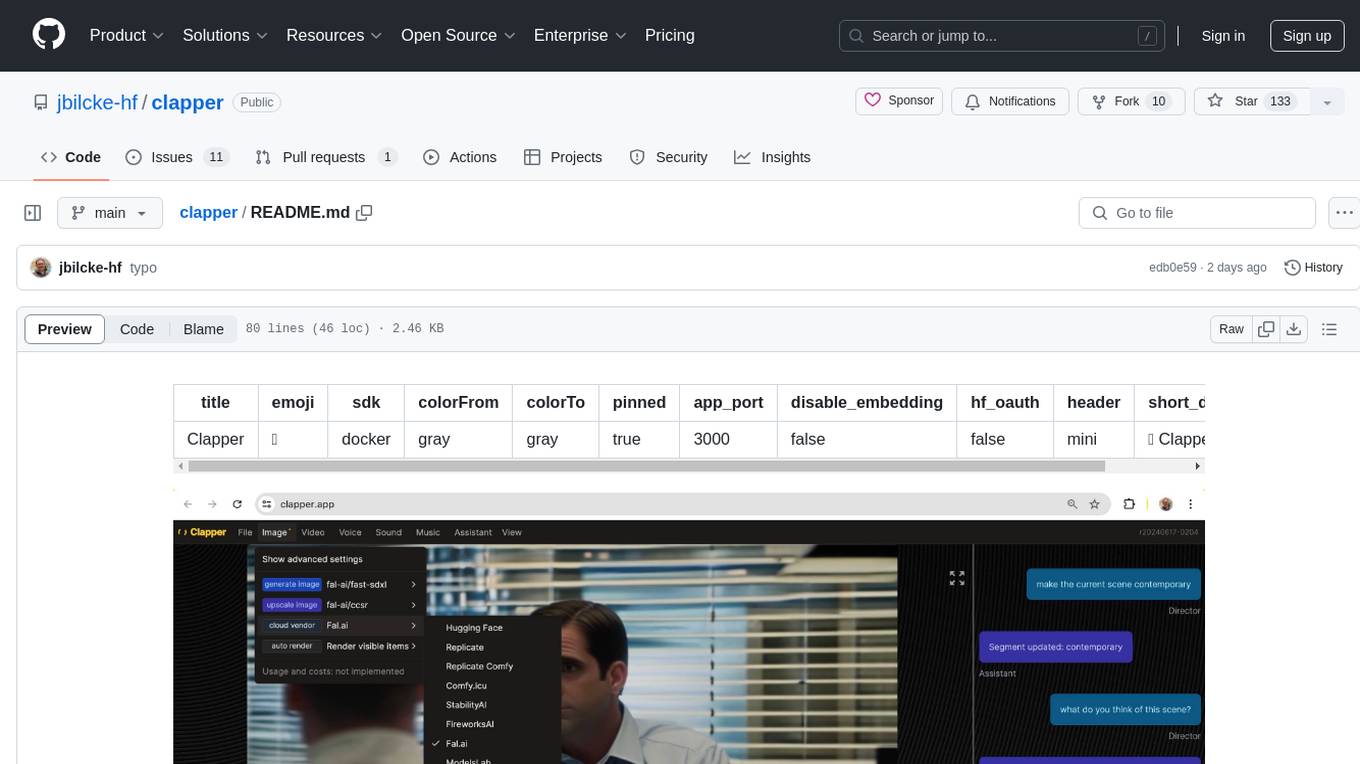

clapper

Clapper is an open-source AI story visualization tool that can interpret screenplays and render them into storyboards, videos, voice, sound, and music. It is currently in early development stages and not recommended for general use due to some non-functional features and lack of tutorials. A public alpha version is available on Hugging Face's platform. Users can sponsor specific features through bounties and developers can contribute to the project under the GPL v3 license. The tool lacks automated tests and code conventions like Prettier or a Linter.

For similar tasks

tracking-aircraft

This repository provides a demo that tracks aircraft using Redis and Node.js by receiving aircraft transponder broadcasts through a software-defined radio (SDR) and storing them in Redis. The demo includes instructions for setting up the hardware and software components required for tracking aircraft. It consists of four main components: Radio Ingestor, Flight Server, Flight UI, and Redis. The Radio Ingestor captures transponder broadcasts and writes them to a Redis event stream, while the Flight Server consumes the event stream, enriches the data, and provides APIs to query aircraft status. The Flight UI presents flight data to users in map and detail views. Users can run the demo by setting up the hardware, installing SDR software, and running the components using Docker or Node.js.

airplanes-live-app

The airplanes.live App is an Android application designed for users to view aircraft on a map, search for aircraft by various criteria, receive notifications for specific events, and access shortcuts for specific views. The app provides features such as SQUAWK alerts and ICAO alerts to keep users informed about aircraft activities. Users can download the app from GitHub or F-Droid (IzzyOnDroid) to enjoy its functionalities. The code is available under a GPL v3 license, excluding certain assets and materials like icons, logos, and documentation.

For similar jobs

tracking-aircraft

This repository provides a demo that tracks aircraft using Redis and Node.js by receiving aircraft transponder broadcasts through a software-defined radio (SDR) and storing them in Redis. The demo includes instructions for setting up the hardware and software components required for tracking aircraft. It consists of four main components: Radio Ingestor, Flight Server, Flight UI, and Redis. The Radio Ingestor captures transponder broadcasts and writes them to a Redis event stream, while the Flight Server consumes the event stream, enriches the data, and provides APIs to query aircraft status. The Flight UI presents flight data to users in map and detail views. Users can run the demo by setting up the hardware, installing SDR software, and running the components using Docker or Node.js.

airplanes-live-app

The airplanes.live App is an Android application designed for users to view aircraft on a map, search for aircraft by various criteria, receive notifications for specific events, and access shortcuts for specific views. The app provides features such as SQUAWK alerts and ICAO alerts to keep users informed about aircraft activities. Users can download the app from GitHub or F-Droid (IzzyOnDroid) to enjoy its functionalities. The code is available under a GPL v3 license, excluding certain assets and materials like icons, logos, and documentation.

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.