awesome-generative-information-retrieval

Stars: 552

This repository contains a curated list of resources on generative information retrieval, including research papers, datasets, tools, and applications. Generative information retrieval is a subfield of information retrieval that uses generative models to generate new documents or passages of text that are relevant to a given query. This can be useful for a variety of tasks, such as question answering, summarization, and document generation. The resources in this repository are intended to help researchers and practitioners stay up-to-date on the latest advances in generative information retrieval.

README:

Conversational models have started to access the web or back up their claims with sources (a.k.a. attribution). These chatbots are thus arguably information retrieval machines, competing against or even substituting for traditional search engines. We would like to dedicate a space to these models but also to the more general field of generative information retrieval. We tentatively divide the field into two main topics: Grounded Answer Generation and Generative Document Retrieval. We also include generative recommendation, generative grounded summarization, etc.

Pull-requests welcome!

- Blog Posts

- Datasets

- Tools

- Evaluation

- Workshops and Tutorials

- Epistemology Papers

- Grounded Answer Generation

- Retrieval Augmented Generation (RAG) (external grounding/retrieval at inference time)

- LLM Memory Manipulation (grounded in internal model weights at inference time)

- Fact Uncertainty Estimates

- Constrained Generation

- Data Centric

- Utility Maximization

- Multimodal

- Prompting

- Generate Code

- Query Generation

- Summarization and Document Rewriting

- Table QA

- Generative Document Retrieval

- Generative Recommendation

- Generative Knowledge Graphs

- Live Generative Retrieval

Deterministic Quoting: Making LLMs Safer for Healthcare

Matt Yeung

Personal Blog – Apr 2024 [link]

Retrieval Augmented Generation Research: 2017-2024

Moritz Mallawitsch

Scaling Knowledge – Feb 2024 [link]

Mastering RAG: How To Architect An Enterprise RAG System

Pratik Bhavsar

Galileo Labs – Jan 2024 [link]

Running Mixtral 8x7 locally with LlamaIndex

LlamaIndex

LlamaIndex Blog – Dec 2023 [link]

Advanced RAG Techniques: an Illustrated Overview

Ivan Ilin

Towards AI – Dec 2023 [link]

Multimodal RAG pipeline with LlamaIndex and Neo4j

Tomaz Bratanic

LlamaIndex Blog – Dec 2023 [link]

Benchmarking RAG on tables

LangChain

LangChain Blog – Dec 2023 [link]

Advanced RAG 01: Small-to-Big Retrieval

Sophia Yang

Towards Data Science – Nov 2023 [link]

Query Transformations

LangChain

LangChain Blog – Oct 2023 [link]

What Makes a Dialog Agent Useful?

Nazneen Rajani, Nathan Lambert, Victor Sanh, Thomas Wolf

Hugging Face Blog – Jan 2023 [link]

Forecasting potential misuses of language models for disinformation campaigns and how to reduce risk

Josh A. Goldstein, Girish Sastry, Micah Musser, Renée DiResta, Matthew Gentzel, Katerina Sedova

OpenAI Blog – Jan 2023 [link]

FreshLLMs: Refreshing Large Language Models with Search Engine Augmentation

Tu Vu, Mohit Iyyer, Xuezhi Wang, Noah Constant, Jerry Wei, Jason Wei, Chris Tar, Yun-Hsuan Sung, Denny Zhou, Quoc Le, Thang Luong

arXiv – Oct 2023 [paper] [code]

LegalBench: A Collaboratively Built Benchmark for Measuring Legal Reasoning in Large Language Models

Neel Guha, Julian Nyarko, Daniel E. Ho, Christopher Ré, Adam Chilton, Aditya Narayana, Alex Chohlas-Wood, Austin Peters, Brandon Waldon, Daniel N. Rockmore, Diego Zambrano, Dmitry Talisman, Enam Hoque, Faiz Surani, Frank Fagan, Galit Sarfaty, Gregory M. Dickinson, Haggai Porat, Jason Hegland, Jessica Wu, Joe Nudell, Joel Niklaus, John Nay, Jonathan H. Choi, Kevin Tobia, Margaret Hagan, Megan Ma, Michael Livermore, Nikon Rasumov-Rahe, Nils Holzenberger, Noam Kolt, Peter Henderson, Sean Rehaag, Sharad Goel, Shang Gao, Spencer Williams, Sunny Gandhi, Tom Zur, Varun Iyer, Zehua Li

arXiv – Aug 2023 [paper] [dataset]

OpenAssistant Conversations - Democratizing Large Language Model Alignment

Andreas Köpf, Yannic Kilcher, Dimitri von Rütte, Sotiris Anagnostidis, Zhi-Rui Tam, Keith Stevens, Abdullah Barhoum, Nguyen Minh Duc, Oliver Stanley, Richárd Nagyfi, Shahul ES, Sameer Suri, David Glushkov, Arnav Dantuluri, Andrew Maguire, Christoph Schuhmann, Huu Nguyen, Alexander Mattick

arXiv – Apr 2023 [paper]

ChatGPT-RetrievalQA

Arian Askari, Mohammad Aliannejadi, Evangelos Kanoulas, Suzan Verberne

GitHub – Feb 2023 [code]

KAMEL: Knowledge Analysis with Multitoken Entities in Language Models

Jan-Christoph Kalo, Leandra Fichtel

AKBC 22 – [paper]

TruthfulQA: Measuring How Models Mimic Human Falsehoods

Stephanie Lin, Jacob Hilton, Owain Evans

arXiv – Sep 2021 [paper] [code]

Complex Answer Retrieval

Laura Dietz, Manisha Verma, Filip Radlinski, Nick Craswell, Ben Gamari, Jeff Dalton, John Foley

TREC – 2017-2019 [link]

Narrowing the Knowledge Evaluation Gap: Open-Domain Question Answering with Multi-Granularity Answers

Gal Yona, Roee Aharoni, Mor Geva

arXiv – Jan 2024 [paper]

DHS LLM Workshop - Module 6

Sourab Mangrulkar

GitHub – Dec 2023 [code]

PrimeQA: The Prime Repository for State-of-the-Art Multilingual Question Answering Research and Development

Avirup Sil, Jaydeep Sen, Bhavani Iyer, Martin Franz, Kshitij Fadnis, Mihaela Bornea, Sara Rosenthal, Scott McCarley, Rong Zhang, Vishwajeet Kumar, Yulong Li, Md Arafat Sultan, Riyaz Bhat, Radu Florian, Salim Roukos

arXiv – Jan 2023 [paper] [code]

TRL: Transformer Reinforcement Learning

Leandro von Werra, Younes Belkada, Lewis Tunstall, Edward Beeching, Tristan Thrush, Nathan Lambert, Shengyi Huang

GitHub – 2020 [code]

FACTSCORE: Fine-grained Atomic Evaluation of Factual Precision in Long Form Text Generation

Sewon Min, Kalpesh Krishna, Xinxi Lyu, Mike Lewis, Wen-tau Yih, Pang Wei Koh, Mohit Iyyer, Luke Zettlemoyer, Hannaneh Hajishirzi

PyPI – May 2023 [paper] [code]

FACTKB: Generalizable Factuality Evaluation using Language Models Enhanced with Factual Knowledge

Shangbin Feng, Vidhisha Balachandran, Yuyang Bai, Yulia Tsvetkov

arXiv – May 2023 [paper] [code]

Evaluating Verifiability in Generative Search Engines

Nelson F. Liu, Tianyi Zhang, Percy Liang

arXiv – Apr 2023 [paper] [code]

Workshop on Generative AI for Recommender Systems and Personalization

Narges Tabari, Aniket Deshmukh, Wang-Cheng Kang, Rashmi Gangadharaiah, Hamed Zamani, Julian McAuley, George Karypis

KDD 24 – Aug 2024 [link]

Second Workshop on Generative Information Retrieval

Gabriel Bénédict, Ruqing Zhang, Donald Metzler, Andrew Yates, Ziyan Jiang

SIGIR 24 – Jul 2024 [link]

Personalized Generative AI

Zheng Chen, Ziyan Jiang, Fan Yang, Zhankui He, Yupeng Hou, Eunah Cho, Julian McAuley, Aram Galstyan, Xiaohua Hu, Jie Yang

CIKM 23 – Oct 2023 [link]

First Workshop on Recommendation with Generative Models

Wenjie Wang, Yong Liu, Yang Zhang, Weiwen Liu, Fuli Feng, Xiangnan He, Aixin Sun

CIKM 23 – Oct 2023 [link]

First Workshop on Generative Information Retrieval

Gabriel Bénédict, Ruqing Zhang, Donald Metzler

SIGIR 23 – Jul 2023 [link]

Retrieval-based Language Models and Applications

Akari Asai, Sewon Min, Zexuan Zhong, Danqi Chen

ACL 23 – Jul 2023 [link]

Recite, Reconstruct, Recollect: Memorization in LMs as a Multifaceted Phenomenon

USVSN Sai Prashanth, Alvin Deng, Kyle O'Brien, Jyothir S V, Mohammad Aflah Khan, Jaydeep Borkar, Christopher A. Choquette-Choo, Jacob Ray Fuehne, Stella Biderman, Tracy Ke, Katherine Lee, Naomi Saphra

arXiv – Jun 2024 [paper]

ChatGPT is bullshit

Michael Townsen Hicks, James Humphries, Joe Slater

Ethics Inf Technol – Jun 2024 [paper]

Hallucination of Multimodal Large Language Models: A Survey

Zechen Bai, Pichao Wang, Tianjun Xiao, Tong He, Zongbo Han, Zheng Zhang, Mike Zheng Shou

arXiv – Apr 2024 [paper]

From Matching to Generation: A Survey on Generative Information Retrieval

Xiaoxi Li, Jiajie Jin, Yujia Zhou, Yuyao Zhang, Peitian Zhang, Yutao Zhu, and Zhicheng Dou

arXiv – Apr 2024 [paper]

Knowledge Conflicts for LLMs: A Survey

Rongwu Xu, Zehan Qi, Cunxiang Wang, Hongru Wang, Yue Zhang, Wei Xu

arXiv – Mar 2024 [paper]

Report on the 1st Workshop on Generative Information Retrieval (Gen-IR 2023) at SIGIR 2023

Gabriel Bénédict, Ruqing Zhang, Donald Metzler, Andrew Yates, Romain Deffayet, Philipp Hager, Sami Jullien

SIGIR Forum – Dec 2023 [paper]

Report on the 1st Workshop on Task Focused IR in the Era of Generative AI

Chirag Shah, Ryen W. White

SIGIR Forum – Dec 2023 [paper]

Towards Generative Search and Recommendation: A keynote at RecSys 2023

Tat-Seng Chua

SIGIR Forum – Dec 2023 [paper]

Large Search Model: Redefining Search Stack in the Era of LLMs

Liang Wang, Nan Yang, Xiaolong Huang, Linjun Yang, Rangan Majumder, Furu Wei

SIGIR Forum – Dec 2023 [paper]

Large Language Models for Generative Information Extraction: A Survey

Derong Xu, Wei Chen, Wenjun Peng, Chao Zhang, Tong Xu, Xiangyu Zhao, Xian Wu, Yefeng Zheng, Enhong Chen

arXiv – Dec 2023 [paper]

Dense Text Retrieval based on Pretrained Language Models: A Survey

Wayne Xin Zhao, Jing Liu, Ruiyang Ren, Ji-Rong Wen

TOIS – Dec 2023 [paper]

Retrieval-Augmented Generation for Large Language Models: A Survey

Yunfan Gao, Yun Xiong, Xinyu Gao, Kangxiang Jia, Jinliu Pan, Yuxi Bi, Yi Dai, Jiawei Sun, Haofen Wang

arXiv – Dec 2023 [paper]

Calibrated Language Models Must Hallucinate

Adam Tauman Kalai, Santosh S. Vempala

arXiv – Nov 2023 [paper]

Siren's Song in the AI Ocean: A Survey on Hallucination in Large Language Models

Yue Zhang, Yafu Li, Leyang Cui, Deng Cai, Lemao Liu, Tingchen Fu, Xinting Huang, Enbo Zhao, Yu Zhang, Yulong Chen, Longyue Wang, Anh Tuan Luu, Wei Bi, Freda Shi, Shuming Shi

arXiv – Sep 2023 [paper]

The False Promise of Imitating Proprietary LLMs

Arnav Gudibande, Eric Wallace, Charlie Snell, Xinyang Geng, Hao Liu, Pieter Abbeel, Sergey Levine, Dawn Song

arXiv – May 2023 [paper]

Generative Recommendation: Towards Next-generation Recommender Paradigm

Wenjie Wang, Xinyu Lin, Fuli Feng, Xiangnan He, Tat-Seng Chua

arXiv – Apr 2023 [paper]

Augmented Language Models: a Survey

Grégoire Mialon, Roberto Dessì, Maria Lomeli, Christoforos Nalmpantis, Ram Pasunuru, Roberta Raileanu, Baptiste Rozière, Timo Schick, Jane Dwivedi-Yu, Asli Celikyilmaz, Edouard Grave, Yann LeCun, Thomas Scialom

arXiv – Feb 2023 [paper]

Generative Language Models and Automated Influence Operations: Emerging Threats and Potential Mitigations

Josh A. Goldstein, Girish Sastry, Micah Musser, Renée DiResta, Matthew Gentzel, Katerina Sedova

arXiv – Jan 2023 [paper]

Conversational Information Seeking. An Introduction to Conversational Search, Recommendation, and Question Answering

Hamed Zamani, Johanne R. Trippas, Jeff Dalton and Filip Radlinski

arXiv – Jan 2023 [paper]

Facts

Kevin Mulligan and Fabrice Correia

The Stanford Encyclopedia of Philosophy – Winter 2021 [url]

Truthful AI: Developing and governing AI that does not lie

Owain Evans, Owen Cotton-Barratt, Lukas Finnveden, Adam Bales, Avital Balwit, Peter Wills, Luca Righetti, William Saunders

arXiv – Oct 2021 [paper]

Rethinking Search: Making Domain Experts out of Dilettantes

Donald Metzler, Yi Tay, Dara Bahri, Marc Najork

SIGIR Forum 2021 – May 2021 [paper]

Attributed Question Answering: Evaluation and Modeling for Attributed Large Language Models

Bernd Bohnet, Vinh Q. Tran, Pat Verga, Roee Aharoni, Daniel Andor, Livio Baldini Soares, Jacob Eisenstein, Kuzman Ganchev, Jonathan Herzig, Kai Hui, Tom Kwiatkowski, Ji Ma, Jianmo Ni, Tal Schuster, William W. Cohen, Michael Collins, Dipanjan Das, Donald Metzler, Slav Petrov, Kellie Webster

arXiv – Dec 2022 [paper]

external grounding/retrieval at inference time

RAPTOR: Recursive Abstractive Processing for Tree-Organized Retrieval

Parth Sarthi, Salman Abdullah, Aditi Tuli, Shubh Khanna, Anna Goldie, Christopher D. Manning

ICLR 24 – Jan 2024 [paper]

Corrective Retrieval Augmented Generation

Shi-Qi Yan, Jia-Chen Gu, Yun Zhu, Zhen-Hua Ling

arXiv – Jan 2024 [paper]

It's About Time: Incorporating Temporality in Retrieval Augmented Language Models

Anoushka Gade, Jorjeta Jetcheva

arXiv – Jan 2024 [paper]

RAG vs Fine-tuning: Pipelines, Tradeoffs, and a Case Study on Agriculture

Angels Balaguer, Vinamra Benara, Renato Luiz de Freitas Cunha, Roberto de M. Estevão Filho, Todd Hendry, Daniel Holstein, Jennifer Marsman, Nick Mecklenburg, Sara Malvar, Leonardo O. Nunes, Rafael Padilha, Morris Sharp, Bruno Silva, Swati Sharma, Vijay Aski, Ranveer Chandra

arXiv – Jan 2024 [paper]

Is ChatGPT Good at Search? Investigating Large Language Models as Re-Ranking Agents

Wenhao Yu, Hongming Zhang, Xiaoman Pan, Kaixin Ma, Hongwei Wang, Dong Yu

arXiv – Nov 2023 [paper]

Self-RAG: Learning to Retrieve, Generate, and Critique through Self-Reflection

Anonymous

ICLR 24 – Oct 2023 [paper]

RA-DIT: Retrieval-Augmented Dual Instruction Tuning

Anonymous

ICLR 24 – Oct 2023 [paper]

In-Context Learning with Retrieval Augmented Encoder-Decoder Language Models

Anonymous

ICLR 24 – Oct 2023 [paper]

Making Retrieval-Augmented Language Models Robust to Irrelevant Context

Anonymous

ICLR 24 – Oct 2023 [paper]

Retrieval meets Long Context Large Language Models

Anonymous

ICLR 24 – Oct 2023 [paper]

Reformulating Domain Adaptation of Large Language Models as Adapt-Retrieve-Revise

Anonymous

ICLR 24 – Oct 2023 [paper]

InstructRetro: Instruction Tuning post Retrieval-Augmented Pretraining

Anonymous

ICLR 24 – Oct 2023 [paper]

SuRe: Improving Open-domain Question Answering of LLMs via Summarized Retrieval

Anonymous

ICLR 24 – Oct 2023 [paper]

RECOMP: Improving Retrieval-Augmented LMs with Context Compression and Selective Augmentation

Anonymous

ICLR 24 – Oct 2023 [paper]

Retrieval is Accurate Generation

Anonymous

ICLR 24 – Oct 2023 [paper]

PaperQA: Retrieval-Augmented Generative Agent for Scientific Research

Anonymous

ICLR 24 – Oct 2023 [paper]

Understanding Retrieval Augmentation for Long-Form Question Answering

Anonymous

ICLR 24 – Oct 2023 [paper]

Personalized Language Generation via Bayesian Metric Augmented Retrieval

Anonymous

ICLR 24 – Oct 2023 [paper]

DSPy: Compiling Declarative Language Model Calls into Self-Improving Pipelines

Omar Khattab, Arnav Singhvi, Paridhi Maheshwari, Zhiyuan Zhang, Keshav Santhanam, Sri Vardhamanan, Saiful Haq, Ashutosh Sharma, Thomas T. Joshi, Hanna Moazam, Heather Miller, Matei Zaharia, Christopher Potts

arXiv – Oct 2023 [paper] [code]

RA-DIT: Retrieval-Augmented Dual Instruction Tuning

Xi Victoria Lin, Xilun Chen, Mingda Chen, Weijia Shi, Maria Lomeli, Rich James, Pedro Rodriguez, Jacob Kahn, Gergely Szilvasy, Mike Lewis, Luke Zettlemoyer, Scott Yih

arXiv – Aug 2023 [paper]

Tool Documentation Enables Zero-Shot Tool-Usage with Large Language Models

Cheng-Yu Hsieh, Si-An Chen, Chun-Liang Li, Yasuhisa Fujii, Alexander Ratner, Chen-Yu Lee, Ranjay Krishna, Tomas Pfister

arXiv – Aug 2023 [paper]

ReAugKD: Retrieval-Augmented Knowledge Distillation For Pre-trained Language Models

Jianyi Zhang, Aashiq Muhamed, Aditya Anantharaman, Guoyin Wang, Changyou Chen, Kai Zhong, Qingjun Cui, Yi Xu, Belinda Zeng, Trishul Chilimbi, Yiran Chen

ACL 23 – Jul 2023 [paper]

Surface-Based Retrieval Reduces Perplexity of Retrieval-Augmented Language Models

Ehsan Doostmohammadi, Tobias Norlund, Marco Kuhlmann, Richard Johansson

ACL 23 – Jul 2023 [paper]

Soft Prompt Tuning for Augmenting Dense Retrieval with Large Language Models

Zhiyuan Peng, Xuyang Wu, Yi Fang

arXiv – Jun 2023 [paper]

RETA-LLM: A Retrieval-Augmented Large Language Model Toolkit

Jiongnan Liu, Jiajie Jin, Zihan Wang, Jiehan Cheng, Zhicheng Dou, Ji-Rong Wen

arXiv – Jun 2023 [paper]

WebGLM: Towards An Efficient Web-Enhanced Question Answering System with Human Preferences

Xiao Liu, Hanyu Lai, Hao Yu, Yifan Xu, Aohan Zeng, Zhengxiao Du, Peng Zhang, Yuxiao Dong, Jie Tang

arXiv – Jun 2023 [paper]

WikiChat: Stopping the Hallucination of Large Language Model Chatbots by Few-Shot Grounding on Wikipedia

Sina J. Semnani, Violet Z. Yao, Heidi C. Zhang, Monica S. Lam

EMNLP Findings 2023 – May 2023 [paper] [code] [demo]

RET-LLM: Towards a General Read-Write Memory for Large Language Models

Ali Modarressi, Ayyoob Imani, Mohsen Fayyaz, Hinrich Schütze

arXiv – May 2023 [paper]

Gorilla: Large Language Model Connected with Massive APIs

Shishir G. Patil, Tianjun Zhang, Xin Wang, Joseph E. Gonzalez

arXiv – May 2023 [paper] [code]

Active Retrieval Augmented Generation

Zhengbao Jiang, Frank F. Xu, Luyu Gao, Zhiqing Sun, Qian Liu, Jane Dwivedi-Yu, Yiming Yang, Jamie Callan, Graham Neubig

arXiv – May 2023 [paper] [code]

Shall We Pretrain Autoregressive Language Models with Retrieval? A Comprehensive Study

Boxin Wang, Wei Ping, Peng Xu, Lawrence McAfee, Zihan Liu, Mohammad Shoeybi, Yi Dong, Oleksii Kuchaiev, Bo Li, Chaowei Xiao, Anima Anandkumar, Bryan Catanzaro

arXiv – Apr 2023 [paper] [code]

Check Your Facts and Try Again: Improving Large Language Models with External Knowledge and Automated Feedback

Baolin Peng, Michel Galley, Pengcheng He, Hao Cheng, Yujia Xie, Yu Hu, Qiuyuan Huang, Lars Liden, Zhou Yu, Weizhu Chen, Jianfeng Gao

arXiv – Feb 2023 [paper] [code]

Toolformer: Language Models Can Teach Themselves to Use Tools

Timo Schick, Jane Dwivedi-Yu, Roberto Dessì, Roberta Raileanu, Maria Lomeli, Luke Zettlemoyer, Nicola Cancedda, Thomas Scialom

arXiv – Feb 2023 [paper]

REPLUG: Retrieval-Augmented Black-Box Language Models

Weijia Shi, Sewon Min, Michihiro Yasunaga, Minjoon Seo, Rich James, Mike Lewis, Luke Zettlemoyer, Wen-tau Yih

arXiv – Jan 2023 [paper]

In-Context Retrieval-Augmented Language Models

Ori Ram, Yoav Levine, Itay Dalmedigos, Dor Muhlgay, Amnon Shashua, Kevin Leyton-Brown, Yoav Shoham

AI21 Labs – Jan 2023 [paper] [code]

Recipes for Building an Open-Domain Chatbot

Stephen Roller, Emily Dinan, Naman Goyal, Da Ju, Mary Williamson, Yinhan Liu, Jing Xu, Myle Ott, Eric Michael Smith, Y-Lan Boureau, Jason Weston

EACL 2021 – Apr 2021 [paper]

AtMan: Understanding Transformer Predictions Through Memory Efficient Attention Manipulation

Björn Deiseroth, Mayukh Deb, Samuel Weinbach, Manuel Brack, Patrick Schramowski, Kristian Kersting

arXiv – Jan 2023 [paper]

RetroMAE v2: Duplex Masked Auto-Encoder For Pre-Training Retrieval-Oriented Language Models

Shitao Xiao, Zheng Liu

arXiv – Nov 2022 [paper]

Demonstrate-Search-Predict: Composing retrieval and language models for knowledge-intensive NLP

Omar Khattab, Keshav Santhanam, Xiang Lisa Li, David Hall, Percy Liang, Christopher Potts, Matei Zaharia

arXiv – Dec 2022 [paper]

Improving language models by retrieving from trillions of tokens

Sebastian Borgeaud, Arthur Mensch, Jordan Hoffmann, Trevor Cai, Eliza Rutherford, Katie Millican, George van den Driessche, Jean-Baptiste Lespiau, Bogdan Damoc, Aidan Clark, Diego de Las Casas, Aurelia Guy, Jacob Menick, Roman Ring, Tom Hennigan, Saffron Huang, Loren Maggiore, Chris Jones, Albin Cassirer, Andy Brock, Michela Paganini, Geoffrey Irving, Oriol Vinyals, Simon Osindero, Karen Simonyan, Jack W. Rae, Erich Elsen, Laurent Sifre

arXiv – Dec 2021 [paper]

WebGPT: Browser-assisted question-answering with human feedback

Reiichiro Nakano, Jacob Hilton, Suchir Balaji, Jeff Wu, Long Ouyang, Christina Kim, Christopher Hesse, Shantanu Jain, Vineet Kosaraju, William Saunders, Xu Jiang, Karl Cobbe, Tyna Eloundou, Gretchen Krueger, Kevin Button, Matthew Knight, Benjamin Chess, John Schulman

arXiv – Dec 2021 [paper]

BERT-kNN: Adding a kNN Search Component to Pretrained Language Models for Better QA

Nora Kassner, Hinrich Schütze

EMNLP 2020 – Nov 2020 [paper]

REALM: Retrieval-Augmented Language Model Pre-Training

Kelvin Guu, Kenton Lee, Zora Tung, Panupong Pasupat, Ming-Wei Chang

ICML 2020 – Jul 2020 [paper]

A Hybrid Retrieval-Generation Neural Conversation Model

Liu Yang, Junjie Hu, Minghui Qiu, Chen Qu, Jianfeng Gao, W. Bruce Croft, Xiaodong Liu, Yelong Shen, Jingjing Liu

arXiv – Apr 2019 [paper]

grounded in internal model weights at inference time

How Do Large Language Models Acquire Factual Knowledge During Pretraining?

Hoyeon Chang, Jinho Park, Seonghyeon Ye, Sohee Yang, Youngkyung Seo, Du-Seong Chang, Minjoon Seo

arXiv – Jun 2024 [paper]

R-Tuning: Teaching Large Language Models to Refuse Unknown Questions

Hanning Zhang, Shizhe Diao, Yong Lin, Yi R. Fung, Qing Lian, Xingyao Wang, Yangyi Chen, Heng Ji, Tong Zhang

arXiv – Nov 2023 [paper]

EasyEdit: An Easy-to-use Knowledge Editing Framework for Large Language Models

Peng Wang, Ningyu Zhang, Xin Xie, Yunzhi Yao, Bozhong Tian, Mengru Wang, Zekun Xi, Siyuan Cheng, Kangwei Liu, Guozhou Zheng, Huajun Chen

arXiv – Aug 2023 [paper]

Inspecting and Editing Knowledge Representations in Language Models

Evan Hernandez, Belinda Z. Li, Jacob Andreas

arXiv – Apr 2023 [paper] [code]

Leveraging Passage Retrieval with Generative Models for Open Domain Question Answering

Gautier Izacard, Edouard Grave

arXiv – Feb 2023 [paper]

Discovering Latent Knowledge in Language Models Without Supervision

Collin Burns, Haotian Ye, Dan Klein, Jacob Steinhardt

ICLR 23 – Feb 2023 [paper] [code]

Galactica: A Large Language Model for Science

Ross Taylor, Marcin Kardas, Guillem Cucurull, Thomas Scialom, Anthony Hartshorn, Elvis Saravia, Andrew Poulton, Viktor Kerkez, Robert Stojnic

Galactica.org – 2022 [paper]

BlenderBot 3: a deployed conversational agent that continually learns to responsibly engage

Kurt Shuster, Jing Xu, Mojtaba Komeili, Da Ju, Eric Michael Smith, Stephen Roller, Megan Ung, Moya Chen, Kushal Arora, Joshua Lane, Morteza Behrooz, William Ngan, Spencer Poff, Naman Goyal, Arthur Szlam, Y-Lan Boureau, Melanie Kambadur, Jason Weston

arXiv – Aug 2022 [paper]

Generate rather than Retrieve: Large Language Models are Strong Context Generators

Wenhao Yu, Dan Iter, Shuohang Wang, Yichong Xu, Mingxuan Ju, Soumya Sanyal, Chenguang Zhu, Michael Zeng, Meng Jiang

ICLR 2023 – Sep 2022 [paper]

Recitation-Augmented Language Models

Zhiqing Sun, Xuezhi Wang, Yi Tay, Yiming Yang, Denny Zhou

ICLR 2023 – Sep 2022 [paper]

Improving alignment of dialogue agents via targeted human judgements

Amelia Glaese, Nat McAleese, Maja Trębacz, John Aslanides, Vlad Firoiu, Timo Ewalds, Maribeth Rauh, Laura Weidinger, Martin Chadwick, Phoebe Thacker, Lucy Campbell-Gillingham, Jonathan Uesato, Po-Sen Huang, Ramona Comanescu, Fan Yang, Abigail See, Sumanth Dathathri, Rory Greig, Charlie Chen, Doug Fritz, Jaume Sanchez Elias, Richard Green, Soňa Mokrá, Nicholas Fernando, Boxi Wu, Rachel Foley, Susannah Young, Iason Gabriel, William Isaac, John Mellor, Demis Hassabis, Koray Kavukcuoglu, Lisa Anne Hendricks, Geoffrey Irving

arXiv – Sep 2022 [paper]

LaMDA: Language Models for Dialog Applications

Romal Thoppilan, Daniel De Freitas, Jamie Hall, Noam Shazeer, Apoorv Kulshreshtha, Heng-Tze Cheng, Alicia Jin, Taylor Bos, Leslie Baker, Yu Du, YaGuang Li, Hongrae Lee, Huaixiu Steven Zheng, Amin Ghafouri, Marcelo Menegali, Yanping Huang, Maxim Krikun, Dmitry Lepikhin, James Qin, Dehao Chen, Yuanzhong Xu, Zhifeng Chen, Adam Roberts, Maarten Bosma, Vincent Zhao, Yanqi Zhou, Chung-Ching Chang, Igor Krivokon, Will Rusch, Marc Pickett, Pranesh Srinivasan, Laichee Man, Kathleen Meier-Hellstern, Meredith Ringel Morris, Tulsee Doshi, Renelito Delos Santos, Toju Duke, Johnny Soraker, Ben Zevenbergen, Vinodkumar Prabhakaran, Mark Diaz, Ben Hutchinson, Kristen Olson, Alejandra Molina, Erin Hoffman-John, Josh Lee, Lora Aroyo, Ravi Rajakumar, Alena Butryna, Matthew Lamm, Viktoriya Kuzmina, Joe Fenton, Aaron Cohen, Rachel Bernstein, Ray Kurzweil, Blaise Aguera-Arcas, Claire Cui, Marian Croak, Ed Chi, Quoc Le

arXiv – Jan 2022 [paper]

Language Models As or For Knowledge Bases

Simon Razniewski, Andrew Yates, Nora Kassner, Gerhard Weikum

DL4KG 2021 – Oct 2021 [paper]

Generalization through Memorization: Nearest Neighbor Language Models

Urvashi Khandelwal, Omer Levy, Dan Jurafsky, Luke Zettlemoyer, Mike Lewis

ICLR 2020 – Sep 2019 [paper] [code]

Linguistic Calibration of Long-Form Generations

Neil Band, Xuechen Li, Tengyu Ma, Tatsunori Hashimoto

arXiv 2024 – Jun 2024 [paper]

To Believe or Not to Believe Your LLM

Yasin Abbasi Yadkori, Ilja Kuzborskij, András György, Csaba Szepesvári

arXiv 2024 – Jun 2024 [paper]

SaySelf: Teaching LLMs to Express Confidence with Self-Reflective Rationales

Tianyang Xu, Shujin Wu, Shizhe Diao, Xiaoze Liu, Xingyao Wang, Yangyi Chen, Jing Gao

arXiv 2024 – May 2024 [paper]

Experts Don’t Cheat: Learning What You Don’t Know By Predicting Pairs

Daniel D. Johnson, Daniel Tarlow, David Duvenaud, Chris J. Maddison

arXiv 2024 – Feb 2024 [paper]

Unlocking Anticipatory Text Generation: A Constrained Approach for Faithful Decoding with Large Language Models

Anonymous

ICLR 24 – Oct 2023 [paper]

DoLa: Decoding by Contrasting Layers Improves Factuality in Large Language Models

Yung-Sung Chuang, Yujia Xie, Hongyin Luo, Yoon Kim, James Glass, Pengcheng He

ICLR 24 – Sep 2023 [paper]

A Data-Centric Approach To Generate Faithful and High Quality Patient Summaries with Large Language Models

Stefan Hegselmann, Shannon Zejiang Shen, Florian Gierse, Monica Agrawal, David Sontag, Xiaoyi Jiang

arXiv 24 – Feb 2024 [paper]

Stochastic RAG: End-to-End Retrieval-Augmented Generation through Expected Utility Maximization

Hamed Zamani, Michael Bendersky

arXiv 24 – May 2024 [paper]

Constitutional AI: Harmlessness from AI Feedback

Yuntao Bai, Saurav Kadavath, Sandipan Kundu, Amanda Askell, Jackson Kernion, Andy Jones, Anna Chen, Anna Goldie, Azalia Mirhoseini, Cameron McKinnon, Carol Chen, Catherine Olsson, Christopher Olah, Danny Hernandez, Dawn Drain, Deep Ganguli, Dustin Li, Eli Tran-Johnson, Ethan Perez, Jamie Kerr, Jared Mueller, Jeffrey Ladish, Joshua Landau, Kamal Ndousse, Kamile Lukosiute, Liane Lovitt, Michael Sellitto, Nelson Elhage, Nicholas Schiefer, Noemi Mercado, Nova DasSarma, Robert Lasenby, Robin Larson, Sam Ringer, Scott Johnston, Shauna Kravec, Sheer El Showk, Stanislav Fort, Tamera Lanham, Timothy Telleen-Lawton, Tom Conerly, Tom Henighan, Tristan Hume, Samuel R. Bowman, Zac Hatfield-Dodds, Ben Mann, Dario Amodei, Nicholas Joseph, Sam McCandlish, Tom Brown, Jared Kaplan

Anthropic.com – Dec 2022 [paper]

Learning New Skills after Deployment: Improving open-domain internet-driven dialogue with human feedback

Jing Xu, Megan Ung, Mojtaba Komeili, Kushal Arora, Y-Lan Boureau, Jason Weston

arXiv – Aug 2022 [paper]

Retrieval-Augmented Multimodal Language Modeling

Michihiro Yasunaga, Armen Aghajanyan, Weijia Shi, Rich James, Jure Leskovec, Percy Liang, Mike Lewis, Luke Zettlemoyer, Wen-tau Yih

arXiv – Nov 2022 [paper]

RAMM: Retrieval-augmented Biomedical Visual Question Answering with Multi-modal Pre-training

Zheng Yuan, Qiao Jin, Chuanqi Tan, Zhengyun Zhao, Hongyi Yuan, Fei Huang, Songfang Huang

arXiv – Mar 2023 [paper]

Interleaving Retrieval with Chain-of-Thought Reasoning for Knowledge-Intensive Multi-Step Questions

Harsh Trivedi, Niranjan Balasubramanian, Tushar Khot, Ashish Sabharwal

ACL 23 – Jul 2023 [paper]

ReAct: Synergizing Reasoning and Acting in Language Models

Shunyu Yao, Jeffrey Zhao, Dian Yu, Nan Du, Izhak Shafran, Karthik Narasimhan, Yuan Cao

arXiv – Oct 2022 [paper]

RepoCoder: Repository-Level Code Completion Through Iterative Retrieval and Generation

Fengji Zhang, Bei Chen, Yue Zhang, Jin Liu, Daoguang Zan, Yi Mao, Jian-Guang Lou, Weizhu Chen

arXiv – Mar 2023 [paper]

DocPrompting: Generating Code by Retrieving the Docs

Shuyan Zhou, Uri Alon, Frank F. Xu, Zhiruo Wang, Zhengbao Jiang, Graham Neubig

ICLR 23 – Jul 2022 [paper] [code] [data]

Generate, Filter, and Fuse: Query Expansion via Multi-Step Keyword Generation for Zero-Shot Neural Rankers

Minghan Li, Honglei Zhuang, Kai Hui, Zhen Qin, Jimmy Lin, Rolf Jagerman, Xuanhui Wang, Michael Bendersky

arXiv – Nov 2023 [paper]

Agent4Ranking: Semantic Robust Ranking via Personalized Query Rewriting Using Multi-agent LLM

Xiaopeng Li, Lixin Su, Pengyue Jia, Xiangyu Zhao, Suqi Cheng, Junfeng Wang, Dawei Yin

arXiv – Dec 2023 [paper]

Unified Generative & Dense Retrieval for Query Rewriting in Sponsored Search

Akash Kumar Mohankumar, Bhargav Dodla, Gururaj K, Amit Singh

arXiv – Sep 2022 [paper]

Generating Factually Consistent Sport Highlights Narrations

Noah Sarfati, Ido Yerushalmy, Michael Chertok, Yosi Keller

MMSports 2023 – Oct 2023 [paper]

Genetic Generative Information Retrieval

Hrishikesh Kulkarni, Zachary Young, Nazli Goharian, Ophir Frieder, Sean MacAvaney

DocEng 23 – Aug 2023 [paper]

Learning to summarize with human feedback

Nisan Stiennon, Long Ouyang, Jeff Wu, Daniel M. Ziegler, Ryan Lowe, Chelsea Voss, Alec Radford, Dario Amodei, Paul Christiano

NeurIPS 2020 – Sep 2020 [paper]

On Faithfulness and Factuality in Abstractive Summarization

Joshua Maynez, Shashi Narayan, Bernd Bohnet, Ryan McDonald

ACL 2020 – May 2020 [paper]

Augment before You Try: Knowledge-Enhanced Table Question Answering via Table Expansion

Yujian Liu, Jiabao Ji, Tong Yu, Ryan Rossi, Sungchul Kim, Handong Zhao, Ritwik Sinha, Yang Zhang, Shiyu Chang

arXiv – Jan 2024 [paper]

We jump-started this section by reusing the content of awesome-generative-retrieval-models and give full credit to Chriskuei for that! We have since added more content on top.

De-DSI: Decentralised Differentiable Search Index

Petru Neague, Marcel Gregoriadis, Johan Pouwelse

EuroMLSys 24 – Apr 2024 [paper]

Listwise Generative Retrieval Models via a Sequential Learning Process

Yubao Tang, Ruqing Zhang, Jiafeng Guo, Maarten de Rijke, Wei Chen, Xueqi Cheng

TOIS 2024 – Mar 2024 [paper]

Distillation Enhanced Generative Retrieval

Yongqi Li, Zhen Zhang, Wenjie Wang, Liqiang Nie, Wenjie Li, Tat-Seng Chua

arXiv 2024 – Feb 2024 [paper]

Self-Retrieval: Building an Information Retrieval System with One Large Language Model

Qiaoyu Tang, Jiawei Chen, Bowen Yu, Yaojie Lu, Cheng Fu, Haiyang Yu, Hongyu Lin, Fei Huang, Ben He, Xianpei Han, Le Sun, Yongbin Li

arXiv 2024 – Feb 2024 [paper]

Generative Dense Retrieval: Memory Can Be a Burden

Peiwen Yuan, Xinglin Wang, Shaoxiong Feng, Boyuan Pan, Yiwei Li, Heda Wang, Xupeng Miao, Kan Li

EACL 2024 – Jan 2024 [paper] [code]

Auto Search Indexer for End-to-End Document Retrieval

Tianchi Yang, Minghui Song, Zihan Zhang, Haizhen Huang, Weiwei Deng, Feng Sun, Qi Zhang

EMNLP 2023 – Dec 2023 [paper]

DiffusionRet: Diffusion-Enhanced Generative Retriever using Constrained Decoding

Shanbao Qiao, Xuebing Liu, Seung-Hoon Na

EMNLP Findings 2023 – Dec 2023 [paper]

Scalable and Effective Generative Information Retrieval

Hansi Zeng, Chen Luo, Bowen Jin, Sheikh Muhammad Sarwar, Tianxin Wei, Hamed Zamani

WWW 2024 – Nov 2023 [paper] [code]

Nonparametric Decoding for Generative Retrieval

Hyunji Lee, JaeYoung Kim, Hoyeon Chang, Hanseok Oh, Sohee Yang, Vladimir Karpukhin, Yi Lu, Minjoon Seo

ACL Findings 2023 – Jul 2023 [paper]

Model-enhanced Vector Index

Hailin Zhang, Yujing Wang, Qi Chen, Ruiheng Chang, Ting Zhang, Ziming Miao, Yingyan Hou, Yang Ding, Xupeng Miao, Haonan Wang, Bochen Pang, Yuefeng Zhan, Hao Sun, Weiwei Deng, Qi Zhang, Fan Yang, Xing Xie, Mao Yang, Bin Cui

NeurIPS 2023 – May 2023 [paper] [code]

Continual Learning for Generative Retrieval over Dynamic Corpora

Jiangui Chen, Ruqing Zhang, Jiafeng Guo, Maarten de Rijke, Wei Chen, Yixing Fan, Xueqi Cheng

CIKM 2023 – Aug 2023 [paper]

Learning to Rank in Generative Retrieval

Yongqi Li, Nan Yang, Liang Wang, Furu Wei, Wenjie Li

arXiv – Jun 2023 [paper]

Large Language Models are Built-in Autoregressive Search Engines

Noah Ziems, Wenhao Yu, Zhihan Zhang, Meng Jiang

ACL Findings 2023 – May 2023 [paper]

Multiview Identifiers Enhanced Generative Retrieval

Yongqi Li, Nan Yang, Liang Wang, Furu Wei, Wenjie Li

ACL 2023 – May 2023 [paper]

How Does Generative Retrieval Scale to Millions of Passages?

Ronak Pradeep, Kai Hui, Jai Gupta, Adam D. Lelkes, Honglei Zhuang, Jimmy Lin, Donald Metzler, Vinh Q. Tran

arXiv – May 2023 [paper]

TOME: A Two-stage Approach for Model-based Retrieval

Ruiyang Ren, Wayne Xin Zhao, Jing Liu, Hua Wu, Ji-Rong Wen, Haifeng Wang

ACL 2023 – May 2023 [paper]

Understanding Differential Search Index for Text Retrieval

Xiaoyang Chen, Yanjiang Liu, Ben He, Le Sun, Yingfei Sun

ACL Findings 2023 – May 2023 [paper]

Learning to Tokenize for Generative Retrieval

Weiwei Sun, Lingyong Yan, Zheng Chen, Shuaiqiang Wang, Haichao Zhu, Pengjie Ren, Zhumin Chen, Dawei Yin, Maarten de Rijke, Zhaochun Ren

arXiv – Apr 2023 [paper]

DynamicRetriever: A Pre-trained Model-based IR System Without an Explicit Index

Yu-Jia Zhou, Jing Yao, Zhi-Cheng Dou, Ledell Wu, Ji-Rong Wen

Machine Intelligence Research – Jan 2023 [paper]

DSI++: Updating Transformer Memory with New Documents

Sanket Vaibhav Mehta, Jai Gupta, Yi Tay, Mostafa Dehghani, Vinh Q. Tran, Jinfeng Rao, Marc Najork, Emma Strubell, Donald Metzler

arXiv – Dec 2022 [paper]

CodeDSI: Differentiable Code Search

Usama Nadeem, Noah Ziems, Shaoen Wu

arXiv – Oct 2022 [paper]

Contextualized Generative Retrieval

Hyunji Lee, Jaeyoung Kim, Hoyeon Chang, Hanseok Oh, Sohee Yang, Vlad Karpukhin, Yi Lu, Minjoon Seo

arXiv – Oct 2022 [paper]

Transformer Memory as a Differentiable Search Index

Yi Tay, Vinh Q. Tran, Mostafa Dehghani, Jianmo Ni, Dara Bahri, Harsh Mehta, Zhen Qin, Kai Hui, Zhe Zhao, Jai Gupta, Tal Schuster, William W. Cohen, Donald Metzler

NeurIPS 2022 – Oct 2022 [paper] [Video] [third-party code]

A Neural Corpus Indexer for Document Retrieval

Wang et al.

arXiv 2022 [paper]

Bridging the Gap Between Indexing and Retrieval for Differentiable Search Index with Query Generation

Shengyao Zhuang, Houxing Ren, Linjun Shou, Jian Pei, Ming Gong, Guido Zuccon, and Daxin Jiang

arXiv 2022 [paper] [Code]

DynamicRetriever: A Pre-training Model-based IR System with Neither Sparse nor Dense Index

Zhou et al.

arXiv 2022 [paper]

Ultron: An Ultimate Retriever on Corpus with a Model-based Indexer

Zhou et al.

arXiv 2022 [paper]

Planning Ahead in Generative Retrieval: Guiding Autoregressive Generation through Simultaneous Decoding

Hansi Zeng, Chen Luo, Hamed Zamani

arXiv – Apr 2024 [paper] [Code]

NOVO: Learnable and Interpretable Document Identifiers for Model-Based IR

Zihan Wang, Yujia Zhou, Yiteng Tu, Zhicheng Dou

CIKM 2023 – Oct 2023 [paper]

Generative Retrieval as Multi-Vector Dense Retrieval

Shiguang Wu, Wenda Wei, Mengqi Zhang, Zhumin Chen, Jun Ma, Zhaochun Ren, Maarten de Rijke, Pengjie Ren

SIGIR 2024 – Mar 2024 [paper] [Code]

Re3val: Reinforced and Reranked Generative Retrieval

EuiYul Song, Sangryul Kim, Haeju Lee, Joonkee Kim, James Thorne

EACL Findings 2024 – Jan 2024 [paper]

GLEN: Generative Retrieval via Lexical Index Learning

Sunkyung Lee, Minjin Choi, Jongwuk Lee

EMNLP 2023 – Dec 2023 [paper] [Code]

Enhancing Generative Retrieval with Reinforcement Learning from Relevance Feedback

Yujia Zhou, Zhicheng Dou, Ji-Rong Wen

EMNLP 2023 – Dec 2023 [paper]

Generative Retrieval with Large Language Models

Anonymous

ICLR 2024 – Oct 2023 [paper]

Semantic-Enhanced Differentiable Search Index Inspired by Learning Strategies

Yubao Tang, Ruqing Zhang, Jiafeng Guo, Jiangui Chen, Zuowei Zhu, Shuaiqiang Wang, Dawei Yin, Xueqi Cheng

KDD 2023 – May 2023 [paper]

Term-Sets Can Be Strong Document Identifiers For Auto-Regressive Search Engines

Peitian Zhang, Zheng Liu, Yujia Zhou, Zhicheng Dou, Zhao Cao

arXiv – May 2023 [paper] [Code]

A Unified Generative Retriever for Knowledge-Intensive Language Tasks via Prompt Learning

Jiangui Chen, Ruqing Zhang, Jiafeng Guo, Maarten de Rijke, Yiqun Liu, Yixing Fan, Xueqi Cheng

SIGIR 2023 – Apr 2023 [paper] [Code]

CorpusBrain: Pre-train a Generative Retrieval Model for Knowledge-Intensive Language Tasks

Jiangui Chen, Ruqing Zhang, Jiafeng Guo, Yiqun Liu, Yixing Fan, Xueqi Cheng

CIKM 2022 – Aug 2022 [paper] [Code]

Autoregressive Search Engines: Generating Substrings as Document Identifiers

Michele Bevilacqua, Giuseppe Ottaviano, Patrick Lewis, Wen-tau Yih, Sebastian Riedel, Fabio Petroni

arXiv – Apr 2022 [paper] [Code]

Autoregressive Entity Retrieval

Nicola De Cao, Gautier Izacard, Sebastian Riedel, Fabio Petroni

ICLR 2021 – Oct 2020 [paper] [Code]

Data-Efficient Autoregressive Document Retrieval for Fact Verification

James Thorne

SustaiNLP@EMNLP 2022 – Nov 2022 [paper]

GERE: Generative Evidence Retrieval for Fact Verification

Jiangui Chen, Ruqing Zhang, Jiafeng Guo, Yixing Fan, Xueqi Cheng

SIGIR 2022 [paper] [Code]

Generative Multi-hop Retrieval

Hyunji Lee, Sohee Yang, Hanseok Oh, Minjoon Seo

arXiv – Apr 2022 [paper]

Generative Recommendation

Improving LLMs for Recommendation with Out-Of-Vocabulary Tokens

Ting-Ji Huang, Jia-Qi Yang, Chunxu Shen, Kai-Qi Liu, De-Chuan Zhan, Han-Jia Ye

arXiv – Jun 2024 [paper]

Plug-in Diffusion Model for Sequential Recommendation

Haokai Ma, Ruobing Xie, Lei Meng, Xin Chen, Xu Zhang, Leyu Lin, Zhanhui Kang

arXiv – Jan 2024 [paper]

Towards Graph-Aware Diffusion Modeling For Collaborative Filtering

Yunqin Zhu, Chao Wang, Hui Xiong

arXiv – Nov 2023 [paper]

RecMind: Large Language Model Powered Agent For Recommendation

Yancheng Wang, Ziyan Jiang, Zheng Chen, Fan Yang, Yingxue Zhou, Eunah Cho, Xing Fan, Xiaojiang Huang, Yanbin Lu, Yingzhen Yang

arXiv – Aug 2023 [paper]

Is ChatGPT Fair for Recommendation? Evaluating Fairness in Large Language Model Recommendation

Jizhi Zhang, Keqin Bao, Yang Zhang, Wenjie Wang, Fuli Feng, Xiangnan He

RecSys 2023 – Jul 2023 [paper]

RecFusion: A Binomial Diffusion Process for 1D Data for Recommendation

Gabriel Bénédict, Olivier Jeunen, Samuele Papa, Samarth Bhargav, Daan Odijk, Maarten de Rijke

arXiv – Jun 2023 [paper]

A First Look at LLM-Powered Generative News Recommendation

Qijiong Liu, Nuo Chen, Tetsuya Sakai, Xiao-Ming Wu

arXiv – Jun 2023 [paper]

Large Language Models as Zero-Shot Conversational Recommenders

Yupeng Hou, Junjie Zhang, Zihan Lin, Hongyu Lu, Ruobing Xie, Julian McAuley, Wayne Xin Zhao

arXiv – May 2023 [paper]

DiffuRec: A Diffusion Model for Sequential Recommendation

Zihao Li, Aixin Sun, Chenliang Li

arXiv – Apr 2023 [paper]

Diffusion Recommender Model

Wenjie Wang, Yiyan Xu, Fuli Feng, Xinyu Lin, Xiangnan He, Tat-Seng Chua

SIGIR 2023 – Apr 2023 [paper]

Blurring-Sharpening Process Models for Collaborative Filtering

Jeongwhan Choi, Seoyoung Hong, Noseong Park, Sung-Bae Cho

SIGIR 2023 – Apr 2023 [paper] [code]

Recommender Systems with Generative Retrieval

Shashank Rajput, Nikhil Mehta, Anima Singh, Raghunandan Keshavan, Trung Vu, Lukasz Heldt, Lichan Hong, Yi Tay, Vinh Q. Tran, Jonah Samost, Maciej Kula, Ed H. Chi, Maheswaran Sathiamoorthy

non-archival – Mar 2023 [paper]

Pre-train, Prompt and Recommendation: A Comprehensive Survey of Language Modelling Paradigm Adaptations in Recommender Systems

Peng Liu, Lemei Zhang, Jon Atle Gulla

arXiv – Feb 2023 [paper]

Generative Slate Recommendation with Reinforcement Learning

Romain Deffayet, Thibaut Thonet, Jean-Michel Renders, and Maarten de Rijke

WSDM 2023 – Feb 2023 [paper]

Recommendation via Collaborative Diffusion Generative Model

Joojo Walker, Ting Zhong, Fengli Zhang, Qiang Gao, Fan Zhou

KSEM 2022 – Aug 2022 [paper]

Generative Knowledge Graphs

DocGraphLM: Documental Graph Language Model for Information Extraction

Dongsheng Wang, Zhiqiang Ma, Armineh Nourbakhsh, Kang Gu, Sameena Shah

arXiv – Jan 2024 [paper]

KBFormer: A Diffusion Model for Structured Entity Completion

Ouail Kitouni, Niklas Nolte, James Hensman, Bhaskar Mitra

arXiv – Dec 2023 [paper]

From Retrieval to Generation: Efficient and Effective Entity Set Expansion

Shulin Huang, Shirong Ma, Yangning Li, Yinghui Li, Hai-Tao Zheng, Yong Jiang

arXiv – Apr 2023 [paper]

Crawling the Internal Knowledge-Base of Language Models

Roi Cohen, Mor Geva, Jonathan Berant, Amir Globerson

arXiv – Jan 2023 [paper]

Prompt Tuning or Fine-Tuning - Investigating Relational Knowledge in Pre-Trained Language Models

Leandra Fichtel, Jan-Christoph Kalo, Wolf-Tilo Balke

AKBC 2021 [paper]

Language Models as Knowledge Bases?

Fabio Petroni, Tim Rocktäschel, Patrick Lewis, Anton Bakhtin, Yuxiang Wu, Alexander H. Miller, Sebastian Riedel

EMNLP 2019 – Sep 2019 [paper]

Live Generative Retrieval

Although some of these tools are not accompanied by a paper, they might be useful to other Generative IR researchers for empirical studies or interface design considerations.

⚡️ Gemini Dec 2023 [live]

⚡️ factiverse Jun 2023 [live]

⚡️ devmarizer Mar 2023 [live]

⚡️ TaxGenius Mar 2023 [live]

⚡️ doc-gpt Mar 2023 [live]

⚡️ book-gpt Feb 2023 [live]

⚡️ Neeva Feb 2023 [live]

⚡️ Golden Retriever Feb 2023 [live]

⚡️ Bing – Prometheus Feb 2023 [waitlist]

⚡️ Google – Bard Feb 2023 [only in certain countries]

⚡️ Paper QA Feb 2023 [code] [demo]

⚡️ DocsGPT Feb 2023 [live] [code]

⚡️ DocAsker Jan 2023 [live]

⚡️ Lexii.ai Jan 2023 [live]

⚡️ YOU.com Dec 2022 [live]

⚡️ arXivGPT Dec 2022 [Chrome extension]

⚡️ GPT Index Nov 2022 [API]

⚡️ BlenderBot Aug 2022 [live (USA)] [model weights] [code] [paper1] [paper2]

⚡️ PHIND date? [live]

⚡️ Perplexity date? [live]

⚡️ Galactica date? [demo] [API] [paper]

⚡️ Elicit date? [live]

⚡️ ZetaAlpha date? [live] uses OpenAI API

To get just the paper titles, run `grep '\*\*' README.md | sed 's/\*\*//g'`.
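The command above relies on each title being wrapped in `**` bold markers on its own line. A minimal sketch of the same pipeline as a reusable shell function follows; the function name `paper_titles` and the sample file contents are assumptions made for illustration and are not part of this repository.

```shell
# Hypothetical helper: print the bolded lines of a markdown file, one per line.
paper_titles() {
  # Keep only lines that contain a literal "**", then strip every "**" marker.
  grep '\*\*' "$1" | sed 's/\*\*//g'
}

# Usage sketch on a small throwaway file (sample content chosen for illustration).
sample="$(mktemp)"
printf '%s\n' \
  '**Autoregressive Entity Retrieval**' \
  'Nicola De Cao, Gautier Izacard, Sebastian Riedel, Fabio Petroni' \
  '**Generative Multi-hop Retrieval**' > "$sample"
paper_titles "$sample"
rm -f "$sample"
```

Note that any other bolded line in the file would also be printed, so the output is only as clean as the README's use of bold for titles.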

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for awesome-generative-information-retrieval

Similar Open Source Tools

awesome-generative-information-retrieval

This repository contains a curated list of resources on generative information retrieval, including research papers, datasets, tools, and applications. Generative information retrieval is a subfield of information retrieval that uses generative models to generate new documents or passages of text that are relevant to a given query. This can be useful for a variety of tasks, such as question answering, summarization, and document generation. The resources in this repository are intended to help researchers and practitioners stay up-to-date on the latest advances in generative information retrieval.

Awesome-LLM-RAG

This repository, Awesome-LLM-RAG, aims to record advanced papers on Retrieval Augmented Generation (RAG) in Large Language Models (LLMs). It serves as a resource hub for researchers interested in promoting their work related to LLM RAG by updating paper information through pull requests. The repository covers various topics such as workshops, tutorials, papers, surveys, benchmarks, retrieval-enhanced LLMs, RAG instruction tuning, RAG in-context learning, RAG embeddings, RAG simulators, RAG search, RAG long-text and memory, RAG evaluation, RAG optimization, and RAG applications.

Awesome-LLM-Preference-Learning

The repository 'Awesome-LLM-Preference-Learning' is the official repository of a survey paper titled 'Towards a Unified View of Preference Learning for Large Language Models: A Survey'. It contains a curated list of papers related to preference learning for Large Language Models (LLMs). The repository covers various aspects of preference learning, including on-policy and off-policy methods, feedback mechanisms, reward models, algorithms, evaluation techniques, and more. The papers included in the repository explore different approaches to aligning LLMs with human preferences, improving mathematical reasoning in LLMs, enhancing code generation, and optimizing language model performance.

awesome-open-ended

A curated list of open-ended learning AI resources focusing on algorithms that invent new and complex tasks endlessly, inspired by human advancements. The repository includes papers, safety considerations, surveys, perspectives, and blog posts related to open-ended AI research.

LLM4DB

LLM4DB is a repository focused on the intersection of Large Language Models (LLM) and Database technologies. It covers various aspects such as data processing, data analysis, database optimization, and data management for LLM. The repository includes works on data cleaning, entity matching, schema matching, data discovery, NL2SQL, data exploration, data visualization, configuration tuning, query optimization, and anomaly diagnosis using LLMs. It aims to provide insights and advancements in leveraging LLMs for improving data processing, analysis, and database management tasks.

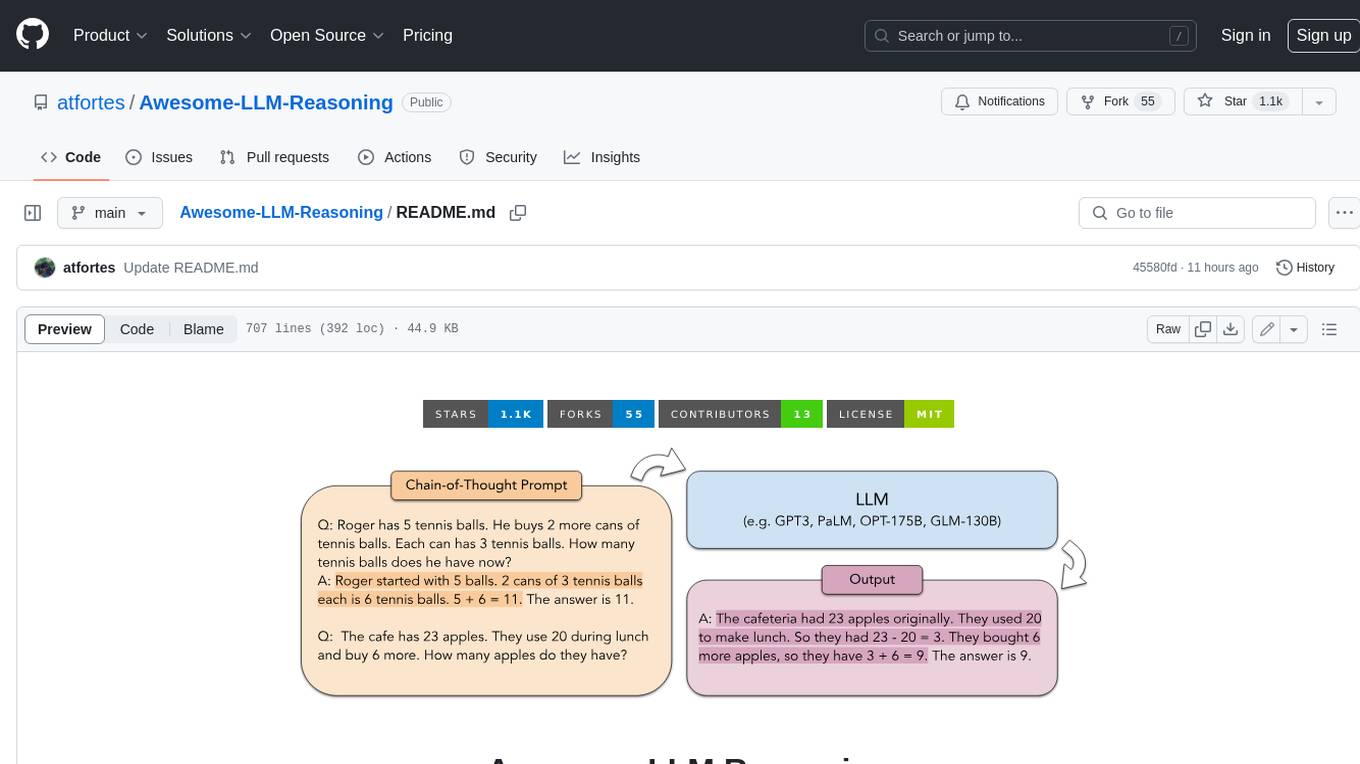

Awesome-LLM-Reasoning

**Curated collection of papers and resources on how to unlock the reasoning ability of LLMs and MLLMs.** **Description in less than 400 words, no line breaks and quotation marks.** Large Language Models (LLMs) have revolutionized the NLP landscape, showing improved performance and sample efficiency over smaller models. However, increasing model size alone has not proved sufficient for high performance on challenging reasoning tasks, such as solving arithmetic or commonsense problems. This curated collection of papers and resources presents the latest advancements in unlocking the reasoning abilities of LLMs and Multimodal LLMs (MLLMs). It covers various techniques, benchmarks, and applications, providing a comprehensive overview of the field. **5 jobs suitable for this tool, in lowercase letters.** - content writer - researcher - data analyst - software engineer - product manager **Keywords of the tool, in lowercase letters.** - llm - reasoning - multimodal - chain-of-thought - prompt engineering **5 specific tasks user can use this tool to do, in less than 3 words, Verb + noun form, in daily spoken language.** - write a story - answer a question - translate a language - generate code - summarize a document

LLMAgentPapers

LLM Agents Papers is a repository containing must-read papers on Large Language Model Agents. It covers a wide range of topics related to language model agents, including interactive natural language processing, large language model-based autonomous agents, personality traits in large language models, memory enhancements, planning capabilities, tool use, multi-agent communication, and more. The repository also provides resources such as benchmarks, types of tools, and a tool list for building and evaluating language model agents. Contributors are encouraged to add important works to the repository.

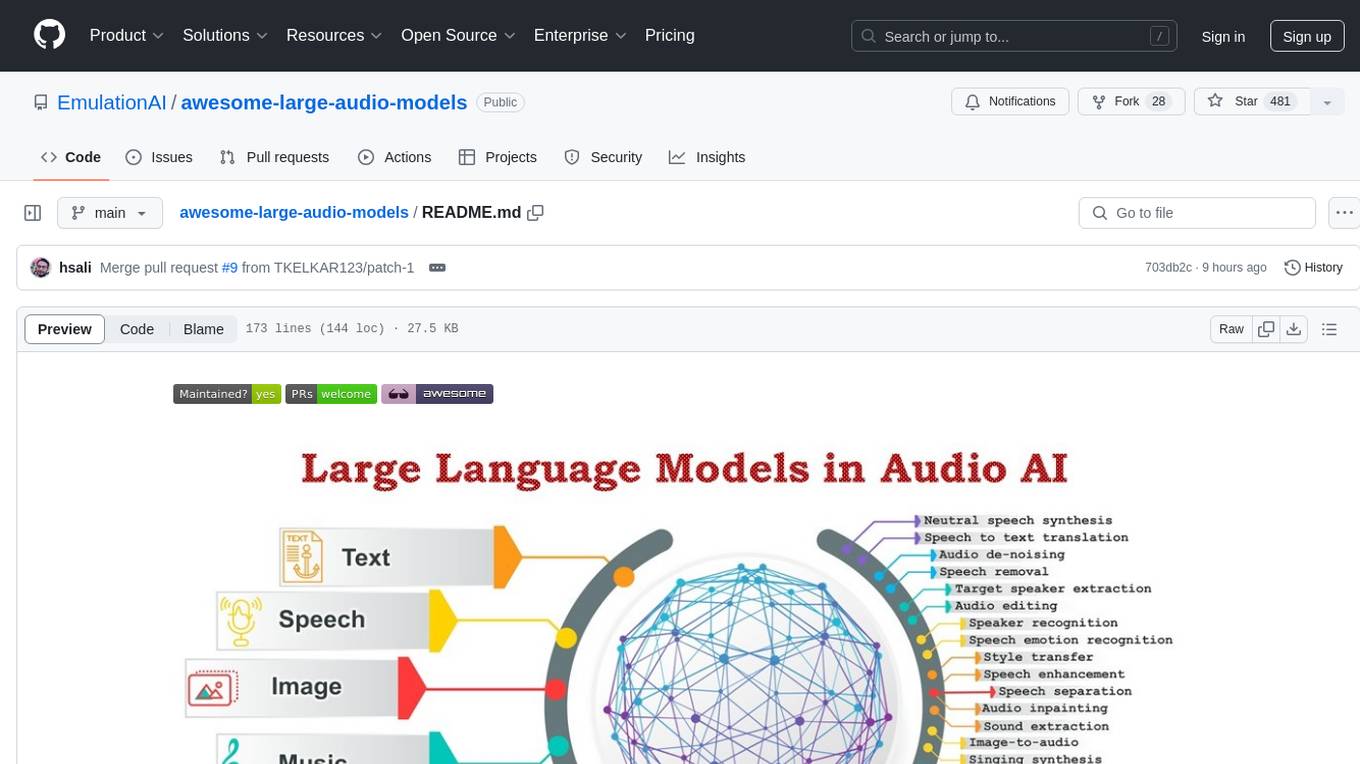

awesome-large-audio-models

This repository is a curated list of awesome large AI models in audio signal processing, focusing on the application of large language models to audio tasks. It includes survey papers, popular large audio models, automatic speech recognition, neural speech synthesis, speech translation, other speech applications, large audio models in music, and audio datasets. The repository aims to provide a comprehensive overview of recent advancements and challenges in applying large language models to audio signal processing, showcasing the efficacy of transformer-based architectures in various audio tasks.

LLM4DB

LLM4DB is a repository focused on the intersection of Large Language Models (LLMs) and Database technologies. It covers various aspects such as data processing, data analysis, database optimization, and data management for LLMs. The repository includes research papers, tools, and techniques related to leveraging LLMs for tasks like data cleaning, entity matching, schema matching, data discovery, NL2SQL, data exploration, data visualization, knob tuning, query optimization, and database diagnosis.

Awesome-LLM-Reasoning-Openai-o1-Survey

The repository 'Awesome LLM Reasoning Openai-o1 Survey' provides a collection of survey papers and related works on OpenAI o1, focusing on topics such as LLM reasoning, self-play reinforcement learning, complex logic reasoning, and scaling law. It includes papers from various institutions and researchers, showcasing advancements in reasoning bootstrapping, reasoning scaling law, self-play learning, step-wise and process-based optimization, and applications beyond math. The repository serves as a valuable resource for researchers interested in exploring the intersection of language models and reasoning techniques.

ai4math-papers

The 'ai4math-papers' repository contains a collection of research papers related to AI applications in mathematics, including automated theorem proving, synthetic theorem generation, autoformalization, proof refactoring, premise selection, benchmarks, human-in-the-loop interactions, and constructing examples/counterexamples. The papers cover various topics such as neural theorem proving, reinforcement learning for theorem proving, generative language modeling, formal mathematics statement curriculum learning, and more. The repository serves as a valuable resource for researchers and practitioners interested in the intersection of AI and mathematics.

Prompt4ReasoningPapers

Prompt4ReasoningPapers is a repository dedicated to reasoning with language model prompting. It provides a comprehensive survey of cutting-edge research on reasoning abilities with language models. The repository includes papers, methods, analysis, resources, and tools related to reasoning tasks. It aims to support various real-world applications such as medical diagnosis, negotiation, etc.

awesome-llm-role-playing-with-persona

Awesome-llm-role-playing-with-persona is a curated list of resources for large language models for role-playing with assigned personas. It includes papers and resources related to persona-based dialogue systems, personalized response generation, psychology of LLMs, biases in LLMs, and more. The repository aims to provide a comprehensive collection of research papers and tools for exploring role-playing abilities of large language models in various contexts.

SLMs-Survey

SLMs-Survey is a comprehensive repository that includes papers and surveys on small language models. It covers topics such as technology, on-device applications, efficiency, enhancements for LLMs, and trustworthiness. The repository provides a detailed overview of existing SLMs, their architecture, enhancements, and specific applications in various domains. It also includes information on SLM deployment optimization techniques and the synergy between SLMs and LLMs.

For similar tasks

awesome-generative-information-retrieval

This repository contains a curated list of resources on generative information retrieval, including research papers, datasets, tools, and applications. Generative information retrieval is a subfield of information retrieval that uses generative models to generate new documents or passages of text that are relevant to a given query. This can be useful for a variety of tasks, such as question answering, summarization, and document generation. The resources in this repository are intended to help researchers and practitioners stay up-to-date on the latest advances in generative information retrieval.

developer-kit

A modular plugin system for automating development tasks in Claude Code. It provides reusable skills, agents, and commands across multiple languages and frameworks. Users can install only the necessary plugins from a marketplace. The tool is specialized for code review, testing, AI development, and full-stack development, with composable and portable features.

LLM-FineTuning-Large-Language-Models

This repository contains projects and notes on common practical techniques for fine-tuning Large Language Models (LLMs). It includes fine-tuning LLM notebooks, Colab links, LLM techniques and utils, and other smaller language models. The repository also provides links to YouTube videos explaining the concepts and techniques discussed in the notebooks.

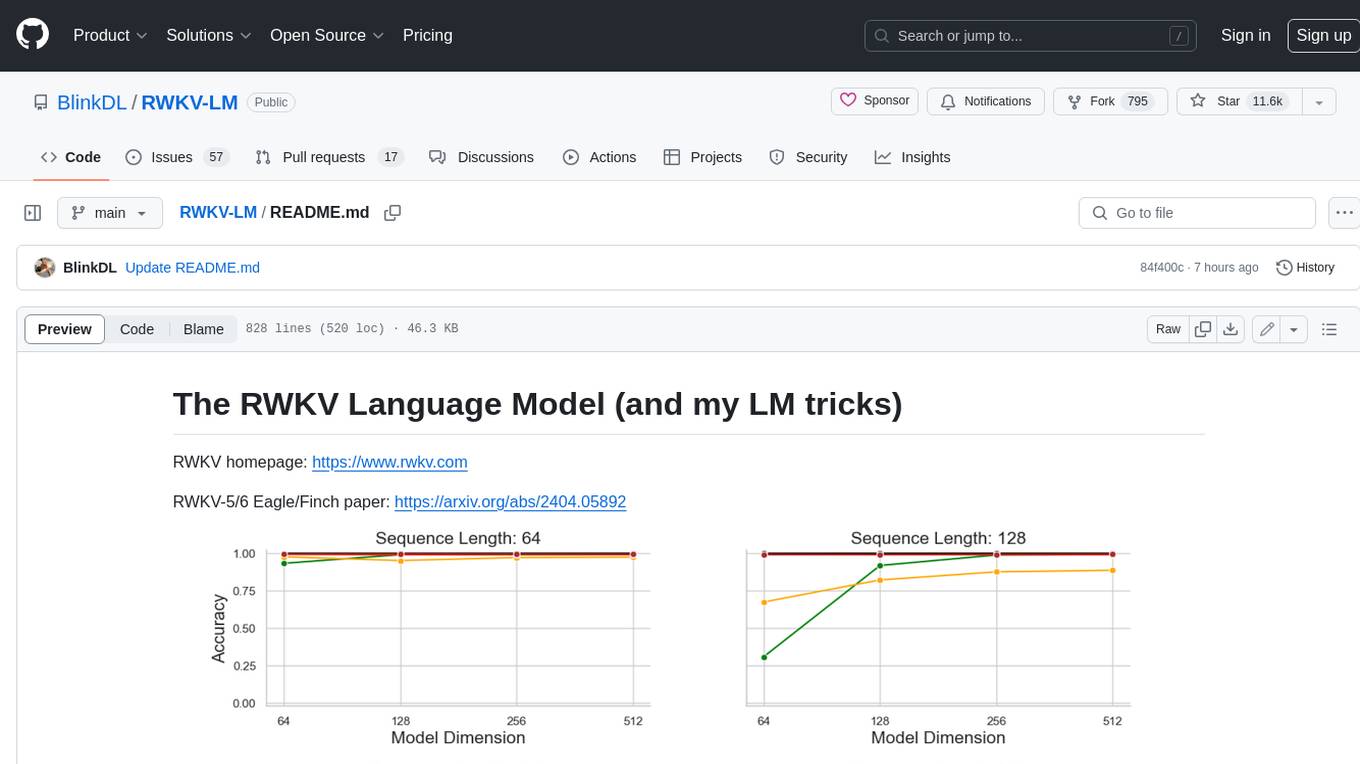

RWKV-LM

RWKV is an RNN with Transformer-level LLM performance, which can also be directly trained like a GPT transformer (parallelizable). And it's 100% attention-free. You only need the hidden state at position t to compute the state at position t+1. You can use the "GPT" mode to quickly compute the hidden state for the "RNN" mode. So it's combining the best of RNN and transformer - **great performance, fast inference, saves VRAM, fast training, "infinite" ctx_len, and free sentence embedding** (using the final hidden state).

awesome-transformer-nlp

This repository contains a hand-curated list of great machine (deep) learning resources for Natural Language Processing (NLP) with a focus on Generative Pre-trained Transformer (GPT), Bidirectional Encoder Representations from Transformers (BERT), attention mechanism, Transformer architectures/networks, Chatbot, and transfer learning in NLP.

self-llm

This project is a Chinese tutorial for domestic beginners based on the AutoDL platform, providing full-process guidance for various open-source large models, including environment configuration, local deployment, and efficient fine-tuning. It simplifies the deployment, use, and application process of open-source large models, enabling more ordinary students and researchers to better use open-source large models and helping open and free large models integrate into the lives of ordinary learners faster.

LLMs-from-scratch

This repository contains the code for coding, pretraining, and finetuning a GPT-like LLM and is the official code repository for the book Build a Large Language Model (From Scratch). In _Build a Large Language Model (From Scratch)_, you'll discover how LLMs work from the inside out. In this book, I'll guide you step by step through creating your own LLM, explaining each stage with clear text, diagrams, and examples. The method described in this book for training and developing your own small-but-functional model for educational purposes mirrors the approach used in creating large-scale foundational models such as those behind ChatGPT.

PaddleNLP

PaddleNLP is an easy-to-use and high-performance NLP library. It aggregates high-quality pre-trained models in the industry and provides out-of-the-box development experience, covering a model library for multiple NLP scenarios with industry practice examples to meet developers' flexible customization needs.

For similar jobs

Pearl

Pearl is a production-ready Reinforcement Learning AI agent library open-sourced by the Applied Reinforcement Learning team at Meta. It enables researchers and practitioners to develop Reinforcement Learning AI agents that prioritize cumulative long-term feedback over immediate feedback and can adapt to environments with limited observability, sparse feedback, and high stochasticity. Pearl offers a diverse set of unique features for production environments, including dynamic action spaces, offline learning, intelligent neural exploration, safe decision making, history summarization, and data augmentation.

awesome-generative-information-retrieval

This repository contains a curated list of resources on generative information retrieval, including research papers, datasets, tools, and applications. Generative information retrieval is a subfield of information retrieval that uses generative models to generate new documents or passages of text that are relevant to a given query. This can be useful for a variety of tasks, such as question answering, summarization, and document generation. The resources in this repository are intended to help researchers and practitioners stay up-to-date on the latest advances in generative information retrieval.

open-webui

Open WebUI is an extensible, feature-rich, and user-friendly self-hosted WebUI designed to operate entirely offline. It supports various LLM runners, including Ollama and OpenAI-compatible APIs. For more information, be sure to check out our Open WebUI Documentation.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

litgpt

LitGPT is a command-line tool designed to easily finetune, pretrain, evaluate, and deploy 20+ LLMs **on your own data**. It features highly-optimized training recipes for the world's most powerful open-source large-language-models (LLMs).

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and Whatsapp, and you can share PDF, markdown, org-mode, notion files, and GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

firecrawl

Firecrawl is an API service that takes a URL, crawls it, and converts it into clean markdown. It crawls all accessible subpages and provides clean markdown for each, without requiring a sitemap. The API is easy to use and can be self-hosted. It also integrates with Langchain and Llama Index. The Python SDK makes it easy to crawl and scrape websites in Python code.

NeMo

NeMo Framework is a generative AI framework built for researchers and pytorch developers working on large language models (LLMs), multimodal models (MM), automatic speech recognition (ASR), and text-to-speech synthesis (TTS). The primary objective of NeMo is to provide a scalable framework for researchers and developers from industry and academia to more easily implement and design new generative AI models by being able to leverage existing code and pretrained models.