Awesome-LLM-Preference-Learning

The official repository of our survey paper: "Towards a Unified View of Preference Learning for Large Language Models: A Survey"

Stars: 54

The repository 'Awesome-LLM-Preference-Learning' is the official repository of a survey paper titled 'Towards a Unified View of Preference Learning for Large Language Models: A Survey'. It contains a curated list of papers related to preference learning for Large Language Models (LLMs). The repository covers various aspects of preference learning, including on-policy and off-policy methods, feedback mechanisms, reward models, algorithms, evaluation techniques, and more. The papers included in the repository explore different approaches to aligning LLMs with human preferences, improving mathematical reasoning in LLMs, enhancing code generation, and optimizing language model performance.

README:

This repo contains a curated list of 📙Awesome LLM Preference Learning Papers.

Reinforced Self-Training (ReST) for Language Modeling (2023.8) Caglar Gulcehre, Tom Le Paine, Srivatsan Srinivasan, Ksenia Konyushkova, Lotte Weerts, Abhishek Sharma, Aditya Siddhant, Alex Ahern, Miaosen Wang, Chenjie Gu, Wolfgang Macherey, Arnaud Doucet, Orhan Firat, Nando de Freitas [paper]

Statistical Rejection Sampling Improves Preference Optimization (2023.9) Tianqi Liu, Yao Zhao, Rishabh Joshi, Misha Khalman, Mohammad Saleh, Peter J. Liu, Jialu Liu [paper]

West-of-N: Synthetic Preference Generation for Improved Reward Modeling (2024.1) Alizée Pace, Jonathan Mallinson, Eric Malmi, Sebastian Krause, Aliaksei Severyn [paper]

Regularized Best-of-N Sampling to Mitigate Reward Hacking for Language Model Alignment (2024.4) Yuu Jinnai, Tetsuro Morimura, Kaito Ariu, Kenshi Abe [paper]

MCTS-based methods are commonly found in tasks involving complex reasoning, making them particularly promising for applications in mathematics, code generation, and general reasoning.

Alphazero-like Tree-Search can Guide Large Language Model Decoding and Training (2023.9) Xidong Feng, Ziyu Wan, Muning Wen, Stephen Marcus McAleer, Ying Wen, Weinan Zhang, Jun Wang [paper]

Math-Shepherd: Verify and Reinforce LLMs Step-by-step without Human Annotations (2023.12) Peiyi Wang, Lei Li, Zhihong Shao, R.X. Xu, Damai Dai, Yifei Li, Deli Chen, Y.Wu, Zhifang Sui [paper]

Improve Mathematical Reasoning in Language Models by Automated Process Supervision (2024.6) Liangchen Luo, Yinxiao Liu, Rosanne Liu, Samrat Phatale, Harsh Lara, Yunxuan Li, Lei Shu, Yun Zhu, Lei Meng, Jiao Sun, Abhinav Rastogi [paper]

ReST-MCTS*: LLM Self-Training via Process Reward Guided Tree Search (2024.6) Dan Zhang, Sining Zhoubian, Ziniu Hu, Yisong Yue, Yuxiao Dong, Jie Tang [paper]

Accessing GPT-4 level Mathematical Olympiad Solutions via Monte Carlo Tree Self-refine with LLaMa-3 8B (2024.6) Di Zhang, Xiaoshui Huang, Dongzhan Zhou, Yuqiang Li, Wanli Ouyang [paper]

Recovering Mental Representations from Large Language Models with Markov Chain Monte Carlo (2024.6) Jian-Qiao Zhu, Haijiang Yan, Thomas L. Griffiths [paper]

Mutual Reasoning Makes Smaller LLMs Stronger Problem-Solvers (2024.8) Zhenting Qi, Mingyuan Ma, Jiahang Xu, Li Lyna Zhang, Fan Yang, Mao Yang [paper]

Off-policy data usually comes from existing preference-alignment datasets, which can be found in RewardBench and Preference_dataset_repo. Preference data collected for training a reward model can also be used for preference learning.
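As a rough illustration of why reward-model data can be reused for preference learning, the sketch below assumes a pairwise record with hypothetical fields `prompt`, `chosen`, and `rejected`. It shows how the same pair could feed either a Bradley-Terry reward-model loss or a DPO-style loss. The field names, helper functions, and the default `beta` are assumptions made for illustration; they are not taken from RewardBench or from the code of any paper listed here.

```python
import math
from dataclasses import dataclass


@dataclass
class PreferencePair:
    """One off-policy preference record (field names are hypothetical)."""
    prompt: str
    chosen: str
    rejected: str


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def reward_model_loss(r_chosen: float, r_rejected: float) -> float:
    """Bradley-Terry pairwise loss: -log sigmoid(r_chosen - r_rejected).

    r_chosen and r_rejected are scalar scores a reward model assigns to the
    chosen and rejected responses of one PreferencePair.
    """
    return -log_sigmoid(r_chosen - r_rejected)


def dpo_loss(
    pi_chosen: float,
    pi_rejected: float,
    ref_chosen: float,
    ref_rejected: float,
    beta: float = 0.1,
) -> float:
    """DPO-style loss on sequence log-probabilities for the same pair.

    pi_* are log-probabilities under the policy being trained and ref_* are
    log-probabilities under a frozen reference model.
    """
    margin = (pi_chosen - ref_chosen) - (pi_rejected - ref_rejected)
    return -log_sigmoid(beta * margin)
```

In both cases the only supervision is which response of the pair was preferred, which is why one dataset can serve either objective. Scoring the responses with a reward model or with policy log-probabilities is left out of this sketch.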

Scaling Relationship on Learning Mathematical Reasoning with Large Language Models (2023.8) -- Answer Equivalence Zheng Yuan, Hongyi Yuan, Chengpeng Li, Guanting Dong, Keming Lu, Chuanqi Tan, Chang Zhou, Jingren Zhou [paper]

DeepSeek-Prover: Advancing Theorem Proving in LLMs through Large-Scale Synthetic Data (2024.5) Huajian Xin, Daya Guo, Zhihong Shao, Zhizhou Ren, Qihao Zhu, Bo Liu, Chong Ruan, Wenda Li, Xiaodan Liang [paper]

DeepSeek-Prover-V1.5: Harnessing Proof Assistant Feedback for Reinforcement Learning and Monte-Carlo Tree Search (2024.8) Huajian Xin, Z.Z. Ren, Junxiao Song, Zhihong Shao, Wanjia Zhao, Haocheng Wang, Bo Liu, Liyue Zhang, Xuan Lu, Qiushi Du, Wenjun Gao, Qihao Zhu, Dejian Yang, Zhibin Gou, Z.F. Wu, Fuli Luo, Chong Ruan [paper]

Contrastive Preference Optimization: Pushing the Boundaries of LLM Performance in Machine Translation (2024.1) Haoran Xu, Amr Sharaf, Yunmo Chen, Weiting Tan, Lingfeng Shen, Benjamin Van Durme, Kenton Murray, Young Jin Kim [paper]

PanGu-Coder2: Boosting Large Language Models for Code with Ranking Feedback (2023.7) Bo Shen, Jiaxin Zhang, Taihong Chen, Daoguang Zan, Bing Geng, An Fu, Muhan Zeng, Ailun Yu, Jichuan Ji, Jingyang Zhao, Yuenan Guo, Qianxiang Wang [paper]

RLTF: Reinforcement Learning from Unit Test Feedback (2023.7) Jiate Liu, Yiqin Zhu, Kaiwen Xiao, Qiang Fu, Xiao Han, Wei Yang, Deheng Ye [paper]

StepCoder: Improve Code Generation with Reinforcement Learning from Compiler Feedback (2024.2) Shihan Dou, Yan Liu, Haoxiang Jia, Limao Xiong, Enyu Zhou, Wei Shen, Junjie Shan, Caishuang Huang, Xiao Wang, Xiaoran Fan, Zhiheng Xi, Yuhao Zhou, Tao Ji, Rui Zheng, Qi Zhang, Xuanjing Huang, Tao Gui [paper]

Aligning LLM Agents by Learning Latent Preference from User Edits (2024.4) Ge Gao, Alexey Taymanov, Eduardo Salinas, Paul Mineiro, Dipendra Misra [paper]

RLAIF vs. RLHF: Scaling Reinforcement Learning from Human Feedback with AI Feedback (2023.9) Harrison Lee, Samrat Phatale, Hassan Mansoor, Thomas Mesnard, Johan Ferret, Kellie Lu, Colton Bishop, Ethan Hall, Victor Carbune, Abhinav Rastogi, Sushant Prakash [paper]

Regularized Best-of-N Sampling to Mitigate Reward Hacking for Language Model Alignment (2024.4) Yuu Jinnai, Tetsuro Morimura, Kaito Ariu, Kenshi Abe [paper]

West-of-N: Synthetic Preference Generation for Improved Reward Modeling (2024.1) Alizée Pace, Jonathan Mallinson, Eric Malmi, Sebastian Krause, Aliaksei Severyn [paper]

Reward Model Ensembles Help Mitigate Overoptimization (2023.10) Thomas Coste, Usman Anwar, Robert Kirk, David Krueger [paper]

Uncertainty-Penalized Reinforcement Learning from Human Feedback with Diverse Reward LoRA Ensembles (2023.12) Yuanzhao Zhai, Han Zhang, Yu Lei, Yue Yu, Kele Xu, Dawei Feng, Bo Ding, Huaimin Wang [paper]

WARM: On the Benefits of Weight Averaged Reward Models (2024.1) Alexandre Ramé, Nino Vieillard, Léonard Hussenot, Robert Dadashi, Geoffrey Cideron, Olivier Bachem, Johan Ferret [paper]

Improving Reinforcement Learning from Human Feedback with Efficient Reward Model Ensemble (2024.1) Shun Zhang, Zhenfang Chen, Sunli Chen, Yikang Shen, Zhiqing Sun, Chuang Gan [paper]

Solving math word problems with process- and outcome-based feedback (2022.11) Jonathan Uesato, Nate Kushman, Ramana Kumar, Francis Song, Noah Siegel, Lisa Wang, Antonia Creswell, Geoffrey Irving, Irina Higgins [paper]

Fine-Grained Human Feedback Gives Better Rewards for Language Model Training (2023.6) Zeqiu Wu, Yushi Hu, Weijia Shi, Nouha Dziri, Alane Suhr, Prithviraj Ammanabrolu, Noah A. Smith, Mari Ostendorf, Hannaneh Hajishirzi [paper]

Let's Verify Step by Step (2023.5) Hunter Lightman, Vineet Kosaraju, Yura Burda, Harri Edwards, Bowen Baker, Teddy Lee, Jan Leike, John Schulman, Ilya Sutskever, Karl Cobbe [paper]

OVM, Outcome-supervised Value Models for Planning in Mathematical Reasoning (2023.11) Fei Yu, Anningzhe Gao, Benyou Wang [paper]

Math-Shepherd: Verify and Reinforce LLMs Step-by-step without Human Annotations (2023.12) Peiyi Wang, Lei Li, Zhihong Shao, R.X. Xu, Damai Dai, Yifei Li, Deli Chen, Y.Wu, Zhifang Sui [paper]

Prior Constraints-based Reward Model Training for Aligning Large Language Models (2024.4) Hang Zhou, Chenglong Wang, Yimin Hu, Tong Xiao, Chunliang Zhang, Jingbo Zhu [paper]

Improve Mathematical Reasoning in Language Models by Automated Process Supervision (2024.6) Liangchen Luo, Yinxiao Liu, Rosanne Liu, Samrat Phatale, Harsh Lara, Yunxuan Li, Lei Shu, Yun Zhu, Lei Meng, Jiao Sun, Abhinav Rastogi [paper]

LLM Critics Help Catch Bugs in Mathematics: Towards a Better Mathematical Verifier with Natural Language Feedback (2024.6) Bofei Gao, Zefan Cai, Runxin Xu, Peiyi Wang, Ce Zheng, Runji Lin, Keming Lu, Dayiheng Liu, Chang Zhou, Wen Xiao, Junjie Hu, Tianyu Liu, Baobao Chang [paper]

PandaLM: An Automatic Evaluation Benchmark for LLM Instruction Tuning Optimization (2023.6) Yidong Wang, Zhuohao Yu, Zhengran Zeng, Linyi Yang, Cunxiang Wang, Hao Chen, Chaoya Jiang, Rui Xie, Jindong Wang, Xing Xie, Wei Ye, Shikun Zhang, Yue Zhang [paper]

LLM-Blender: Ensembling Large Language Models with Pairwise Ranking and Generative Fusion (2023.7) Dongfu Jiang, Xiang Ren, Bill Yuchen Lin [paper]

Self-Rewarding Language Models (2024.1) Weizhe Yuan, Richard Yuanzhe Pang, Kyunghyun Cho, Xian Li, Sainbayar Sukhbaatar, Jing Xu, Jason Weston [paper]

LLM Critics Help Catch LLM Bugs (2024.6) Nat McAleese, Rai Michael Pokorny, Juan Felipe Ceron Uribe, Evgenia Nitishinskaya, Maja Trebacz, Jan Leike [paper]

Meta-Rewarding Language Models: Self-Improving Alignment with LLM-as-a-Meta-Judge (2024.7) Tianhao Wu, Weizhe Yuan, Olga Golovneva, Jing Xu, Yuandong Tian, Jiantao Jiao, Jason Weston, Sainbayar Sukhbaatar [paper]

Generative Verifiers: Reward Modeling as Next-Token Prediction (2024.8) Lunjun Zhang, Arian Hosseini, Hritik Bansal, Mehran Kazemi, Aviral Kumar, Rishabh Agarwal [paper]

STaR: Bootstrapping Reasoning With Reasoning (2022.5) Eric Zelikman, Yuhuai Wu, Jesse Mu, Noah D. Goodman [paper]

RAFT: Reward rAnked FineTuning for Generative Foundation Model Alignment (2023.4) Hanze Dong, Wei Xiong, Deepanshu Goyal, Yihan Zhang, Winnie Chow, Rui Pan, Shizhe Diao, Jipeng Zhang, Kashun Shum, Tong Zhang [paper]

Scaling Relationship on Learning Mathematical Reasoning with Large Language Models (2023.8) Zheng Yuan, Hongyi Yuan, Chengpeng Li, Guanting Dong, Keming Lu, Chuanqi Tan, Chang Zhou, Jingren Zhou [paper]

Proximal Policy Optimization Algorithms (2017.7) John Schulman, Filip Wolski, Prafulla Dhariwal, Alec Radford, Oleg Klimov [paper]

DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models (2024.2) Zhihong Shao, Peiyi Wang, Qihao Zhu, Runxin Xu, Junxiao Song, Xiao Bi, Haowei Zhang, Mingchuan Zhang, Y.K. Li, Y. Wu, Daya Guo [paper]

ReMax: A Simple, Effective, and Efficient Reinforcement Learning Method for Aligning Large Language Models (2023.10) Ziniu Li, Tian Xu, Yushun Zhang, Zhihang Lin, Yang Yu, Ruoyu Sun, Zhi-Quan Luo [paper]

KTO: Model Alignment as Prospect Theoretic Optimization (2024.2) Kawin Ethayarajh, Winnie Xu, Niklas Muennighoff, Dan Jurafsky, Douwe Kiela [paper]

Chain of Hindsight Aligns Language Models with Feedback (2023.2) Hao Liu, Carmelo Sferrazza, Pieter Abbeel [paper]

Calibrating Sequence likelihood Improves Conditional Language Generation (2022.9) Yao Zhao, Misha Khalman, Rishabh Joshi, Shashi Narayan, Mohammad Saleh, Peter J. Liu [paper]

Direct Preference Optimization: Your Language Model is Secretly a Reward Model (2023.5) Rafael Rafailov, Archit Sharma, Eric Mitchell, Stefano Ermon, Christopher D. Manning, Chelsea Finn [paper]

A General Theoretical Paradigm to Understand Learning from Human Preferences (2023.10) Mohammad Gheshlaghi Azar, Mark Rowland, Bilal Piot, Daniel Guo, Daniele Calandriello, Michal Valko, Rémi Munos [paper]

Direct Alignment of Language Models via Quality-Aware Self-Refinement (2024.5) Runsheng Yu, Yong Wang, Xiaoqi Jiao, Youzhi Zhang, James T. Kwok [paper]

ORPO: Monolithic Preference Optimization without Reference Model (2024.3) Jiwoo Hong, Noah Lee, James Thorne [paper]

Mallows-DPO: Fine-Tune Your LLM with Preference Dispersions (2024.5) Haoxian Chen, Hanyang Zhao, Henry Lam, David Yao, Wenpin Tang [paper]

Group Robust Preference Optimization in Reward-free RLHF (2024.5) Shyam Sundhar Ramesh, Yifan Hu, Iason Chaimalas, Viraj Mehta, Pier Giuseppe Sessa, Haitham Bou Ammar, Ilija Bogunovic [paper]

Smaug: Fixing Failure Modes of Preference Optimisation with DPO-Positive (2024.2) Arka Pal, Deep Karkhanis, Samuel Dooley, Manley Roberts, Siddartha Naidu, Colin White [paper]

Beyond Reverse KL: Generalizing Direct Preference Optimization with Diverse Divergence Constraints (2023.9) Chaoqi Wang, Yibo Jiang, Chenghao Yang, Han Liu, Yuxin Chen [paper]

Towards Efficient Exact Optimization of Language Model Alignment (2024.2) Haozhe Ji, Cheng Lu, Yilin Niu, Pei Ke, Hongning Wang, Jun Zhu, Jie Tang, Minlie Huang [paper]

SimPO: Simple Preference Optimization with a Reference-Free Reward (2024.5) Yu Meng, Mengzhou Xia, Danqi Chen [paper]

sDPO: Don't Use Your Data All at Once (2024.3) Dahyun Kim, Yungi Kim, Wonho Song, Hyeonwoo Kim, Yunsu Kim, Sanghoon Kim, Chanjun Park [paper]

Learn Your Reference Model for Real Good Alignment (2024.4) Alexey Gorbatovski, Boris Shaposhnikov, Alexey Malakhov, Nikita Surnachev, Yaroslav Aksenov, Ian Maksimov, Nikita Balagansky, Daniil Gavrilov [paper]

Statistical Rejection Sampling Improves Preference Optimization (2023.9) Tianqi Liu, Yao Zhao, Rishabh Joshi, Misha Khalman, Mohammad Saleh, Peter J. Liu, Jialu Liu [paper]

Controllable Preference Optimization: Toward Controllable Multi-Objective Alignment (2024.2) Yiju Guo, Ganqu Cui, Lifan Yuan, Ning Ding, Jiexin Wang, Huimin Chen, Bowen Sun, Ruobing Xie, Jie Zhou, Yankai Lin, Zhiyuan Liu, Maosong Sun [paper]

MAPO: Advancing Multilingual Reasoning through Multilingual Alignment-as-Preference Optimization (2024.1) Shuaijie She, Wei Zou, Shujian Huang, Wenhao Zhu, Xiang Liu, Xiang Geng, Jiajun Chen [paper]

KnowTuning: Knowledge-aware Fine-tuning for Large Language Models (2024.2) Yougang Lyu, Lingyong Yan, Shuaiqiang Wang, Haibo Shi, Dawei Yin, Pengjie Ren, Zhumin Chen, Maarten de Rijke, Zhaochun Ren [paper]

TS-Align: A Teacher-Student Collaborative Framework for Scalable Iterative Finetuning of Large Language Models (2024.5) Chen Zhang, Chengguang Tang, Dading Chong, Ke Shi, Guohua Tang, Feng Jiang, Haizhou Li [paper]

Beyond One-Preference-Fits-All Alignment: Multi-Objective Direct Preference Optimization (2023.10) Zhanhui Zhou, Jie Liu, Jing Shao, Xiangyu Yue, Chao Yang, Wanli Ouyang, Yu Qiao [paper]

Hybrid Preference Optimization: Augmenting Direct Preference Optimization with Auxiliary Objectives (2024.5) Anirudhan Badrinath, Prabhat Agarwal, Jiajing Xu [paper]

RRHF: Rank Responses to Align Language Models with Human Feedback without tears (2023.4) Zheng Yuan, Hongyi Yuan, Chuanqi Tan, Wei Wang, Songfang Huang, Fei Huang [paper]

Preference Ranking Optimization for Human Alignment (2023.6) Feifan Song, Bowen Yu, Minghao Li, Haiyang Yu, Fei Huang, Yongbin Li, Houfeng Wang [paper]

CycleAlign: Iterative Distillation from Black-box LLM to White-box Models for Better Human Alignment (2023.10) Jixiang Hong, Quan Tu, Changyu Chen, Xing Gao, Ji Zhang, Rui Yan [paper]

Making Large Language Models Better Reasoners with Alignment (2023.9) Peiyi Wang, Lei Li, Liang Chen, Feifan Song, Binghuai Lin, Yunbo Cao, Tianyu Liu, Zhifang Sui [paper]

Don't Forget Your Reward Values: Language Model Alignment via Value-based Calibration (2024.2) Xin Mao, Feng-Lin Li, Huimin Xu, Wei Zhang, Anh Tuan Luu [paper]

LiPO: Listwise Preference Optimization through Learning-to-Rank (2024.2) Tianqi Liu, Zhen Qin, Junru Wu, Jiaming Shen, Misha Khalman, Rishabh Joshi, Yao Zhao, Mohammad Saleh, Simon Baumgartner, Jialu Liu, Peter J. Liu, Xuanhui Wang [paper]

LIRE: listwise reward enhancement for preference alignment (2024.5) Mingye Zhu, Yi Liu, Lei Zhang, Junbo Guo, Zhendong Mao [paper]

Black-Box Prompt Optimization: Aligning Large Language Models without Model Training (2023.11) Jiale Cheng, Xiao Liu, Kehan Zheng, Pei Ke, Hongning Wang, Yuxiao Dong, Jie Tang, Minlie Huang [paper]

The Unlocking Spell on Base LLMs: Rethinking Alignment via In-Context Learning (2023.12) Bill Yuchen Lin, Abhilasha Ravichander, Ximing Lu, Nouha Dziri, Melanie Sclar, Khyathi Chandu, Chandra Bhagavatula, Yejin Choi [paper]

ICDPO: Effectively Borrowing Alignment Capability of Others via In-context Direct Preference Optimization (2024.2) Feifan Song, Yuxuan Fan, Xin Zhang, Peiyi Wang, Houfeng Wang [paper]

Aligner: Efficient Alignment by Learning to Correct (2024.2) Jiaming Ji, Boyuan Chen, Hantao Lou, Donghai Hong, Borong Zhang, Xuehai Pan, Juntao Dai, Tianyi Qiu, Yaodong Yang [paper]

RAIN: Your Language Models Can Align Themselves without Finetuning (2023.9) Yuhui Li, Fangyun Wei, Jinjing Zhao, Chao Zhang, Hongyang Zhang [paper]

Reward-Augmented Decoding: Efficient Controlled Text Generation With a Unidirectional Reward Model (2023.10) Haikang Deng, Colin Raffel [paper]

Controlled Decoding from Language Models (2023.10) Sidharth Mudgal, Jong Lee, Harish Ganapathy, YaGuang Li, Tao Wang, Yanping Huang, Zhifeng Chen, Heng-Tze Cheng, Michael Collins, Trevor Strohman, Jilin Chen, Alex Beutel, Ahmad Beirami [paper]

DeAL: Decoding-time Alignment for Large Language Models (2024.2) James Y. Huang, Sailik Sengupta, Daniele Bonadiman, Yi-an Lai, Arshit Gupta, Nikolaos Pappas, Saab Mansour, Katrin Kirchhoff, Dan Roth [paper]

Decoding-time Realignment of Language Models (2024.2) Tianlin Liu, Shangmin Guo, Leonardo Bianco, Daniele Calandriello, Quentin Berthet, Felipe Llinares, Jessica Hoffmann, Lucas Dixon, Michal Valko, Mathieu Blondel [paper]

Rule-based benchmarks are traditional benchmarks that span various domains such as reasoning, translation, dialogue, question-answering, code generation, and more. We won't list them all individually.

G-Eval: NLG Evaluation using GPT-4 with Better Human Alignment (2023.3) Yang Liu, Dan Iter, Yichong Xu, Shuohang Wang, Ruochen Xu, Chenguang Zhu [paper]

Automated Evaluation of Personalized Text Generation using Large Language Models (2023.10) Yaqing Wang, Jiepu Jiang, Mingyang Zhang, Cheng Li, Yi Liang, Qiaozhu Mei, Michael Bendersky [paper]

Multi-Dimensional Evaluation of Text Summarization with In-Context Learning (2023.6) Sameer Jain, Vaishakh Keshava, Swarnashree Mysore Sathyendra, Patrick Fernandes, Pengfei Liu, Graham Neubig, Chunting Zhou [paper]

Large Language Models Are State-of-the-Art Evaluators of Translation Quality (2023.2) Tom Kocmi, Christian Federmann [paper]

Large Language Models are not Fair Evaluators (2023.5) Peiyi Wang, Lei Li, Liang Chen, Zefan Cai, Dawei Zhu, Binghuai Lin, Yunbo Cao, Qi Liu, Tianyu Liu, Zhifang Sui [paper]

Generative Judge for Evaluating Alignment (2023.10) Junlong Li, Shichao Sun, Weizhe Yuan, Run-Ze Fan, Hai Zhao, Pengfei Liu [paper]

Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena (2023.6) Lianmin Zheng, Wei-Lin Chiang, Ying Sheng, Siyuan Zhuang, Zhanghao Wu, Yonghao Zhuang, Zi Lin, Zhuohan Li, Dacheng Li, Eric P. Xing, Hao Zhang, Joseph E. Gonzalez, Ion Stoica [paper]

Prometheus: Inducing Fine-grained Evaluation Capability in Language Models (2023.10) Seungone Kim, Jamin Shin, Yejin Cho, Joel Jang, Shayne Longpre, Hwaran Lee, Sangdoo Yun, Seongjin Shin, Sungdong Kim, James Thorne, Minjoon Seo [paper]

PandaLM: An Automatic Evaluation Benchmark for LLM Instruction Tuning Optimization (2023.6) Yidong Wang, Zhuohao Yu, Zhengran Zeng, Linyi Yang, Cunxiang Wang, Hao Chen, Chaoya Jiang, Rui Xie, Jindong Wang, Xing Xie, Wei Ye, Shikun Zhang, Yue Zhang [paper]

PRD: Peer Rank and Discussion Improve Large Language Model based Evaluations (2023.7) Ruosen Li, Teerth Patel, Xinya Du [paper]

Evaluating Large Language Models at Evaluating Instruction Following (2023.10) Zhiyuan Zeng, Jiatong Yu, Tianyu Gao, Yu Meng, Tanya Goyal, Danqi Chen [paper]

Wider and Deeper LLM Networks are Fairer LLM Evaluators (2023.8) Xinghua Zhang, Bowen Yu, Haiyang Yu, Yangyu Lv, Tingwen Liu, Fei Huang, Hongbo Xu, Yongbin Li [paper]

Welcome to star & submit a PR to this repo!

@misc{gao2024unifiedviewpreferencelearning,
      title={Towards a Unified View of Preference Learning for Large Language Models: A Survey},
      author={Bofei Gao and Feifan Song and Yibo Miao and Zefan Cai and Zhe Yang and Liang Chen and Helan Hu and Runxin Xu and Qingxiu Dong and Ce Zheng and Wen Xiao and Ge Zhang and Daoguang Zan and Keming Lu and Bowen Yu and Dayiheng Liu and Zeyu Cui and Jian Yang and Lei Sha and Houfeng Wang and Zhifang Sui and Peiyi Wang and Tianyu Liu and Baobao Chang},
      year={2024},
      eprint={2409.02795},
      archivePrefix={arXiv},
      primaryClass={cs.CL},
      url={https://arxiv.org/abs/2409.02795},
}

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Awesome-LLM-Preference-Learning

Similar Open Source Tools

Awesome-LLM-Preference-Learning

The repository 'Awesome-LLM-Preference-Learning' is the official repository of a survey paper titled 'Towards a Unified View of Preference Learning for Large Language Models: A Survey'. It contains a curated list of papers related to preference learning for Large Language Models (LLMs). The repository covers various aspects of preference learning, including on-policy and off-policy methods, feedback mechanisms, reward models, algorithms, evaluation techniques, and more. The papers included in the repository explore different approaches to aligning LLMs with human preferences, improving mathematical reasoning in LLMs, enhancing code generation, and optimizing language model performance.

LLMAgentPapers

LLM Agents Papers is a repository containing must-read papers on Large Language Model Agents. It covers a wide range of topics related to language model agents, including interactive natural language processing, large language model-based autonomous agents, personality traits in large language models, memory enhancements, planning capabilities, tool use, multi-agent communication, and more. The repository also provides resources such as benchmarks, types of tools, and a tool list for building and evaluating language model agents. Contributors are encouraged to add important works to the repository.

awesome-llm-role-playing-with-persona

Awesome-llm-role-playing-with-persona is a curated list of resources for large language models for role-playing with assigned personas. It includes papers and resources related to persona-based dialogue systems, personalized response generation, psychology of LLMs, biases in LLMs, and more. The repository aims to provide a comprehensive collection of research papers and tools for exploring role-playing abilities of large language models in various contexts.

Prompt4ReasoningPapers

Prompt4ReasoningPapers is a repository dedicated to reasoning with language model prompting. It provides a comprehensive survey of cutting-edge research on reasoning abilities with language models. The repository includes papers, methods, analysis, resources, and tools related to reasoning tasks. It aims to support various real-world applications such as medical diagnosis, negotiation, etc.

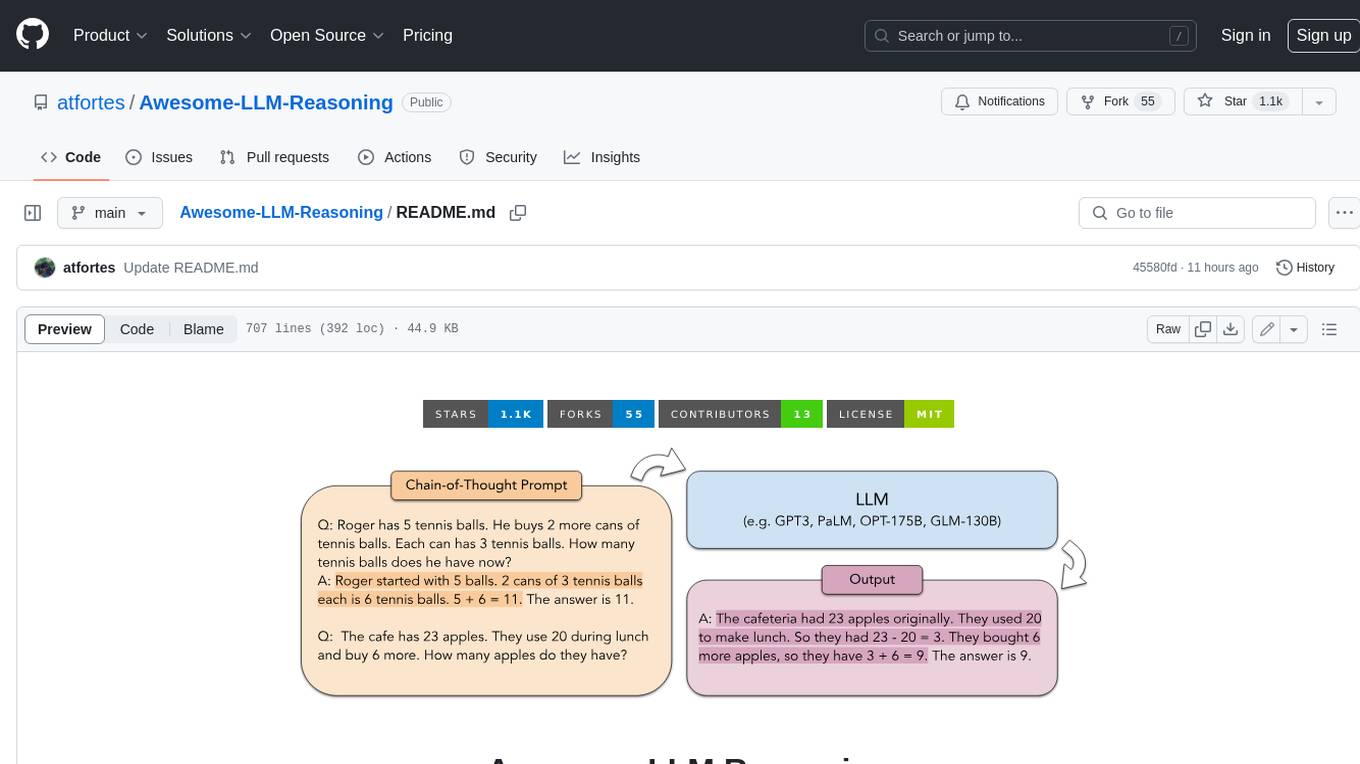

Awesome-LLM-Reasoning

**Curated collection of papers and resources on how to unlock the reasoning ability of LLMs and MLLMs.** **Description in less than 400 words, no line breaks and quotation marks.** Large Language Models (LLMs) have revolutionized the NLP landscape, showing improved performance and sample efficiency over smaller models. However, increasing model size alone has not proved sufficient for high performance on challenging reasoning tasks, such as solving arithmetic or commonsense problems. This curated collection of papers and resources presents the latest advancements in unlocking the reasoning abilities of LLMs and Multimodal LLMs (MLLMs). It covers various techniques, benchmarks, and applications, providing a comprehensive overview of the field. **5 jobs suitable for this tool, in lowercase letters.** - content writer - researcher - data analyst - software engineer - product manager **Keywords of the tool, in lowercase letters.** - llm - reasoning - multimodal - chain-of-thought - prompt engineering **5 specific tasks user can use this tool to do, in less than 3 words, Verb + noun form, in daily spoken language.** - write a story - answer a question - translate a language - generate code - summarize a document

Awesome-LLM-Reasoning-Openai-o1-Survey

The repository 'Awesome LLM Reasoning Openai-o1 Survey' provides a collection of survey papers and related works on OpenAI o1, focusing on topics such as LLM reasoning, self-play reinforcement learning, complex logic reasoning, and scaling law. It includes papers from various institutions and researchers, showcasing advancements in reasoning bootstrapping, reasoning scaling law, self-play learning, step-wise and process-based optimization, and applications beyond math. The repository serves as a valuable resource for researchers interested in exploring the intersection of language models and reasoning techniques.

awesome-generative-information-retrieval

This repository contains a curated list of resources on generative information retrieval, including research papers, datasets, tools, and applications. Generative information retrieval is a subfield of information retrieval that uses generative models to generate new documents or passages of text that are relevant to a given query. This can be useful for a variety of tasks, such as question answering, summarization, and document generation. The resources in this repository are intended to help researchers and practitioners stay up-to-date on the latest advances in generative information retrieval.

LLM4DB

LLM4DB is a repository focused on the intersection of Large Language Models (LLM) and Database technologies. It covers various aspects such as data processing, data analysis, database optimization, and data management for LLM. The repository includes works on data cleaning, entity matching, schema matching, data discovery, NL2SQL, data exploration, data visualization, configuration tuning, query optimization, and anomaly diagnosis using LLMs. It aims to provide insights and advancements in leveraging LLMs for improving data processing, analysis, and database management tasks.

LLM4DB

LLM4DB is a repository focused on the intersection of Large Language Models (LLMs) and Database technologies. It covers various aspects such as data processing, data analysis, database optimization, and data management for LLMs. The repository includes research papers, tools, and techniques related to leveraging LLMs for tasks like data cleaning, entity matching, schema matching, data discovery, NL2SQL, data exploration, data visualization, knob tuning, query optimization, and database diagnosis.

llm-self-correction-papers

This repository contains a curated list of papers focusing on the self-correction of large language models (LLMs) during inference. It covers various frameworks for self-correction, including intrinsic self-correction, self-correction with external tools, self-correction with information retrieval, and self-correction with training designed specifically for self-correction. The list includes survey papers, negative results, and frameworks utilizing reinforcement learning and OpenAI o1-like approaches. Contributions are welcome through pull requests following a specific format.

Awesome-LLM-RAG

This repository, Awesome-LLM-RAG, aims to record advanced papers on Retrieval Augmented Generation (RAG) in Large Language Models (LLMs). It serves as a resource hub for researchers interested in promoting their work related to LLM RAG by updating paper information through pull requests. The repository covers various topics such as workshops, tutorials, papers, surveys, benchmarks, retrieval-enhanced LLMs, RAG instruction tuning, RAG in-context learning, RAG embeddings, RAG simulators, RAG search, RAG long-text and memory, RAG evaluation, RAG optimization, and RAG applications.

LLM-as-a-Judge

LLM-as-a-Judge is a repository that includes papers discussed in a survey paper titled 'A Survey on LLM-as-a-Judge'. The repository covers various aspects of using Large Language Models (LLMs) as judges for tasks such as evaluation, reasoning, and decision-making. It provides insights into evaluation pipelines, improvement strategies, and specific tasks related to LLMs. The papers included in the repository explore different methodologies, applications, and future research directions for leveraging LLMs as evaluators in various domains.

awesome-ai-llm4education

The 'awesome-ai-llm4education' repository is a curated list of papers related to artificial intelligence (AI) and large language models (LLM) for education. It collects papers from top conferences, journals, and specialized domain-specific conferences, categorizing them based on specific tasks for better organization. The repository covers a wide range of topics including tutoring, personalized learning, assessment, material preparation, specific scenarios like computer science, language, math, and medicine, aided teaching, as well as datasets and benchmarks for educational research.

For similar tasks

alignment-handbook

The Alignment Handbook provides robust training recipes for continuing pretraining and aligning language models with human and AI preferences. It includes techniques such as continued pretraining, supervised fine-tuning, reward modeling, rejection sampling, and direct preference optimization (DPO). The handbook aims to fill the gap in public resources on training these models, collecting data, and measuring metrics for optimal downstream performance.

Xwin-LM

Xwin-LM is a powerful and stable open-source tool for aligning large language models, offering various alignment technologies like supervised fine-tuning, reward models, reject sampling, and reinforcement learning from human feedback. It has achieved top rankings in benchmarks like AlpacaEval and surpassed GPT-4. The tool is continuously updated with new models and features.

Awesome-LLM-Preference-Learning

The repository 'Awesome-LLM-Preference-Learning' is the official repository of a survey paper titled 'Towards a Unified View of Preference Learning for Large Language Models: A Survey'. It contains a curated list of papers related to preference learning for Large Language Models (LLMs). The repository covers various aspects of preference learning, including on-policy and off-policy methods, feedback mechanisms, reward models, algorithms, evaluation techniques, and more. The papers included in the repository explore different approaches to aligning LLMs with human preferences, improving mathematical reasoning in LLMs, enhancing code generation, and optimizing language model performance.

LLM-Synthetic-Data

LLM-Synthetic-Data is a repository focused on real-time, fine-grained LLM-Synthetic-Data generation. It includes methods, surveys, and application areas related to synthetic data for language models. The repository covers topics like pre-training, instruction tuning, model collapse, LLM benchmarking, evaluation, and distillation. It also explores application areas such as mathematical reasoning, code generation, text-to-SQL, alignment, reward modeling, long context, weak-to-strong generalization, agent and tool use, vision and language, factuality, federated learning, generative design, and safety.

syncode

SynCode is a novel framework for the grammar-guided generation of Large Language Models (LLMs) that ensures syntactically valid output with respect to defined Context-Free Grammar (CFG) rules. It supports general-purpose programming languages like Python, Go, SQL, JSON, and more, allowing users to define custom grammars using EBNF syntax. The tool compares favorably to other constrained decoders and offers features like fast grammar-guided generation, compatibility with HuggingFace Language Models, and the ability to work with various decoding strategies.

ice-score

ICE-Score is a tool designed to instruct large language models to evaluate code. It provides a minimum viable product (MVP) for evaluating generated code snippets using inputs such as problem, output, task, aspect, and model. Users can also evaluate with reference code and enable zero-shot chain-of-thought evaluation. The tool is built on codegen-metrics and code-bert-score repositories and includes datasets like CoNaLa and HumanEval. ICE-Score has been accepted to EACL 2024.

flashinfer

FlashInfer is a library for Language Languages Models that provides high-performance implementation of LLM GPU kernels such as FlashAttention, PageAttention and LoRA. FlashInfer focus on LLM serving and inference, and delivers state-the-art performance across diverse scenarios.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.