Best AI Tools to Optimize Language Model Performance

20 - AI tool Sites

Intel Gaudi AI Accelerator Developer

The Intel Gaudi AI accelerator developer website provides resources, guidance, tools, and support for building, migrating, and optimizing AI models. It offers software, model references, libraries, containers, and tools for training and deploying Generative AI and Large Language Models. The site focuses on the Intel Gaudi accelerators, including tutorials, documentation, and support for developers to enhance AI model performance.

Moreh

Moreh is an AI platform that aims to make hyperscale AI infrastructure more accessible for scaling any AI model and application. It provides full-stack infrastructure software, from PyTorch to GPUs, for the LLM era, enabling users to train large language models efficiently and effectively.

Cerebras

Cerebras is an AI tool that offers products and services related to AI supercomputers, cloud system processors, and applications for various industries. It provides high-performance computing solutions, including large language models, and caters to sectors such as health, energy, government, scientific computing, and financial services. Cerebras specializes in AI model services, offering state-of-the-art models and training services for tasks like multi-lingual chatbots and DNA sequence prediction. The platform also features the Cerebras Model Zoo, an open-source repository of AI models for developers and researchers.

Athina AI

Athina AI is a comprehensive platform designed to monitor, debug, analyze, and improve the performance of Large Language Models (LLMs) in production environments. It provides a suite of tools and features that enable users to detect and fix hallucinations, evaluate output quality, analyze usage patterns, and optimize prompt management. Athina AI supports integration with various LLMs and offers a range of evaluation metrics, including context relevancy, harmfulness, summarization accuracy, and custom evaluations. It also provides a self-hosted solution for complete privacy and control, a GraphQL API for programmatic access to logs and evaluations, and support for multiple users and teams. Athina AI's mission is to empower organizations to harness the full potential of LLMs by ensuring their reliability, accuracy, and alignment with business objectives.

Arcee AI

Arcee AI is a platform that offers a cost-effective, secure, end-to-end solution for building and deploying Small Language Models (SLMs). It allows users to merge and train custom language models by leveraging open source models and their own data. The platform is known for its Model Merging technique, which combines the power of pre-trained Large Language Models (LLMs) with user-specific data to create high-performing models across various industries.
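As a rough illustration of what merging models can mean at the weight level, the sketch below linearly interpolates two sets of parameters that share the same names and shapes. This is one simple merging scheme chosen for illustration, not a description of Arcee AI's technique; the `merge_linear` function and the toy parameter dictionaries are hypothetical.

```python
from typing import Dict, List

Params = Dict[str, List[float]]  # parameter name -> flat list of weights


def merge_linear(a: Params, b: Params, alpha: float = 0.5) -> Params:
    """Return alpha * a + (1 - alpha) * b for every shared parameter."""
    if a.keys() != b.keys():
        raise ValueError("models must have identical parameter names")
    merged: Params = {}
    for name in a:
        if len(a[name]) != len(b[name]):
            raise ValueError(f"shape mismatch for {name}")
        merged[name] = [alpha * x + (1 - alpha) * y for x, y in zip(a[name], b[name])]
    return merged


# Toy "models" with two parameters each (values are made up).
base = {"layer.weight": [0.0, 2.0], "layer.bias": [1.0]}
tuned = {"layer.weight": [1.0, 4.0], "layer.bias": [3.0]}
merged = merge_linear(base, tuned, alpha=0.5)
```

With `alpha=0.5` each merged weight is the plain average of the two inputs; moving `alpha` toward 1 keeps the result closer to the first model.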

LM-Kit.NET

LM-Kit.NET is a comprehensive AI toolkit for .NET developers, offering a wide range of features such as AI agent integration, data processing, text analysis, translation, text generation, and model optimization. The toolkit enables developers to create intelligent and adaptable AI applications by providing tools for language models, sentiment analysis, emotion detection, and more. With a focus on performance optimization and security, LM-Kit.NET lets developers integrate AI solutions into their C# and VB.NET applications.

DentroChat

DentroChat is an AI chat application that reimagines the way users interact with AI models. It allows users to select from various large language models (LLMs) in different modes, enabling them to choose the best AI for their specific tasks. With seamless mode switching and optimized performance, DentroChat offers flexibility and precision in AI interactions.

Unify

Unify is an AI tool that offers a unified platform for accessing and comparing Large Language Models (LLMs) from different providers. It allows users to combine models for faster, cheaper, and better responses, optimizing for quality, speed, and cost-efficiency. Unify simplifies the complex task of selecting the best LLM by providing transparent benchmarks, personalized routing, and performance optimization tools.
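To make the idea of routing on quality, speed, and cost concrete, here is a minimal sketch that scores candidate models with user-chosen weights and picks the best one. The model names, metric values, and the `pick_model` function are made up for illustration and do not reflect Unify's API or its benchmarks.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    name: str
    quality: float      # higher is better, in [0, 1]
    latency_s: float    # seconds per response, lower is better
    cost_per_1k: float  # price per 1k tokens, lower is better


def pick_model(cands: List[Candidate], w_quality: float, w_speed: float, w_cost: float) -> Candidate:
    """Pick the candidate with the highest weighted score."""
    max_latency = max(c.latency_s for c in cands)
    max_cost = max(c.cost_per_1k for c in cands)

    def score(c: Candidate) -> float:
        speed = 1 - c.latency_s / max_latency     # 0 for the slowest model
        cheapness = 1 - c.cost_per_1k / max_cost  # 0 for the priciest model
        return w_quality * c.quality + w_speed * speed + w_cost * cheapness

    return max(cands, key=score)


# Hypothetical candidates; the numbers are placeholders, not measurements.
models = [
    Candidate("large-model", quality=0.9, latency_s=2.0, cost_per_1k=0.03),
    Candidate("small-model", quality=0.7, latency_s=0.5, cost_per_1k=0.003),
]
quality_first = pick_model(models, w_quality=1.0, w_speed=0.0, w_cost=0.0)
budget_first = pick_model(models, w_quality=0.2, w_speed=0.4, w_cost=0.4)
```

Changing the weights changes the winner: a quality-only weighting selects the larger model, while a weighting that favors speed and cost selects the smaller one.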

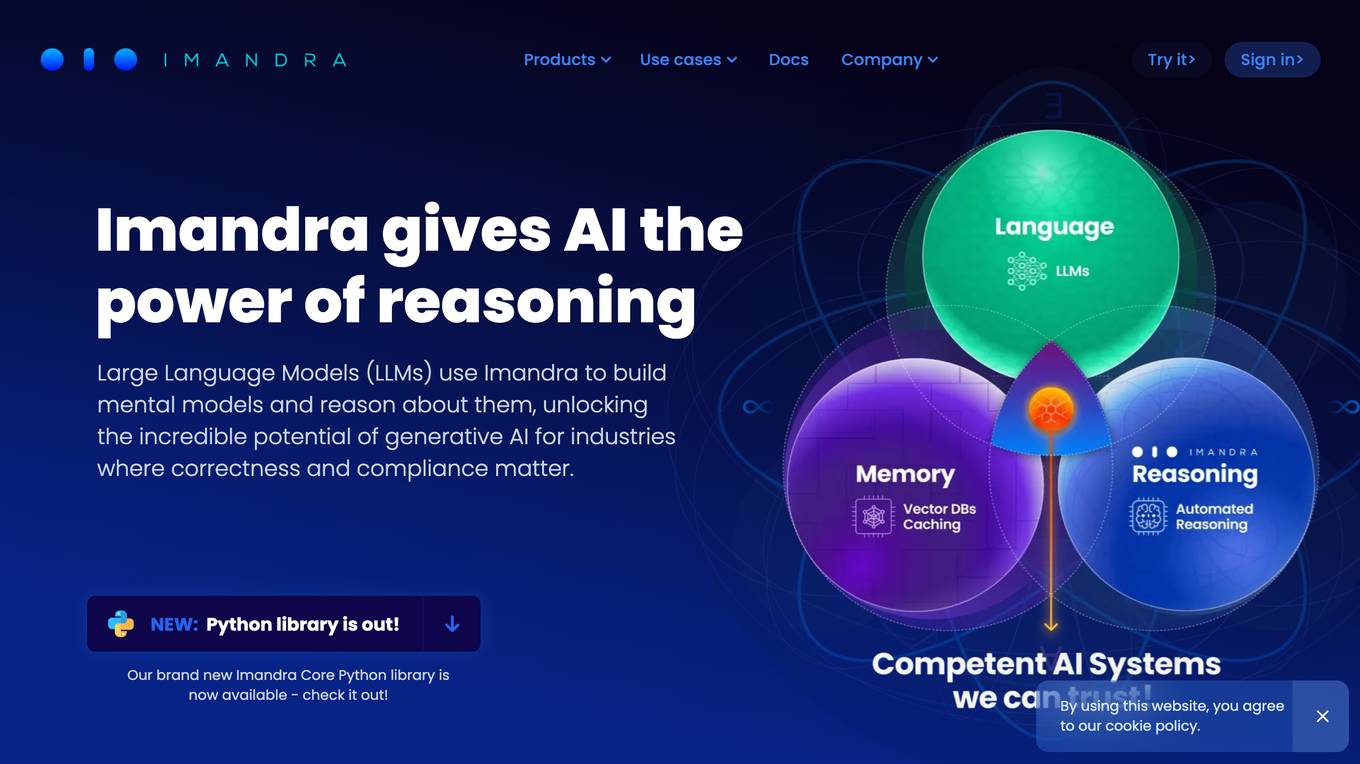

Imandra

Imandra is a company that provides automated logical reasoning for Large Language Models (LLMs). Imandra's technology allows LLMs to build mental models and reason about them, unlocking the potential of generative AI for industries where correctness and compliance matter. Imandra's platform is used by leading financial firms, the US Air Force, and DARPA.

Motific.ai

Motific.ai is a responsible GenAI tool powered by data at scale. It offers a fully managed service with natural language compliance and security guardrails, an intelligence service, and an enterprise data-powered, end-to-end retrieval-augmented generation (RAG) service. Users can rapidly deliver trustworthy GenAI assistants and API endpoints, configure assistants with their organization's data, optimize performance, and connect with top GenAI model providers. Motific.ai enables users to create custom knowledge bases, connect to various data sources, and ensure responsible AI practices. It supports English only and offers insights on usage, time savings, and model optimization.

ChatTTS

ChatTTS is a text-to-speech tool optimized for natural, conversational scenarios. It supports both Chinese and English and was trained on approximately 100,000 hours of data. With features like multi-language support, large data training, dialog task compatibility, open-source plans, control, security, and ease of use, ChatTTS provides high-quality and natural-sounding voice synthesis. It is designed for conversational tasks, dialogue speech generation, video introductions, educational content synthesis, and more. Users can integrate ChatTTS into their applications using the provided API and SDKs.

Prompt Engineering

Prompt Engineering is a discipline focused on developing and optimizing prompts to efficiently utilize language models (LMs) for various applications and research topics. It involves skills to understand the capabilities and limitations of large language models, improving their performance on tasks like question answering and arithmetic reasoning. Prompt engineering is essential for designing robust prompting techniques that interact with LLMs and other tools, enhancing safety and building new capabilities by augmenting LLMs with domain knowledge and external tools.

Weavel

Weavel is an AI tool for prompt engineering with large language models (LLMs). It offers features such as tracing, dataset curation, batch testing, and evaluations to enhance the performance of LLM applications. Weavel enables users to continuously optimize prompts using real-world data, prevent performance regression with CI/CD integration, and engage in human-in-the-loop interactions for scoring and feedback. Its AI prompt engineer, Ape, is described as outperforming competitors on benchmark tests and as improving continuously for each user's specific use case. With Weavel, users can evaluate LLM applications without pre-existing datasets, which streamlines the assessment process.

Trieve

Trieve is an AI-first infrastructure API that offers search, recommendations, and RAG capabilities by combining language models with tools for fine-tuning ranking and relevance. It helps companies build unfair competitive advantages through their discovery experiences, powering over 30,000 discovery experiences across various categories. Trieve supports semantic vector search, BM25 & SPLADE full-text search, hybrid search, merchandising & relevance tuning, and sub-sentence highlighting. The platform is built on open-source models, ensuring data privacy, and offers self-hostable options for sensitive data and maximum performance.
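Hybrid search generally means combining a keyword ranking (such as BM25) with a vector-similarity ranking. The sketch below uses reciprocal rank fusion, one common way to merge two ranked lists; whether Trieve uses this particular method is not stated here, and the document ids and the `reciprocal_rank_fusion` helper are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Fuse ranked lists of document ids; earlier positions earn larger scores."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by fused score (descending), breaking ties by document id.
    return sorted(scores, key=lambda d: (-scores[d], d))


keyword_hits = ["doc_a", "doc_b", "doc_c"]  # e.g. results from a BM25 index
vector_hits = ["doc_b", "doc_d", "doc_a"]   # e.g. results from a vector index
fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
```

Documents that appear near the top of both lists (here `doc_b` and `doc_a`) rise above documents found by only one retriever.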

Tensoic AI

Tensoic AI is an AI tool for fine-tuning and running inference on custom Large Language Models (LLMs). It offers ultra-fast fine-tuning and inference capabilities for enterprise-grade LLMs, with a focus on use case-specific tasks. The tool is efficient, cost-effective, and easy to use, enabling users to outperform general-purpose LLMs using synthetic data. Tensoic AI generates small, powerful models that can run on consumer-grade hardware, making it suitable for a wide range of applications.

Confident AI

Confident AI is an open-source evaluation infrastructure for Large Language Models (LLMs). It provides a centralized platform for judging LLM applications so that teams can confirm benefits and address weaknesses in their LLM implementation. With Confident AI, companies can define ground truths to ensure their LLM is behaving as expected, evaluate performance against expected outputs to pinpoint areas for iteration, and use advanced diff tracking to guide them toward the optimal LLM stack. The platform offers comprehensive analytics to identify areas of focus and features such as A/B testing, evaluation, output classification, reporting dashboard, dataset generation, and detailed monitoring to help productionize LLMs with confidence.
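Evaluating against expected outputs can be pictured as a small test harness. The following is a minimal sketch that uses case-insensitive exact matching; it is not Confident AI's API, and `evaluate`, `toy_app`, and the test cases are hypothetical stand-ins for a real LLM application and its ground truths.

```python
from typing import Callable, List, Tuple


def evaluate(app: Callable[[str], str], cases: List[Tuple[str, str]]) -> Tuple[float, List[str]]:
    """Return the pass rate and the prompts whose output missed the expected answer."""
    failures = []
    for prompt, expected in cases:
        actual = app(prompt)
        if actual.strip().lower() != expected.strip().lower():
            failures.append(prompt)
    pass_rate = 1 - len(failures) / len(cases)
    return pass_rate, failures


def toy_app(prompt: str) -> str:
    # Stand-in for a real LLM call; the second answer is deliberately wrong.
    answers = {"Capital of France?": "Paris", "2 + 2?": "5"}
    return answers.get(prompt, "")


cases = [("Capital of France?", "paris"), ("2 + 2?", "4")]
pass_rate, failures = evaluate(toy_app, cases)
```

The list of failing prompts is what points to the areas that need another iteration; real evaluations usually replace exact matching with softer metrics.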

MaestroQA

MaestroQA is a comprehensive Call Center Quality Assurance Software that offers a range of products and features to enhance QA processes. It provides customizable report builders, scorecard builders, calibration workflows, coaching workflows, automated QA workflows, screen capture, accurate transcriptions, root cause analysis, performance dashboards, AI grading assist, analytics, and integrations with various platforms. The platform caters to industries like eCommerce, financial services, gambling, insurance, B2B software, social media, and media, offering solutions for QA managers, team leaders, and executives.

Directly

Directly is an AI-powered platform that offers on-demand and automated customer support solutions. The platform connects organizations with highly qualified experts who can handle customer inquiries efficiently. By leveraging AI and machine learning, Directly automates repetitive questions, improving business continuity and digital transformation. The platform follows a pay-for-performance compensation model and provides global support in multiple languages. Directly aims to enhance customer satisfaction, reduce contact center volume, and save costs for businesses.

Groq

Groq is a fast AI inference tool that serves openly available models like Llama 3.1. It provides ultra-low-latency inference for cloud deployments and is compatible with other providers like OpenAI. Independent benchmarks are cited as evidence of Groq's speed, and it powers leading openly available AI models such as Llama, Mixtral, Gemma, and Whisper. The tool has gained recognition in the industry for its high-speed inference compute capabilities and has received significant funding to challenge established players like Nvidia.

Beebzi.AI

Beebzi.AI is an all-in-one AI content creation platform that offers a wide array of tools for generating various types of content such as articles, blogs, emails, images, voiceovers, and more. The platform utilizes advanced AI technology and behavioral science to empower businesses and individuals in their marketing and sales endeavors. With features like AI Article Wizard, AI Room Designer, AI Landing Page Generator, and AI Code Generation, Beebzi.AI revolutionizes content creation by providing customizable templates, multiple language support, and real-time data insights. The platform also offers various subscription plans tailored for individual entrepreneurs, teams, and businesses, with flexible pricing models based on word count allocations. Beebzi.AI aims to streamline content creation processes, enhance productivity, and drive organic traffic through SEO-optimized content.

2 - Open Source AI Tools

flashinfer

FlashInfer is a library for Large Language Models that provides high-performance implementations of LLM GPU kernels such as FlashAttention, PagedAttention, and LoRA. FlashInfer focuses on LLM serving and inference, and delivers state-of-the-art performance across diverse scenarios.

Awesome-LLM-Preference-Learning

The repository 'Awesome-LLM-Preference-Learning' is the official repository of a survey paper titled 'Towards a Unified View of Preference Learning for Large Language Models: A Survey'. It contains a curated list of papers related to preference learning for Large Language Models (LLMs). The repository covers various aspects of preference learning, including on-policy and off-policy methods, feedback mechanisms, reward models, algorithms, evaluation techniques, and more. The papers included in the repository explore different approaches to aligning LLMs with human preferences, improving mathematical reasoning in LLMs, enhancing code generation, and optimizing language model performance.
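For readers new to the topic, a reward model is often trained on pairs of responses where one was preferred over the other. The sketch below shows one widely used pairwise (Bradley-Terry style) loss; it is offered as background under that assumption, not as the formulation of any specific paper in the repository, and the function name is hypothetical.

```python
import math


def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Negative log-likelihood that the chosen response beats the rejected one.

    Computes -log(sigmoid(margin)) where margin = reward_chosen - reward_rejected,
    using a numerically stable softplus form.
    """
    margin = reward_chosen - reward_rejected
    # softplus(-margin) = max(-margin, 0) + log(1 + exp(-|margin|))
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


agree = pairwise_preference_loss(2.0, 0.0)     # reward model agrees with the label
disagree = pairwise_preference_loss(0.0, 2.0)  # reward model disagrees with the label
```

The loss is small when the reward model scores the preferred response higher and grows as the ranking is inverted, which is what pushes the model toward human preferences during training.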

20 - OpenAI Gpts

LFG GPT

Talk to the paper "Navigation with Large Language Models: Semantic Guesswork as a Heuristic for Planning" (LFG).

QuickSilver AI - Natural Language R.A.G DocuMaster

Easily format and optimize your documents, create NLRAG (Natural Language Retrieval Augmented Generation) indexes and more!

Legal Bidding Expert

Chinese-language support for Baidu legal bidding with a focus on audience analysis and creative content.

MT5 Master

MetaTrader 5 and MQL5 language expert, here to solve programming problems and answer questions.

Instablog

I will create a blog post optimized for search engines on any topic and in any language.

Subtitle Proofreader

Proofreads auto-generated YouTube subtitles to prepare them for translation.