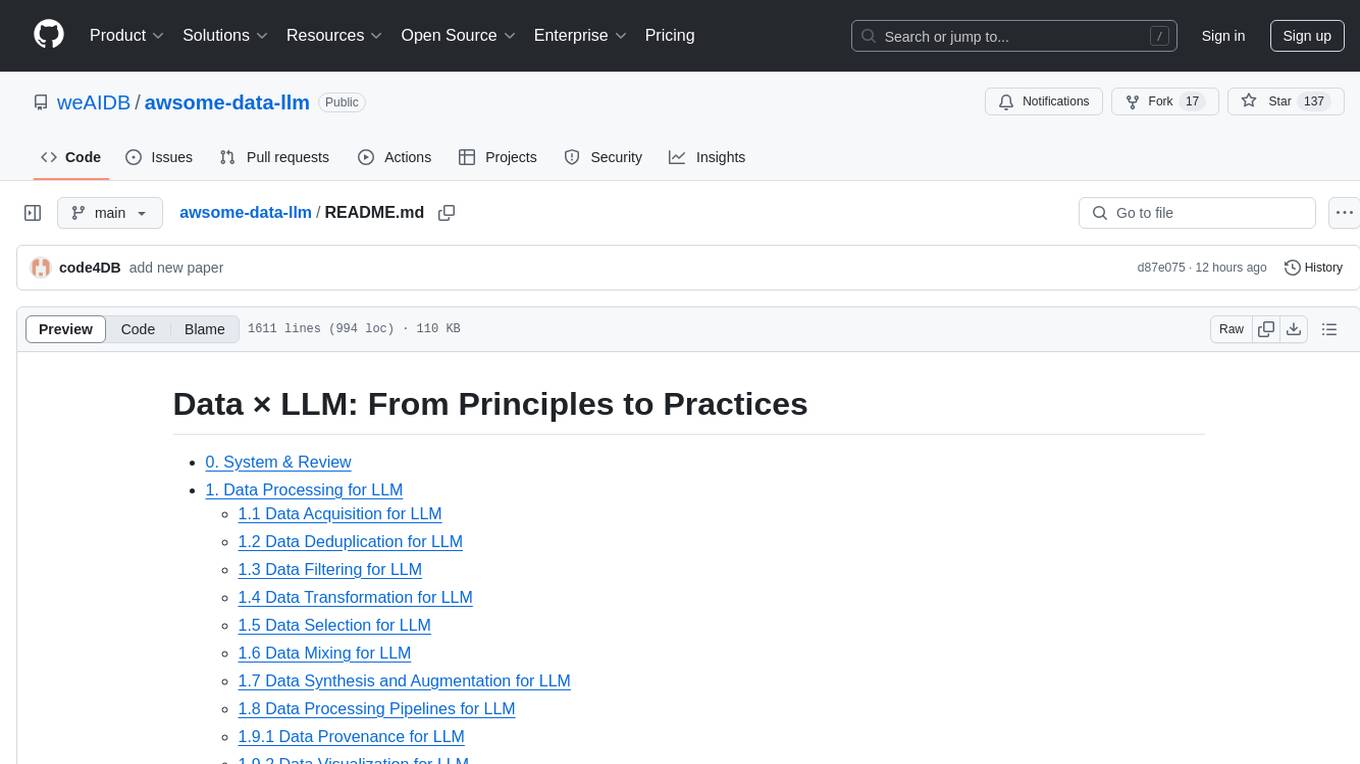

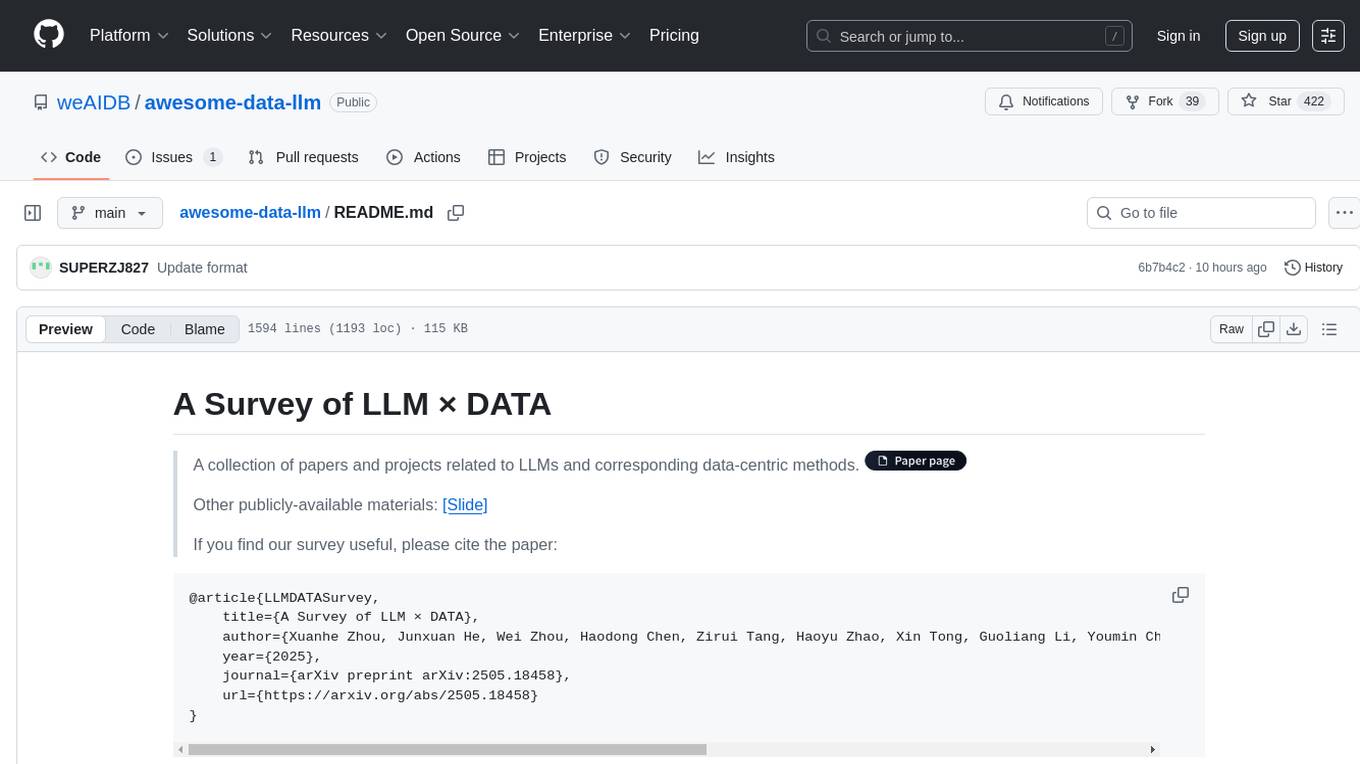

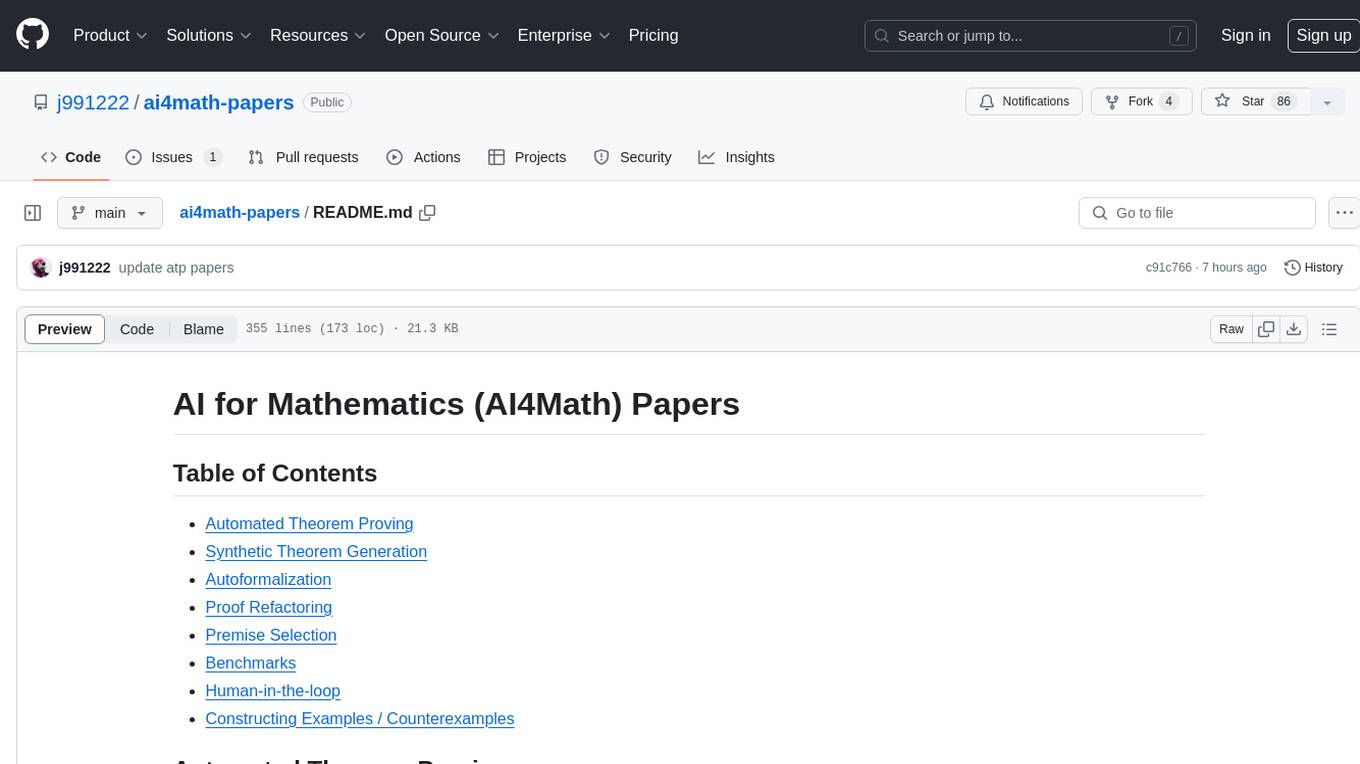

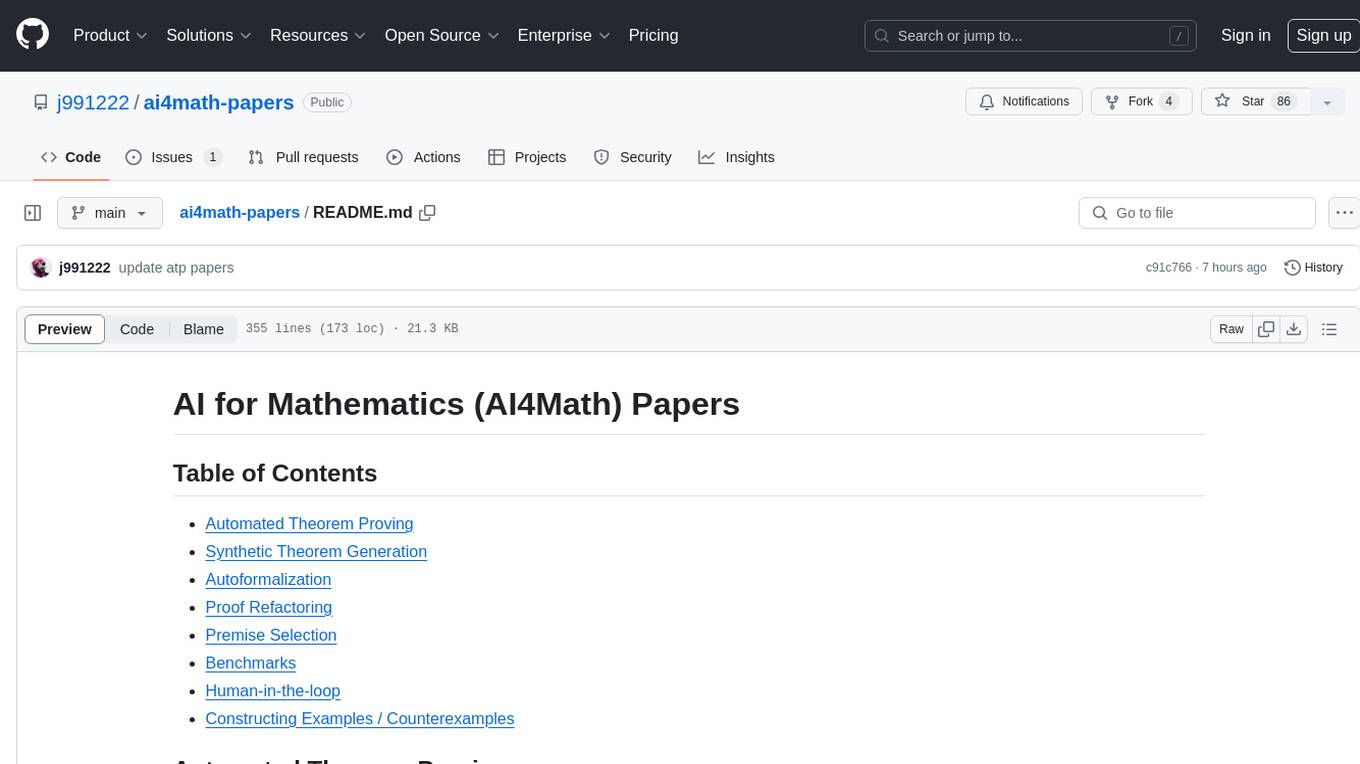

ai4math-papers

AI for Mathematics (AI4Math) paper list

Stars: 126

The 'ai4math-papers' repository contains a collection of research papers related to AI applications in mathematics, including automated theorem proving, synthetic theorem generation, autoformalization, proof refactoring, premise selection, benchmarks, human-in-the-loop interactions, and constructing examples/counterexamples. The papers cover various topics such as neural theorem proving, reinforcement learning for theorem proving, generative language modeling, formal mathematics statement curriculum learning, and more. The repository serves as a valuable resource for researchers and practitioners interested in the intersection of AI and mathematics.

README:

- Automated Theorem Proving

- Synthetic Theorem Generation

- Autoformalization

- Proof Refactoring

- Premise Selection

- Benchmarks

- Human-in-the-loop

- Constructing Examples / Counterexamples

-

Holophrasm: a neural Automated Theorem Prover for higher-order logic. arXiv preprint 2016 [pdf]

Daniel Whalen

-

Deep Network Guided Proof Search. 21st International Conference on Logic for Programming, Artificial Intelligence and Reasoning 2017 [pdf]

Sarah Loos, Geoffrey Irving, Christian Szegedy, Cezary Kaliszyk

-

Reinforcement Learning of Theorem Proving. NeurIPS 2018 [pdf]

Cezary Kaliszyk, Josef Urban, Henryk Michalewski, Miroslav Olšák

-

GamePad: A Learning Environment for Theorem Proving. ICLR 2019 [pdf] [code]

Daniel Huang, Prafulla Dhariwal, Dawn Song, Ilya Sutskever

-

HOList: An Environment for Machine Learning of Higher Order Logic Theorem Proving. ICML 2019 [pdf] [dataset]

Kshitij Bansal, Sarah Loos, Markus Rabe, Christian Szegedy, Stewart Wilcox

-

Learning to Prove Theorems via Interacting with Proof Assistants. ICML 2019 [pdf] [code]

Kaiyu Yang, Jia Deng

-

Graph Representations for Higher-Order Logic and Theorem Proving. AAAI 2020 [pdf]

Aditya Paliwal, Sarah Loos, Markus Rabe, Kshitij Bansal, Christian Szegedy

-

Generative Language Modeling for Automated Theorem Proving. arXiv preprint 2020 [pdf]

Stanislas Polu, Ilya Sutskever

-

Learning to Prove Theorems by Learning to Generate Theorems. NeurIPS 2020 [pdf] [code]

Mingzhe Wang, Jia Deng

-

TacticToe: Learning to Prove with Tactics. Journal of Automated Reasoning 2021 [pdf]

Thibault Gauthier, Cezary Kaliszyk, Josef Urban, Ramana Kumar, Michael Norrish

-

A Deep Reinforcement Learning Approach to First-Order Logic Theorem Proving. AAAI 2021 [pdf] [code]

Maxwell Crouse, Ibrahim Abdelaziz, Bassem Makni, Spencer Whitehead, Cristina Cornelio, Pavan Kapanipathi, Kavitha Srinivas, Veronika Thost, Michael Witbrock, Achille Fokoue

-

TacticZero: Learning to Prove Theorems from Scratch with Deep Reinforcement Learning. NeurIPS 2021 [pdf]

Minchao Wu, Michael Norrish, Christian Walder, Amir Dezfouli

-

Learning to Guide a Saturation-Based Theorem Prover. TPAMI 2022 [pdf] [code]

Ibrahim Abdelaziz, Maxwell Crouse, Bassem Makni, Vernon Austil, Cristina Cornelio, Shajith Ikbal, Pavan Kapanipathi, Ndivhuwo Makondo, Kavitha Srinivas, Michael Witbrock, Achille Fokoue

-

Proof Artifact Co-training for Theorem Proving with Language Model. ICLR 2022 [pdf] [tactic step data] [PACT data]

Jesse Michael Han, Jason Rute, Yuhuai Wu, Edward W. Ayers, Stanislas Polu

-

Formal Mathematics Statement Curriculum Learning. ICML 2022 [pdf]

Stanislas Polu, Jesse Michael Han, Kunhao Zheng, Mantas Baksys, Igor Babuschkin, Ilya Sutskever

-

Thor: Wielding Hammers to Integrate Language Models and Automated Theorem Provers. NeurIPS 2022 [pdf]

Albert Q. Jiang, Wenda Li, Szymon Tworkowski, Konrad Czechowski, Tomasz Odrzygóźdź, Piotr Miłoś, Yuhuai Wu, Mateja Jamnik

-

NaturalProver: Grounded Mathematical Proof Generation with Language Models. NeurIPS 2022 [pdf] [code]

Sean Welleck, Jiacheng Liu, Ximing Lu, Hannaneh Hajishirzi, Yejin Choi

-

HyperTree Proof Search for Neural Theorem Proving. NeurIPS 2022 [pdf]

Guillaume Lample, Timothee Lacroix, Marie-anne Lachaux, Aurelien Rodriguez, Amaury Hayat, Thibaut Lavril, Gabriel Ebner, Xavier Martinet

-

Draft, Sketch, and Prove: Guiding Formal Theorem Provers with Informal Proofs. ICLR 2023 [pdf] [code]

Albert Qiaochu Jiang, Sean Welleck, Jin Peng Zhou, Timothee Lacroix, Jiacheng Liu, Wenda Li, Mateja Jamnik, Guillaume Lample, Yuhuai Wu

-

Baldur: Whole-Proof Generation and Repair with Large Language Models arxiv preprint 2023 [pdf]

Emily First, Markus N. Rabe, Talia Ringer, Yuriy Brun

-

Decomposing the Enigma: Subgoal-based Demonstration Learning for Formal Theorem Proving. arXiv preprint 2023 [pdf] [code]

Xueliang Zhao, Wenda Li, Lingpeng Kong

-

LeanDojo: Theorem Proving with Retrieval-Augmented Language Models. NeurIPS 2023 Datasets and Benchmarks Track [pdf] [code]

Kaiyu Yang, Aidan M. Swope, Alex Gu, Rahul Chalamala, Peiyang Song, Shixing Yu, Saad Godil, Ryan Prenger, Anima Anandkumar

-

DT-Solver: Automated Theorem Proving with Dynamic-Tree Sampling Guided by Proof-level Value Function. ACL 2023 [pdf]

Haiming Wang, Ye Yuan, Zhengying Liu, Jianhao Shen, Yichun Yin, Jing Xiong, Enze Xie, Han Shi, Yujun Li, Lin Li, Jian Yin, Zhenguo Li, Xiaodan Liang

-

Lyra: Orchestrating Dual Correction in Automated Theorem Proving. arXiv preprint 2023 [pdf] [code]

Chuanyang Zheng, Haiming Wang, Enze Xie, Zhengying Liu, Jiankai Sun, Huajian Xin, Jianhao Shen, Zhenguo Li, Yu Li

-

A Language-Agent Approach to Formal Theorem-Proving. arXiv preprint 2023 [pdf]

Amitayush Thakur, Yeming Wen, Swarat Chaudhuri

-

LEGO-Prover: Neural Theorem Proving with Growing Libraries. ICLR 2024 [pdf] [code]

Haiming Wang, Huajian Xin, Chuanyang Zheng, Lin Li, Zhengying Liu, Qingxing Cao, Yinya Huang, Jing Xiong, Han Shi, Enze Xie, Jian Yin, Zhenguo Li, Heng Liao, Xiaodan Liang

-

LLMSTEP: LLM proofstep suggestions in Lean. The 3rd Workshop on Mathematical Reasoning and AI at NeurIPS 2023 [pdf] [code]

Sean Welleck, Rahul Saha

-

Temperature-scaled large language models for Lean proofstep prediction. The 3rd Workshop on Mathematical Reasoning and AI at NeurIPS 2023 [pdf]

Fabian Gloeckle, Baptiste Roziere, Amaury Hayat, Gabriel Synnaeve

-

Graph2Tac: Learning Hierarchical Representations of Math Concepts in Theorem proving. arXiv preprint 2024 [pdf] [code]

Jason Rute, Miroslav Olšák, Lasse Blaauwbroek, Fidel Ivan Schaposnik Massolo, Jelle Piepenbrock, Vasily Pestun

-

Solving olympiad geometry without human demonstrations. Nature 2024 [pdf][code]

Trieu H. Trinh, Yuhuai Wu, Quoc V. Le, He He, Thang Luong

-

FGeo-TP: A Language Model-Enhanced Solver for Geometry Problems. Symmetry 2024 [pdf]

Yiming He, Jia Zou, Xiaokai Zhang, Na Zhu, Tuo Leng

-

FGeo-HyperGNet: Geometry Problem Solving Integrating Formal Symbolic System and Hypergraph Neural Network. arXiv preprint 2024 [pdf][code]

Xiaokai Zhang, Na Zhu, Yiming He, Jia Zou, Cheng Qin, Yang Li, Zhenbing Zeng, Tuo Leng

-

FGeo-SSS: A Search-Based Symbolic Solver for Human-like Automated Geometric Reasoning. Symmetry 2024 [pdf]

Xiaokai Zhang, Na Zhu, Yiming He, Jia Zou, Cheng Qin, Yang Li, Tuo Leng

-

FGeo-DRL: Deductive Reasoning for Geometric Problems through Deep Reinforcement Learning. Symmetry 2024 [pdf]

Jia Zou, Xiaokai Zhang, Yiming He, Na Zhu, Tuo Leng

-

Wu's Method can Boost Symbolic AI to Rival Silver Medalists and AlphaGeometry to Outperform Gold Medalists at IMO Geometry. arXiv preprint 2024 [pdf][code]

Shiven Sinha, Ameya Prabhu, Ponnurangam Kumaraguru, Siddharth Bhat, Matthias Bethge

-

Learn from Failure: Fine-Tuning LLMs with Trial-and-Error Data for Intuitionistic Propositional Logic Proving. ACL 2024 [pdf] [dataset]

Chenyang An, Zhibo Chen, Qihao Ye, Emily First, Letian Peng, Jiayun Zhang, Zihan Wang, Sorin Lerner, Jingbo Shang

-

Proving Theorems Recursively. arXiv preprint 2024 [pdf] [code]

Haiming Wang, Huajian Xin, Zhengying Liu, Wenda Li, Yinya Huang, Jianqiao Lu, Zhicheng Yang, Jing Tang, Jian Yin, Zhenguo Li, Xiaodan Liang

-

Learning to Prove Theorems by Learning to Generate Theorems. NeurIPS 2020 [pdf] [code]

Mingzhe Wang, Jia Deng

-

INT: An Inequality Benchmark for Evaluating Generalization in Theorem Proving. ICLR 2021 [pdf] [code]

Yuhuai Wu, Albert Q. Jiang, Jimmy Ba, Roger Grosse

-

Solving olympiad geometry without human demonstrations. Nature 2024 [pdf][code]

Trieu H. Trinh, Yuhuai Wu, Quoc V. Le, He He, Thang Luong

-

MUSTARD: Mastering Uniform Synthesis of Theorem and Proof Data. ICLR 2024 [pdf][code]

Yinya Huang, Xiaohan Lin, Zhengying Liu, Qingxing Cao, Huajian Xin, Haiming Wang, Zhenguo Li, Linqi Song, Xiaodan Liang

-

ATG: Benchmarking Automated Theorem Generation for Generative Language Models. NAACL 2024 [pdf]

Xiaohan Lin, Qingxing Cao, Yinya Huang, Zhicheng Yang, Zhengying Liu, Zhenguo Li, Xiaodan Liang

-

Exploration of Neural Machine Translation in Autoformalization of Mathematics in Mizar. CPP 2020 [pdf]

Qingxiang Wang, Chad Brown, Cezary Kaliszyk, Josef Urban

-

Autoformalization with Large Language Models. NeurIPS 2022 [pdf]

Yuhuai Wu, Albert Qiaochu Jiang, Wenda Li, Markus Norman Rabe, Charles E Staats, Mateja Jamnik, Christian Szegedy

-

ProofNet: Autoformalizing and Formally Proving Undergraduate-Level Mathematics. arXiv preprint 2023 [pdf] [code]

Zhangir Azerbayev, Bartosz Piotrowski, Hailey Schoelkopf, Edward W. Ayers, Dragomir Radev, Jeremy Avigad

-

Multilingual Mathematical Autoformalization. arXiv preprint 2023 [pdf] [code]

Albert Q. Jiang, Wenda Li, Mateja Jamnik

-

Lean Workbook: A large-scale Lean problem set formalized from natural language math problems. arXiv preprint 2024 [pdf] [dataset] [code]

Huaiyuan Ying, Zijian Wu, Yihan Geng, Jiayu Wang, Dahua Lin, Kai Chen

-

REFACTOR: Learning to Extract Theorems from Proofs. ICLR 2024 [pdf] [code]

Jin Peng Zhou, Yuhuai Wu, Qiyang Li, Roger Grosse

-

DeepMath - Deep Sequence Models for Premise Selection. NeurIPS 2016 [pdf]

Alex A. Alemi, Francois Chollet, Niklas Een, Geoffrey Irving, Christian Szegedy, Josef Urban

-

Premise Selection for Theorem Proving by Deep Graph Embedding. NeurIPS 2017 [pdf]

Mingzhe Wang, Yihe Tang, Jian Wang, Jia Deng

-

Natural Language Premise Selection: Finding Supporting Statements for Mathematical Text. LREC 2020 [pdf] [code]

Deborah Ferreira, André Freitas

-

Premise Selection in Natural Language Mathematical Texts. ACL 2020 [pdf]

Deborah Ferreira, André Freitas

-

Machine-Learned Premise Selection for Lean arXiv preprint 2023 [pdf] [code]

Bartosz Piotrowski, Ramon Fernández Mir, Edward Ayers

-

Magnushammer: A Transformer-based Approach to Premise Selection. ICLR 2024 [pdf]

Maciej Mikuła, Szymon Antoniak, Szymon Tworkowski, Albert Qiaochu Jiang, Jin Peng Zhou, Christian Szegedy, Łukasz Kuciński, Piotr Miłoś, Yuhuai Wu

-

HolStep: A Machine Learning Dataset for Higher-order Logic Theorem Proving. ICLR 2017 [pdf] [dataset] [code]

Cezary Kaliszyk, François Chollet, Christian Szegedy

-

Learning to Prove Theorems via Interacting with Proof Assistants. ICML 2019 [pdf] [code]

Kaiyu Yang, Jia Deng

-

HOList: An Environment for Machine Learning of Higher Order Logic Theorem Proving. ICML 2019 [pdf] [dataset]

Kshitij Bansal, Sarah Loos, Markus Rabe, Christian Szegedy, Stewart Wilcox

-

IsarStep: a Benchmark for High-level Mathematical Reasoning. ICLR 2021 [pdf] [code]

Wenda Li, Lei Yu, Yuhuai Wu, Lawrence C. Paulson

-

INT: An Inequality Benchmark for Evaluating Generalization in Theorem Proving. ICLR 2021 [pdf] [code]

Yuhuai Wu, Albert Q. Jiang, Jimmy Ba, Roger Grosse

-

NaturalProofs: Mathematical Theorem Proving in Natural Language. NeurIPS 2021 Datasets and Benchmarks Track (Round 1) [pdf] [code]

Sean Welleck, Jiacheng Liu, Ronan Le Bras, Hannaneh Hajishirzi, Yejin Choi, Kyunghyun Cho

-

MiniF2F: a cross-system benchmark for formal Olympiad-level mathematics. ICLR 2022 [pdf] [dataset]

Kunhao Zheng, Jesse Michael Han, Stanislas Polu

-

ProofNet: Autoformalizing and Formally Proving Undergraduate-Level Mathematics. arXiv preprint 2023 [pdf] [code]

Zhangir Azerbayev, Bartosz Piotrowski, Hailey Schoelkopf, Edward W. Ayers, Dragomir Radev, Jeremy Avigad

-

Evaluating Language Models for Mathematics through Interactions. arXiv preprint 2023 [pdf] [code]

Katherine M. Collins, Albert Q. Jiang, Simon Frieder, Lionel Wong, Miri Zilka, Umang Bhatt, Thomas Lukasiewicz, Yuhuai Wu, Joshua B. Tenenbaum, William Hart, Timothy Gowers, Wenda Li, Adrian Weller, Mateja Jamnik

-

LeanDojo: Theorem Proving with Retrieval-Augmented Language Models. NeurIPS 2023 Datasets and Benchmarks Track [pdf] [code]

Kaiyu Yang, Aidan M. Swope, Alex Gu, Rahul Chalamala, Peiyang Song, Shixing Yu, Saad Godil, Ryan Prenger, Anima Anandkumar

-

FIMO: A Challenge Formal Dataset for Automated Theorem Proving. arXiv preprint 2023 [pdf]

Chengwu Liu, Jianhao Shen, Huajian Xin, Zhengying Liu, Ye Yuan, Haiming Wang, Wei Ju, Chuanyang Zheng, Yichun Yin, Lin Li, Ming Zhang, Qun Liu

-

TRIGO: Benchmarking Formal Mathematical Proof Reduction for Generative Language Models. EMNLP 2023 [pdf] [code]

Jing Xiong, Jianhao Shen, Ye Yuan, Haiming Wang, Yichun Yin, Zhengying Liu, Lin Li, Zhijiang Guo, Qingxing Cao, Yinya Huang, Chuanyang Zheng, Xiaodan Liang, Ming Zhang, Qun Liu

-

MLFMF: Data Sets for Machine Learning for Mathematical Formalization. NeurIPS 2023 [pdf] [code]

Andrej Bauer, Matej Petković, Ljupčo Todorovski

-

FormalGeo: The First Step Toward Human-like IMO-level Geometric Automated Reasoning. arXiv preprint 2023 [pdf] [code]

Xiaokai Zhang, Na Zhu, Yiming He, Jia Zou, Qike Huang, Xiaoxiao Jin, Yanjun Guo, Chenyang Mao, Zhe Zhu, Dengfeng Yue, Fangzhen Zhu, Yang Li, Yifan Wang, Yiwen Huang, Runan Wang, Cheng Qin, Zhenbing Zeng, Shaorong Xie, Xiangfeng Luo, Tuo Leng

-

MUSTARD: Mastering Uniform Synthesis of Theorem and Proof Data. ICLR 2024 [pdf][code]

Yinya Huang, Xiaohan Lin, Zhengying Liu, Qingxing Cao, Huajian Xin, Haiming Wang, Zhenguo Li, Linqi Song, Xiaodan Liang

-

ATG: Benchmarking Automated Theorem Generation for Generative Language Models. NAACL 2024 [pdf]

Xiaohan Lin, Qingxing Cao, Yinya Huang, Zhicheng Yang, Zhengying Liu, Zhenguo Li, Xiaodan Liang

-

Advancing mathematics by guiding human intuition with AI. Nature 2021 [pdf]

Alex Davies, Petar Veličković, Lars Buesing, Sam Blackwell, Daniel Zheng, Nenad Tomašev, Richard Tanburn, Peter Battaglia, Charles Blundell, András Juhász, Marc Lackenby, Geordie Williamson, Demis Hassabis & Pushmeet Kohli

-

Machine Learning Kreuzer--Skarke Calabi--Yau Threefolds. arXiv preprint 2021 [pdf]

Per Berglund, Ben Campbell, Vishnu Jejjala

-

Machine Learning Calabi-Yau Hypersurfaces. Physical Review D 2022 [pdf]

David S. Berman, Yang-Hui He, Edward Hirst

-

Machine learning assisted exploration for affine Deligne-Lusztig varieties. Peking Mathematical Journal 2024 [pdf] [code]

Bin Dong, Xuhua He, Pengfei Jin, Felix Schremmer, Qingchao Yu

-

Can Transformers Do Enumerative Geometry? arXiv preprint 2024 [pdf]

Baran Hashemi, Roderic G. Corominas, Alessandro Giacchetto

-

Constructions in combinatorics via neural networks. arXiv preprint 2021 [pdf]

Adam Zsolt Wagner

-

Searching for ribbons with machine learning. arXiv preprint 2023 [pdf]

Sergei Gukov, James Halverson, Ciprian Manolescu, Fabian Ruehle

-

Mathematical discoveries from program search with large language models. Nature 2024 [pdf][code]

Bernardino Romera-Paredes, Mohammadamin Barekatain, Alexander Novikov, Matej Balog, M. Pawan Kumar, Emilien Dupont, Francisco J. R. Ruiz, Jordan S. Ellenberg, Pengming Wang, Omar Fawzi, Pushmeet Kohli, Alhussein Fawzi

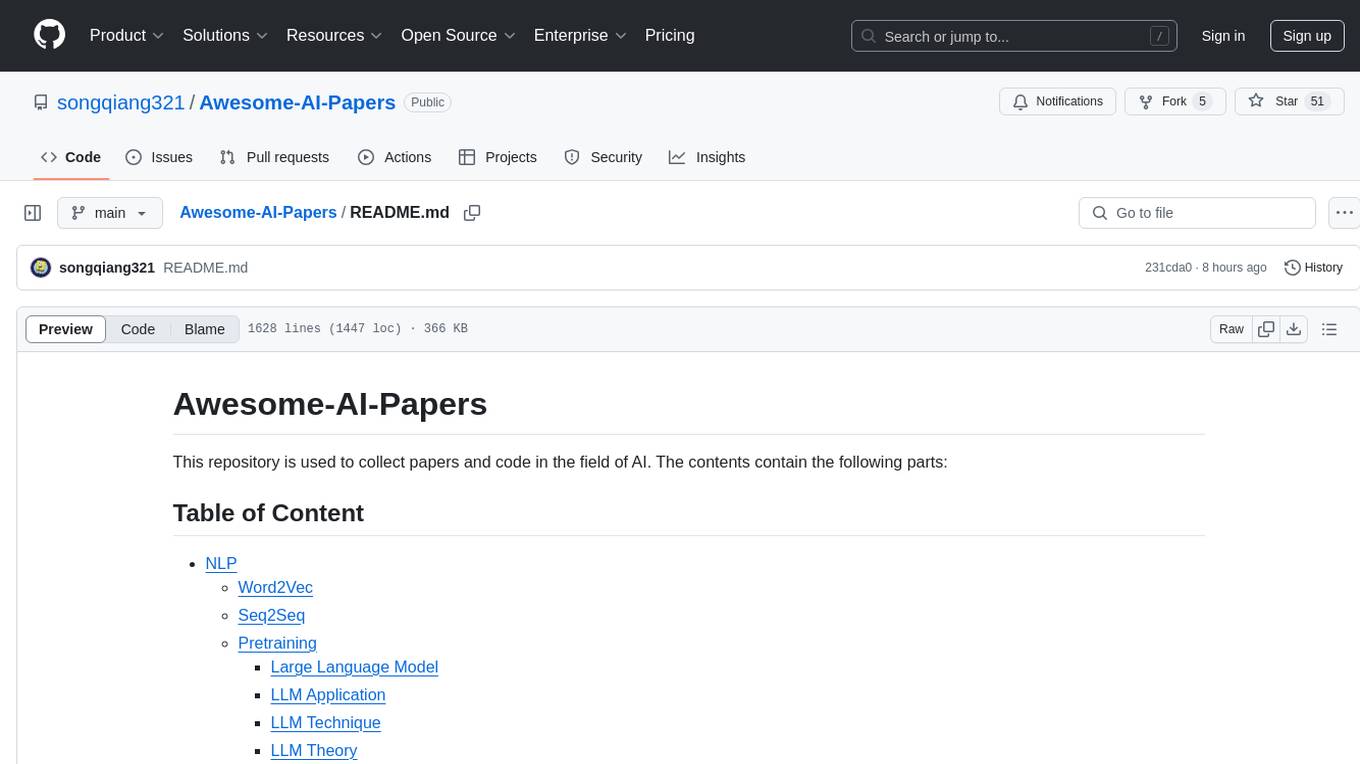

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ai4math-papers

Similar Open Source Tools

ai4math-papers

The 'ai4math-papers' repository contains a collection of research papers related to AI applications in mathematics, including automated theorem proving, synthetic theorem generation, autoformalization, proof refactoring, premise selection, benchmarks, human-in-the-loop interactions, and constructing examples/counterexamples. The papers cover various topics such as neural theorem proving, reinforcement learning for theorem proving, generative language modeling, formal mathematics statement curriculum learning, and more. The repository serves as a valuable resource for researchers and practitioners interested in the intersection of AI and mathematics.

LLM4DB

LLM4DB is a repository focused on the intersection of Large Language Models (LLM) and Database technologies. It covers various aspects such as data processing, data analysis, database optimization, and data management for LLM. The repository includes works on data cleaning, entity matching, schema matching, data discovery, NL2SQL, data exploration, data visualization, configuration tuning, query optimization, and anomaly diagnosis using LLMs. It aims to provide insights and advancements in leveraging LLMs for improving data processing, analysis, and database management tasks.

awesome-generative-information-retrieval

This repository contains a curated list of resources on generative information retrieval, including research papers, datasets, tools, and applications. Generative information retrieval is a subfield of information retrieval that uses generative models to generate new documents or passages of text that are relevant to a given query. This can be useful for a variety of tasks, such as question answering, summarization, and document generation. The resources in this repository are intended to help researchers and practitioners stay up-to-date on the latest advances in generative information retrieval.

LLM4DB

LLM4DB is a repository focused on the intersection of Large Language Models (LLMs) and Database technologies. It covers various aspects such as data processing, data analysis, database optimization, and data management for LLMs. The repository includes research papers, tools, and techniques related to leveraging LLMs for tasks like data cleaning, entity matching, schema matching, data discovery, NL2SQL, data exploration, data visualization, knob tuning, query optimization, and database diagnosis.

Awesome-LLM-RAG

This repository, Awesome-LLM-RAG, aims to record advanced papers on Retrieval Augmented Generation (RAG) in Large Language Models (LLMs). It serves as a resource hub for researchers interested in promoting their work related to LLM RAG by updating paper information through pull requests. The repository covers various topics such as workshops, tutorials, papers, surveys, benchmarks, retrieval-enhanced LLMs, RAG instruction tuning, RAG in-context learning, RAG embeddings, RAG simulators, RAG search, RAG long-text and memory, RAG evaluation, RAG optimization, and RAG applications.

awesome-open-ended

A curated list of open-ended learning AI resources focusing on algorithms that invent new and complex tasks endlessly, inspired by human advancements. The repository includes papers, safety considerations, surveys, perspectives, and blog posts related to open-ended AI research.

SLMs-Survey

SLMs-Survey is a comprehensive repository that includes papers and surveys on small language models. It covers topics such as technology, on-device applications, efficiency, enhancements for LLMs, and trustworthiness. The repository provides a detailed overview of existing SLMs, their architecture, enhancements, and specific applications in various domains. It also includes information on SLM deployment optimization techniques and the synergy between SLMs and LLMs.

Awesome-LLM-Preference-Learning

The repository 'Awesome-LLM-Preference-Learning' is the official repository of a survey paper titled 'Towards a Unified View of Preference Learning for Large Language Models: A Survey'. It contains a curated list of papers related to preference learning for Large Language Models (LLMs). The repository covers various aspects of preference learning, including on-policy and off-policy methods, feedback mechanisms, reward models, algorithms, evaluation techniques, and more. The papers included in the repository explore different approaches to aligning LLMs with human preferences, improving mathematical reasoning in LLMs, enhancing code generation, and optimizing language model performance.

Awesome-Latent-CoT

This repository contains a regularly updated paper list for Large Language Models (LLMs) reasoning in latent space. Reasoning in latent space allows for more flexible and efficient thought representation beyond language tokens, bringing AI closer to human-like cognition. The repository covers various aspects of LLMs, including pre-training, supervised finetuning, analysis, interpretability, multimodal reasoning, and applications. It aims to showcase the advancements in reasoning with latent thoughts and continuous concepts in AI models.

LLMAgentPapers

LLM Agents Papers is a repository containing must-read papers on Large Language Model Agents. It covers a wide range of topics related to language model agents, including interactive natural language processing, large language model-based autonomous agents, personality traits in large language models, memory enhancements, planning capabilities, tool use, multi-agent communication, and more. The repository also provides resources such as benchmarks, types of tools, and a tool list for building and evaluating language model agents. Contributors are encouraged to add important works to the repository.

Awesome-LLM-Reasoning-Openai-o1-Survey

The repository 'Awesome LLM Reasoning Openai-o1 Survey' provides a collection of survey papers and related works on OpenAI o1, focusing on topics such as LLM reasoning, self-play reinforcement learning, complex logic reasoning, and scaling law. It includes papers from various institutions and researchers, showcasing advancements in reasoning bootstrapping, reasoning scaling law, self-play learning, step-wise and process-based optimization, and applications beyond math. The repository serves as a valuable resource for researchers interested in exploring the intersection of language models and reasoning techniques.

Awesome-Multimodal-LLM-for-Code

This repository contains papers, methods, benchmarks, and evaluations for code generation under multimodal scenarios. It covers UI code generation, scientific code generation, slide code generation, visually rich programming, logo generation, program repair, UML code generation, and general benchmarks.

For similar tasks

ai4math-papers

The 'ai4math-papers' repository contains a collection of research papers related to AI applications in mathematics, including automated theorem proving, synthetic theorem generation, autoformalization, proof refactoring, premise selection, benchmarks, human-in-the-loop interactions, and constructing examples/counterexamples. The papers cover various topics such as neural theorem proving, reinforcement learning for theorem proving, generative language modeling, formal mathematics statement curriculum learning, and more. The repository serves as a valuable resource for researchers and practitioners interested in the intersection of AI and mathematics.

Evaluator

NeMo Evaluator SDK is an open-source platform for robust, reproducible, and scalable evaluation of Large Language Models. It enables running hundreds of benchmarks across popular evaluation harnesses against any OpenAI-compatible model API. The platform ensures auditable and trustworthy results by executing evaluations in open-source Docker containers. NeMo Evaluator SDK is built on four core principles: Reproducibility by Default, Scale Anywhere, State-of-the-Art Benchmarking, and Extensible and Customizable.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.