react-native-nitro-mlx

Run LLMs on device using Apple's MLX

Stars: 74

react-native-nitro-mlx runs LLMs, Text-to-Speech, and Speech-to-Text on-device in React Native using MLX Swift. It covers downloading models, loading them, generating and streaming text responses, generating and streaming speech audio, and transcribing speech from a buffer or the microphone. Any MLX-compatible model from Hugging Face should work, and an enum of pre-defined models is exported for convenience. The package requires iOS 26.0+ and documents the API for each feature.

README:

Run LLMs, Text-to-Speech, and Speech-to-Text on-device in React Native using MLX Swift.

Requirements:

- iOS 26.0+

Installation:

    npm install react-native-nitro-mlx react-native-nitro-modules

Then run pod install:

    cd ios && pod install

Download a model:

    import { ModelManager } from 'react-native-nitro-mlx'

    await ModelManager.download('mlx-community/Qwen3-0.6B-4bit', (progress) => {
      console.log(`Download progress: ${(progress * 100).toFixed(1)}%`)
    })

Load a model and generate a response:

    import { LLM } from 'react-native-nitro-mlx'

    await LLM.load('mlx-community/Qwen3-0.6B-4bit', {
      onProgress: (progress) => {
        console.log(`Loading: ${(progress * 100).toFixed(0)}%`)
      }
    })

    const response = await LLM.generate('What is the capital of France?')
    console.log(response)

You can provide conversation history or few-shot examples when loading the model:

    await LLM.load('mlx-community/Qwen3-0.6B-4bit', {
      onProgress: (progress) => {
        console.log(`Loading: ${(progress * 100).toFixed(0)}%`)
      },
      additionalContext: [
        { role: 'user', content: 'What is machine learning?' },
        { role: 'assistant', content: 'Machine learning is...' },
        { role: 'user', content: 'Can you explain neural networks?' }
      ]
    })

Stream tokens as they are generated:

    let response = ''

    await LLM.stream('Tell me a story', (token) => {
      response += token
      console.log(response)
    })

Stop the current generation:

    LLM.stop()

Generate speech:

    import { TTS, MLXModel } from 'react-native-nitro-mlx'

    await TTS.load(MLXModel.PocketTTS, {
      onProgress: (progress) => {
        console.log(`Loading: ${(progress * 100).toFixed(0)}%`)
      }
    })

    const audioBuffer = await TTS.generate('Hello world!', {
      voice: 'alba',
      speed: 1.0
    })

    // Or stream audio chunks as they're generated
    await TTS.stream('Hello world!', (chunk) => {
      // Process each audio chunk
    }, { voice: 'alba' })

Available voices: alba, azelma, cosette, eponine, fantine, javert, jean, marius

Transcribe speech:

    import { STT, MLXModel } from 'react-native-nitro-mlx'

    await STT.load(MLXModel.GLM_ASR_Nano_4bit, {
      onProgress: (progress) => {
        console.log(`Loading: ${(progress * 100).toFixed(0)}%`)
      }
    })

    // Transcribe an audio buffer
    const text = await STT.transcribe(audioBuffer)

    // Or use live microphone transcription
    await STT.startListening()
    const partial = await STT.transcribeBuffer() // Get current transcript
    const final = await STT.stopListening() // Stop and get final transcript

LLM methods:

| Method | Description |
|---|---|
| `load(modelId: string, options?: LLMLoadOptions): Promise<void>` | Load a model into memory |
| `generate(prompt: string): Promise<string>` | Generate a complete response |
| `stream(prompt: string, onToken: (token: string) => void): Promise<string>` | Stream tokens as they're generated |
| `stop(): void` | Stop the current generation |
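As a minimal sketch of how `stream` and `stop` might be combined, the helper below accumulates tokens and stops generation once a character budget is reached. `LLMLike` and `streamWithBudget` are hypothetical names, not part of the package. The interface only mirrors the signatures in the table above, on the assumption that the real `LLM` export satisfies it. The README does not say whether the `stream` promise resolves or rejects after `stop()`, so the sketch tolerates both.

```typescript
// Structural type mirroring the documented LLM methods (assumption: the real
// `LLM` export from 'react-native-nitro-mlx' has this shape).
interface LLMLike {
  stream(prompt: string, onToken: (token: string) => void): Promise<string>
  stop(): void
}

// Hypothetical helper: stream a response and stop once `maxChars` is reached.
async function streamWithBudget(
  llm: LLMLike,
  prompt: string,
  maxChars: number
): Promise<string> {
  let text = ''
  let stopped = false
  try {
    await llm.stream(prompt, (token) => {
      if (stopped) return // ignore tokens that arrive after the stop request
      text += token
      if (text.length >= maxChars) {
        stopped = true
        llm.stop()
      }
    })
  } catch (error) {
    // Rethrow unless we requested the stop ourselves.
    if (!stopped) throw error
  }
  return text
}
```

In an app this could be called as `streamWithBudget(LLM, 'Tell me a story', 500)`. The budget is checked per token, so the result can exceed `maxChars` by up to one token.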

LLMLoadOptions:

| Property | Type | Description |
|---|---|---|
| `onProgress` | `(progress: number) => void` | Optional callback invoked with loading progress (0-1) |
| `additionalContext` | `LLMMessage[]` | Optional conversation history or few-shot examples to provide to the model |
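Because `onProgress` receives a fraction between 0 and 1, a thin wrapper can reduce log noise. The sketch below is not part of the package, and `makePercentReporter` is a hypothetical name. It reports only when the rounded percentage changes, assuming the callback may fire many times per percent.

```typescript
// Hypothetical helper: wrap a reporter so it fires only when the rounded
// percentage changes.
function makePercentReporter(
  report: (percent: number) => void
): (progress: number) => void {
  let last = -1
  return (progress: number) => {
    // Clamp to the documented 0-1 range before converting to a percentage.
    const clamped = Math.min(1, Math.max(0, progress))
    const percent = Math.round(clamped * 100)
    if (percent !== last) {
      last = percent
      report(percent)
    }
  }
}
```

The returned function has the shape of `onProgress`, so it could be passed as `{ onProgress: makePercentReporter((p) => console.log(p)) }` when loading a model.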

LLMMessage:

| Property | Type | Description |
|---|---|---|
| `role` | `'user' \| 'assistant' \| 'system'` | The role of the message sender |
| `content` | `string` | The message content |
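Few-shot examples for `additionalContext` are alternating user and assistant messages, so they can be built from question/answer pairs. The following is a sketch under that assumption. `buildFewShotContext` is a hypothetical helper, and the `LLMMessage` type is re-declared locally from the table above rather than imported.

```typescript
// Shape taken from the LLMMessage table; declared locally for this sketch.
type LLMMessage = { role: 'user' | 'assistant' | 'system'; content: string }

// Hypothetical helper: turn [question, answer] pairs into alternating
// user/assistant messages, optionally preceded by a system message.
function buildFewShotContext(
  examples: Array<[string, string]>,
  systemPrompt?: string
): LLMMessage[] {
  const messages: LLMMessage[] = []
  if (systemPrompt) {
    messages.push({ role: 'system', content: systemPrompt })
  }
  for (const [question, answer] of examples) {
    messages.push({ role: 'user', content: question })
    messages.push({ role: 'assistant', content: answer })
  }
  return messages
}
```

The result could then be passed as `additionalContext` when loading a model.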

LLM properties:

| Property | Description |
|---|---|
| `isLoaded: boolean` | Whether a model is loaded |
| `isGenerating: boolean` | Whether generation is in progress |
| `modelId: string` | The currently loaded model ID |
| `debug: boolean` | Enable debug logging |

TTS methods:

| Method | Description |
|---|---|
| `load(modelId: string, options?: TTSLoadOptions): Promise<void>` | Load a TTS model into memory |
| `generate(text: string, options?: TTSGenerateOptions): Promise<ArrayBuffer>` | Generate audio from text |
| `stream(text: string, onAudioChunk: (audio: ArrayBuffer) => void, options?: TTSGenerateOptions): Promise<void>` | Stream audio chunks as they're generated |
| `stop(): void` | Stop the current generation |
| `unload(): void` | Unload the model and free memory |

TTSGenerateOptions:

| Property | Type | Description |
|---|---|---|
| `voice` | `string` | Voice to use (alba, azelma, cosette, eponine, fantine, javert, jean, marius) |
| `speed` | `number` | Speech speed multiplier |
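Since `voice` is typed as a plain `string`, a typo would only surface at runtime. One possible guard, sketched here with hypothetical names (`VOICES`, `isVoice`, `ttsOptions`), checks the name against the voices listed above. Falling back to `alba` is an illustrative choice, not documented behavior.

```typescript
// Voice names as listed in the README.
const VOICES = [
  'alba', 'azelma', 'cosette', 'eponine',
  'fantine', 'javert', 'jean', 'marius',
] as const
type Voice = (typeof VOICES)[number]

// Type guard: narrows an arbitrary string to a known voice name.
function isVoice(name: string): name is Voice {
  return (VOICES as readonly string[]).indexOf(name) !== -1
}

// Hypothetical helper: build generate options, falling back to 'alba'
// (an arbitrary default chosen for this sketch) when the name is unknown.
function ttsOptions(voice: string, speed: number = 1.0): { voice: Voice; speed: number } {
  return { voice: isVoice(voice) ? voice : 'alba', speed }
}
```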

TTS properties:

| Property | Description |
|---|---|
| `isLoaded: boolean` | Whether a TTS model is loaded |
| `isGenerating: boolean` | Whether audio generation is in progress |
| `modelId: string` | The currently loaded model ID |
| `sampleRate: number` | Audio sample rate of the loaded model (e.g. 24000) |
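The `sampleRate` property makes it possible to estimate how long a generated buffer plays. The sketch below is only an illustration. The README does not state the sample encoding or channel count, so `bytesPerSample` is a caller-supplied assumption and mono audio is assumed. `estimateDurationSeconds` is a hypothetical helper.

```typescript
// Hypothetical helper: estimate playback length of a generated buffer.
// ASSUMPTIONS: mono audio and a fixed number of bytes per sample. The README
// does not document the encoding, so the caller must supply `bytesPerSample`.
function estimateDurationSeconds(
  audio: ArrayBuffer,
  sampleRate: number,
  bytesPerSample: number
): number {
  if (sampleRate <= 0 || bytesPerSample <= 0) {
    throw new RangeError('sampleRate and bytesPerSample must be positive')
  }
  const sampleCount = audio.byteLength / bytesPerSample
  return sampleCount / sampleRate
}
```

For example, if the samples were 4 bytes each, a 96,000-byte buffer at a 24000 Hz sample rate would correspond to one second of audio.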

STT methods:

| Method | Description |
|---|---|
| `load(modelId: string, options?: STTLoadOptions): Promise<void>` | Load an STT model into memory |
| `transcribe(audio: ArrayBuffer): Promise<string>` | Transcribe an audio buffer |
| `transcribeStream(audio: ArrayBuffer, onToken: (token: string) => void): Promise<string>` | Stream transcription tokens as they're generated |
| `startListening(): Promise<void>` | Start capturing audio from the microphone |
| `transcribeBuffer(): Promise<string>` | Transcribe the current audio buffer while listening |
| `stopListening(): Promise<string>` | Stop listening and transcribe final audio |
| `stop(): void` | Stop the current transcription |
| `unload(): void` | Unload the model and free memory |
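The three listening methods suggest a polling pattern for live captions: start the microphone, read partial transcripts at intervals, then stop to get the final text. The sketch below shows one way this might look. `STTLike` and `listenFor` are hypothetical names, and the interface only mirrors the documented signatures on the assumption that the real `STT` export satisfies it.

```typescript
// Structural type mirroring the documented listening methods (assumption:
// the real `STT` export from 'react-native-nitro-mlx' has this shape).
interface STTLike {
  startListening(): Promise<void>
  transcribeBuffer(): Promise<string>
  stopListening(): Promise<string>
}

// Hypothetical helper: poll partial transcripts `polls` times, waiting
// `intervalMs` before each poll, then return the final transcript.
async function listenFor(
  stt: STTLike,
  polls: number,
  intervalMs: number,
  onPartial: (text: string) => void
): Promise<string> {
  await stt.startListening()
  try {
    for (let i = 0; i < polls; i++) {
      await new Promise<void>((resolve) => setTimeout(resolve, intervalMs))
      onPartial(await stt.transcribeBuffer())
    }
  } catch (error) {
    // Stop listening before rethrowing so the microphone is not left active.
    await stt.stopListening()
    throw error
  }
  return stt.stopListening()
}
```

A call such as `listenFor(STT, 10, 500, setCaption)` would update a caption roughly every half second for five seconds. The interval is a tuning choice, not something the README prescribes.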

STT properties:

| Property | Description |
|---|---|
| `isLoaded: boolean` | Whether an STT model is loaded |
| `isTranscribing: boolean` | Whether transcription is in progress |
| `isListening: boolean` | Whether the microphone is active |
| `modelId: string` | The currently loaded model ID |

ModelManager methods:

| Method | Description |
|---|---|
| `download(modelId: string, onProgress: (progress: number) => void): Promise<string>` | Download a model from Hugging Face |
| `isDownloaded(modelId: string): Promise<boolean>` | Check if a model is downloaded |
| `getDownloadedModels(): Promise<string[]>` | Get list of downloaded models |
| `deleteModel(modelId: string): Promise<void>` | Delete a downloaded model |
| `getModelPath(modelId: string): Promise<string>` | Get the local path of a model |
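A common pattern with these methods is to download a model only when it is missing. The sketch below illustrates that. `ModelManagerLike` and `ensureModel` are hypothetical names, and the interface mirrors the documented signatures on the assumption that the real `ModelManager` export satisfies it. The README does not say what string `download` resolves to, so the sketch ignores it and asks `getModelPath` instead.

```typescript
// Structural type mirroring the documented ModelManager methods used here
// (assumption: the real `ModelManager` export has this shape).
interface ModelManagerLike {
  isDownloaded(modelId: string): Promise<boolean>
  download(modelId: string, onProgress: (progress: number) => void): Promise<string>
  getModelPath(modelId: string): Promise<string>
}

// Hypothetical helper: download the model only if needed, then return its
// local path.
async function ensureModel(
  manager: ModelManagerLike,
  modelId: string,
  onProgress: (progress: number) => void = () => {}
): Promise<string> {
  if (!(await manager.isDownloaded(modelId))) {
    await manager.download(modelId, onProgress)
  }
  return manager.getModelPath(modelId)
}
```

Calling `ensureModel(ModelManager, 'mlx-community/Qwen3-0.6B-4bit')` at app start would then skip the download on later launches.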

ModelManager properties:

| Property | Description |
|---|---|
| `debug: boolean` | Enable debug logging |

Any MLX-compatible model from Hugging Face should work. For convenience, the package also exports an `MLXModel` enum of pre-defined models that are more likely to run well on-device:

    import { MLXModel, ModelManager } from 'react-native-nitro-mlx'

    await ModelManager.download(MLXModel.Llama_3_2_1B_Instruct_4bit, (progress) => {
      console.log(`Download progress: ${(progress * 100).toFixed(1)}%`)
    })

LLM models:

| Model | Enum Key | Hugging Face ID |
|---|---|---|
| Llama 3.2 (Meta) | | |
| Llama 3.2 1B 4-bit | `Llama_3_2_1B_Instruct_4bit` | `mlx-community/Llama-3.2-1B-Instruct-4bit` |
| Llama 3.2 1B 8-bit | `Llama_3_2_1B_Instruct_8bit` | `mlx-community/Llama-3.2-1B-Instruct-8bit` |
| Llama 3.2 3B 4-bit | `Llama_3_2_3B_Instruct_4bit` | `mlx-community/Llama-3.2-3B-Instruct-4bit` |
| Llama 3.2 3B 8-bit | `Llama_3_2_3B_Instruct_8bit` | `mlx-community/Llama-3.2-3B-Instruct-8bit` |
| Qwen 2.5 (Alibaba) | | |
| Qwen 2.5 0.5B 4-bit | `Qwen2_5_0_5B_Instruct_4bit` | `mlx-community/Qwen2.5-0.5B-Instruct-4bit` |
| Qwen 2.5 0.5B 8-bit | `Qwen2_5_0_5B_Instruct_8bit` | `mlx-community/Qwen2.5-0.5B-Instruct-8bit` |
| Qwen 2.5 1.5B 4-bit | `Qwen2_5_1_5B_Instruct_4bit` | `mlx-community/Qwen2.5-1.5B-Instruct-4bit` |
| Qwen 2.5 1.5B 8-bit | `Qwen2_5_1_5B_Instruct_8bit` | `mlx-community/Qwen2.5-1.5B-Instruct-8bit` |
| Qwen 2.5 3B 4-bit | `Qwen2_5_3B_Instruct_4bit` | `mlx-community/Qwen2.5-3B-Instruct-4bit` |
| Qwen 2.5 3B 8-bit | `Qwen2_5_3B_Instruct_8bit` | `mlx-community/Qwen2.5-3B-Instruct-8bit` |
| Qwen 3 | | |
| Qwen 3 1.7B 4-bit | `Qwen3_1_7B_4bit` | `mlx-community/Qwen3-1.7B-4bit` |
| Qwen 3 1.7B 8-bit | `Qwen3_1_7B_8bit` | `mlx-community/Qwen3-1.7B-8bit` |
| Gemma 3 (Google) | | |
| Gemma 3 1B 4-bit | `Gemma_3_1B_IT_4bit` | `mlx-community/gemma-3-1b-it-4bit` |
| Gemma 3 1B 8-bit | `Gemma_3_1B_IT_8bit` | `mlx-community/gemma-3-1b-it-8bit` |
| Phi 3.5 Mini (Microsoft) | | |
| Phi 3.5 Mini 4-bit | `Phi_3_5_Mini_Instruct_4bit` | `mlx-community/Phi-3.5-mini-instruct-4bit` |
| Phi 3.5 Mini 8-bit | `Phi_3_5_Mini_Instruct_8bit` | `mlx-community/Phi-3.5-mini-instruct-8bit` |
| Phi 4 Mini (Microsoft) | | |
| Phi 4 Mini 4-bit | `Phi_4_Mini_Instruct_4bit` | `mlx-community/Phi-4-mini-instruct-4bit` |
| Phi 4 Mini 8-bit | `Phi_4_Mini_Instruct_8bit` | `mlx-community/Phi-4-mini-instruct-8bit` |
| SmolLM (Hugging Face) | | |
| SmolLM 1.7B 4-bit | `SmolLM_1_7B_Instruct_4bit` | `mlx-community/SmolLM-1.7B-Instruct-4bit` |
| SmolLM 1.7B 8-bit | `SmolLM_1_7B_Instruct_8bit` | `mlx-community/SmolLM-1.7B-Instruct-8bit` |
| SmolLM2 (Hugging Face) | | |
| SmolLM2 1.7B 4-bit | `SmolLM2_1_7B_Instruct_4bit` | `mlx-community/SmolLM2-1.7B-Instruct-4bit` |
| SmolLM2 1.7B 8-bit | `SmolLM2_1_7B_Instruct_8bit` | `mlx-community/SmolLM2-1.7B-Instruct-8bit` |
| OpenELM (Apple) | | |
| OpenELM 1.1B 4-bit | `OpenELM_1_1B_4bit` | `mlx-community/OpenELM-1_1B-4bit` |
| OpenELM 1.1B 8-bit | `OpenELM_1_1B_8bit` | `mlx-community/OpenELM-1_1B-8bit` |
| OpenELM 3B 4-bit | `OpenELM_3B_4bit` | `mlx-community/OpenELM-3B-4bit` |
| OpenELM 3B 8-bit | `OpenELM_3B_8bit` | `mlx-community/OpenELM-3B-8bit` |
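Each enum key in the table corresponds to a Hugging Face ID, and raw IDs are accepted as well. To illustrate that relationship, the sketch below resolves either form to an ID using a local map of three rows copied from the table. `MODEL_IDS` and `resolveModelId` are hypothetical and stand in for the package's own `MLXModel` enum, whose internal representation the README does not show.

```typescript
// A few rows copied from the table above; not the package's actual enum.
const MODEL_IDS: { [key: string]: string } = {
  Llama_3_2_1B_Instruct_4bit: 'mlx-community/Llama-3.2-1B-Instruct-4bit',
  Qwen3_1_7B_4bit: 'mlx-community/Qwen3-1.7B-4bit',
  Gemma_3_1B_IT_4bit: 'mlx-community/gemma-3-1b-it-4bit',
}

// Hypothetical helper: accept either an enum-style key or a raw
// "owner/name" Hugging Face ID and return the ID.
function resolveModelId(keyOrId: string): string {
  if (keyOrId.indexOf('/') !== -1) {
    return keyOrId // already looks like a Hugging Face ID
  }
  if (!Object.prototype.hasOwnProperty.call(MODEL_IDS, keyOrId)) {
    throw new Error(`Unknown model key: ${keyOrId}`)
  }
  return MODEL_IDS[keyOrId]
}
```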

TTS models:

| Model | Enum Key | Hugging Face ID |
|---|---|---|
| PocketTTS (Kyutai) - 44.6M params | | |
| PocketTTS bf16 | `PocketTTS` | `mlx-community/pocket-tts` |
| PocketTTS 8-bit | `PocketTTS_8bit` | `mlx-community/pocket-tts-8bit` |
| PocketTTS 4-bit | `PocketTTS_4bit` | `mlx-community/pocket-tts-4bit` |

STT models:

| Model | Enum Key | Hugging Face ID |
|---|---|---|
| GLM-ASR (Zhipu AI) - 1B params | | |
| GLM-ASR Nano 4-bit | `GLM_ASR_Nano_4bit` | `mlx-community/GLM-ASR-Nano-2512-4bit` |

Browse more models at huggingface.co/mlx-community.

License: MIT

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for react-native-nitro-mlx

Similar Open Source Tools

react-native-nitro-mlx

The react-native-nitro-mlx repository allows users to run LLMs, Text-to-Speech, and Speech-to-Text on-device in React Native using MLX Swift. It provides functionalities for downloading models, loading and generating responses, streaming audio, text-to-speech, and speech-to-text capabilities. Users can interact with various MLX-compatible models from Hugging Face, with pre-defined models available for convenience. The repository supports iOS 26.0+ and offers detailed API documentation for each feature.

LightMem

LightMem is a lightweight and efficient memory management framework designed for Large Language Models and AI Agents. It provides a simple yet powerful memory storage, retrieval, and update mechanism to help you quickly build intelligent applications with long-term memory capabilities. The framework is minimalist in design, ensuring minimal resource consumption and fast response times. It offers a simple API for easy integration into applications with just a few lines of code. LightMem's modular architecture supports custom storage engines and retrieval strategies, making it flexible and extensible. It is compatible with various cloud APIs like OpenAI and DeepSeek, as well as local models such as Ollama and vLLM.

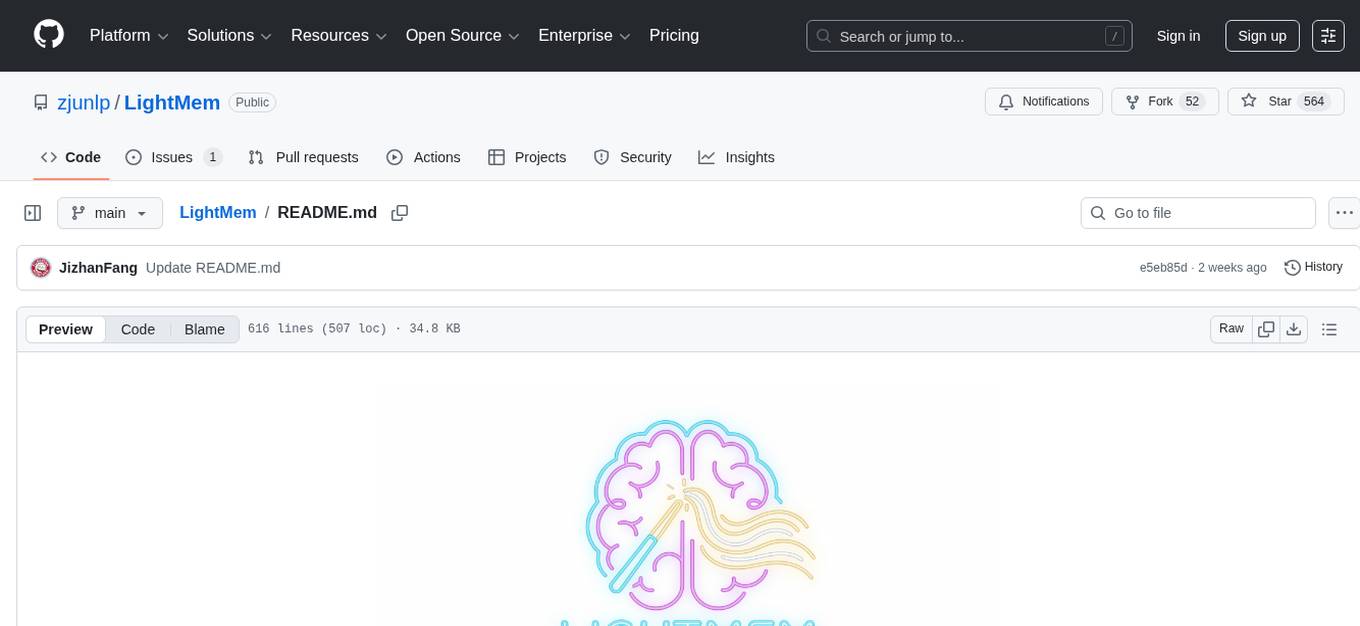

no-cost-ai

No-cost-ai is a repository dedicated to providing a comprehensive list of free AI models and tools for developers, researchers, and curious builders. It serves as a living index for accessing state-of-the-art AI models without any cost. The repository includes information on various AI applications such as chat interfaces, media generation, voice and music tools, AI IDEs, and developer APIs and platforms. Users can find links to free models, their limits, and usage instructions. Contributions to the repository are welcome, and users are advised to use the listed services at their own risk due to potential changes in models, limitations, and reliability of free services.

api-for-open-llm

This project provides a unified backend interface for open large language models (LLMs), offering a consistent experience with OpenAI's ChatGPT API. It supports various open-source LLMs, enabling developers to seamlessly integrate them into their applications. The interface features streaming responses, text embedding capabilities, and support for LangChain, a tool for developing LLM-based applications. By modifying environment variables, developers can easily use open-source models as alternatives to ChatGPT, providing a cost-effective and customizable solution for various use cases.

clother

Clother is a command-line tool that allows users to switch between different Claude Code providers instantly. It provides launchers for various cloud, open router, China endpoints, local, and custom providers, enabling users to configure, list profiles, test connectivity, check installation status, and uninstall. Users can also change the default model for each provider and troubleshoot common issues. Clother simplifies the management of API keys and installation directories, supporting macOS, Linux, and Windows (WSL) platforms. It is designed to streamline the workflow of interacting with different AI models and services.

Free-LLM-Collection

Free-LLM-Collection is a curated list of free resources for mastering the Legal Language Model (LLM) technology. It includes datasets, research papers, tutorials, and tools to help individuals learn and work with LLM models. The repository aims to provide a comprehensive collection of materials to support researchers, developers, and enthusiasts interested in exploring and leveraging LLM technology for various applications in the legal domain.

xiaogpt

xiaogpt is a tool that allows you to play ChatGPT and other LLMs with Xiaomi AI Speaker. It supports ChatGPT, New Bing, ChatGLM, Gemini, Doubao, and Tongyi Qianwen. You can use it to ask questions, get answers, and have conversations with AI assistants. xiaogpt is easy to use and can be set up in a few minutes. It is a great way to experience the power of AI and have fun with your Xiaomi AI Speaker.

OneClickLLAMA

OneClickLLAMA is a tool designed to run local LLM models such as Qwen2.5 and SakuraLLM with ease. It can be used in conjunction with various OpenAI format translators and analyzers, including LinguaGacha and KeywordGacha. By following the setup guides provided on the page, users can optimize performance and achieve a 3-5 times speed improvement compared to default settings. The tool requires a minimum of 8GB dedicated graphics memory, preferably NVIDIA, and the latest version of graphics drivers installed. Users can download the tool from the release page, choose the appropriate model based on usage and memory size, and start the tool by selecting the corresponding launch script.

flute

FLUTE (Flexible Lookup Table Engine for LUT-quantized LLMs) is a tool designed for uniform quantization and lookup table quantization of weights in lower-precision intervals. It offers flexibility in mapping intervals to arbitrary values through a lookup table. FLUTE supports various quantization formats such as int4, int3, int2, fp4, fp3, fp2, nf4, nf3, nf2, and even custom tables. The tool also introduces new quantization algorithms like Learned Normal Float (NFL) for improved performance and calibration data learning. FLUTE provides benchmarks, model zoo, and integration with frameworks like vLLM and HuggingFace for easy deployment and usage.

devops-gpt

DevOpsGPT is a revolutionary tool designed to streamline your workflow and empower you to build systems and automate tasks with ease. Tired of spending hours on repetitive DevOps tasks? DevOpsGPT is here to help! Whether you're setting up infrastructure, speeding up deployments, or tackling any other DevOps challenge, our app can make your life easier and more productive. With DevOpsGPT, you can expect faster task completion, simplified workflows, and increased efficiency. Ready to experience the DevOpsGPT difference? Visit our website, sign in or create an account, start exploring the features, and share your feedback to help us improve. DevOpsGPT will become an essential tool in your DevOps toolkit.

moonpalace

MoonPalace is a debugging tool for API provided by Moonshot AI. It supports all platforms (Mac, Windows, Linux) and is simple to use by replacing 'base_url' with 'http://localhost:9988'. It captures complete requests, including 'accident scenes' during network errors, and allows quick retrieval and viewing of request information using 'request_id' and 'chatcmpl_id'. It also enables one-click export of BadCase structured reporting data to help improve Kimi model capabilities. MoonPalace is recommended for use as an API 'supplier' during code writing and debugging stages to quickly identify and locate various issues related to API calls and code writing processes, and to export request details for submission to Moonshot AI to improve Kimi model.

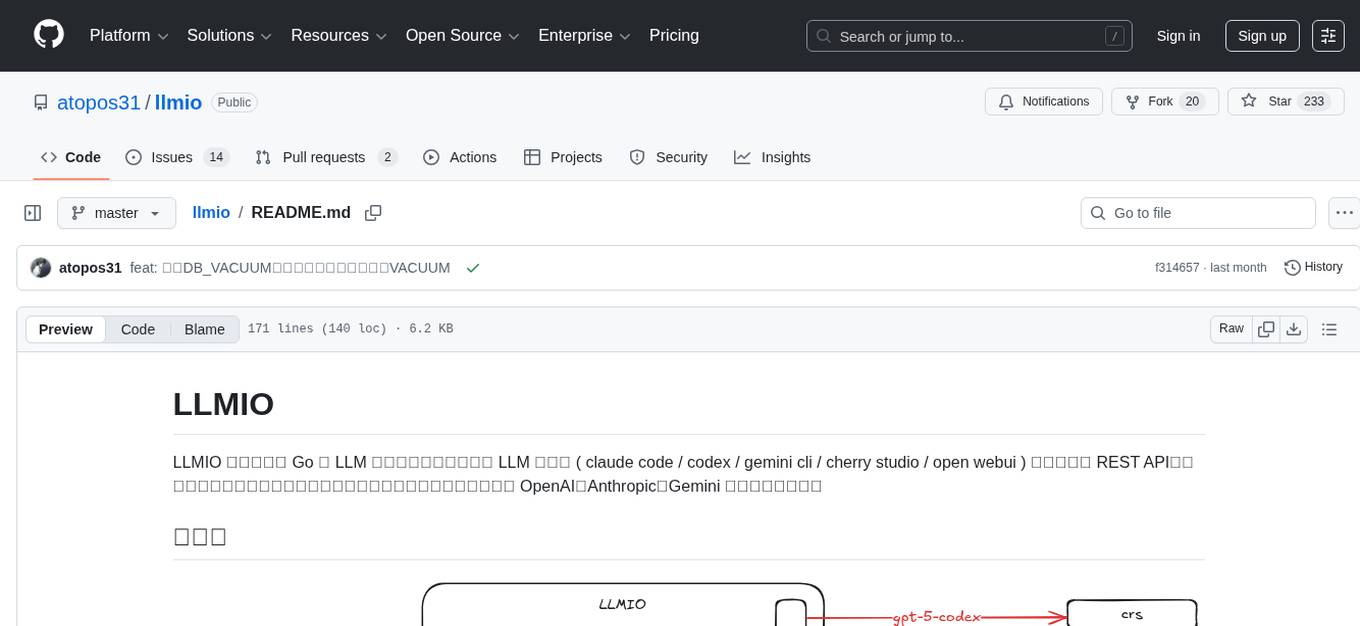

llmio

LLMIO is a Go-based LLM load balancing gateway that provides a unified REST API, weight scheduling, logging, and modern management interface for your LLM clients. It helps integrate different model capabilities from OpenAI, Anthropic, Gemini, and more in a single service. Features include unified API compatibility, weight scheduling with two strategies, visual management dashboard, rate and failure handling, and local persistence with SQLite. The tool supports multiple vendors' APIs and authentication methods, making it versatile for various AI model integrations.

llm.nvim

llm.nvim is a universal plugin for a large language model (LLM) designed to enable users to interact with LLM within neovim. Users can customize various LLMs such as gpt, glm, kimi, and local LLM. The plugin provides tools for optimizing code, comparing code, translating text, and more. It also supports integration with free models from Cloudflare, Github models, siliconflow, and others. Users can customize tools, chat with LLM, quickly translate text, and explain code snippets. The plugin offers a flexible window interface for easy interaction and customization.

agentkit-samples

AgentKit Samples is a repository containing a series of examples and tutorials to help users understand, implement, and integrate various functionalities of AgentKit into their applications. The platform offers a complete solution for building, deploying, and maintaining AI agents, significantly reducing the complexity of developing intelligent applications. The repository provides different levels of examples and tutorials, including basic tutorials for understanding AgentKit's concepts and use cases, as well as more complex examples for experienced developers.

fittencode.nvim

Fitten Code AI Programming Assistant for Neovim provides fast completion using AI, asynchronous I/O, and support for various actions like document code, edit code, explain code, find bugs, generate unit test, implement features, optimize code, refactor code, start chat, and more. It offers features like accepting suggestions with Tab, accepting line with Ctrl + Down, accepting word with Ctrl + Right, undoing accepted text, automatic scrolling, and multiple HTTP/REST backends. It can run as a coc.nvim source or nvim-cmp source.

apidash

API Dash is an open-source cross-platform API Client that allows users to easily create and customize API requests, visually inspect responses, and generate API integration code. It supports various HTTP methods, GraphQL requests, and multimedia API responses. Users can organize requests in collections, preview data in different formats, and generate code for multiple languages. The tool also offers dark mode support, data persistence, and various customization options.

For similar tasks

semantic-router

Semantic Router is a superfast decision-making layer for your LLMs and agents. Rather than waiting for slow LLM generations to make tool-use decisions, we use the magic of semantic vector space to make those decisions — _routing_ our requests using _semantic_ meaning.

hass-ollama-conversation

The Ollama Conversation integration adds a conversation agent powered by Ollama in Home Assistant. This agent can be used in automations to query information provided by Home Assistant about your house, including areas, devices, and their states. Users can install the integration via HACS and configure settings such as API timeout, model selection, context size, maximum tokens, and other parameters to fine-tune the responses generated by the AI language model. Contributions to the project are welcome, and discussions can be held on the Home Assistant Community platform.

luna-ai

Luna AI is a virtual streamer driven by a 'brain' composed of ChatterBot, GPT, Claude, langchain, chatglm, text-generation-webui, 讯飞星火, 智谱AI. It can interact with viewers in real-time during live streams on platforms like Bilibili, Douyin, Kuaishou, Douyu, or chat with you locally. Luna AI uses natural language processing and text-to-speech technologies like Edge-TTS, VITS-Fast, elevenlabs, bark-gui, VALL-E-X to generate responses to viewer questions and can change voice using so-vits-svc, DDSP-SVC. It can also collaborate with Stable Diffusion for drawing displays and loop custom texts. This project is completely free, and any identical copycat selling programs are pirated, please stop them promptly.

KULLM

KULLM (구름) is a Korean Large Language Model developed by Korea University NLP & AI Lab and HIAI Research Institute. It is based on the upstage/SOLAR-10.7B-v1.0 model and has been fine-tuned for instruction. The model has been trained on 8×A100 GPUs and is capable of generating responses in Korean language. KULLM exhibits hallucination and repetition phenomena due to its decoding strategy. Users should be cautious as the model may produce inaccurate or harmful results. Performance may vary in benchmarks without a fixed system prompt.

cria

Cria is a Python library designed for running Large Language Models with minimal configuration. It provides an easy and concise way to interact with LLMs, offering advanced features such as custom models, streams, message history management, and running multiple models in parallel. Cria simplifies the process of using LLMs by providing a straightforward API that requires only a few lines of code to get started. It also handles model installation automatically, making it efficient and user-friendly for various natural language processing tasks.

beyondllm

Beyond LLM offers an all-in-one toolkit for experimentation, evaluation, and deployment of Retrieval-Augmented Generation (RAG) systems. It simplifies the process with automated integration, customizable evaluation metrics, and support for various Large Language Models (LLMs) tailored to specific needs. The aim is to reduce LLM hallucination risks and enhance reliability.

Groma

Groma is a grounded multimodal assistant that excels in region understanding and visual grounding. It can process user-defined region inputs and generate contextually grounded long-form responses. The tool presents a unique paradigm for multimodal large language models, focusing on visual tokenization for localization. Groma achieves state-of-the-art performance in referring expression comprehension benchmarks. The tool provides pretrained model weights and instructions for data preparation, training, inference, and evaluation. Users can customize training by starting from intermediate checkpoints. Groma is designed to handle tasks related to detection pretraining, alignment pretraining, instruction finetuning, instruction following, and more.

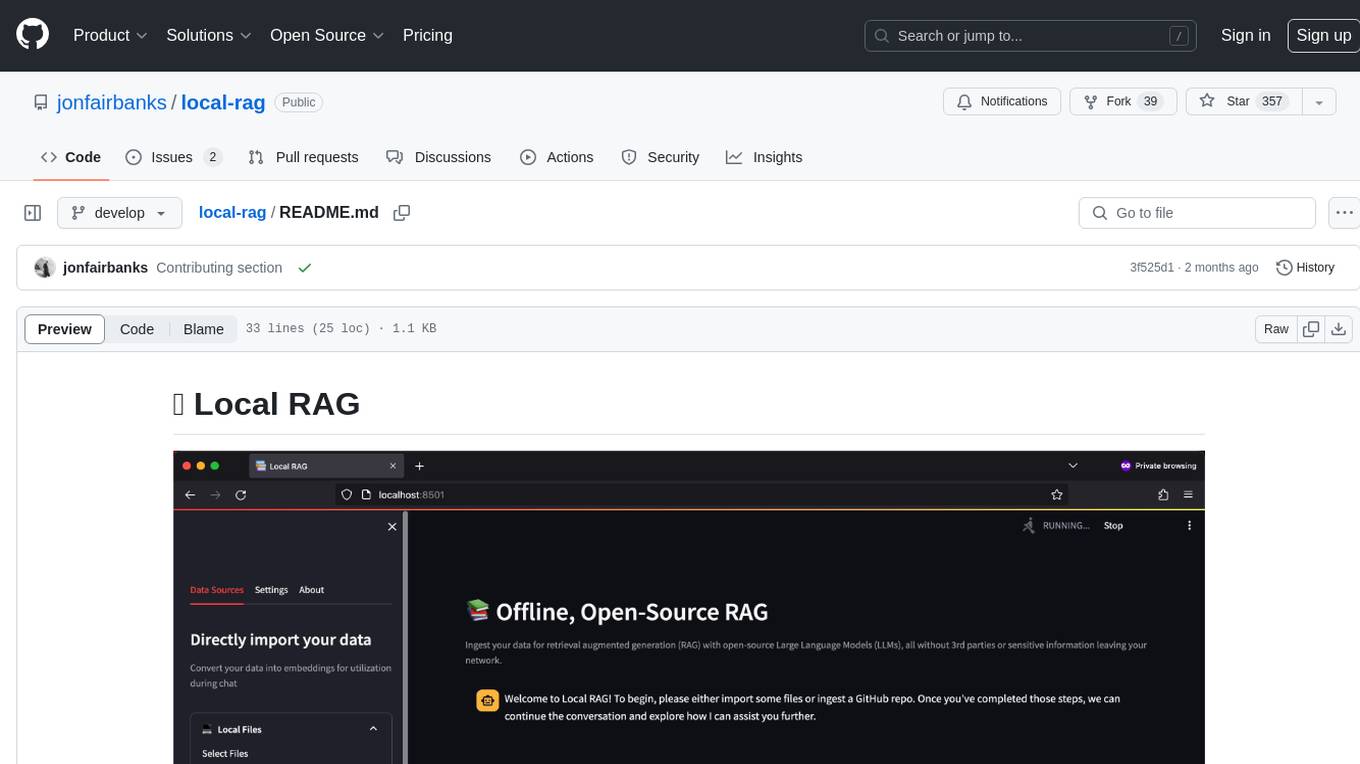

local-rag

Local RAG is an offline, open-source tool that allows users to ingest files for retrieval augmented generation (RAG) using large language models (LLMs) without relying on third parties or exposing sensitive data. It supports offline embeddings and LLMs, multiple sources including local files, GitHub repos, and websites, streaming responses, conversational memory, and chat export. Users can set up and deploy the app, learn how to use Local RAG, explore the RAG pipeline, check planned features, known bugs and issues, access additional resources, and contribute to the project.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.