Free-LLM-Collection

Free LLM API Collection

Stars: 64

Free-LLM-Collection is a curated collection of free large language model (LLM) APIs. It lists providers that offer OpenAI-format endpoints, together with selected models, request rate limits, and dashboard links for each.

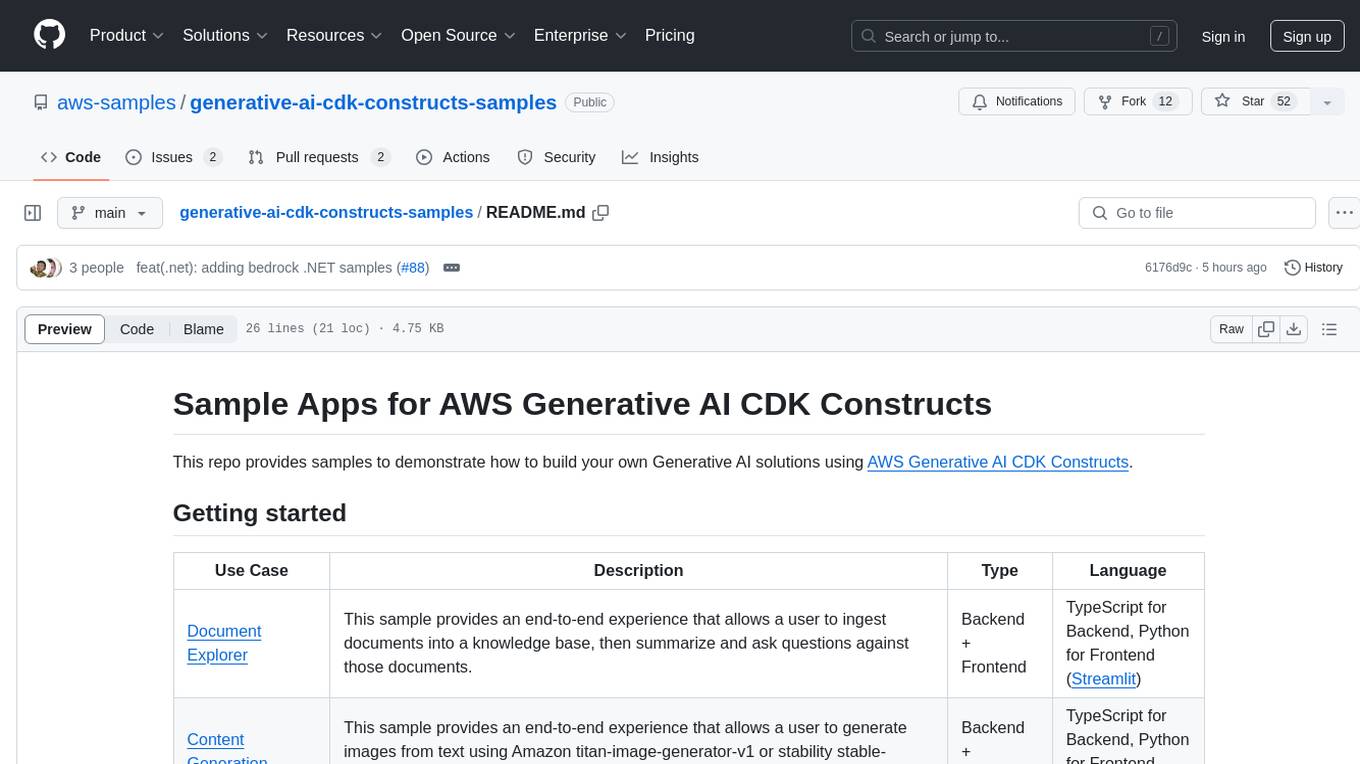

README:

This is a collection of free LLM APIs, along with a curated selection of models.

I will keep updating and maintaining this project as best I can (for now I am the only maintainer).

The selection criteria, in priority order: limits on request rate rather than on tokens > as many sources as possible > models that are as new and as good as possible > a request rate high enough for practical use.

The list mainly covers text models that have gained some popularity.

At present, only APIs offered in the OpenAI format are accepted.
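As a rough illustration of what "OpenAI format" means for a client, the sketch below builds a chat completion request using only the Python standard library. The helper names `build_chat_request` and `extract_reply` are hypothetical and not part of this project. The sketch assumes the provider serves chat at `<base URL>/chat/completions` and that the base URL already contains any version prefix the provider requires, so check each provider's documentation before relying on it.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-format chat completion request.

    Hypothetical helper: assumes chat lives at <base_url>/chat/completions.
    """
    # Tolerate base URLs written with or without a trailing slash.
    url = base_url.rstrip("/") + "/chat/completions"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_reply(response_body: bytes) -> str:
    """Return the assistant text from an OpenAI-format response body."""
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"]
```

To send the request, pass the returned object to `urllib.request.urlopen` and feed the response bytes to `extract_reply`. Because only the base URL, key, and model name change, the same two helpers should work across providers that follow this format.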

Contributions of more APIs are welcome.

This table was generated by Gemini 2.5 Pro and rendered by Taple.

| Name | API | Models | Rate Limits | Dashboard | Tips |
|---|---|---|---|---|---|
| ChatAnywhere | https://api.chatanywhere.tech | gpt-4o-mini | Not limited | https://api.chatanywhere.org/ | |
| 硅基流动 / SiliconFlow | https://api.siliconflow.cn/v1 | deepseek-ai/DeepSeek-R1-0528-Qwen3-8B, Qwen/Qwen3-8B, THUDM/glm-4-9b-chat, THUDM/GLM-4-9B-0414, THUDM/GLM-Z1-9B-0414, THUDM/GLM-4.1V-9B-Thinking | 1000 RPM (each model) | https://cloud.siliconflow.cn/bills | |
| OpenRouter | https://openrouter.ai/api/v1 | deepseek/deepseek-r1-0528:free, tencent/hunyuan-a13b-instruct:free, moonshotai/kimi-k2:free, z-ai/glm-4.5-air:free, qwen/qwen3-coder:free, qwen/qwen3-235b-a22b:free, openai/gpt-oss-20b:free, x-ai/grok-4-fast:free | 20 RPM / 200 RPD (each model) | https://openrouter.ai/activity | |
| 书生 / Intern AI | https://chat.intern-ai.org.cn/api/v1 | intern-latest | 10 RPM | https://internlm.intern-ai.org.cn/api/callDetail | The key is valid for 6 months |
| 共享算力 / suanli.com | https://api.suanli.cn/v1 | free:QwQ-32B | Unknown | https://api.suanli.cn/detail | Computing power is shared from other people's devices |
| Google Gemini | https://generativelanguage.googleapis.com/v1beta/openai | gemini-2.5-pro | 5 RPM / 100 RPD | https://aistudio.google.com/usage | GFW |
| ↑ | ↑ | gemini-2.5-flash | 10 RPM / 250 RPD | ↑ | |
| ↑ | ↑ | gemini-2.5-flash-lite | 15 RPM / 1000 RPD | ↑ | |
| Cohere | https://api.cohere.ai/compatibility/v1 | command-a-03-2025, command-a-vision-07-2025 | 20 RPM | https://dashboard.cohere.com/billing | Adding a payment method unlocks a Production Key with more relaxed rate limits; GFW |
| Bigmodel | https://open.bigmodel.cn/api/paas/v4/ | GLM-4-Flash-250414, GLM-Z1-Flash, GLM-4.5-Flash | Only concurrency is limited (30 for each model) | ? | |
| GitHub Models | https://models.github.ai/inference | openai/gpt-4.1-mini, openai/gpt-4.1, openai/gpt-4o | 15 RPM / 150 RPD | ? | If you use the Azure API, more models are available |
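Since most entries above are limited in requests per minute (RPM) and sometimes requests per day (RPD), a client may want to pace itself instead of running into rejected calls. The following is a minimal sketch of a client-side sliding-window guard. The class name `RequestBudget` and its parameters are hypothetical, and providers may count their windows differently (for example with fixed daily resets), so treat it as an approximation rather than a mirror of any provider's accounting.

```python
import time
from collections import deque


class RequestBudget:
    """Client-side sliding-window guard for an RPM limit and an optional RPD limit."""

    def __init__(self, rpm, rpd=None, clock=time.monotonic):
        self.rpm = rpm
        self.rpd = rpd
        self.clock = clock    # injectable so the window logic can be tested
        self._sent = deque()  # timestamps of requests made in the last 24 hours

    def try_acquire(self) -> bool:
        """Record a request and return True if both limits still allow it."""
        now = self.clock()
        # Drop timestamps that have left the 24-hour window.
        while self._sent and now - self._sent[0] >= 86400:
            self._sent.popleft()
        # Count requests made within the last 60 seconds.
        last_minute = sum(1 for t in self._sent if now - t < 60)
        if last_minute >= self.rpm:
            return False
        if self.rpd is not None and len(self._sent) >= self.rpd:
            return False
        self._sent.append(now)
        return True


# Example: the "20 RPM / 200 RPD" figures listed for OpenRouter above.
openrouter_budget = RequestBudget(rpm=20, rpd=200)
```

Call `try_acquire()` before each request and, when it returns `False`, wait or switch to another provider from the table.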

asak (for-the-zero/asak) is another project of mine, recommended for use together with this list.

Sample configuration file: asak.json

- llm_benchmark: a personal leaderboard that is highly credible and more comprehensive

- Artificial Analysis

- lmsys lmarena

Alternative AI tools for Free-LLM-Collection

Similar Open Source Tools

hcaptcha-challenger

hCaptcha Challenger is a tool designed to gracefully face hCaptcha challenges using a multimodal large language model. It does not rely on Tampermonkey scripts or third-party anti-captcha services, instead implementing interfaces for 'AI vs AI' scenarios. The tool supports various challenge types such as image labeling, drag and drop, and advanced tasks like self-supervised challenges and Agentic Workflow. Users can access documentation in multiple languages and leverage resources for tasks like model training, dataset annotation, and model upgrading. The tool aims to enhance user experience in handling hCaptcha challenges with innovative AI capabilities.

LightMem

LightMem is a lightweight and efficient memory management framework designed for Large Language Models and AI Agents. It provides a simple yet powerful memory storage, retrieval, and update mechanism to help you quickly build intelligent applications with long-term memory capabilities. The framework is minimalist in design, ensuring minimal resource consumption and fast response times. It offers a simple API for easy integration into applications with just a few lines of code. LightMem's modular architecture supports custom storage engines and retrieval strategies, making it flexible and extensible. It is compatible with various cloud APIs like OpenAI and DeepSeek, as well as local models such as Ollama and vLLM.

react-native-nitro-mlx

The react-native-nitro-mlx repository allows users to run LLMs, Text-to-Speech, and Speech-to-Text on-device in React Native using MLX Swift. It provides functionalities for downloading models, loading and generating responses, streaming audio, text-to-speech, and speech-to-text capabilities. Users can interact with various MLX-compatible models from Hugging Face, with pre-defined models available for convenience. The repository supports iOS 26.0+ and offers detailed API documentation for each feature.

beet

Beet is a collection of crates for authoring and running web pages, games and AI behaviors. It includes `beet_flow` (a scenes-as-control-flow bevy library), `beet_spatial` (spatial behaviors), `beet_ml` (machine learning), `beet_sim` (simulation tooling), `beet_rsx` (authoring tools for html and bevy), and `beet_router` (a file-based router for web docs). The `beet` crate acts as a base crate that re-exports sub-crates based on feature flags, similar to the `bevy` crate structure.

aikit

AIKit is a comprehensive platform for hosting, deploying, building, and fine-tuning large language models (LLMs). It offers inference using LocalAI, extensible fine-tuning interface, and OCI packaging for distributing models. AIKit supports various models, multi-modal model and image generation, Kubernetes deployment, and supply chain security. It can run on AMD64 and ARM64 CPUs, NVIDIA GPUs, and Apple Silicon (experimental). Users can quickly get started with AIKit without a GPU and access pre-made models. The platform is OpenAI API compatible and provides easy-to-use configuration for inference and fine-tuning.

apidash

API Dash is an open-source cross-platform API Client that allows users to easily create and customize API requests, visually inspect responses, and generate API integration code. It supports various HTTP methods, GraphQL requests, and multimedia API responses. Users can organize requests in collections, preview data in different formats, and generate code for multiple languages. The tool also offers dark mode support, data persistence, and various customization options.

aikit

AIKit is a one-stop shop to quickly get started to host, deploy, build and fine-tune large language models (LLMs). AIKit offers two main capabilities: Inference: AIKit uses LocalAI, which supports a wide range of inference capabilities and formats. LocalAI provides a drop-in replacement REST API that is OpenAI API compatible, so you can use any OpenAI API compatible client, such as Kubectl AI, Chatbot-UI and many more, to send requests to open-source LLMs! Fine Tuning: AIKit offers an extensible fine tuning interface. It supports Unsloth for fast, memory efficient, and easy fine-tuning experience.

YuLan-Mini

YuLan-Mini is a lightweight language model with 2.4 billion parameters that achieves performance comparable to industry-leading models despite being pre-trained on only 1.08T tokens. It excels in mathematics and code domains. The repository provides pre-training resources, including data pipeline, optimization methods, and annealing approaches. Users can pre-train their own language models, perform learning rate annealing, fine-tune the model, research training dynamics, and synthesize data. The team behind YuLan-Mini is AI Box at Renmin University of China. The code is released under the MIT License with future updates on model weights usage policies. Users are advised on potential safety concerns and ethical use of the model.

models

This repository contains comprehensive pricing and configuration data for LLMs, providing accurate pricing for 2,000+ models across 40+ providers. It addresses the challenges of LLM pricing, such as naming inconsistencies, varied pricing units, hidden dimensions, and rapid pricing changes. The repository offers a free API without authentication requirements, enabling users to access model configurations and pricing information easily. It aims to create a community-maintained database to streamline cost attribution for enterprises utilizing LLMs.

DownEdit

DownEdit is a powerful program that allows you to download videos from various social media platforms such as TikTok, Douyin, Kuaishou, and more. With DownEdit, you can easily download videos from user profiles and edit them in bulk. You have the option to flip the videos horizontally or vertically throughout the entire directory with just a single click. Stay tuned for more exciting features coming soon!

bionemo-framework

NVIDIA BioNeMo Framework is a collection of programming tools, libraries, and models for computational drug discovery. It accelerates building and adapting biomolecular AI models by providing domain-specific, optimized models and tooling for GPU-based computational resources. The framework offers comprehensive documentation and support for both community and enterprise users.

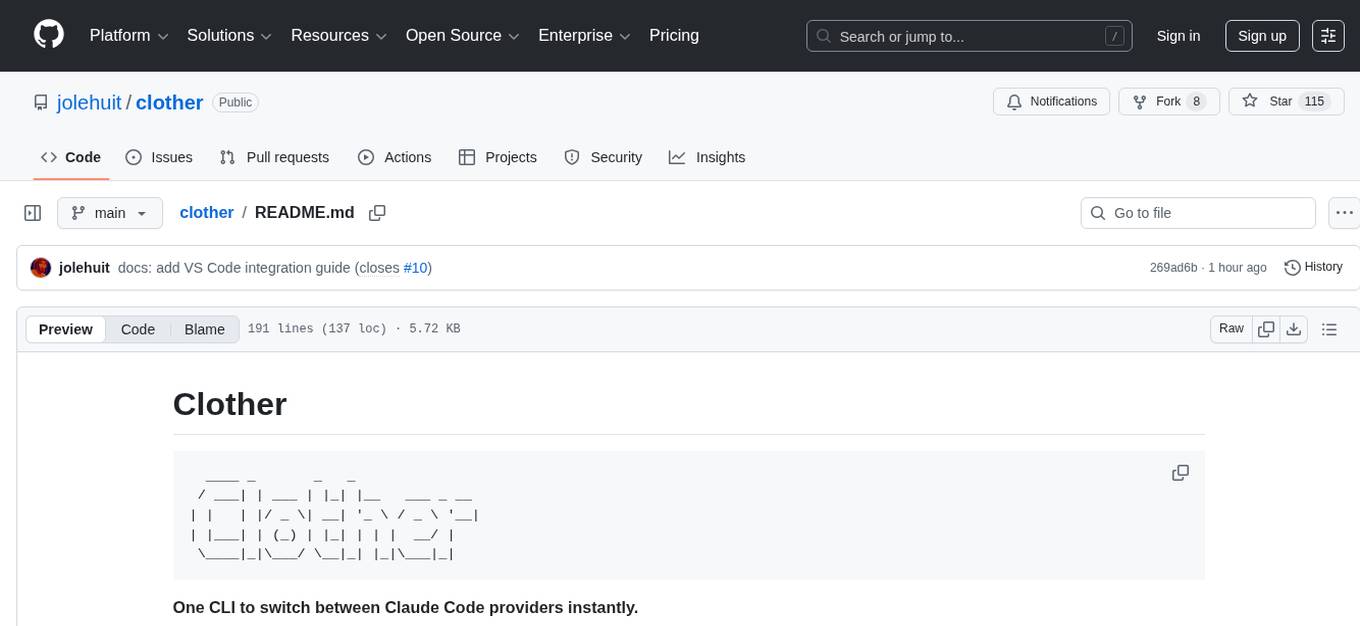

clother

Clother is a command-line tool that allows users to switch between different Claude Code providers instantly. It provides launchers for various cloud, open router, China endpoints, local, and custom providers, enabling users to configure, list profiles, test connectivity, check installation status, and uninstall. Users can also change the default model for each provider and troubleshoot common issues. Clother simplifies the management of API keys and installation directories, supporting macOS, Linux, and Windows (WSL) platforms. It is designed to streamline the workflow of interacting with different AI models and services.

DownEdit

DownEdit is a fast and powerful program for downloading and editing videos from platforms like TikTok, Douyin, and Kuaishou. It allows users to effortlessly grab videos, make bulk edits, and utilize advanced AI features for generating videos, images, and sounds in bulk. The tool offers features like video, photo, and sound editing, downloading videos without watermarks, bulk AI generation, and AI editing for content enhancement.

EVE

EVE is the official PyTorch implementation of Unveiling Encoder-Free Vision-Language Models. The project explores removing vision encoders from Vision-Language Models (VLMs) and efficiently transferring LLMs to encoder-free VLMs, with the goal of closing the performance gap between encoder-free and encoder-based VLMs. EVE handles arbitrary image aspect ratios, is data-efficient because it pre-trains on publicly available data, and is training-efficient thanks to a transparent and practical strategy for developing a pure decoder-only architecture across modalities.

free-chat

Free Chat is a forked project from chatgpt-demo that allows users to deploy a chat application with various features. It provides branches for different functionalities like token-based message list trimming and usage demonstration of 'promplate'. Users can control the website through environment variables, including setting OpenAI API key, temperature parameter, proxy, base URL, and more. The project welcomes contributions and acknowledges supporters. It is licensed under MIT by Muspi Merol.

For similar tasks

Advanced-GPTs

Nerority's Advanced GPT Suite is a collection of 33 GPTs that can be controlled with natural language prompts. The suite includes tools for various tasks such as strategic consulting, business analysis, career profile building, content creation, educational purposes, image-based tasks, knowledge engineering, marketing, persona creation, programming, prompt engineering, role-playing, simulations, and task management. Users can access links, usage instructions, and guides for each GPT on their respective pages. The suite is designed for public demonstration and usage, offering features like meta-sequence optimization, AI priming, prompt classification, and optimization. It also provides tools for generating articles, analyzing contracts, visualizing data, distilling knowledge, creating educational content, exploring topics, generating marketing copy, simulating scenarios, managing tasks, and more.

generative-ai-cdk-constructs-samples

This repository contains sample applications showcasing the use of AWS Generative AI CDK Constructs to build solutions for document exploration, content generation, image description, and deploying various models on SageMaker. It also includes samples for deploying Amazon Bedrock Agents and automating contract compliance analysis. The samples cover a range of backend and frontend technologies such as TypeScript, Python, and React.

OpenContracts

OpenContracts is an Apache-2 licensed enterprise document analytics tool that supports multiple formats, including PDF and txt-based formats. It features multiple document ingestion pipelines with a pluggable architecture for easy format and ingestion engine support. Users can create custom document analytics tools with beautiful result displays, support mass document data extraction with a LlamaIndex wrapper, and manage document collections, layout parsing, automatic vector embeddings, and human annotation. The tool also offers pluggable parsing pipelines, human annotation interface, LlamaIndex integration, data extraction capabilities, and custom data extract pipelines for bulk document querying.

open-extract

open-extract simplifies the ingestion and processing of unstructured data for those building AI Agents/Agentic Workflows using frameworks such as LangGraph, AG2, and CrewAI. It allows applications to identify and extract relevant data from large documents and websites with a single API call, supporting multi-schema/multi-document extraction without vendor lock-in. The tool includes built-in caching for rapid repeat extractions, providing flexibility in model provider choice.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.