clother

Configure and launch multiple Claude Code–compatible LLM providers from one CLI, switching profiles instantly with simple clother-* commands.

Stars: 112

Clother is a command-line tool for switching between Claude Code providers instantly. It provides launchers for cloud, OpenRouter, China-endpoint, local, and custom providers, along with commands to configure providers, list profiles, test connectivity, check installation status, and uninstall. Users can also change the default model for each provider and troubleshoot common issues. Clother manages API keys and install directories for you, and supports macOS, Linux, and Windows (WSL).

README:

      ____ _       _   _
     / ___| | ___ | |_| |__   ___ _ __
    | |   | |/ _ \| __| '_ \ / _ \ '__|
    | |___| | (_) | |_| | | |  __/ |
     \____|_|\___/ \__|_| |_|\___|_|

One CLI to switch between Claude Code providers instantly.

    # 1. Install Claude Code CLI
    curl -fsSL https://claude.ai/install.sh | bash

    # 2. Install Clother
    curl -fsSL https://raw.githubusercontent.com/jolehuit/clother/main/clother.sh | bash

    clother-native                      # Use your Claude Pro/Team subscription
    clother-zai                         # Z.AI (GLM-5)
    clother-ollama --model qwen3-coder  # Local with Ollama
    clother config                      # Configure providers

| Command | Provider | Model | API Key |
|---|---|---|---|
| `clother-native` | Anthropic | Claude | Your subscription |
| `clother-zai` | Z.AI | GLM-5 | z.ai |
| `clother-minimax` | MiniMax | MiniMax-M2.1 | minimax.io |
| `clother-kimi` | Kimi | kimi-k2.5 | kimi.com |
| `clother-moonshot` | Moonshot AI | kimi-k2.5 | moonshot.ai |
| `clother-deepseek` | DeepSeek | deepseek-chat | deepseek.com |
| `clother-mimo` | Xiaomi MiMo | mimo-v2-flash | xiaomimimo.com |

Access Grok, Gemini, Mistral and more via openrouter.ai.

    clother config openrouter  # Set API key + add models
    clother-or-kimi-k2         # Use it

For non-Claude models, use the `:exacto` variant (e.g. `moonshotai/kimi-k2-0905:exacto`).
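As a small illustration of the naming rule above, the following sketch appends `:exacto` to an OpenRouter model slug unless it is already present. The function name `exacto_slug` is hypothetical, and treating slugs that start with `anthropic/` as the Claude models is an assumption; Clother itself may handle this differently.

```shell
# Hypothetical helper, not part of Clother.
# Only handles plain slugs; slugs that already carry another variant suffix are not covered.
exacto_slug() {
  case "$1" in
    anthropic/*) printf '%s\n' "$1" ;;         # assumed to be a Claude model: leave as-is
    *:exacto)    printf '%s\n' "$1" ;;         # already the exacto variant
    *)           printf '%s:exacto\n' "$1" ;;  # non-Claude model: add the suffix
  esac
}

exacto_slug "moonshotai/kimi-k2-0905"   # prints moonshotai/kimi-k2-0905:exacto
```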

| Command | Endpoint |
|---|---|
| `clother-zai-cn` | open.bigmodel.cn |
| `clother-minimax-cn` | api.minimaxi.com |
| `clother-ve` | ark.cn-beijing.volces.com |

| Command | Provider | Port | Setup |
|---|---|---|---|
| `clother-ollama` | Ollama | 11434 | ollama.com |
| `clother-lmstudio` | LM Studio | 1234 | lmstudio.ai |
| `clother-llamacpp` | llama.cpp | 8000 | github.com/ggml-org/llama.cpp |

    # Ollama
    ollama pull qwen3-coder && ollama serve
    clother-ollama --model qwen3-coder

    # LM Studio
    clother-lmstudio --model <model>

    # llama.cpp
    ./llama-server --model model.gguf --port 8000 --jinja
    clother-llamacpp --model <model>

    # Custom provider
    clother config      # Choose "custom"
    clother-myprovider  # Ready

| Command | Description |
|---|---|
| `clother config [provider]` | Configure provider |
| `clother list` | List profiles |
| `clother test` | Test connectivity |
| `clother status` | Installation status |
| `clother uninstall` | Remove everything |

Each provider launcher comes with a default model (e.g. glm-5 for Z.AI). You can override it in several ways:

    # One-time: use --model flag
    clother-zai --model glm-4.7

    # Permanent: set ANTHROPIC_MODEL in your shell profile (.zshrc / .bashrc)
    export ANTHROPIC_MODEL="glm-4.7"
    clother-zai

    # Or edit the launcher directly
    nano ~/bin/clother-zai  # Replace the model name on all relevant lines

Tip: The `--model` flag is passed directly to Claude CLI and takes priority over everything else.
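The override order described above can be sketched as a small function. This is only an illustration: `resolve_model` and its arguments are hypothetical, and the real resolution happens inside the launcher and Claude CLI.

```shell
# Hypothetical sketch of the override order; not Clother's actual code.
# Usage: resolve_model <launcher-default> [launcher args...]
resolve_model() {
  default_model="$1"; shift
  # 1. A --model flag on the command line wins.
  while [ "$#" -gt 0 ]; do
    case "$1" in
      --model) [ "$#" -ge 2 ] && { printf '%s\n' "$2"; return 0; } ;;
    esac
    shift
  done
  # 2. Otherwise use ANTHROPIC_MODEL if set; 3. else fall back to the launcher default.
  printf '%s\n' "${ANTHROPIC_MODEL:-$default_model}"
}

unset ANTHROPIC_MODEL
resolve_model glm-5                     # prints glm-5
export ANTHROPIC_MODEL="glm-4.7"
resolve_model glm-5                     # prints glm-4.7
resolve_model glm-5 --model my-model    # prints my-model
```

Here `my-model` is a placeholder name, not a real model identifier.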

Clother creates launcher scripts that set environment variables:

    # clother-zai does:
    export ANTHROPIC_BASE_URL="https://api.z.ai/api/anthropic"
    export ANTHROPIC_AUTH_TOKEN="$ZAI_API_KEY"
    exec claude "$@"

API keys are stored in `~/.local/share/clother/secrets.env` (chmod 600).
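To make the key-storage idea concrete, here is a minimal sketch of writing a key to an owner-only file and loading it before launching. It uses a temporary directory instead of the real secrets path, the key value is a placeholder, and the `save_secret` helper is hypothetical rather than Clother's own code.

```shell
# Hypothetical sketch; writes to a temp dir rather than ~/.local/share/clother.
secrets_dir="$(mktemp -d)"
secrets_file="$secrets_dir/secrets.env"

save_secret() {
  # save_secret NAME VALUE: append NAME="VALUE" to the secrets file.
  # Assumes VALUE contains no double quotes or other shell-special characters.
  ( umask 077; printf '%s="%s"\n' "$1" "$2" >> "$secrets_file" )
  chmod 600 "$secrets_file"   # readable and writable by the owner only
}

save_secret ZAI_API_KEY "placeholder-key"

# What a launcher could do before handing off to `claude`:
. "$secrets_file"
export ANTHROPIC_BASE_URL="https://api.z.ai/api/anthropic"
export ANTHROPIC_AUTH_TOKEN="$ZAI_API_KEY"

ls -l "$secrets_file"   # the mode column should read -rw-------
```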

By default, Clother installs launchers to:

- macOS: `~/bin`
- Linux: `~/.local/bin` (XDG standard)

You can override this with --bin-dir or the CLOTHER_BIN environment variable:

    # Using --bin-dir flag
    curl -fsSL https://raw.githubusercontent.com/jolehuit/clother/main/clother.sh | bash -s -- --bin-dir ~/.local/bin

    # Using environment variable
    export CLOTHER_BIN="$HOME/.local/bin"
    curl -fsSL https://raw.githubusercontent.com/jolehuit/clother/main/clother.sh | bash

Make sure the chosen directory is in your PATH.
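A quick way to check the PATH requirement is sketched below. The `in_path` helper is hypothetical; the directory shown is the Linux default mentioned above, so adjust it to whatever you passed to `--bin-dir` or `CLOTHER_BIN`.

```shell
# Hypothetical helper: succeeds if the given directory is an entry in PATH.
in_path() {
  case ":$PATH:" in
    *":$1:"*) return 0 ;;
    *)        return 1 ;;
  esac
}

bin_dir="$HOME/.local/bin"
if in_path "$bin_dir"; then
  echo "$bin_dir is already in PATH"
else
  echo "Add this line to your shell profile (.zshrc / .bashrc):"
  echo "export PATH=\"$bin_dir:\$PATH\""
fi
```

Wrapping both sides in colons makes the match exact, so `/usr/local/bin` does not count as a match for `/usr/local`.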

| Problem | Solution |
|---|---|
| `claude: command not found` | Install Claude CLI first |
| `clother: command not found` | Add your bin directory to PATH (see Install Directory) |
| `API key not set` | Run `clother config` |
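The first two rows can be checked up front with `command -v`. The sketch below is one way to script that check (the `check_cmd` helper is hypothetical); `clother status` remains the tool's own way to report installation state.

```shell
# Hypothetical preflight helper, not part of Clother.
check_cmd() {
  # check_cmd NAME HINT: report whether NAME resolves on PATH
  if command -v "$1" >/dev/null 2>&1; then
    echo "ok: $1 found"
  else
    echo "missing: $1 ($2)"
    return 1
  fi
}

check_cmd claude  "install Claude CLI first" || true
check_cmd clother "add your bin directory to PATH" || true
```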

macOS (zsh/bash) • Linux (zsh/bash) • Windows (WSL)

- @darkokoa — China endpoints

- @RawToast — Kimi endpoint fix

- @sammcj — Security hardening

- @luciano-fiandesio — Install directory improvement (issue)

MIT © jolehuit


Alternative AI tools for clother

Similar Open Source Tools


shodh-memory

Shodh-Memory is a cognitive memory system designed for AI agents to persist memory across sessions, learn from experience, and run entirely offline. It features Hebbian learning, activation decay, and semantic consolidation, packed into a single ~17MB binary. Users can deploy it on cloud, edge devices, or air-gapped systems to enhance the memory capabilities of AI agents.

pup

Pup is a Go-based command-line wrapper designed for easy interaction with Datadog APIs. It provides a fast, cross-platform binary with support for OAuth2 authentication and traditional API key authentication. The tool offers simple commands for common Datadog operations, structured JSON output for parsing and automation, and dynamic client registration with unique OAuth credentials per installation. Pup currently implements 38 out of 85+ available Datadog APIs, covering core observability, monitoring & alerting, security & compliance, infrastructure & cloud, incident & operations, CI/CD & development, organization & access, and platform & configuration domains. Users can easily install Pup via Homebrew, Go Install, or manual download, and authenticate using OAuth2 or API key methods. The tool supports various commands for tasks such as testing connection, managing monitors, querying metrics, handling dashboards, working with SLOs, and handling incidents.

dbhub

DBHub is a universal database gateway that implements the Model Context Protocol (MCP) server interface. It allows MCP-compatible clients to connect to and explore different databases. The gateway supports various database resources and tools, providing capabilities such as executing queries, listing connectors, generating SQL, and explaining database elements. Users can easily configure their database connections and choose between different transport modes like stdio and sse. DBHub also offers a demo mode with a sample employee database for testing purposes.

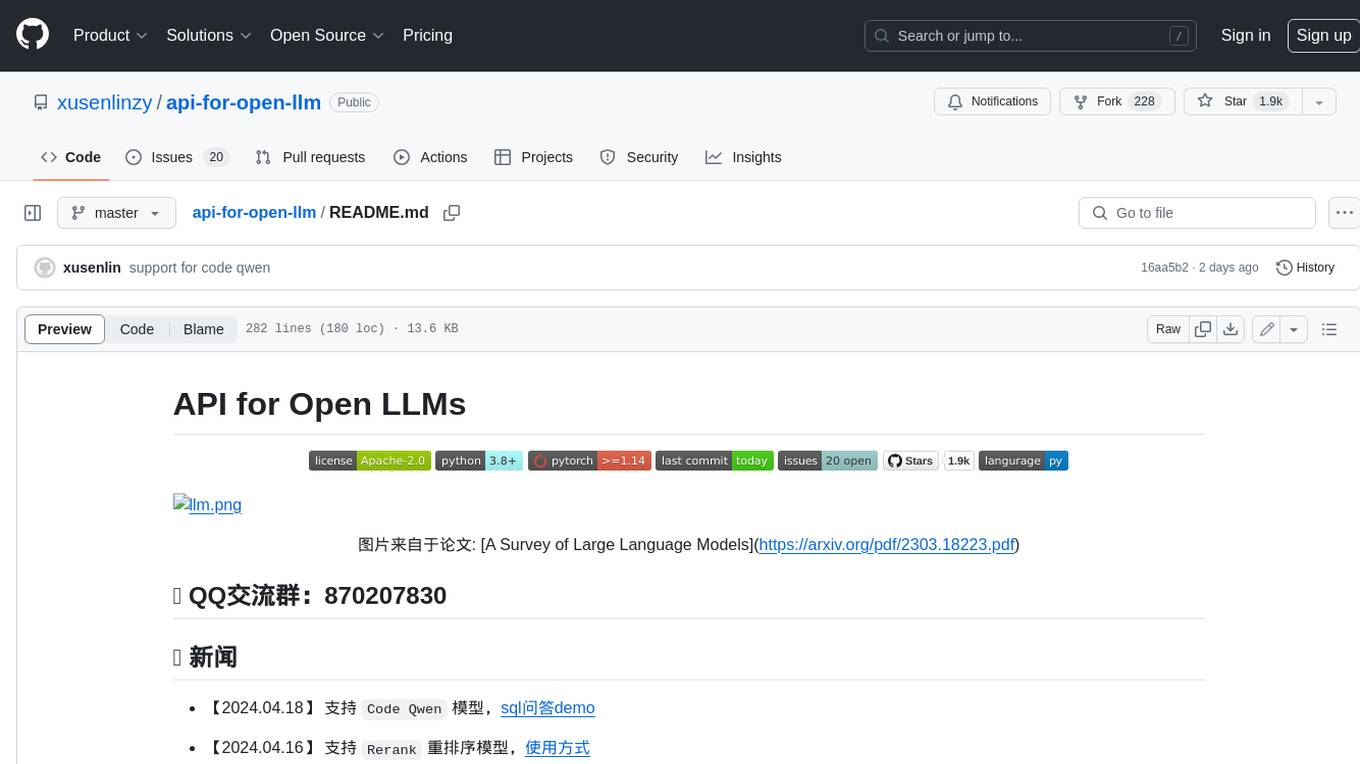

api-for-open-llm

This project provides a unified backend interface for open large language models (LLMs), offering a consistent experience with OpenAI's ChatGPT API. It supports various open-source LLMs, enabling developers to seamlessly integrate them into their applications. The interface features streaming responses, text embedding capabilities, and support for LangChain, a tool for developing LLM-based applications. By modifying environment variables, developers can easily use open-source models as alternatives to ChatGPT, providing a cost-effective and customizable solution for various use cases.

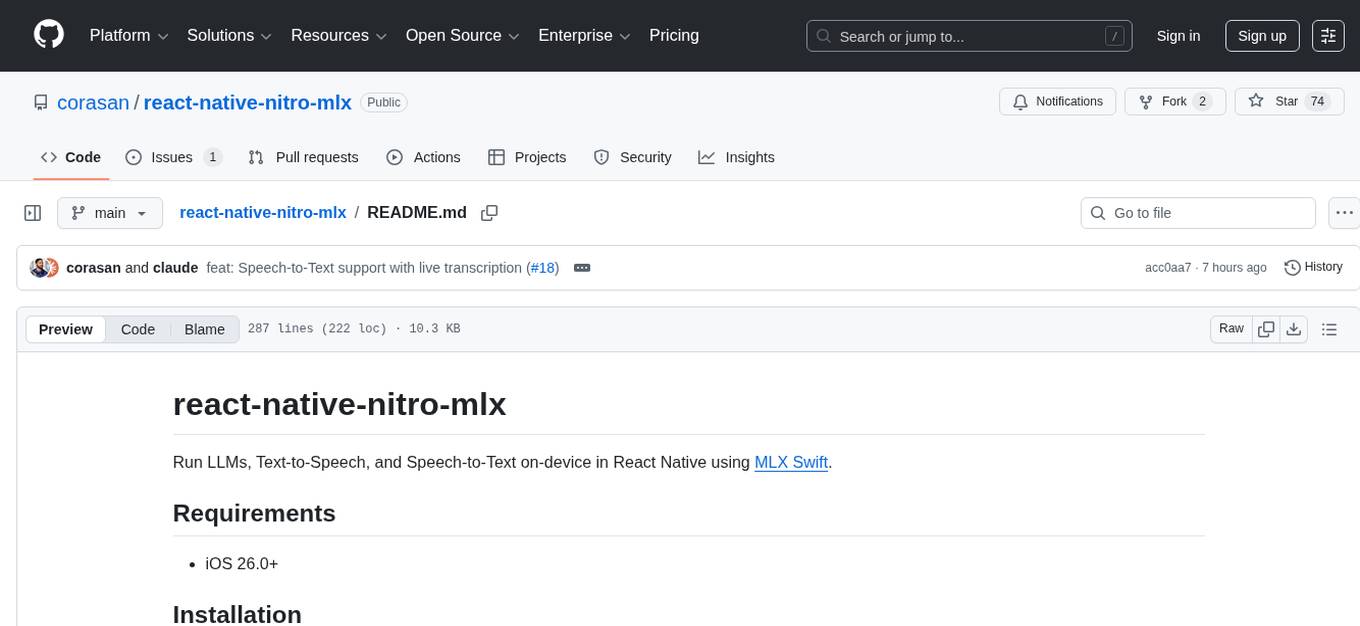

react-native-nitro-mlx

The react-native-nitro-mlx repository allows users to run LLMs, Text-to-Speech, and Speech-to-Text on-device in React Native using MLX Swift. It provides functionalities for downloading models, loading and generating responses, streaming audio, text-to-speech, and speech-to-text capabilities. Users can interact with various MLX-compatible models from Hugging Face, with pre-defined models available for convenience. The repository supports iOS 26.0+ and offers detailed API documentation for each feature.

new-api

New API is a next-generation large model gateway and AI asset management system that provides a wide range of features, including a new UI interface, multi-language support, online recharge function, key query for usage quota, compatibility with the original One API database, model charging by usage count, channel weighted randomization, data dashboard, token grouping and model restrictions, support for various authorization login methods, support for Rerank models, OpenAI Realtime API, Claude Messages format, reasoning effort setting, content reasoning, user-specific model rate limiting, request format conversion, cache billing support, and various model support such as gpts, Midjourney-Proxy, Suno API, custom channels, Rerank models, Claude Messages format, Dify, and more.

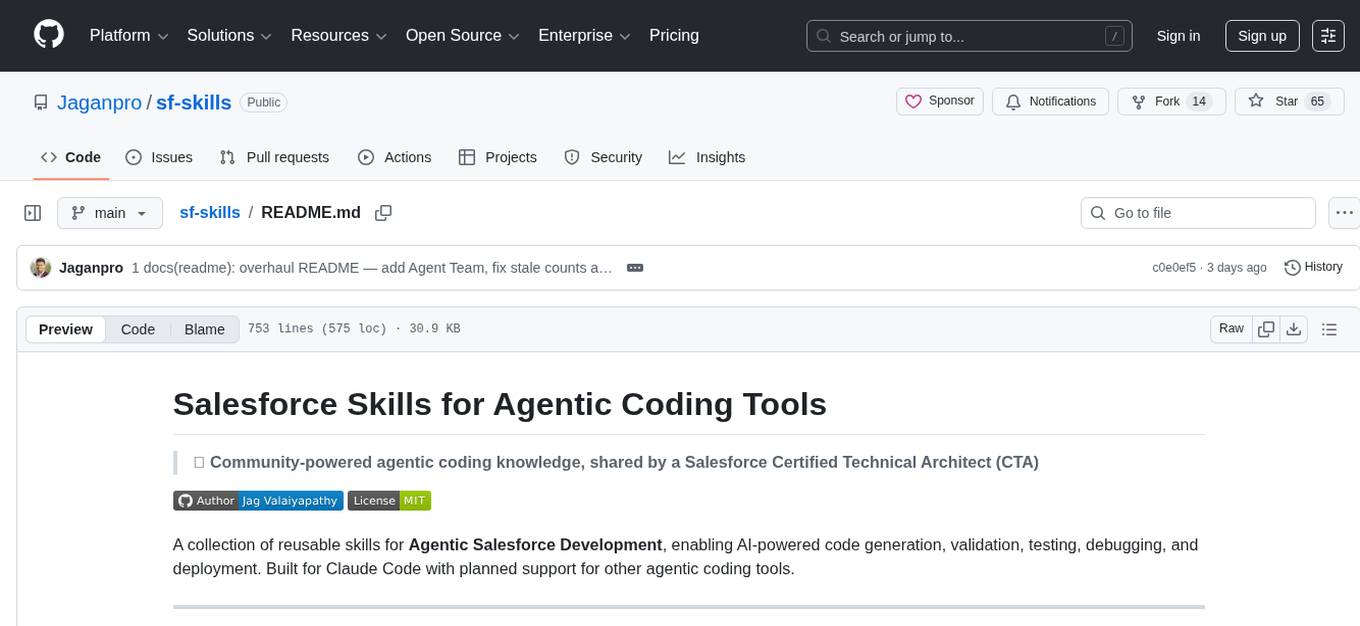

sf-skills

sf-skills is a collection of reusable skills for Agentic Salesforce Development, enabling AI-powered code generation, validation, testing, debugging, and deployment. It includes skills for development, quality, foundation, integration, AI & automation, DevOps & tooling. The installation process is newbie-friendly and includes an installer script for various CLIs. The skills are compatible with platforms like Claude Code, OpenCode, Codex, Gemini, Amp, Droid, Cursor, and Agentforce Vibes. The repository is community-driven and aims to strengthen the Salesforce ecosystem.

DownEdit

DownEdit is a powerful program that allows you to download videos from various social media platforms such as TikTok, Douyin, Kuaishou, and more. With DownEdit, you can easily download videos from user profiles and edit them in bulk. You have the option to flip the videos horizontally or vertically throughout the entire directory with just a single click. Stay tuned for more exciting features coming soon!

claude-craft

Claude Craft is a comprehensive framework for AI-assisted development with Claude Code, providing standardized rules, agents, and commands across multiple technology stacks. It includes autonomous sprint capabilities, documentation accuracy improvements, CI hardening, and test coverage enhancements. With support for 10 technology stacks, 5 languages, 40 AI agents, 157 slash commands, and various project management features like BMAD v6 framework, Ralph Wiggum loop execution, skills, templates, checklists, and hooks system, Claude Craft offers a robust solution for project development and management. The tool also supports workflow methodology, development tracks, document generation, BMAD v6 project management, quality gates, batch processing, backlog migration, and Claude Code hooks integration.

llm.nvim

llm.nvim is a universal large language model (LLM) plugin that lets users interact with LLMs from within Neovim. Users can configure various LLMs such as gpt, glm, kimi, and local models. The plugin provides tools for optimizing code, comparing code, translating text, and more. It also supports integration with free models from Cloudflare, GitHub Models, siliconflow, and others. Users can customize tools, chat with an LLM, quickly translate text, and explain code snippets. The plugin offers a flexible window interface for easy interaction and customization.

chatglm.cpp

ChatGLM.cpp is a C++ implementation of ChatGLM-6B, ChatGLM2-6B, ChatGLM3-6B and more LLMs for real-time chatting on your MacBook. It is based on ggml, working in the same way as llama.cpp. ChatGLM.cpp features accelerated memory-efficient CPU inference with int4/int8 quantization, optimized KV cache and parallel computing. It also supports P-Tuning v2 and LoRA finetuned models, streaming generation with typewriter effect, Python binding, web demo, api servers and more possibilities.

roam-code

Roam is a tool that builds a semantic graph of your codebase and allows AI agents to query it with one shell command. It pre-indexes your codebase into a semantic graph stored in a local SQLite DB, providing architecture-level graph queries offline, cross-language, and compact. Roam understands functions, modules, tests coverage, and overall architecture structure. It is best suited for agent-assisted coding, large codebases, architecture governance, safe refactoring, and multi-repo projects. Roam is not suitable for real-time type checking, dynamic/runtime analysis, small scripts, or pure text search. It offers speed, dependency-awareness, LLM-optimized output, fully local operation, and CI readiness.

DownEdit

DownEdit is a fast and powerful program for downloading and editing videos from platforms like TikTok, Douyin, and Kuaishou. It allows users to effortlessly grab videos, make bulk edits, and utilize advanced AI features for generating videos, images, and sounds in bulk. The tool offers features like video, photo, and sound editing, downloading videos without watermarks, bulk AI generation, and AI editing for content enhancement.

everything-claude-code

The 'Everything Claude Code' repository is a comprehensive collection of production-ready agents, skills, hooks, commands, rules, and MCP configurations developed over 10+ months. It includes guides for setup, foundations, and philosophy, as well as detailed explanations of various topics such as token optimization, memory persistence, continuous learning, verification loops, parallelization, and subagent orchestration. The repository also provides updates on bug fixes, multi-language rules, installation wizard, PM2 support, OpenCode plugin integration, unified commands and skills, and cross-platform support. It offers a quick start guide for installation, ecosystem tools like Skill Creator and Continuous Learning v2, requirements for CLI version compatibility, key concepts like agents, skills, hooks, and rules, running tests, contributing guidelines, OpenCode support, background information, important notes on context window management and customization, star history chart, and relevant links.

llmio

LLMIO is a Go-based LLM load balancing gateway that provides a unified REST API, weight scheduling, logging, and modern management interface for your LLM clients. It helps integrate different model capabilities from OpenAI, Anthropic, Gemini, and more in a single service. Features include unified API compatibility, weight scheduling with two strategies, visual management dashboard, rate and failure handling, and local persistence with SQLite. The tool supports multiple vendors' APIs and authentication methods, making it versatile for various AI model integrations.


For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g. Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.