machinascript-for-robots

Build LLM-powered robots in your garage with MachinaScript For Robots!

Stars: 158

MachinaScript For Robots is a dynamic set of tools and an LLM-JSON-based language designed to empower humans in the creation of their own robots. It facilitates the animation of generative movements, the integration of personality, and the teaching of new skills with a high degree of autonomy. With MachinaScript, users can control a wide range of electronic components, including Arduinos, Raspberry Pis, servo motors, cameras, sensors, and more. The tool aims to make intelligent robots accessible to everyone, allowing complex tasks to be performed with elegance and precision.

README:

MACHINA3 embodies a loop of perception and action that simulates the flow of human thought. Through the integration of a vision system and a serial-to-analog signal parser, MACHINA3 interprets visual data and crafts responses in near real time, enabling machines to perform complex tasks with elegance and some level of precision, a fantastic achievement for such an early stage of the technology.

Added support for Llama 3.2 Vision at ultra fast Groq Inference (+300tps)

Added support for JSON mode

See full release Here

MachinaScript is a dynamic set of tools and an LLM-JSON-based language designed to empower humans in the creation of their own robots.

It facilitates the animation of generative movements, the integration of personality, and the teaching of new skills with a high degree of autonomy. With MachinaScript, you can control a wide range of electronic components, including Arduinos, Raspberry Pis, servo motors, cameras, sensors, and much more.

MachinaScript's mission is to make cutting-edge intelligent robotics accessible for everyone.

Read all about it in the Medium article.

Read the user manual in the code directory here.

- Input Reception: Upon receiving an input, the brain unit (a central processing unit such as a Raspberry Pi or a computer of your choice) initiates the process. For example, it may listen for a wake-up word, or run a function that keeps sending images to a multimodal LLM in real time.

- Instruction Generation: A large language model (LLM) then crafts a sequence of instructions for actions, movements and skills. These are formatted in MachinaScript, optimized for sequential execution.

- Instruction Parsing: The robot's brain unit interprets the generated MachinaScript instructions.

- Action Serialization: Instructions are relayed to the microcontroller, the entity governing the robot's physical operations like servo motors and sensors.
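The four stages above can be sketched as a small Python loop. This is only an illustration under stated assumptions: `generate_machinascript` is a hypothetical stand-in for the real LLM call, the JSON key names (`actions`, `movements`, `motor`, `angle`, `speed`) are invented for the example, and the `motor:angle:speed` line format sent to the microcontroller is an assumed protocol, not the project's actual one.

```python
import json


def generate_machinascript(user_input: str) -> str:
    """Hypothetical stand-in for the LLM call; returns a canned JSON string."""
    return json.dumps({
        "actions": [
            {
                "movements": [
                    {"motor": "motor_neck_vertical", "angle": 45, "speed": "medium"}
                ],
                "skills": ["photograph"],
            }
        ]
    })


def parse_instructions(raw: str) -> list:
    """Instruction parsing: turn the generated text into a list of actions."""
    return json.loads(raw)["actions"]


def serialize_movement(movement: dict) -> str:
    """Action serialization: one text line per movement (assumed wire format)."""
    return f'{movement["motor"]}:{movement["angle"]}:{movement["speed"]}\n'


def run_once(user_input: str, send) -> list:
    """Run one input -> generate -> parse -> serialize cycle.

    `send` is any callable that delivers one line to the microcontroller.
    Returns the lines that were sent, in order.
    """
    raw = generate_machinascript(user_input)
    sent = []
    for action in parse_instructions(raw):
        for movement in action.get("movements", []):
            line = serialize_movement(movement)
            send(line)
            sent.append(line)
    return sent
```

With pyserial, `send` could be something like `lambda line: port.write(line.encode())` for an open `serial.Serial` port; passing a plain list's `append` method instead makes the loop easy to try without hardware.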

MachinaScript's LLM-JSON-based syntax is highly modular because it is generative. It is composed of three major nested components: Actions, Movements and Skills.

Actions: a set of instructions to be executed in a specific order. They may contain multiple movements and multiple skill usages.

Movements: address individual motors, with parameters such as the target angle in degrees and the speed. This can be used to create very personal animations.

Skills: function calling the MachinaScript way, to make use of cameras, sensors and even to speak with text-to-speech.

As long as your brain unit code is adapted to interpret it, there is no limit to your creativity.

This is an example of the complete language structure in its latest version. Note that you can change the syntax of the language structure to suit your needs, no strings attached. Just make sure it works with your brain module's generating, parsing and serializing steps.
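As a rough illustration of how the three nested components fit together, here is a minimal Python sketch that models Actions, Movements and Skills with dataclasses and loads them from a dictionary. The key names and the shape of `example_script` are assumptions made for this sketch, not the project's official structure.

```python
from dataclasses import dataclass, field


@dataclass
class Movement:
    motor: str              # id of the motor to address
    angle: int              # target position in degrees
    speed: str = "medium"   # symbolic speed, e.g. "slow", "medium", "high"


@dataclass
class Action:
    description: str
    movements: list = field(default_factory=list)  # ordered Movement objects
    skills: list = field(default_factory=list)     # skill ids to call


def load_actions(script: dict) -> list:
    """Convert a parsed script (hypothetical layout) into Action objects."""
    actions = []
    for raw in script["actions"]:
        movements = [Movement(**m) for m in raw.get("movements", [])]
        actions.append(
            Action(raw.get("description", ""), movements, list(raw.get("skills", [])))
        )
    return actions


# Hypothetical script: one action holding two movements and one skill usage.
example_script = {
    "actions": [
        {
            "description": "Look up, then take a picture",
            "movements": [
                {"motor": "motor_neck_vertical", "angle": 45},
                {"motor": "motor_neck_horizontal", "angle": 90, "speed": "slow"},
            ],
            "skills": ["photograph"],
        }
    ]
}
```

Calling `load_actions(example_script)` yields a single `Action` whose movements keep their original order, which matters because the instructions are meant to be executed sequentially.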

The project was designed to be used across the wide ecosystem of large language models: multimodal and text-only, local and hosted. Note that autopilot units like Machina2 require some form of multimodality to sense the world via images and plan actions by themselves.

To instruct an LLM to talk in the MachinaScript syntax, we pass a system message that looks like this:

You are a MachinaScript for Robots generator.

MachinaScript is a LLM-JSON-based format used to define robotic actions, including

motor movements and skill usage, under specific contexts given by the user.

Each action can involve multiple movements, motors and skills, with defined parameters

like motor positions, speeds, and skill-specific details, like this:

(...)

Please generate a new MachinaScript using the exact given format and project specifications.

This file is referred to as machinascript_language.txt, and we recommend leaving it unchanged.

Ideally you will only change the specs of your project.

No artisanal robot is the same. They are all beautifully unique.

One of the most mind-blowing things about MachinaScript is that it can embody any design. You just need to describe, in a set of specs, the robot's physical properties and limitations, as well as instructions for the behavior of the LLM. Should it be funny? Serious? What are its goals? Favorite color? The machinascript_project_specs.txt file is where you put everything related to your robot's personality.

For this to work, we append a little extra information to the system message, containing the following:

Project specs:
{
  "Motors": [
    {"id": "motor_neck_vertical", "range": [0, 180]},
    {"id": "motor_neck_horizontal", "range": [0, 180]}
  ],
  "Skills": [
    {"id": "photograph", "description": "Captures a photograph using an attached camera and sends it to a multimodal LLM."},
    {"id": "blink_led", "parameters": {"led_pin": 10, "duration": 500, "times": 3}, "description": "Blinks an LED to indicate action."}
  ],
  "Limitations": [
    {"motor": "motor_neck_vertical", "max_speed": "medium"},
    {"motor speeds": ["slow", "medium", "high"]}
  ],
  "Personality": "Funny, delicate",
  "Agency Level": "high"
}

Note that the JSON style here can be completely reworked into any kind of text you want. You can even describe it in a single paragraph if you feel like it. However, for the sake of human readability and developer experience, you can use this template to better "mentally map" your project specs. This is all in very early beta, so take it with a grain of salt.
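One way the system message could be assembled from the two files mentioned above is sketched below. The file names come from the text; the function names, the exact joining format, and the assumption that the "Project specs:" label is added by the code rather than stored in the specs file are all choices made for this illustration.

```python
from pathlib import Path


def build_system_message(language_text: str, project_specs: str) -> str:
    """Append the project specs to the fixed language description."""
    return f"{language_text.strip()}\n\nProject specs:\n{project_specs.strip()}\n"


def load_system_message(
    language_path: str = "machinascript_language.txt",
    specs_path: str = "machinascript_project_specs.txt",
) -> str:
    """Read both files from disk and combine them into one system message."""
    language_text = Path(language_path).read_text(encoding="utf-8")
    project_specs = Path(specs_path).read_text(encoding="utf-8")
    return build_system_message(language_text, project_specs)
```

Keeping the language description and the specs in separate files means only the specs need to change from robot to robot, which matches the recommendation to leave machinascript_language.txt untouched.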

We are releasing a set of fine-tuned models for MachinaScript soon to make its generations even better. You can also fine-tune models for your own specific use case.

An action can contain multiple movements in sequence to perform animations (sets of movements). It may even embody personality in the motion.

Check out Disney's latest robot that combines engineering with their team of motion designers to create a more human friendly machine in the style of BD-1.

You can learn more about the 12 principles of animation here.

- Begin with Arduino: The easiest entry point is to start by programming your robot with Arduino code.

  - Construct your robot and get it moving with simple programmed commands.

  - Modify the Arduino code to accept dynamic commands, similar to how a remote-controlled car operates.

- Components: Utilize a variety of components to enhance your robot:

  - Servo motors, sensors, buttons, LEDs, and any other compatible electronics.

- Connect the Hardware: Link your Arduino to a computing device of your choice. This could be a Raspberry Pi, a personal computer, or even an older laptop with internet access.

- Edit the Brain Code:

  - Map Arduino components within your code and establish their rules and functions for interaction. For instance, a servo motor might be named head_motor_vertical and programmed to move up to 180 degrees.

  - Modify the "system prompt" passed to the LLM with your defined rules and component names.

- Skills encompass any function callable from the LLM, ranging from complex movement sequences (e.g., making a drink, dancing) to interactive tasks like taking pictures or utilizing text-to-speech.
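The mapping of components and skills described in the steps above might look roughly like this in the brain code. Everything here is a hypothetical sketch: the `MOTORS` table, the pin number, the `skill` decorator and the `blink_led` return value are invented for illustration and are not taken from the project's source.

```python
# Hypothetical component map: motor id -> Arduino pin and allowed range in degrees.
MOTORS = {
    "head_motor_vertical": {"pin": 9, "range": (0, 180)},
}

# Registry of skills the LLM is allowed to call, keyed by skill id.
SKILLS = {}


def clamp_angle(motor_id: str, angle: int) -> int:
    """Keep a requested angle inside the motor's declared range."""
    low, high = MOTORS[motor_id]["range"]
    return max(low, min(high, angle))


def skill(name: str):
    """Decorator that registers a function as a callable skill."""
    def register(func):
        SKILLS[name] = func
        return func
    return register


@skill("blink_led")
def blink_led(times: int = 3) -> str:
    # A real robot would forward this to the microcontroller;
    # here we only build the command text.
    return f"blink_led:{times}"


def call_skill(name: str, **params):
    """Look up a skill by id and run it, rejecting unknown ids."""
    if name not in SKILLS:
        raise KeyError(f"unknown skill: {name}")
    return SKILLS[name](**params)
```

Clamping on the brain side is a cheap safeguard: if the LLM asks for 200 degrees on a servo limited to 180, `clamp_angle("head_motor_vertical", 200)` returns 180 instead of passing the out-of-range value to the hardware.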

Here's a quick overview:

- Clone/Download: Clone or download this repository into a chosen directory.

- Edit the Brain Code: Customize the brain code's system prompt to describe your robot's capabilities.

- Connect Hardware: Integrate your robot's locomotion and sensory systems as previously outlined.

Ready to share your projects with the world? Join our community on Discord: https://discord.gg/SQFZNkQP3x

MachinaScript is my gift for the maker community,

which has taught me so much about being a human.

Let the robots live forever.

Made with love for all the makers out there!

This project is and always will be free and open source for everyone.

babycommando

Alternative AI tools for machinascript-for-robots

Similar Open Source Tools

DemoGPT

DemoGPT is an all-in-one agent library that provides tools, prompts, frameworks, and LLM models for streamlined agent development. It leverages GPT-3.5-turbo to generate LangChain code, creating interactive Streamlit applications. The tool is designed for creating intelligent, interactive, and inclusive solutions in LLM-based application development. It offers model flexibility, iterative development, and a commitment to user engagement. Future enhancements include integrating Gorilla for autonomous API usage and adding a publicly available database for refining the generation process.

12-factor-agents

12-Factor Agents is a project focused on building reliable LLM-powered software by outlining 12 core engineering principles. The project aims to provide guidance on creating production-ready customer-facing agents that leverage AI technology effectively. It emphasizes the importance of software design, context management, tool integration, and control flow in developing high-quality AI agents. The project offers insights, design patterns, and practical advice for software engineers looking to enhance their AI applications with agent-based approaches.

hal-9100

This repository is now archived and the code is privately maintained. If you are interested in this infrastructure, please contact the maintainer directly.

Instruct2Act

Instruct2Act is a framework that utilizes Large Language Models to map multi-modal instructions to sequential actions for robotic manipulation tasks. It generates Python programs using the LLM model for perception, planning, and action. The framework leverages foundation models like SAM and CLIP to convert high-level instructions into policy codes, accommodating various instruction modalities and task demands. Instruct2Act has been validated on robotic tasks in tabletop manipulation domains, outperforming learning-based policies in several tasks.

chatgpt-universe

ChatGPT is a large language model that can generate human-like text, translate languages, write different kinds of creative content, and answer your questions in a conversational way. It is trained on a massive amount of text data, and it is able to understand and respond to a wide range of natural language prompts.

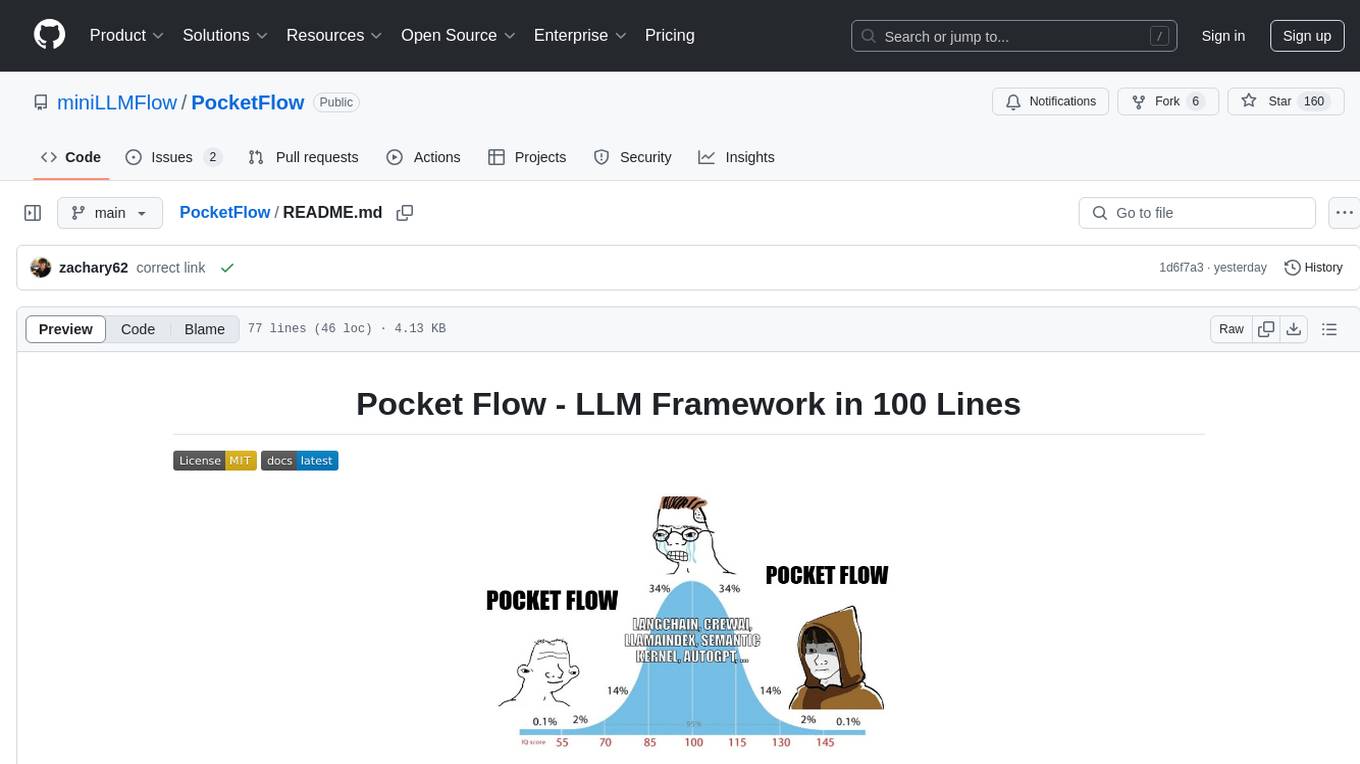

PocketFlow

Pocket Flow is a 100-line minimalist LLM framework designed for (Multi-)Agents, Task Decomposition, RAG, etc. It aims to be the framework used by LLMs, focusing on stripping away low-level implementation details and emphasizing high-level programming paradigms. Pocket Flow serves as a learning resource and provides a core abstraction of a nested directed graph for breaking down tasks into multiple steps.

crewAI

crewAI is a cutting-edge framework for orchestrating role-playing, autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It provides a flexible and structured approach to AI collaboration, enabling users to define agents with specific roles, goals, and tools, and assign them tasks within a customizable process. crewAI supports integration with various LLMs, including OpenAI, and offers features such as autonomous task delegation, flexible task management, and output parsing. It is open-source and welcomes contributions, with a focus on improving the library based on usage data collected through anonymous telemetry.

llms

The 'llms' repository is a comprehensive guide on Large Language Models (LLMs), covering topics such as language modeling, applications of LLMs, statistical language modeling, neural language models, conditional language models, evaluation methods, transformer-based language models, practical LLMs like GPT and BERT, prompt engineering, fine-tuning LLMs, retrieval augmented generation, AI agents, and LLMs for computer vision. The repository provides detailed explanations, examples, and tools for working with LLMs.

model2vec

Model2Vec is a technique to turn any sentence transformer into a really small static model, reducing model size by 15x and making the models up to 500x faster, with a small drop in performance. It outperforms other static embedding models like GLoVe and BPEmb, is lightweight with only `numpy` as a major dependency, offers fast inference, dataset-free distillation, and is integrated into Sentence Transformers, txtai, and Chonkie. Model2Vec creates powerful models by passing a vocabulary through a sentence transformer model, reducing dimensionality using PCA, and weighting embeddings using zipf weighting. Users can distill their own models or use pre-trained models from the HuggingFace hub. Evaluation can be done using the provided evaluation package. Model2Vec is licensed under MIT.

ComfyUI-HunyuanVideo-Nyan

ComfyUI-HunyuanVideo-Nyan is a repository that provides tools for manipulating the attention of LLM models, allowing users to shuffle the AI's attention and cause confusion. The repository includes a Nerdy Transformer Shuffle node that enables users to mess with the LLM's attention layers, providing a workflow for installation and usage. It also offers a new SAE-informed Long-CLIP model with high accuracy, along with recommendations for CLIP models. Users can find detailed instructions on how to use the provided nodes to scale CLIP & LLM factors and create high-quality nature videos. The repository emphasizes compatibility with other related tools and provides insights into the functionality of the included nodes.

argilla

Argilla is a collaboration platform for AI engineers and domain experts that require high-quality outputs, full data ownership, and overall efficiency. It helps users improve AI output quality through data quality, take control of their data and models, and improve efficiency by quickly iterating on the right data and models. Argilla is an open-source community-driven project that provides tools for achieving and maintaining high-quality data standards, with a focus on NLP and LLMs. It is used by AI teams from companies like the Red Cross, Loris.ai, and Prolific to improve the quality and efficiency of AI projects.

DeepFabric

Deepfabric is an SDK and CLI tool that leverages large language models to generate high-quality synthetic datasets. It's designed for researchers and developers building teacher-student distillation pipelines, creating evaluation benchmarks for models and agents, or conducting research requiring diverse training data. The key innovation lies in Deepfabric's graph and tree-based architecture, which uses structured topic nodes as generation seeds. This approach ensures the creation of datasets that are both highly diverse and domain-specific, while minimizing redundancy and duplication across generated samples.

ludwig

Ludwig is a declarative deep learning framework designed for scale and efficiency. It is a low-code framework that allows users to build custom AI models like LLMs and other deep neural networks with ease. Ludwig offers features such as optimized scale and efficiency, expert level control, modularity, and extensibility. It is engineered for production with prebuilt Docker containers, support for running with Ray on Kubernetes, and the ability to export models to Torchscript and Triton. Ludwig is hosted by the Linux Foundation AI & Data.

SwiftSage

SwiftSage is a tool designed for conducting experiments in the field of machine learning and artificial intelligence. It provides a platform for researchers and developers to implement and test various algorithms and models. The tool is particularly useful for exploring new ideas and conducting experiments in a controlled environment. SwiftSage aims to streamline the process of developing and testing machine learning models, making it easier for users to iterate on their ideas and achieve better results. With its user-friendly interface and powerful features, SwiftSage is a valuable tool for anyone working in the field of AI and ML.

Woodpecker

Woodpecker is a tool designed to correct hallucinations in Multimodal Large Language Models (MLLMs) by introducing a training-free method that picks out and corrects inconsistencies between generated text and image content. It consists of five stages: key concept extraction, question formulation, visual knowledge validation, visual claim generation, and hallucination correction. Woodpecker can be easily integrated with different MLLMs and provides interpretable results by accessing intermediate outputs of the stages. The tool has shown significant improvements in accuracy over baseline models like MiniGPT-4 and mPLUG-Owl.

For similar tasks

awesome-mobile-robotics

The 'awesome-mobile-robotics' repository is a curated list of important content related to Mobile Robotics and AI. It includes resources such as courses, books, datasets, software and libraries, podcasts, conferences, journals, companies and jobs, laboratories and research groups, and miscellaneous resources. The repository covers a wide range of topics in the field of Mobile Robotics and AI, providing valuable information for enthusiasts, researchers, and professionals in the domain.

rai

This repository contains core sources related to Robotics & AI. It serves as a submodule in integrated projects, providing a minimal Ubuntu-specific build system and development tests. The code originated around 2004 in Edinburgh and has grown over the years to encompass various functionalities for Robotics, ML, and AI. Users are advised to explore example projects using this bare code for a better understanding of its capabilities.

Awesome-Embodied-AI-Job

Awesome Embodied AI Job is a curated list of resources related to jobs in the field of Embodied Artificial Intelligence. It includes job boards, companies hiring, and resources for job seekers interested in roles such as robotics engineer, computer vision specialist, AI researcher, machine learning engineer, and data scientist.

lerobot

LeRobot is a state-of-the-art AI library for real-world robotics in PyTorch. It aims to provide models, datasets, and tools to lower the barrier to entry to robotics, focusing on imitation learning and reinforcement learning. LeRobot offers pretrained models, datasets with human-collected demonstrations, and simulation environments. It plans to support real-world robotics on affordable and capable robots. The library hosts pretrained models and datasets on the Hugging Face community page.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our overview of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.