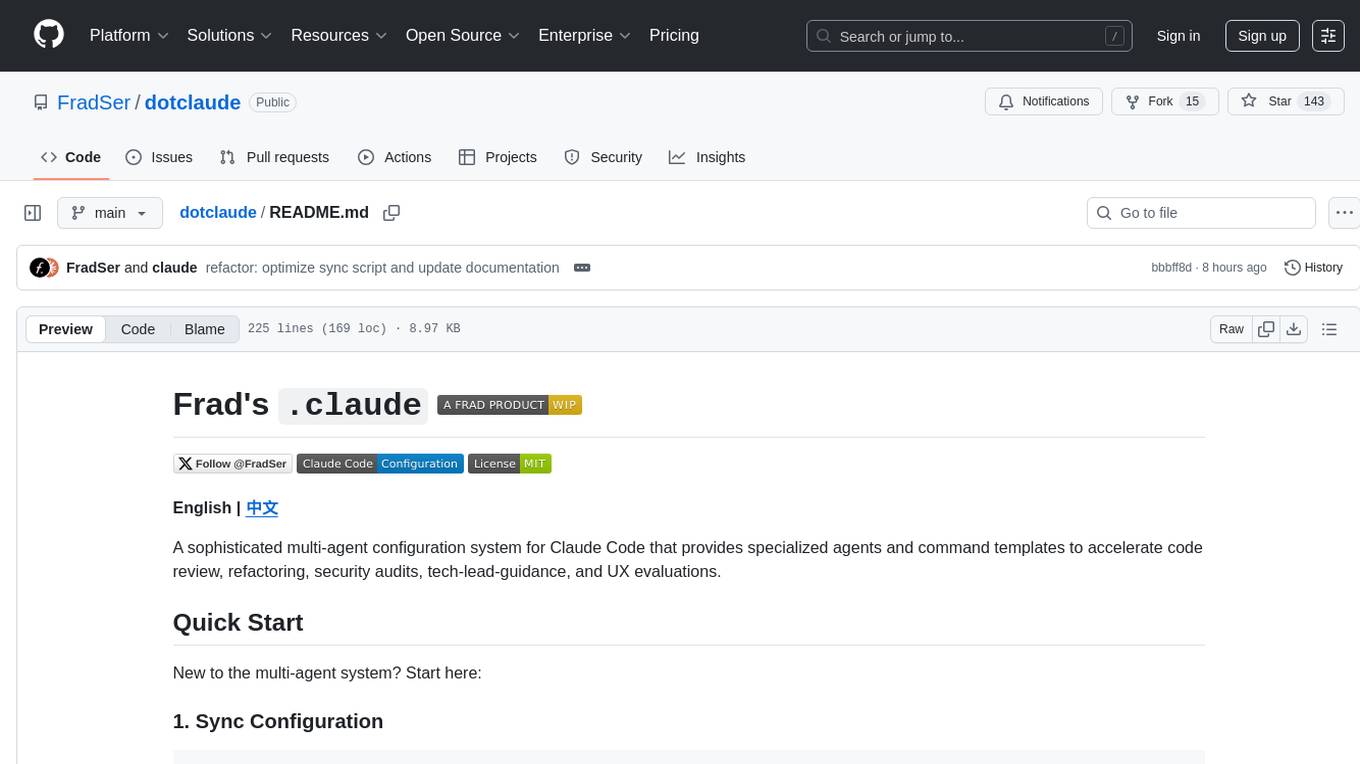

agentsys

AI writes code. This automates everything else · 13 plugins · 42 agents · 28 skills · for Claude Code, OpenCode, Codex.

Stars: 424

AgentSys is a modular runtime and orchestration system for AI agents, with 13 plugins, 42 agents, and 28 skills that compose into structured pipelines for software development. It handles task selection, branch management, code review, artifact cleanup, CI, PR comments, and deployment. The system runs on Claude Code, OpenCode, and Codex CLI, providing a functional software suite and runtime for AI agent orchestration.

README:

A modular runtime and orchestration system for AI agents.

Renamed from awesome-slash. The awesome- prefix implies a curated list of links, but this project is a functional software suite and runtime. Please update your installs:

npm install -g agentsys

13 plugins · 42 agents · 28 skills · 26k lines of lib code · 3,357 tests · 3 platforms

Commands · Installation · Website · Discussions

Built for Claude Code · Codex CLI · OpenCode

New skills, agents, and integrations ship constantly.

AI models can write code. That's not the hard part anymore. The hard part is everything around it — task selection, branch management, code review, artifact cleanup, CI, PR comments, deployment. AgentSys is the runtime that orchestrates agents to handle all of it — structured pipelines, gated phases, specialized agents, and persistent state that survives session boundaries.

Building custom skills, agents, hooks, or MCP tools? agnix is the CLI + LSP linter that catches config errors before they fail silently - real-time IDE validation, auto suggestions, auto-fix, and 155 rules for Cursor, Claude Code, Cline, Copilot, Codex, Windsurf, and more.

An agent orchestration system — 13 plugins, 42 agents, and 28 skills that compose into structured pipelines for software development.

Each agent has a single responsibility, a specific model assignment, and defined inputs/outputs. Pipelines enforce phase gates so agents can't skip steps. State persists across sessions so work survives interruptions.

The system runs on Claude Code, OpenCode, and Codex CLI. Install the plugins, get the runtime.

Code does code work. AI does AI work.

- Detection: regex, AST analysis, static analysis—fast, deterministic, no tokens wasted

- Judgment: LLM calls for synthesis, planning, review—where reasoning matters

- Result: 77% fewer tokens for /drift-detect vs multi-agent approaches, certainty-graded findings throughout

Certainty levels exist because not all findings are equal:

| Level | Meaning | Action |

|---|---|---|

| HIGH | Definitely a problem | Safe to auto-fix |

| MEDIUM | Probably a problem | Needs context |

| LOW | Might be a problem | Needs human judgment |

This came from testing on 1,000+ repositories.
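The certainty table above can be read as a routing rule. The following is a minimal sketch in Python (the project itself is JavaScript). The `Finding` class, the `ACTIONS` mapping, and the `route` function are hypothetical names used for illustration, not AgentSys APIs.

```python
from dataclasses import dataclass

# Hypothetical mapping from certainty level to the action listed in the table.
ACTIONS = {
    "HIGH": "auto-fix",
    "MEDIUM": "needs-context",
    "LOW": "human-review",
}


@dataclass
class Finding:
    file: str
    line: int
    message: str
    certainty: str  # "HIGH", "MEDIUM", or "LOW"


def route(findings):
    """Group findings by the action their certainty level allows."""
    buckets = {action: [] for action in ACTIONS.values()}
    for finding in findings:
        buckets[ACTIONS[finding.certainty]].append(finding)
    return buckets
```

Only the `auto-fix` bucket would be safe to change without a human in the loop; the other two buckets are reported rather than applied.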

| Command | What it does |
|---|---|
| /next-task | Task → exploration → plan → implementation → review → ship |
| /agnix | Lint agent configs - 155 rules for Skills, Memory, Hooks, MCP across 10+ AI tools |
| /ship | Branch → PR → CI → reviews addressed → merge → cleanup |
| /deslop | 3-phase detection pipeline, certainty-graded findings |
| /perf | 10-phase performance investigation with baselines and profiling |
| /drift-detect | AST-based plan vs code analysis, finds what's documented but not built |
| /audit-project | Multi-agent code review, iterates until issues resolved |
| /enhance | Analyzes prompts, agents, plugins, docs, hooks, skills |
| /repo-map | AST symbol and import mapping via ast-grep |
| /sync-docs | Finds outdated references, stale examples, missing CHANGELOG entries |
| /learn | Research any topic, gather online sources, create learning guide with RAG index |
| /consult | Consult another AI CLI tool for a second opinion. Use when you want to cross-check ideas, get alternative approaches, or validate decisions with Gemini, Codex, Claude, OpenCode, or Copilot. |
| /debate | Structured debate between two AI tools to stress-test ideas. Proposer/Challenger format with a verdict. |

Each command works standalone. Together, they compose into end-to-end pipelines.

28 skills included across the plugins:

| Category | Skills |
|---|---|
| Performance | perf:perf-analyzer, perf:perf-baseline-manager, perf:perf-benchmarker, perf:perf-code-paths, perf:perf-investigation-logger, perf:perf-profiler, perf:perf-theory-gatherer, perf:perf-theory-tester |
| Enhancement | enhance:enhance-agent-prompts, enhance:enhance-claude-memory, enhance:enhance-cross-file, enhance:enhance-docs, enhance:enhance-hooks, enhance:enhance-orchestrator, enhance:enhance-plugins, enhance:enhance-prompts, enhance:enhance-skills |
| Workflow | next-task:discover-tasks, next-task:orchestrate-review, next-task:validate-delivery |
| Cleanup | deslop:deslop, sync-docs:sync-docs |
| Analysis | debate:debate, drift-detect:drift-analysis, repo-map:repo-mapping |
| Productivity | consult:consult |
| Learning | learn:learn |
| Linting | agnix:agnix |

Skills are the reusable implementation units. Agents invoke skills; commands orchestrate agents. When you install a plugin, its skills become available to all agents in that session.

| Section | What's there |

|---|---|

| The Approach | Why it's built this way |

| Commands | All 13 commands overview |

| Skills | 28 skills across plugins |

| Command Details | Deep dive into each command |

| How Commands Work Together | Standalone vs integrated |

| Design Philosophy | The thinking behind the architecture |

| Installation | Get started |

| Research & Testing | What went into building this |

| Documentation | Links to detailed docs |

Purpose: Complete task-to-production automation.

What happens when you run it:

1. Policy Selection - Choose task source (GitHub issues, GitLab, local file), priority filter, stopping point

2. Task Discovery - Shows top 5 prioritized tasks, you pick one

3. Worktree Setup - Creates isolated branch and working directory

4. Exploration - Deep codebase analysis to understand context

5. Planning - Designs implementation approach

6. User Approval - You review and approve the plan (last human interaction)

7. Implementation - Executes the plan

8. Pre-Review - Runs deslop-agent and test-coverage-checker

9. Review Loop - Multi-agent review iterates until clean

10. Delivery Validation - Verifies tests pass, build passes, requirements met

11. Docs Update - Updates CHANGELOG and related documentation

12. Ship - Creates PR, monitors CI, addresses comments, merges

Phase 9 uses the orchestrate-review skill to spawn parallel reviewers (code quality, security, performance, test coverage) plus conditional specialists.

Agents involved:

| Agent | Model | Role |

|---|---|---|

| task-discoverer | sonnet | Finds and ranks tasks from your source |

| worktree-manager | haiku | Creates git worktrees and branches |

| exploration-agent | opus | Deep codebase analysis before planning |

| planning-agent | opus | Designs step-by-step implementation plan |

| implementation-agent | opus | Writes the actual code |

| test-coverage-checker | sonnet | Validates tests exist and are meaningful |

| delivery-validator | sonnet | Final checks before shipping |

| ci-monitor | haiku | Watches CI status |

| ci-fixer | sonnet | Fixes CI failures and review comments |

| simple-fixer | haiku | Executes mechanical edits |

Cross-plugin agent:

| Agent | Plugin | Role |

|---|---|---|

| deslop-agent | deslop | Removes AI artifacts before review |

| sync-docs-agent | sync-docs | Updates documentation |

Usage:

/next-task # Start new workflow

/next-task --resume # Resume interrupted workflow

/next-task --status # Check current state

/next-task --abort # Cancel and cleanup

Purpose: Lint agent configurations before they break your workflow. The first dedicated linter for AI agent configs.

agnix is a standalone open-source project that provides the validation engine. This plugin integrates it into your workflow.

The problem it solves:

Agent configurations are code. They affect behavior, security, and reliability. But unlike application code, they have no linting. You find out your SKILL.md is malformed when the agent fails. You discover your hooks have security issues when they're exploited. You realize your CLAUDE.md has conflicting rules when the AI behaves unexpectedly.

agnix catches these issues before they cause problems.

What it validates:

| Category | What It Checks |

|---|---|

| Structure | Required fields, valid YAML/JSON, proper frontmatter |

| Security | Prompt injection vectors, overpermissive tools, exposed secrets |

| Consistency | Conflicting rules, duplicate definitions, broken references |

| Best Practices | Tool restrictions, model selection, trigger phrase quality |

| Cross-Platform | Compatibility across Claude Code, Cursor, Copilot, Codex, OpenCode, Gemini CLI, Cline, and more |

155 validation rules (57 auto-fixable) derived from:

- Official tool specifications (Claude Code, Cursor, GitHub Copilot, Codex CLI, OpenCode, Gemini CLI, and more)

- Research papers on agent reliability and prompt injection

- Real-world testing across 500+ repositories

- Community-reported issues and edge cases

Supported files:

| File Type | Examples |
|---|---|
| Skills | SKILL.md, */SKILL.md |
| Memory | CLAUDE.md, AGENTS.md, .github/CLAUDE.md |
| Hooks | .claude/settings.json, hooks configuration |
| MCP | *.mcp.json, MCP server configs |
| Cursor | .cursor/rules/*.mdc, .cursorrules |
| Copilot | .github/copilot-instructions.md |

CI/CD Integration:

agnix outputs SARIF format for GitHub Code Scanning. Add it to your workflow:

- name: Lint agent configs
  run: agnix --format sarif > results.sarif
- uses: github/codeql-action/upload-sarif@v3
  with:
    sarif_file: results.sarif

Usage:

/agnix # Validate current project

/agnix --fix # Auto-fix fixable issues

/agnix --strict # Treat warnings as errors

/agnix --target claude-code # Only Claude Code rules

/agnix --format sarif # Output for GitHub Code Scanning

Agent: agnix-agent (sonnet model)

External tool: Requires agnix CLI

npm install -g agnix # Install via npm

# or

cargo install agnix-cli # Install via Cargo

# or

brew install agnix # Install via Homebrew (macOS)

Why use agnix:

- Catch config errors before they cause agent failures

- Enforce security best practices across your team

- Maintain consistency as your agent configs grow

- Integrate validation into CI/CD pipelines

- Support multiple AI tools from one linter

Purpose: Takes your current branch from "ready to commit" to "merged PR."

What happens when you run it:

- Pre-flight - Detects CI platform, deployment platform, branch strategy

- Commit - Stages and commits with generated message (if uncommitted changes)

- Push & PR - Pushes branch, creates pull request

- CI Monitor - Waits for CI, retries on transient failures

- Review Wait - Waits 3 minutes for auto-reviewers (Copilot, Claude, Gemini, Codex)

- Address Comments - Handles every comment from every reviewer

- Merge - Merges when all comments resolved and CI passes

- Deploy - Deploys and validates (if multi-branch workflow)

- Cleanup - Removes worktree, closes issue, deletes branch

Platform Detection:

| Type | Detected |

|---|---|

| CI | GitHub Actions, GitLab CI, CircleCI, Jenkins, Travis |

| Deploy | Railway, Vercel, Netlify, Fly.io, Render |

| Project | Node.js, Python, Rust, Go, Java |
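Detection of this kind can be done with plain file-existence checks, without any LLM call. The following is a minimal sketch in Python, assuming the conventional marker path for each CI system. The markers AgentSys actually checks are not listed in this README, and `CI_MARKERS` and `detect_ci` are hypothetical names.

```python
from pathlib import Path

# Conventional marker paths for each CI system. These are assumptions:
# the README does not list the markers AgentSys checks.
CI_MARKERS = {
    "GitHub Actions": ".github/workflows",
    "GitLab CI": ".gitlab-ci.yml",
    "CircleCI": ".circleci/config.yml",
    "Jenkins": "Jenkinsfile",
    "Travis": ".travis.yml",
}


def detect_ci(repo_root):
    """Return the CI systems whose marker path exists under repo_root."""
    root = Path(repo_root)
    return [name for name, marker in CI_MARKERS.items() if (root / marker).exists()]
```

The same lookup-table shape would extend to deploy platforms and project types, each keyed on its own config file.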

Review Comment Handling:

Every comment gets addressed. No exceptions. The workflow categorizes comments and handles each:

- Code fixes get implemented

- Style suggestions get applied

- Questions get answered

- False positives get explained

If something can't be fixed, the workflow replies explaining why and resolves the thread.

Usage:

/ship # Full workflow

/ship --dry-run # Preview without executing

/ship --strategy rebase # Use rebase instead of squash

Purpose: Finds AI slop (debug statements, placeholder text, verbose comments, TODOs) and removes it.

How detection works:

Three phases run in sequence:

- Phase 1: Regex Patterns (HIGH certainty)
  - console.log, print(), dbg!(), println!()
  - // TODO, // FIXME, // HACK
  - Empty catch blocks, disabled linters
  - Hardcoded secrets (API keys, tokens)

- Phase 2: Multi-Pass Analyzers (MEDIUM certainty)
  - Doc-to-code ratio (excessive comments)
  - Verbosity ratio (AI preambles)
  - Over-engineering patterns
  - Buzzword inflation
  - Dead code detection
  - Stub functions

- Phase 3: CLI Tools (LOW certainty, optional)
  - jscpd, madge, escomplex (JS/TS)
  - pylint, radon (Python)
  - golangci-lint (Go)
  - clippy (Rust)

Languages supported: JavaScript/TypeScript, Python, Rust, Go, Java
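Phase 1 is the cheapest of the three phases because it is a line-by-line regex scan. The following is a minimal sketch in Python of that idea, using a small hypothetical subset of patterns. The project's real rule set and output format are not shown in this README, so `PATTERNS` and `scan` are illustrative only.

```python
import re

# Hypothetical subset of Phase 1 patterns, not the project's actual rules.
PATTERNS = {
    "debug-statement": re.compile(r"\b(console\.log|print|println!|dbg!)\s*\("),
    "todo-comment": re.compile(r"(//|#)\s*(TODO|FIXME|HACK)\b"),
}


def scan(source):
    """Return (line_number, rule, certainty) for every regex hit."""
    findings = []
    for number, line in enumerate(source.splitlines(), start=1):
        for rule, pattern in PATTERNS.items():
            if pattern.search(line):
                # Regex hits are deterministic, so they are graded HIGH.
                findings.append((number, rule, "HIGH"))
    return findings
```

A scan like this costs no tokens, which is why the heavier analyzers and external CLI tools are reserved for later phases.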

Usage:

/deslop # Report only (safe)

/deslop apply # Fix HIGH certainty issues

/deslop apply src/ 10 # Fix 10 issues in src/

Thoroughness levels:

- quick - Phase 1 only (fastest)

- normal - Phase 1 + Phase 2 (default)

- deep - All phases if tools available

Purpose: Structured performance investigation with baselines, profiling, and evidence-backed decisions.

10-phase methodology (based on recorded real performance investigation sessions):

- Setup - Confirm scenario, success criteria, benchmark command

- Baseline - 60s minimum runs, PERF_METRICS markers required

- Breaking Point - Binary search to find failure threshold

- Constraints - CPU/memory limits, measure delta vs baseline

- Hypotheses - Generate up to 5 hypotheses with evidence and confidence

- Code Paths - Use repo-map to identify entrypoints and hot files

- Profiling - Language-specific tools (--cpu-prof, JFR, cProfile, pprof)

- Optimization - One change per experiment, 2+ validation passes

- Decision - Continue or stop based on measurable improvement

- Consolidation - Final baseline, evidence log, investigation complete
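The Breaking Point phase describes a binary search for the failure threshold. The following is a minimal sketch in Python, assuming failures are monotonic (once a load level fails, every higher level fails too). `find_breaking_point` and the `passes` callback, which would wrap one benchmark run, are hypothetical names.

```python
def find_breaking_point(passes, low, high):
    """Return the smallest value in [low, high] for which passes() is False.

    Assumes passes() is monotonic. Returns None if nothing in the range fails.
    """
    if passes(high):
        return None
    while low < high:
        mid = (low + high) // 2
        if passes(mid):
            low = mid + 1  # threshold lies above mid
        else:
            high = mid  # mid fails, so the threshold is mid or lower
    return low
```

Over a range of 1 to 500, this needs about nine benchmark runs after the initial check of the upper bound, instead of up to 500 for a linear sweep.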

Agents and skills:

| Component | Role |

|---|---|

| perf-orchestrator | Coordinates all phases |

| perf-theory-gatherer | Generates hypotheses from git history and code |

| perf-theory-tester | Validates hypotheses with controlled experiments |

| perf-analyzer | Synthesizes findings into recommendations |

| perf-code-paths | Maps entrypoints and likely hot paths |

| perf-investigation-logger | Structured evidence logging |

Usage:

/perf # Start new investigation

/perf --resume # Resume previous investigation

Phase flags (advanced):

/perf --phase baseline --command "npm run bench" --version v1.2.0

/perf --phase breaking-point --param-min 1 --param-max 500

/perf --phase constraints --cpu 1 --memory 1GB

/perf --phase hypotheses --hypotheses-file perf-hypotheses.json

/perf --phase optimization --change "reduce allocations"

/perf --phase decision --verdict stop --rationale "no measurable improvement"

Purpose: Compares your documentation and plans to what's actually in the code.

The problem it solves:

Your roadmap says "user authentication: done." But is it actually implemented? Your GitHub issue says "add dark mode." Is it already in the codebase? Plans drift from reality. This command finds the drift.

How it works:

- JavaScript collectors gather data (fast, token-efficient)
  - GitHub issues and their labels
  - Documentation files
  - Actual code exports and implementations

- Single Opus call performs semantic analysis
  - Matches concepts, not strings ("user auth" matches auth/, login.js, session.ts)
  - Identifies implemented but not documented
  - Identifies documented but not implemented
  - Finds stale issues that should be closed

Why this approach:

Multi-agent collection wastes tokens on coordination. JavaScript collectors are fast and deterministic. One well-prompted LLM call does the actual analysis. Result: 77% token reduction vs multi-agent approaches.

Tested on 1,000+ repositories before release.

Usage:

/drift-detect # Full analysis

/drift-detect --depth quick # Quick scan

Purpose: Multi-agent code review that iterates until issues are resolved.

What happens when you run it:

Up to 10 specialized role-based agents run based on your project:

| Agent | When Active | Focus Area |

|---|---|---|

| code-quality-reviewer | Always | Code quality, error handling |

| security-expert | Always | Vulnerabilities, auth, secrets |

| performance-engineer | Always | N+1 queries, memory, blocking ops |

| test-quality-guardian | Always | Coverage, edge cases, mocking |

| architecture-reviewer | If 50+ files | Modularity, patterns, SOLID |

| database-specialist | If DB detected | Queries, indexes, transactions |

| api-designer | If API detected | REST, errors, pagination |

| frontend-specialist | If frontend detected | Components, state, UX |

| backend-specialist | If backend detected | Services, domain logic |

| devops-reviewer | If CI/CD detected | Pipelines, configs, secrets |

Findings are collected and categorized by severity (critical/high/medium/low). All non-false-positive issues get fixed automatically. The loop repeats until no open issues remain.
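The activation rules in the table above amount to a small selection function. The following is a minimal sketch in Python. The boolean flags stand in for whatever project detection the command performs, and `select_reviewers` is a hypothetical name, not part of AgentSys.

```python
ALWAYS_ON = [
    "code-quality-reviewer",
    "security-expert",
    "performance-engineer",
    "test-quality-guardian",
]


def select_reviewers(file_count, has_db=False, has_api=False,
                     has_frontend=False, has_backend=False, has_cicd=False):
    """Pick reviewers using the activation rules from the table."""
    conditional = [
        ("architecture-reviewer", file_count >= 50),
        ("database-specialist", has_db),
        ("api-designer", has_api),
        ("frontend-specialist", has_frontend),
        ("backend-specialist", has_backend),
        ("devops-reviewer", has_cicd),
    ]
    return ALWAYS_ON + [name for name, active in conditional if active]
```

A small project with no detected database, API, frontend, backend, or CI/CD would get only the four always-on reviewers.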

Usage:

/audit-project # Full review

/audit-project --quick # Single pass

/audit-project --resume # Resume from queue file

/audit-project --domain security # Security focus only

/audit-project --recent # Only recent changes

Purpose: Analyzes your prompts, plugins, agents, docs, hooks, and skills for improvement opportunities.

Seven analyzers run in parallel:

| Analyzer | What it checks |

|---|---|

| plugin-enhancer | Plugin structure, MCP tool definitions, security patterns |

| agent-enhancer | Agent frontmatter, prompt quality |

| claudemd-enhancer | CLAUDE.md/AGENTS.md structure, token efficiency |

| docs-enhancer | Documentation readability, RAG optimization |

| prompt-enhancer | Prompt engineering patterns, clarity, examples |

| hooks-enhancer | Hook frontmatter, structure, safety |

| skills-enhancer | SKILL.md structure, trigger phrases |

Each finding includes:

- Certainty level (HIGH/MEDIUM/LOW)

- Specific location (file:line)

- What's wrong

- How to fix it

- Whether it can be auto-fixed

Auto-learning: Detects obvious false positives (pattern docs, workflow gates) and saves them for future runs. Reduces noise over time without manual suppression files.

Usage:

/enhance # Run all analyzers

/enhance --focus=agent # Just agent prompts

/enhance --apply # Apply HIGH certainty fixes

/enhance --show-suppressed # Show what's being filtered

/enhance --no-learn # Analyze but don't save false positives

Purpose: Builds an AST-based map of symbols and imports for fast repo analysis.

What it generates:

- Cached file→symbols map (exports, functions, classes)

- Import graph for dependency hints

Output is cached at {state-dir}/repo-map.json and exposed via the MCP repo_map tool.

Why it matters:

Tools like /drift-detect and planners can use the map instead of re-scanning the repo every time.
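To illustrate what a file→symbols map and import graph contain, here is a minimal sketch in Python. It swaps in Python's standard-library `ast` module for ast-grep and handles only Python sources. The output shape is an assumption, since the schema of repo-map.json is not documented here, and `map_module` and `build_repo_map` are hypothetical names.

```python
import ast


def map_module(source):
    """Return top-level symbols and imported module names for one Python file."""
    tree = ast.parse(source)
    symbols, imports = [], []
    for node in tree.body:
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            symbols.append(node.name)
        elif isinstance(node, ast.Import):
            imports.extend(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module:
            imports.append(node.module)
    return {"symbols": symbols, "imports": imports}


def build_repo_map(files):
    """files maps path -> source text; returns path -> {symbols, imports}."""
    return {path: map_module(text) for path, text in files.items()}
```

Once a map like this is cached, a consumer can answer "where is `login` defined" or "what does `auth.py` depend on" with a dictionary lookup rather than a fresh scan.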

Usage:

/repo-map init # First-time map generation

/repo-map update # Incremental update

/repo-map status # Check freshness

Required: ast-grep (sg) must be installed.

Purpose: Sync documentation with actual code changes—find outdated refs, update CHANGELOG, flag stale examples.

The problem it solves:

You refactor auth.js into auth/index.js. Your README still says import from './auth'. You rename a function. Three docs still reference the old name. You ship a feature. CHANGELOG doesn't mention it. Documentation drifts from code. This command finds the drift.

What it detects:

| Category | Examples |

|---|---|

| Broken references | Imports to moved/renamed files, deleted exports |

| Version mismatches | Doc says v2.0, package.json says v2.1 |

| Stale code examples | Import paths that no longer exist |

| Missing CHANGELOG | feat: and fix: commits without entries |

Auto-fixable vs flagged:

| Auto-fixable (apply mode) | Flagged for review |

|---|---|

| Version number updates | Removed exports referenced in docs |

| CHANGELOG entries for commits | Code examples needing context |

| Function renames | |

Usage:

/sync-docs # Check what docs need updates (safe)

/sync-docs apply # Apply safe fixes

/sync-docs report src/ # Check docs related to src/

/sync-docs --all # Full codebase scan

Purpose: Research any topic online and create a comprehensive learning guide with RAG-optimized indexes.

What it does:

- Progressive Discovery - Uses funnel approach (broad → specific → deep) to find quality sources

- Quality Scoring - Scores sources by authority, recency, depth, examples, uniqueness

- Just-In-Time Extraction - Fetches only high-scoring sources to save tokens

- Synthesis - Creates structured learning guide with examples and best practices

- RAG Index - Updates CLAUDE.md/AGENTS.md master index for future lookups

- Enhancement - Runs enhance:enhance-docs and enhance:enhance-prompts

Depth levels:

| Depth | Sources | Use Case |

|---|---|---|

| brief | 10 | Quick overview |

| medium | 20 | Default, balanced |

| deep | 40 | Comprehensive |

Output structure:

agent-knowledge/

CLAUDE.md # Master index (updated each run)

AGENTS.md # Index for OpenCode/Codex

recursion.md # Topic-specific guide

resources/

recursion-sources.json # Source metadata with quality scores

Usage:

/learn recursion # Default (20 sources)

/learn react hooks --depth=deep # Comprehensive (40 sources)

/learn kubernetes --depth=brief # Quick overview (10 sources)

/learn python async --no-enhance # Skip enhancement pass

Agent: learn-agent (opus model for research quality)

Purpose: Get a second opinion from another AI CLI tool without leaving your current session.

What it does:

- Tool Detection - Detects which AI CLI tools are installed (cross-platform)

- Interactive Picker - If no tool specified, shows only installed tools to choose from

- Effort Mapping - Maps effort levels to per-provider models and reasoning flags

- Execution - Runs the consultation with safe-mode defaults and 120s timeout

- Session Continuity - Saves session state for Claude and Gemini (supports --continue)

Supported tools:

| Tool | Default Model (high) | Reasoning Control |

|---|---|---|

| Claude | opus | max-turns |

| Gemini | gemini-3-pro-preview | built-in |

| Codex | gpt-5.3-codex | model_reasoning_effort |

| OpenCode | github-copilot/claude-opus-4-6 | --variant |

| Copilot | (default) | none |

Usage:

/consult "Is this the right approach?" --tool=gemini --effort=high

/consult "Review for performance issues" --tool=codex

/consult "Suggest alternatives" --tool=claude --effort=max

/consult "Continue from where we left off" --continue

/consult "Explain this error" --context=diff --tool=gemini

Agent: consult-agent (sonnet model for orchestration)

Standalone use:

/deslop apply # Just clean up your code

/sync-docs # Just check if docs need updates

/ship # Just ship this branch

/audit-project # Just review the codebase

Integrated workflow:

When you run /next-task, it orchestrates everything:

/next-task picks task → explores codebase → plans implementation

↓

implementation-agent writes code

↓

deslop-agent cleans AI artifacts

↓

Phase 9 review loop iterates until approved

↓

delivery-validator checks requirements

↓

sync-docs-agent syncs documentation

↓

[/ship](#ship) creates PR → monitors CI → merges

The workflow tracks state so you can resume from any point.

Architecture decisions and trade-offs

Frontier models write good code. That's solved. What's not solved:

- Context management - Models forget what they're doing mid-session

- Compaction amnesia - Long sessions get summarized, losing critical state

- Task drift - Without structure, agents wander from the actual goal

- Skipped steps - Agents skip reviews, tests, or cleanup when not enforced

- Token waste - Using LLM calls for work that static analysis can do faster

- Babysitting - Manually orchestrating each phase of development

- Repetitive requests - Asking for the same workflow every single session

1. One agent, one job, done extremely well

Same principle as good code: single responsibility. The exploration-agent explores. The implementation-agent implements. Phase 9 spawns multiple focused reviewers. No agent tries to do everything. Specialized agents, each with narrow scope and clear success criteria.

2. Pipeline with gates, not a monolith

Same principle as DevOps. Each step must pass before the next begins. Can't push before review. Can't merge before CI passes. Hooks enforce this—agents literally cannot skip phases.
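The gating idea can be shown in a few lines. The following is a minimal sketch in Python of a pipeline that refuses out-of-order phases. It does not reproduce how AgentSys hooks enforce gates, and `Pipeline` and `GateError` are hypothetical names.

```python
class GateError(Exception):
    """Raised when a phase is attempted before its predecessor has passed."""


class Pipeline:
    def __init__(self, phases):
        self.phases = list(phases)
        self.passed = []

    def complete(self, phase, ok):
        """Record a phase result; only the next phase in order is accepted."""
        if len(self.passed) == len(self.phases):
            raise GateError("pipeline already finished")
        expected = self.phases[len(self.passed)]
        if phase != expected:
            raise GateError(f"cannot run {phase!r} before {expected!r} passes")
        if not ok:
            raise GateError(f"{phase!r} failed; later phases stay locked")
        self.passed.append(phase)
```

With phases `["review", "push", "ci", "merge"]`, calling `complete("merge", ok=True)` right after review raises `GateError`, which is the "can't merge before CI passes" rule expressed as code.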

3. Tools do tool work, agents do agent work

If static analysis, regex, or a shell command can do it, don't ask an LLM. Pattern detection uses pre-indexed regex. File discovery uses glob. Platform detection uses file existence checks. The LLM only handles what requires judgment.

4. Agents don't need to know how tools work

The slop detector returns findings with certainty levels. The agent doesn't need to understand the three-phase pipeline, the regex patterns, or the analyzer heuristics. Good tool design means the consumer doesn't need implementation details.

5. Build tools where tools don't exist

Many tasks lack existing tools. JavaScript collectors for drift-detect. Multi-pass analyzers for slop detection. The result: agents receive structured data, not raw problems to figure out.

6. Research-backed prompt engineering

Documented techniques that measurably improve results:

- Progressive disclosure - Agents see only what's needed for the current step

- Structured output - JSON between delimiters, XML tags for sections

- Explicit constraints - What agents MUST NOT do matters as much as what they do

- Few-shot examples - Where patterns aren't obvious

- Tool calling over generation - Let the model use tools rather than generate tool-like output

7. Validate plan and results, not every step

Approve the plan. See the results. The middle is automated. One plan approval unlocks autonomous execution through implementation, review, cleanup, and shipping.

8. Right model for the task

Match model capability to task complexity:

- opus - Exploration, planning, implementation, review orchestration

- sonnet - Pattern matching, validation, discovery

- haiku - Git operations, file moves, CI polling

Quality compounds. Poor exploration → poor plan → poor implementation → review cycles. Early phases deserve the best model.

9. Persistent state survives sessions

Two JSON files track everything: what task, what phase. Sessions can die and resume. Multiple sessions run in parallel on different tasks using separate worktrees.
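Resumable state of this kind needs little more than a JSON file written safely. The following is a minimal sketch in Python, assuming a single state file with hypothetical `task` and `phase` keys. The names and layout of the two files AgentSys uses are not given in this README.

```python
import json
import os
import tempfile


def save_state(path, state):
    """Write state atomically so a crash mid-write cannot corrupt the file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as handle:
        json.dump(state, handle)
    os.replace(tmp, path)  # atomic rename within the same directory


def load_state(path, default=None):
    """Return the saved state, or default when no state file exists yet."""
    try:
        with open(path) as handle:
            return json.load(handle)
    except FileNotFoundError:
        return default
```

A new session would call `load_state` first and continue from the recorded phase if a state file is present.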

10. Delegate everything automatable

Agents don't just write code. They:

- Clean their own output (deslop-agent)

- Update documentation (sync-docs-agent)

- Fix CI failures (ci-fixer)

- Respond to review comments

- Check for plan drift (/drift-detect)

- Analyze their own prompts (/enhance)

If it can be specified, it can be delegated.

11. Orchestrator stays high-level

The main workflow orchestrator doesn't read files, search code, or write implementations. It launches specialized agents and receives their outputs. Keeps the orchestrator's context window available for coordination rather than filled with file contents.

12. Composable, not monolithic

Every command works standalone. /deslop cleans code without needing /next-task. /ship merges PRs without needing the full workflow. Pieces compose together, but each piece is useful on its own.

- Run multiple sessions - Different tasks in different worktrees, no interference

- Fast iteration - Approve plan, check results, repeat

- Stay in the interesting parts - Policy decisions, architecture choices, edge cases

- Minimal review burden - Most issues caught and fixed before you see the output

- No repetitive requests - The workflow you want, without asking each time

- Scale horizontally - More sessions, more tasks, same oversight level

/plugin marketplace add avifenesh/agentsys

/plugin install next-task@agentsys

/plugin install ship@agentsys

npm install -g agentsys && agentsys

Interactive installer for Claude Code, OpenCode, and Codex CLI.

# Non-interactive install

agentsys --tool claude # Single tool

agentsys --tools "claude,opencode" # Multiple tools

agentsys --development # Dev mode (bypasses marketplace)

Required:

- Git

- Node.js 18+

For GitHub workflows:

- GitHub CLI (gh) authenticated

For GitLab workflows:

- GitLab CLI (glab) authenticated

For /repo-map:

- ast-grep (sg) installed

For /agnix:

- agnix CLI installed (cargo install agnix-cli or brew install agnix)

Local diagnostics (optional):

npm run detect # Platform detection (CI, deploy, project type)

npm run verify # Tool availability + versions

The system is built on research, not guesswork.

Knowledge base (agent-docs/): 8,000 lines of curated documentation from Anthropic, OpenAI, Google, and Microsoft covering:

- Agent architecture and design patterns

- Prompt engineering techniques

- Function calling and tool use

- Context efficiency and token optimization

- Multi-agent systems and orchestration

- Instruction following reliability

Testing:

- 1,818 tests passing

- Drift-detect validated on 1,000+ repositories

- E2E workflow testing across all commands

- Cross-platform validation (Claude Code, OpenCode, Codex CLI)

Methodology:

- /perf investigation phases based on recorded real performance investigation sessions

- Certainty levels derived from pattern analysis across repositories

- Token optimization measured and validated (77% reduction in drift-detect)

| Topic | Link |

|---|---|

| Installation | docs/INSTALLATION.md |

| Cross-Platform Setup | docs/CROSS_PLATFORM.md |

| Usage Examples | docs/USAGE.md |

| Architecture | docs/ARCHITECTURE.md |

| Workflow | Link |

|---|---|

| /next-task Flow | docs/workflows/NEXT-TASK.md |

| /ship Flow | docs/workflows/SHIP.md |

| Topic | Link |

|---|---|

| Slop Patterns | docs/reference/SLOP-PATTERNS.md |

| Agent Reference | docs/reference/AGENTS.md |

- Issues: github.com/avifenesh/agentsys/issues

- Discussions: github.com/avifenesh/agentsys/discussions

MIT License | Made by Avi Fenesh

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for agentsys

Similar Open Source Tools

agentsys

AgentSys is a modular runtime and orchestration system for AI agents, with 13 plugins, 42 agents, and 28 skills that compose into structured pipelines for software development. It handles task selection, branch management, code review, artifact cleanup, CI, PR comments, and deployment. The system runs on Claude Code, OpenCode, and Codex CLI, providing a functional software suite and runtime for AI agent orchestration.

awesome-slash

Automate the entire development workflow beyond coding. awesome-slash provides production-ready skills, agents, and commands for managing tasks, branches, reviews, CI, and deployments. It automates the entire workflow, including task exploration, planning, implementation, review, and shipping. The tool includes 11 plugins, 40 agents, 26 skills, and 26k lines of lib code, with 3,357 tests and support for 3 platforms. It works with Claude Code, OpenCode, and Codex CLI, offering specialized capabilities through skills and agents.

empirica

Empirica is an epistemic self-awareness framework for AI agents to understand their knowledge boundaries. It introduces epistemic vectors to measure knowledge state and uncertainty, enabling honest communication. The tool emerged from 600+ real working sessions across various AI systems, providing cognitive infrastructure for distinguishing between confident knowledge and guessing. Empirica's 13 foundational vectors cover engagement, domain knowledge depth, execution capability, information access, understanding clarity, coherence, signal-to-noise ratio, information richness, working state, progress rate, task completion level, work significance, and explicit doubt tracking. It is applicable across industries like software development, research, healthcare, legal, education, and finance, aiding in tasks such as code review, hypothesis testing, diagnostic confidence, case analysis, learning assessment, and risk assessment.

rhesis

Rhesis is a comprehensive test management platform designed for Gen AI teams, offering tools to create, manage, and execute test cases for generative AI applications. It ensures the robustness, reliability, and compliance of AI systems through features like test set management, automated test generation, edge case discovery, compliance validation, integration capabilities, and performance tracking. The platform is open source, emphasizing community-driven development, transparency, extensible architecture, and democratizing AI safety. It includes components such as backend services, frontend applications, an SDK for developers, worker services, chatbot applications, and Polyphemus, which provides an uncensored LLM service. Rhesis enables users to address challenges unique to testing generative AI applications, such as non-deterministic outputs, hallucinations, edge cases, ethical concerns, and compliance requirements.

nothumanallowed

NotHumanAllowed is a security-first platform built exclusively for AI agents. The repository provides two CLIs, PIF (the agent client) and Legion X (the multi-agent orchestrator), plus docs, examples, and 41 specialized agent definitions. Every agent authenticates via Ed25519 cryptographic signatures, so no passwords or bearer tokens are used. Legion X orchestrates the 41 specialized AI agents through a 9-layer Geth Consensus pipeline, with a zero-knowledge protocol ensuring API keys stay local. The system learns from each session, with features like task decomposition, neural agent routing, multi-round deliberation, and weighted authority synthesis. The repository also includes CLI commands for orchestration, agent management, tasks, sandbox execution, Geth Consensus, knowledge search, configuration, system health checks, and more.

claudia

Claudia is a personal AI assistant that tracks relationships and commitments, helping users remember important details and connections across their network. It catches commitments, remembers context, warns before things slip, and shows the source of information. Claudia syncs memory to an Obsidian vault, ensuring data privacy by running fully locally. Users can try a demo mode with pre-populated data or install Claudia for personalized workspace creation. It is suitable for roles like consultants, executives, founders, solo professionals, and creators, offering features like client folders, deliverable tracking, leadership tools, and collaboration tracking.

airunner

AI Runner is a multi-modal AI interface that allows users to run open-source large language models and AI image generators on their own hardware. The tool provides features such as voice-based chatbot conversations, text-to-speech, speech-to-text, vision-to-text, text generation with large language models, image generation capabilities, image manipulation tools, utility functions, and more. It aims to provide a stable and user-friendly experience with security updates, a new UI, and a streamlined installation process. The application is designed to run offline on users' hardware without relying on a web server, offering a smooth and responsive user experience.

Evaluator

NeMo Evaluator SDK is an open-source platform for robust, reproducible, and scalable evaluation of Large Language Models. It enables running hundreds of benchmarks across popular evaluation harnesses against any OpenAI-compatible model API. The platform ensures auditable and trustworthy results by executing evaluations in open-source Docker containers. NeMo Evaluator SDK is built on four core principles: Reproducibility by Default, Scale Anywhere, State-of-the-Art Benchmarking, and Extensible and Customizable.

explain-openclaw

Explain OpenClaw is a comprehensive documentation repository for the OpenClaw framework, a self-hosted AI assistant platform. It covers various aspects such as plain English explanations, technical architecture, deployment scenarios, privacy and safety measures, security audits, worst-case security scenarios, optimizations, and AI model comparisons. The repository serves as a living knowledge base with beginner-friendly explanations and detailed technical insights for contributors.

orchestkit

OrchestKit is a powerful and flexible orchestration tool designed to streamline and automate complex workflows. It provides a user-friendly interface for defining and managing orchestration tasks, allowing users to easily create, schedule, and monitor workflows. With support for various integrations and plugins, OrchestKit enables seamless automation of tasks across different systems and applications. Whether you are a developer looking to automate deployment processes or a system administrator managing complex IT operations, OrchestKit offers a comprehensive solution to simplify and optimize your workflow management.

pocketpaw

PocketPaw is a lightweight and user-friendly tool designed for managing and organizing your digital assets. It provides a simple interface for users to easily categorize, tag, and search for files across different platforms. With PocketPaw, you can efficiently organize your photos, documents, and other files in a centralized location, making it easier to access and share them. Whether you are a student looking to organize your study materials, a professional managing project files, or a casual user wanting to declutter your digital space, PocketPaw is the perfect solution for all your file management needs.

netdata

Netdata is an open-source, real-time infrastructure monitoring platform that provides instant insights, zero-configuration deployment, ML-powered anomaly detection, efficient monitoring with minimal resource usage, and secure, distributed data storage. It offers real-time, per-second updates and clear insights at a glance. Netdata originated from an effort to address the limitations of existing monitoring tools, which led to a fundamental shift in infrastructure monitoring. It is recognized as the most energy-efficient tool for monitoring Docker-based systems according to a study by the University of Amsterdam.

deepfabric

DeepFabric is a CLI tool and SDK designed for researchers and developers to generate high-quality synthetic datasets at scale using large language models. It leverages a graph and tree-based architecture to create diverse and domain-specific datasets while minimizing redundancy. The tool supports generating Chain of Thought datasets for step-by-step reasoning tasks and offers multi-provider support for using different language models. DeepFabric also allows for automatic dataset upload to Hugging Face Hub and uses YAML configuration files for flexibility in dataset generation.

agentfield

AgentField is an open-source control plane designed for autonomous AI agents, providing infrastructure for agents that go beyond chatbots and make decisions. It offers features like scaling infrastructure, routing and discovery, async execution, durable state, observability, and trust infrastructure with cryptographic identity, verifiable credentials, and policy enforcement. Users can write agents in Python, Go, or TypeScript, or interact via REST APIs. The tool enables the creation of AI backends that reason autonomously within defined boundaries, offering predictability and flexibility. AgentField aims to bridge the gap between AI frameworks and production-ready infrastructure for AI agents.

openakita

OpenAkita is a self-evolving AI Agent framework that autonomously learns new skills, performs daily self-checks and repairs, accumulates experience from task execution, and persists until the task is done. It auto-generates skills, installs dependencies, learns from mistakes, and remembers preferences. The framework is standards-based, multi-platform, and provides a Setup Center GUI for intuitive installation and configuration. It features self-learning and evolution mechanisms, a Ralph Wiggum Mode for persistent execution, multi-LLM endpoints, multi-platform IM support, desktop automation, multi-agent architecture, scheduled tasks, identity and memory management, a tool system, and a guided wizard for setup.

dotclaude

dotclaude is a multi-agent configuration system for Claude Code that provides specialized agents and command templates to accelerate code review, refactoring, security audits, tech lead guidance, and UX evaluations. Its documentation covers essential commands, directory structure, an overview of the agent system, command templates, usage patterns, collaboration philosophy, sync management, advanced usage guidelines, and an FAQ. The tool aims to streamline development workflows, enhance code quality, and facilitate collaboration between developers and AI agents.

For similar tasks

agentsys

AgentSys is a modular runtime and orchestration system for AI agents, with 13 plugins, 42 agents, and 28 skills that compose into structured pipelines for software development. It handles task selection, branch management, code review, artifact cleanup, CI, PR comments, and deployment. The system runs on Claude Code, OpenCode, and Codex CLI, providing a functional software suite and runtime for AI agent orchestration.

chat-with-code

Chat-with-code is a codebase chatbot that lets users query their codebase through an OpenAI language model. It provides a chat interface where users can ask questions about their code. The tool clones, chunks, and embeds the codebase, allowing for natural language interactions. It is designed to help users explore and understand their codebase more intuitively.

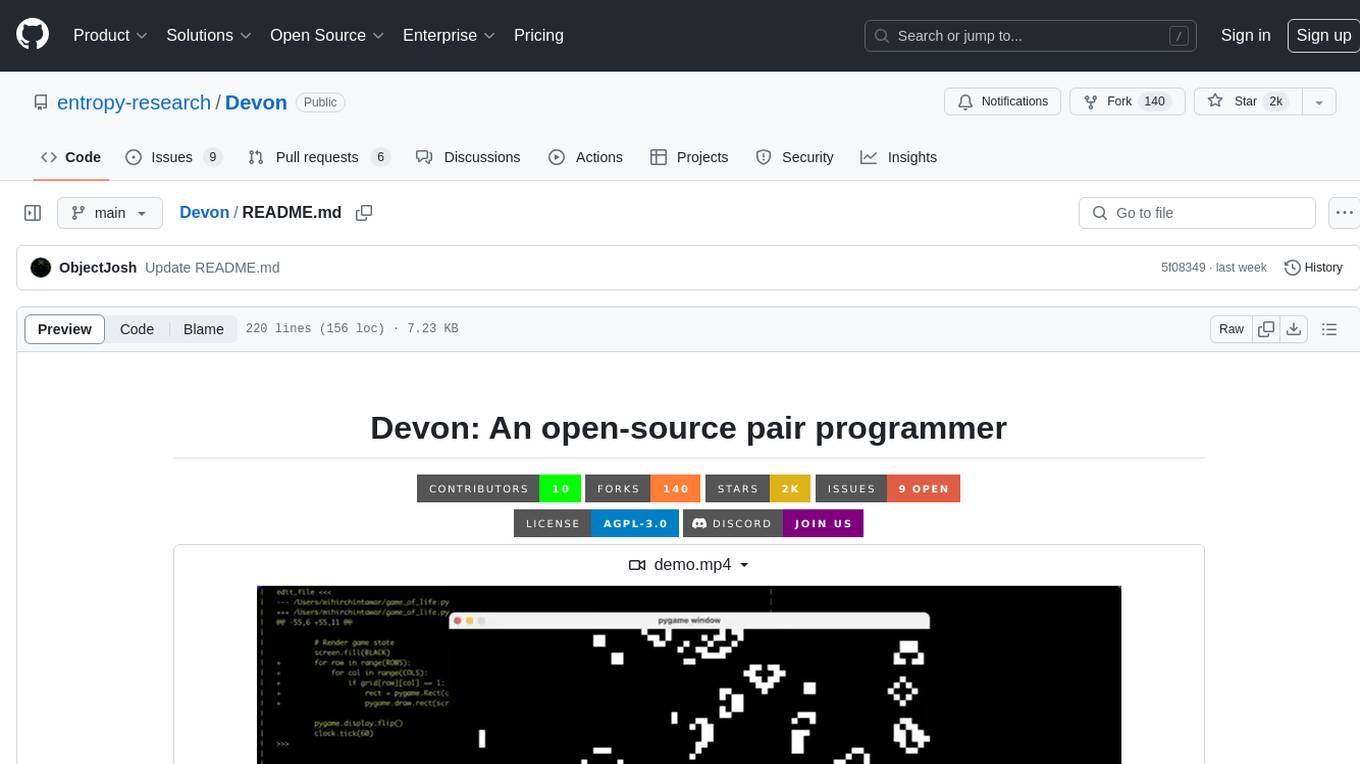

Devon

Devon is an open-source pair programmer designed to facilitate collaborative coding sessions. It provides features such as multi-file editing, codebase exploration, test writing, bug fixing, and architecture exploration. The tool supports Anthropic, OpenAI, and Groq APIs, with plans to add more models in the future. Devon is community-driven, with ongoing development goals including multi-model support, a plugin system for tool builders, a self-hostable Electron app, and setting SOTA on SWE-bench Lite. Users can contribute to the project by developing core functionality, conducting research on agent performance, providing feedback, and testing the tool.

sage

Sage is a tool that allows users to chat with any codebase, providing a chat interface for code understanding and integration. It simplifies the process of learning how a codebase works by offering heavily documented answers sourced directly from the code. Users can set up Sage locally or on the cloud with minimal effort. The tool is designed to be easily customizable, allowing users to swap components of the pipeline and improve the algorithms powering code understanding and generation.

brokk

Brokk is a code assistant designed to understand code semantically, allowing LLMs to work effectively on large codebases. It offers features like agentic search, summarizing related classes, parsing stack traces, adding source for usages, and autonomously fixing errors. Users can interact with Brokk through different panels and commands, enabling them to manipulate context, ask questions, search codebase, run shell commands, and more. Brokk helps with tasks like debugging regressions, exploring codebase, AI-powered refactoring, and working with dependencies. It is particularly useful for making complex, multi-file edits with o1pro.

AI-Governor-Framework

The AI Governor Framework is a system designed to govern AI assistants in coding projects by providing rules and workflows to ensure consistency, respect architectural decisions, and enforce coding standards. It leverages Context Engineering to provide the AI with the right information at the right time, using an In-Repo approach to keep governance rules and architectural context directly inside the repository. The framework consists of two core components: The Governance Engine for passive rules and the Operator's Playbook for active protocols. It follows a 4-step Operator's Playbook to move features from idea to production with clarity and control.

awesome-slash

awesome-slash automates the development workflow beyond coding. It provides production-ready skills, agents, and commands for managing tasks, branches, reviews, CI, and deployments, covering task exploration, planning, implementation, review, and shipping. The tool includes 11 plugins, 40 agents, 26 skills, and 26k lines of lib code, with 3,357 tests and support for 3 platforms. It works with Claude Code, OpenCode, and Codex CLI, offering specialized capabilities through skills and agents.

smithers

Smithers is a tool for declarative AI workflow orchestration using React components. It allows users to define complex multi-agent workflows as component trees, ensuring composability, durability, and error handling. The tool leverages React's re-rendering mechanism to persist outputs to SQLite, enabling crashed workflows to resume seamlessly. Users can define schemas for task outputs, create workflow instances, define agents, build workflow trees, and run workflows programmatically or via CLI. Smithers supports components for pipeline stages, structured output validation with Zod, MDX prompts, validation loops with Ralph, dynamic branching, and various built-in tools like read, edit, bash, grep, and write. The tool follows a clear workflow execution process involving defining, rendering, executing, re-rendering, and repeating tasks until completion, all while storing task results in SQLite for fault tolerance.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per-user and per-organization basis
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.