awesome-slash

AI writes code. This automates everything else · 11 plugins · 40 agents · 26 skills · for Claude Code, OpenCode, Codex.

Stars: 363

Automate the entire development workflow beyond coding. awesome-slash provides production-ready skills, agents, and commands for managing tasks, branches, reviews, CI, and deployments. It automates the entire workflow, including task exploration, planning, implementation, review, and shipping. The tool includes 11 plugins, 40 agents, 26 skills, and 26k lines of lib code, with 3,357 tests and support for 3 platforms. It works with Claude Code, OpenCode, and Codex CLI, offering specialized capabilities through skills and agents.

README:

Automate the entire dev workflow. Not just the coding.

11 plugins · 40 agents · 26 skills · 26k lines of lib code · 3,357 tests · 3 platforms


AI models can write code. That's not the hard part anymore. The hard part is everything else—picking what to work on, managing branches, reviewing output, cleaning up artifacts, handling CI, addressing comments, deploying. awesome-slash automates the entire workflow, not just the coding.

Building custom skills, agents, hooks, or MCP tools? agnix is the CLI + LSP linter that catches config errors before they cause silent failures: real-time IDE validation, auto-suggestions, auto-fix, and 155 rules for Cursor, Claude Code, Cline, Copilot, Codex, Windsurf, and more.

Production-ready skills, agents, and commands for Claude Code, OpenCode, and Codex CLI.

Install the plugins → get the skills → your agents become more capable. Each piece was built to work with the others. The whole system is E2E tested.

From messy project to clean codebase. From drifted plan to focused execution. From task to merged PR.

Works with: Claude Code · OpenCode · Codex CLI

Code does code work. AI does AI work.

- Detection: regex, AST analysis, static analysis—fast, deterministic, no tokens wasted

- Judgment: LLM calls for synthesis, planning, review—where reasoning matters

- Result: 77% fewer tokens for /drift-detect vs multi-agent approaches, certainty-graded findings throughout

Certainty levels exist because not all findings are equal:

| Level | Meaning | Action |
|---|---|---|
| HIGH | Definitely a problem | Safe to auto-fix |
| MEDIUM | Probably a problem | Needs context |
| LOW | Might be a problem | Needs human judgment |

This came from testing on 1,000+ repositories.
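A minimal sketch of how those levels can drive behavior. It assumes a hypothetical finding shape ({ rule, file, line, certainty }) and a made-up routeFindings helper, so it is not the plugin's internal API. Only HIGH findings land in the auto-fix bucket; everything else is surfaced for context or a human decision.

```javascript
// Hypothetical finding shape: { rule, file, line, certainty: 'HIGH' | 'MEDIUM' | 'LOW' }
const ACTION_BY_CERTAINTY = {
  HIGH: 'auto-fix', // definitely a problem: safe to fix without asking
  MEDIUM: 'needs-context', // probably a problem: show it with surrounding context
  LOW: 'human-review', // might be a problem: leave the call to a person
};

// Groups findings into buckets keyed by the action their certainty allows.
function routeFindings(findings) {
  const routed = { 'auto-fix': [], 'needs-context': [], 'human-review': [] };
  for (const finding of findings) {
    const action = ACTION_BY_CERTAINTY[finding.certainty];
    if (!action) throw new Error(`Unknown certainty: ${finding.certainty}`);
    routed[action].push(finding);
  }
  return routed;
}

const routed = routeFindings([
  { rule: 'console-log', file: 'src/a.js', line: 3, certainty: 'HIGH' },
  { rule: 'verbose-comment', file: 'src/b.js', line: 10, certainty: 'MEDIUM' },
  { rule: 'over-engineering', file: 'src/c.js', line: 42, certainty: 'LOW' },
]);
console.log(routed['auto-fix'].map((f) => f.rule)); // [ 'console-log' ]
```

Keeping the mapping in one table means a stricter policy (for example, never auto-fixing at all) is a one-line change.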

| Command | What it does |
|---|---|
| /next-task | Task → exploration → plan → implementation → review → ship |
| /agnix | Lint agent configs - 155 rules for Skills, Memory, Hooks, MCP across 10+ AI tools |
| /ship | Branch → PR → CI → reviews addressed → merge → cleanup |
| /deslop | 3-phase detection pipeline, certainty-graded findings |
| /perf | 10-phase performance investigation with baselines and profiling |
| /drift-detect | AST-based plan vs code analysis, finds what's documented but not built |
| /audit-project | Multi-agent code review, iterates until issues resolved |
| /enhance | Analyzes prompts, agents, plugins, docs, hooks, skills |
| /repo-map | AST symbol and import mapping via ast-grep |
| /sync-docs | Finds outdated references, stale examples, missing CHANGELOG entries |
| /learn | Research any topic, gather online sources, create learning guide with RAG index |

Each command works standalone. Together, they form complete workflows.

26 skills included across the plugins:

| Category | Skills |
|---|---|
| Performance | perf:perf-analyzer, perf:perf-baseline-manager, perf:perf-benchmarker, perf:perf-code-paths, perf:perf-investigation-logger, perf:perf-profiler, perf:perf-theory-gatherer, perf:perf-theory-tester |
| Enhancement | enhance:enhance-agent-prompts, enhance:enhance-claude-memory, enhance:enhance-cross-file, enhance:enhance-docs, enhance:enhance-hooks, enhance:enhance-orchestrator, enhance:enhance-plugins, enhance:enhance-prompts, enhance:enhance-skills |
| Workflow | next-task:discover-tasks, next-task:orchestrate-review, next-task:validate-delivery |
| Cleanup | deslop:deslop, sync-docs:sync-docs |
| Analysis | drift-detect:drift-analysis, repo-map:repo-mapping |
| Learning | learn:learn |
| Linting | agnix:agnix |

Skills give your agents specialized capabilities. When you install a plugin, its skills become available to all agents in that session.

| Section | What's there |
|---|---|
| The Approach | Why it's built this way |
| Commands | All 11 commands overview |
| Skills | 26 skills across plugins |
| Command Details | Deep dive into each command |
| How Commands Work Together | Standalone vs integrated |
| Design Philosophy | The thinking behind the architecture |
| Installation | Get started |
| Research & Testing | What went into building this |
| Documentation | Links to detailed docs |

Purpose of /next-task: Complete task-to-production automation.

What happens when you run it:

1. Policy Selection - Choose task source (GitHub issues, GitLab, local file), priority filter, stopping point
2. Task Discovery - Shows top 5 prioritized tasks, you pick one
3. Worktree Setup - Creates isolated branch and working directory
4. Exploration - Deep codebase analysis to understand context
5. Planning - Designs implementation approach
6. User Approval - You review and approve the plan (last human interaction)
7. Implementation - Executes the plan
8. Pre-Review - Runs deslop-agent and test-coverage-checker
9. Review Loop - Multi-agent review iterates until clean
10. Delivery Validation - Verifies tests pass, build passes, requirements met
11. Docs Update - Updates CHANGELOG and related documentation
12. Ship - Creates PR, monitors CI, addresses comments, merges

Phase 9 uses the orchestrate-review skill to spawn parallel reviewers (code quality, security, performance, test coverage) plus conditional specialists.

Agents involved:

| Agent | Model | Role |
|---|---|---|
| task-discoverer | sonnet | Finds and ranks tasks from your source |
| worktree-manager | haiku | Creates git worktrees and branches |
| exploration-agent | opus | Deep codebase analysis before planning |
| planning-agent | opus | Designs step-by-step implementation plan |
| implementation-agent | opus | Writes the actual code |
| test-coverage-checker | sonnet | Validates tests exist and are meaningful |
| delivery-validator | sonnet | Final checks before shipping |
| ci-monitor | haiku | Watches CI status |
| ci-fixer | sonnet | Fixes CI failures and review comments |
| simple-fixer | haiku | Executes mechanical edits |

Cross-plugin agents:

| Agent | Plugin | Role |
|---|---|---|
| deslop-agent | deslop | Removes AI artifacts before review |
| sync-docs-agent | sync-docs | Updates documentation |

Usage:

/next-task # Start new workflow

/next-task --resume # Resume interrupted workflow

/next-task --status # Check current state

/next-task --abort # Cancel and clean up

Purpose of /agnix: Lint agent configurations before they break your workflow. The first dedicated linter for AI agent configs.

agnix is a standalone open-source project that provides the validation engine. This plugin integrates it into your workflow.

The problem it solves:

Agent configurations are code. They affect behavior, security, and reliability. But unlike application code, they have no linting. You find out your SKILL.md is malformed when the agent fails. You discover your hooks have security issues when they're exploited. You realize your CLAUDE.md has conflicting rules when the AI behaves unexpectedly.

agnix catches these issues before they cause problems.

What it validates:

| Category | What It Checks |
|---|---|
| Structure | Required fields, valid YAML/JSON, proper frontmatter |
| Security | Prompt injection vectors, overpermissive tools, exposed secrets |
| Consistency | Conflicting rules, duplicate definitions, broken references |
| Best Practices | Tool restrictions, model selection, trigger phrase quality |
| Cross-Platform | Compatibility across Claude Code, Cursor, Copilot, Codex, OpenCode, Gemini CLI, Cline, and more |
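To make the Structure category concrete, here is a rough sketch of a single check on a SKILL.md file. It is not agnix's implementation. It assumes the frontmatter is a leading block between --- lines and that name and description are the required keys, and it parses only flat key: value lines instead of full YAML.

```javascript
// Assumed required frontmatter keys for a skill file.
const REQUIRED_KEYS = ['name', 'description'];

// Returns a list of human-readable errors; an empty list means the check passed.
function checkSkillFrontmatter(text) {
  const lines = text.split(/\r?\n/);
  if (lines[0].trim() !== '---') {
    return ['missing frontmatter: file must start with ---'];
  }
  const end = lines.findIndex((line, i) => i > 0 && line.trim() === '---');
  if (end === -1) return ['unterminated frontmatter: no closing ---'];

  // Naive flat parse: only `key: value` lines, no nesting, lists, or quoting rules.
  const fields = {};
  for (const line of lines.slice(1, end)) {
    const match = /^([A-Za-z0-9_-]+):\s*(.*)$/.exec(line);
    if (match) fields[match[1]] = match[2].trim();
  }

  const errors = [];
  for (const key of REQUIRED_KEYS) {
    if (!fields[key]) errors.push(`missing required field: ${key}`);
  }
  return errors;
}

const ok = '---\nname: deslop\ndescription: Remove AI slop\n---\n# Body';
const bad = '---\nname: deslop\n---\n# Body';
console.log(checkSkillFrontmatter(ok)); // []
console.log(checkSkillFrontmatter(bad)); // [ 'missing required field: description' ]
```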

155 validation rules (57 auto-fixable) derived from:

- Official tool specifications (Claude Code, Cursor, GitHub Copilot, Codex CLI, OpenCode, Gemini CLI, and more)

- Research papers on agent reliability and prompt injection

- Real-world testing across 500+ repositories

- Community-reported issues and edge cases

Supported files:

| File Type | Examples |
|---|---|
| Skills | SKILL.md, */SKILL.md |
| Memory | CLAUDE.md, AGENTS.md, .github/CLAUDE.md |
| Hooks | .claude/settings.json, hooks configuration |
| MCP | *.mcp.json, MCP server configs |
| Cursor | .cursor/rules/*.mdc, .cursorrules |
| Copilot | .github/copilot-instructions.md |

CI/CD Integration:

agnix outputs SARIF format for GitHub Code Scanning. Add it to your workflow:

- name: Lint agent configs
  run: agnix --format sarif > results.sarif
- uses: github/codeql-action/upload-sarif@v3
  with:
    sarif_file: results.sarif

Usage:

/agnix # Validate current project

/agnix --fix # Auto-fix fixable issues

/agnix --strict # Treat warnings as errors

/agnix --target claude-code # Only Claude Code rules

/agnix --format sarif # Output for GitHub Code Scanning

Agent: agnix-agent (sonnet model)

External tool: Requires agnix CLI

npm install -g agnix # Install via npm

# or

cargo install agnix-cli # Install via Cargo

# or

brew install agnix # Install via Homebrew (macOS)

Why use agnix:

- Catch config errors before they cause agent failures

- Enforce security best practices across your team

- Maintain consistency as your agent configs grow

- Integrate validation into CI/CD pipelines

- Support multiple AI tools from one linter

Purpose: Takes your current branch from "ready to commit" to "merged PR."

What happens when you run it:

- Pre-flight - Detects CI platform, deployment platform, branch strategy

- Commit - Stages and commits with a generated message (if there are uncommitted changes)

- Push & PR - Pushes branch, creates pull request

- CI Monitor - Waits for CI, retries on transient failures

- Review Wait - Waits 3 minutes for auto-reviewers (Copilot, Claude, Gemini, Codex)

- Address Comments - Handles every comment from every reviewer

- Merge - Merges when all comments resolved and CI passes

- Deploy - Deploys and validates (if multi-branch workflow)

- Cleanup - Removes worktree, closes issue, deletes branch
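The "retries on transient failures" part of CI Monitor can be sketched as a loop that re-runs only when a failure looks infrastructural. Everything here is hypothetical: attempt stands in for triggering or re-running a CI job, and the transient-error patterns are illustrative examples, not the plugin's list.

```javascript
// Illustrative patterns for failures worth retrying (network blips, 502/503/504).
const TRANSIENT_PATTERNS = [/ETIMEDOUT/, /ECONNRESET/, /\b50[234]\b/];

function isTransient(log) {
  return TRANSIENT_PATTERNS.some((re) => re.test(log));
}

// `attempt(n)` is a hypothetical callback that runs (or re-runs) the CI job
// and returns { status: 'success' | 'failure', log }.
function runCiWithRetries(attempt, maxRetries = 2) {
  let result;
  let runs = 0;
  while (runs <= maxRetries) {
    result = attempt(runs);
    runs += 1;
    // Stop on success, or on a real failure (failing test, lint error), which
    // should go to the fix step instead of being retried.
    if (result.status === 'success' || !isTransient(result.log)) break;
  }
  return { ...result, runs };
}

// Fails once with a connection reset, then passes.
const flaky = (n) =>
  n === 0 ? { status: 'failure', log: 'npm ERR! ECONNRESET' } : { status: 'success', log: '' };
console.log(runCiWithRetries(flaky)); // { status: 'success', log: '', runs: 2 }
```

Separating "transient" from "real" failures is what keeps retries cheap: a genuinely failing test is never re-run, it is handed to the fixing step.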

Platform Detection:

| Type | Detected |
|---|---|
| CI | GitHub Actions, GitLab CI, CircleCI, Jenkins, Travis |
| Deploy | Railway, Vercel, Netlify, Fly.io, Render |
| Project | Node.js, Python, Rust, Go, Java |
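Detection like this needs no LLM call; checking for well-known marker files is enough for most repositories. The sketch below assumes common conventions (for example .gitlab-ci.yml for GitLab CI, fly.toml for Fly.io, go.mod for Go). The plugin's real detection rules may use different or additional files.

```javascript
const fs = require('fs');
const path = require('path');

// Illustrative marker files per category; the first match in each list wins.
const MARKERS = {
  ci: [
    ['.github/workflows', 'GitHub Actions'],
    ['.gitlab-ci.yml', 'GitLab CI'],
    ['.circleci/config.yml', 'CircleCI'],
    ['Jenkinsfile', 'Jenkins'],
    ['.travis.yml', 'Travis'],
  ],
  deploy: [
    ['railway.json', 'Railway'],
    ['vercel.json', 'Vercel'],
    ['netlify.toml', 'Netlify'],
    ['fly.toml', 'Fly.io'],
    ['render.yaml', 'Render'],
  ],
  project: [
    ['package.json', 'Node.js'],
    ['pyproject.toml', 'Python'],
    ['requirements.txt', 'Python'],
    ['Cargo.toml', 'Rust'],
    ['go.mod', 'Go'],
    ['pom.xml', 'Java'],
    ['build.gradle', 'Java'],
  ],
};

// `exists` is injectable so the logic can be exercised without touching disk.
function detectPlatforms(root, exists = (p) => fs.existsSync(p)) {
  const result = {};
  for (const [category, markers] of Object.entries(MARKERS)) {
    const hit = markers.find(([marker]) => exists(path.join(root, marker)));
    result[category] = hit ? hit[1] : null;
  }
  return result;
}

console.log(detectPlatforms(process.cwd()));
```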

Review Comment Handling:

Every comment gets addressed. No exceptions. The workflow categorizes comments and handles each:

- Code fixes get implemented

- Style suggestions get applied

- Questions get answered

- False positives get explained

If something can't be fixed, the workflow replies explaining why and resolves the thread.

Usage:

/ship # Full workflow

/ship --dry-run # Preview without executing

/ship --strategy rebase # Use rebase instead of squash

Purpose of /deslop: Finds AI slop (debug statements, placeholder text, verbose comments, TODOs) and removes it.

How detection works:

Three phases run in sequence:

- Phase 1: Regex Patterns (HIGH certainty)
  - console.log, print(), dbg!(), println!()
  - // TODO, // FIXME, // HACK
  - Empty catch blocks, disabled linters
  - Hardcoded secrets (API keys, tokens)
- Phase 2: Multi-Pass Analyzers (MEDIUM certainty)
  - Doc-to-code ratio (excessive comments)
  - Verbosity ratio (AI preambles)
  - Over-engineering patterns
  - Buzzword inflation
  - Dead code detection
  - Stub functions
- Phase 3: CLI Tools (LOW certainty, optional)
  - jscpd, madge, escomplex (JS/TS)
  - pylint, radon (Python)
  - golangci-lint (Go)
  - clippy (Rust)

Languages supported: JavaScript/TypeScript, Python, Rust, Go, Java
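A rough sketch of the Phase 1 idea: cheap line-by-line regexes whose matches are reported at HIGH certainty. The pattern list and rule names are illustrative assumptions and much smaller than the real set. The secret check, for instance, only knows the AWS access key ID format.

```javascript
// Illustrative Phase 1 rules; every match is reported at HIGH certainty.
const PHASE1_PATTERNS = [
  { rule: 'debug-statement', re: /\bconsole\.log\(|\bprint\(|\bdbg!\(|\bprintln!\(/ },
  { rule: 'todo-marker', re: /\/\/\s*(TODO|FIXME|HACK)\b/ },
  { rule: 'empty-catch', re: /catch\s*(\([^)]*\))?\s*\{\s*\}/ },
  { rule: 'disabled-linter', re: /eslint-disable/ },
  { rule: 'hardcoded-secret', re: /AKIA[0-9A-Z]{16}/ }, // AWS access key ID format only
];

// Scans one file's text and returns a finding per (line, rule) match.
function scanPhase1(source, file) {
  const findings = [];
  source.split('\n').forEach((text, i) => {
    for (const { rule, re } of PHASE1_PATTERNS) {
      if (re.test(text)) findings.push({ rule, file, line: i + 1, certainty: 'HIGH' });
    }
  });
  return findings;
}

const sample = [
  'function load() {',
  '  console.log("loading");',
  '  try { run(); } catch (e) {}',
  '  // TODO: handle errors',
  '}',
].join('\n');
console.log(scanPhase1(sample, 'src/load.js').map((f) => `${f.line}:${f.rule}`));
// [ '2:debug-statement', '3:empty-catch', '4:todo-marker' ]
```

No tokens are spent here: the LLM only sees the structured findings, not the raw files.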

Usage:

/deslop # Report only (safe)

/deslop apply # Fix HIGH certainty issues

/deslop apply src/ 10 # Fix 10 issues in src/

Thoroughness levels:

- quick - Phase 1 only (fastest)
- normal - Phase 1 + Phase 2 (default)
- deep - All phases if tools available
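One way to read those levels as code, assuming a hypothetical toolsAvailable flag that says whether the Phase 3 CLI tools are installed. With deep and no tools, this sketch falls back to Phases 1 and 2.

```javascript
// Maps a thoroughness level to the detection phases to run.
// toolsAvailable is a hypothetical flag: are the Phase 3 CLI tools installed?
function phasesFor(level = 'normal', toolsAvailable = false) {
  switch (level) {
    case 'quick':
      return [1]; // regex patterns only
    case 'normal':
      return [1, 2]; // plus multi-pass analyzers
    case 'deep':
      return toolsAvailable ? [1, 2, 3] : [1, 2]; // plus external CLI tools when present
    default:
      throw new Error(`Unknown thoroughness level: ${level}`);
  }
}

console.log(phasesFor('deep', false)); // [ 1, 2 ]
```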

Purpose: Structured performance investigation with baselines, profiling, and evidence-backed decisions.

10-phase methodology (based on recorded real performance investigation sessions):

- Setup - Confirm scenario, success criteria, benchmark command

- Baseline - 60s minimum runs, PERF_METRICS markers required

- Breaking Point - Binary search to find failure threshold

- Constraints - CPU/memory limits, measure delta vs baseline

- Hypotheses - Generate up to 5 hypotheses with evidence and confidence

- Code Paths - Use repo-map to identify entrypoints and hot files

- Profiling - Language-specific tools (--cpu-prof, JFR, cProfile, pprof)

- Optimization - One change per experiment, 2+ validation passes

- Decision - Continue or stop based on measurable improvement

- Consolidation - Final baseline, evidence log, investigation complete
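The Breaking Point phase's binary search can be sketched as follows. It assumes failure is monotonic in the load parameter (if the scenario fails at n, it fails at every larger value) and uses a hypothetical fails(n) callback in place of a real benchmark run. paramMin and paramMax presumably correspond to the --param-min and --param-max phase flags. Over 1..500 this needs about ten benchmark runs instead of up to 500.

```javascript
// Smallest value in [paramMin, paramMax] where fails(value) is true, or null if
// the scenario never fails in range. Assumes failures are monotonic in the parameter.
function findBreakingPoint(fails, paramMin, paramMax) {
  if (!fails(paramMax)) return null;
  let lo = paramMin;
  let hi = paramMax; // invariant: fails(hi) is true
  while (lo < hi) {
    const mid = Math.floor((lo + hi) / 2);
    if (fails(mid)) hi = mid; // breaking point is at mid or below
    else lo = mid + 1; // still healthy at mid, so it breaks above
  }
  return lo;
}

// Fake service that starts failing at 137 concurrent requests.
console.log(findBreakingPoint((n) => n >= 137, 1, 500)); // 137
```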

Agents and skills:

| Component | Role |
|---|---|
| perf-orchestrator | Coordinates all phases |
| perf-theory-gatherer | Generates hypotheses from git history and code |
| perf-theory-tester | Validates hypotheses with controlled experiments |
| perf-analyzer | Synthesizes findings into recommendations |
| perf-code-paths | Maps entrypoints and likely hot paths |
| perf-investigation-logger | Structured evidence logging |

Usage:

/perf # Start new investigation

/perf --resume # Resume previous investigation

Phase flags (advanced):

/perf --phase baseline --command "npm run bench" --version v1.2.0

/perf --phase breaking-point --param-min 1 --param-max 500

/perf --phase constraints --cpu 1 --memory 1GB

/perf --phase hypotheses --hypotheses-file perf-hypotheses.json

/perf --phase optimization --change "reduce allocations"

/perf --phase decision --verdict stop --rationale "no measurable improvement"

Purpose of /drift-detect: Compares your documentation and plans to what's actually in the code.

The problem it solves:

Your roadmap says "user authentication: done." But is it actually implemented? Your GitHub issue says "add dark mode." Is it already in the codebase? Plans drift from reality. This command finds the drift.

How it works:

- JavaScript collectors gather data (fast, token-efficient)
  - GitHub issues and their labels
  - Documentation files
  - Actual code exports and implementations
- Single Opus call performs semantic analysis
  - Matches concepts, not strings ("user auth" matches auth/, login.js, session.ts)
  - Identifies implemented but not documented
  - Identifies documented but not implemented
  - Finds stale issues that should be closed
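For contrast, here is what a purely deterministic comparison looks like. This sketch is a stand-in, not the command's logic: it normalizes names (camelCase and punctuation become lowercase words) and diffs two sets. It catches exact renames like darkMode vs "Dark mode" but would never match "user auth" to login.js, which is the gap the single semantic LLM call is there to close.

```javascript
// camelCase, kebab-case, and punctuation all become lowercase space-separated words.
const normalize = (s) =>
  s.replace(/([a-z0-9])([A-Z])/g, '$1 $2').toLowerCase().replace(/[^a-z0-9]+/g, ' ').trim();

// documented: feature names from docs/issues; implemented: exported symbol names.
function findDrift(documented, implemented) {
  const docKeys = new Set(documented.map(normalize));
  const codeKeys = new Set(implemented.map(normalize));
  return {
    documentedNotBuilt: [...docKeys].filter((k) => !codeKeys.has(k)),
    builtNotDocumented: [...codeKeys].filter((k) => !docKeys.has(k)),
  };
}

const drift = findDrift(['Dark mode', 'User auth', 'Export CSV'], ['darkMode', 'userAuth', 'rateLimiter']);
console.log(drift);
```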

Why this approach:

Multi-agent collection wastes tokens on coordination. JavaScript collectors are fast and deterministic. One well-prompted LLM call does the actual analysis. Result: 77% token reduction vs multi-agent approaches.

Tested on 1,000+ repositories before release.

Usage:

/drift-detect # Full analysis

/drift-detect --depth quick # Quick scan

Purpose of /audit-project: Multi-agent code review that iterates until issues are resolved.

What happens when you run it:

Up to 10 specialized role-based agents run based on your project:

| Agent | When Active | Focus Area |
|---|---|---|
| code-quality-reviewer | Always | Code quality, error handling |
| security-expert | Always | Vulnerabilities, auth, secrets |
| performance-engineer | Always | N+1 queries, memory, blocking ops |
| test-quality-guardian | Always | Coverage, edge cases, mocking |
| architecture-reviewer | If 50+ files | Modularity, patterns, SOLID |
| database-specialist | If DB detected | Queries, indexes, transactions |
| api-designer | If API detected | REST, errors, pagination |
| frontend-specialist | If frontend detected | Components, state, UX |
| backend-specialist | If backend detected | Services, domain logic |
| devops-reviewer | If CI/CD detected | Pipelines, configs, secrets |
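The activation rules in the table map naturally onto a small selection function. The project summary object (file count plus detection flags) is a hypothetical input; how the plugin actually detects databases, APIs, frontends, and CI/CD is not shown.

```javascript
// Reviewers that always run, per the table.
const ALWAYS = ['code-quality-reviewer', 'security-expert', 'performance-engineer', 'test-quality-guardian'];

// Conditional reviewers with their activation rule over a hypothetical project summary.
const CONDITIONAL = [
  ['architecture-reviewer', (p) => p.fileCount >= 50],
  ['database-specialist', (p) => p.hasDatabase],
  ['api-designer', (p) => p.hasApi],
  ['frontend-specialist', (p) => p.hasFrontend],
  ['backend-specialist', (p) => p.hasBackend],
  ['devops-reviewer', (p) => p.hasCiCd],
];

function selectReviewers(project) {
  const extra = CONDITIONAL.filter(([, applies]) => applies(project)).map(([name]) => name);
  return [...ALWAYS, ...extra];
}

const reviewers = selectReviewers({
  fileCount: 120,
  hasDatabase: true,
  hasApi: false,
  hasFrontend: false,
  hasBackend: true,
  hasCiCd: true,
});
console.log(reviewers.length); // 8
```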

Findings are collected and categorized by severity (critical/high/medium/low). Every issue that is not a false positive is fixed automatically. The loop repeats until no open issues remain.
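The fix loop can be sketched as below, with review, isFalsePositive, and fix as hypothetical callbacks standing in for the review agents and the fixing step. The iteration cap is an added assumption, not something the description above mentions; it keeps a fix that reintroduces its own issue from looping forever.

```javascript
const SEVERITY_ORDER = ['critical', 'high', 'medium', 'low'];

// review(): returns open findings { id, severity }; isFalsePositive(f): boolean;
// fix(f): applies a fix. All three are hypothetical stand-ins.
function reviewUntilClean(review, isFalsePositive, fix, maxIterations = 5) {
  for (let iteration = 1; iteration <= maxIterations; iteration++) {
    const open = review()
      .filter((f) => !isFalsePositive(f))
      .sort((a, b) => SEVERITY_ORDER.indexOf(a.severity) - SEVERITY_ORDER.indexOf(b.severity));
    if (open.length === 0) return { clean: true, iterations: iteration };
    for (const finding of open) fix(finding); // most severe first
  }
  return { clean: false, iterations: maxIterations };
}

// Fake codebase state: fixing an issue removes it; 'naming' is a false positive.
const issues = new Map([['sql-injection', 'critical'], ['naming', 'low'], ['n-plus-one', 'medium']]);
const fixedOrder = [];
const outcome = reviewUntilClean(
  () => [...issues].map(([id, severity]) => ({ id, severity })),
  (f) => f.id === 'naming',
  (f) => {
    fixedOrder.push(f.id);
    issues.delete(f.id);
  },
);
console.log(outcome, fixedOrder); // { clean: true, iterations: 2 } [ 'sql-injection', 'n-plus-one' ]
```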

Usage:

/audit-project # Full review

/audit-project --quick # Single pass

/audit-project --resume # Resume from queue file

/audit-project --domain security # Security focus only

/audit-project --recent # Only recent changes

Purpose of /enhance: Analyzes your prompts, plugins, agents, docs, hooks, and skills for improvement opportunities.

Seven analyzers run in parallel:

| Analyzer | What it checks |
|---|---|
| plugin-enhancer | Plugin structure, MCP tool definitions, security patterns |
| agent-enhancer | Agent frontmatter, prompt quality |
| claudemd-enhancer | CLAUDE.md/AGENTS.md structure, token efficiency |
| docs-enhancer | Documentation readability, RAG optimization |
| prompt-enhancer | Prompt engineering patterns, clarity, examples |
| hooks-enhancer | Hook frontmatter, structure, safety |
| skills-enhancer | SKILL.md structure, trigger phrases |

Each finding includes:

- Certainty level (HIGH/MEDIUM/LOW)

- Specific location (file:line)

- What's wrong

- How to fix it

- Whether it can be auto-fixed

Auto-learning: Detects obvious false positives (pattern docs, workflow gates) and saves them for future runs. Reduces noise over time without manual suppression files.
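A sketch of what working with such findings could look like. The field names, the rule@file suppression key, and the helper functions are assumptions for illustration, not the plugin's storage format. The point is that --apply filters to HIGH, auto-fixable findings, and a learned false positive simply drops out of later runs.

```javascript
/**
 * Hypothetical finding record mirroring the list above.
 * @typedef {{ rule: string, file: string, line: number,
 *   certainty: 'HIGH'|'MEDIUM'|'LOW', problem: string, fix: string,
 *   autoFixable: boolean }} Finding
 */

// Assumed suppression key: a false positive is remembered per rule and file.
const suppressionKey = (f) => `${f.rule}@${f.file}`;

// Findings that are not suppressed (what a normal run would report).
function visibleFindings(findings, suppressed) {
  return findings.filter((f) => !suppressed.has(suppressionKey(f)));
}

// What --apply would touch: HIGH certainty and marked auto-fixable.
function fixesToApply(findings) {
  return findings.filter((f) => f.certainty === 'HIGH' && f.autoFixable);
}

// Auto-learning: record a confirmed false positive so later runs skip it.
function learnFalsePositive(suppressed, finding) {
  suppressed.add(suppressionKey(finding));
  return suppressed;
}

/** @type {Finding[]} */
const findings = [
  { rule: 'missing-example', file: 'agents/a.md', line: 4, certainty: 'MEDIUM', problem: 'No example', fix: 'Add one', autoFixable: false },
  { rule: 'trailing-whitespace', file: 'agents/a.md', line: 9, certainty: 'HIGH', problem: 'Trailing spaces', fix: 'Trim', autoFixable: true },
  { rule: 'vague-trigger', file: 'skills/s/SKILL.md', line: 2, certainty: 'HIGH', problem: 'Vague trigger', fix: 'Rephrase', autoFixable: false },
];
const suppressed = learnFalsePositive(new Set(), findings[0]);
console.log(visibleFindings(findings, suppressed).length); // 2
console.log(fixesToApply(findings).map((f) => f.rule)); // [ 'trailing-whitespace' ]
```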

Usage:

/enhance # Run all analyzers

/enhance --focus=agent # Just agent prompts

/enhance --apply # Apply HIGH certainty fixes

/enhance --show-suppressed # Show what's being filtered

/enhance --no-learn # Analyze but don't save false positives

Purpose of /repo-map: Builds an AST-based map of symbols and imports for fast repo analysis.

What it generates:

- Cached file→symbols map (exports, functions, classes)

- Import graph for dependency hints

Output is cached at {state-dir}/repo-map.json and exposed via the MCP repo_map tool.
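As a rough illustration of the two outputs, this sketch builds a file-to-symbols map and an import list per file. It deliberately uses regexes instead of an AST, so it misses things ast-grep handles (multi-line imports, re-exports, matches inside comments or strings). The output shape is hypothetical and is not the format of repo-map.json.

```javascript
// Exported declarations at line start: `export [default] [async] function|class|const|let|var name`.
const EXPORT_RE = /^export\s+(?:default\s+)?(?:async\s+)?(?:function\*?|class|const|let|var)\s+([A-Za-z_$][\w$]*)/gm;
// ESM `import ... from 'x'` at line start, or CommonJS `require('x')` anywhere.
const IMPORT_RE = /(?:^import\s[^'"]*?from\s+|require\()\s*['"]([^'"]+)['"]/gm;

function mapFile(source) {
  return {
    symbols: [...source.matchAll(EXPORT_RE)].map((m) => m[1]),
    imports: [...source.matchAll(IMPORT_RE)].map((m) => m[1]),
  };
}

// files: { [path]: sourceText } -> { [path]: { symbols, imports } }
function buildRepoMap(files) {
  const map = {};
  for (const [file, source] of Object.entries(files)) map[file] = mapFile(source);
  return map;
}

const repoMap = buildRepoMap({
  'src/auth.js': "import { hash } from './crypto.js';\nexport function login(user) {}\nexport class Session {}",
  'src/crypto.js': "const os = require('os');\nexport const hash = (s) => s;",
});
console.log(repoMap['src/auth.js']);
```

Once a map like this is cached, a consumer can answer "who imports crypto.js?" with a lookup instead of a fresh scan.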

Why it matters:

Tools like /drift-detect and planners can use the map instead of re-scanning the repo every time.

Usage:

/repo-map init # First-time map generation

/repo-map update # Incremental update

/repo-map status # Check freshness

Required: ast-grep (sg) must be installed.

Purpose of /sync-docs: Keeps documentation in sync with code changes by finding outdated references, updating the CHANGELOG, and flagging stale examples.

The problem it solves:

You refactor auth.js into auth/index.js. Your README still says import from './auth.js'. You rename a function. Three docs still reference the old name. You ship a feature. The CHANGELOG doesn't mention it. Documentation drifts from code. This command finds the drift.

What it detects:

| Category | Examples |
|---|---|
| Broken references | Imports to moved/renamed files, deleted exports |
| Version mismatches | Doc says v2.0, package.json says v2.1 |
| Stale code examples | Import paths that no longer exist |
| Missing CHANGELOG | feat: and fix: commits without entries |
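Two of these checks are simple enough to sketch without an LLM. Assumptions: docs mention versions as vX.Y or vX.Y.Z, commits follow the Conventional Commits feat:/fix: prefixes, and a CHANGELOG counts as covering a commit when it contains the commit's description text. The helper names are hypothetical.

```javascript
// Compare component by component, so "v2.1" does not falsely match "2.10.0".
function sameVersion(docVersion, packageVersion) {
  const doc = docVersion.replace(/^v/, '').split('.');
  const pkg = packageVersion.split('.');
  return doc.every((part, i) => part === pkg[i]);
}

// Version strings in the doc that disagree with package.json's version.
function findVersionMismatches(docText, packageVersion) {
  const mentioned = docText.match(/\bv\d+\.\d+(?:\.\d+)?\b/g) || [];
  return [...new Set(mentioned)].filter((v) => !sameVersion(v, packageVersion));
}

// feat:/fix: commit subjects whose description never appears in the CHANGELOG.
function findMissingChangelogEntries(commitSubjects, changelogText) {
  return commitSubjects
    .filter((subject) => /^(feat|fix)(\([^)]*\))?!?:/.test(subject))
    .filter((subject) => !changelogText.includes(subject.replace(/^[^:]+:\s*/, '')));
}

const doc = 'Install v2.0 of the CLI. Requires Node.js 18.';
console.log(findVersionMismatches(doc, '2.1.0')); // [ 'v2.0' ]

const commits = ['feat(auth): add session refresh', 'fix: handle empty config', 'chore: bump deps'];
const changelog = '## Unreleased\n- handle empty config\n';
console.log(findMissingChangelogEntries(commits, changelog)); // [ 'feat(auth): add session refresh' ]
```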

Auto-fixable vs flagged:

| Auto-fixable (apply mode) | Flagged for review |
|---|---|
| Version number updates | Removed exports referenced in docs |
| CHANGELOG entries for commits | Code examples needing context |
| Function renames | |

Usage:

/sync-docs # Check what docs need updates (safe)

/sync-docs apply # Apply safe fixes

/sync-docs report src/ # Check docs related to src/

/sync-docs --all # Full codebase scan

Purpose of /learn: Research any topic online and create a comprehensive learning guide with RAG-optimized indexes.

What it does:

- Progressive Discovery - Uses a funnel approach (broad → specific → deep) to find quality sources

- Quality Scoring - Scores sources by authority, recency, depth, examples, uniqueness

- Just-In-Time Extraction - Fetches only high-scoring sources to save tokens

- Synthesis - Creates structured learning guide with examples and best practices

- RAG Index - Updates CLAUDE.md/AGENTS.md master index for future lookups

- Enhancement - Runs enhance:enhance-docs and enhance:enhance-prompts

Depth levels:

| Depth | Sources | Use Case |
|---|---|---|
| brief | 10 | Quick overview |
| medium | 20 | Default, balanced |
| deep | 40 | Comprehensive |
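Scoring and just-in-time selection can be sketched as ranking candidates and keeping only the top N before anything is fetched in full. The five criteria come from the list above and N follows the depth table; the 0 to 1 scale, equal weights, and candidate objects are assumptions.

```javascript
// Source counts per depth, from the table above.
const SOURCES_BY_DEPTH = { brief: 10, medium: 20, deep: 40 };
// The five scoring criteria; each is assumed to be a number from 0 to 1.
const CRITERIA = ['authority', 'recency', 'depth', 'examples', 'uniqueness'];

// Equal-weight average of the criteria (missing criteria count as 0).
function qualityScore(source) {
  const total = CRITERIA.reduce((sum, c) => sum + (source.scores[c] ?? 0), 0);
  return total / CRITERIA.length;
}

// Rank candidates by score and keep only the top N; only these would be fetched in full.
function selectSources(candidates, depth = 'medium') {
  const limit = SOURCES_BY_DEPTH[depth];
  if (limit === undefined) throw new Error(`Unknown depth: ${depth}`);
  return candidates
    .map((source) => ({ ...source, quality: qualityScore(source) }))
    .sort((a, b) => b.quality - a.quality)
    .slice(0, limit);
}

// 30 fake candidates whose authority rises with their index.
const candidates = Array.from({ length: 30 }, (_, i) => ({
  url: `https://example.com/${i}`,
  scores: { authority: i / 29, recency: 0.5, depth: 0.5, examples: 0.5, uniqueness: 0.5 },
}));
const picked = selectSources(candidates, 'brief');
console.log(picked.length, picked[0].url); // 10 https://example.com/29
```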

Output structure:

agent-knowledge/
  CLAUDE.md # Master index (updated each run)
  AGENTS.md # Index for OpenCode/Codex
  recursion.md # Topic-specific guide
  resources/
    recursion-sources.json # Source metadata with quality scores

Usage:

/learn recursion # Default (20 sources)

/learn react hooks --depth=deep # Comprehensive (40 sources)

/learn kubernetes --depth=brief # Quick overview (10 sources)

/learn python async --no-enhance # Skip enhancement pass

Agent: learn-agent (opus model for research quality)

Standalone use:

/deslop apply # Just clean up your code

/sync-docs # Just check if docs need updates

/ship # Just ship this branch

/audit-project # Just review the codebase

Integrated workflow:

When you run /next-task, it orchestrates everything:

/next-task picks task → explores codebase → plans implementation

↓

implementation-agent writes code

↓

deslop-agent cleans AI artifacts

↓

Phase 9 review loop iterates until approved

↓

delivery-validator checks requirements

↓

sync-docs-agent syncs documentation

↓

/ship creates PR → monitors CI → merges

The workflow tracks state so you can resume from any point.

Why build this? What's the thinking?

Frontier models write good code. That's solved. What's not solved:

- Context management - Models forget what they're doing mid-session

- Compaction amnesia - Long sessions get summarized, losing critical state

- Task drift - Without structure, agents wander from the actual goal

- Skipped steps - Agents skip reviews, tests, or cleanup when not enforced

- Token waste - Using LLM calls for work that static analysis can do faster

- Babysitting - Manually orchestrating each phase of development

- Repetitive requests - Asking for the same workflow every single session

1. One agent, one job, done extremely well

Same principle as good code: single responsibility. The exploration-agent explores. The implementation-agent implements. Phase 9 spawns multiple focused reviewers. No agent tries to do everything. Specialized agents, each with narrow scope and clear success criteria.

2. Pipeline with gates, not a monolith

Same principle as DevOps. Each step must pass before the next begins. Can't push before review. Can't merge before CI passes. Hooks enforce this—agents literally cannot skip phases.
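A minimal sketch of the gate idea, with hypothetical phase objects that each carry a run step and a gate check. Execution stops at the first failing gate, so in this example merge never runs because CI is not green. In awesome-slash itself the enforcement is done by hooks, not by a loop like this.

```javascript
// Each phase has a `run` step and a `gate` that must pass before the next phase starts.
function runPipeline(phases, context) {
  const completed = [];
  for (const { name, run, gate } of phases) {
    run(context);
    if (!gate(context)) return { ok: false, completed, blockedAt: name };
    completed.push(name);
  }
  return { ok: true, completed };
}

const ctx = { reviewed: false, ciGreen: false };
const phases = [
  { name: 'review', run: (c) => { c.reviewed = true; }, gate: (c) => c.reviewed },
  { name: 'push', run: () => {}, gate: () => true },
  { name: 'ci', run: (c) => { c.ciGreen = false; }, gate: (c) => c.ciGreen }, // CI fails
  { name: 'merge', run: () => { throw new Error('merge must not run before CI passes'); }, gate: () => true },
];
console.log(runPipeline(phases, ctx));
```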

3. Tools do tool work, agents do agent work

If static analysis, regex, or a shell command can do it, don't ask an LLM. Pattern detection uses pre-indexed regex. File discovery uses glob. Platform detection uses file existence checks. The LLM only handles what requires judgment.

4. Agents don't need to know how tools work

The slop detector returns findings with certainty levels. The agent doesn't need to understand the three-phase pipeline, the regex patterns, or the analyzer heuristics. Good tool design means the consumer doesn't need implementation details.

5. Build tools where tools don't exist

Many tasks lack existing tools. JavaScript collectors for drift-detect. Multi-pass analyzers for slop detection. The result: agents receive structured data, not raw problems to figure out.

6. Research-backed prompt engineering

Documented techniques that measurably improve results:

- Progressive disclosure - Agents see only what's needed for the current step

- Structured output - JSON between delimiters, XML tags for sections

- Explicit constraints - What agents MUST NOT do matters as much as what they do

- Few-shot examples - Where patterns aren't obvious

- Tool calling over generation - Let the model use tools rather than generate tool-like output

7. Validate plan and results, not every step

Approve the plan. See the results. The middle is automated. One plan approval unlocks autonomous execution through implementation, review, cleanup, and shipping.

8. Right model for the task

Match model capability to task complexity:

- opus - Exploration, planning, implementation, review orchestration

- sonnet - Pattern matching, validation, discovery

- haiku - Git operations, file moves, CI polling

Quality compounds. Poor exploration → poor plan → poor implementation → review cycles. Early phases deserve the best model.

9. Persistent state survives sessions

Two JSON files track everything: what task, what phase. Sessions can die and resume. Multiple sessions run in parallel on different tasks using separate worktrees.
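A sketch of resumable state under stated assumptions: the file names task.json and flow.json, their fields, and the phase list are hypothetical, not the plugin's actual layout. Each write goes to a temp file and is renamed into place so a session killed mid-write cannot leave truncated JSON, and a new session reads the files to decide where to continue. Keeping the files inside each worktree is what would let parallel sessions stay independent.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical phase order for a single task.
const PHASES = ['discover', 'explore', 'plan', 'implement', 'review', 'ship'];

// Write via a temp file + rename so an interrupted write never leaves half a JSON file.
function saveJson(file, data) {
  fs.writeFileSync(`${file}.tmp`, JSON.stringify(data, null, 2));
  fs.renameSync(`${file}.tmp`, file);
}

function loadJson(file) {
  return fs.existsSync(file) ? JSON.parse(fs.readFileSync(file, 'utf8')) : null;
}

function nextPhase(phase) {
  const i = PHASES.indexOf(phase);
  return i >= 0 && i + 1 < PHASES.length ? PHASES[i + 1] : null;
}

// Demo in a temp dir standing in for one worktree.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'workflow-'));
const taskFile = path.join(dir, 'task.json'); // which task
const flowFile = path.join(dir, 'flow.json'); // which phase

// Session 1 records progress, then dies after planning.
saveJson(taskFile, { id: 'issue-123', title: 'Add dark mode' });
saveJson(flowFile, { phase: 'plan', status: 'completed' });

// Session 2 starts fresh and resumes from disk.
const flow = loadJson(flowFile);
const resumeAt = flow.status === 'completed' ? nextPhase(flow.phase) : flow.phase;
console.log(loadJson(taskFile).id, resumeAt); // issue-123 implement
```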

10. Delegate everything automatable

Agents don't just write code. They:

- Clean their own output (deslop-agent)

- Update documentation (sync-docs-agent)

- Fix CI failures (ci-fixer)

- Respond to review comments

- Check for plan drift (/drift-detect)

- Analyze their own prompts (/enhance)

If it can be specified, it can be delegated.

11. Orchestrator stays high-level

The main workflow orchestrator doesn't read files, search code, or write implementations. It launches specialized agents and receives their outputs. This keeps the orchestrator's context window free for coordination instead of filling it with file contents.

12. Composable, not monolithic

Every command works standalone. /deslop cleans code without needing /next-task. /ship merges PRs without needing the full workflow. Pieces compose together, but each piece is useful on its own.

- Run multiple sessions - Different tasks in different worktrees, no interference

- Fast iteration - Approve plan, check results, repeat

- Stay in the interesting parts - Policy decisions, architecture choices, edge cases

- Minimal review burden - Most issues caught and fixed before you see the output

- No repetitive requests - The workflow you want, without asking each time

- Scale horizontally - More sessions, more tasks, same oversight level

/plugin marketplace add avifenesh/awesome-slash

/plugin install next-task@awesome-slash

/plugin install ship@awesome-slash

npm install -g awesome-slash && awesome-slash

Interactive installer for Claude Code, OpenCode, and Codex CLI.

# Non-interactive install

awesome-slash --tool claude # Single tool

awesome-slash --tools "claude,opencode" # Multiple tools

awesome-slash --development # Dev mode (bypasses marketplace)

The shared library is published as @awesome-slash/lib for direct use:

npm install @awesome-slash/lib

const { platform, patterns, state, sources, xplat } = require('@awesome-slash/lib');

Zero dependencies, CommonJS, Node.js >= 18.

Required:

- Git

- Node.js 18+

For GitHub workflows:

- GitHub CLI (gh) authenticated

For GitLab workflows:

- GitLab CLI (glab) authenticated

For /repo-map:

- ast-grep (sg) installed

For /agnix:

- agnix CLI installed (npm install -g agnix, cargo install agnix-cli, or brew install agnix)

Local diagnostics (optional):

npm run detect # Platform detection (CI, deploy, project type)

npm run verify # Tool availability + versions

This project is built on research, not guesswork.

Knowledge base (agent-docs/): 8,000 lines of curated documentation from Anthropic, OpenAI, Google, and Microsoft covering:

- Agent architecture and design patterns

- Prompt engineering techniques

- Function calling and tool use

- Context efficiency and token optimization

- Multi-agent systems and orchestration

- Instruction following reliability

Testing:

- 1,818 tests passing

- Drift-detect validated on 1,000+ repositories

- E2E workflow testing across all commands

- Cross-platform validation (Claude Code, OpenCode, Codex CLI)

Methodology:

- /perf investigation phases based on recorded real performance investigation sessions

- Certainty levels derived from pattern analysis across repositories

- Token optimization measured and validated (77% reduction in drift-detect)

| Topic | Link |
|---|---|
| Installation | docs/INSTALLATION.md |
| Cross-Platform Setup | docs/CROSS_PLATFORM.md |
| Usage Examples | docs/USAGE.md |
| Architecture | docs/ARCHITECTURE.md |

| Workflow | Link |
|---|---|
| /next-task Flow | docs/workflows/NEXT-TASK.md |
| /ship Flow | docs/workflows/SHIP.md |

| Topic | Link |
|---|---|
| Slop Patterns | docs/reference/SLOP-PATTERNS.md |
| Agent Reference | docs/reference/AGENTS.md |

- Issues: github.com/avifenesh/awesome-slash/issues

- Discussions: github.com/avifenesh/awesome-slash/discussions

MIT License | Made by Avi Fenesh


Alternative AI tools for awesome-slash

Similar Open Source Tools

For similar tasks

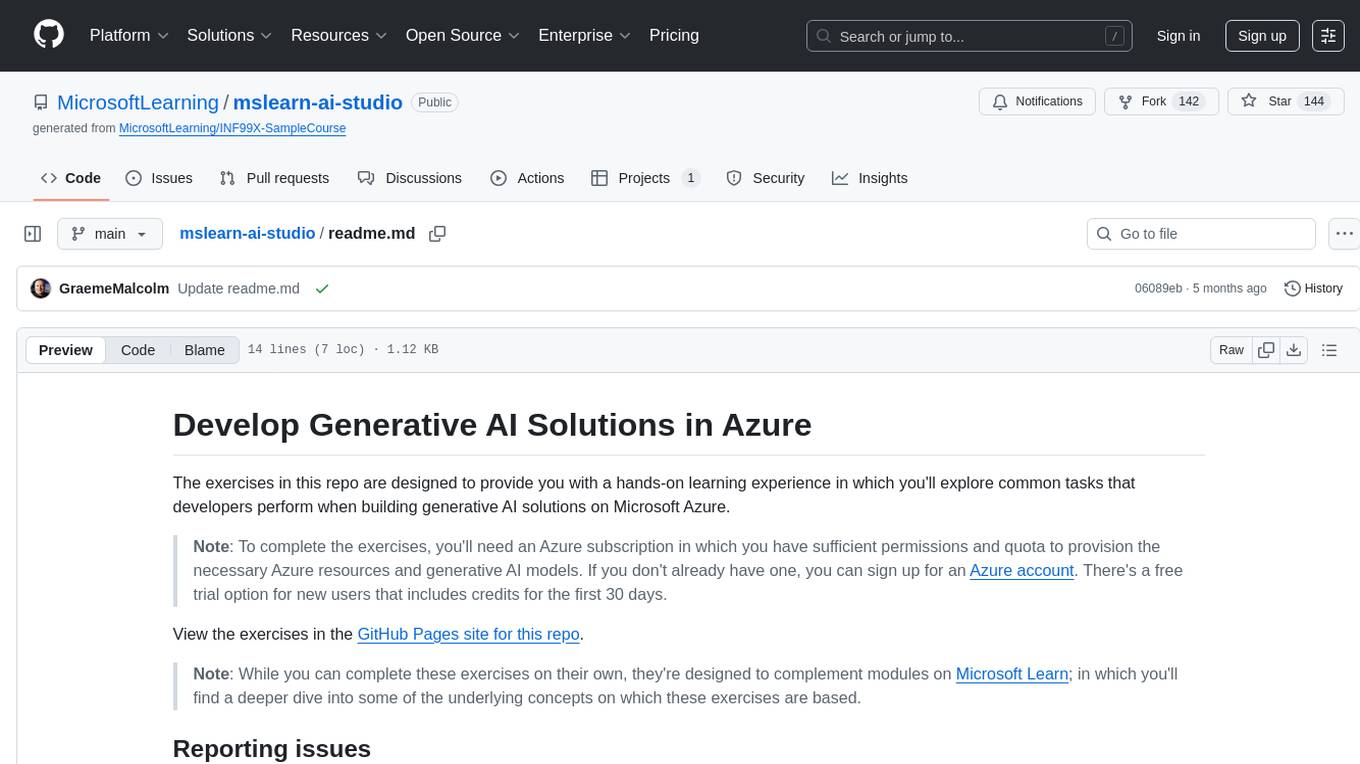

mslearn-ai-studio

The mslearn-ai-studio repository provides hands-on exercises for building generative AI solutions on Microsoft Azure. Users can explore common tasks related to generative AI models and Azure resources. The exercises are designed to complement Microsoft Learn modules and require an Azure subscription for completion.

awesome-slash

Automate the entire development workflow beyond coding. awesome-slash provides production-ready skills, agents, and commands for managing tasks, branches, reviews, CI, and deployments. It automates the entire workflow, including task exploration, planning, implementation, review, and shipping. The tool includes 11 plugins, 40 agents, 26 skills, and 26k lines of lib code, with 3,357 tests and support for 3 platforms. It works with Claude Code, OpenCode, and Codex CLI, offering specialized capabilities through skills and agents.

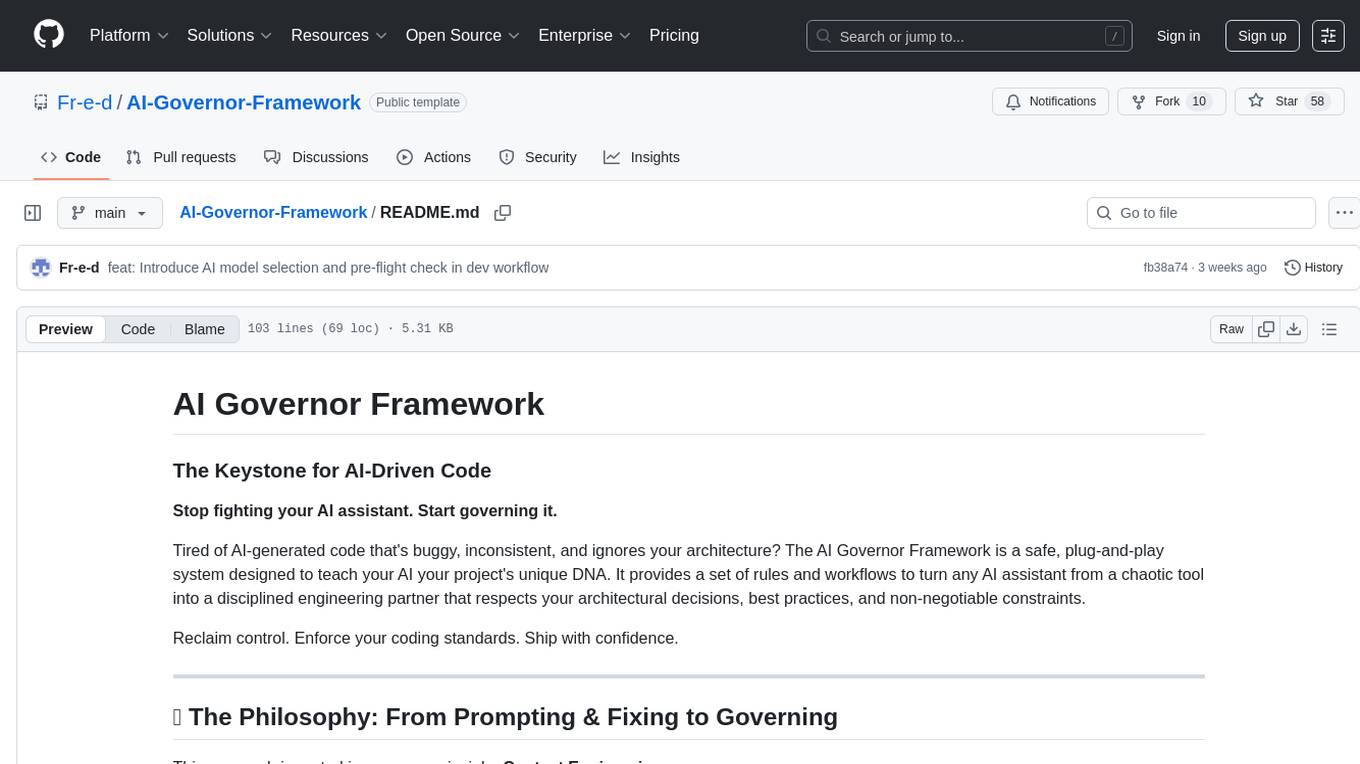

AI-Governor-Framework

The AI Governor Framework is a system designed to govern AI assistants in coding projects by providing rules and workflows to ensure consistency, respect architectural decisions, and enforce coding standards. It leverages Context Engineering to provide the AI with the right information at the right time, using an In-Repo approach to keep governance rules and architectural context directly inside the repository. The framework consists of two core components: The Governance Engine for passive rules and the Operator's Playbook for active protocols. It follows a 4-step Operator's Playbook to move features from idea to production with clarity and control.

sourcegraph

Sourcegraph is a code search and navigation tool that helps developers read, write, and fix code in large, complex codebases. It provides features such as code search across all repositories and branches, code intelligence for navigation and refactoring, and the ability to fix and refactor code across multiple repositories at once.

pr-agent

PR-Agent is a tool that helps to efficiently review and handle pull requests by providing AI feedback and suggestions. It supports various commands such as generating PR descriptions, providing code suggestions, answering questions about the PR, and updating the CHANGELOG.md file. PR-Agent can be used via CLI, GitHub Action, GitHub App, Docker, and supports multiple git providers and models. It emphasizes real-life practical usage, with each tool having a single GPT-4 call for quick and affordable responses. The PR Compression strategy enables effective handling of both short and long PRs, while the JSON prompting strategy allows for modular and customizable tools. PR-Agent Pro, the hosted version by CodiumAI, provides additional benefits such as full management, improved privacy, priority support, and extra features.

code-review-gpt

Code Review GPT uses Large Language Models to review code in your CI/CD pipeline. It helps streamline the code review process by providing feedback on code that may have issues or areas for improvement. It should pick up on common issues such as exposed secrets, slow or inefficient code, and unreadable code. It can also be run locally in your command line to review staged files. Code Review GPT is in alpha and should be used for fun only. It may provide useful feedback but please check any suggestions thoroughly.

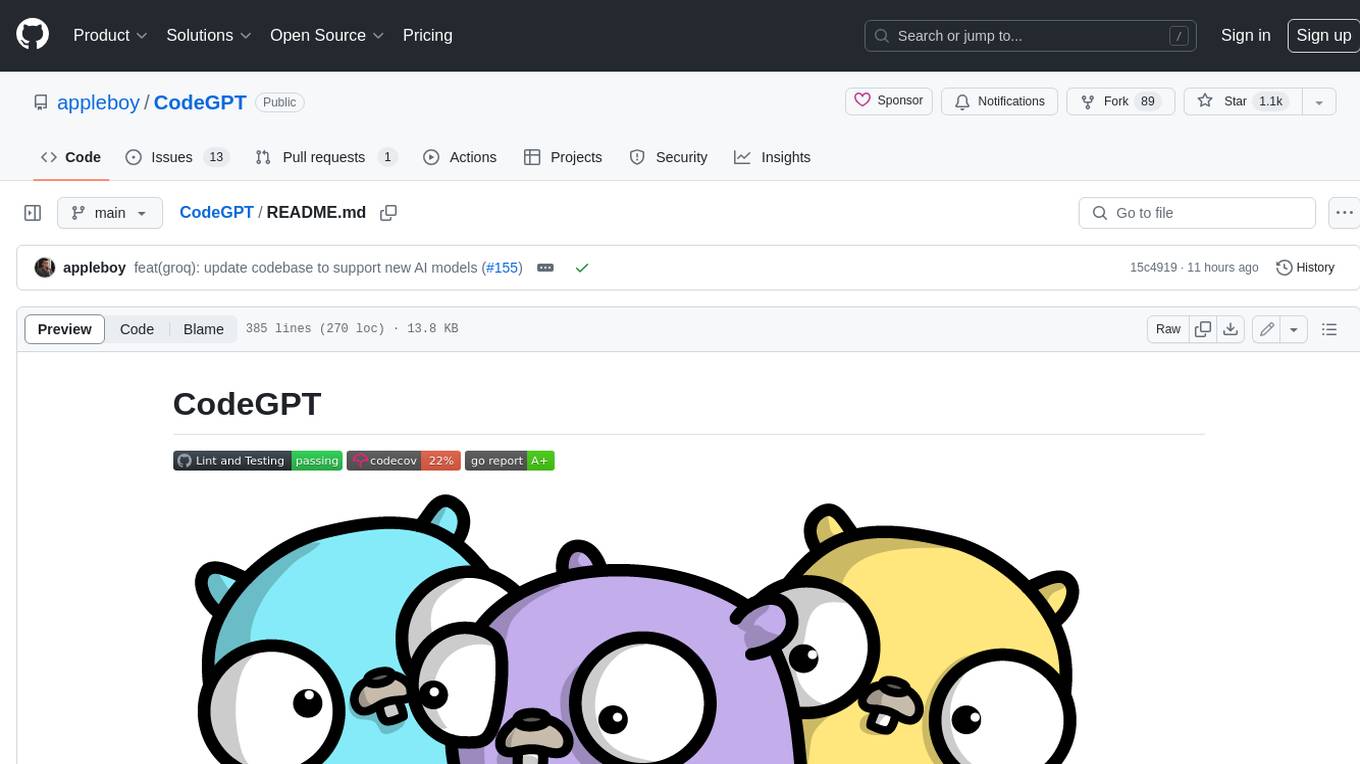

CodeGPT

CodeGPT is a CLI tool written in Go that helps you write git commit messages or produce brief code reviews using ChatGPT AI (gpt-3.5-turbo, gpt-4 model) and automatically installs a git prepare-commit-msg hook. It supports Azure OpenAI Service or OpenAI API, conventional commits specification, Git prepare-commit-msg Hook, customizing the number of lines of context in diffs, excluding files from the git diff command, translating commit messages into different languages, using socks or custom network HTTP proxies, specifying model lists, and doing brief code reviews.

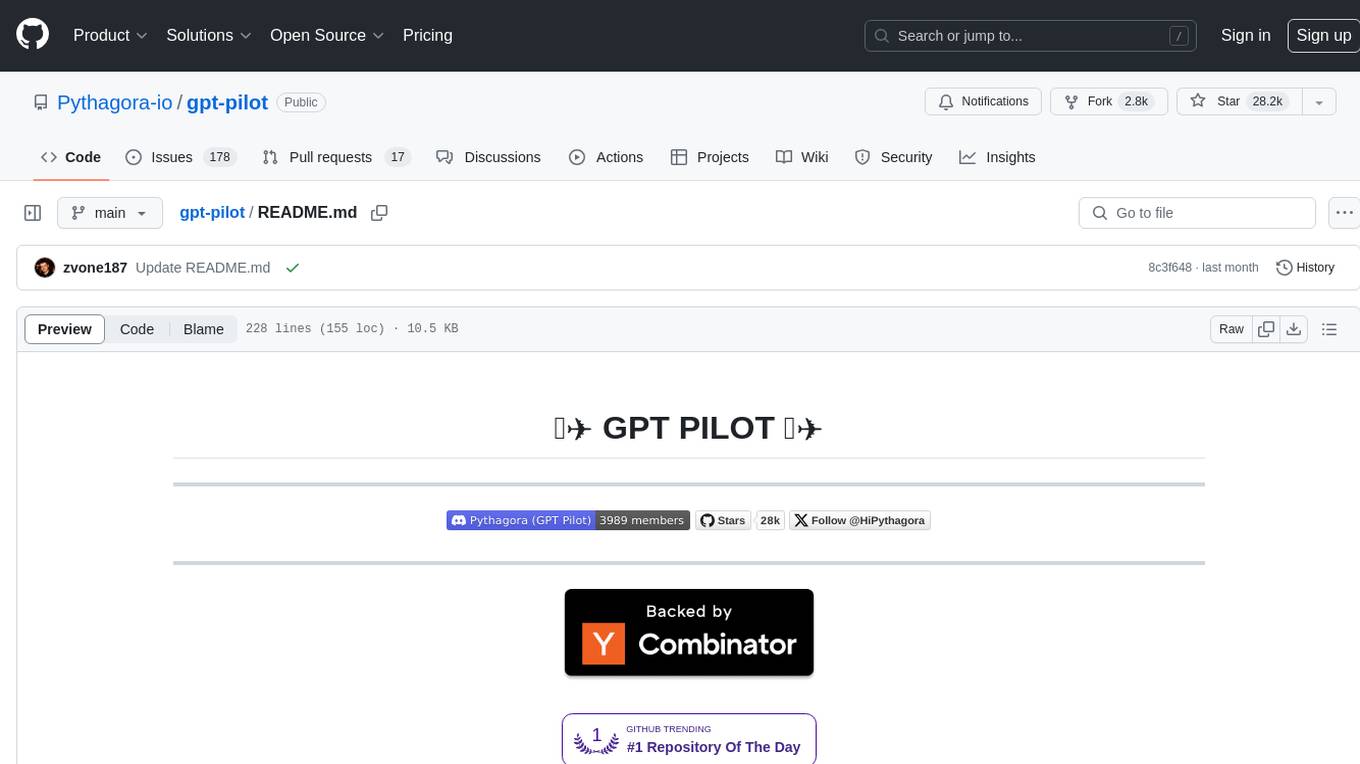

gpt-pilot

GPT Pilot is a core technology for the Pythagora VS Code extension, aiming to provide the first real AI developer companion. It goes beyond autocomplete, helping with writing full features, debugging, issue discussions, and reviews. The tool utilizes LLMs to generate production-ready apps, with developers overseeing the implementation. GPT Pilot works step by step like a developer, debugging issues as they arise. It can work at any scale, filtering out code to show only relevant parts to the AI during tasks. Contributions are welcome, with debugging and telemetry being key areas of focus for improvement.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies building bots that use AI capabilities within Teams. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. It currently provides native support for OpenAI, Anthropic, Azure OpenAI, and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any production LLM use case. Example use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage per user and per organization
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries, and caching
- Distribute API keys with rate limits and cost limits for internal development and production use
- Distribute API keys with rate limits and cost limits for students
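The last two use cases combine a per-key rate limit with a per-key spending cap. Here is a minimal in-memory Python sketch of that combination. It is not BricksLLM's API: `KeyPolicy`, `KeyState`, `Gateway`, `issue_key`, and `authorize` are hypothetical names. The sketch assumes a fixed-window rate limiter and a caller-supplied cost estimate for each request.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class KeyPolicy:
    max_requests_per_window: int  # rate limit
    window_seconds: float         # length of one rate-limit window
    cost_limit_usd: float         # total spend cap for the key


@dataclass
class KeyState:
    window_start: float = 0.0
    requests_in_window: int = 0
    spent_usd: float = 0.0


class Gateway:
    """Hypothetical in-memory gateway that enforces per-key limits."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self.policies: dict[str, KeyPolicy] = {}
        self.states: dict[str, KeyState] = {}
        self.clock = clock  # injectable so tests can control time

    def issue_key(self, key: str, policy: KeyPolicy) -> None:
        self.policies[key] = policy
        self.states[key] = KeyState(window_start=self.clock())

    def authorize(self, key: str, estimated_cost_usd: float) -> bool:
        policy = self.policies.get(key)
        if policy is None:
            return False  # unknown key
        state = self.states[key]
        now = self.clock()
        # Fixed-window rate limiting: reset the counter when the window expires.
        if now - state.window_start >= policy.window_seconds:
            state.window_start = now
            state.requests_in_window = 0
        if state.requests_in_window >= policy.max_requests_per_window:
            return False  # rate limit reached for this window
        if state.spent_usd + estimated_cost_usd > policy.cost_limit_usd:
            return False  # request would exceed the key's cost cap
        state.requests_in_window += 1
        state.spent_usd += estimated_cost_usd
        return True
```

For a student key, you might issue `KeyPolicy(2, 60.0, 0.05)`: at most two requests per minute and five cents in total. A production gateway would persist this state and enforce it across instances rather than keeping it in one process.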

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include:

- Structures: Agents, Pipelines, and Workflows
- Tasks
- Tools
- Memory: Conversation Memory, Task Memory, and Meta Memory
- Drivers: Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers
- Engines: Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines
- Additional components: Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers

Griptape enables developers to create AI-powered applications with ease and efficiency.