iffy

Intelligent content moderation at scale

Stars: 236

Iffy is a tool for intelligent content moderation at scale, allowing users to keep unwanted content off their platform without the need to manage a team of moderators. It features a Moderation Dashboard to view and manage all moderation activities, User Lifecycle for automatically suspending users with flagged content, Appeals Management for efficient handling of user appeals, and Powerful Rules & Presets to create custom moderation rules based on unique business needs. Users can choose between the managed Iffy Cloud or the free self-hosted Iffy Community version, each offering different features and setups.

README:

Intelligent content moderation at scale. Keep unwanted content off your platform without managing a team of moderators.

Features:

- Moderation Dashboard: View and manage all content moderation activity from a single place.

- User Lifecycle: Automatically suspend users with flagged content (and handle automatic compliance when moderated content is removed).

- Appeals Management: Handle user appeals efficiently through email notifications and a user-friendly web form.

- Powerful Rules & Presets: Create rules to automatically moderate content based on your unique business needs.

Install postgres with a username postgres and password postgres:

brew install postgresql

brew services start postgresql

createdb

psql -c "CREATE USER postgres WITH LOGIN SUPERUSER PASSWORD 'postgres';"Install dependencies:

npm iCopy .env.example to .env.local.

Generate a FIELD_ENCRYPTION_KEY:

npx @47ng/cloak generate | head -1 | cut -d':' -f2 | tr -d ' *'Generate a SECRET_KEY:

openssl rand -base64 32Clerk

- Go to clerk.com and create a new app.

- Name the app and disable all login methods except Email.

- Under "Configure > Email, phone, username", limit authentication strategies to "Email verification link" and "Email verification code". Turn on "Personal information > Name"

- (Optional) Under "Configure > Restrictions", turn on "Sign-up mode > Restricted"

- Under "Configure > Organization Management", turn on "Enable organizations"

- Under "Configure > API Keys", add

CLERK_SECRET_KEYandNEXT_PUBLIC_CLERK_PUBLISHABLE_KEYto your.env.localfile. - Under "Organizations", create a new organization and add your email to the "Members" list.

- Add the organization ID to your

.env.localfile asSEED_CLERK_ORGANIZATION_ID. - (Optional, for testing) In the Clerk dashboard, disable the "Require the same device and browser" setting to ensure tests with Mailosaur work properly.

OpenAI

- Create an account at openai.com.

- Create a new API key at platform.openai.com/api-keys.

- Add the API key to your

.env.localfile asOPENAI_API_KEY.

Set up the database, run migrations, and add seed data:

createuser -s postgres # Create postgres superuser if needed

createdb iffy_development

npm run dev:db:setupRun the development server:

npm run devOpen http://localhost:3000 to access the app.

To enable public sign-ups:

# .env.local

ENABLE_PUBLIC_SIGNUP=true

To enable subscriptions and billing with Stripe:

# .env.local

STRIPE_API_KEY=sk_test_...

ENABLE_BILLING=true

Email notifications (optional, via Resend)

In order to send email with Iffy, you will additionally need a Resend API key.

- Create an account at resend.com.

- Create and verify a new domain. Add the desired from email (e.g.

[email protected]) to your.env.localfile asRESEND_FROM_EMAIL. - Add the desired from name (e.g.

Iffy) to your.env.localfile asRESEND_FROM_NAME. - Create a new API key at API Keys with at least sending permissions.

- Add the API key to your

.env.localfile asRESEND_API_KEY.

Email audiences (optional, via Resend)

- Create an account at resend.com.

- Create a new audience (or use the default audience) at Audiences.

- Add the audience ID to your

.env.localfile asRESEND_AUDIENCE_ID. - Create a new API key at API Keys with full permissions.

- Add the API key to your

.env.localfile asRESEND_API_KEY. - Additionally, go to Clerk and create a new webhook at Webhooks.

- Use the URL

https://<your-app-url>/api/webhooks/clerkfor the webhook URL. - Subscribe to the

user.createdevent. - Add the webhook secret to your

.env.localfile asCLERK_WEBHOOK_SECRET.

Natural language AI tests (optional, via Shortest)

In order to write and run natural language AI tests with Shortest, you will additionally need an Anthropic API key and a Mailosaur API key.

- Create an account at anthropic.com.

- Create a new API key at Account Settings.

- Add the API key to your

.env.localfile asSHORTEST_ANTHROPIC_API_KEY. - Create an account at mailosaur.com.

- Create a new Inbox/Server.

- Go to API Keys and create a standard key.

- Update the environment variables:

-

MAILOSAUR_API_KEY: Your API key -

MAILOSAUR_SERVER_ID: Your server ID

-

To run asynchronous jobs, you will need to set up a local Inngest server. In a separate terminal, run:

npm run dev:inngestStart the development server

npm run devStart the local Inngest server (for asynchronous jobs)

npm run dev:inngestRun API (unit) tests

npm run testRun app (end-to-end) tests

npm run shortest

npm run shortest -- --no-cache # with argumentsYou may self-host Iffy Community for free, if your business has less than 1 million USD total revenue in the prior tax year, and less than 10 million USD GMV (Gross Merchandise Value). For more details, see the Iffy Community License 1.0.

Here are the differences between the managed, hosted Iffy Cloud and the free Iffy Community version.

| Iffy Cloud | Iffy Community | |

|---|---|---|

| Infrastructure | Easy setup. We manage everything. | You set up a server and dependent services. You are responsible for installation, maintenance, upgrades, uptime, security, and service costs. |

| Rules/Presets | 9 powerful presets: Adult content, Spam, Harassment, Non-fiat currency, Weapon components, Government services, Gambling, IPTV, and Phishing | 2 basic presets: Adult content and Spam |

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for iffy

Similar Open Source Tools

iffy

Iffy is a tool for intelligent content moderation at scale, allowing users to keep unwanted content off their platform without the need to manage a team of moderators. It features a Moderation Dashboard to view and manage all moderation activities, User Lifecycle for automatically suspending users with flagged content, Appeals Management for efficient handling of user appeals, and Powerful Rules & Presets to create custom moderation rules based on unique business needs. Users can choose between the managed Iffy Cloud or the free self-hosted Iffy Community version, each offering different features and setups.

bedrock-claude-chat

This repository is a sample chatbot using the Anthropic company's LLM Claude, one of the foundational models provided by Amazon Bedrock for generative AI. It allows users to have basic conversations with the chatbot, personalize it with their own instructions and external knowledge, and analyze usage for each user/bot on the administrator dashboard. The chatbot supports various languages, including English, Japanese, Korean, Chinese, French, German, and Spanish. Deployment is straightforward and can be done via the command line or by using AWS CDK. The architecture is built on AWS managed services, eliminating the need for infrastructure management and ensuring scalability, reliability, and security.

agenticSeek

AgenticSeek is a voice-enabled AI assistant powered by DeepSeek R1 agents, offering a fully local alternative to cloud-based AI services. It allows users to interact with their filesystem, code in multiple languages, and perform various tasks autonomously. The tool is equipped with memory to remember user preferences and past conversations, and it can divide tasks among multiple agents for efficient execution. AgenticSeek prioritizes privacy by running entirely on the user's hardware without sending data to the cloud.

tiledesk-dashboard

Tiledesk is an open-source live chat platform with integrated chatbots written in Node.js and Express. It is designed to be a multi-channel platform for web, Android, and iOS, and it can be used to increase sales or provide post-sales customer service. Tiledesk's chatbot technology allows for automation of conversations, and it also provides APIs and webhooks for connecting external applications. Additionally, it offers a marketplace for apps and features such as CRM, ticketing, and data export.

hash

HASH is a self-building, open-source database which grows, structures and checks itself. With it, we're creating a platform for decision-making, which helps you integrate, understand and use data in a variety of different ways.

ChatGPT

The ChatGPT API Free Reverse Proxy provides free self-hosted API access to ChatGPT (`gpt-3.5-turbo`) with OpenAI's familiar structure, eliminating the need for code changes. It offers streaming response, API endpoint compatibility, and complimentary access without an API key. Installation options include Docker, PC/Server, and Termux on Android devices. The API can be accessed through a self-hosted local server or a pre-hosted API with an API key obtained from the Discord server. Usage examples are provided for Python and Node.js, and the project is licensed under AGPL-3.0.

chat-ui

A chat interface using open source models, eg OpenAssistant or Llama. It is a SvelteKit app and it powers the HuggingChat app on hf.co/chat.

apify-mcp-server

The Apify MCP Server enables AI agents to extract data from various websites using ready-made scrapers and automation tools. It supports OAuth for easy connection from clients like Claude.ai or Visual Studio Code. The server also supports Skyfire agentic payments for AI agents to pay for Actor runs without an API token. Compatible with various clients adhering to the Model Context Protocol, it allows dynamic tool discovery and interaction with Apify Actors. The server provides tools for interacting with Apify Actors, dynamic tool discovery, and telemetry data collection. It offers a set of example prompts and resources for users to explore and interact with Apify through MCP.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

ChatOpsLLM

ChatOpsLLM is a project designed to empower chatbots with effortless DevOps capabilities. It provides an intuitive interface and streamlined workflows for managing and scaling language models. The project incorporates robust MLOps practices, including CI/CD pipelines with Jenkins and Ansible, monitoring with Prometheus and Grafana, and centralized logging with the ELK stack. Developers can find detailed documentation and instructions on the project's website.

agent-service-toolkit

The AI Agent Service Toolkit is a comprehensive toolkit designed for running an AI agent service using LangGraph, FastAPI, and Streamlit. It includes a LangGraph agent, a FastAPI service, a client for interacting with the service, and a Streamlit app for providing a chat interface. The project offers a template for building and running agents with the LangGraph framework, showcasing a complete setup from agent definition to user interface. Key features include LangGraph Agent with latest features, FastAPI Service, Advanced Streaming support, Streamlit Interface, Multiple Agent Support, Asynchronous Design, Content Moderation, RAG Agent implementation, Feedback Mechanism, Docker Support, and Testing. The repository structure includes directories for defining agents, protocol schema, core modules, service, client, Streamlit app, and tests.

sosumi.ai

sosumi.ai provides Apple Developer documentation in an AI-readable format by converting JavaScript-rendered pages into Markdown. It offers an HTTP API to access Apple docs, supports external Swift-DocC sites, integrates with MCP server, and provides tools like searchAppleDocumentation and fetchAppleDocumentation. The project can be self-hosted and is currently hosted on Cloudflare Workers. It is built with Hono and supports various runtimes. The application is designed for accessibility-first, on-demand rendering of Apple Developer pages to Markdown.

chatgpt-cli

ChatGPT CLI provides a powerful command-line interface for seamless interaction with ChatGPT models via OpenAI and Azure. It features streaming capabilities, extensive configuration options, and supports various modes like streaming, query, and interactive mode. Users can manage thread-based context, sliding window history, and provide custom context from any source. The CLI also offers model and thread listing, advanced configuration options, and supports GPT-4, GPT-3.5-turbo, and Perplexity's models. Installation is available via Homebrew or direct download, and users can configure settings through default values, a config.yaml file, or environment variables.

shortest

Shortest is a project for local development that helps set up environment variables and services for a web application. It provides a guide for setting up Node.js and pnpm dependencies, configuring services like Clerk, Vercel Postgres, Anthropic, Stripe, and GitHub OAuth, and running the application and tests locally.

OpenAI-sublime-text

The OpenAI Completion plugin for Sublime Text provides first-class code assistant support within the editor. It utilizes LLM models to manipulate code, engage in chat mode, and perform various tasks. The plugin supports OpenAI, llama.cpp, and ollama models, allowing users to customize their AI assistant experience. It offers separated chat histories and assistant settings for different projects, enabling context-specific interactions. Additionally, the plugin supports Markdown syntax with code language syntax highlighting, server-side streaming for faster response times, and proxy support for secure connections. Users can configure the plugin's settings to set their OpenAI API key, adjust assistant modes, and manage chat history. Overall, the OpenAI Completion plugin enhances the Sublime Text editor with powerful AI capabilities, streamlining coding workflows and fostering collaboration with AI assistants.

kwaak

Kwaak is a tool that allows users to run a team of autonomous AI agents locally from their own machine. It enables users to write code, improve test coverage, update documentation, and enhance code quality while focusing on building innovative projects. Kwaak is designed to run multiple agents in parallel, interact with codebases, answer questions about code, find examples, write and execute code, create pull requests, and more. It is free and open-source, allowing users to bring their own API keys or models via Ollama. Kwaak is part of the bosun.ai project, aiming to be a platform for autonomous code improvement.

For similar tasks

iffy

Iffy is a tool for intelligent content moderation at scale, allowing users to keep unwanted content off their platform without the need to manage a team of moderators. It features a Moderation Dashboard to view and manage all moderation activities, User Lifecycle for automatically suspending users with flagged content, Appeals Management for efficient handling of user appeals, and Powerful Rules & Presets to create custom moderation rules based on unique business needs. Users can choose between the managed Iffy Cloud or the free self-hosted Iffy Community version, each offering different features and setups.

iffy

Iffy is a tool for intelligent content moderation at scale, allowing users to keep unwanted content off their platform without the need to manage a team of moderators. It provides features such as a Moderation Dashboard to view and manage all moderation activity, User Lifecycle to automatically suspend users with flagged content, Appeals Management for efficient handling of user appeals, and Powerful Rules & Presets to create custom moderation rules. Users can choose between the managed Iffy Cloud or the free self-hosted Iffy Community version, each offering different features and setup requirements.

basehub

JavaScript / TypeScript SDK for BaseHub, the first AI-native content hub. **Features:** * ✨ Infers types from your BaseHub repository... _meaning IDE autocompletion works great._ * 🏎️ No dependency on graphql... _meaning your bundle is more lightweight._ * 🌐 Works everywhere `fetch` is supported... _meaning you can use it anywhere._

AI-Prompt-Genius

AI Prompt Genius is a Chrome extension that allows you to curate a custom library of AI prompts. It is built using React web app and Tailwind CSS with DaisyUI components. The extension enables users to create and manage AI prompts for various purposes. It provides a user-friendly interface for organizing and accessing AI prompts efficiently. AI Prompt Genius is designed to enhance productivity and creativity by offering a personalized collection of prompts tailored to individual needs. Users can easily install the extension from the Chrome Web Store and start using it to generate AI prompts for different tasks.

ai-cms-grapesjs

The Aimeos GrapesJS CMS extension provides a simple to use but powerful page editor for creating content pages based on extensible components. It integrates seamlessly with Laravel applications and allows users to easily manage and display CMS content. The tool also supports Google reCAPTCHA v3 for enhanced security. Users can create and customize pages with various components and manage multi-language setups effortlessly. The extension simplifies the process of creating and managing content pages, making it ideal for developers and businesses looking to enhance their website's content management capabilities.

hyperfy

Hyperfy is a powerful tool for automating social media marketing tasks. It provides a user-friendly interface to schedule posts, analyze performance metrics, and engage with followers across multiple platforms. With Hyperfy, users can save time and effort by streamlining their social media management processes in one centralized platform.

comfyui-web-viewer

The ComfyUI Web Viewer by vrch.ai is a real-time AI-generated interactive art framework that integrates realtime streaming into ComfyUI workflows. It supports keyboard control nodes, OSC control nodes, sound input nodes, and more, accessible from any device with a web browser. It enables real-time interaction with AI-generated content, ideal for interactive visual projects and enhancing ComfyUI workflows with efficient content management and display.

Pixelle-MCP

Pixelle-MCP is a multi-channel publishing tool designed to streamline the process of publishing content across various social media platforms. It allows users to create, schedule, and publish posts simultaneously on platforms such as Facebook, Twitter, and Instagram. With a user-friendly interface and advanced scheduling features, Pixelle-MCP helps users save time and effort in managing their social media presence. The tool also provides analytics and insights to track the performance of posts and optimize content strategy. Whether you are a social media manager, content creator, or digital marketer, Pixelle-MCP is a valuable tool to enhance your online presence and engage with your audience effectively.

For similar jobs

mage

XMage is an open-source, cross-platform application that allows users to play the collectible card game Magic: The Gathering online against other players or computer opponents. It supports over 25,000 unique cards and more than 65,000 reprints from different editions, including custom sets like Star Wars. XMage supports single matches and tournaments with dozens of game modes, including duel, multiplayer, standard, modern, commander, pauper, oathbreaker, historic, freeform, and richman. It also features a deck editor, a player rating system, and support for special formats like Commander, Oathbreaker, Cube, Tiny Leaders, Super Standard, and Historic Standard.

Forza-Mods-AIO

Forza Mods AIO is a free and open-source tool that enhances the gaming experience in Forza Horizon 4 and 5. It offers a range of time-saving and quality-of-life features, making gameplay more enjoyable and efficient. The tool is designed to streamline various aspects of the game, improving user satisfaction and overall enjoyment.

eos-airdrops

This repository contains a list of EOS airdrops. Airdrops are a way for projects to distribute tokens to their community. They can be used to reward early adopters, promote the project, or raise funds. This repository includes airdrops for a variety of projects, including both new and established projects.

Discord-AI-Chatbot

Discord AI Chatbot is a versatile tool that seamlessly integrates into your Discord server, offering a wide range of capabilities to enhance your communication and engagement. With its advanced language model, the bot excels at imaginative generation, providing endless possibilities for creative expression. Additionally, it offers secure credential management, ensuring the privacy of your data. The bot's hybrid command system combines the best of slash and normal commands, providing flexibility and ease of use. It also features mention recognition, ensuring prompt responses whenever you mention it or use its name. The bot's message handling capabilities prevent confusion by recognizing when you're replying to others. You can customize the bot's behavior by selecting from a range of pre-existing personalities or creating your own. The bot's web access feature unlocks a new level of convenience, allowing you to interact with it from anywhere. With its open-source nature, you have the freedom to modify and adapt the bot to your specific needs.

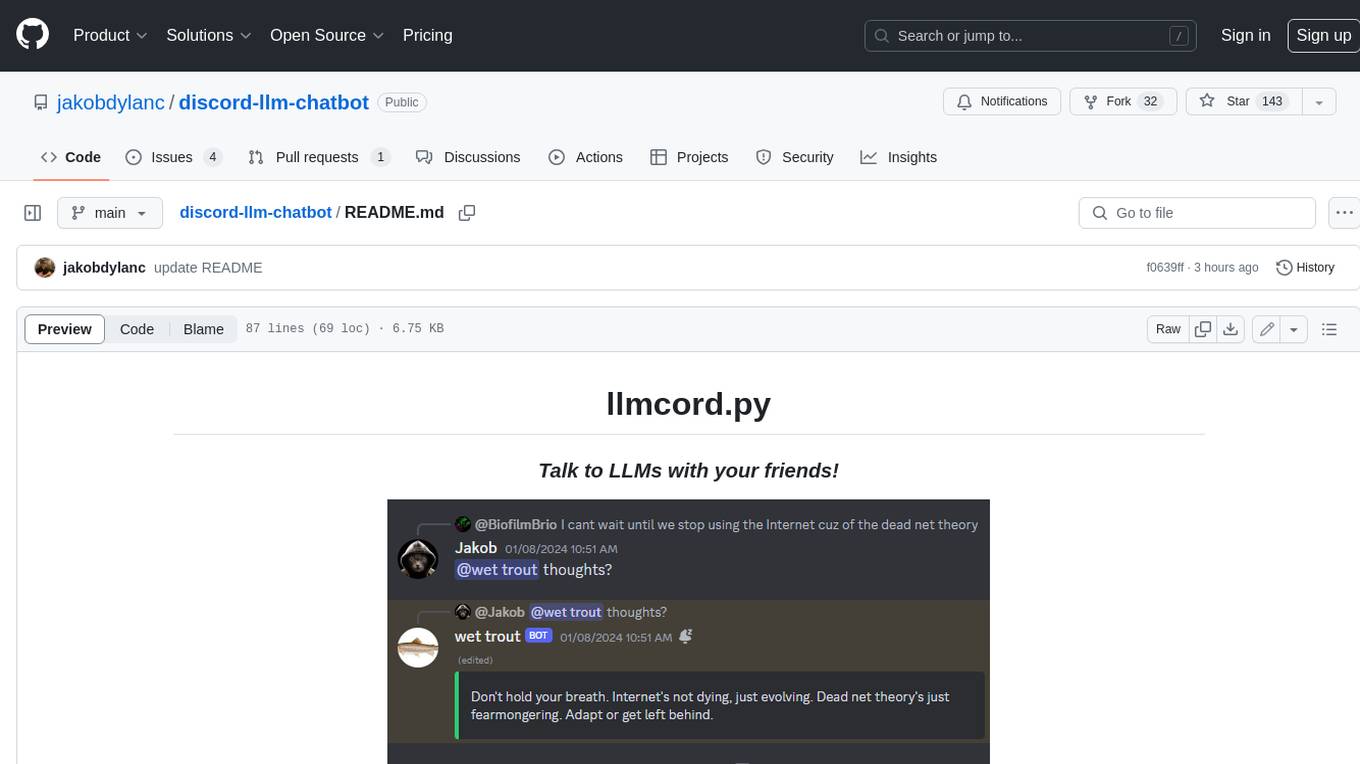

discord-llm-chatbot

llmcord.py enables collaborative LLM prompting in your Discord server. It works with practically any LLM, remote or locally hosted. ### Features ### Reply-based chat system Just @ the bot to start a conversation and reply to continue. Build conversations with reply chains! You can do things like: - Build conversations together with your friends - "Rewind" a conversation simply by replying to an older message - @ the bot while replying to any message in your server to ask a question about it Additionally: - Back-to-back messages from the same user are automatically chained together. Just reply to the latest one and the bot will see all of them. - You can seamlessly move any conversation into a thread. Just create a thread from any message and @ the bot inside to continue. ### Choose any LLM Supports remote models from OpenAI API, Mistral API, Anthropic API and many more thanks to LiteLLM. Or run a local model with ollama, oobabooga, Jan, LM Studio or any other OpenAI compatible API server. ### And more: - Supports image attachments when using a vision model - Customizable system prompt - DM for private access (no @ required) - User identity aware (OpenAI API only) - Streamed responses (turns green when complete, automatically splits into separate messages when too long, throttled to prevent Discord ratelimiting) - Displays helpful user warnings when appropriate (like "Only using last 20 messages", "Max 5 images per message", etc.) - Caches message data in a size-managed (no memory leaks) and per-message mutex-protected (no race conditions) global dictionary to maximize efficiency and minimize Discord API calls - Fully asynchronous - 1 Python file, ~200 lines of code

discourse-ai

Discourse AI is a plugin for the Discourse forum software that uses artificial intelligence to improve the user experience. It can automatically generate content, moderate posts, and answer questions. This can free up moderators and administrators to focus on other tasks, and it can help to create a more engaging and informative community.

MukeshRobot

MukeshRobot is a Telegram group controller bot written in Python. It is designed to help group administrators manage their groups more effectively. The bot can perform a variety of tasks, including: - Welcoming new members - Banning spammers - Deleting inappropriate messages - Managing group settings - Sending announcements - Playing games MukeshRobot is easy to set up and use. Simply add the bot to your group and give it administrator privileges. The bot will then automatically start performing its tasks. You can also customize the bot's behavior by editing the config file. MukeshRobot is a powerful tool that can help you keep your Telegram groups clean and organized. It is a must-have for any group administrator.

galxe-aio

Galxe AIO is a versatile tool designed to automate various tasks on social media platforms like Twitter, email, and Discord. It supports tasks such as following, retweeting, liking, and quoting on Twitter, as well as solving quizzes, submitting surveys, and more. Users can link their Twitter accounts, email accounts (IMAP or mail3.me), and Discord accounts to the tool to streamline their activities. Additionally, the tool offers features like claiming rewards, quiz solving, submitting surveys, and managing referral links and account statistics. It also supports different types of rewards like points, mystery boxes, gas-less OATs, gas OATs and NFTs, and participation in raffles. The tool provides settings for managing EVM wallets, proxies, twitters, emails, and discords, along with custom configurations in the `config.toml` file. Users can run the tool using Python 3.11 and install dependencies using `pip` and `playwright`. The tool generates results and logs in specific folders and allows users to donate using TRC-20 or ERC-20 tokens.