Pixelle-MCP

An Open-Source Multimodal AIGC Solution based on ComfyUI + MCP + LLM https://pixelle.ai

Stars: 615

Pixelle-MCP is an open-source multimodal AIGC solution that converts ComfyUI workflows into MCP tools with zero code, so that LLMs can drive ComfyUI to generate and convert text, images, sound/speech, and video. It combines an MCP server, a Chainlit-based web chat interface, and a file service in a single application, supports multiple LLM providers (including OpenAI, Ollama, Gemini, DeepSeek, Claude, and Qwen), and can be installed from PyPI, run from the CLI, or deployed with Docker.

README:


✨ An AIGC solution based on the MCP protocol, seamlessly converting ComfyUI workflows into MCP tools with zero code, empowering LLM and ComfyUI integration.

https://github.com/user-attachments/assets/65422cef-96f9-44fe-a82b-6a124674c417

- ✅ 2025-09-03: Refactored the architecture from three services into a single unified application; added CLI tool support; published to PyPI

- ✅ 2025-08-12: Integrated the LiteLLM framework, adding multi-model support for Gemini, DeepSeek, Claude, Qwen, and more

- ✅ 🔄 Full-modal Support: Supports TISV (Text, Image, Sound/Speech, Video) full-modal conversion and generation

- ✅ 🧩 ComfyUI Ecosystem: Built on ComfyUI, inheriting all capabilities from the open ComfyUI ecosystem

- ✅ 🔧 Zero-code Development: Defines and implements the Workflow-as-MCP Tool solution, enabling zero-code development and dynamic addition of new MCP Tools

- ✅ 🗄️ MCP Server: Based on the MCP protocol, supporting integration with any MCP client (including but not limited to Cursor, Claude Desktop, etc.)

- ✅ 🌐 Web Interface: Developed based on the Chainlit framework, inheriting Chainlit's UI controls and supporting integration with more MCP Servers

- ✅ 📦 One-click Deployment: Supports PyPI installation, CLI commands, Docker and other deployment methods, ready to use out of the box

- ✅ ⚙️ Simplified Configuration: Configured entirely through environment variables, keeping setup simple and intuitive

- ✅ 🤖 Multi-LLM Support: Supports multiple mainstream LLMs, including OpenAI, Ollama, Gemini, DeepSeek, Claude, Qwen, and more

Pixelle MCP adopts a unified architecture design, integrating MCP server, web interface, and file services into one application, providing:

- 🌐 Web Interface: Chainlit-based chat interface supporting multimodal interaction

- 🔌 MCP Endpoint: For external MCP clients (such as Cursor, Claude Desktop) to connect

- 📁 File Service: Handles file upload, download, and storage

- 🛠️ Workflow Engine: Automatically converts ComfyUI workflows into MCP tools

Choose the deployment method that best suits your needs, from simple to complex:

💡 Zero configuration startup, perfect for quick experience and testing

# Start with one command, no system installation required

uvx pixelle@latest

# Install to system

pip install -U pixelle

# Start service

pixelle

After startup, it will automatically enter the configuration wizard to guide you through ComfyUI connection and LLM configuration.

💡 Supports custom workflows and secondary development

git clone https://github.com/AIDC-AI/Pixelle-MCP.git

cd Pixelle-MCP

# Interactive mode (recommended)

uv run pixelle

📚 View Complete CLI Reference →

# Copy example workflows to data directory (run this in your desired project directory)

cp -r workflows/* ./data/custom_workflows/

💡 Suitable for production environments and containerized deployment

git clone https://github.com/AIDC-AI/Pixelle-MCP.git

cd Pixelle-MCP

# Create environment configuration file

cp .env.example .env

# Edit .env file to configure your ComfyUI address and LLM settings

# Start all services in background

docker compose up -d

# View logs

docker compose logs -f

Regardless of which method you use, after startup you can access the service at:

- 🌐 Web Interface: http://localhost:9004 (the default username and password are both dev; they can be changed after startup)

- 🔌 MCP Endpoint: http://localhost:9004/pixelle/mcp, for MCP clients such as Cursor and Claude Desktop to connect to

💡 Port Configuration: The default port is 9004; set the environment variable PORT=your_port to use a different one.
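As a rough illustration of the port setting, the sketch below derives both URLs from the PORT environment variable (falling back to 9004) and builds an MCP client entry that points at the endpoint. The `mcpServers`/`url` shape follows the JSON format that clients such as Cursor use for remote servers, but treat it as an assumption and check your client's documentation; the helper names and the `pixelle` server key are hypothetical.

```python
import json
import os
from typing import Mapping, Optional

DEFAULT_PORT = 9004  # default port stated in the text


def pixelle_urls(env: Optional[Mapping[str, str]] = None) -> dict:
    """Return the web UI and MCP endpoint URLs, honoring the PORT variable."""
    env = os.environ if env is None else env
    port = int(env.get("PORT", DEFAULT_PORT))
    base = f"http://localhost:{port}"
    return {"web": base, "mcp": f"{base}/pixelle/mcp"}


def mcp_client_entry(mcp_url: str, name: str = "pixelle") -> dict:
    """Build an mcpServers-style entry (assumed client config shape; key name is hypothetical)."""
    return {"mcpServers": {name: {"url": mcp_url}}}


if __name__ == "__main__":
    urls = pixelle_urls()
    print("Web interface:", urls["web"])
    print(json.dumps(mcp_client_entry(urls["mcp"]), indent=2))
```

Passing `env` explicitly keeps the helper testable without touching the real process environment.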

On first startup, the system will automatically detect configuration status:

- 🔧 ComfyUI Connection: Ensure ComfyUI service is running at http://localhost:8188

- 🤖 LLM Configuration: Configure at least one LLM provider (OpenAI, Ollama, etc.)

- 📁 Workflow Directory: System will automatically create necessary directory structure

🆘 Need Help? Join community groups for support (see Community section below)

⚡ One workflow = One MCP Tool

- 📝 Build a workflow in ComfyUI for image Gaussian blur (Get it here), then set the LoadImage node's title to $image.image!

- 📤 Export it as an API format file and rename it to i_blur.json. You can export it yourself or use our pre-exported version (Get it here)

- 📋 Copy the exported API workflow file (it must be in API format), paste it into the web interface, and let the LLM add this Tool

- ✨ After sending, the LLM will automatically convert this workflow into an MCP Tool

- 🎨 Now, refresh the page and send any image to have the LLM apply Gaussian blur to it

The steps are the same as above; only the workflow differs (Download workflow: UI format and API format)

The system supports ComfyUI workflows. Just design your workflow in the canvas and export it as API format. Use special syntax in node titles to define parameters and outputs.

In the ComfyUI canvas, double-click the node title to edit, and use the following DSL syntax to define parameters:

$<param_name>.[~]<field_name>[!][:<description>]

- param_name: The parameter name for the generated MCP tool function

- ~: Optional, indicates URL parameter upload processing, returns relative path

- field_name: The corresponding input field in the node

- !: Indicates this parameter is required

- description: Description of the parameter
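The title syntax is regular enough to parse with a single regular expression. The following is a minimal sketch, not Pixelle-MCP's actual parser: `parse_title`, `ParamSpec`, and the decision to treat `$output.` titles as reserved for output markers are illustrative assumptions.

```python
import re
from dataclasses import dataclass
from typing import Optional

# $<param_name>.[~]<field_name>[!][:<description>]
TITLE_RE = re.compile(
    r"^\$(?P<param>\w+)\."   # $param_name.
    r"(?P<upload>~)?"        # optional ~ : download the URL and upload it to ComfyUI
    r"(?P<field>\w+)"        # field_name in the node's inputs
    r"(?P<required>!)?"      # optional ! : parameter is required
    r"(?::(?P<desc>.*))?$"   # optional :description (rest of the title)
)


@dataclass
class ParamSpec:
    param_name: str
    field_name: str
    required: bool
    upload_url: bool
    description: Optional[str]


def parse_title(title: str) -> Optional[ParamSpec]:
    """Parse a node title written in the parameter DSL; return None otherwise."""
    match = TITLE_RE.match(title.strip())
    # Assumption: "$output." titles mark outputs, not input parameters.
    if match is None or match.group("param") == "output":
        return None
    return ParamSpec(
        param_name=match.group("param"),
        field_name=match.group("field"),
        required=match.group("required") is not None,
        upload_url=match.group("upload") is not None,
        description=(match.group("desc") or "").strip() or None,
    )


for title in ["$image.image!:Input image URL",
              "$image.~image!:Input image URL",
              "$width.width:Image width, default 512",
              "KSampler"]:
    print(title, "->", parse_title(title))
```

Because the description group takes the rest of the title, a description may itself contain colons; an ordinary node title such as KSampler simply yields None.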

Required parameter example:

- Set the LoadImage node title to: $image.image!:Input image URL

- Meaning: Creates a required parameter named image, mapped to the node's image field

URL upload processing example:

- Set any node title to: $image.~image!:Input image URL

- Meaning: Creates a required parameter named image; the system will automatically download the URL, upload it to ComfyUI, and return the relative path

📝 Note: LoadImage, VHS_LoadAudioUpload, VHS_LoadVideo and similar nodes have this functionality built in, so there is no need to add the ~ marker

Optional parameter example:

- Set the EmptyLatentImage node title to: $width.width:Image width, default 512

- Meaning: Creates an optional parameter named width, mapped to the node's width field, with a default value of 512

The system automatically infers parameter types based on the current value of the node field:

- 🔢 int: Integer values (e.g. 512, 1024)

- 📊 float: Floating-point values (e.g. 1.5, 3.14)

- ✅ bool: Boolean values (e.g. true, false)

- 📝 str: String values (default type)
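A minimal sketch of the type rule above, assuming the current values are read from the exported API-format JSON (so JSON true/false arrive as Python booleans). `infer_param_type` is a hypothetical helper, not part of Pixelle-MCP.

```python
import json
from typing import Any


def infer_param_type(current_value: Any) -> str:
    """Map a node field's current value to a parameter type name (hypothetical helper)."""
    # bool first: in Python, True/False are also instances of int.
    if isinstance(current_value, bool):
        return "bool"
    if isinstance(current_value, int):
        return "int"
    if isinstance(current_value, float):
        return "float"
    # Everything else falls back to the default type.
    return "str"


# Example field values as they might appear in an API-format export.
fields = json.loads('{"width": 512, "cfg": 3.14, "tiled": false, "prompt": "a cat"}')
for name, value in fields.items():
    print(name, infer_param_type(value))
```

The ordering of the checks is the main design point: testing int before bool would silently turn every boolean field into an integer parameter.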

The system will automatically detect the following common output nodes:

- 🖼️ SaveImage - Image save node

- 🎬 SaveVideo - Video save node

- 🔊 SaveAudio - Audio save node

- 📹 VHS_SaveVideo - VHS video save node

- 🎵 VHS_SaveAudio - VHS audio save node

To mark an output explicitly (usually needed when a workflow has multiple outputs), use $output.var_name in any node title:

- Set the node title to: $output.result

- The system will use this node's output as the tool's return value
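To illustrate how output detection could work on an exported workflow, here is a sketch that scans an API-format JSON object, in which each node id maps to a dict with `class_type`, `inputs`, and (in recent ComfyUI exports) `_meta.title`. Giving explicit `$output.<name>` titles precedence over the known save-node classes is an assumption made for this example, as is the function name `find_outputs`.

```python
from typing import Dict

# Save nodes the text lists as automatically detected outputs.
KNOWN_OUTPUT_CLASSES = {"SaveImage", "SaveVideo", "SaveAudio", "VHS_SaveVideo", "VHS_SaveAudio"}
OUTPUT_PREFIX = "$output."


def find_outputs(workflow: Dict[str, dict]) -> Dict[str, str]:
    """Map output names to node ids in an API-format workflow.

    Assumption: explicit "$output.<name>" titles win; if there are none,
    known save nodes are used and keyed by their node id.
    """
    explicit: Dict[str, str] = {}
    detected: Dict[str, str] = {}
    for node_id, node in workflow.items():
        title = node.get("_meta", {}).get("title", "")
        if title.startswith(OUTPUT_PREFIX):
            explicit[title[len(OUTPUT_PREFIX):]] = node_id
        elif node.get("class_type") in KNOWN_OUTPUT_CLASSES:
            detected[node_id] = node_id
    return explicit or detected


# A tiny blur workflow in API format: load -> blur -> save.
workflow = {
    "1": {"class_type": "LoadImage", "inputs": {"image": "cat.png"},
          "_meta": {"title": "$image.image!"}},
    "2": {"class_type": "ImageBlur", "inputs": {"image": ["1", 0], "blur_radius": 5, "sigma": 1.0},
          "_meta": {"title": "Image Blur"}},
    "3": {"class_type": "SaveImage", "inputs": {"images": ["2", 0], "filename_prefix": "blur"},
          "_meta": {"title": "Save Image"}},
}
print(find_outputs(workflow))
```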

You can add a node titled MCP in the workflow to provide a tool description:

- Add a String (Multiline) or similar text node (must have a single string property, and the node field should be one of: value, text, string)

- Set the node title to: MCP

- Enter a detailed tool description in the value field
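Reading the tool description back out of a workflow can be sketched the same way. This assumes the API-format layout (`_meta.title` for the node title, `inputs` for its fields) and checks the value, text, and string fields in that order; `find_tool_description` is a hypothetical name, and the node class in the example is illustrative.

```python
from typing import Optional

# Field names the text allows for the description node, checked in this order.
DESCRIPTION_FIELDS = ("value", "text", "string")


def find_tool_description(workflow: dict) -> Optional[str]:
    """Return the text of the node titled "MCP", or None if there is none."""
    for node in workflow.values():
        if node.get("_meta", {}).get("title", "").strip() != "MCP":
            continue
        inputs = node.get("inputs", {})
        for field in DESCRIPTION_FIELDS:
            value = inputs.get(field)
            if isinstance(value, str):
                return value.strip()
    return None


# Example description node; the class_type shown is illustrative.
workflow = {
    "9": {"class_type": "PrimitiveStringMultiline",
          "inputs": {"value": "Apply a Gaussian blur to the input image."},
          "_meta": {"title": "MCP"}},
}
print(find_tool_description(workflow))
```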

- 🔒 Parameter Validation: Optional parameters (without !) must have default values set in the node

- 🔗 Node Connections: Fields already connected to other nodes will not be parsed as parameters

- 🏷️ Tool Naming: The exported file name is used as the tool name, so use meaningful English names

- 📋 Detailed Descriptions: Provide detailed parameter descriptions for better user experience

- 🎯 Export Format: Must export as API format, do not export as UI format
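Several of these notes can be checked mechanically before a workflow is added. The sketch below assumes the API-format layout, in which a field connected to another node is stored as a `[source_node_id, output_index]` pair, while a UI-format export instead carries top-level `nodes` and `links` keys. It flags missing or linked fields and optional parameters without a default, and derives the tool name from the file name. All helper names are hypothetical, and the checks approximate the rules above rather than reproduce Pixelle-MCP's own validation.

```python
import re
from pathlib import Path
from typing import List

# Compact form of the title DSL: $param.[~]field[!][:description]
PARAM_RE = re.compile(r"^\$(\w+)\.~?(\w+)(!)?(?::.*)?$")


def tool_name_from_file(path: str) -> str:
    """The exported file name (without .json) becomes the tool name."""
    return Path(path).stem


def looks_like_api_format(data: dict) -> bool:
    """API format maps node ids to dicts with class_type; UI format does not."""
    return bool(data) and all(isinstance(n, dict) and "class_type" in n for n in data.values())


def is_link(value) -> bool:
    """In API format, a connected input is a [source_node_id, output_index] pair."""
    return isinstance(value, list) and len(value) == 2 and isinstance(value[1], int)


def check_workflow(workflow: dict) -> List[str]:
    """Return human-readable problems found in the parameter titles."""
    problems = []
    for node_id, node in workflow.items():
        match = PARAM_RE.match(node.get("_meta", {}).get("title", ""))
        if match is None or match.group(1) == "output":
            continue
        param, field, required = match.group(1), match.group(2), match.group(3) is not None
        inputs = node.get("inputs", {})
        if field not in inputs:
            problems.append(f"{param}: node {node_id} has no field '{field}'")
        elif is_link(inputs[field]):
            problems.append(f"{param}: field '{field}' is connected to another node and will not be exposed")
        elif not required and inputs[field] in (None, ""):
            problems.append(f"{param}: optional parameter needs a default value")
    return problems
```

For example, `tool_name_from_file("i_blur.json")` gives `i_blur`, and a `$height.height` title on a field wired to another node is reported instead of silently becoming a parameter.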

Scan the QR codes below to join our communities for latest updates and technical support:

| Discord Community | WeChat Group |
|---|---|

We welcome all forms of contribution! Whether you're a developer, designer, or user, you can participate in the project in the following ways:

- 📋 Submit bug reports on the Issues page
  - 🔍 Please search for similar issues before submitting
  - 📝 Describe the reproduction steps and environment in detail

- 🚀 Submit feature requests in Issues
  - 💭 Describe the feature you want and its use case
  - 🎯 Explain how it improves user experience

- 🍴 Fork this repo to your GitHub account

- 🌿 Create a feature branch: git checkout -b feature/your-feature-name

- 💻 Develop and add corresponding tests

- 📝 Commit changes: git commit -m "feat: add your feature"

- 📤 Push to your repo: git push origin feature/your-feature-name

- 🔄 Create a Pull Request to the main repo

- 🐍 Python code follows PEP 8 style guide

- 📖 Add appropriate documentation and comments for new features

- 📦 Share your ComfyUI workflows with the community

- 🛠️ Submit tested workflow files

- 📚 Add usage instructions and examples for workflows

❤️ Sincere thanks to the following organizations, projects, and teams for supporting the development and implementation of this project.

This project is released under the MIT License (LICENSE, SPDX-License-identifier: MIT).


Alternative AI tools for Pixelle-MCP

Similar Open Source Tools


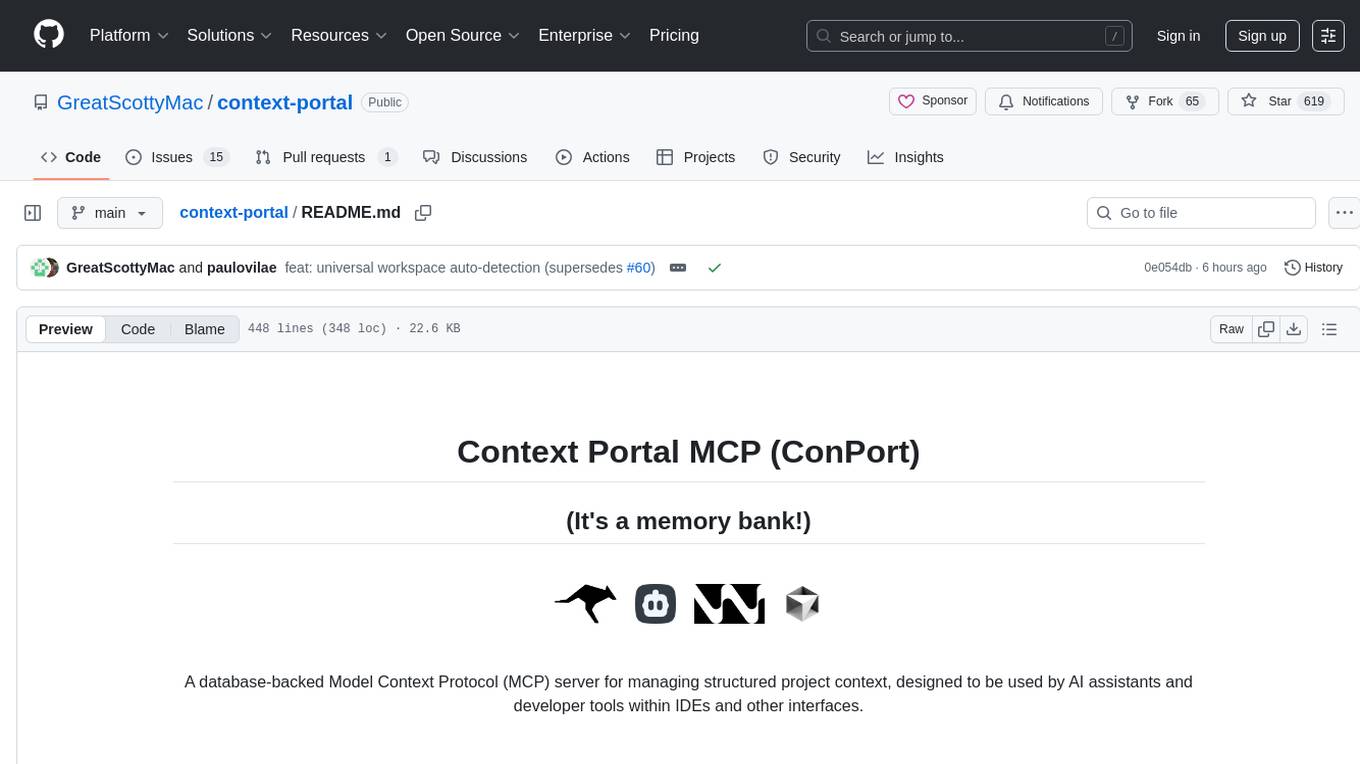

context-portal

Context-portal is a versatile tool for managing and visualizing data in a collaborative environment. It provides a user-friendly interface for organizing and sharing information, making it easy for teams to work together on projects. With features such as customizable dashboards, real-time updates, and seamless integration with popular data sources, Context-portal streamlines the data management process and enhances productivity. Whether you are a data analyst, project manager, or team leader, Context-portal offers a comprehensive solution for optimizing workflows and driving better decision-making.

trubrics-sdk

Trubrics-sdk is a software development kit designed to facilitate the integration of analytics features into applications. It provides a set of tools and functionalities that enable developers to easily incorporate analytics capabilities, such as data collection, analysis, and reporting, into their software products. The SDK streamlines the process of implementing analytics solutions, allowing developers to focus on building and enhancing their applications' functionality and user experience. By leveraging trubrics-sdk, developers can quickly and efficiently integrate robust analytics features, gaining valuable insights into user behavior and application performance.

GrowthHacking-Notes

GrowthHacking-Notes is a repository containing detailed notes, strategies, and resources related to growth hacking. It provides valuable insights and tips for individuals and businesses looking to accelerate their growth through innovative marketing techniques and data-driven strategies. The repository covers various topics such as user acquisition, retention, conversion optimization, and more, making it a comprehensive resource for anyone interested in growth hacking.

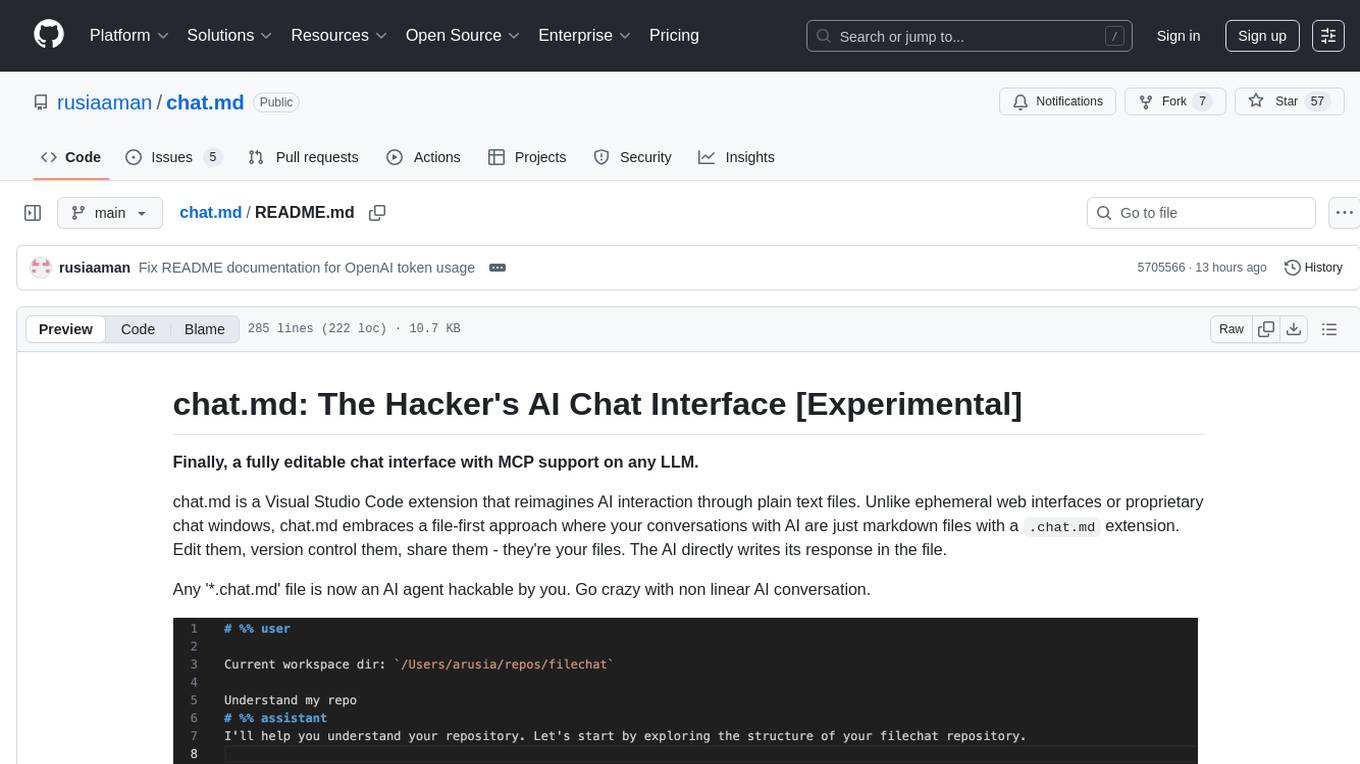

chat.md

This repository contains a chatbot tool that utilizes natural language processing to interact with users. The tool is designed to understand and respond to user input in a conversational manner, providing information and assistance. It can be integrated into various applications to enhance user experience and automate customer support. The chatbot tool is user-friendly and customizable, making it suitable for businesses looking to improve customer engagement and streamline communication.

duckduckgo-ai-chat

This repository contains a chatbot tool powered by AI technology. The chatbot is designed to interact with users in a conversational manner, providing information and assistance on various topics. Users can engage with the chatbot to ask questions, seek recommendations, or simply have a casual conversation. The AI technology behind the chatbot enables it to understand natural language inputs and provide relevant responses, making the interaction more intuitive and engaging. The tool is versatile and can be customized for different use cases, such as customer support, information retrieval, or entertainment purposes. Overall, the chatbot offers a user-friendly and interactive experience, leveraging AI to enhance communication and engagement.

Conversational-Azure-OpenAI-Accelerator

The Conversational Azure OpenAI Accelerator is a tool designed to provide rapid, no-cost custom demos tailored to customer use cases, from internal HR/IT to external contact centers. It focuses on top use cases of GenAI conversation and summarization, plus live backend data integration. The tool automates conversations across voice and text channels, providing a valuable way to save money and improve customer and employee experience. By combining Azure OpenAI + Cognitive Search, users can efficiently deploy a ChatGPT experience using web pages, knowledge base articles, and data sources. The tool enables simultaneous deployment of conversational content to chatbots, IVR, voice assistants, and more in one click, eliminating the need for in-depth IT involvement. It leverages Microsoft's advanced AI technologies, resulting in a conversational experience that can converse in human-like dialogue, respond intelligently, and capture content for omni-channel unified analytics.

airstate

AirState is a straightforward software development kit that enables users to integrate real-time collaboration functionalities into their web applications. With its user-friendly interface and robust capabilities, AirState simplifies the process of incorporating live collaboration features, making it an ideal choice for developers seeking to enhance the interactive elements of their projects. The SDK offers a seamless solution for creating engaging and interactive web experiences, allowing users to easily implement real-time collaboration tools without the need for extensive coding knowledge or complex configurations. By leveraging AirState, developers can streamline the development process and deliver dynamic web applications that facilitate real-time communication and collaboration among users.

onlook

Onlook is a web scraping tool that allows users to extract data from websites easily and efficiently. It provides a user-friendly interface for creating web scraping scripts and supports various data formats for exporting the extracted data. With Onlook, users can automate the process of collecting information from multiple websites, saving time and effort. The tool is designed to be flexible and customizable, making it suitable for a wide range of web scraping tasks.

Companion

Companion is a software tool designed to provide support and enhance development. It offers various features and functionalities to assist users in their projects and tasks. The tool aims to be user-friendly and efficient, helping individuals and teams to streamline their workflow and improve productivity.

baibot

Baibot is a versatile chatbot framework designed to simplify the process of creating and deploying chatbots. It provides a user-friendly interface for building custom chatbots with various functionalities such as natural language processing, conversation flow management, and integration with external APIs. Baibot is highly customizable and can be easily extended to suit different use cases and industries. With Baibot, developers can quickly create intelligent chatbots that can interact with users in a seamless and engaging manner, enhancing user experience and automating customer support processes.

design-studio

Tiledesk Design Studio is an open-source, no-code development platform for creating chatbots and conversational apps. It offers a user-friendly, drag-and-drop interface with pre-ready actions and integrations. The platform combines the power of LLM/GPT AI with a flexible 'graph' approach for creating conversations and automations with ease. Users can automate customer conversations, prototype conversations, integrate ChatGPT, enhance user experience with multimedia, provide personalized product recommendations, set conditions, use random replies, connect to other tools like HubSpot CRM, integrate with WhatsApp, send emails, and seamlessly enhance existing setups.

feeds.fun

Feeds Fun is a self-hosted news reader tool that automatically assigns tags to news entries. Users can create rules to score news based on tags, filter and sort news as needed, and track read news. The tool offers multi/single-user support, feeds management, and various features for personalized news consumption. Users can access the tool's backend as the ffun package on PyPI and the frontend as the feeds-fun package on NPM. Feeds Fun requires setting up OpenAI or Gemini API keys for full tag generation capabilities. The tool uses tag processors to detect tags for news entries, with options for simple and complex processors. Feeds Fun primarily relies on LLM tag processors from OpenAI and Google for tag generation.

open-webui-tools

Open WebUI Tools Collection is a set of tools for structured planning, arXiv paper search, Hugging Face text-to-image generation, prompt enhancement, and multi-model conversations. It enhances LLM interactions with academic research, image generation, and conversation management. Tools include arXiv Search Tool and Hugging Face Image Generator. Function Pipes like Planner Agent offer autonomous plan generation and execution. Filters like Prompt Enhancer improve prompt quality. Installation and configuration instructions are provided for each tool and pipe.

InvokeAI

InvokeAI is a leading creative engine built to empower professionals and enthusiasts alike. Generate and create stunning visual media using the latest AI-driven technologies. InvokeAI offers an industry leading Web Interface, interactive Command Line Interface, and also serves as the foundation for multiple commercial products.

proxyless-llm-websearch

Proxyless-LLM-WebSearch is a tool that enables users to perform large language model-based web search without the need for proxies. It leverages state-of-the-art language models to provide accurate and efficient web search results. The tool is designed to be user-friendly and accessible for individuals looking to conduct web searches at scale. With Proxyless-LLM-WebSearch, users can easily search the web using natural language queries and receive relevant results in a timely manner. This tool is particularly useful for researchers, data analysts, content creators, and anyone interested in leveraging advanced language models for web search tasks.

For similar tasks

intro-llm-rag

This repository serves as a comprehensive guide for technical teams interested in developing conversational AI solutions using Retrieval-Augmented Generation (RAG) techniques. It covers theoretical knowledge and practical code implementations, making it suitable for individuals with a basic technical background. The content includes information on large language models (LLMs), transformers, prompt engineering, embeddings, vector stores, and various other key concepts related to conversational AI. The repository also provides hands-on examples for two different use cases, along with implementation details and performance analysis.

LLM-Viewer

LLM-Viewer is a tool for visualizing large language models (LLMs) and analyzing their performance on different hardware platforms. It enables network-wise analysis, considering factors such as peak memory consumption and total inference time cost. With LLM-Viewer, users can gain valuable insights into LLM inference and performance optimization. The tool can be used in a web browser or as a command line interface (CLI) for easy configuration and visualization. The ongoing project aims to enhance features like showing tensor shapes, expanding hardware platform compatibility, and supporting more LLMs with manual model graph configuration.

llm-colosseum

llm-colosseum is a tool designed to evaluate large language models (LLMs) in real time by making them fight each other in Street Fighter III. The tool assesses LLMs based on speed, strategic thinking, adaptability, out-of-the-box thinking, and resilience. It provides a benchmark for LLMs to understand their environment and take context-based actions. Users can analyze the performance of different LLMs through ELO rankings and win rate matrices. The tool allows users to run experiments, test different LLM models, and customize prompts for LLM interactions. It offers installation instructions, test mode options, logging configurations, and the ability to run the tool with local models. Users can also contribute their own LLM models for evaluation and ranking.

eureka-ml-insights

The Eureka ML Insights Framework is a repository containing code designed to help researchers and practitioners run reproducible evaluations of generative models efficiently. Users can define custom pipelines for data processing, inference, and evaluation, as well as utilize pre-defined evaluation pipelines for key benchmarks. The framework provides a structured approach to conducting experiments and analyzing model performance across various tasks and modalities.


trae-agent

Trae-agent is a Python library for building and training reinforcement learning agents. It provides a simple and flexible framework for implementing various reinforcement learning algorithms and experimenting with different environments. With Trae-agent, users can easily create custom agents, define reward functions, and train them on a variety of tasks. The library also includes utilities for visualizing agent performance and analyzing training results, making it a valuable tool for both beginners and experienced researchers in the field of reinforcement learning.

dataset-viewer

Dataset Viewer is a modern, high-performance tool built with Tauri, React, and TypeScript, designed to handle massive datasets from multiple sources with efficient streaming for large files (100GB+) and lightning-fast search capabilities. It supports instant large file opening, real-time search, direct archive preview, multi-protocol and multi-format support, and features a modern interface with dark/light themes and responsive design. The tool is perfect for data scientists, log analysis, archive management, remote access, and performance-critical tasks.

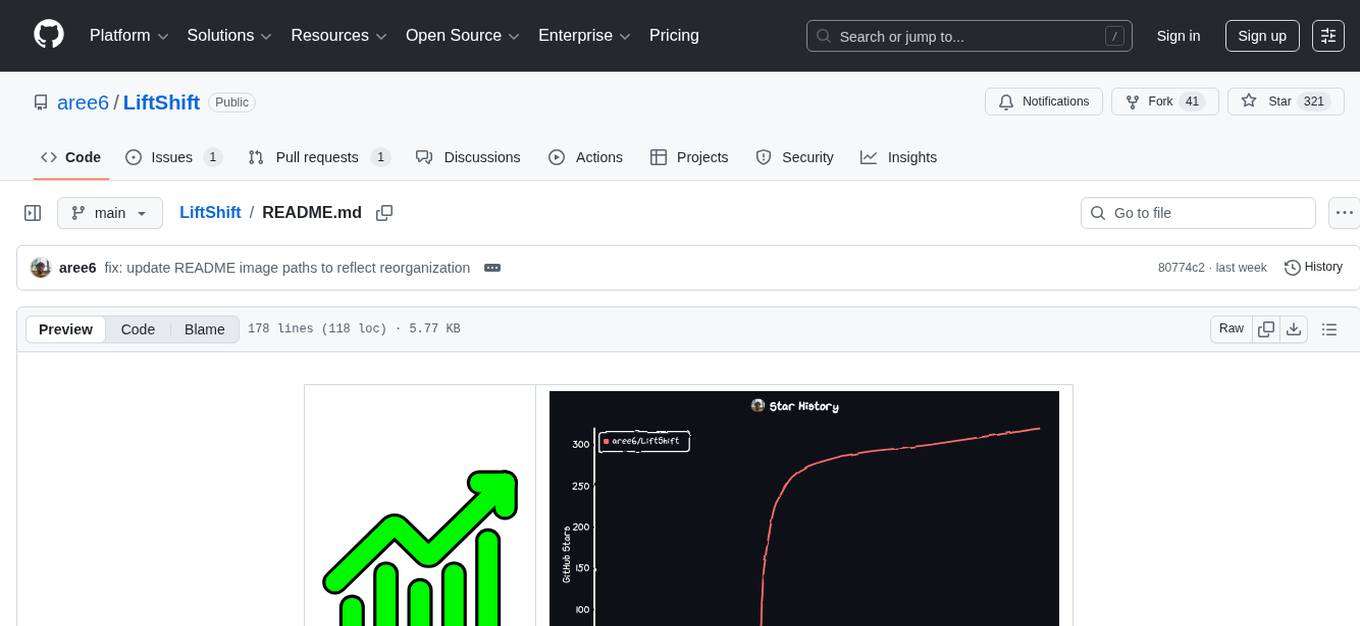

LiftShift

LiftShift is a web application that provides analytics and tracking features for fitness enthusiasts. Users can upload workout data, explore analytics dashboards, receive real-time feedback, and visualize workout history. The tool supports different body types and units, and offers insights on workout trends and performance. LiftShift also detects session goals and provides set-by-set feedback to enhance workout experience. With local storage support and various theme modes, users can easily track their fitness progress and customize their experience.

For similar jobs

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

Twitter-Insight-LLM

This project enables you to fetch liked tweets from Twitter (using Selenium), save them to JSON and Excel files, and perform initial data analysis and image captioning. This is part of the initial steps for a larger personal project involving Large Language Models (LLMs).

AISuperDomain

Aila Desktop Application is a powerful tool that integrates multiple leading AI models into a single desktop application. It allows users to interact with various AI models simultaneously, providing diverse responses and insights to their inquiries. With its user-friendly interface and customizable features, Aila empowers users to engage with AI seamlessly and efficiently. Whether you're a researcher, student, or professional, Aila can enhance your AI interactions and streamline your workflow.

ChatGPT-On-CS

This project is an intelligent dialogue customer service tool based on a large model, which supports access to platforms such as WeChat, Qianniu, Bilibili, Douyin Enterprise, Douyin, Doudian, Weibo chat, Xiaohongshu professional account operation, Xiaohongshu, Zhihu, etc. You can choose GPT3.5/GPT4.0/ Lazy Treasure Box (more platforms will be supported in the future), which can process text, voice and pictures, and access external resources such as operating systems and the Internet through plug-ins, and support enterprise AI applications customized based on their own knowledge base.

obs-localvocal

LocalVocal is a live-streaming AI assistant plugin for OBS that allows you to transcribe audio speech into text and perform various language processing functions on the text using AI / LLMs (Large Language Models). It's privacy-first, with all data staying on your machine, and requires no GPU, cloud costs, network, or downtime.