obsidian-NotEMD

An easy way to create your own knowledge base! Notemd enhances your Obsidian workflow by integrating with various Large Language Models (LLMs) to process your notes, automatically generate wiki-links for key concepts, create corresponding concept notes, perform web research, and more.

Stars: 60

Obsidian-NotEMD (Notemd) is a plugin for the Obsidian note-taking app that uses Large Language Models to process notes. It adds wiki-links for key concepts, creates concept notes, translates content, performs web research and summarization, generates content from note titles, and summarizes notes as Mermaid diagrams. It works with cloud providers such as OpenAI and Anthropic as well as local servers such as Ollama and LM Studio.

README:

==================================================

Notemd

==================================================

AI-Powered Multilingual Knowledge Enhancement

==================================================

An easy way to create your own knowledge base!

Notemd enhances your Obsidian workflow by integrating with various Large Language Models (LLMs) to process your multilingual notes, automatically generate wiki-links for key concepts, create corresponding concept notes, perform web research, and more, helping you build powerful knowledge graphs.

Version: 1.4.0

- Quick Start

- Features

- Installation

- Configuration

- Usage Guide

- Supported LLM Providers

- Troubleshooting

- Contributing

- License

- Install & Enable: Get the plugin from the Obsidian Marketplace.

- Configure LLM: Go to Settings -> Notemd, select your LLM provider (like OpenAI or a local one like Ollama), and enter your API key/URL.

- Open Sidebar: Click the Notemd wand icon in the left ribbon to open the sidebar.

- Process a Note: Open any note and click "Process File (Add Links)" in the sidebar to automatically add `[[wiki-links]]` to key concepts.

That's it! Explore the settings to unlock more features like web research, translation, and content generation.

- Multi-LLM Support: Connect to various cloud and local LLM providers (see Supported LLM Providers).

- Smart Chunking: Automatically splits large documents into manageable chunks based on word count for processing.

- Content Preservation: Aims to maintain original formatting while adding structure and links.

- Progress Tracking: Real-time updates via the Notemd Sidebar or a progress modal.

- Cancellable Operations: Cancel any processing task (single or batch) initiated from the sidebar via its dedicated cancel button. Command palette operations use a modal which can also be cancelled.

- Multi-Model Configuration: Use different LLM providers and specific models for different tasks (Add Links, Research, Generate Title, Translate) or use a single provider for all.

- Stable API Calls (Retry Logic): Optionally enable automatic retries for failed LLM API calls with configurable interval and attempt limits.

- Automatic Wiki-Linking: Identifies and adds `[[wiki-links]]` to core concepts within your processed notes based on LLM output.

- Concept Note Creation (Optional & Customizable): Automatically creates new notes for discovered concepts in a specified vault folder.

- Customizable Output Paths: Configure separate relative paths within your vault for saving processed files and newly created concept notes.

- Customizable Output Filenames (Add Links): Optionally overwrite the original file or use a custom suffix/replacement string instead of the default `_processed.md` when processing files for links.

- Link Integrity Maintenance: Basic handling for updating links when notes are renamed or deleted within the vault.

- AI-Powered Translation:

  - Translate note content using the configured LLM.

  - Large File Support: Automatically splits large files into smaller chunks based on the `Chunk word count` setting before sending them to the LLM. The translated chunks are then seamlessly combined back into a single document.

  - Supports translation between multiple languages.

  - Customizable target language in the settings or in the UI.

  - Automatically opens the translated text to the right of the original text for easy reading.

- Web Research & Summarization:

  - Perform web searches using Tavily (requires API key) or DuckDuckGo (experimental).

  - Summarize search results using the configured LLM.

  - The output language of the summary can be customized in the settings.

  - Append summaries to the current note.

  - Configurable token limit for research content sent to the LLM.

- Content Generation from Title:

  - Use the note title to generate initial content via LLM, replacing existing content.

  - Optional Research: Configure whether to perform web research (using the selected provider) to provide context for generation.

- Batch Content Generation from Titles: Generate content for all notes within a selected folder based on their titles (respects the optional research setting). Successfully processed files are moved to a configurable "complete" subfolder (e.g., `[foldername]_complete` or a custom name) to avoid reprocessing.

- Summarise as Mermaid diagram:

  - This feature allows you to summarize the content of a note into a Mermaid diagram.

  - The output language of the Mermaid diagram can be customized in the settings.

  - Mermaid Output Folder: Configure the folder where the generated Mermaid diagram files will be saved.

  - Translate Summarize to Mermaid Output: Optionally translate the generated Mermaid diagram content into the configured target language.

- Check for Duplicates in Current File: This command helps identify potential duplicate terms within the active file.

- Duplicate Detection: Basic check for duplicate words within the currently processed file's content (results logged to console).

- Check and Remove Duplicate Concept Notes: Identifies potential duplicate notes within the configured Concept Note Folder based on exact name matches, plurals, normalization, and single-word containment compared to notes outside the folder. The scope of the comparison (which notes outside the concept folder are checked) can be configured to the entire vault, specific included folders, or all folders excluding specific ones. Presents a detailed list with reasons and conflicting files, then prompts for confirmation before moving identified duplicates to system trash. Shows progress during deletion.

- Batch Mermaid Fix: Applies Mermaid and LaTeX syntax corrections (`refineMermaidBlocks` and `cleanupLatexDelimiters`) to all Markdown files within a user-selected folder.

- LLM Connection Test: Verify API settings for the active provider.
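The Smart Chunking and Large File Support items above describe splitting a document by word count, processing each chunk, and recombining the results. The following is a minimal sketch of that idea, not the plugin's actual code: the function names `split_into_chunks` and `process_in_chunks` are hypothetical, and packing whole paragraphs (split on blank lines) into each chunk is an assumption made here so that formatting survives the round trip.

```python
from typing import Callable


def split_into_chunks(text: str, chunk_word_count: int = 3000) -> list[str]:
    """Greedily pack blank-line-separated paragraphs into chunks.

    Assumption: a chunk is closed as soon as adding the next paragraph
    would push it past chunk_word_count words. A single paragraph longer
    than the limit is kept whole as its own chunk.
    """
    if chunk_word_count < 1:
        raise ValueError("chunk_word_count must be at least 1")
    chunks: list[str] = []
    current: list[str] = []
    current_words = 0
    for paragraph in text.split("\n\n"):
        words = len(paragraph.split())
        if current and current_words + words > chunk_word_count:
            chunks.append("\n\n".join(current))
            current, current_words = [], 0
        current.append(paragraph)
        current_words += words
    if current:
        chunks.append("\n\n".join(current))
    return chunks


def process_in_chunks(
    text: str,
    process: Callable[[str], str],
    chunk_word_count: int = 3000,
) -> str:
    """Run `process` (a stand-in for one LLM call) per chunk and rejoin."""
    chunks = split_into_chunks(text, chunk_word_count)
    return "\n\n".join(process(chunk) for chunk in chunks)
```

With this packing rule, joining the unprocessed chunks with blank lines reproduces the input text, and the number of chunks is the number of LLM calls a large file would need.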

- Open Obsidian Settings → Community plugins.

- Ensure "Restricted mode" is off.

- Click Browse community plugins and search for "Notemd".

- Click Install.

- Once installed, click Enable.

- Download the latest release files (`main.js`, `styles.css`, `manifest.json`) from the GitHub Releases page.

- Navigate to your Obsidian vault's configuration folder: `<YourVault>/.obsidian/plugins/`.

- Create a new folder named `notemd`.

- Copy the downloaded `main.js`, `styles.css`, and `manifest.json` files into the `notemd` folder.

- Restart Obsidian.

- Go to Settings → Community plugins and enable "Notemd".
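After a manual install it can help to confirm that the three release files landed in the right folder before restarting Obsidian. The sketch below is only an illustration of that check: `missing_plugin_files` is a hypothetical helper, and the folder layout is the one given in the steps above.

```python
from pathlib import Path

# File names taken from the manual installation steps.
REQUIRED_FILES = ("main.js", "styles.css", "manifest.json")


def missing_plugin_files(vault: Path, plugin_folder: str = "notemd") -> list[str]:
    """Return the required files that are absent from the plugin folder."""
    plugin_dir = vault / ".obsidian" / "plugins" / plugin_folder
    return [name for name in REQUIRED_FILES if not (plugin_dir / name).is_file()]
```

An empty list means all three files are in place; anything else names the files that still need to be copied.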

Access plugin settings via: Settings → Community Plugins → Notemd (Click the gear icon).

- Active Provider: Select the LLM provider you want to use from the dropdown menu.

- Provider Settings: Configure the specific settings for the selected provider:

  - API Key: Required for most cloud providers (e.g., OpenAI, Anthropic, DeepSeek, Google, Mistral, Azure, OpenRouter). Not needed for Ollama. LMStudio often uses `EMPTY` or can be left blank.

  - Base URL / Endpoint: The API endpoint for the service. Defaults are provided, but you may need to change this for local models (LMStudio, Ollama), OpenRouter, or specific Azure deployments. Required for Azure OpenAI.

  - Model: The specific model name/ID to use (e.g., `gpt-4o`, `claude-3-5-sonnet-20240620`, `google/gemini-flash-1.5`, `llama3`, `mistral-large-latest`). Ensure the model is available at your endpoint/provider. For OpenRouter, use the model ID shown on their site (e.g., `gryphe/mythomax-l2-13b`).

  - Temperature: Controls the randomness of the LLM's output (0=deterministic, 1=max creativity). Lower values (e.g., 0.2-0.5) are generally better for structured tasks.

  - API Version (Azure Only): Required for Azure OpenAI deployments (e.g., `2024-02-15-preview`).

- Test Connection: Use the "Test Connection" button for the active provider to verify your settings. This now uses a more reliable method for LM Studio.

- Manage Provider Configurations: Use the "Export Providers" and "Import Providers" buttons to save/load your LLM provider settings to/from a `notemd-providers.json` file within the plugin's configuration directory. This allows for easy backup and sharing.

- Use Different Providers for Tasks:

  - Disabled (Default): Uses the single "Active Provider" (selected above) for all tasks.

  - Enabled: Allows you to select a specific provider and optionally override the model name for each task ("Add Links", "Research & Summarize", "Generate from Title", "Translate"). If the model override field for a task is left blank, it will use the default model configured for that task's selected provider.

- Select different languages for different tasks:

  - Disabled (Default): Uses the single "Output language" for all tasks.

  - Enabled: Allows you to select a specific language for each task ("Add Links", "Research & Summarize", "Generate from Title", "Summarise as Mermaid diagram").

- Enable Stable API Calls (Retry Logic):

  - Disabled (Default): A single API call failure will stop the current task.

  - Enabled: Automatically retries failed LLM API calls (useful for intermittent network issues or rate limits).

  - Retry Interval (seconds): (Visible only when enabled) Time to wait between retry attempts (1-300 seconds). Default: 5.

  - Maximum Retries: (Visible only when enabled) Maximum number of retry attempts (0-10). Default: 3.
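The retry settings above amount to a fixed-interval retry loop. As a rough sketch under stated assumptions (the helper name `call_with_retries` is hypothetical, and the plugin may decide which errors are retryable differently), the behaviour could look like this:

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(
    call: Callable[[], T],
    max_retries: int = 3,          # "Maximum Retries" default from the settings
    retry_interval: float = 5.0,   # "Retry Interval (seconds)" default
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `call`, retrying after a fixed wait when it raises.

    Assumption: every exception counts as retryable. With max_retries=0
    the first failure is re-raised immediately, which matches the
    "Disabled" behaviour where one failure stops the task.
    """
    attempt = 0
    while True:
        try:
            return call()
        except Exception:
            if attempt >= max_retries:
                raise
            attempt += 1
            sleep(retry_interval)
```

With the defaults, a call that keeps failing is attempted four times in total (one initial attempt plus three retries) with about 15 seconds of waiting before the error surfaces.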

- Customize Processed File Save Path:

  - Disabled (Default): Processed files (e.g., `YourNote_processed.md`) are saved in the same folder as the original note.

  - Enabled: Allows you to specify a custom save location.

- Processed File Folder Path: (Visible only when the above is enabled) Enter a relative path within your vault (e.g., `Processed Notes` or `Output/LLM`) where processed files should be saved. Folders will be created if they don't exist. Do not use absolute paths (like C:...) or invalid characters.

- Use Custom Output Filename for 'Add Links':

  - Disabled (Default): Processed files created by the 'Add Links' command use the default `_processed.md` suffix (e.g., `YourNote_processed.md`).

  - Enabled: Allows you to customize the output filename using the setting below.

- Custom Suffix/Replacement String: (Visible only when the above is enabled) Enter the string to use for the output filename.

  - If left empty, the original file will be overwritten with the processed content.

  - If you enter a string (e.g., `_linked`), it will be appended to the original base name (e.g., `YourNote_linked.md`). Ensure the suffix doesn't contain invalid filename characters.

- Remove Code Fences on Add Links:

  - Disabled (Default): Code fences (```) are kept in the content when adding links, but (```markdown) fences are removed automatically.

  - Enabled: Removes code fences from the content before adding links.

- Customize Concept Note Path:

  - Disabled (Default): Automatic creation of notes for `[[linked concepts]]` is disabled.

  - Enabled: Allows you to specify a folder where new concept notes will be created.

- Concept Note Folder Path: (Visible only when the above is enabled) Enter a relative path within your vault (e.g., `Concepts` or `Generated/Topics`) where new concept notes should be saved. Folders will be created if they don't exist. Must be filled if customization is enabled. Do not use absolute paths or invalid characters.

- Generate Concept Log File:

  - Disabled (Default): No log file is generated.

  - Enabled: Creates a log file listing newly created concept notes after processing. The format is:

        generate xx concepts md file
        1. concepts1
        2. concepts2
        ...
        n. conceptsn

- Customize Log File Save Path: (Visible only when "Generate Concept Log File" is enabled)

  - Disabled (Default): The log file is saved in the Concept Note Folder Path (if specified) or the vault root otherwise.

  - Enabled: Allows you to specify a custom folder for the log file.

- Concept Log Folder Path: (Visible only when "Customize Log File Save Path" is enabled) Enter a relative path within your vault (e.g., `Logs/Notemd`) where the log file should be saved. Must be filled if customization is enabled.

- Customize Log File Name: (Visible only when "Generate Concept Log File" is enabled)

  - Disabled (Default): The log file is named `Generate.log`.

  - Enabled: Allows you to specify a custom name for the log file.

- Concept Log File Name: (Visible only when "Customize Log File Name" is enabled) Enter the desired file name (e.g., `ConceptCreation.log`). Must be filled if customization is enabled.
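The 'Add Links' filename settings in this group reduce to three cases: the default suffix, a custom suffix, or overwriting the original. A minimal sketch of that naming rule follows; `add_links_output_name` is a hypothetical helper, and keeping the original file extension is an assumption (the settings only show `.md` examples).

```python
from pathlib import PurePosixPath


def add_links_output_name(
    original: str,
    use_custom: bool = False,
    custom_suffix: str = "",
) -> str:
    """Return the vault-relative path the processed note would be saved as.

    - use_custom=False: append the default "_processed" to the base name.
    - use_custom=True with an empty string: overwrite the original file.
    - use_custom=True with a string: append that string to the base name.
    """
    path = PurePosixPath(original)
    if not use_custom:
        suffix = "_processed"
    elif custom_suffix == "":
        return original  # empty custom string means overwrite in place
    else:
        suffix = custom_suffix
    return str(path.with_name(f"{path.stem}{suffix}{path.suffix}"))
```

For example, `add_links_output_name("Notes/YourNote.md", True, "_linked")` yields `Notes/YourNote_linked.md`, matching the example given for the custom suffix.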

- Chunk Word Count: Maximum words per chunk sent to the LLM. Affects the number of API calls for large files. (Default: 3000)

- Enable Duplicate Detection: Toggles the basic check for duplicate words within processed content (results in console). (Default: Enabled)

- Max Tokens: Maximum tokens the LLM should generate per response chunk. Affects cost and detail. (Default: 4096)

- Default Target Language: Select the default language you want to translate your notes into. This can be overridden in the UI when running the translation command. (Default: English)

- Customise Translation File Save Path:

  - Disabled (Default): Translated files are saved in the same folder as the original note.

  - Enabled: Allows you to specify a relative path within your vault (e.g., `Translations`) where translated files should be saved. Folders will be created if they don't exist.

- Use custom suffix for translated files:

  - Disabled (Default): Translated files use the default `_translated.md` suffix (e.g., `YourNote_translated.md`).

  - Enabled: Allows you to specify a custom suffix.

- Custom Suffix: (Visible only when the above is enabled) Enter the custom suffix to append to translated filenames (e.g., `_es` or `_fr`).

- Enable Research in "Generate from Title":

  - Disabled (Default): "Generate from Title" uses only the title as input.

  - Enabled: Performs web research using the configured Web Research Provider and includes the findings as context for the LLM during title-based generation.

- Output Language: (New) Select the desired output language for "Generate from Title" and "Batch Generate from Title" tasks.

  - English (Default): Prompts are processed and output in English.

  - Other Languages: The LLM is instructed to perform its reasoning in English but provide the final documentation in your selected language (e.g., Español, Français, 简体中文, 繁體中文, العربية, हिन्दी, etc.).

- Change Prompt Word: (New)

  - Change Prompt Word: Allows you to change the prompt word for a specific task.

  - Custom Prompt Word: Enter your custom prompt word for the task.

- Use Custom Output Folder for 'Generate from Title':

  - Disabled (Default): Successfully generated files are moved to a subfolder named `[OriginalFolderName]_complete` relative to the original folder's parent (or `Vault_complete` if the original folder was the root).

  - Enabled: Allows you to specify a custom name for the subfolder where completed files are moved.

- Custom Output Folder Name: (Visible only when the above is enabled) Enter the desired name for the subfolder (e.g., `Generated Content`, `_complete`). Invalid characters are not allowed. Defaults to `_complete` if left empty. This folder is created relative to the original folder's parent directory.

This feature allows you to override the default instructions (prompts) sent to the LLM for specific tasks, giving you fine-grained control over the output.

- Enable Custom Prompts for Specific Tasks:

  - Disabled (Default): The plugin uses its built-in default prompts for all operations.

  - Enabled: Activates the ability to set custom prompts for the tasks listed below. This is the master switch for this feature.

- Use Custom Prompt for [Task Name]: (Visible only when the above is enabled)

  - For each supported task ("Add Links", "Generate from Title", "Research & Summarize"), you can individually enable or disable your custom prompt.

  - Disabled: This specific task will use the default prompt.

  - Enabled: This task will use the text you provide in the corresponding "Custom Prompt" text area below.

- Custom Prompt Text Area: (Visible only when a task's custom prompt is enabled)

  - Default Prompt Display: For your reference, the plugin displays the default prompt that it would normally use for the task. You can use the "Copy Default Prompt" button to copy this text as a starting point for your own custom prompt.

  - Custom Prompt Input: This is where you write your own instructions for the LLM.

  - Placeholders: You can (and should) use special placeholders in your prompt, which the plugin will replace with actual content before sending the request to the LLM. Refer to the default prompt to see which placeholders are available for each task. Common placeholders include:

    - `{TITLE}`: The title of the current note.

    - `{RESEARCH_CONTEXT_SECTION}`: The content gathered from web research.

    - `{USER_PROMPT}`: The content of the note being processed.
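To make the placeholder mechanism concrete, here is a small sketch of how a custom prompt might be filled in before it is sent to the LLM. It is an illustration only: `fill_prompt` is a hypothetical name, and leaving unknown placeholders untouched is an assumption rather than documented plugin behaviour.

```python
import re

# Matches placeholders such as {TITLE} or {RESEARCH_CONTEXT_SECTION}.
PLACEHOLDER = re.compile(r"\{([A-Z_]+)\}")


def fill_prompt(template: str, values: dict[str, str]) -> str:
    """Replace each known {NAME} placeholder in a single pass.

    A single regex pass means text inserted for one placeholder is never
    re-scanned, so note content that happens to contain "{TITLE}" is left
    alone. Placeholders without a value are kept as written.
    """
    return PLACEHOLDER.sub(
        lambda match: values.get(match.group(1), match.group(0)),
        template,
    )


custom_prompt = (
    "Write an overview of {TITLE}.\n"
    "{RESEARCH_CONTEXT_SECTION}\n"
    "Existing note:\n{USER_PROMPT}"
)
filled = fill_prompt(
    custom_prompt,
    {
        "TITLE": "Polymer Physics",
        "RESEARCH_CONTEXT_SECTION": "Research context: (search summaries go here)",
        "USER_PROMPT": "Chains and {TITLE} templates",
    },
)
```

Here `filled` starts with "Write an overview of Polymer Physics." and the literal `{TITLE}` inside the note content is preserved.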

- Duplicate Check Scope Mode: Controls which files are checked against the notes in your Concept Note Folder for potential duplicates.

  - Entire Vault (Default): Compares concept notes against all other notes in the vault (excluding the Concept Note Folder itself).

  - Include Specific Folders Only: Compares concept notes only against notes within the folders listed below.

  - Exclude Specific Folders: Compares concept notes against all notes except those within the folders listed below (and also excluding the Concept Note Folder).

  - Concept Folder Only: Compares concept notes only against other notes within the Concept Note Folder. This helps find duplicates purely inside your generated concepts.

- Include/Exclude Folders: (Visible only if Mode is 'Include' or 'Exclude') Enter the relative paths of the folders you want to include or exclude, one path per line. Paths are case-sensitive and use `/` as the separator (e.g., `Reference Material/Papers` or `Daily Notes`). These folders cannot be the same as or inside the Concept Note Folder.
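The four scope modes can be read as a filter over the vault's note paths. The sketch below shows one way to express that filter; the mode keys (`vault`, `include`, `exclude`, `concept_only`) and the function names are hypothetical labels chosen for this example, not identifiers from the plugin.

```python
from typing import Iterable, Sequence


def is_inside(note_path: str, folder: str) -> bool:
    """True when note_path lies in folder (case-sensitive, '/' separated)."""
    return note_path.startswith(folder.rstrip("/") + "/")


def comparison_notes(
    all_notes: Iterable[str],
    concept_folder: str,
    mode: str = "vault",
    folders: Sequence[str] = (),
) -> list[str]:
    """Select the notes that concept notes are compared against."""
    notes = list(all_notes)
    if mode == "concept_only":
        return [p for p in notes if is_inside(p, concept_folder)]
    # Every other mode excludes the Concept Note Folder itself.
    outside = [p for p in notes if not is_inside(p, concept_folder)]
    if mode == "vault":
        return outside
    if mode == "include":
        return [p for p in outside if any(is_inside(p, f) for f in folders)]
    if mode == "exclude":
        return [p for p in outside if not any(is_inside(p, f) for f in folders)]
    raise ValueError(f"unknown mode: {mode}")
```

Matching on a trailing `/` keeps a folder named `Daily Notes` from also matching `Daily Notes Archive`.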

- Search Provider: Choose between `Tavily` (requires API key, recommended) and `DuckDuckGo` (experimental, often blocked by the search engine for automated requests). Used for "Research & Summarize Topic" and optionally for "Generate from Title".

- Tavily API Key: (Visible only if Tavily is selected) Enter your API key from tavily.com.

- Tavily Max Results: (Visible only if Tavily is selected) Maximum number of search results Tavily should return (1-20). Default: 5.

- Tavily Search Depth: (Visible only if Tavily is selected) Choose `basic` (default) or `advanced`. Note: `advanced` provides better results but costs 2 API credits per search instead of 1.

- DuckDuckGo Max Results: (Visible only if DuckDuckGo is selected) Maximum number of search results to parse (1-10). Default: 5.

- DuckDuckGo Content Fetch Timeout: (Visible only if DuckDuckGo is selected) Maximum seconds to wait when trying to fetch content from each DuckDuckGo result URL. Default: 15.

- Max Research Content Tokens: Approximate maximum tokens from combined web research results (snippets/fetched content) to include in the summarization prompt. Helps manage context window size and cost. (Default: 3000)

- Enable Focused Learning Domain:

  - Disabled (Default): Prompts sent to the LLM use the standard, general-purpose instructions.

  - Enabled: Allows you to specify one or more fields of study to improve the LLM's contextual understanding.

  - Learning Domain: (Visible only when the above is enabled) Enter your specific field(s), e.g., 'Materials Science', 'Polymer Physics', 'Machine Learning'. This will add a "Relevant Fields: [...]" line to the beginning of prompts, helping the LLM generate more accurate and relevant links and content for your specific area of study.
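The Learning Domain setting is described as prepending a "Relevant Fields: [...]" line to each prompt. A minimal sketch of that step is shown here; `with_learning_domain` is a hypothetical name, and the comma-separated formatting inside the brackets is an assumption, since only the "Relevant Fields: [...]" shape is stated.

```python
from typing import Sequence


def with_learning_domain(
    prompt: str,
    domains: Sequence[str],
    enabled: bool = True,
) -> str:
    """Prefix the prompt with a "Relevant Fields: [...]" line.

    Blank entries are ignored; when the feature is disabled or no field
    is given, the prompt is returned unchanged.
    """
    fields = [d.strip() for d in domains if d.strip()]
    if not enabled or not fields:
        return prompt
    return f"Relevant Fields: [{', '.join(fields)}]\n{prompt}"
```

Because the line is placed at the very beginning, it applies to any task prompt, default or custom, without changing the rest of the instructions.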

This is the core functionality focused on identifying concepts and adding [[wiki-links]].

Important: This process only works on .md or .txt files. You can convert PDF files to MD files for free using Mineru before further processing.

- Using the Sidebar:

  - Open the Notemd Sidebar (wand icon or command palette).

  - Open the `.md` or `.txt` file.

  - Click "Process File (Add Links)".

  - To process a folder: Click "Process Folder (Add Links)", select the folder, and click "Process".

  - Progress is shown in the sidebar. You can cancel the task using the "Cancel Processing" button in the sidebar.

  - Note for folder processing: Files are processed in the background without being opened in the editor.

- Using the Command Palette (`Ctrl+P` or `Cmd+P`):

  - Single File: Open the file and run `Notemd: Process Current File`.

  - Folder: Run `Notemd: Process Folder`, then select the folder. Files are processed in the background without being opened in the editor.

  - A progress modal appears for command palette actions, which includes a cancel button.

- Note: The plugin automatically removes leading `\boxed{` and trailing `}` lines if found in the final processed content before saving.
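The `\boxed{` cleanup mentioned in the note above can be pictured as trimming a wrapper that some models put around their whole answer. This is a sketch under assumptions, not the plugin's implementation: `strip_boxed_wrapper` is a hypothetical name, and it only strips when both the opening and the closing line are present.

```python
def strip_boxed_wrapper(content: str) -> str:
    r"""Drop a leading "\boxed{" line and a trailing "}" line.

    Assumption: the wrapper is removed only when the first line is
    exactly "\boxed{" and the last line is exactly "}" (ignoring
    surrounding whitespace). Anything else is returned unchanged.
    """
    lines = content.splitlines()
    if (
        len(lines) >= 2
        and lines[0].strip() == "\\boxed{"
        and lines[-1].strip() == "}"
    ):
        return "\n".join(lines[1:-1])
    return content
```

Content such as `\boxed{x}` inside a LaTeX formula is not affected, because only whole first and last lines are examined.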

- Summarise as Mermaid diagram:

  - Open the note you want to summarize.

  - Run the command `Notemd: Summarise as Mermaid diagram` (via command palette or sidebar button).

  - The plugin will generate a new note with the Mermaid diagram.

- Translate Note/Selection:

  - Select text in a note to translate just that selection, or invoke the command with no selection to translate the entire note.

  - Run the command `Notemd: Translate Note/Selection` (via command palette or sidebar button).

  - A modal will appear allowing you to confirm or change the Target Language (defaulting to the setting specified in Configuration).

  - The plugin uses the configured LLM Provider (based on Multi-Model settings) to perform the translation.

  - The translated content is saved to the configured Translation Save Path with the appropriate suffix, and opened in a new pane to the right of the original content for easy comparison.

  - You can cancel this task via the sidebar button or modal cancel button.

- Research & Summarize Topic:

  - Select text in a note OR ensure the note has a title (this will be the search topic).

  - Run the command `Notemd: Research and Summarize Topic` (via command palette or sidebar button).

  - The plugin uses the configured Search Provider (Tavily/DuckDuckGo) and the appropriate LLM Provider (based on Multi-Model settings) to find and summarize information.

  - The summary is appended to the current note.

  - You can cancel this task via the sidebar button or modal cancel button.

  - Note: DuckDuckGo searches may fail due to bot detection. Tavily is recommended.

- Generate Content from Title:

  - Open a note (it can be empty).

  - Run the command `Notemd: Generate Content from Title` (via command palette or sidebar button).

  - The plugin uses the appropriate LLM Provider (based on Multi-Model settings) to generate content based on the note's title, replacing any existing content.

  - If the "Enable Research in 'Generate from Title'" setting is enabled, it will first perform web research (using the configured Web Research Provider) and include that context in the prompt sent to the LLM.

  - You can cancel this task via the sidebar button or modal cancel button.

- Batch Generate Content from Titles:

  - Run the command `Notemd: Batch Generate Content from Titles` (via command palette or sidebar button).

  - Select the folder containing the notes you want to process.

  - The plugin will iterate through each `.md` file in the folder (excluding `_processed.md` files and files in the designated "complete" folder), generating content based on the note's title and replacing existing content. Files are processed in the background without being opened in the editor.

  - Successfully processed files are moved to the configured "complete" folder.

  - This command respects the "Enable Research in 'Generate from Title'" setting for each note processed.

  - You can cancel this task via the sidebar button or modal cancel button.

  - Progress and results (number of files modified, errors) are shown in the sidebar/modal log.

- Check and Remove Duplicate Concept Notes:

  - Ensure the Concept Note Folder Path is correctly configured in settings.

  - Run `Notemd: Check and Remove Duplicate Concept Notes` (via command palette or sidebar button).

  - The plugin scans the concept note folder and compares filenames against notes outside the folder using several rules (exact match, plurals, normalization, containment).

  - If potential duplicates are found, a modal window appears listing the files, the reason they were flagged, and the conflicting files.

  - Review the list carefully. Click "Delete Files" to move the listed files to the system trash, or "Cancel" to take no action.

  - Progress and results are shown in the sidebar/modal log.

| Provider | Type | API Key Required | Notes |
|---|---|---|---|
| DeepSeek | Cloud | Yes | |
| OpenAI | Cloud | Yes | Supports various models like GPT-4o, GPT-3.5 |
| Anthropic | Cloud | Yes | Supports Claude models |
| Google | Cloud | Yes | Supports Gemini models |
| Mistral | Cloud | Yes | Supports Mistral models |
| Azure OpenAI | Cloud | Yes | Requires Endpoint, API Key, API Version |
| OpenRouter | Cloud | Yes | Accesses many models via OpenRouter API |
| LMStudio | Local | No (Use `EMPTY`) | Runs models locally via LM Studio server |
| Ollama | Local | No | Runs models locally via Ollama server |

Note: For local providers (LMStudio, Ollama), ensure the respective server application is running and accessible at the configured Base URL.

Note: For OpenRouter, use the full model identifier from their website (e.g., google/gemini-flash-1.5) in the Model setting.

- Plugin Not Loading: Ensure `manifest.json`, `main.js`, `styles.css` are in the correct folder (`<Vault>/.obsidian/plugins/notemd/`) and restart Obsidian. Check the Developer Console (`Ctrl+Shift+I` or `Cmd+Option+I`) for errors on startup.

- Processing Failures / API Errors:

  - Check File Format: Ensure the file you are trying to process or check has a `.md` or `.txt` extension. Notemd currently only supports these text-based formats.

  - Use the "Test LLM Connection" command/button to verify settings for the active provider.

  - Double-check API Key, Base URL, Model Name, and API Version (for Azure). Ensure the API key is correct and has sufficient credits/permissions.

  - Ensure your local LLM server (LMStudio, Ollama) is running and the Base URL is correct (e.g., `http://localhost:1234/v1` for LMStudio).

  - Check your internet connection for cloud providers.

  - For single file processing errors: Review the Developer Console for detailed error messages. Copy them using the button in the error modal if needed.

  - For batch processing errors: Check the `error_processing_filename.log` file in your vault root for detailed error messages for each failed file. The Developer Console or error modal might show a summary or general batch error.

- LM Studio Test Connection Fails: Ensure LM Studio server is running and the correct model is loaded and selected within LM Studio. The test now uses the chat completions endpoint, which should be more reliable.

- Folder Creation Errors ("File name cannot contain..."):

  - This usually means the path provided in the settings (Processed File Folder Path or Concept Note Folder Path) is invalid for Obsidian.

  - Ensure you are using relative paths (e.g., `Processed`, `Notes/Concepts`) and not absolute paths (e.g., `C:\Users\...`, `/Users/...`).

  - Check for invalid characters: `* " \ / < > : | ? # ^ [ ]`. Note that `\` is invalid even on Windows for Obsidian paths. Use `/` as the path separator.

- Performance Problems: Processing large files or many files can take time. Reduce the "Chunk Word Count" setting for potentially faster (but more numerous) API calls. Try a different LLM provider or model.

- Unexpected Linking: The quality of linking depends heavily on the LLM and the prompt. Experiment with different models or temperature settings.
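The folder path rules from the Folder Creation Errors item (relative paths only, `/` as the separator, no invalid characters) can be checked before saving a setting. The helper below is a hedged sketch of such a check; `folder_path_problems` is a hypothetical function, and the drive-letter test is a simple heuristic rather than Obsidian's own validation.

```python
# Characters listed as invalid in the troubleshooting notes. "/" is left
# out because it is the path separator between folder names.
INVALID_NAME_CHARS = '*"\\<>:|?#^[]'


def folder_path_problems(path: str) -> list[str]:
    """Return human-readable problems; an empty list means the path looks fine."""
    problems: list[str] = []
    # Leading "/" or a drive letter such as "C:" indicates an absolute path.
    if path.startswith("/") or path[1:2] == ":":
        problems.append("absolute path")
    bad = sorted({ch for ch in path if ch in INVALID_NAME_CHARS})
    if bad:
        problems.append("invalid characters: " + " ".join(bad))
    if any(segment == "" for segment in path.split("/")):
        problems.append("empty path segment")
    return problems
```

For instance, `folder_path_problems("Notes/Concepts")` returns an empty list, while a Windows-style path is flagged both as absolute and for containing `\` and `:`.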

Contributions are welcome! Please refer to the GitHub repository for guidelines: https://github.com/Jacobinwwey/obsidian-NotEMD

MIT License - See LICENSE file for details.

Notemd v1.4.0 - Enhance your Obsidian knowledge graph with AI.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for obsidian-NotEMD

Similar Open Source Tools

obsidian-NotEMD

Obsidian-NotEMD is a plugin for the Obsidian note-taking app that allows users to export notes in various formats without converting them to EMD. It simplifies the process of sharing and collaborating on notes by providing seamless export options. With Obsidian-NotEMD, users can easily export their notes to PDF, HTML, Markdown, and other formats directly from Obsidian, saving time and effort. This plugin enhances the functionality of Obsidian by streamlining the export process and making it more convenient for users to work with their notes across different platforms and applications.

Conversational-Azure-OpenAI-Accelerator

The Conversational Azure OpenAI Accelerator is a tool designed to provide rapid, no-cost custom demos tailored to customer use cases, from internal HR/IT to external contact centers. It focuses on top use cases of GenAI conversation and summarization, plus live backend data integration. The tool automates conversations across voice and text channels, providing a valuable way to save money and improve customer and employee experience. By combining Azure OpenAI + Cognitive Search, users can efficiently deploy a ChatGPT experience using web pages, knowledge base articles, and data sources. The tool enables simultaneous deployment of conversational content to chatbots, IVR, voice assistants, and more in one click, eliminating the need for in-depth IT involvement. It leverages Microsoft's advanced AI technologies, resulting in a conversational experience that can converse in human-like dialogue, respond intelligently, and capture content for omni-channel unified analytics.

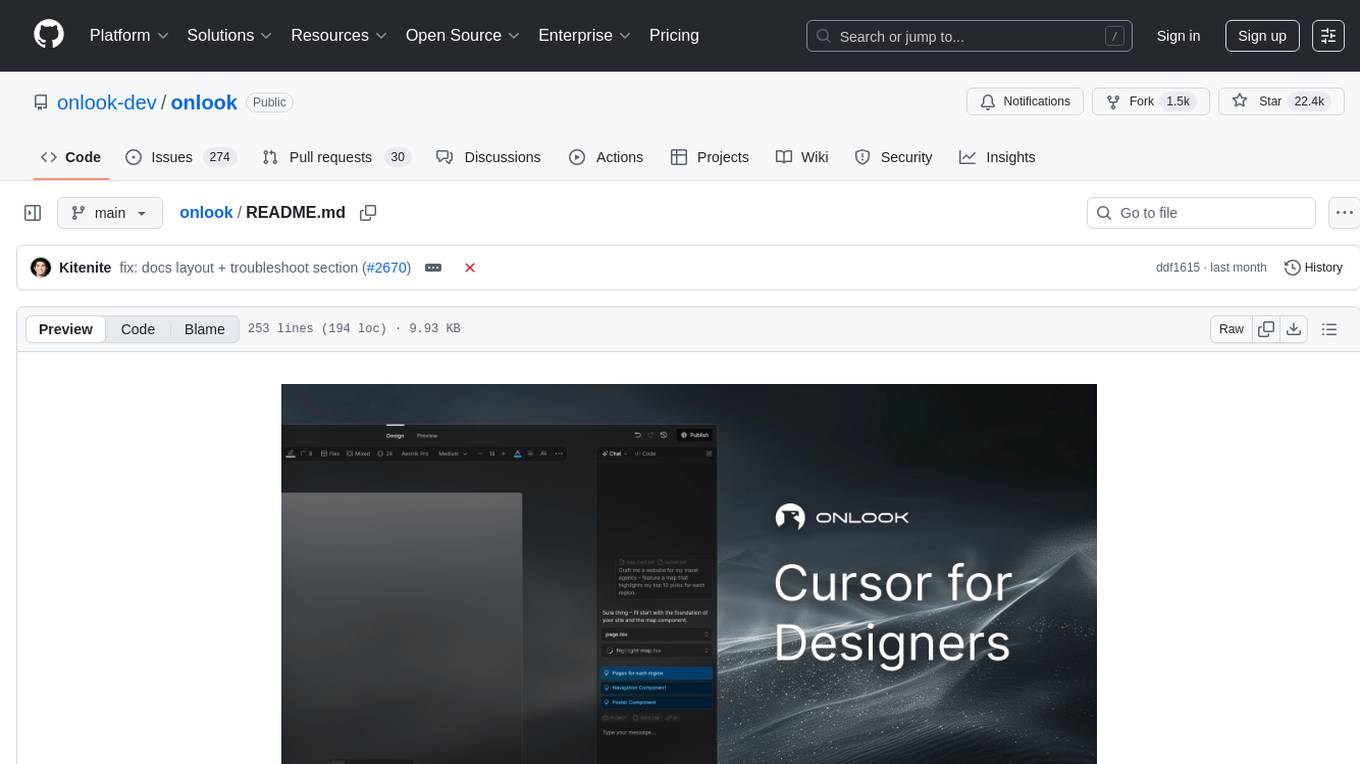

onlook

Onlook is a web scraping tool that allows users to extract data from websites easily and efficiently. It provides a user-friendly interface for creating web scraping scripts and supports various data formats for exporting the extracted data. With Onlook, users can automate the process of collecting information from multiple websites, saving time and effort. The tool is designed to be flexible and customizable, making it suitable for a wide range of web scraping tasks.

LocalAI

LocalAI is a free and open-source OpenAI alternative that acts as a drop-in replacement REST API compatible with OpenAI (Elevenlabs, Anthropic, etc.) API specifications for local AI inferencing. It allows users to run LLMs, generate images, audio, and more locally or on-premises with consumer-grade hardware, supporting multiple model families and not requiring a GPU. LocalAI offers features such as text generation with GPTs, text-to-audio, audio-to-text transcription, image generation with stable diffusion, OpenAI functions, embeddings generation for vector databases, constrained grammars, downloading models directly from Huggingface, and a Vision API. It provides a detailed step-by-step introduction in its Getting Started guide and supports community integrations such as custom containers, WebUIs, model galleries, and various bots for Discord, Slack, and Telegram. LocalAI also offers resources like an LLM fine-tuning guide, instructions for local building and Kubernetes installation, projects integrating LocalAI, and a how-tos section curated by the community. It encourages users to cite the repository when utilizing it in downstream projects and acknowledges the contributions of various software from the community.

Build-Modern-AI-Apps

This repository serves as a hub for Microsoft Official Build & Modernize AI Applications reference solutions and content. It provides access to projects demonstrating how to build Generative AI applications using Azure services like Azure OpenAI, Azure Container Apps, Azure Kubernetes, and Azure Cosmos DB. The solutions include Vector Search & AI Assistant, Real-Time Payment and Transaction Processing, and Medical Claims Processing. Additionally, there are workshops like the Intelligent App Workshop for Microsoft Copilot Stack, focusing on infusing intelligence into traditional software systems using foundation models and design thinking.

yao

YAO is an open-source application engine written in Go, suitable for developing business systems, website and app APIs, admin panels, and self-built low-code platforms. It adopts a flow-based programming model in which functions are implemented by writing YAO DSL or JavaScript, and it supports multiple ways to extend the data stream processor. Developers build web services out of processes: they create a database model, write API services, and describe dashboard interfaces in JSON alone, for both web and hardware targets; the project claims a 10x productivity gain. Yao has a built-in data management system, which makes it suitable for quickly building admin back ends, CRM, ERP, and other internal enterprise systems. The project describes it as versatile and efficient, and claims better performance than PHP, Java, and other languages.

intelligent-app-workshop

Welcome to the envisioning workshop designed to help you build your own custom Copilot using Microsoft's Copilot stack. This workshop aims to rethink user experience, architecture, and app development by leveraging reasoning engines and semantic memory systems. You will use Azure AI Foundry, Prompt Flow, AI Search, and Semantic Kernel, work with the Miyagi codebase, and explore advanced capabilities such as AutoGen and GraphRAG. The workshop guides you through the entire lifecycle of app development, including identifying user needs, developing a production-grade app, and deploying it on Azure. By the end, you will have a deeper understanding of how to use Microsoft's tools to create intelligent applications.

AIaW

AIaW is a next-generation LLM client that is full-featured, lightweight, and extensible. It supports basic functions such as streaming responses, image uploading, and LaTeX formulas. The tool is cross-platform with a responsive interface design. It supports multiple service providers such as OpenAI, Anthropic, and Google. Users can modify questions, regenerate answers as forks, and view conversations as a tree. It also offers file parsing, video parsing, a plugin system, an assistant market, local storage with real-time cloud sync, and customizable interface themes. Users can create multiple workspaces, use dynamic prompt variables, and extend the client with plugins, and they benefit from details such as real-time content preview, optimized code pasting, and support for various file types.

Companion

Companion is a software tool designed to provide support and enhance development. It offers various features and functionalities to assist users in their projects and tasks. The tool aims to be user-friendly and efficient, helping individuals and teams to streamline their workflow and improve productivity.

trafilatura

Trafilatura is a Python package and command-line tool for gathering text on the Web and simplifying the process of turning raw HTML into structured, meaningful data. It includes components for web crawling, downloads, scraping, and extraction of main texts, metadata, and comments. The tool aims to focus on actual content, avoid noise, and make sense of data and metadata. It is robust, fast, and widely used by companies and institutions. Trafilatura outperforms other libraries in text extraction benchmarks and offers various features like support for sitemaps, parallel processing, configurable extraction of key elements, multiple output formats, and optional add-ons. The tool is actively maintained with regular updates and comprehensive documentation.

sycamore

Sycamore is a conversational search and analytics platform for complex unstructured data, such as documents, presentations, transcripts, embedded tables, and internal knowledge repositories. It retrieves and synthesizes high-quality answers through bringing AI to data preparation, indexing, and retrieval. Sycamore makes it easy to prepare unstructured data for search and analytics, providing a toolkit for data cleaning, information extraction, enrichment, summarization, and generation of vector embeddings that encapsulate the semantics of data. Sycamore uses your choice of generative AI models to make these operations simple and effective, and it enables quick experimentation and iteration. Additionally, Sycamore uses OpenSearch for indexing, enabling hybrid (vector + keyword) search, retrieval-augmented generation (RAG) pipelining, filtering, analytical functions, conversational memory, and other features to improve information retrieval.

Gito

Gito is a lightweight and user-friendly tool for managing and organizing your GitHub repositories. It provides a simple and intuitive interface for users to easily view, clone, and manage their repositories. With Gito, you can quickly access important information about your repositories, such as commit history, branches, and pull requests. The tool also allows you to perform common Git operations, such as pushing changes and creating new branches, directly from the interface. Gito is designed to streamline your GitHub workflow and make repository management more efficient and convenient.

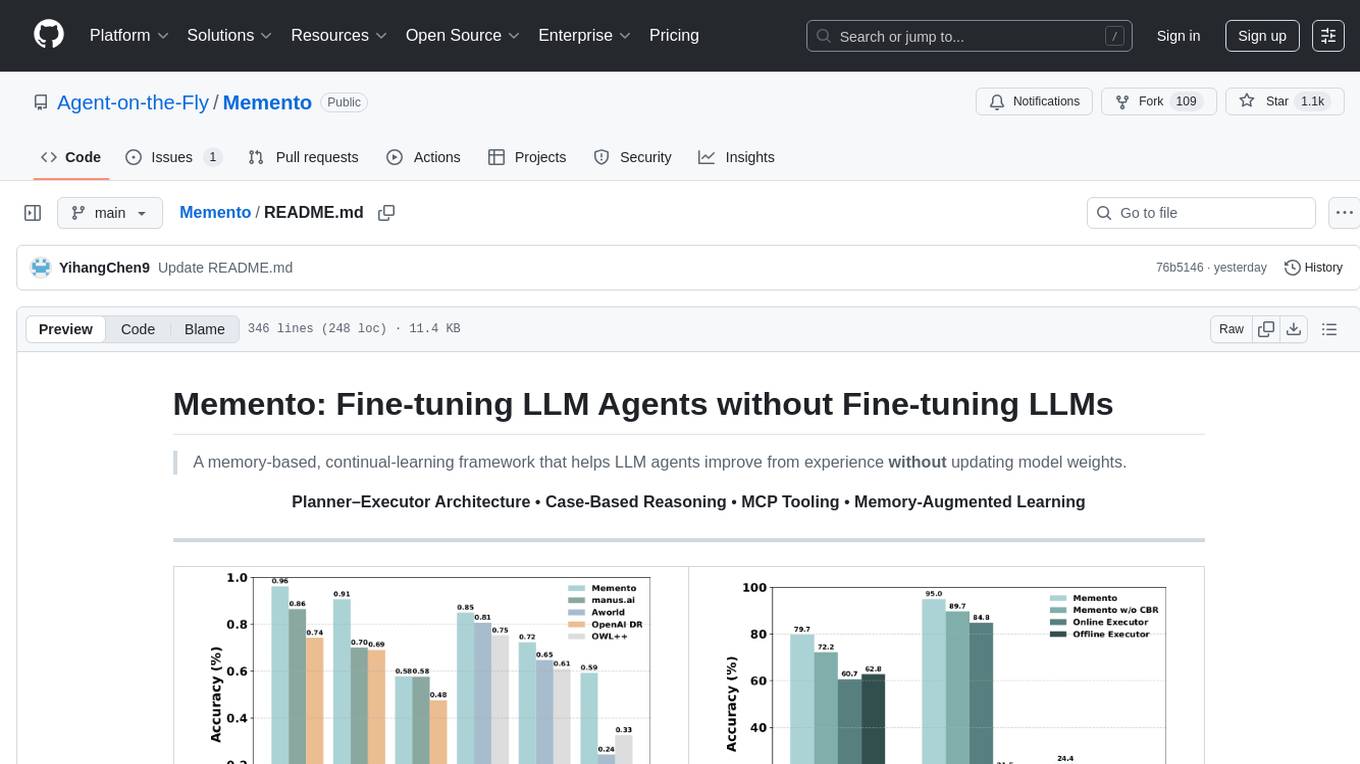

Memento

Memento is a lightweight and user-friendly version control tool designed for small to medium-sized projects. It provides a simple and intuitive interface for managing project versions and collaborating with team members. With Memento, users can easily track changes, revert to previous versions, and merge different branches. The tool is suitable for developers, designers, content creators, and other professionals who need a streamlined version control solution. Memento simplifies the process of managing project history and ensures that team members are always working on the latest version of the project.

pullfrog

Pullfrog is a versatile tool for managing and automating GitHub pull requests. It provides a simple and intuitive interface for developers to streamline their workflow and collaborate more efficiently. With Pullfrog, users can easily create, review, merge, and manage pull requests, all within a single platform. The tool offers features such as automated testing, code review, and notifications to help teams stay organized and productive. Whether you are a solo developer or part of a large team, Pullfrog can help you simplify the pull request process and improve code quality.

baibot

Baibot is a versatile chatbot framework designed to simplify the process of creating and deploying chatbots. It provides a user-friendly interface for building custom chatbots with various functionalities such as natural language processing, conversation flow management, and integration with external APIs. Baibot is highly customizable and can be easily extended to suit different use cases and industries. With Baibot, developers can quickly create intelligent chatbots that can interact with users in a seamless and engaging manner, enhancing user experience and automating customer support processes.

llm

The 'llm' package for Emacs provides an interface for interacting with Large Language Models (LLMs). It abstracts functionality to a higher level, concealing API variations and ensuring compatibility with various LLMs. Users can set up providers like OpenAI, Gemini, Vertex, Claude, Ollama, GPT4All, and a fake client for testing. The package allows for chat interactions, embeddings, token counting, and function calling. It also offers advanced prompt creation and logging capabilities. Users can handle conversations, create prompts with placeholders, and contribute by creating providers.

For similar tasks

examples

This repository contains a collection of sample applications and Jupyter Notebooks for hands-on experience with Pinecone vector databases and common AI patterns, tools, and algorithms. It includes production-ready examples for review and support, as well as learning-optimized examples for exploring AI techniques and building applications. Users can contribute, provide feedback, and collaborate to improve the resource.

OpenAGI

OpenAGI is an AI agent creation package designed for researchers and developers to create intelligent agents using advanced machine learning techniques. The package provides tools and resources for building and training AI models, enabling users to develop sophisticated AI applications. With a focus on collaboration and community engagement, OpenAGI aims to facilitate the integration of AI technologies into various domains, fostering innovation and knowledge sharing among experts and enthusiasts.

sirji

Sirji is an agentic AI framework for software development where various AI agents collaborate via a messaging protocol to solve software problems. It uses standard or user-generated recipes to list tasks and tips for problem-solving. Agents in Sirji are modular AI components that perform specific tasks based on custom pseudo code. The framework is currently implemented as a Visual Studio Code extension, providing an interactive chat interface for problem submission and feedback. Sirji sets up local or remote development environments by installing dependencies and executing generated code.

dewhale

Dewhale is a GitHub-Powered AI tool designed for effortless development. It utilizes prompt engineering techniques under the GPT-4 model to issue commands, allowing users to generate code with lower usage costs and easy customization. The tool seamlessly integrates with GitHub, providing version control, code review, and collaborative features. Users can join discussions on the design philosophy of Dewhale and explore detailed instructions and examples for setting up and using the tool.

max

The Modular Accelerated Xecution (MAX) platform is an integrated suite of AI libraries, tools, and technologies that unifies commonly fragmented AI deployment workflows. MAX accelerates time to market for the latest innovations by giving AI developers a single toolchain that unlocks full programmability, unparalleled performance, and seamless hardware portability.

Awesome-CVPR2024-ECCV2024-AIGC

A collection of papers and code for CVPR 2024 AIGC. This repository compiles and organizes research papers and code on AIGC (AI-generated content) from CVPR 2024 and ECCV 2024. It serves as a resource for anyone following recent advances in computer vision and artificial intelligence. Users can find a curated list of papers and accompanying code repositories for further exploration and research. The repository encourages collaboration and contributions from the community through stars, forks, and pull requests.

ZetaForge

ZetaForge is an open-source AI platform designed for rapid development of advanced AI and AGI pipelines. It allows users to assemble reusable, customizable, and containerized Blocks into highly visual AI Pipelines, enabling rapid experimentation and collaboration. With ZetaForge, users can work with AI technologies in any programming language, easily modify and update AI pipelines, dive into the code whenever needed, utilize community-driven blocks and pipelines, and share their own creations. The platform aims to accelerate the development and deployment of advanced AI solutions through its user-friendly interface and community support.

NeoHaskell

NeoHaskell is a newcomer-friendly and productive dialect of Haskell. It aims to be easy to learn and use, while also powerful enough for app development with minimal effort and maximum confidence. The project prioritizes design and documentation before implementation, with ongoing work on design documents for community sharing.

For similar jobs

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and WhatsApp, and you can share PDF, Markdown, org-mode, and Notion files, as well as GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

Windrecorder

Windrecorder is an open-source tool that helps you retrieve memory cues by recording everything on your screen. It can search based on OCR text or image descriptions and provides a summary of your activities. All of its capabilities run entirely locally, without the need for an internet connection or uploading any data, giving you complete ownership of your data.

forge

Forge is a free and open-source digital collectible card game (CCG) engine written in Java. It is designed to be easy to use and extend, and it comes with a variety of features that make it a great choice for developers who want to create their own CCGs. Forge is used by a number of popular CCGs, including Ascension, Dominion, and Thunderstone.

userscripts

Greasemonkey userscripts. A userscript manager such as Tampermonkey is required to run these scripts.

freeGPT

freeGPT provides free access to text and image generation models. It supports various models, including gpt3, gpt4, alpaca_7b, falcon_40b, prodia, and pollinations. The tool offers both asynchronous and synchronous interfaces for text completion and image generation. It also features an interactive Discord bot that provides access to all the models in the repository. The tool is easy to use and can be integrated into various applications.

open-saas

Open SaaS is a free and open-source React and Node.js template for building SaaS applications. It comes with a variety of features out of the box, including authentication, payments, analytics, and more. Open SaaS is built on top of the Wasp framework, which provides a number of features to make it easy to build SaaS applications, such as full-stack authentication, end-to-end type safety, jobs, and one-command deploy.

AIGODLIKE-ComfyUI-Translation

A plugin for multilingual translation of ComfyUI. It translates the resident menu bar, search bar, right-click context menu, nodes, and more.

free-for-life

A massive list of products and services that are completely free. Table of contents: APIs, Data & ML; Artificial Intelligence; BaaS; Code Editors; Code Generation; DNS; Databases; Design & UI; Domains; Email; Font; For Students; Forms; Linux Distributions; Messaging & Streaming; PaaS; Payments & Billing; SSL