trae-agent

Trae Agent is an LLM-based agent for general purpose software engineering tasks.

Stars: 9252

Trae Agent is an LLM-based agent, written in Python, for general-purpose software engineering tasks. It is driven from a command-line interface (trae-cli) that takes natural language instructions and carries them out with tools such as file editing and bash execution. It works with multiple LLM providers, uses YAML-based configuration, and records execution trajectories for debugging and analysis.

README:

Trae Agent is an LLM-based agent for general purpose software engineering tasks. It provides a powerful CLI interface that can understand natural language instructions and execute complex software engineering workflows using various tools and LLM providers.

For technical details please refer to our technical report.

Project Status: The project is still under active development. If you would like to help improve Trae Agent, see docs/roadmap.md and CONTRIBUTING.

Difference with Other CLI Agents: Trae Agent offers a transparent, modular architecture that researchers and developers can easily modify, extend, and analyze, making it an ideal platform for studying AI agent architectures, conducting ablation studies, and developing novel agent capabilities. This research-friendly design enables the academic and open-source communities to contribute to and build upon the foundational agent framework, fostering innovation in the rapidly evolving field of AI agents.

- 🌊 Lakeview: Provides short and concise summarisation for agent steps

- 🤖 Multi-LLM Support: Works with OpenAI, Anthropic, Doubao, Azure, OpenRouter, Ollama and Google Gemini APIs

- 🛠️ Rich Tool Ecosystem: File editing, bash execution, sequential thinking, and more

- 🎯 Interactive Mode: Conversational interface for iterative development

- 📊 Trajectory Recording: Detailed logging of all agent actions for debugging and analysis

- ⚙️ Flexible Configuration: YAML-based configuration with environment variable support

- 🚀 Easy Installation: Simple pip-based installation

Requirements:

- UV (https://docs.astral.sh/uv/)

- API key for your chosen provider (OpenAI, Anthropic, Google Gemini, OpenRouter, etc.)

git clone https://github.com/bytedance/trae-agent.git

cd trae-agent

uv sync --all-extras

source .venv/bin/activate

Copy the example configuration file:

cp trae_config.yaml.example trae_config.yaml

Edit trae_config.yaml with your API credentials and preferences:

agents:
  trae_agent:
    enable_lakeview: true
    model: trae_agent_model  # the model configuration name for Trae Agent
    max_steps: 200  # max number of agent steps
    tools:  # tools used with Trae Agent
      - bash
      - str_replace_based_edit_tool
      - sequentialthinking
      - task_done
model_providers:  # model providers configuration
  anthropic:
    api_key: your_anthropic_api_key
    provider: anthropic
  openai:
    api_key: your_openai_api_key
    provider: openai
models:
  trae_agent_model:
    model_provider: anthropic
    model: claude-sonnet-4-20250514
    max_tokens: 4096
    temperature: 0.5

Note: The trae_config.yaml file is ignored by git to protect your API keys.
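The three sections of the example refer to each other by name: the agent's model entry names a key under models, and that model's model_provider names a key under model_providers. The sketch below mirrors the example as a plain Python dict and checks those references. It is an illustration only; check_config_references is a hypothetical helper, not part of Trae Agent, and how Trae Agent itself validates its configuration is not described here.

```python
# Illustrative only: the example trae_config.yaml written out as a plain dict.
config = {
    "agents": {
        "trae_agent": {
            "enable_lakeview": True,
            "model": "trae_agent_model",
            "max_steps": 200,
            "tools": [
                "bash",
                "str_replace_based_edit_tool",
                "sequentialthinking",
                "task_done",
            ],
        }
    },
    "model_providers": {
        "anthropic": {"api_key": "your_anthropic_api_key", "provider": "anthropic"},
        "openai": {"api_key": "your_openai_api_key", "provider": "openai"},
    },
    "models": {
        "trae_agent_model": {
            "model_provider": "anthropic",
            "model": "claude-sonnet-4-20250514",
            "max_tokens": 4096,
            "temperature": 0.5,
        }
    },
}


def check_config_references(cfg):
    """Hypothetical helper: list broken name references between sections."""
    problems = []
    models = cfg.get("models", {})
    providers = cfg.get("model_providers", {})
    for agent_name, agent in cfg.get("agents", {}).items():
        model_name = agent.get("model")
        if model_name not in models:
            problems.append(
                f"agent {agent_name!r} refers to unknown model {model_name!r}"
            )
            continue
        provider_name = models[model_name].get("model_provider")
        if provider_name not in providers:
            problems.append(
                f"model {model_name!r} refers to unknown provider {provider_name!r}"
            )
    return problems


# An empty list means every name resolves.
print(check_config_references(config))
```

Running a check like this before starting a long task can catch a renamed model or provider entry early.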

Some providers require a custom API URL. In that case, add a base_url field after provider, as in the following example:

openai:
  api_key: your_openrouter_api_key
  provider: openai
  base_url: https://openrouter.ai/api/v1

Note: For field formatting, use spaces only. Tabs (\t) are not allowed.
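A quick way to catch stray tabs before running the agent is to scan the indentation of each line. The following is a minimal sketch, assuming the configuration text has already been read into a string; find_tab_indented_lines is a hypothetical helper name, not something shipped with Trae Agent.

```python
def find_tab_indented_lines(text):
    """Return 1-based line numbers whose leading whitespace contains a tab."""
    bad_lines = []
    for number, line in enumerate(text.splitlines(), start=1):
        # Leading whitespace is whatever lstrip() would remove.
        indent = line[: len(line) - len(line.lstrip())]
        if "\t" in indent:
            bad_lines.append(number)
    return bad_lines


# The third line is indented with a tab instead of spaces.
sample = "openai:\n  api_key: your_openrouter_api_key\n\tprovider: openai\n"
print(find_tab_indented_lines(sample))  # [3]
```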

You can also configure API keys using environment variables and store them in the .env file:

export OPENAI_API_KEY="your-openai-api-key"

export OPENAI_BASE_URL="your-openai-base-url"

export ANTHROPIC_API_KEY="your-anthropic-api-key"

export ANTHROPIC_BASE_URL="your-anthropic-base-url"

export GOOGLE_API_KEY="your-google-api-key"

export GOOGLE_BASE_URL="your-google-base-url"

export OPENROUTER_API_KEY="your-openrouter-api-key"

export OPENROUTER_BASE_URL="https://openrouter.ai/api/v1"

export DOUBAO_API_KEY="your-doubao-api-key"

export DOUBAO_BASE_URL="https://ark.cn-beijing.volces.com/api/v3/"

To enable Model Context Protocol (MCP) services, add an mcp_servers section to your configuration:

mcp_servers:
  playwright:
    command: npx
    args:
      - "@playwright/[email protected]"

Configuration Priority: Command-line arguments > Configuration file > Environment variables > Default values
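The priority order can be read as "take the first source that supplies a value". The sketch below shows that rule for a single setting. It is an assumption-laden illustration: resolve_setting is a hypothetical function, and Trae Agent's actual resolution code may differ in detail.

```python
import os


def resolve_setting(cli_value, file_value, env_name, default, environ=None):
    """Hypothetical resolver following the stated priority order:
    command-line argument > configuration file > environment variable > default.
    """
    environ = os.environ if environ is None else environ
    for candidate in (cli_value, file_value, environ.get(env_name)):
        if candidate is not None:
            return candidate
    return default


fake_env = {"OPENAI_API_KEY": "key-from-env"}

# The config file wins over the environment variable.
print(resolve_setting(None, "key-from-file", "OPENAI_API_KEY", None, fake_env))
# With no CLI or file value, the environment variable is used.
print(resolve_setting(None, None, "OPENAI_API_KEY", None, fake_env))
```

Passing the environment as a dict keeps the example independent of whatever variables happen to be set on the machine running it.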

Legacy JSON Configuration: If using the older JSON format, see docs/legacy_config.md. We recommend migrating to YAML.

# Simple task execution

trae-cli run "Create a hello world Python script"

# Check configuration

trae-cli show-config

# Interactive mode

trae-cli interactive

# OpenAI

trae-cli run "Fix the bug in main.py" --provider openai --model gpt-4o

# Anthropic

trae-cli run "Add unit tests" --provider anthropic --model claude-sonnet-4-20250514

# Google Gemini

trae-cli run "Optimize this algorithm" --provider google --model gemini-2.5-flash

# OpenRouter (access to multiple providers)

trae-cli run "Review this code" --provider openrouter --model "anthropic/claude-3-5-sonnet"

trae-cli run "Generate documentation" --provider openrouter --model "openai/gpt-4o"

# Doubao

trae-cli run "Refactor the database module" --provider doubao --model doubao-seed-1.6

# Ollama (local models)

trae-cli run "Comment this code" --provider ollama --model qwen3

# Custom working directory

trae-cli run "Add tests for utils module" --working-dir /path/to/project

# Save execution trajectory

trae-cli run "Debug authentication" --trajectory-file debug_session.json

# Force patch generation

trae-cli run "Update API endpoints" --must-patch

# Interactive mode with custom settings

trae-cli interactive --provider openai --model gpt-4o --max-steps 30

Important: To run tasks in Docker, you need to download the "_interal" directory from Google Drive and unzip it into trae-agent/trae_agent/dist/dist_tools. It is used to execute agent tools inside Docker containers.

# Specify a Docker image to run the task in a new container

trae-cli run "Add tests for utils module" --docker-image python:3.11

# Attach to an existing Docker container by ID

trae-cli run "Update API endpoints" --docker-container-id 91998a56056c

# Specify an absolute path to a Dockerfile to build an environment

trae-cli run "Debug authentication" --dockerfile-path test_workspace/Dockerfile

# Specify a path to a local Docker image file (tar archive) to load

trae-cli run "Fix the bug in main.py" --docker-image-file test_workspace/trae_agent_custom.tar

# Remove the Docker container after the task finishes (containers are kept by default)

trae-cli run "Add tests for utils module" --docker-image python:3.11 --docker-keep false

In interactive mode, you can use:

- Type any task description to execute it

- status - Show agent information

- help - Show available commands

- clear - Clear the screen

- exit or quit - End the session
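The command list above amounts to a small dispatch rule: a handful of reserved words, with everything else treated as a task. A minimal sketch of that rule follows. classify_input is a hypothetical function, and the case-insensitive matching and whitespace trimming are assumptions rather than documented behaviour of trae-cli.

```python
def classify_input(line):
    """Hypothetical classifier for one line typed in interactive mode.

    Returns a (kind, payload) pair, where kind is one of
    "status", "help", "clear", "exit", "empty", or "task".
    """
    text = line.strip()
    command = text.lower()  # assumption: commands are matched case-insensitively
    if command in ("exit", "quit"):
        return ("exit", None)
    if command in ("status", "help", "clear"):
        return (command, None)
    if not text:
        return ("empty", None)
    # Anything else is treated as a task description to execute.
    return ("task", text)


print(classify_input("quit"))
print(classify_input("Add unit tests"))
```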

Trae Agent provides a comprehensive toolkit for software engineering tasks including file editing, bash execution, structured thinking, and task completion. For detailed information about all available tools and their capabilities, see docs/tools.md.

Trae Agent automatically records detailed execution trajectories for debugging and analysis:

# Auto-generated trajectory file

trae-cli run "Debug the authentication module"

# Saves to: trajectories/trajectory_YYYYMMDD_HHMMSS.json

# Custom trajectory file

trae-cli run "Optimize database queries" --trajectory-file optimization_debug.json

Trajectory files contain LLM interactions, agent steps, tool usage, and execution metadata. For more details, see docs/TRAJECTORY_RECORDING.md.
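As a rough illustration, the sketch below builds a file name in the trajectories/trajectory_YYYYMMDD_HHMMSS.json pattern shown above and lists the top-level keys of a trajectory file without assuming any particular schema. Both function names are hypothetical, and the real field layout is documented in docs/TRAJECTORY_RECORDING.md, not here.

```python
import json
from datetime import datetime
from pathlib import Path


def default_trajectory_path(now):
    """Build a path in the trajectories/trajectory_YYYYMMDD_HHMMSS.json pattern."""
    return Path("trajectories") / f"trajectory_{now:%Y%m%d_%H%M%S}.json"


def top_level_summary(path):
    """Map each top-level key of a JSON trajectory file to its value's type name.

    No schema is assumed; a non-object JSON document yields an empty dict.
    """
    with open(path, encoding="utf-8") as handle:
        data = json.load(handle)
    if not isinstance(data, dict):
        return {}
    return {key: type(value).__name__ for key, value in data.items()}


print(default_trajectory_path(datetime(2025, 1, 2, 3, 4, 5)).as_posix())
# trajectories/trajectory_20250102_030405.json
```

Calling top_level_summary on a saved file, for example debug_session.json, gives a quick overview of what was recorded before opening the full JSON.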

For contribution guidelines, please refer to CONTRIBUTING.md.

Import Errors:

PYTHONPATH=. trae-cli run "your task"

API Key Issues:

# Verify API keys

echo $OPENAI_API_KEY

trae-cli show-config

Command Not Found:

uv run trae-cli run "your task"

Permission Errors:

chmod +x /path/to/your/project

This project is licensed under the MIT License - see the LICENSE file for details.

@article{traeresearchteam2025traeagent,
  title={Trae Agent: An LLM-based Agent for Software Engineering with Test-time Scaling},
  author={Trae Research Team and Pengfei Gao and Zhao Tian and Xiangxin Meng and Xinchen Wang and Ruida Hu and Yuanan Xiao and Yizhou Liu and Zhao Zhang and Junjie Chen and Cuiyun Gao and Yun Lin and Yingfei Xiong and Chao Peng and Xia Liu},
  year={2025},
  eprint={2507.23370},
  archivePrefix={arXiv},
  primaryClass={cs.SE},
  url={https://arxiv.org/abs/2507.23370},
}

We thank Anthropic for building the anthropic-quickstart project that served as a valuable reference for the tool ecosystem.

Alternative AI tools for trae-agent

Similar Open Source Tools

Gym

Gym is a toolkit for developing and comparing reinforcement learning algorithms. It provides a wide variety of environments ranging from simple grid worlds to complex 3D environments, allowing researchers to easily test and benchmark their algorithms. With a user-friendly interface and extensive documentation, Gym is suitable for both beginners and experts in the field of reinforcement learning.

AgentsMeetRL

AgentsMeetRL is an awesome list that summarizes open-source repositories for training LLM Agents using reinforcement learning. The criteria for identifying an agent project are multi-turn interactions or tool use. The project is based on code analysis from open-source repositories using GitHub Copilot Agent. The focus is on reinforcement learning frameworks, RL algorithms, rewards, and environments that projects depend on, for everyone's reference on technical choices.

AReaL

AReaL (Ant Reasoning RL) is an open-source reinforcement learning system developed at the RL Lab, Ant Research. It is designed for training Large Reasoning Models (LRMs) in a fully open and inclusive manner. AReaL provides reproducible experiments for 1.5B and 7B LRMs, showcasing its scalability and performance across diverse computational budgets. The system follows an iterative training process to enhance model performance, with a focus on mathematical reasoning tasks. AReaL is equipped to adapt to different computational resource settings, enabling users to easily configure and launch training trials. Future plans include support for advanced models, optimizations for distributed training, and exploring research topics to enhance LRMs' reasoning capabilities.

deeppowers

Deeppowers is a powerful Python library for deep learning applications. It provides a wide range of tools and utilities to simplify the process of building and training deep neural networks. With Deeppowers, users can easily create complex neural network architectures, perform efficient training and optimization, and deploy models for various tasks. The library is designed to be user-friendly and flexible, making it suitable for both beginners and experienced deep learning practitioners.

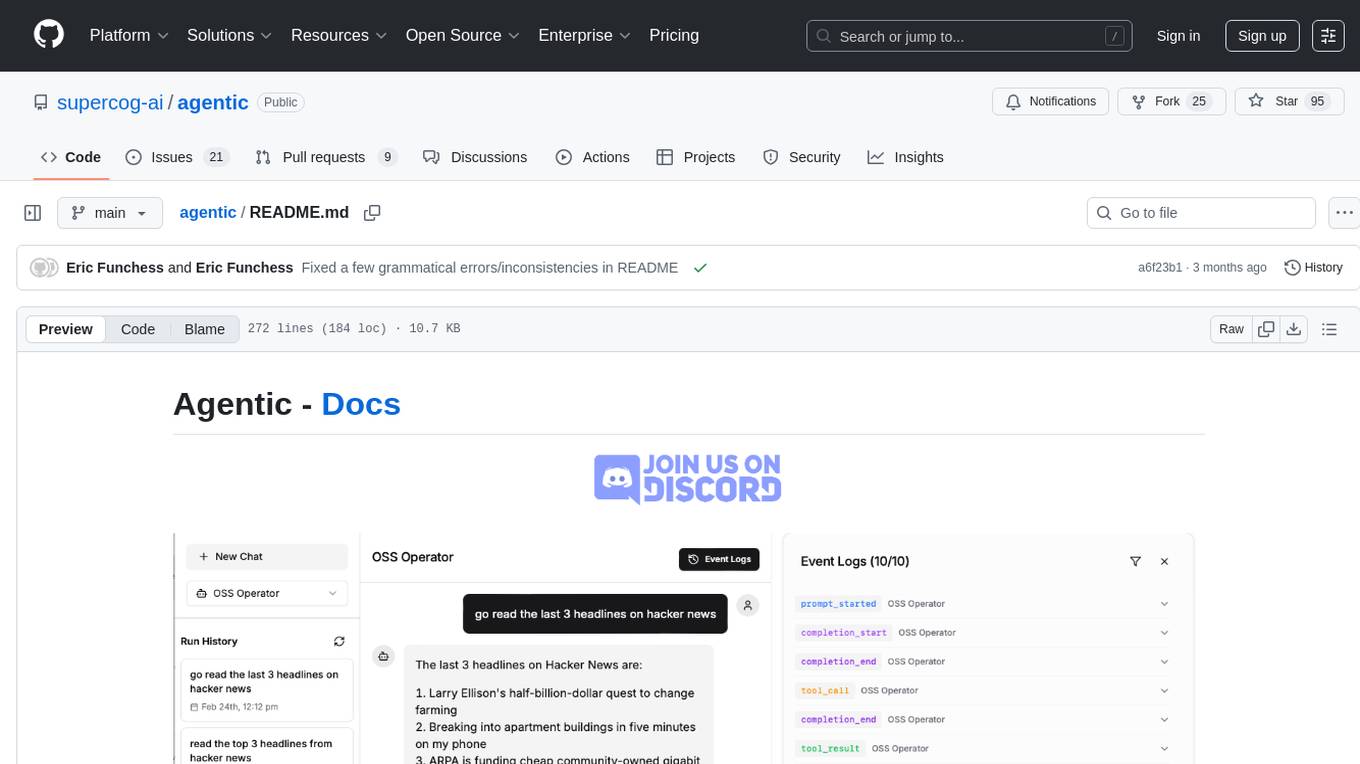

agentic

Agentic is a lightweight and flexible Python library for building multi-agent systems. It provides a simple and intuitive API for creating and managing agents, defining their behaviors, and simulating interactions in a multi-agent environment. With Agentic, users can easily design and implement complex agent-based models to study emergent behaviors, social dynamics, and decentralized decision-making processes. The library supports various agent architectures, communication protocols, and simulation scenarios, making it suitable for a wide range of research and educational applications in the fields of artificial intelligence, machine learning, social sciences, and robotics.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

deepteam

Deepteam is a powerful open-source tool designed for deep learning projects. It provides a user-friendly interface for training, testing, and deploying deep neural networks. With Deepteam, users can easily create and manage complex models, visualize training progress, and optimize hyperparameters. The tool supports various deep learning frameworks and allows seamless integration with popular libraries like TensorFlow and PyTorch. Whether you are a beginner or an experienced deep learning practitioner, Deepteam simplifies the development process and accelerates model deployment.

ms-agent

MS-Agent is a lightweight framework designed to empower agents with autonomous exploration capabilities. It provides a flexible and extensible architecture for creating agents capable of tasks like code generation, data analysis, and tool calling with MCP support. The framework supports multi-agent interactions, deep research, code generation, and is lightweight and extensible for various applications.

ai

This repository contains a collection of AI algorithms and models for various machine learning tasks. It provides implementations of popular algorithms such as neural networks, decision trees, and support vector machines. The code is well-documented and easy to understand, making it suitable for both beginners and experienced developers. The repository also includes example datasets and tutorials to help users get started with building and training AI models. Whether you are a student learning about AI or a professional working on machine learning projects, this repository can be a valuable resource for your development journey.

ml-retreat

ML-Retreat is a comprehensive machine learning library designed to simplify and streamline the process of building and deploying machine learning models. It provides a wide range of tools and utilities for data preprocessing, model training, evaluation, and deployment. With ML-Retreat, users can easily experiment with different algorithms, hyperparameters, and feature engineering techniques to optimize their models. The library is built with a focus on scalability, performance, and ease of use, making it suitable for both beginners and experienced machine learning practitioners.

OpenManus-RL

OpenManus-RL is an open-source initiative focused on enhancing reasoning and decision-making capabilities of large language models (LLMs) through advanced reinforcement learning (RL)-based agent tuning. The project explores novel algorithmic structures, diverse reasoning paradigms, sophisticated reward strategies, and extensive benchmark environments. It aims to push the boundaries of agent reasoning and tool integration by integrating insights from leading RL tuning frameworks and continuously updating progress in a dynamic, live-streaming fashion.

awesome-ai-agent-papers

This repository contains a curated list of papers related to artificial intelligence agents. It includes research papers, articles, and resources covering various aspects of AI agents, such as reinforcement learning, multi-agent systems, natural language processing, and more. Whether you are a researcher, student, or practitioner in the field of AI, this collection of papers can serve as a valuable reference to stay updated with the latest advancements and trends in AI agent technologies.

AI_Spectrum

AI_Spectrum is a versatile machine learning library that provides a wide range of tools and algorithms for building and deploying AI models. It offers a user-friendly interface for data preprocessing, model training, and evaluation. With AI_Spectrum, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is designed to be flexible and scalable, making it suitable for both beginners and experienced data scientists.

BrowserGym

BrowserGym is an open, easy-to-use, and extensible framework designed to accelerate web agent research. It provides benchmarks like MiniWoB, WebArena, VisualWebArena, WorkArena, AssistantBench, and WebLINX. Users can design new web benchmarks by inheriting the AbstractBrowserTask class. The tool allows users to install different packages for core functionalities, experiments, and specific benchmarks. It supports the development setup and offers boilerplate code for running agents on various tasks. BrowserGym is not a consumer product and should be used with caution.

God-Level-AI

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This repository is designed for individuals aiming to excel in the field of Data and AI, providing video sessions and text content for learning. It caters to those in leadership positions, professionals, and students, emphasizing the need for dedicated effort to achieve excellence in the tech field. The content covers various topics with a focus on practical application.

For similar tasks

intro-llm-rag

This repository serves as a comprehensive guide for technical teams interested in developing conversational AI solutions using Retrieval-Augmented Generation (RAG) techniques. It covers theoretical knowledge and practical code implementations, making it suitable for individuals with a basic technical background. The content includes information on large language models (LLMs), transformers, prompt engineering, embeddings, vector stores, and various other key concepts related to conversational AI. The repository also provides hands-on examples for two different use cases, along with implementation details and performance analysis.

LLM-Viewer

LLM-Viewer is a tool for visualizing Large Language Models (LLMs) and analyzing performance on different hardware platforms. It enables network-wise analysis, considering factors such as peak memory consumption and total inference time cost. With LLM-Viewer, users can gain valuable insights into LLM inference and performance optimization. The tool can be used in a web browser or as a command line interface (CLI) for easy configuration and visualization. The ongoing project aims to enhance features like showing tensor shapes, expanding hardware platform compatibility, and supporting more LLMs with manual model graph configuration.

llm-colosseum

llm-colosseum is a tool designed to evaluate Large Language Models (LLMs) in real time by making them fight each other in Street Fighter III. The tool assesses LLMs based on speed, strategic thinking, adaptability, out-of-the-box thinking, and resilience. It provides a benchmark for how well LLMs understand their environment and take context-based actions. Users can analyze the performance of different LLMs through ELO rankings and win rate matrices. The tool allows users to run experiments, test different LLMs, and customize prompts for LLM interactions. It offers installation instructions, test mode options, logging configurations, and the ability to run the tool with local models. Users can also contribute their own LLMs for evaluation and ranking.

eureka-ml-insights

The Eureka ML Insights Framework is a repository containing code designed to help researchers and practitioners run reproducible evaluations of generative models efficiently. Users can define custom pipelines for data processing, inference, and evaluation, as well as utilize pre-defined evaluation pipelines for key benchmarks. The framework provides a structured approach to conducting experiments and analyzing model performance across various tasks and modalities.

Pixelle-MCP

Pixelle-MCP is a multi-channel publishing tool designed to streamline the process of publishing content across various social media platforms. It allows users to create, schedule, and publish posts simultaneously on platforms such as Facebook, Twitter, and Instagram. With a user-friendly interface and advanced scheduling features, Pixelle-MCP helps users save time and effort in managing their social media presence. The tool also provides analytics and insights to track the performance of posts and optimize content strategy. Whether you are a social media manager, content creator, or digital marketer, Pixelle-MCP is a valuable tool to enhance your online presence and engage with your audience effectively.

dataset-viewer

Dataset Viewer is a modern, high-performance tool built with Tauri, React, and TypeScript, designed to handle massive datasets from multiple sources with efficient streaming for large files (100GB+) and lightning-fast search capabilities. It supports instant large file opening, real-time search, direct archive preview, multi-protocol and multi-format support, and features a modern interface with dark/light themes and responsive design. The tool is perfect for data scientists, log analysis, archive management, remote access, and performance-critical tasks.

LiftShift

LiftShift is a web application that provides analytics and tracking features for fitness enthusiasts. Users can upload workout data, explore analytics dashboards, receive real-time feedback, and visualize workout history. The tool supports different body types and units, and offers insights on workout trends and performance. LiftShift also detects session goals and provides set-by-set feedback to enhance workout experience. With local storage support and various theme modes, users can easily track their fitness progress and customize their experience.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.